Sufficient Statistics

Uploaded by Muhamad Fikri Anwar

1 Sufficient statistics

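A statistic is a function $T = r(X_1, X_2, \ldots, X_n)$ of the random sample $X_1, X_2, \ldots, X_n$. Examples include the sample mean $\bar{X}_n = \frac{1}{n}\sum_{i=1}^n X_i$, the sample variance, and, as a last example, a constant statistic that takes the same value whatever the sample is.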

The last statistic is a bit strange (it completely ignores the random sample), but it is still a statistic. We say a statistic $T$ is an estimator of a population parameter $\theta$ if $T$ is usually close to $\theta$. The sample mean is an estimator for the population mean; the sample variance is an estimator for the population variance.

Obviously, there are lots of functions of $X_1, X_2, \ldots, X_n$ and so lots of statistics. When we look for a good estimator, do we really need to consider all of them, or is there a much smaller set of statistics we could consider? Another way to ask the question is whether there are a few key functions of the random sample which by themselves contain all the information the sample does. For example, suppose we know the sample mean and the sample variance. Does the random sample contain any more information about the population than this? We should emphasize that we are always assuming our population is described by a given family of distributions (normal, binomial, gamma or ...) with one or several unknown parameters. The answer to the above question will depend on what family of distributions we assume describes the population.

We start with a heuristic definition of a sufficient statistic. We say $T$ is a sufficient statistic if the statistician who knows the value of $T$ can do just as good a job of estimating the unknown parameter $\theta$ as the statistician who knows the entire random sample.

The mathematical definition is as follows. A statistic $T = r(X_1, X_2, \ldots, X_n)$ is a sufficient statistic if for each $t$, the conditional distribution of $X_1, X_2, \ldots, X_n$ given $T = t$ and $\theta$ does not depend on $\theta$.

To motivate the mathematical definition, we consider the following experiment. Let $T = r(X_1, \ldots, X_n)$ be a sufficient statistic. There are two statisticians; we will call them A and B. Statistician A knows the entire random sample $X_1, \ldots, X_n$, but statistician B only knows the value of $T$, call it $t$. Since the conditional distribution of $X_1, \ldots, X_n$ given $\theta$ and $T$ does not depend on $\theta$, statistician B knows this conditional distribution. So he can use his computer to generate a random sample $X_1', \ldots, X_n'$ which has this conditional distribution. But then his random sample has the same distribution as a random sample drawn from the population (with its unknown value of $\theta$). So statistician B can use his random sample $X_1', \ldots, X_n'$ to compute whatever statistician A computes using his random sample $X_1, \ldots, X_n$, and he will (on average) do as well as statistician A. Thus the mathematical definition of sufficient statistic implies the heuristic definition.
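The experiment with statisticians A and B can be sketched in code. The example below assumes a normal population with known standard deviation, for which the sum of the sample is a sufficient statistic (this case is treated in the examples later in this section). It uses the standard fact that, for a normal sample, the residuals $X_i - \bar{X}_n$ are independent of $\bar{X}_n$ and do not involve the mean. The function name `regenerate_sample` and all numerical values are illustrative choices, not part of the original notes.

```python
import random
import statistics


def regenerate_sample(t, n, sigma, rng):
    """Draw X'_1..X'_n from the conditional law of a normal sample given sum = t.

    Assumes a N(mu, sigma^2) population with sigma known. The residuals
    X_i - mean(X) do not involve mu, so B can simulate them from mean-zero
    normals and then shift everything to match the observed total t.
    Note that mu is not an argument: B never needs it.
    """
    z = [rng.gauss(0.0, sigma) for _ in range(n)]
    z_bar = statistics.fmean(z)
    return [t / n + (zi - z_bar) for zi in z]


rng = random.Random(0)
mu_unknown, sigma, n = 3.7, 2.0, 5  # mu_unknown is hidden from statistician B

# Statistician A sees the full sample.
sample_a = [rng.gauss(mu_unknown, sigma) for _ in range(n)]

# Statistician B sees only the value t of the sufficient statistic.
t = sum(sample_a)
sample_b = regenerate_sample(t, n, sigma, rng)

print("A's sample:", sample_a)
print("B's sample:", sample_b)
print("totals agree:", abs(sum(sample_b) - t) < 1e-9)
```

B's regenerated sample differs from A's but has the same total, and under the stated assumptions it has the same distribution as a fresh sample from the population.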

It is difficult to use the definition to check if a statistic is sufficient or to find a sufficient statistic. Luckily, there is a theorem that makes it easy to find sufficient statistics.

Theorem 1. (Factorization theorem) Let $X_1, X_2, \ldots, X_n$ be a random sample with joint density $f(x_1, x_2, \ldots, x_n|\theta)$. A statistic $T = r(X_1, X_2, \ldots, X_n)$ is sufficient if and only if the joint density can be factored as follows:

$$f(x_1, x_2, \ldots, x_n|\theta) = u(x_1, x_2, \ldots, x_n) \, v(r(x_1, x_2, \ldots, x_n), \theta)$$

where $u$ and $v$ are non-negative functions. The function $u$ can depend on the full random sample $x_1, \ldots, x_n$, but not on the unknown parameter $\theta$. The function $v$ can depend on $\theta$, but can depend on the random sample only through the value of $r(x_1, \ldots, x_n)$.

It is easy to see that if $g(t)$ is a one to one function and $T$ is a sufficient statistic, then $g(T)$ is a sufficient statistic. In particular we can multiply a sufficient statistic by a nonzero constant and get another sufficient statistic. We now apply the theorem to some examples.

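Example (normal population, unknown mean, known variance) We consider a normal population for which the mean $\mu$ is unknown, but the variance $\sigma^2$ is known. The joint density is

$$f(x_1, \ldots, x_n|\mu) = (2\pi\sigma^2)^{-n/2} \exp\left(-\frac{1}{2\sigma^2}\sum_{i=1}^n (x_i - \mu)^2\right) = (2\pi\sigma^2)^{-n/2} \exp\left(-\frac{1}{2\sigma^2}\sum_{i=1}^n x_i^2\right) \exp\left(\frac{\mu}{\sigma^2}\sum_{i=1}^n x_i - \frac{n\mu^2}{2\sigma^2}\right)$$

By the factorization theorem this shows that $\sum_{i=1}^n X_i$ is a sufficient statistic. It follows that the sample mean $\bar{X}_n = \frac{1}{n}\sum_{i=1}^n X_i$ is also a sufficient statistic.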
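A quick numerical check can make the normal example concrete. If the sum is sufficient, then for two samples with the same sum the ratio of their joint densities equals the ratio of the $u$ factors and so cannot depend on $\mu$. The sketch below verifies this on the log scale; the two samples, the value of `sigma`, and the grid of means are arbitrary illustrative choices.

```python
import math


def normal_log_density(xs, mu, sigma):
    """Log of the joint density of i.i.d. N(mu, sigma^2) observations xs."""
    n = len(xs)
    return (-0.5 * n * math.log(2 * math.pi * sigma ** 2)
            - sum((x - mu) ** 2 for x in xs) / (2 * sigma ** 2))


x = [1.0, 2.0, 6.0]  # sum is 9
y = [3.0, 3.0, 3.0]  # sum is 9 as well, but a different sample
sigma = 1.5          # known standard deviation (illustrative value)

# Log density ratio between the two samples for several candidate means.
diffs = [normal_log_density(x, mu, sigma) - normal_log_density(y, mu, sigma)
         for mu in (-2.0, 0.0, 1.0, 5.0)]

# The differences coincide up to rounding, so the ratio does not depend on mu.
print(diffs)
```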

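Example (Uniform population) Now suppose the $X_i$ are uniformly distributed on $[0, \theta]$ where $\theta$ is unknown. Then the joint density is

$$f(x_1, \ldots, x_n|\theta) = \theta^{-n} \, 1(x_i \le \theta, \ i = 1, 2, \ldots, n)$$

Here $1(E)$ is an indicator function. It is 1 if the event $E$ holds, 0 if it does not. Now $x_i \le \theta$ for $i = 1, 2, \ldots, n$ if and only if $\max\{x_1, x_2, \ldots, x_n\} \le \theta$. So we have

$$f(x_1, \ldots, x_n|\theta) = \theta^{-n} \, 1(\max\{x_1, x_2, \ldots, x_n\} \le \theta)$$

By the factorization theorem this shows that $T = \max\{X_1, X_2, \ldots, X_n\}$ is a sufficient statistic.

What about the sample mean? Is it a sufficient statistic in this example? By the factorization theorem it is a sufficient statistic only if we can write $1(\max\{x_1, x_2, \ldots, x_n\} \le \theta)$ as a function of just the sample mean and $\theta$. This is impossible. For instance, with $n = 2$ the samples $(1, 3)$ and $(2, 2)$ have the same mean but different maxima, so for $\theta = 2.5$ the indicator is 0 for the first sample and 1 for the second. So the sample mean is not a sufficient statistic in this setting.

Example (Gamma population, $\alpha$ unknown, $\beta$ known) Now suppose the population has a gamma distribution and we know $\beta$ but $\alpha$ is unknown. Then the joint density (written here with shape $\alpha$ and rate $\beta$) is

$$f(x_1, \ldots, x_n|\alpha) = \prod_{i=1}^n \frac{\beta^\alpha}{\Gamma(\alpha)} x_i^{\alpha-1} e^{-\beta x_i} = \exp\left(-\beta\sum_{i=1}^n x_i\right) \frac{\beta^{n\alpha}}{\Gamma(\alpha)^n} \exp\left((\alpha-1)\sum_{i=1}^n \log x_i\right)$$

By the factorization theorem this shows that $T = \sum_{i=1}^n \log X_i$ is a sufficient statistic. Note that $\exp(T) = \prod_{i=1}^n X_i$ is also a sufficient statistic. But the sample mean is not a sufficient statistic.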
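Returning to the uniform example, the role of the maximum can be checked numerically. The sketch below evaluates the joint density of a Uniform$[0, \theta]$ sample and compares pairs of samples: two with the same maximum, and two with the same mean but different maxima. The helper `uniform_density` and the sample values are illustrative assumptions, not taken from the notes.

```python
def uniform_density(xs, theta):
    """Joint density of i.i.d. Uniform[0, theta] observations xs."""
    if min(xs) < 0 or max(xs) > theta:
        return 0.0
    return theta ** (-len(xs))


thetas = [1.0, 2.5, 3.0, 4.0]

# Same maximum (3.0), otherwise different: the densities agree for every theta.
same_max_a = [0.5, 1.0, 3.0]
same_max_b = [2.9, 3.0, 0.1]
for theta in thetas:
    print(theta, uniform_density(same_max_a, theta),
          uniform_density(same_max_b, theta))

# Same mean (2.0) but maxima 3.0 and 2.0: the densities disagree at theta = 2.5,
# so the density cannot depend on the sample through the mean alone.
same_mean_a = [1.0, 3.0]
same_mean_b = [2.0, 2.0]
print(uniform_density(same_mean_a, 2.5), uniform_density(same_mean_b, 2.5))
```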

Now we reconsider the example of a normal population, but suppose that $\mu$ and $\sigma^2$ are both unknown. Then the sample mean is not a sufficient statistic. In this case we need to use more than one statistic to get sufficiency. The definition (both heuristic and mathematical) of sufficiency extends to several statistics in a natural way. We consider $k$ statistics

$$T_i = r_i(X_1, X_2, \ldots, X_n), \quad i = 1, 2, \ldots, k$$

We say $T_1, T_2, \ldots, T_k$ are jointly sufficient statistics if the statistician who knows the values of $T_1, T_2, \ldots, T_k$ can do just as good a job of estimating the unknown parameter $\theta$ as the statistician who knows the entire random sample. In this setting $\theta$ typically represents several parameters and the number of statistics, $k$, is equal to the number of unknown parameters.

The mathematical definition is as follows. The statistics $T_1, T_2, \ldots, T_k$ are jointly sufficient if for each $t_1, t_2, \ldots, t_k$, the conditional distribution of $X_1, X_2, \ldots, X_n$ given $T_i = t_i$ for $i = 1, 2, \ldots, k$ and $\theta$ does not depend on $\theta$. Again, we don't have to work with this definition because we have the following theorem:

Theorem 2. (Factorization theorem) Let $X_1, X_2, \ldots, X_n$ be a random sample with joint density $f(x_1, x_2, \ldots, x_n|\theta)$. The statistics

$$T_i = r_i(X_1, X_2, \ldots, X_n), \quad i = 1, 2, \ldots, k$$

are jointly sufficient if and only if the joint density can be factored as follows:

$$f(x_1, x_2, \ldots, x_n|\theta) = u(x_1, x_2, \ldots, x_n) \, v(r_1(x_1, \ldots, x_n), r_2(x_1, \ldots, x_n), \ldots, r_k(x_1, \ldots, x_n), \theta)$$

where $u$ and $v$ are non-negative functions. The function $u$ can depend on the full random sample $x_1, \ldots, x_n$, but not on the unknown parameters $\theta$. The function $v$ can depend on $\theta$, but can depend on the random sample only through the values of $r_i(x_1, \ldots, x_n)$, $i = 1, 2, \ldots, k$.

Recall that for a single statistic we can apply a one to one function to the statistic and get another sufficient statistic. This generalizes as follows. Let $g(t_1, t_2, \ldots, t_k)$ be a function whose values are in $\mathbb{R}^k$ and which is one to one. Let $g_i(t_1, \ldots, t_k)$, $i = 1, 2, \ldots, k$ be the component functions of $g$. Then if $T_1, T_2, \ldots, T_k$ are jointly sufficient, the statistics $g_i(T_1, T_2, \ldots, T_k)$, $i = 1, 2, \ldots, k$, are jointly sufficient.

We now revisit two examples. First consider a normal population with unknown mean and variance. Our previous equations show that

$$T_1 = \sum_{i=1}^n X_i, \quad T_2 = \sum_{i=1}^n X_i^2$$

are jointly sufficient statistics. Another set of jointly sufficient statistics is the sample mean and sample variance. (What is $g(t_1, t_2)$?)
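One way to explore the question about $g$ is to compute the map in code. The sketch below assumes the two sums are $T_1 = \sum X_i$ and $T_2 = \sum X_i^2$ (the sums that appear when the normal density is expanded) and that the sample variance uses the $1/n$ convention; with $1/(n-1)$ only a constant factor changes. The function names are hypothetical. The inverse map is included to show that $g$ is one to one.

```python
import statistics


def to_mean_and_variance(t1, t2, n):
    """Map (sum of x_i, sum of x_i^2) to (sample mean, 1/n sample variance)."""
    mean = t1 / n
    variance = t2 / n - mean ** 2
    return mean, variance


def to_sums(mean, variance, n):
    """Inverse map: recover (sum of x_i, sum of x_i^2) from mean and variance."""
    return n * mean, n * (variance + mean ** 2)


x = [1.0, 2.0, 6.0]
n = len(x)
t1 = sum(x)
t2 = sum(xi ** 2 for xi in x)

mean, variance = to_mean_and_variance(t1, t2, n)
print(mean, variance)

# Cross-check against the standard library's population variance (1/n convention).
print(statistics.pvariance(x))

# Round trip: the original pair of sums is recovered, so no information is lost.
print(to_sums(mean, variance, n), (t1, t2))
```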

Now consider a population with the gamma distribution with both $\alpha$ and $\beta$ unknown. Then

$$T_1 = \sum_{i=1}^n X_i, \quad T_2 = \prod_{i=1}^n X_i$$

are jointly sufficient statistics.
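The gamma case can also be checked numerically. The sketch below assumes the shape-rate form of the gamma density, $\frac{\beta^\alpha}{\Gamma(\alpha)} x^{\alpha-1} e^{-\beta x}$, which is a choice of parameterization made here rather than something stated in the notes. It compares two different samples that share both their sum and their product, and a third sample that shares only the sum.

```python
import math


def gamma_log_density(xs, alpha, beta):
    """Log joint density of i.i.d. gamma observations (shape alpha, rate beta)."""
    n = len(xs)
    return (n * (alpha * math.log(beta) - math.lgamma(alpha))
            + (alpha - 1) * sum(math.log(x) for x in xs)
            - beta * sum(xs))


x = [1.0, 5.0, 8.0]   # sum 14, product 40
y = [2.0, 2.0, 10.0]  # sum 14, product 40
z = [4.0, 5.0, 5.0]   # sum 14, product 100

params = [(0.5, 1.0), (1.0, 0.3), (2.0, 2.0), (3.5, 0.7)]

# Same sum and same product: the log densities agree for every (alpha, beta).
same_both = [gamma_log_density(x, a, b) - gamma_log_density(y, a, b)
             for a, b in params]
print(same_both)

# Same sum only: the difference changes with alpha, so the sum (or the
# sample mean) alone does not carry all the information when alpha is unknown.
same_sum_only = [gamma_log_density(x, a, b) - gamma_log_density(z, a, b)
                 for a, b in params]
print(same_sum_only)
```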

Anda mungkin juga menyukai

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceDari EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RacePenilaian: 4 dari 5 bintang4/5 (895)

- Never Split the Difference: Negotiating As If Your Life Depended On ItDari EverandNever Split the Difference: Negotiating As If Your Life Depended On ItPenilaian: 4.5 dari 5 bintang4.5/5 (838)

- The Yellow House: A Memoir (2019 National Book Award Winner)Dari EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Penilaian: 4 dari 5 bintang4/5 (98)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeDari EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifePenilaian: 4 dari 5 bintang4/5 (5794)

- Shoe Dog: A Memoir by the Creator of NikeDari EverandShoe Dog: A Memoir by the Creator of NikePenilaian: 4.5 dari 5 bintang4.5/5 (537)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaDari EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaPenilaian: 4.5 dari 5 bintang4.5/5 (266)

- The Little Book of Hygge: Danish Secrets to Happy LivingDari EverandThe Little Book of Hygge: Danish Secrets to Happy LivingPenilaian: 3.5 dari 5 bintang3.5/5 (400)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureDari EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FuturePenilaian: 4.5 dari 5 bintang4.5/5 (474)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryDari EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryPenilaian: 3.5 dari 5 bintang3.5/5 (231)

- Grit: The Power of Passion and PerseveranceDari EverandGrit: The Power of Passion and PerseverancePenilaian: 4 dari 5 bintang4/5 (588)

- The Emperor of All Maladies: A Biography of CancerDari EverandThe Emperor of All Maladies: A Biography of CancerPenilaian: 4.5 dari 5 bintang4.5/5 (271)

- The Unwinding: An Inner History of the New AmericaDari EverandThe Unwinding: An Inner History of the New AmericaPenilaian: 4 dari 5 bintang4/5 (45)

- On Fire: The (Burning) Case for a Green New DealDari EverandOn Fire: The (Burning) Case for a Green New DealPenilaian: 4 dari 5 bintang4/5 (74)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersDari EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersPenilaian: 4.5 dari 5 bintang4.5/5 (345)

- Team of Rivals: The Political Genius of Abraham LincolnDari EverandTeam of Rivals: The Political Genius of Abraham LincolnPenilaian: 4.5 dari 5 bintang4.5/5 (234)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreDari EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You ArePenilaian: 4 dari 5 bintang4/5 (1090)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyDari EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyPenilaian: 3.5 dari 5 bintang3.5/5 (2259)

- Rise of ISIS: A Threat We Can't IgnoreDari EverandRise of ISIS: A Threat We Can't IgnorePenilaian: 3.5 dari 5 bintang3.5/5 (137)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)Dari EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Penilaian: 4.5 dari 5 bintang4.5/5 (121)

- Her Body and Other Parties: StoriesDari EverandHer Body and Other Parties: StoriesPenilaian: 4 dari 5 bintang4/5 (821)

- From Input To Affordance: Social-Interactive Learning From An Ecological Perspective Leo Van Lier Monterey Institute Oflntemational StudiesDokumen15 halamanFrom Input To Affordance: Social-Interactive Learning From An Ecological Perspective Leo Van Lier Monterey Institute Oflntemational StudiesKayra MoslemBelum ada peringkat

- Hydrodynamic Calculation Butterfly Valve (Double Disc)Dokumen31 halamanHydrodynamic Calculation Butterfly Valve (Double Disc)met-calcBelum ada peringkat

- Inferring The Speaker's Tone, ModeDokumen31 halamanInferring The Speaker's Tone, Modeblessilda.delaramaBelum ada peringkat

- Cisco 2500 Series RoutersDokumen16 halamanCisco 2500 Series RoutersJull Quintero DazaBelum ada peringkat

- Sony Cdm82a 82b Cmt-hpx11d Hcd-hpx11d Mechanical OperationDokumen12 halamanSony Cdm82a 82b Cmt-hpx11d Hcd-hpx11d Mechanical OperationDanBelum ada peringkat

- Basic Geriatric Nursing 6th Edition Williams Test BankDokumen10 halamanBasic Geriatric Nursing 6th Edition Williams Test Bankmaryrodriguezxsntrogkwd100% (49)

- WPBSA Official Rules of The Games of Snooker and Billiards 2020 PDFDokumen88 halamanWPBSA Official Rules of The Games of Snooker and Billiards 2020 PDFbabuzducBelum ada peringkat

- EPCC Hydrocarbon Downstream L&T 09.01.2014Dokumen49 halamanEPCC Hydrocarbon Downstream L&T 09.01.2014shyaminannnaBelum ada peringkat

- Twilight PrincessDokumen49 halamanTwilight PrincessHikari DiegoBelum ada peringkat

- 1762 Ob8 PDFDokumen16 halaman1762 Ob8 PDFRodríguez EdwardBelum ada peringkat

- Introduction To LCCDokumen32 halamanIntroduction To LCCGonzalo LopezBelum ada peringkat

- The Light Fantastic by Sarah CombsDokumen34 halamanThe Light Fantastic by Sarah CombsCandlewick PressBelum ada peringkat

- NCP Orif Right Femur Post OpDokumen2 halamanNCP Orif Right Femur Post OpCen Janber CabrillosBelum ada peringkat

- Crouse Hinds XPL Led BrochureDokumen12 halamanCrouse Hinds XPL Led BrochureBrayan Galaz BelmarBelum ada peringkat

- Toshiba: ® A20SeriesDokumen12 halamanToshiba: ® A20SeriesYangBelum ada peringkat

- Midterm Exam Gor Grade 11Dokumen2 halamanMidterm Exam Gor Grade 11Algelle AbrantesBelum ada peringkat

- Release From Destructive Covenants - D. K. OlukoyaDokumen178 halamanRelease From Destructive Covenants - D. K. OlukoyaJemima Manzo100% (1)

- EN Manual Lenovo Ideapad S130-14igm S130-11igmDokumen33 halamanEN Manual Lenovo Ideapad S130-14igm S130-11igmDolgoffBelum ada peringkat

- H107en 201906 r4 Elcor Elcorplus 20200903 Red1Dokumen228 halamanH107en 201906 r4 Elcor Elcorplus 20200903 Red1mokbelBelum ada peringkat

- Significance of GodboleDokumen5 halamanSignificance of GodbolehickeyvBelum ada peringkat

- Safe Lorry Loader Crane OperationsDokumen4 halamanSafe Lorry Loader Crane Operationsjdmultimodal sdn bhdBelum ada peringkat

- 3-History Rock Cut MonumentDokumen136 halaman3-History Rock Cut MonumentkrishnaBelum ada peringkat

- Save Water SpeechDokumen4 halamanSave Water SpeechHari Prakash Shukla0% (1)

- C.Abdul Hakeem College of Engineering and Technology, Melvisharam Department of Aeronautical Engineering Academic Year 2020-2021 (ODD)Dokumen1 halamanC.Abdul Hakeem College of Engineering and Technology, Melvisharam Department of Aeronautical Engineering Academic Year 2020-2021 (ODD)shabeerBelum ada peringkat

- Esteem 1999 2000 1.3L 1.6LDokumen45 halamanEsteem 1999 2000 1.3L 1.6LArnold Hernández CarvajalBelum ada peringkat

- Flow Chart - QCDokumen2 halamanFlow Chart - QCKarthikeyan Shanmugavel100% (1)

- Middle Range Theory Ellen D. Schulzt: Modeling and Role Modeling Katharine Kolcaba: Comfort TheoryDokumen22 halamanMiddle Range Theory Ellen D. Schulzt: Modeling and Role Modeling Katharine Kolcaba: Comfort TheoryMerlinBelum ada peringkat

- Biophoton RevolutionDokumen3 halamanBiophoton RevolutionVyavasayaha Anita BusicBelum ada peringkat

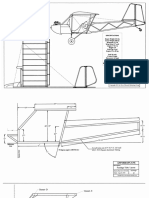

- Plans PDFDokumen49 halamanPlans PDFEstevam Gomes de Azevedo85% (34)

- Indor Lighting DesignDokumen33 halamanIndor Lighting DesignRajesh MalikBelum ada peringkat