Bitsclass 2013

Uploaded by api-233433206

Hak Cipta

Format Tersedia

Bagikan dokumen Ini

Apakah menurut Anda dokumen ini bermanfaat?

Apakah konten ini tidak pantas?

Laporkan Dokumen IniHak Cipta:

Format Tersedia

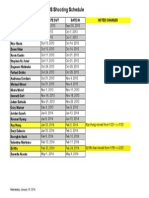

Bitsclass 2013

Diunggah oleh

api-233433206Hak Cipta:

Format Tersedia

Intro
-Guardian of the Image: Bits and Bytes for the Cinematographer.
-Principles and underpinnings rather than prescriptions.
-Fundamental information that won't become obsolete as technologies change. Maybe you can even lead the technology change using some of these principles.
-There are so many current and possible future permutations, not only of cameras and formats but of ways to rate the cameras and of the pipelines that follow. Among other things, this seminar should provide tools, not specific to today's technology, for evaluating which attributes of an image come from which step in the pipeline, rather than lumping those steps together or misattributing them.

What is a digital image?
-Just an image subdivided into pixels, where each pixel is three numbers from zero to one. That's all.
-Effectively (though sometimes indirectly, since there is often an implied transform), a digital image is a numeric address system for looking up all the possible states of an image sensor or a display device.
-An image file is literally just an array of numbers (three per pixel) that you can do any math on as such; it does not even have to be thought of as an image. An image could be a table, and you could use a spreadsheet instead of an image editor to edit it.
-RGB is the system we care most about. We effectively always use it, even when the underlying system is different, and all implemented systems are still merely this same kind of lookup.
-There need to be enough elements in the image array not to see pixels, and there need to be enough steps from zero to one not to see increments. In other words, both must be subdivided finely enough for the increments to be invisible and for the image to appear smooth.
-There is the conventional description in image science of "scene referenced" versus "display referenced," but it has limited value (we'll come back to it when we talk about density characteristics).
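
The "an image is just numbers" points above can be made concrete with a minimal Python sketch using only the standard library. It treats a one-row ramp as a grid of RGB triples between 0 and 1, does ordinary arithmetic on it the way a spreadsheet would, and quantizes it at a few bit depths to count how many distinct steps survive. The helper names and the 4096-sample ramp are hypothetical, chosen only for illustration.

```python
# A minimal sketch: a "digital image" as nothing but a grid of RGB triples,
# each value between 0.0 and 1.0. Helper names and the test ramp are
# hypothetical, for illustration only.

def make_ramp(width):
    """A one-row image ramping evenly from black (0.0) to white (1.0)."""
    return [[(x / (width - 1),) * 3 for x in range(width)]]

def scale(image, factor):
    """Ordinary arithmetic on every number, just as a spreadsheet would do it."""
    return [[tuple(c * factor for c in px) for px in row] for row in image]

def quantize(image, bits):
    """Snap every channel to the nearest of 2**bits evenly spaced code values."""
    top = (1 << bits) - 1
    return [[tuple(int(c * top + 0.5) / top for c in px) for px in row]
            for row in image]

def distinct_steps(image):
    """Count how many different values the red channel actually takes."""
    return len({px[0] for row in image for px in row})

if __name__ == "__main__":
    ramp = make_ramp(4096)
    for bits in (2, 8, 10):
        print(bits, "bits ->", distinct_steps(quantize(ramp, bits)), "steps")
    # Halving every value is one stop down, if the values are linear light.
    print(scale(ramp, 0.5)[0][-1])
```

Run as written, the 2-bit version collapses the smooth ramp into four bands while the 8- and 10-bit versions keep 256 and 1024 steps. Nothing in the code knows it is handling an image, which is the point.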

The reason the scene-referenced versus display-referenced distinction seems tenuous to me is that, on the one hand, it rests entirely on the premise that a known mathematical expression exists that can convert between image file values and actual light values on set, but on the other hand, it assumes that the user cannot implement this expression. The distinction made more sense when video cameras applied shading and clipped the sensor signal, among other things.
-Which attributes reside in the camera/sensor, which in the in-camera processing, and which in the file? We'll have to examine this after we understand the attributes themselves, but it's important to understand the attributes of each stage individually and not mix up a part with the whole, or one part with another.

Why We're Using Nuke for This Seminar
-It is full quality and "agnostic," and it has direct pixel access.
-Its mathematical transparency suits the philosophy of the seminar: image transforms should not be obfuscated or made to sound magical.

Bit Depth/Quantization
-Breaking a smooth gradient into steps: enough steps not to see increments, so that it appears perfectly smooth.
-Bit depth is not latitude.
-Bit depth is like resolution for color depth: not extensive, but intensive. You need to subdivide the image into enough pieces not to see the components and to maintain the illusion of smoothness.
-Conventional bit depth sizes and naming conventions (including per-channel versus per-pixel).

Density Characteristics
-The general types: logarithmic, Rec.709 (or gamma encoded), and linear light. Linear light and Rec.709 are NOT the same.
-How the general types differ from all the specific flavors.
-Implied/expected transforms: gamma, log/Rec.709, etc.
-Quantization allocation. Linear is untenable as a storage format; it should only be used for calculations, not for storage. Show the allocation chart and give the measuring stick analogy.
-A very concrete example of why linear is not a storage format: when ArriRaw is unpacked to linear light, its values go up to around 36 on a scale of 0-1.
-Float representation is inherently logarithmic. However, it puts much of its energy into fractions (increments smaller than the smallest integer quanta) and into negative numbers. So the net after the gain and the loss is a wash, and it's still inefficient as a camera file.
-Density characteristics (at least those that don't have horizontal lines in the graph) can be inverted, so the decision on density characteristics for storage should be made based on quantization allocation and coherence.
-Using the descriptive term "tone map."
-What is a LUT: fundamentally, conventionally, philosophically (including the philosophical and technical differences between a LUT and a grade). Compare ArriLUT to YedLUT for Alexa.
-The DP must understand the relation of light in front of the lens to the sensor response, then to the mapping of the camera format, then to the mapping for viewing. To optimize the camera and to accurately predict the resulting image after it goes through the pipeline, each step in the process must be known and understood discretely, without collapsing several steps. We'll look at this again after each successive step.
-Merely saying that it looked good on some set monitor is meaningless.
-Any initial densities can be pushed to filmic densities, but NOT using lift/gamma/gain (including the math of lift/gamma/gain).
-Let's get into some specific tests on cameras that can be done to understand this.
-Now we can see why scene-referenced versus display-referenced isn't really an either/or dichotomy so much as an emphasis on one of two active functions. It may have been more meaningful with old-style video cameras, which, unlike digital cinema cameras, used to have all kinds of image processing, including area shading and data clipping, that could not be inverted. (Also, analog signal processing prevented inverting with math expressions rather than hardware.)

Compression
-Uncompressed has a certain fixity: if you define your specifications, then the data size is fixed (the size of a DPX or TIFF file is predictable and meaningful). Compression untethers this fixity.
-Throwing data away and hoping no one notices. By their very definition, compressed formats do not meet their own spec (otherwise they wouldn't be compressed). So compression is a method of throwing data away.
-Compression formats are perceptual, so you can't tell how good they are by the numbers.
-There are two kinds of spatial compression (DCT and wavelet), and there's temporal compression.
-The problem with their being perceptual is that they may work for a simple test but fall apart for color correction, for green screen keying, and/or for use as a master format for subsequent compression layers.
-Successive compression steps are cumulative: it's the equivalent of duplication generations in analog.
-Some are more open than others, either publishing the compression/decompression algorithms or keeping them only for business partners. Red does not let you transcode TO its codec, so you can't test the codec separately from the camera.
-Coda: separating attributes of various steps in the pipeline.

Resolution versus Apparent Sharpness
-MTF versus resolution.
-Photosite count is only one factor in resolution (including compression falsifying resolution count; let's look at down-rezzing versus compressing).
-Green channel dominance and color subsampling.
-The fact that sharpening is used is proof that subpixel resolution is quackery.
-A real example where something called "5k" is only a tiny bit more resolute than "2k."
-At high nominal resolutions, how things are scaled and/or sharpened has more to do with the look than true resolution does.
-Noise, film grain, and perceptual sharpness.
-Coda: separating attributes of various steps in the pipeline.

Bayer vs RGB and "Raw" Nonsense
-What the two systems are.
-As we saw, green is most important for resolution, so weighting the real and limited sensor surface area and circuitry toward green makes sense in a camera. And RGB makes sense for file formats to manipulate: it resolves the spatial issues that will eventually need to be addressed with Bayer anyway. So it's not an issue of one being better; it's just complex.
-Confusion of naming conventions. Bayer and RGB are both good systems, but vendors use the confusion about the difference to cloud the meaning of specs. Notice that when we say "2k" or "4k" we almost never say the units: "k" is not a unit, it means "thousand." But Bayer and RGB are different units.
-"Raw" is a red herring. "Not-yet-debayered" is not necessarily a benefit. "Raw" is meant to evoke mystical adulation, but it's still a computer file with numbers in it. It doesn't mean you magically have access to more data than the file can store. "Raw" is not a magical incantation that makes a computer file no longer subject to the realities of how computer files work.
-Some "raw" formats are also uncompressed. Uncompressed is indeed an advantage over compressed, but the fact that some files are both raw and uncompressed does not mean that being raw is the advantage.

Video Range / Full Range
-It's too bad that this convention exists and that we have to cover it, but it is one of the most pervasive pitfalls for monitoring and workflow. It's so pervasive that this almost accidental thing is actually one of the most practically important topics in the seminar.
-Coda: separating attributes of various steps in the pipeline.

Spectral Response
-Let's not go too deep into this; it's a little off topic, but we'll explain the basics. Analogy to sound waves, where the multitudinous components are summed into a single amplitude.
-This is the complexity that cannot be inverted. Once many wavelengths have been summed together and coded as a single amplitude (or three), inverting is not possible. Three-channel data can be inverted to other three-channel data (as long as there is no clipping/clamping), but it cannot be inverted to the original multi-wavelength components.
-Coda: separating attributes of various steps in the pipeline.

Testing Cameras and Formats
-Separating the noise, latitude, and rating of a camera.
-Formats: pushing and prodding full-quality images with full-quality software, including re-compressing.
-Let's also think of some tricks to isolate variables when a device doesn't want you to (for example, a camera that has only compressed output with no option for uncompressed).

Thinking About Real Workflows and Not Falling for Big Numbers
-If you don't think about every step, it doesn't work, both for practicality and for quality. (A higher quality format can yield a worse end result if you're not properly prepared to handle the format downstream.)
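
The quantization-allocation argument under Density Characteristics (why linear is untenable for storage) comes down to simple counting. This minimal Python sketch counts how many integer code values each stop receives under two hypothetical 10-bit encodings: a plain linear mapping of light to code values, and an idealized log curve that spreads an assumed 14 stops evenly. Neither curve is any real camera's; the 14-stop figure and the function names are assumptions for illustration.

```python
# A minimal sketch of quantization allocation: integer code values per stop
# under two hypothetical 10-bit encodings (not any real camera's curves).
#   - linear: code = round(light * 1023), light in 0..1, 1.0 = clip
#   - log:    an idealized curve giving every stop an equal share

BITS = 10
MAX_CODE = (1 << BITS) - 1  # 1023
STOPS = 14                  # assumed dynamic range, for illustration only

def linear_codes_per_stop(stops):
    """Codes between light levels 2**-(n+1) and 2**-n, brightest stop first."""
    counts = []
    for n in range(stops):
        hi = round(MAX_CODE * 2.0 ** -n)
        lo = round(MAX_CODE * 2.0 ** -(n + 1))
        counts.append(hi - lo)
    return counts

def log_codes_per_stop(stops):
    """An idealized log encoding gives every stop the same slice of the range."""
    return [MAX_CODE // stops] * stops

if __name__ == "__main__":
    pairs = zip(linear_codes_per_stop(STOPS), log_codes_per_stop(STOPS))
    for n, (lin, log) in enumerate(pairs):
        print(f"stop {n + 1:2d} down from clip: linear {lin:4d}, log {log:3d}")
```

Under these assumptions the brightest stop alone takes 511 of the 1023 linear codes and the bottom three stops get none, while the idealized log curve gives every stop 73. That lopsidedness is why the outline reserves linear for calculations rather than storage.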
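
The video range / full range pitfall can also be shown with plain arithmetic. This minimal Python sketch assumes the common ITU-R BT.709 legal levels for RGB (and luma): black at code 16 and white at 235 in 8-bit, scaled by 2**(bits - 8) for higher bit depths, so 64 and 940 in 10-bit. It then shows what happens when a file is interpreted with the wrong convention. The function names are hypothetical.

```python
# A minimal sketch of video (legal) range versus full range code values.
# Assumption: BT.709-style legal levels, 16..235 in 8-bit, scaled by
# 2**(bits - 8) at higher bit depths. Function names are hypothetical.

def legal_levels(bits):
    """Code values for black and white in video (legal) range."""
    scale = 1 << (bits - 8)
    return 16 * scale, 235 * scale

def video_to_normalized(code, bits):
    """Interpret a code as video range: legal black -> 0.0, legal white -> 1.0."""
    black, white = legal_levels(bits)
    return (code - black) / (white - black)

def full_to_normalized(code, bits):
    """Interpret a code as full range: 0 -> 0.0, maximum code -> 1.0."""
    return code / ((1 << bits) - 1)

def clamp01(v):
    """Clip to the displayable 0..1 range."""
    return min(max(v, 0.0), 1.0)

if __name__ == "__main__":
    bits = 10
    black, white = legal_levels(bits)  # (64, 940)
    # Mistake 1: a video-range file read as full range -> milky blacks, dim whites.
    print(full_to_normalized(black, bits), full_to_normalized(white, bits))
    # Mistake 2: a full-range file read as video range -> shadows and highlights
    # beyond the legal codes are clipped away once clamped for display.
    print(clamp01(video_to_normalized(32, bits)))
    print(clamp01(video_to_normalized(1000, bits)))
```

The two mistakes are mirror images: one lifts black to about 6% and pulls white down to about 92%, the other crushes and clips. In both cases the camera did nothing wrong; the numbers were simply read with the wrong convention.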

Review Some Specific Devices: File Formats, Software, Cameras

-Image sequences versus clips.
-Wrappers versus media streams.
-DPX, EXR, TIFF, ProRes 4444, ProRes HQ, R3D, XAVC, H.264.
-Nuke, Shake, Adobe, color correctors.
-Alexa, film, Red Epic.
-Also color interchange: ICC, LUT, CDL.

Some Fallacies About Real Workflows
-"Colorbaked" nonsense.
-Compression and VFX: both file handling and keying issues.
-We're still not there for "offline is online" to really work, for multiple reasons.

Some Filmic Attributes for Color Correction
-Toe and shoulder.
-Crossover.
-Red goes toward yellow; red is the most idiosyncratic primary. (Maybe partially because it's a wider swath of the visible wavelengths and because it's the color that wraps around.)
-Max saturation is achieved earlier but at lower overall density. (Low density with high saturation is what gives the impression of low saturation.)

Politics: Communicating With Technicians and Creative People
-Speaking to emphasize a common goal, not departmental or individual needs.
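
The CDL listed under color interchange is a published ASC format whose math is short enough to write out: per-channel slope, offset and power, followed by one saturation control around Rec.709 luma. Slope, offset and power are close cousins of gain, lift and gamma, so this is also a compact look at the kind of math the outline refers to. The sketch below renders that math for a single pixel; it is not a reference implementation, the function names are hypothetical, and where to clamp is an assumption (implementations differ).

```python
# A minimal sketch of ASC CDL-style math on one RGB pixel.
# Per channel: out = (in * slope + offset) ** power, then saturation
# around Rec.709 luma. Clamping here is an assumption; real
# implementations differ in where and whether they clamp.

REC709_LUMA = (0.2126, 0.7152, 0.0722)

def apply_sop(rgb, slope, offset, power):
    """Slope, offset, power, channel by channel."""
    out = []
    for v, s, o, p in zip(rgb, slope, offset, power):
        x = max(v * s + o, 0.0)  # avoid a negative base with a fractional power
        out.append(x ** p)
    return tuple(out)

def apply_sat(rgb, sat):
    """Scale each channel's distance from the pixel's luma by `sat`."""
    luma = sum(w * c for w, c in zip(REC709_LUMA, rgb))
    return tuple(luma + sat * (c - luma) for c in rgb)

def apply_cdl(rgb, slope, offset, power, sat):
    """Slope/offset/power first, then saturation."""
    return apply_sat(apply_sop(rgb, slope, offset, power), sat)

if __name__ == "__main__":
    gray = (0.18, 0.18, 0.18)
    print(apply_cdl(gray, (1, 1, 1), (0, 0, 0), (1, 1, 1), 1.0))  # ~unchanged
    print(apply_cdl(gray, (1.1, 1.0, 0.9), (0, 0, 0), (1, 1, 1), 1.0))  # warmer
```

Because every operation is plain per-pixel arithmetic, a CDL can be handed between departments as ten numbers, which is a large part of its appeal as an interchange format.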
