Aca Unit1

SSM INSTITUTE OF ENGINEERING AND TECHNOLOGY

DEPARTMENT OF ELECTRONICS AND COMMUNICATION ENGINEERING

EC6009 ADVANCED COMPUTER ARCHITECTURE

COURSE MATERIAL

The study of the structure, behavior and design of computers

- John P Hayes

Prepared by: KARTHIK. S

Approved by: HOD (ECE)

DEPARTMENT OF ECE - SSMIET

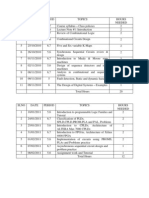

SYLLABUS:

UNIT 1 FUNDAMENTALS OF COMPUTER DESIGN

And now for something completely different.

- Monty Python's Flying Circus

1. INTRODUCTION:

The technical term for a PC is micro data processor. Computers have their roots 300 years back in history. Mathematicians and philosophers such as Pascal, Leibniz, Babbage and Boole laid the foundation with their theoretical work. Only in the second half of the twentieth century was electronics sufficiently developed to make practical use of their theories.

The modern PC has its roots in the USA in the 1940s. Among the many scientists involved, I would like to single out John von Neumann (1903-57), a Hungarian-born mathematician: his stored-program computer design is still used today. The great majority of the computers in daily use are general purpose machines. These are built with no specific application in mind; rather, they are capable of performing the computation needed by a diversity of applications. They are to be distinguished from machines built to serve (be tailored to) specific applications, which are known as special purpose machines.

Definitions:

Computer architecture may also be defined as the science and art of selecting and interconnecting hardware components to create computers that meet functional, performance and cost goals.

It is the arrangement of computer components and their relationships.

Computer architecture is the theory behind the design of a computer. In the same way that a building architect sets the principles and goals of a building project as the basis for the draftsman's plans, a computer architect sets out the computer architecture as the basis for the actual design specifications.

2. Architecture Vs Organization:

Architecture is the set of attributes visible to the programmer.

Examples: Does this processor have a multiply instruction?

How does the compiler create object code?

How is memory best handled by the O/S?

Organization is how those features are implemented.

Examples:

Is there a hardware multiply unit, or is multiplication done by repeated addition?

What type of memory is used to store the program code?

Figure 1 Computer Architecture

As Figure 1 suggests, a general purpose computer is like an island that spans the gap between the desired behavior (the application) and the basic building blocks (electronic devices); computer organization and architecture (COA) is what bridges that gap.
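The multiply example separates the two views neatly: the architecture only promises that a multiply produces the product, while the organization decides how it is computed. The Python sketch below contrasts two hypothetical organizations for unsigned multiplication, repeated addition and shift-and-add (the scheme a simple hardware multiplier follows); both return the same architecturally visible result.

```python
def multiply_repeated_addition(a: int, b: int) -> int:
    """Unsigned multiply by adding a to itself b times (b additions)."""
    result = 0
    for _ in range(b):
        result += a
    return result


def multiply_shift_add(a: int, b: int) -> int:
    """Unsigned shift-and-add: one addition per 1 bit of b, one shift per bit."""
    result = 0
    while b:
        if b & 1:          # lowest bit of the multiplier is set
            result += a    # add the shifted multiplicand
        a <<= 1            # multiplicand moves one bit position left
        b >>= 1            # examine the next multiplier bit
    return result


# Same architectural result, different organization (and cost)
print(multiply_repeated_addition(13, 11), multiply_shift_add(13, 11))  # 143 143
```

Repeated addition needs as many additions as the value of b, while shift-and-add needs at most one addition per bit of b, which is why hardware multipliers are organized around the second scheme.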

3. Basic Structure of Computer Hardware & Software:

The basic functional structure of a computer is shown in the figure.

Figure 2 Basic computer structure

Figure 3 Basic block diagram of a computer

The functional units of a computer are the arithmetic and logic unit (ALU), the control unit, the memory unit, the input unit and the output unit.

Arithmetic & Logic Unit:

It is the unit of the system where most of the computer's operations are carried out.

For example, to perform an arithmetic or logical operation, the required operands are fetched from memory into the processor, where the ALU carries out the operation.

The result may be stored in memory or retained in registers for immediate use.

Control Unit:

It is the unit that coordinates the operations of the ALU, memory, input and output units.

It is the nerve center of the computer system: it sends control signals to the other units.

Data transfers between the processor and memory are controlled by the control unit through timing signals.

Memory Unit:

The function of the memory unit is to store program and data.

Memory is organized as memory words, and each memory word has a unique address.

Memory words can be accessed sequentially or randomly.

Memory in which any word can be accessed directly by its address is referred to as random access memory (RAM). The time required to access a word is fixed, regardless of its location.

In sequential access memory the words are accessed one after another: word 4 can be accessed only after words 0, 1, 2 and 3 have been accessed.
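The difference in access cost can be sketched in Python. This is a minimal model, assuming (hypothetically) that a sequential memory must step past every earlier word to reach the requested one, while a random access memory reaches any word in a single step; real access times depend on the technology.

```python
def ram_access_steps(address: int) -> int:
    """Random access: the word is reached directly, so the cost is constant.

    `address` is deliberately unused: the cost does not depend on location.
    """
    return 1


def sequential_access_steps(address: int) -> int:
    """Sequential access: words 0 .. address-1 are passed before the target."""
    return address + 1


for addr in (0, 4, 1000):
    print(addr, ram_access_steps(addr), sequential_access_steps(addr))
```

For word 4 the sequential model needs 5 steps (words 0 to 4), while the random access model needs 1 step for any address.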

Output unit:

The main purpose of the output unit is to send the processed results to the external world.

Commonly used output units are printers and monitors.

Input unit:

Input devices accept information such as programs and data from the external world or from users.

Commonly used input devices are the keyboard, mouse and joystick.

Basic Operational Steps:

The following are the basic steps in executing a program:

The program is stored in memory through the input unit.

At the start of execution, the program counter (PC) points to the first instruction of the program.

The contents of the PC are transferred to the memory address register (MAR) and a read control signal is sent to memory.

After the memory access time has elapsed, the requested word is read out of memory and placed in the memory data register (MDR).

Next, the contents of the MDR are transferred to the instruction register (IR), and the instruction is now ready to be executed.

If the instruction involves an operation to be performed by the ALU, the operands must be obtained.

If an operand resides in memory, it is fetched by sending its address to the MAR and initiating a read cycle.

When the operand has been read from memory into the MDR, it is transferred from the MDR to the ALU.

After one or more operands have been fetched in this way, the ALU can perform the desired operation.

If the result of the operation is to be stored in memory, the result is sent to the MDR.

The address of the location where the result is to be stored is transferred to the MAR and a write cycle is initiated.

At some point during the execution of the current instruction, the contents of the PC are incremented so that the PC contains the address of the next instruction to be executed.

Figure 4 CPU with memory and I/O

As soon as the execution of the current instruction is completed, a new instruction fetch

may be started.
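The register transfers in the basic operational steps can be traced with a small simulator. This is a minimal sketch, not a real instruction set: the accumulator machine, its instructions (LOAD, ADD, STORE, HALT), their tuple encoding and the ACC register are all hypothetical, chosen only to show the PC, MAR, MDR and IR at work. Instructions and data share one memory, as in a stored-program computer.

```python
# Hypothetical accumulator machine: each instruction is (opcode, address).
def run(memory: dict) -> dict:
    regs = {"PC": 0, "MAR": 0, "MDR": None, "IR": None, "ACC": 0}
    while True:
        # Fetch: PC -> MAR, read cycle puts the word in MDR, MDR -> IR
        regs["MAR"] = regs["PC"]
        regs["MDR"] = memory[regs["MAR"]]
        regs["IR"] = regs["MDR"]
        regs["PC"] += 1  # PC now holds the address of the next instruction
        opcode, addr = regs["IR"]
        if opcode == "HALT":
            return memory
        if opcode in ("LOAD", "ADD"):
            # Operand fetch: operand address -> MAR, read cycle, MDR -> ALU
            regs["MAR"] = addr
            regs["MDR"] = memory[regs["MAR"]]
            if opcode == "LOAD":
                regs["ACC"] = regs["MDR"]
            else:
                regs["ACC"] = regs["ACC"] + regs["MDR"]  # ALU operation
        elif opcode == "STORE":
            # Result -> MDR, destination address -> MAR, write cycle
            regs["MDR"] = regs["ACC"]
            regs["MAR"] = addr
            memory[regs["MAR"]] = regs["MDR"]
        else:
            raise ValueError(f"unknown opcode {opcode}")


program = {
    0: ("LOAD", 10),
    1: ("ADD", 11),
    2: ("STORE", 12),
    3: ("HALT", None),
    10: 7,   # first operand
    11: 5,   # second operand
    12: 0,   # result location
}
print(run(program)[12])  # prints 12
```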

Bus Structure:

A bus is the group of lines that connects the CPU, memory and peripherals. The three types of buses are the address bus, the data bus and the control bus. Typically, a bus consists of from about 50 to several hundred separate lines, grouped into three main functional groups: data, address and control. There may also be power distribution lines for attached modules. The address bus is unidirectional because it carries only address information, from the CPU to memory. The data bus is bidirectional because it carries data both to and from the CPU. Earlier systems used multiple buses; the single bus structure shown in the figure is a simpler alternative.

Figure 5 Basic Bus structure

The single bus structure reduces the complexity of the bus connections, but it allows only one transfer between units at a time.

Basic computer functions:

The main functions performed by any computer system are:

Fetch

Decode

Execute

Store

The basic function performed by a computer is the execution of a program, which consists of a set of instructions stored in memory. The processor does the actual work by executing the instructions specified in the program. In its simplest form, instruction processing consists of two steps: the processor reads (fetches) instructions from memory one at a time, and executes each instruction. The processing required for a single instruction is called an instruction cycle. The basic instruction cycle is shown in Figure 6.

Figure 6 State diagram of fetch and execute cycle

The state diagram in Figure 7 gives a more detailed look at the instruction cycle.

Figure 7 Detailed state diagram of instruction cycle

4. The Changing Face of Computing:

In the 1960s, the dominant form of computing was on large mainframes: machines costing millions of dollars, housed in computer rooms with multiple operators overseeing their support.

The 1970s saw the birth of the minicomputer, a smaller machine initially focused on applications in scientific laboratories.

The 1980s saw the rise of the desktop computer based on microprocessors, in the form of both personal computers and workstations.

The 1990s saw the emergence of the Internet and the World Wide Web, the first successful handheld computing devices (personal digital assistants, or PDAs), and high-performance digital consumer electronics.

These changes have set the stage for a dramatic change in how we view computing,

computing applications, and the computer markets at the beginning of the millennium.

Figure 8 Growth in microprocessor performance

These changes in computer use have led to three different computing markets each

characterized by different applications, requirements, and computing technologies.

Desktop Computing:

Desktop computing spans from low-end systems that sell for under $1,000 to high-end, heavily configured workstations that may sell for over $10,000.

The desktop market tends to be driven to optimize price-performance.

Servers:

The role of servers is to provide larger-scale and more reliable file and computing services.

The emergence of the World Wide Web accelerated tremendous growth in demand for web servers and web-based services.

For servers, the key characteristics are:

Availability.

Scalability.

Efficient throughput (transactions per minute or web pages served per second).

Embedded Computers:

Embedded computers are lodged in other devices, where the presence of the computer is not immediately obvious.

Examples: microwaves, washing machines, palmtops, cell phones and smart cards.

Characteristics:

Performance needed at a minimum price.

Need to minimize memory.

Need to minimize power.

The Task of a Computer Designer:

The task the computer designer faces is a complex one: Determine what attributes are important

for a new machine, and then design a machine to maximize performance while staying within

cost and power constraints. This task has many aspects, including instruction set design,

functional organization, logic design, and implementation. The implementation may encompass

integrated circuit design, packaging, power, and cooling. Optimizing the design requires

familiarity with a very wide range of technologies, from compilers and operating systems to

logic design and packaging.

In the past, the term computer architecture often referred only to instruction set design. Other aspects of computer design were called implementation, often insinuating that implementation is uninteresting or less challenging. The architect's or designer's job is much more than instruction set design, and the technical hurdles in the other aspects of the project are certainly as challenging as those encountered in instruction set design. This challenge is particularly acute at present, when the differences among instruction sets are small and there are three rather distinct application areas.

Computer architects must design a computer to meet functional requirements as well as price,

power, and performance goals. Often, they also have to determine what the functional

requirements are, and this can be a major task.

5. Technology Trends:

The main computer architecture goals are

Rapid technological changes

Functional requirements

Cost & Price

Power

Performance

If an instruction set architecture is to be successful, it must be designed to survive rapid changes in computer technology. To plan for the evolution of a machine, the designer must be especially aware of rapidly occurring changes in implementation technology. Four implementation technologies, which change at a dramatic pace, are critical to modern implementations:

Integrated circuit logic technology: Transistor density increases by about 35% per year, quadrupling in somewhat over four years. Increases in die size are less predictable and slower, ranging from 10% to 20% per year. The combined effect is a growth rate in transistor count on a chip of about 55% per year. Device speed scales more slowly.

Semiconductor DRAM (dynamic random-access memory): Density increases by between 40% and 60% per year, quadrupling in three to four years. Cycle time has improved very slowly, decreasing by about one-third in 10 years. Bandwidth per chip increases about twice as fast as latency decreases. In addition, changes to the DRAM interface have also improved the bandwidth.

Magnetic disk technology: Recently, disk density has been improving by more than 100% per year, quadrupling in two years. Prior to 1990, density increased by about 30% per year, doubling in three years. It appears that disk technology will continue the faster density growth rate for some time to come. Access time has improved by one-third in 10 years.

Network technology: Network performance depends both on the performance of switches and on the performance of the transmission system. Both latency and bandwidth can be improved, though recently bandwidth has been the primary focus. For many years, networking technology appeared to improve slowly.
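The growth rates quoted for these technologies compound annually, so the time to double or quadruple follows from a logarithm. The Python sketch below is a minimal check of that arithmetic, assuming a constant yearly growth rate; the function names are hypothetical, and 15% is used as an assumed midpoint of the 10% to 20% die-size range.

```python
import math


def years_to_multiply(annual_rate: float, factor: float) -> float:
    """Years for a quantity growing at annual_rate (0.35 = 35%) to grow by `factor`."""
    return math.log(factor) / math.log(1.0 + annual_rate)


def combined_rate(*rates: float) -> float:
    """Yearly growth when several independent growth factors multiply together."""
    product = 1.0
    for r in rates:
        product *= 1.0 + r
    return product - 1.0


print(round(years_to_multiply(0.35, 4), 2))  # IC transistor density: 4.62 years to quadruple
print(round(years_to_multiply(0.40, 4), 2))  # DRAM at 40%/year: 4.12 years
print(round(years_to_multiply(0.60, 4), 2))  # DRAM at 60%/year: 2.95 years
print(round(years_to_multiply(0.30, 2), 2))  # pre-1990 disk: 2.64 years to double
print(combined_rate(0.35, 0.15))             # density x die size: roughly 0.55, i.e. ~55%/year
```

The results line up with the text: "somewhat over four years" for logic, "three to four years" for DRAM, and roughly three years for older disks.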

6. Cost, Price and their Trends:

Although there are computer designs where costs tend to be less important (specifically supercomputers), cost-sensitive designs are of growing importance: more than half the PCs sold in 1999 were priced at less than $1,000, and the average price of a 32-bit microprocessor for an embedded application is in the tens of dollars. Indeed, in the past 15 years, the use of technology improvements to achieve lower cost, as well as increased performance, has been a major theme in the computer industry.

Price is what you sell a finished good for, and cost is the amount spent to produce it, including overhead.

The Impact of Time, Volume, Commodification:

The cost of a manufactured computer component decreases over time even without major improvements in the basic implementation technology. The underlying principle that drives costs down is the learning curve: manufacturing costs decrease over time. The learning curve itself is best measured by the change in yield, the percentage of manufactured devices that survives the testing procedure. Whether it is a chip, a board, or a system, designs that have twice the yield will have basically half the cost.

Volume is a second key factor in determining cost. Increasing volumes affect cost in several

ways. First, they decrease the time needed to get down the learning curve, which is partly

proportional to the number of systems (or chips) manufactured. Second, volume decreases cost,

since it increases purchasing and manufacturing efficiency. As a rule of thumb, some designers

have estimated that cost decreases about 10% for each doubling of volume. Also, volume

decreases the amount of development cost that must be amortized by each machine, thus

allowing cost and selling price to be closer. We will return to the other factors influencing selling price shortly.
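The two rules of thumb in this subsection (cost per good part scales inversely with yield, and cost falls about 10% for each doubling of volume) can be combined in a short Python sketch. The base cost, volumes and yields used here are hypothetical numbers chosen only to illustrate the arithmetic.

```python
import math


def cost_per_good_unit(cost_per_unit_built: float, yield_fraction: float) -> float:
    """Every unit built is paid for, but only the fraction that passes test can be sold."""
    return cost_per_unit_built / yield_fraction


def cost_at_volume(base_cost: float, base_volume: float, volume: float,
                   drop_per_doubling: float = 0.10) -> float:
    """Rule of thumb: cost falls by drop_per_doubling for each doubling of volume."""
    doublings = math.log2(volume / base_volume)
    return base_cost * (1.0 - drop_per_doubling) ** doublings


# Hypothetical part that costs $100 to build
print(cost_per_good_unit(100.0, 0.25))                  # 400.0 at 25% yield
print(cost_per_good_unit(100.0, 0.50))                  # 200.0: twice the yield, half the cost
print(round(cost_at_volume(100.0, 10_000, 40_000), 2))  # two doublings of volume: 81.0
```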

Commodities are products that are sold by multiple vendors in large volumes and are essentially

identical. Virtually all the products sold on the shelves of grocery stores are commodities, as are

standard DRAMs, disks, monitors, and keyboards. In the past 10 years, much of the low end of the

computer business has become a commodity business focused on building IBM-compatible PCs.

There are a variety of vendors that ship virtually identical products and are highly competitive.

Cost of an Integrated Circuit:

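Integrated circuit costs are becoming a greater portion of the cost that varies between machines, especially in the high-volume, cost-sensitive portion of the market. Thus computer designers must understand the costs of chips to understand the costs of current computers. Although the costs of integrated circuits have dropped exponentially, the basic procedure of silicon manufacture is unchanged: a wafer is still tested and chopped into dies that are packaged (see Figure 9).

Figure 9 Wafer chopped into dies

Thus the cost of a packaged integrated circuit is

$$\text{Cost of IC} = \frac{\text{Cost of die} + \text{Cost of testing die} + \text{Cost of packaging and final test}}{\text{Final test yield}}$$

where

$$\text{Cost of die} = \frac{\text{Cost of wafer}}{\text{Dies per wafer} \times \text{Die yield}}$$

The number of dies per wafer is approximately the wafer area divided by the die area, less a correction for the partial dies along the edge of the wafer:

$$\text{Dies per wafer} = \frac{\pi \times (\text{Wafer diameter}/2)^2}{\text{Die area}} - \frac{\pi \times \text{Wafer diameter}}{\sqrt{2 \times \text{Die area}}}$$

Example:

Find the number of dies per 30-cm wafer for a die that is 0.7 cm on a side.

Solution:

The total die area is 0.49 cm², thus

$$\text{Dies per wafer} = \frac{\pi \times (30/2)^2}{0.49} - \frac{\pi \times 30}{\sqrt{2 \times 0.49}} = \frac{706.86}{0.49} - \frac{94.25}{0.99} \approx 1442.6 - 95.2 \approx 1347$$

But this only gives the maximum number of dies per wafer. The critical question is: what is the fraction or percentage of good dies on a wafer, or the die yield? A simple empirical model of integrated circuit yield, which assumes that defects are randomly distributed over the wafer and that yield is inversely proportional to the complexity of the fabrication process, leads to the following:

$$\text{Die yield} = \text{Wafer yield} \times \left(1 + \frac{\text{Defects per unit area} \times \text{Die area}}{\alpha}\right)^{-\alpha}$$

where wafer yield accounts for wafers that are completely bad and so need not be tested, and α is a parameter that corresponds inversely to the number of masking levels, a measure of manufacturing complexity, critical to die yield. For today's multilevel metal CMOS processes, a good estimate is α = 4.0.

Example:

Find the die yield for dies that are 1 cm on a side and 0.7 cm on a side, assuming a defect density of 0.6 per cm² and a wafer yield of 100%.

The total die areas are 1 cm² and 0.49 cm². For the larger die the yield is

$$\text{Die yield} = \left(1 + \frac{0.6 \times 1}{4.0}\right)^{-4} = 1.15^{-4} \approx 0.57$$

and for the smaller die it is

$$\text{Die yield} = \left(1 + \frac{0.6 \times 0.49}{4.0}\right)^{-4} = 1.0735^{-4} \approx 0.75$$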

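The dies-per-wafer and die-yield calculations in the examples can be checked numerically. This Python sketch evaluates dies per wafer = π × (diameter/2)² / die area − π × diameter / √(2 × die area), die yield = wafer yield × (1 + defects per unit area × die area / α)^(−α), and cost of die = wafer cost / (dies per wafer × die yield). The function names are hypothetical, and any wafer cost passed to `cost_of_die` would have to come from a real process.

```python
import math


def dies_per_wafer(wafer_diameter_cm: float, die_area_cm2: float) -> int:
    """Wafer area / die area, minus the partial dies lost around the edge."""
    wafer_area = math.pi * (wafer_diameter_cm / 2) ** 2
    edge_loss = math.pi * wafer_diameter_cm / math.sqrt(2 * die_area_cm2)
    return int(wafer_area / die_area_cm2 - edge_loss)


def die_yield(defects_per_cm2: float, die_area_cm2: float,
              alpha: float = 4.0, wafer_yield: float = 1.0) -> float:
    """Empirical yield model; alpha reflects manufacturing complexity."""
    return wafer_yield * (1 + defects_per_cm2 * die_area_cm2 / alpha) ** (-alpha)


def cost_of_die(wafer_cost: float, dies: int, yield_fraction: float) -> float:
    """Wafer cost spread over the good dies only."""
    return wafer_cost / (dies * yield_fraction)


print(dies_per_wafer(30, 0.49))        # 1347 for a 0.7 cm die on a 30 cm wafer
print(round(die_yield(0.6, 1.0), 2))   # 0.57 for the 1 cm die
print(round(die_yield(0.6, 0.49), 2))  # 0.75 for the 0.7 cm die
```

Because die yield falls as die area grows, a larger die costs more than proportionally: fewer dies fit on the wafer and a smaller fraction of them work.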

Cost Versus Price: Why They Differ and By How Much:

Costs of components may confine a designer's desires, but they are still far from representing what the customer must pay. Cost goes through a number of changes before it becomes price, and the computer designer should understand how a design decision will affect the potential selling price. For example, changing cost by $1000 may change price by $3000 to $4000. Without understanding the relationship of cost to price, the computer designer may not understand the impact on price of adding, deleting, or replacing components.

Figure 10 Cost performance

Direct costs: the costs directly related to making a product. These include labor costs, purchasing components, scrap (the leftover from yield) and warranty.

Gross margin: the company's overhead that cannot be billed directly to one product.

Indirect costs: these make up the gross margin and include the company's research and development (R&D), marketing, sales, manufacturing equipment maintenance, building rental, cost of financing, pretax profits and taxes.

Average selling price: the money that comes directly to the company for each product sold.

List price: the price the company quotes; because companies offer volume discounts, the average selling price is lower than the list price.
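The chain from component cost to list price can be sketched in Python. This is a minimal model under stated assumptions: direct costs are taken as a fraction of component cost, gross margin as a fraction of the average selling price, and the discount as a fraction of the list price. The percentages below are hypothetical, picked only to show how a $1000 change in cost can become a change of several thousand dollars in price.

```python
def list_price(component_cost: float, direct_frac: float,
               gross_margin_frac: float, discount_frac: float) -> float:
    """Walk from component cost to list price (all fractions are assumptions)."""
    cost_with_direct = component_cost * (1 + direct_frac)           # add direct costs
    avg_selling_price = cost_with_direct / (1 - gross_margin_frac)  # margin is a share of ASP
    return avg_selling_price / (1 - discount_frac)                  # discount is a share of list


# Hypothetical markups: 20% direct costs, 50% gross margin, 40% average discount
delta = list_price(1000.0, 0.20, 0.50, 0.40)
print(round(delta))  # 4000: a $1000 cost increase adds about $4000 to list price
```

Because every step is a multiplication, the model is linear: the price change caused by a cost change is the cost change times the same overall factor (4.0 with these hypothetical markups).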

Measuring and Reporting Performance:

When we say one computer is faster than another, what do we mean? The user of a desktop machine may say a computer is faster when a program runs in less time, while the computer center manager running a large server system may say a computer is faster when it completes more jobs in an hour. The computer user is interested in reducing response time, the time between the start and the completion of an event, also referred to as execution time. The manager of a large data processing center may be interested in increasing throughput, the total amount of work done in a given time.

In comparing design alternatives, we often want to relate the performance of two different machines, say X and Y. The phrase "X is faster than Y" is used here to mean that the response time or execution time is lower on X than on Y for the given task. In particular, "X is n times faster than Y" will mean

$$\frac{\text{Execution time}_Y}{\text{Execution time}_X} = n$$

Since execution time is the reciprocal of performance, the following relationship holds:

$$n = \frac{\text{Execution time}_Y}{\text{Execution time}_X} = \frac{1/\text{Performance}_Y}{1/\text{Performance}_X} = \frac{\text{Performance}_X}{\text{Performance}_Y}$$

The phrase "the throughput of X is 1.3 times higher than Y" signifies here that the number of tasks completed per unit time on machine X is 1.3 times the number completed on Y. Because performance and execution time are reciprocals, increasing performance decreases execution time.
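The definition of "X is n times faster than Y" reduces to n = Execution time_Y / Execution time_X, which equals Performance_X / Performance_Y. A minimal Python sketch, using hypothetical timings for machines X and Y:

```python
def times_faster(exec_time_x: float, exec_time_y: float) -> float:
    """n such that 'X is n times faster than Y' = Execution time_Y / Execution time_X."""
    return exec_time_y / exec_time_x


def performance(exec_time: float) -> float:
    """Performance is the reciprocal of execution time."""
    return 1.0 / exec_time


# Hypothetical: the task takes 10 s on X and 15 s on Y
n = times_faster(10.0, 15.0)
print(n)  # 1.5
# The same ratio expressed through performance: Performance_X / Performance_Y
print(performance(10.0) / performance(15.0))
```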

Measuring Performance:

The most straightforward definition of time is called wall-clock time, response time, or elapsed time, which is the latency to complete a task, including disk accesses, memory accesses, input/output activities and operating system overhead: everything. With multiprogramming, the CPU works on another program while waiting for I/O and may not necessarily minimize the elapsed time of one program. Hence we need a term that takes this activity into account. CPU time recognizes this distinction and means the time the CPU is computing, not including the time spent waiting for I/O or running other programs. (Clearly, the response time seen by the user is the elapsed time of the program, not the CPU time.) CPU time can be further divided into the CPU time spent in the program, called user CPU time, and the CPU time spent in the operating system performing tasks requested by the program, called system CPU time.
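Python's standard library exposes these separate notions of time, which makes the distinction easy to observe. In this sketch `time.perf_counter()` approximates wall-clock (elapsed) time, `time.process_time()` gives the process's CPU time, and `os.times()` splits CPU time into user and system components. The `time.sleep` call is a stand-in for waiting on I/O, and the workload size is arbitrary.

```python
import os
import time


def busy_work(n: int) -> int:
    """CPU-bound loop: consumes user CPU time."""
    total = 0
    for i in range(n):
        total += i * i
    return total


def measure(n: int, wait_s: float) -> dict:
    wall_start = time.perf_counter()
    cpu_start = time.process_time()
    t0 = os.times()
    busy_work(n)
    time.sleep(wait_s)  # elapsed time passes, but the CPU does no work for this process
    t1 = os.times()
    return {
        "elapsed": time.perf_counter() - wall_start,
        "cpu": time.process_time() - cpu_start,
        "user": t1.user - t0.user,
        "system": t1.system - t0.system,
    }


result = measure(200_000, 0.2)
print({k: round(v, 3) for k, v in result.items()})
```

Elapsed time includes the 0.2 s of waiting while CPU time does not, so CPU time is expected to be noticeably smaller; the exact figures depend on the machine.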

There are five levels of programs used as benchmarks to evaluate performance, listed below in decreasing order of accuracy of prediction.

Real applications

Real applications have input, output, and options that a user can select when running the program.

Real applications often encounter portability problems arising from dependences on the operating system or compiler.

Modified (or scripted) applications

Applications are modified for two primary reasons: to enhance portability or to focus on one particular aspect of system performance.

For example, to create a CPU-oriented benchmark, I/O may be removed or restructured to minimize its impact on execution time.

Kernels

Kernels are small, key pieces extracted from real programs. They are best used to isolate the performance of individual features of a machine, to explain the reasons for differences in performance of real programs.

Toy benchmarks

Toy benchmarks are typically between 10 and 100 lines of code and produce a result the user already knows before running the toy program.

Examples include Puzzle and Quicksort.

Synthetic benchmarks

Synthetic benchmarks try to match the average frequency of operations and

operands of a large set of programs.
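To make the toy-benchmark level concrete, here is a minimal Quicksort toy benchmark in Python: a few dozen lines whose correct result (a sorted list) is known before it runs, so only the timing is of interest. It is an illustration, not a standardized benchmark; the input size and random seed are arbitrary.

```python
import random
import time


def quicksort(items: list) -> list:
    """Simple (not in-place) Quicksort using the middle element as pivot."""
    if len(items) <= 1:
        return items
    pivot = items[len(items) // 2]
    less = [x for x in items if x < pivot]
    equal = [x for x in items if x == pivot]
    greater = [x for x in items if x > pivot]
    return quicksort(less) + equal + quicksort(greater)


def run_toy_benchmark(n: int = 50_000, seed: int = 1) -> float:
    rng = random.Random(seed)
    data = [rng.randint(0, 1_000_000) for _ in range(n)]
    start = time.perf_counter()
    result = quicksort(data)
    elapsed = time.perf_counter() - start
    # The answer is known in advance, which is why toy benchmarks say little
    # about real workloads: they only measure speed on a tiny program.
    assert result == sorted(data)
    return elapsed


print(f"Quicksort toy benchmark: {run_toy_benchmark():.3f} s")
```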

7. Dependability:

Infrastructure providers offer Service Level Agreements (SLAs) or Service Level Objectives (SLOs) to guarantee that their networking or power services will be dependable.

Systems alternate between two states of service with respect to an SLA:

1. Service accomplishment, where the service is delivered as specified in the SLA

2. Service interruption, where the delivered service is different from the SLA

Failure = transition from state 1 to state 2

Restoration = transition from state 2 to state 1

The two main measures of dependability are module reliability and module availability.

Module reliability is a measure of continuous service accomplishment (or time to failure) from a reference initial instant.

1. Mean Time To Failure (MTTF) measures reliability.

2. The reciprocal of MTTF is the rate of failures, usually reported as failures per billion (10^9) hours of operation, or FIT (Failures In Time).

Mean Time To Repair (MTTR) measures service interruption.

Mean Time Between Failures (MTBF) = MTTF + MTTR

Module availability is a measure of service accomplishment with respect to the alternation between the two states of accomplishment and interruption:

Module availability = MTTF / (MTTF + MTTR)
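These dependability relationships translate directly into a few lines of Python. The MTTF and MTTR figures used below are hypothetical, chosen only to show the arithmetic; FIT is computed as failures per 10^9 hours.

```python
HOURS_PER_BILLION = 1e9


def fit(mttf_hours: float) -> float:
    """Failure rate expressed as failures per billion hours of operation."""
    return HOURS_PER_BILLION / mttf_hours


def mtbf(mttf_hours: float, mttr_hours: float) -> float:
    """Mean time between failures = MTTF + MTTR."""
    return mttf_hours + mttr_hours


def availability(mttf_hours: float, mttr_hours: float) -> float:
    """Module availability = MTTF / (MTTF + MTTR)."""
    return mttf_hours / (mttf_hours + mttr_hours)


# Hypothetical module: MTTF of 1,000,000 hours, 24 hours to repair
print(fit(1_000_000))                         # 1000.0 FIT
print(mtbf(1_000_000, 24))                    # 1000024 hours
print(round(availability(1_000_000, 24), 6))  # 0.999976
```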

8. Quantitative Principles of Computer Design:

The following principles can be exploited to enhance performance when designing a computer.

Parallelism:

It is one of the most important methods for improving performance.

One of the simplest ways to exploit it is pipelining, that is, overlapping the execution of instructions to reduce the total time to complete an instruction sequence.

Parallelism can also be exploited at the level of detailed digital design.

Set-associative caches use multiple banks of memory that are typically searched in parallel.

Carry-lookahead adders use parallelism to speed up the process of computing sums.
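The benefit of pipelining can be estimated with the usual idealized count: with k stages of one clock cycle each and no stalls, n instructions take n × k cycles unpipelined but only k + (n − 1) cycles pipelined, because a new instruction enters the pipeline every cycle once it is full. The Python sketch below assumes that ideal case; real pipelines lose some of this gain to hazards.

```python
def unpipelined_cycles(stages: int, instructions: int) -> int:
    """Each instruction passes through all stages before the next one starts."""
    return stages * instructions


def pipelined_cycles(stages: int, instructions: int) -> int:
    """Fill the pipeline once (stages cycles), then finish one instruction per cycle."""
    return stages + (instructions - 1)


k, n = 5, 100  # hypothetical 5-stage pipeline running 100 instructions
print(unpipelined_cycles(k, n))  # 500
print(pipelined_cycles(k, n))    # 104
print(round(unpipelined_cycles(k, n) / pipelined_cycles(k, n), 2))  # 4.81
```

As n grows, the speedup approaches k, the number of stages.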

Principle of locality:

Programs tend to reuse data and instructions they have used recently. A widely used rule of thumb is that a program spends 90% of its execution time in only 10% of the code. As a result, it is possible to predict with reasonably good accuracy what instructions and data a program will use in the near future based on its accesses in the recent past.
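Locality is what makes caches effective. The sketch below simulates a small fully associative cache with least-recently-used (LRU) replacement, a hypothetical configuration chosen for illustration, and compares a loop that keeps reusing the same 10 addresses with a trace of random addresses drawn from a large range.

```python
import random
from collections import OrderedDict


def lru_hit_rate(trace, capacity: int) -> float:
    """Fraction of accesses that hit in a fully associative LRU cache."""
    cache = OrderedDict()
    hits = 0
    for addr in trace:
        if addr in cache:
            hits += 1
            cache.move_to_end(addr)        # mark as most recently used
        else:
            cache[addr] = True
            if len(cache) > capacity:
                cache.popitem(last=False)  # evict the least recently used entry
    return hits / len(trace)


loop_trace = list(range(10)) * 100         # 1000 accesses to the same 10 addresses
rng = random.Random(0)
random_trace = [rng.randrange(10_000) for _ in range(1000)]

print(lru_hit_rate(loop_trace, 16))    # 0.99: only the first 10 accesses miss
print(lru_hit_rate(random_trace, 16))  # close to 0: no locality to exploit
```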

Focus on the common case:

While making a design trade-off, favor the frequent case over the infrequent case. This principle applies when determining how to spend resources, since the impact of an improvement is higher if the case it improves occurs frequently.

Amdahl's Law:

Amdahl's law is used to find the performance gain that can be obtained by improving some portion or functional unit of a computer. Amdahl's law defines the speedup that can be gained by using a particular feature.

Speedup is the ratio of the performance for the entire task using the enhancement when possible to the performance for the entire task without using the enhancement. Since execution time is the reciprocal of performance, speedup can equivalently be defined as the ratio of the execution time for the entire task without using the enhancement to the execution time for the entire task using the enhancement when possible. Speedup from some enhancement depends on two factors:

i. The fraction of the computation time in the original computer that can be converted to take advantage of the enhancement (Fraction_enhanced). This fraction is always less than or equal to 1.

Example: If 15 seconds of the execution time of a program that takes 50 seconds in total can use an enhancement, the fraction is 15/50 or 0.3.

ii. The improvement gained by the enhanced execution mode, that is, how much faster the task would run if the enhanced mode were used for the entire program (Speedup_enhanced). Speedup_enhanced is the time of the original mode over the time of the enhanced mode and is always greater than 1.
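The two factors combine into the standard statement of Amdahl's law: the new execution time is Execution time_old × ((1 − Fraction_enhanced) + Fraction_enhanced / Speedup_enhanced), so the overall speedup is the reciprocal of the bracketed term. The Python sketch below evaluates it for the 15 s out of 50 s example, assuming (hypothetically) that the enhanced mode is 10 times faster.

```python
def amdahl_speedup(fraction_enhanced: float, speedup_enhanced: float) -> float:
    """Overall speedup = 1 / ((1 - F) + F / S)."""
    if not 0.0 <= fraction_enhanced <= 1.0:
        raise ValueError("fraction_enhanced must be between 0 and 1")
    if speedup_enhanced <= 0:
        raise ValueError("speedup_enhanced must be positive")
    return 1.0 / ((1.0 - fraction_enhanced) + fraction_enhanced / speedup_enhanced)


fraction = 15 / 50                               # 0.3, from the example
print(round(amdahl_speedup(fraction, 10), 3))    # 1.37 with a 10x enhancement
print(round(amdahl_speedup(fraction, 1e12), 3))  # approaches 1 / 0.7 = 1.429
```

Even an enormous Speedup_enhanced cannot push the overall speedup past 1 / (1 − Fraction_enhanced), which is the quantitative reason to focus on the common case.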

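The Processor Performance Equation:

Essentially all processors are driven by a clock running at a constant rate. These discrete time events are called clock ticks or clock cycles. CPU time for a program can then be expressed as

$$\text{CPU time} = \text{CPU clock cycles for a program} \times \text{Clock cycle time} = \frac{\text{CPU clock cycles for a program}}{\text{Clock rate}}$$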
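As a quick check of CPU time = clock cycles × clock cycle time = clock cycles / clock rate, the Python sketch below computes CPU time both ways; the cycle count and the 2 GHz clock are hypothetical values.

```python
def cpu_time_from_rate(clock_cycles: int, clock_rate_hz: float) -> float:
    """CPU time = clock cycles / clock rate."""
    return clock_cycles / clock_rate_hz


def cpu_time_from_cycle_time(clock_cycles: int, cycle_time_s: float) -> float:
    """CPU time = clock cycles x clock cycle time."""
    return clock_cycles * cycle_time_s


cycles = 10**10  # hypothetical program needing 10 billion clock cycles
rate = 2e9       # hypothetical 2 GHz clock, i.e. a 0.5 ns cycle time
print(cpu_time_from_rate(cycles, rate))            # 5.0 seconds
print(cpu_time_from_cycle_time(cycles, 1 / rate))  # same result, up to rounding
```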


- Automated Mobile Testing Using AppiumDokumen34 halamanAutomated Mobile Testing Using Appiumsaravana67Belum ada peringkat

- AZ-300.examcollection - Premium.exam.288q: Number: AZ-300 Passing Score: 800 Time Limit: 120 Min File Version: 15.0Dokumen285 halamanAZ-300.examcollection - Premium.exam.288q: Number: AZ-300 Passing Score: 800 Time Limit: 120 Min File Version: 15.0Sandeep MadugulaBelum ada peringkat

- Create Cluster Objects by Using Imperative CommandsDokumen7 halamanCreate Cluster Objects by Using Imperative CommandsRani KumariBelum ada peringkat

- All About Monitors: Beginner WorkshopDokumen29 halamanAll About Monitors: Beginner WorkshopjakeBelum ada peringkat

- PHP IniDokumen35 halamanPHP IniAITALI MohamedBelum ada peringkat

- SimcenterDokumen2 halamanSimcenterNfotec D4Belum ada peringkat

- UNIT IV Normalization PDFDokumen18 halamanUNIT IV Normalization PDFAryanBelum ada peringkat

- FR8280 DatasheetDokumen9 halamanFR8280 DatasheetRobertBelum ada peringkat

- AJP (22517) Unit Test 2 CMIF5I 2022-23Dokumen4 halamanAJP (22517) Unit Test 2 CMIF5I 2022-23Dhaval SarodeBelum ada peringkat

- OKA40i-C-docker Environment GuidelineDokumen4 halamanOKA40i-C-docker Environment GuidelineRaja MustaqeemBelum ada peringkat

- Rendering With AutoCAD Using NXtRender - Albert HartDokumen150 halamanRendering With AutoCAD Using NXtRender - Albert HartJoemarie MartinezBelum ada peringkat

- Linux 2Dokumen47 halamanLinux 2viraj14Belum ada peringkat

- ZM-Ve350 Manual 150528 0Dokumen6 halamanZM-Ve350 Manual 150528 0Игорь ИвановBelum ada peringkat

- Usb Cam LogDokumen3 halamanUsb Cam Logﹺ ﹺ ﹺ ﹺBelum ada peringkat

- System Development & SDLCDokumen9 halamanSystem Development & SDLCproshanto salmaBelum ada peringkat

- Creating PDF Files Using OOTB Tools PDFDokumen18 halamanCreating PDF Files Using OOTB Tools PDFch_deepakBelum ada peringkat

- Cse 370 Spring 2006 Introduction To Digital Design Lecture 23: Factoring Fsms Design ExamplesDokumen7 halamanCse 370 Spring 2006 Introduction To Digital Design Lecture 23: Factoring Fsms Design ExamplesYASH MISHRABelum ada peringkat

- Easergy P3 Protection Relays - REL52004Dokumen6 halamanEasergy P3 Protection Relays - REL52004aditya agasiBelum ada peringkat

- Unit3 Part 2Dokumen15 halamanUnit3 Part 2Husam Abu ZourBelum ada peringkat

- Technology and Livelihood Education: Ict-Computer Hardware ServicingDokumen21 halamanTechnology and Livelihood Education: Ict-Computer Hardware ServicingJanbrilight GuevarraBelum ada peringkat

- A Maturity Model For Assessing Industry 4.0 Readiness and Maturity of Manufacturing EnterprisesDokumen7 halamanA Maturity Model For Assessing Industry 4.0 Readiness and Maturity of Manufacturing EnterprisesAB1984Belum ada peringkat

- Transfer Credit Processing20091012Dokumen44 halamanTransfer Credit Processing20091012dwi kristiawanBelum ada peringkat

- ONLINE CRIME MANAGEMENT SYSTEMDokumen18 halamanONLINE CRIME MANAGEMENT SYSTEMShalabh Nigam100% (1)

- Ministry of Corporate Affairs - MCA ServicesDokumen1 halamanMinistry of Corporate Affairs - MCA ServicesNipun MakwanaBelum ada peringkat

- PHPDokumen26 halamanPHPqwrr rewqBelum ada peringkat

- Mobile Programming: Engr. Waqar AhmedDokumen20 halamanMobile Programming: Engr. Waqar AhmedSajid AliBelum ada peringkat

- BIM Documentation (ISO 19650)Dokumen48 halamanBIM Documentation (ISO 19650)Daniyal Wani100% (1)

- E-BANKING-ONE-STOPDokumen11 halamanE-BANKING-ONE-STOPBalaraman Gnanam.sBelum ada peringkat

- Unit 30 - Assignment 1Dokumen3 halamanUnit 30 - Assignment 1BSCSComputingIT100% (1)