Bionic Glass

2006 10th International Workshop on Cellular Neural Networks and Their Applications, Istanbul, Turkey, 28-30 August 2006

Color Processing in Wearable Bionic Eyeglass

Robert Wagner*, Mihaly Szuhaj†
*Peter Pazmany Catholic University, wagnerr@sztaki.hu
†Hungarian National Association of Blind and Visually Impaired People, Budapest, Hungary

Abstract - This paper presents color processing tasks of the bionic eyeglass project, which helps blind people to gather chromatic information. A color recognition method is presented, which specifies the colors of the objects on the scene. The method adapts to varying illumination and smooth shadow on the objects. It performs local adaptation on the intensity and global chromatic adaptation. Another method is also shown that improves the extraction of display data based on chromatic information.

Index Terms - Color processing, image segmentation, guiding blind people.

I. INTRODUCTION

Despite the impressive advances related to retinal prostheses, there is no imminent promise that they will soon be available with a realistic performance to help blind or visually impaired people navigate in everyday life. In the Bionic Eyeglass project we are designing a wearable visual sensing-processing system that helps blind and visually impaired people to gather information about the surrounding environment. The system is designed and implemented using the Cellular Wave Computing principle and the adaptive Cellular Nonlinear Network (CNN) Universal Machine architecture [1], [2]. Similarly to the Bi-i architecture ([3]), it will have an accompanying digital platform, in this case a mobile phone, which will provide binary and logic operations.

This paper deals with the color-related tasks of the Bionic Eyeglass project. A large part of the color processing operations is more suitable for digital hardware because of the sophisticated nonlinear transformations between color spaces. On the other hand, many operations require local processing. These are computationally intensive, but are performed fast on an analog processor array. The chosen CNN architecture has the advantage of large computing power and the ability to perform image processing tasks, which comes from its topographic and parallel structure. Using this architecture we can exploit the fact that mammalian retinal processes were successfully implemented on it ([4]), which enables us to realize retina-like methods.

In section II we write about the role of color processing in the wearable eyeglass for blind people project. In section III we describe the color specification algorithm, in section IV the extraction of displays based on color information, and in section V the planned architecture.

II. COLOR PROCESSING FOR BLIND PEOPLE

In the Bionic Eyeglass project there are two main applications of color processing:
1) Determination of the color of the scene.
2) Filtering based on color to extract non-color-related information (e.g. displays).

The first task is considered despite the fact that blind people do not perceive color, because the color information of some objects is important for them. A good example of such an application is the color of clothes. Although blind people have little notion of color, the color of clothes matters to them so that they do not put on clothes of unmatched colors. Our task is to determine the color of the piece of clothing recorded by the camera. Besides the color, we have to determine the texture of the cloth, since this can also determine whether two clothes match. At the next stage of the project, after determining the color, we will classify the texture into one of the following categories: uniform, striped, checkered and polka-dotted.

The second task differs from the first one in that the focus is not the color of a given object; we use color to ease the extraction of other useful information. A good example of this is the reading of displays. Usually displays can be identified by their larger intensity; however, the use of chromatic information helps to distinguish them from natural light sources. We take advantage of their color although we are not interested in what color they have.

III. COLOR SPECIFICATION

Our aim is to determine the color of the objects as seen on an RGB image. Our system will provide the color names and their location inside the image. The algorithm consists of color space transformation, luminance adaptation, clustering, white balance, color-naming and location-specifying steps. The flowchart of the algorithm can be seen on fig. 1 and the steps are described in sections III-A to III-E.

A. Luv Color Space

The specification of the colors and the subsequent computations are done in the CIE-Luv space ([5]). The Luv space has the property that the Euclidean difference of the L,u*,v* coordinates of two colors is closer to the perceptual difference between them than that of the R,G,B coordinates.

1-4244-0640-4/06/$20.00 2006 IEEE


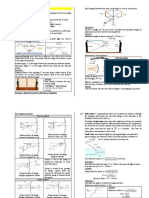

Fig. 2. Luminance adaptation. a.) shows the input picture taken by a standard digital camera. b.) is the adapted result. We can see that the differences in the illumination are reduced.

$$L'(i,j) = L(i,j) \cdot \frac{\mathrm{mean}(L)}{\bar{L}(i,j)} \qquad (1)$$

Fig. 1. Processing steps of the color specification algorithm. Tick boxes show operations that will be implemented or partially implemented on CNN architecture.

In order to compute the L,u*,v* values we converted the R,G,B values to X,Y,Z and then to L,u*,v*. The first transformation is linear, the second is nonlinear. The detailed description can be found in [6]. We can also use the CIE-Lab color space ([5]), with which we had similar results. However, its computation requires the calculation of a cube root for each coordinate, while the u*,v* chromatic values need only division.

Both the Lab and Luv color spaces have the advantage that the L coordinate represents the luminance information and the remaining two coordinates the chromatic information. This enables us to deal with the luminance separately and reduce the luminance differences due to illumination changes. This part of the algorithm is explained in detail in section III-B.
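The two-step conversion (a linear R,G,B to X,Y,Z step followed by a nonlinear step to L,u*,v*) might be sketched as follows. This is only an illustration using the standard CIE 1976 formulas. The matrix (sRGB primaries, D65 white point), the assumption of linear RGB values in [0, 1] and all function names are choices made for this sketch and are not taken from the paper.

```python
# Assumption: linear RGB in [0, 1] with sRGB primaries and a D65 white point.
RGB_TO_XYZ = (
    (0.4124564, 0.3575761, 0.1804375),
    (0.2126729, 0.7151522, 0.0721750),
    (0.0193339, 0.1191920, 0.9503041),
)


def rgb_to_xyz(rgb):
    """Linear step: multiply the RGB triple by the 3x3 matrix."""
    return tuple(sum(m * c for m, c in zip(row, rgb)) for row in RGB_TO_XYZ)


def _uv_prime(xyz):
    """CIE 1976 u', v' chromaticities; only divisions are needed here."""
    x, y, z = xyz
    denom = x + 15.0 * y + 3.0 * z
    if denom == 0.0:  # black pixel: chromaticity is undefined, use 0
        return 0.0, 0.0
    return 4.0 * x / denom, 9.0 * y / denom


# Reference white derived from the same matrix, so RGB white maps to L = 100.
WHITE_XYZ = rgb_to_xyz((1.0, 1.0, 1.0))
WHITE_UV = _uv_prime(WHITE_XYZ)


def rgb_to_luv(rgb):
    """Nonlinear step: XYZ to (L, u*, v*) relative to the reference white."""
    xyz = rgb_to_xyz(rgb)
    y_rel = xyz[1] / WHITE_XYZ[1]
    if y_rel > (6.0 / 29.0) ** 3:
        lightness = 116.0 * y_rel ** (1.0 / 3.0) - 16.0
    else:
        lightness = (29.0 / 3.0) ** 3 * y_rel
    u_p, v_p = _uv_prime(xyz)
    u = 13.0 * lightness * (u_p - WHITE_UV[0])
    v = 13.0 * lightness * (v_p - WHITE_UV[1])
    return lightness, u, v
```

With these assumptions, white maps to L = 100 with zero chromaticity, so the L coordinate can be processed separately from u* and v*.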

B. Luminance Adaptation

Color determination is relatively easy if everything is ideal: we have reference illumination and the illumination is constant over the scene. However, in most cases this is not satisfied, especially not with blind people, who can only guess the illumination conditions while taking the picture. We plan our system to cope with smooth illumination changes, which means gradual changes over the whole scene. Such changes can be filtered out by eliminating the differences in the spatial low-pass component of the intensity. In the Luv space the L value carries the information about the intensity, so during luminance adaptation we change only the L values. At each pixel we perform the operation (1), where (i, j) are the coordinates of the pixel, L(i, j) is the luminance value of the pixel, $\bar{L}(i, j)$ is the value of the low-pass filtered luminance at the pixel and mean(L) is the mean of the intensity over the whole image. This modification changes the pixel value according to the local average around it ($\bar{L}(i, j)$). If the local average is smaller than the mean of the image (which is also the mean of the local average), then we enhance the pixel, otherwise we reduce its value. Hence the luminance differences between the regions are reduced. This works similarly to [7]. An example can be seen on fig. 2.

We applied a strong low-pass filtering, so that only the very low-frequency components are eliminated during the adaptation. The low-pass component was calculated using a diffusion ([12]) that is equivalent to a Gaussian convolution with a sigma parameter of σ = 0.7 * image width. Such a very low-frequency low-pass component does not necessarily eliminate all the illumination differences, but it has the advantage that only the illumination differences are reduced. The texture information is maintained because it changes more abruptly over the scene.
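A minimal sketch of the luminance adaptation (1) is given below. It replaces the CNN diffusion with a separable Gaussian convolution in plain Python, which is an assumption made for illustration. The border handling (replicating edge pixels), the kernel truncation at 3 sigma, the `eps` guard against division by zero and all function names are likewise hypothetical.

```python
import math


def gaussian_kernel(sigma):
    """Normalized 1-D Gaussian kernel, truncated at 3 sigma (assumption)."""
    radius = max(1, int(3 * sigma))
    weights = [math.exp(-0.5 * (k / sigma) ** 2) for k in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur_rows(image, kernel):
    """Convolve every row with the kernel, replicating the border pixels."""
    radius = len(kernel) // 2
    out = []
    for row in image:
        n = len(row)
        out.append([
            sum(w * row[min(max(j + k - radius, 0), n - 1)]
                for k, w in enumerate(kernel))
            for j in range(n)
        ])
    return out


def transpose(image):
    return [list(col) for col in zip(*image)]


def low_pass(image, sigma):
    """Separable Gaussian low-pass: blur rows, then columns."""
    kernel = gaussian_kernel(sigma)
    return transpose(blur_rows(transpose(blur_rows(image, kernel)), kernel))


def adapt_luminance(lum, sigma, eps=1e-6):
    """Apply L'(i,j) = L(i,j) * mean(L) / lowpass(L)(i,j) to a 2-D list."""
    local = low_pass(lum, sigma)
    mean = sum(map(sum, lum)) / (len(lum) * len(lum[0]))
    return [[p * mean / max(lp, eps) for p, lp in zip(row, lrow)]
            for row, lrow in zip(lum, local)]
```

For the setting described in the text, `sigma` would be 0.7 times the image width; the pure-Python convolution is slow for such a wide kernel and is meant only to show the operation.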

C. Clustering of Colors

The clustering step is the main part of the algorithm. This is the stage where we specify the colors seen on the image. As in [8], we used a perceptually equalized color space, the CIE-Luv space (later we also used the Lab space without large performance differences). [8] also used region information in a second stage of clustering. At this stage of the project we omitted the region information from the clustering and obtained a simpler and faster algorithm. Our challenges lay in the elimination of shadow borders and unequal illumination rather than in the identification of uniform regions. Furthermore, in the case of striped or checkered clothes the regions of a color are not contiguous. Hence we clustered based only on the 3D color values of the pixels and not on the color plus spatial coordinates.

For the clustering we used the well-known K-means algorithm [8], [9]. It consists of the following main steps:
1) Determination of K initial cluster centers.
2) Assignment of the image pixels to the centers.
3) Recalculation of the cluster centers as the mean of the pixel values belonging to a given cluster.
4) Return to step 2 if the cluster centers change more than a predefined criterion.
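The four steps above can be sketched in a few lines of plain Python. The paper does not state how the initial centers are chosen, so they are passed in by the caller here. The stopping tolerance, the iteration cap and the handling of empty clusters are assumptions of this sketch.

```python
def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(point, centers):
    """Index of the center closest to the point."""
    return min(range(len(centers)), key=lambda k: squared_distance(point, centers[k]))


def kmeans(pixels, centers, tolerance=1e-3, max_iterations=100):
    """Cluster 3-D color values; returns (index map as a flat list, centers)."""
    centers = [tuple(c) for c in centers]                # step 1: initial centers
    for _ in range(max_iterations):
        labels = [nearest(p, centers) for p in pixels]   # step 2: assignment
        new_centers = []
        for k, old in enumerate(centers):                # step 3: recompute means
            members = [p for p, lab in zip(pixels, labels) if lab == k]
            if members:
                new_centers.append(
                    tuple(sum(axis) / len(members) for axis in zip(*members)))
            else:
                new_centers.append(old)  # assumption: keep an empty cluster's center
        shift = max(squared_distance(a, b) for a, b in zip(centers, new_centers))
        centers = new_centers
        if shift <= tolerance ** 2:                      # step 4: stop when stable
            break
    labels = [nearest(p, centers) for p in pixels]
    return labels, centers
```

The pixels are plain (L, u*, v*) triples without spatial coordinates, in line with the choice to cluster on color values only.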


As a result of the clustering, we get a map (index map) in which every pixel has a value depending on the cluster to which it was assigned, and the Luv coordinates of each cluster center are the average of the coordinates of the pixels that belong to the cluster. An example of the clustering can be seen on fig. 3. Based on experiments we chose 16 initial cluster centers. The number of initial cluster centers is crucial since it determines the number of resulting clusters. With a smaller number of clusters, pixels of different colors can be assigned to the same cluster.

On the other hand, in more homogeneous cases we have the problem that a region of the same color is split between different clusters. To overcome this problem, we extended the clustering method with a post-processing step, in which we merged similar clusters. Such splitting occurs mostly at unequal illumination of the same texture, e.g. along shadow borders (see fig. 3 b.). In these cases the different clusters of the same color have similar chromaticity but different luminance. Hence we defined two criteria for the merging of two clusters. Both are based on the Luv color coordinates of the cluster centers.
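The merging post-processing step could look like the following sketch. It assumes that the first criterion compares the full Luv distance of two centers with a threshold, that the second compares only the (u*, v*) distance and is applied when both centers are sufficiently saturated, and that merged centers are averaged with pixel counts as weights. The use of Euclidean distances, the saturation measure sqrt(u*^2 + v*^2) and the names `p_luv`, `p_uv` and `p_sat` are assumptions of this illustration.

```python
import math


def saturation(center):
    """Chromatic magnitude of an (L, u, v) center (assumed saturation measure)."""
    _, u, v = center
    return math.hypot(u, v)


def should_merge(c1, c2, p_luv, p_uv, p_sat):
    """Return True if two cluster centers (L, u, v) satisfy either criterion."""
    if math.dist(c1, c2) < p_luv:                      # criterion 1: all of L, u, v
        return True
    both_chromatic = saturation(c1) > p_sat and saturation(c2) > p_sat
    return both_chromatic and math.dist(c1[1:], c2[1:]) < p_uv   # criterion 2


def merge(c1, n1, c2, n2):
    """Pixel-count-weighted average of two centers and the merged pixel count."""
    total = n1 + n2
    center = tuple((a * n1 + b * n2) / total for a, b in zip(c1, c2))
    return center, total


def merge_clusters(centers, counts, labels, p_luv, p_uv, p_sat):
    """Merge similar clusters until no pair qualifies; relabel the index map."""
    centers, counts, labels = list(centers), list(counts), list(labels)
    alive = list(range(len(centers)))
    merged = True
    while merged:
        merged = False
        for a in alive:
            for b in alive:
                if a < b and should_merge(centers[a], centers[b], p_luv, p_uv, p_sat):
                    centers[a], counts[a] = merge(
                        centers[a], counts[a], centers[b], counts[b])
                    labels = [a if lab == b else lab for lab in labels]
                    alive.remove(b)
                    merged = True
                    break
            if merged:
                break  # restart the scan after every merge
    return {k: centers[k] for k in alive}, labels
```

In this sketch two achromatic centers of very different luminance, such as white and dark gray, are never merged by the second criterion because their saturation stays below `p_sat`.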


Fig. 3. Clustering and merging of clusters. a.) shows the original picture taken by a standard digital camera. On b.) we can see the result of clustering. In this picture the pixels have the color of the cluster they are assigned to. Regions of a given color represent a cluster. c.) shows the clusters after merging of similar clusters. The main cluster colors can be seen on d.)

1) The difference of the L,u*,v* values of the cluster centers is smaller than a predefined parameter $p_{Luv}$.
2) The difference of the L values is arbitrarily large, but the difference of the u* and v* values is smaller than a parameter $p_{uv}$. This is applied only if the saturation of the colors is larger than a further predefined parameter.

The criterion for the saturation is applied in order not to merge achromatic values, which correspond to different colors but have no chromaticity difference, like dark gray and white. In the Luv space the saturation can be computed as (2):

$$\mathrm{saturation} = \sqrt{u^{*2} + v^{*2}} \qquad (2)$$

When a cluster C1 is merged into another one C2, the pixels of C1 are assigned to C2 (their indexes are changed) and the Luv values of the cluster center become the weighted average of the two centers. The weights are the numbers of pixels in C1 and C2.

An example of merging can be seen on fig. 3. We specified strong criteria for the merging, in order not to merge inconsistent clusters. This has the effect that some regions are not merged (e.g. the shadowy white in the lower right corner and the large white region on fig. 3 c.). The merging could be avoided if we applied anisotropic diffusion ([12]) before the clustering; this will be feasible on nonlinear CNN chips.

D. White Balance

In spite of the luminance adaptation, illumination variation is still a problem for the recognition of colors. Luminance adaptation equalizes the differences within the scene, but does not eliminate a luminance change that affects the whole scene (e.g. the whole scene is in shadow, fig. 4 a.).

Here we recalculate the Luv coordinates of the cluster centers. White balancing means in our case that the cluster center whose color lies close to white (saturated RGB values) is amplified so that it becomes white. This step assumes that the scene contains white regions. Consulting with blind people, we were assured that this condition can be fulfilled. We perform the following steps:
1) We choose the cluster center whose Luv coordinates lie the closest to the saturated white value.
2) We convert the L,u*,v* values of the cluster centers to R,G,B values and compute the ratio between the RGB values of white (255,255,255) and those of the chosen cluster center (3):

$$C' = C \cdot \frac{C_{rw}}{C_c} \qquad (3)$$

where C is one of the R,G,B channels, $C_{rw}$ is the channel's value for the reference white and $C_c$ is the value of the channel for the chosen close-to-white cluster. This operation is especially important in the case of mobile phone cameras, the sensor of the planned device. These cameras take less balanced photos than standard digital cameras, and their pictures often have a large proportion of a given color (fig. 4). The operation of white balancing is illustrated on fig. 4. The separate scaling of the R,G,B channels corresponds to the diagonal model in [10]. If we cannot assume white areas in the scene, we can use scene statistics, such as the mean value or local average of the R,G,B channels, and scale the channels based on the ratio of the desired values and the calculated statistic. This method is described in more detail in sec. IV.

The method that specifies the uniform regions has the following free parameters:
* Amount of diffusion to specify the low-pass component.
* Initial number of clusters.
* Criteria at the merging of clusters (2 free parameters).

We tested the algorithm on about 20 pictures of different clothes or under different illumination and adjusted the parameters to recognize the regions of the same color, as seen on fig. 3 and 4. The clustering took 4-5 iterations on average. The last stage of the algorithm is to specify the color names based on the Luv coordinates, and to retrieve the location of the clusters based on the cluster data.

E. Retrieval of Color and Location Names

At this stage we extract verbal information based on the Luv values, and the location names based on the map that shows which pixels are assigned to a cluster. We classified each cluster center (and so the cluster) to given predefined colors. We used the Wiki list of colors ([11]) as predefined colors. We chose the color from the list based on the Euclidean distance of the Luv coordinates between the cluster and the predefined values. For the location information we computed the following measures, based on a binary mask which contained the pixels that were assigned to the given cluster:
* Center of gravity (CoG) coordinates.
* Deviance from the center of gravity.

The CoG coordinates are then classified into 3 categories vertically (up, down, middle) and horizontally (left, right, middle). The deviance is needed in the case of non-contiguous regions (e.g. the white region on fig. 3); these might have the CoG in the middle although they are located on the sides. If the deviance is high, they are classified as regions lying on both sides.

Fig. 4. Clustering and white balance. a.) shows the original image taken by a mobile phone, which is more sensitive to blue colors. b.) shows the result of clustering and merging of the clusters. Homogeneous regions of the same color represent the clusters. On c.) we can see the clusters after white balance. d.) shows the colors of four main clusters.

IV. COLOR CORRECTION AND FILTERING

This section deals with a different task: here our aim is to extract useful information from the scene based on chromatic information. We filter out displays of predefined colors (see fig. 6). In order to be able to filter out predefined colors we have to remove a chromatic distortion from the scene. We implemented a method similar to the white correction in sec. III-D: we scaled the R,G,B channels separately. The correction can be seen in (4):

$$C' = C \cdot \frac{C_{rg}}{\mathrm{mean}(C)} \qquad (4)$$


Fig. 5. Color correction. a.) shows the original image taken by a mobile phone. We can see the bluish color of it. b.) shows the blue channel of a.) c.) shows the smooth correction. d.) shows the blue channel of c.)


where C is one of the R,G,B channels, $C_{rg}$ is the channel's value for the reference gray and mean(C) is the mean value of the channel over the whole scene. In our application $C_{rg}$ was chosen as 128 for all the channels. This means that when a channel's mean value is larger than half of the maximal intensity, it is attenuated; otherwise it is enhanced. On fig. 5 we can see an example of this color correction.

The color correction can only be applied if it can be assured that the average of the color channels in the scene under reference illumination is nearly 128. In this case all deviations from this value are caused by chromatic illumination or by the different sensitivity of the color sensors (see fig. 5). In [10] such methods are called gray world algorithms. When we apply our method to outdoor views (e.g. the color filtering case shown on fig. 6), we see many different textures whose average is usually nearly gray. In the color specification task, in contrast, we look at clothes, and the texture of one cloth can occupy the whole visual field.

After this we extract the predefined color that we need for the specific application. In this case we filtered the greenish color of the display based on the hue and saturation data. The hue range was specified so that the most important parts of the display are extracted, even if we also extract non-display regions (see fig. 6). The color-based filtering is able to reduce the number of possible locations of the displays. On the other hand, in these applications the color-based filtering is considered a simple preprocessing step; other methods should also be applied to locate the displays, but these are beyond the scope of this paper.
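The per-channel scaling of this section and the subsequent hue and saturation filtering might be sketched as follows. The same helper also covers the white balance of sec. III-D when the reference is white and the statistic is the chosen close-to-white cluster color. The paper does not specify the hue and saturation definition or the thresholds, so the use of HSV from Python's `colorsys` module, the clipping at 255, the example ranges and all function names are assumptions of this illustration.

```python
import colorsys


def scale_channels(pixels, reference, statistic):
    """Diagonal correction: multiply each channel C by reference / statistic."""
    gains = [ref / max(stat, 1e-6) for ref, stat in zip(reference, statistic)]
    # Assumption: results are clipped to the 8-bit maximum of 255.
    return [tuple(min(255.0, c * g) for c, g in zip(p, gains)) for p in pixels]


def channel_means(pixels):
    """Mean of each of the R, G, B channels over all pixels."""
    return [sum(channel) / len(pixels) for channel in zip(*pixels)]


def gray_world(pixels, reference_gray=128.0):
    """Per-channel correction C' = C * C_rg / mean(C) with C_rg = 128."""
    return scale_channels(pixels, [reference_gray] * 3, channel_means(pixels))


def hue_saturation_mask(pixels, hue_range, min_saturation):
    """True for pixels whose hue (degrees) and saturation fall in the range."""
    low, high = hue_range
    mask = []
    for r, g, b in pixels:
        h, s, _ = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
        mask.append(low <= h * 360.0 <= high and s >= min_saturation)
    return mask
```

A possible use is `hue_saturation_mask(gray_world(pixels), (60.0, 180.0), 0.2)` for a greenish display, where the hue range and the saturation limit are placeholder values. White balance would be `scale_channels(pixels, (255.0, 255.0, 255.0), chosen_cluster_rgb)` with a hypothetical `chosen_cluster_rgb`.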

Fig. 6. Color filtering. a.) shows the original picture taken by a mobile phone, b.) shows the adapted version. On c.) we can see the result of filtering for green-yellow color.

V. REALIZATION

For the use of our algorithm it is important that at the final stage of the project we implement our method on the wearable architecture. In our case this is a CNN array accompanied by a mobile phone. Mobile phones are advantageous, because they provide us:
* camera input
* speaker
* power supply

The camera input (image sensor) can be realized on CNN architecture as well ([3]), so the CNN array is important for:
* parallel image processing
* adaptive image sensing

On fig. 1 we can see the steps of the algorithm which we are planning to implement on CNN architecture. Steps that are more easily realized on digital architecture:
* Operations in which the pixels are processed separately: clustering, merging.
* Strong nonlinear transformations: conversion between color spaces.
* Rule-based evaluation of the results: determination of the color name based on the cluster L,u*,v* data, and specification of the location names based on the CoG coordinates.

Operations that require local processing can be realized more efficiently on CNN architecture:
* Local adaptation algorithm.
* Calculation of statistics for white correction and color correction.
* Determination of the location of the clusters.

In the design phase we are using PC simulation, and then we plan to implement our algorithm on a mobile phone. At the final stage, when the hardware integration of the mobile phone and the CNN-UM array makes it possible, we will realize the method on the target platform.
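As an illustration of the rule-based evaluation assigned to the digital platform (sec. III-E), the color naming and the location description could be sketched as below. The small color table holds approximate Luv values for a few sRGB colors and merely stands in for the full list of [11]. The table, the division of the image into thirds, the use of the horizontal standard deviation as the deviance measure, the `spread_threshold` value and all function names are assumptions of this sketch.

```python
import math

# Illustrative stand-in for the predefined color list (approximate L, u*, v*).
COLOR_TABLE = {
    "white": (100.0, 0.0, 0.0),
    "black": (0.0, 0.0, 0.0),
    "red": (53.2, 175.0, 37.8),
    "green": (87.7, -83.1, 107.4),
    "blue": (32.3, -9.4, -130.4),
}


def name_color(center, table=COLOR_TABLE):
    """Name of the table entry with the smallest Euclidean Luv distance."""
    return min(table, key=lambda name: math.dist(center, table[name]))


def third(coordinate, size, names):
    """Classify a pixel coordinate into one of three equal bands."""
    return names[min(2, int(3 * (coordinate + 0.5) / size))]


def locate(mask, spread_threshold=0.35):
    """Describe where the True pixels of a 2-D binary mask lie."""
    height, width = len(mask), len(mask[0])
    points = [(i, j) for i, row in enumerate(mask) for j, on in enumerate(row) if on]
    if not points:
        return None
    cog_i = sum(i for i, _ in points) / len(points)
    cog_j = sum(j for _, j in points) / len(points)
    # Horizontal deviance from the CoG, relative to the image width.
    spread = math.sqrt(sum((j - cog_j) ** 2 for _, j in points) / len(points)) / width
    vertical = third(cog_i, height, ("up", "middle", "down"))
    if spread > spread_threshold:
        return vertical, "both sides"
    return vertical, third(cog_j, width, ("left", "middle", "right"))
```

A region split between the left and right edges has its CoG in the middle, but its large horizontal deviance makes `locate` report it as lying on both sides.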

VI. FURTHER PLANS

We plan to extend the color specification method with the analysis of the texture of clothes. The display recognition requires non-color-based methods to locate the displays; after that we can design the character recognition methods. In the field of implementation, we will have to begin transferring the algorithms to the mobile phone platform.

VII. ACKNOWLEDGMENT

This work is a part of the Bionic Eyeglass project under the supervision of Tamás Roska. We would also like to thank Kristóf Karacs, Anna Lázár and Dávid Bálya.

REFERENCES

[1] L. O. Chua, T. Roska, Cellular Neural Networks and Visual Computing, Cambridge University Press, Cambridge, UK, 2002.
[2] T. Roska, "Computational and Computer Complexity of Analogic Cellular Wave Computers", Journal of Circuits, Systems, and Computers, Vol. 12, No. 4, pp. 539-562, 2003.
[3] A. Zarandy, Cs. Rekeczky, P. Foldesy, I. Szatmari, "The new framework of applications - The Aladdin system", J. Circuits Systems Computers, Vol. 12, pp. 769-782, 2003.
[4] D. Balya, B. Roska, T. Roska, F. S. Werblin, "A CNN Framework for Modeling Parallel Processing in a Mammalian Retina", International Journal on Circuit Theory and Applications, Vol. 30, pp. 363-393, 2002.
[5] Publ. CIE 15.2-1986, Colorimetry, 2nd ed., CIE Central Bureau, Vienna, 1986.
[6] M. Tkalcic, J. Tasic, "Colour spaces - perceptual, historical and applicational background", Eurocon 2003 Proceedings, Editor: Baldomir Zajc, Marko Tkalcic, September, IEEE Region 8, 2003. Available at: http://ldos.fe.uni-lj.si/docs/documents/20030929092037 markot.pdf
[7] R. Wagner, A. Zarandy and T. Roska, "Adaptive Perception with Locally Adaptable Sensor Array", IEEE Transactions on Circuits and Systems I: Regular Papers, Vol. 51, No. 5, pp. 1014-1023, 2004.
[8] I. Kompatsiaris, E. Triantafillou and M. G. Strintzis, "Region-Based Color Image Indexing and Retrieval", 2001 International Conference on Image Processing (ICIP2001), Thessaloniki, Greece, October 7-10, 2001. Available at: http://mkg.iti.gr/publications/icip2001.pdf
[9] Web page about k-means clustering: http://fconyx.ncifcrf.gov/ lukeb/kmeans.html
[10] Kobus Barnard, "Practical Colour Constancy", Chapter Two, pages 16, 17 and 21, PhD thesis, Simon Fraser University, School of Computing. Available at: http://kobus.ca/research/publications/PHD-99/KobusBarnard-PHD.pdf
[11] Wiki list of colors. http://en.wikipedia.org/wiki/List of colors
[12] T. Roska, L. Kek, L. Nemes, A. Zarandy and P. Szolgay (ed), "CNN Software Library (Templates and Algorithms), Version 7.3", Analogical and Neural Computing Laboratory, Computer and Automation Research Institute, Hungarian Academy of Sciences (MTA SzTAKI), DNS-CADET15, Budapest, 1999.
