The Impact of the MIT-BIH Arrhythmia Database


History, Lessons Learned, and Its Influence on Current and Future Databases

The MIT-BIH Arrhythmia Database was the first generally available set of standard test material for evaluation of arrhythmia detectors, and it has been used for that purpose as well as for basic research into cardiac dynamics at about 500 sites worldwide since 1980. It has lived a far longer life than any of its creators ever expected. Together with the American Heart Association (AHA) Database, it played an interesting role in stimulating manufacturers of arrhythmia analyzers to compete on the basis of objectively measurable performance, and much of the current appreciation of the value of common databases, both for basic research and for medical device development and evaluation, can be attributed to this experience. In this article, we briefly review the history of the database, describe its contents, discuss what we have learned about database design and construction, and take a look at some of the later projects that have been stimulated by both the successes and the limitations of the MIT-BIH Arrhythmia Database.

George B. Moody and Roger G. Mark

Harvard-MIT Division of Health Sciences and Technology

Nature of the Data

Electrocardiograms (ECGs) are very widely used as an inexpensive and noninvasive means of observing the physiology of the heart. In 1961, Holter [1] introduced techniques for continuous recording of the ECG in ambulatory subjects over periods of many hours; the long-term ECG (Holter recording), typically with a duration of 24 hours, has since become the standard technique for observing transient aspects of cardiac electrical activity. Since the mid-1970s, our research group has studied abnormalities of cardiac rhythm (arrhythmias) as reflected in long-term ECGs as well as automated methods for identifying arrhythmias. Many other research groups in academia and industry have had similar interests.

Until 1980, it was necessary for those wishing to pursue such work to collect their own data. Although the recordings themselves are plentiful, access to these data is not universal, and thorough characterization of the recorded waveforms is a tedious and expensive process. Furthermore, there is very wide variability in ECG rhythms and in details of waveform morphology, both between subjects and within individuals over time, so that a useful representative collection of long-term ECGs for research must include many recordings.

During the 1960s and 1970s, development of automated arrhythmia analysis algorithms was hampered by a lack of universally accessible data. Each group that performed such work acquired its own set of recordings and often self-evaluated their algorithms using the same data that had been used to develop those algorithms. From the earliest days, it was clear that performance of these algorithms was invariably data-dependent, and the use of different data for the evaluation of each algorithm did not permit objective comparisons of algorithms from different groups.

Selection of Data

In 1975, recognizing that we would need a suitable set of well-characterized long-term ECGs for our own research, we began collecting, digitizing, and annotating long-term ECG recordings obtained by the Arrhythmia Laboratory of Bostons Beth Israel Hospital (BIH; now the Beth Israel Deaconess Medical Center). From the outset, however, we planned to make these recordings available to the research community at large, in order to stimulate work in this field and to encourage strictly reproducible and objectively comparable evaluations of different algorithms [2]. We expected that the availability of a common database

0739-5175/01/$10.002001IEEE 45

May/June 2001

IEEE ENGINEERING IN MEDICINE AND BIOLOGY

We expected that the availability of a common database would foster rapid and quantifiable improvements in the technology of automated arrhythmia analysis.

aged 23 to 89 years; approximately 60% of the subjects were inpatients. The ECG leads varied among subjects as would be expected in clinical practice, since surgical dressings and variations in anatomy do not permit use of the same electrode placement in all cases. In most records, one channel is a modified limb lead II (MLII), obtained by placing the electrodes on the chest as is standard practice for ambulatory ECG recording, and the other channel is usually V1 (sometimes V2, V4, or V5, depending on the subject).

Digitization

Five years were needed to complete the MIT-BIH Arrhythmia Database [3]. By current standards, the tools used to create the database were primitive. The ECG recordings were made using Del Mar Avionics model 445 two-channel reel-to-reel Holter recorders, and the analog signals were recreated for digitization using a Del Mar Avionics model 660 playback unit. The computers used for digitization were designed and built in our laboratory, including the tape-drive controllers and the analog-to-digital converter (ADC) interfaces; they used then state-of-the-art 1 MHz 8-bit CPUs and 11-bit offset binary ADCs. The digitization rate (360 samples per second per channel) was chosen to accommodate the use of simple digital notch filters to remove 60 Hz (mains frequency) interference. Ultimately, the digitization rate was constrained by the speed at which the data could be written to mass storage (the RAM was typically only 16 or 24 kB, so storing the data there was not an option). There were no disks of any kind. All storage was on DC300 digital cartridge tapes, which had four tracks with a capacity of about 400 kB per track. Normally, Holter tapes are read at many times real time (the playback unit offered speeds of 60 and 120 times real time, as well as twice real time, used when printing

on its chart recorder). At first, even twice real time was too fast; the digital tape could only be written one track at a time, always in the same direction, and there was not enough memory to buffer the incoming samples that would be accumulated while the tape was rewinding between tracks. We modified the playback unit to reduce its speed by a factor of two, using a specially constructed capstan. Many years later, we discovered that this capstan was very slightly eccentric, thanks to early heart-rate variability studies by Sergio Cerutti in Milan, who found unexpected subtle periodicities in some of our recordings.

Technical Limitations of the Data

Variations in recording and playback speed should be considered carefully in the context of heart-rate variability studies, since flutter compensation was not possible in these recordings. We investigated the sources of frequency-domain artifacts by recording and digitizing synthesized ECGs of known rates using the same equipment used to prepare the database, and we identified artifacts related to specific mechanical components of the recorders and the playback unit. Since the two signals are recorded at very slow tape speed on parallel tracks, minute differences between the orientations of the two-channel recording and playback heads result in skew between the signals, which was as great as 40 ms in some cases. Furthermore, microscopic vertical wobbling of the tape, either during recording or playback, introduces a time-varying skew that may be of the same magnitude as the fixed skew. This problem is generic to analog multi-track tape recorders and appears in the AHA and European databases mentioned below as well. Inter-signal skew must be anticipated in the design of algorithms intended to analyze such recordings, but it is an unwanted complication for those who intend to use their algorithms to analyze ECGs that are digitized in real time. Although playback at real time was possible, friction between the analog tape and the large playback head caused frequent jams requiring us to repeat the digitization procedure. After 30 of the recordings had been digitized, we were able to install a second digital tape drive on the digitizing computer. This made it possible to digitize at twice real time, writing track 1 on the first tape, then continuing with track 2 on the second tape while the first

May/June 2001

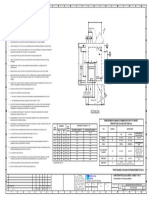

would foster rapid and quantifiable improvements in the technology of automated arrhythmia analysis. For the MIT-BIH Arrhythmia Database, we selected 48 half-hour excerpts of two-channel, 24-hour, ECG recordings obtained from 47 subjects (records 201 and 202 are from the same subject) studied by the BIH Arrhythmia Laboratory between 1975 and 1979. Of these, 23 (the 100 series) were chosen at random from a collection of over 4000 Holter tapes, and the other 25 (the 200 series) were selected to include examples of uncommon but clinically important arrhythmias that would not be well represented in a small random sample (see Fig. 1). The subjects included 25 men aged 32 to 89 years and 22 women

N V

A V V V V V V V V (VT

N (N

F V V VV V (VT

1. Ten seconds from record 205 of the MIT-BIH Arrhythmia Database. Rigorously reviewed beat annotations (A: atrial premature beat, F: ventricular fusion beat, N: normal beat, V: ventricular premature beat) and rhythm annotations ((N: normal sinus rhythm, (VT: ventricular tachycardia) appear in the center, between the two ECG signals (above: MLII, below: V1).

46 IEEE ENGINEERING IN MEDICINE AND BIOLOGY

tape rewound, then writing track 3 on the first tape, etc. During digitization, the analog signals from the playback unit were filtered to limit saturation in analog-to-digital conversion and for anti-aliasing, using a passband of 0.1 to 100 Hz relative to real time, well beyond the range of frequencies reproduced by the recordings. Since the recorders were battery-powered, most of the 60 Hz noise in the recordings was introduced during playback. This noise appears at 30 Hz (and multiples of 30 Hz) relative to real time in the recordings that were digitized at twice real time. Four of the 48 recordings include paced beats. Pacemaker artifacts are not accurately reproduced in the original analog recordings, since most of the energy in these artifacts is at frequencies in the kilohertz range, far above the passband of the recorders. The digitized recordings in the database faithfully reproduce the analog recordings, so that software intended to analyze analog tapes containing paced beats can be evaluated using these recordings. One of the major remaining gaps in the publicly available collections of ECGs is a representative set of high-fidelity recordings of paced rhythms, which would be helpful for those designing software for real-time analysis of such signals. In order to record 30 min of data in the available space, it was necessary to convert the digitized 11-bit samples into 8-bit first differences on the fly, which had the effect of limiting the slew rate to 225 mV/s, a limit that was exceeded by the input signals very infrequently, only on a few records during periods of severe noise. The effect of this procedure on the signal quality was negligible.
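The first-difference storage scheme can be illustrated with a small Python sketch. This is not the original assembly-language software: the function names, the clamping behavior, and the choice to let the encoder track the value a decoder would reconstruct are all assumptions made for illustration. The gain of 200 ADC units per millivolt and the step limit of 125 units used in the slew-rate calculation are likewise assumptions; they are simply one combination that reproduces the 225 mV/s figure at 360 samples per second.

```python
def encode_first_differences(samples, max_step=127):
    """Encode integer samples as clamped first differences.

    Assumed scheme: each difference is limited to +/- max_step so that it
    fits in one signed byte. The encoder tracks the value a decoder would
    reconstruct, so a clamped step delays the waveform briefly instead of
    leaving a permanent offset.
    """
    if not samples:
        return None, []
    first = samples[0]
    reconstructed = first
    diffs = []
    for s in samples[1:]:
        step = max(-max_step, min(max_step, s - reconstructed))
        diffs.append(step)
        reconstructed += step
    return first, diffs


def decode_first_differences(first, diffs):
    """Rebuild the sample sequence by cumulative summation."""
    out = [first]
    for d in diffs:
        out.append(out[-1] + d)
    return out


def slew_limit_mv_per_s(max_step, sample_rate_hz, adc_units_per_mv):
    """Largest slope representable with one clamped step per sample."""
    return max_step * sample_rate_hz / adc_units_per_mv


if __name__ == "__main__":
    # A slowly varying signal survives the round trip unchanged.
    slow = [1024, 1030, 1100, 1090, 1024]
    first, diffs = encode_first_differences(slow)
    print(decode_first_differences(first, diffs) == slow)

    # Hypothetical gain and step limit (not stated in the article).
    print(slew_limit_mv_per_s(125, 360, 200))
```

With clamping of this kind, only samples that change faster than the slew limit are altered, which is consistent with the observation that the limit mattered only during brief periods of severe noise.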

Annotation

Once the digital tapes had been prepared, we annotated them using a simple slope-sensitive QRS detector. Next, each tape was played back through a digital-to-analog converter to a thermal chart recorder that had been equipped with a pair of seven-element print heads. The playback software, written in assembly language as was all of the other software, generated the appropriate signals to form characters, printing periodic elapsed time markers on one edge of the paper, and the annotations on the other edge. Each half-hour tape was used to produce two identical 150-foot (46-m) paper chart recordings. The charts for each recording were given to two cardiologists, who worked independently, adding additional beat labels and deleting false detections as necessary, and changing the labels for abnormal beats. The cardiologists also added rhythm and signal quality labels.

The paper charts with the cardiologists' annotations were then transcribed into computer-readable form using an interactive annotation editor that displayed the waveforms on an oscilloscope using the same digital-to-analog converter board that was used to make the chart recordings. The result of this process was a tape containing two sets of cardiologist annotations. At this point the two sets of annotations were compared automatically and another chart recording was printed, showing the cardiologists' annotations in the margin, with all discrepancies highlighted. Each discrepancy was examined and resolved by consensus. Corrections were then entered using the annotation editor, and all of the annotations were then audited using a program that checked them for consistency. (The auditing program also identified the ten shortest and longest inter-beat intervals, to identify possible false detections or missed beats.) Approximately 110,000 annotations were created and verified in this way.

Notably, six of the 48 records contain a total of 33 beats that remain unclassified, because the cardiologist-annotators were unable to reach agreement on the beat types. In these cases, as in clinical practice, it occasionally happens that some beats cannot be classified with certainty, either because of technical defects in the recording, or because there is insufficient information in the record to permit a confident choice between two or more reasonable hypotheses. It is important that a database intended to represent real-world signals should contain the broadest possible range of waveforms, including these ambiguous cases, which may represent the most interesting challenges for automated analysis.

The annotators were instructed to use all evidence available from both signals to identify every detectable QRS complex. The database contains seven episodes of loss of signal or noise so severe in both channels simultaneously that QRS complexes cannot be detected; these episodes are all quite short and have a total duration of about 10 s. In all of the remaining data, every QRS complex was annotated, about 109,000 in all.
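The automatic comparison and the interval audit described in this section can be sketched in a few lines of Python. This is a hypothetical reconstruction, not the original program: it assumes each annotator's work is available as a mapping from sample index to label, and that both annotators' labels sit at the same sample indices whenever they refer to the same beat (plausible here because both started from the same detector output). The function names are invented for the example.

```python
def find_discrepancies(annotations_a, annotations_b):
    """List the places where two annotation sets disagree.

    Each argument maps a sample index to a label. The result holds
    (sample, label_a, label_b) tuples in time order; None marks an
    annotation that is missing from one of the sets.
    """
    discrepancies = []
    for sample in sorted(set(annotations_a) | set(annotations_b)):
        label_a = annotations_a.get(sample)
        label_b = annotations_b.get(sample)
        if label_a != label_b:
            discrepancies.append((sample, label_a, label_b))
    return discrepancies


def extreme_intervals(beat_samples, count=10):
    """Return the shortest and longest inter-beat intervals.

    Each entry is (interval_in_samples, sample_of_first_beat). Very short
    intervals hint at false detections; very long ones hint at missed beats.
    """
    beats = sorted(beat_samples)
    intervals = sorted((b - a, a) for a, b in zip(beats, beats[1:]))
    shortest = intervals[:count]
    longest = intervals[-count:][::-1]
    return shortest, longest


if __name__ == "__main__":
    first_reader = {100: "N", 460: "V", 800: "N"}
    second_reader = {100: "N", 460: "N", 1200: "N"}
    for item in find_discrepancies(first_reader, second_reader):
        print(item)
    print(extreme_intervals([0, 360, 500, 1300], count=1))
```

Every tuple returned by `find_discrepancies` corresponds to one item that a human reviewer would still have to resolve; the code only locates disagreements and does not attempt to adjudicate them.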


Completing the Database

All of this work was done by necessity on our custom-built microcomputers. As the processing neared completion, we began transferring the data over a 9600 baud serial line to our laboratory's minicomputer, which was equipped with a nine-track tape drive; this process required several weeks. The first copies of the completed database were distributed on sets of 800 bpi nine-track tape in the summer of 1980. Our initial expectation was that perhaps as many as ten academic and industry groups might obtain copies, probably within the first six months of the database's release, and then we could exit gracefully from the mail-order business. Indeed, after six months, this prediction still seemed plausible, but orders continued to arrive steadily, at a rate averaging one per month, for the next nine years! During this period, we distributed about 100 copies of the database on nine-track half-inch digital tape at 800 and 1600 bpi, and a much smaller number of copies on quarter-inch IRIG-format FM analog tape.


In early copies of the database, most beat annotations were placed at the R-wave peak, but manually inserted labels and those that occurred during periods of noise were not always placed consistently at the peak. In 1983, we adjusted the positions of the beat annotations using software that digitally bandpass-filtered the primary signal (usually MLII) to emphasize the QRS complexes, and then positioned each annotation at the major local extremum within 100 ms of the original location, after correcting for phase shift in the filter. We reviewed the placement of the annotations that had been repositioned by the largest amounts; for a very few of these beats, which were severely corrupted by noise, we manually repositioned the annotations. This postprocessing step allowed the beat annotations to be used as reliable fiducials for studies requiring waveform averaging, as well as for high-precision measurement of inter-beat intervals in studies of heart-rate variability (once the mechanical sources of tape speed variability were understood).

Our own use of the database, and feedback from early users, allowed us to identify errors in the roughly 109,000 beat annotations. Sixteen such errors were corrected between 1980 and 1987, and in addition, the dominant beats in record 214, which had originally been identified as normal, were relabeled as left bundle branch block beats. No other beat annotation errors have been found since 1987, despite intense scrutiny by many more users, and it is likely that none remain. The roughly 1000 rhythm annotations received more revisions, and now include annotations of ventricular bigeminy and trigeminy, and of paced rhythm, that were not present in early copies.

Throughout the 1980s, we collected many more recordings to support studies of important arrhythmias that were not well represented in the original MIT-BIH Arrhythmia Database. Although we distributed a few of these, the impact of a major tape replication activity on the function of our research laboratory was a limiting factor. In 1989, we were able to produce a CD-ROM containing not only the MIT-BIH Arrhythmia Database but also seven additional ECG databases (the current edition includes two more). Approximately 400 copies of these CD-ROMs have been distributed to date [4]. In 1999, we established PhysioNet (http://www.physionet.org/), a web-based resource for research on complex physiologic signals [5]. More than half of the MIT-BIH Arrhythmia Database is now available via PhysioNet, making it possible for students and others to use a significant portion of these data for exploratory studies without cost.
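The 1983 repositioning step can be approximated by the following Python sketch. It is an illustration under stated assumptions, not the software we used: the band-pass filter is replaced by a crude stand-in (the difference of two centered moving averages, which is symmetric and therefore needs no phase-shift correction), "major local extremum" is interpreted as the largest absolute filtered value in the search window, and all function names and filter widths are hypothetical.

```python
def centered_moving_average(x, half_width):
    """Moving average over a symmetric window, shortened at the edges."""
    n = len(x)
    out = []
    for i in range(n):
        lo = max(0, i - half_width)
        hi = min(n, i + half_width + 1)
        out.append(sum(x[lo:hi]) / (hi - lo))
    return out


def crude_bandpass(x, short_half_width=1, long_half_width=18):
    """Stand-in band-pass filter: light smoothing minus a slow baseline.

    Both averages are centered, so the result has no phase shift.
    """
    smooth = centered_moving_average(x, short_half_width)
    baseline = centered_moving_average(x, long_half_width)
    return [s - b for s, b in zip(smooth, baseline)]


def reposition(annotations, signal, sample_rate_hz=360, window_s=0.100):
    """Move each annotation to the largest filtered extremum nearby.

    An annotation moves only if a strictly larger absolute value exists
    within window_s of its original position; otherwise it stays put.
    Annotation positions are assumed to be valid indices into signal.
    """
    filtered = crude_bandpass(signal)
    half = int(round(window_s * sample_rate_hz))
    moved = []
    for a in annotations:
        best = a
        for i in range(max(0, a - half), min(len(filtered), a + half + 1)):
            if abs(filtered[i]) > abs(filtered[best]):
                best = i
        moved.append(best)
    return moved


if __name__ == "__main__":
    # Synthetic signal: flat baseline with one narrow peak at sample 200.
    signal = [0.0] * 400
    signal[199], signal[200], signal[201] = 0.5, 1.0, 0.5
    print(reposition([180, 215, 300], signal))
```

A filter with a nonzero phase shift would require subtracting the filter delay from each new position, which is the correction mentioned in the text above.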

Using the Database

We initially avoided prescribing methods for using the database to evaluate arrhythmia detectors, to allow ourselves and other users of the database an opportunity to develop performance measures that might be predictive of real-world performance [6]. In 1984, we proposed methods for beat-by-beat and episode-by-episode comparison of reference and algorithm-generated annotation files [7]. These methods became the basis for a recommended practice for evaluating ventricular arrhythmia detectors [8] developed under the aegis of the Association for the Advancement of Medical Instrumentation (AAMI) between 1984 and 1987. More recently, we have participated in the development of the current American National Standards for ambulatory electrocardiographs [9] and for evaluating arrhythmia and ST segment measurement algorithms [10], both of which specify evaluation protocols based on those in the earlier recommended practice.

Although the details of the evaluation protocols are beyond the scope of this article, an important principle was proposed in [7] and adopted in the recommended practice and both of the national standards. In simplest terms, this principle requires that the algorithm or device under test must produce for each test recording either an annotation file in the format of the reference annotation files supplied with the database, or an equivalent information stream that can be transformed into such a file using an algorithm or accessory device, the details of operation of which must be fully disclosed. All performance measurements are then determined by automated comparisons of the algorithm's annotation files with the reference annotation files, using standard comparison software specified by the standards. Regulatory agencies and end users of arrhythmia analyzers are able to verify test results [11], since all materials needed (the test data, the comparison software, and any required accessory device needed to produce annotation files) are available to anyone.

Other Long-Term ECG Databases

No discussion of the MIT-BIH Arrhythmia Database would be complete without mention of the two other important collections of long-term ECGs that are also available to researchers: the AHA Database for Evaluation of Ventricular Arrhythmia Detectors [12] and the European ST-T Database [13].

The AHA Database was created between 1977 and 1985 by a group led by G. Charles Oliver at Washington University in St. Louis. This database has many features in common with the MIT-BIH Arrhythmia Database. Notably, both databases contain two-channel Holter recordings, with each recording containing 30 minutes of signals that have been meticulously hand-annotated beat-by-beat. Close and sustained cooperation between the groups at Washington University and at MIT ensured that these databases would appear in compatible formats and that their contents would be complementary. Recordings included in the AHA Database were chosen to satisfy one of eight sets of stringently defined selection criteria based on the severity of ventricular ectopy. As a result, the AHA Database has excellent representation of the most severe types of ventricular ectopy. Twenty recordings were chosen for each of the eight sets. Each of these was divided into equal subsets, one for algorithm development and one for evaluation of performance. The first of the development records were distributed in 1982, and all 80 have been distributed since 1985 by ECRI [12]. In 2000, ECRI made the 80 evaluation records available for the first time.

The AHA Database contains relatively few examples of supraventricular ectopy, conduction defects, and noise-contaminated waveforms, all of which are commonly encountered in clinical practice. By contrast, a number of records in the MIT-BIH Arrhythmia Database were selected specifically because they contain complex combinations of rhythm, morphologic variation, and noise that can be expected to provide multiple challenges for automated arrhythmia analyzers.

In 1985, the group headed by Carlo Marchesi at the CNR Institute for Clinical Physiology in Pisa assessed how they might contribute most usefully to the collection of reference ECG recordings available to researchers. They chose to take on the challenge of creating a database for development and evaluation of detectors of changes in the ST segment and the T wave indicative of myocardial ischemia. (The portion of the ECG waveform that follows the QRS complex in each cardiac cycle, consisting of the ST segment and the T wave, reflects ventricular repolarization. If the amount of oxygen delivered by the coronary arteries to the ventricular myocardium is insufficient to meet the demand for oxygen, ischemia results, and usually produces distinctive changes in the ST segment and the T wave.) In the years following the creation of the MIT-BIH and AHA Databases, improvements in ambulatory ECG recorders permitted accurate reproduction of components of the ECG in the 0.01-0.10 Hz frequency range, needed in order to observe these changes.

With the support of the European Society of Cardiology, the Pisa group coordinated data collection from clinical laboratories in 11 European Union nations, eventually selecting 90 two-hour excerpts of two-channel long-term ECG recordings. These were annotated in their entirety, following the protocol used for the MIT-BIH Arrhythmia Database with the addition of new annotation types to indicate episodes of ST and T-wave change. The first 50 records of the European ST-T Database were completed and made available to researchers in 1990 [13], and the remainder of the database was completed in 1991. The database is available on a CD-ROM in the same format as the MIT-BIH Arrhythmia Database, a result of close cooperation between the Pisa and MIT groups.
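Returning to the evaluation principle described under "Using the Database", the core of a beat-by-beat comparison between a reference annotation file and an algorithm's annotation file can be sketched as follows. This is a simplified illustration, not the standard comparison software: it looks only at beat times (ignoring beat labels), uses a greedy one-to-one pairing, and takes a 150 ms match window as an assumed parameter that is not given in this article. The function names are hypothetical.

```python
def match_beats(reference, detected, sample_rate_hz=360, tolerance_s=0.150):
    """Pair reference and detected beat times one-to-one.

    Both inputs are beat locations in samples. Returns (tp, fn, fp):
    matched beats, reference beats with no detection nearby, and
    detections with no reference beat nearby.
    """
    tolerance = tolerance_s * sample_rate_hz
    ref = sorted(reference)
    det = sorted(detected)
    i = j = tp = fn = fp = 0
    while i < len(ref) and j < len(det):
        diff = det[j] - ref[i]
        if abs(diff) <= tolerance:
            tp += 1          # detection close enough to the reference beat
            i += 1
            j += 1
        elif diff < 0:
            fp += 1          # detection precedes any unmatched reference beat
            j += 1
        else:
            fn += 1          # reference beat passed without a detection
            i += 1
    fn += len(ref) - i       # leftover reference beats were missed
    fp += len(det) - j       # leftover detections are false positives
    return tp, fn, fp


def sensitivity(tp, fn):
    """Fraction of reference beats that were detected."""
    return tp / (tp + fn) if (tp + fn) else float("nan")


def positive_predictivity(tp, fp):
    """Fraction of detections that correspond to reference beats."""
    return tp / (tp + fp) if (tp + fp) else float("nan")


if __name__ == "__main__":
    reference_beats = [100, 460, 820, 1180]
    detected_beats = [104, 455, 990, 1185]
    tp, fn, fp = match_beats(reference_beats, detected_beats)
    print(tp, fn, fp)
    print(sensitivity(tp, fn), positive_predictivity(tp, fp))
```

Because the inputs are nothing more than two annotation lists, anyone holding the same reference annotations and the same algorithm output can repeat the calculation and obtain the same counts, which is the point of the disclosure requirement.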

Results

The experience of the past 20 years since the publication of the MIT-BIH Arrhythmia Database, and the AHA Database shortly thereafter, can be regarded as a grand experiment in shaping the direction of development of arrhythmia detectors. Until the databases became available, performance statistics were of little or no value since it was widely understood that each manufacturer designed its products using its own data, and designed its statistics to present the products in a favorable light. Conscientious developers who sought to make their algorithms more accurate faced pressure to match their competitors' products feature for feature, rather than spending effort and money making improvements that could not be quantified, and therefore added no perceived value to the product.

What, then, were the results of the experiment? In the early 1980s, the appearance of the databases marked a sea change in development efforts. End users and regulatory agencies began to ask manufacturers how well their devices worked on standard tests. Manufacturers had little choice but to perform the tests and report the results, and those whose algorithms did not measure up to their competitors' spent their development budgets in concentrated efforts to improve performance. The general standard of performance of commercial arrhythmia detectors improved rapidly, stimulated by the availability of the databases.

It would be incorrect, however, to suggest that manufacturers were capable of producing much better products in the late 1970s and chose instead to add bells and whistles in response to their customers' apparent lack of interest in performance. Rather, a lack of well-characterized data hindered the manufacturers' progress as well as that of academic researchers. Many industry and academic groups performed tedious evaluations in which unknown data were analyzed by their algorithms, and then the outputs of the algorithms were examined for errors. This evaluation approach is superficially attractive, because the process can be started at any time, using any available data. The major flaw in this approach is not that it introduced bias (although it did); it is that at the end of the process, it was just as expensive to evaluate the next version of the algorithm, because no investment was made in studying the data, only in characterizing the algorithm errors. Furthermore, without a reproducible test, it was impossible to know if any differences in measured performance of two algorithms were due to differences in the algorithms or differences in the data.

The successes of the MIT-BIH Arrhythmia Database, the AHA Database, and the European ST-T Database demonstrated the value of creating generally available, representative, and well-characterized collections of ECGs. Their limitations stimulated the development of other databases. In particular, the needs of academic researchers are frequently for much longer recordings, so that temporal patterns of change within a single subject (for example, diurnal variations over a period of 24 or 48 hours) can be observed in detail. Advances such as inexpensive high-capacity mass storage, laser printers, color graphics displays, high-speed/high-resolution analog-to-digital converters, and digital multichannel ECG recorders can allow us to avoid many of the problems we faced 25 years ago, as well as to gather higher quality data. The effort required to annotate the data in detail remains tedious and demanding, however.

The future in development of these databases may be in web-based collaborations between geographically scattered researchers, as in an ongoing project to develop a long-term ST database of 24-hour recordings [14]. This project is led by Franc Jager in Ljubljana (Slovenia), with participation from the developers of the European ST-T Database in Pisa and from our group at MIT. Using web servers, we are able to share data with our distant colleagues and to annotate these data collaboratively. Since our goal is to characterize ST changes in detail, and not primarily to identify arrhythmias (which are less common in these recordings than in the arrhythmia databases), we can use much more automation in the annotation process than would be reasonable when developing an arrhythmia database, where the introduction of bias in beat labels would be a greater concern. We meet as a group two or three times per year to confer on annotations and to plan work schedules for the next several months. In this way, we are creating a database roughly two orders of magnitude larger than the MIT-BIH Arrhythmia Database, in about the same amount of time.

George B. Moody is a research staff scientist in the Harvard-MIT Division of Health Sciences and Technology, and the designer and webmaster of PhysioNet. His research interests include automated analysis of physiologic signals, statistical pattern recognition, heart-rate variability, multivariate trend analysis and forecasting, and applications of artificial intelligence and advanced digital signal processing techniques to multiparameter physiologic monitoring.

Roger G. Mark, M.D., Ph.D., is distinguished professor of Health Sciences & Technology and professor of electrical engineering at MIT. He is a Co-PI of the Research Resource for Complex Physiologic Signals. Dr. Mark served as the co-director of the Harvard-MIT Division of Health Sciences & Technology from 1985 to 1996. Dr. Mark's research activities include physiological signal processing and database development, cardiovascular modeling, and intelligent patient monitoring. He led the group that developed the MIT-BIH Arrhythmia Database.

Address for Correspondence: George B. Moody, MIT, Room E25-505A, Cambridge, MA 02139 USA. E-mail: george@mit.edu.

References

[1] N.J. Holter, "New methods for heart studies," Science, vol. 134, p. 1214, 1961.

[2] P.S. Schluter, R.G. Mark, G.B. Moody et al., "Performance measures for arrhythmia detectors," in Computers in Cardiology 1980. Long Beach, CA: IEEE Comput. Soc. Press, 1981.

[3] R.G. Mark, P.S. Schluter, G.B. Moody et al., "An annotated ECG database for evaluating arrhythmia detectors," in Frontiers of Engineering in Health Care-1982, Proc. 4th Annu. Conf. IEEE EMBS. Long Beach, CA: IEEE Comput. Soc. Press, pp. 205-210.

[4] G.B. Moody and R.G. Mark, "The MIT-BIH Arrhythmia Database on CD-ROM and software for use with it," in Computers in Cardiology 1990. Los Alamitos, CA: IEEE Comput. Soc. Press, 1991, pp. 185-188.

[5] G.B. Moody, R.G. Mark, and A.L. Goldberger, "PhysioNet: A web-based resource for study of physiologic signals," IEEE Eng. Med. Biol. Mag., vol. 20, no. 3, pp. 70-75, 2001.

[6] G.B. Moody and R.G. Mark, "How can we predict real-world performance of an arrhythmia detector?" in Computers in Cardiology 1983. Long Beach, CA: IEEE Comput. Soc. Press, 1984, pp. 71-76.

[7] R.G. Mark and G.B. Moody, "Evaluation of automated arrhythmia monitors using an annotated ECG database," in Ambulatory Monitoring: Cardiovascular System and Allied Applications. The Hague: Martinus Nijhoff, 1984, pp. 339-357.

[8] Testing and Reporting Performance Results of Ventricular Arrhythmia Detection Algorithms [AAMI ECAR]. Assoc. for the Advancement of Medical Instrumentation, Arlington, VA, 1987.

[9] American National Standard for Ambulatory Electrocardiographs. AAMI/ANSI Standard EC38:1998, 1998.

[10] American National Standard for Testing and Reporting Performance Results of Cardiac Rhythm and ST Segment Measurement Algorithms. AAMI/ANSI Standard EC57:1998, 1998.

[11] G.B. Moody, C.L. Feldman, and J.J. Bailey, "Standards and applicable databases for long-term ECG monitoring," J. Electrocardiology, vol. 26 (suppl.), pp. 151-155, 1993.

[12] R.E. Hermes, D.B. Geselowitz, and G.C. Oliver, "Development, distribution, and use of the American Heart Association database for ventricular arrhythmia detector evaluation," in Computers in Cardiology 1980. Long Beach, CA: IEEE Comput. Soc. Press, 1981, pp. 263-266. [The AHA Database is available from: ECRI, 5200 Butler Pike, Plymouth Meeting, PA 19462 USA (http://www.healthcare.ecri.org/); Contact: Ms. Hedda Shupack, hshupack@ecri.org.]

[13] A. Taddei, A. Biagini, G. Distante et al., "The European ST-T database: development, distribution, and use," in Computers in Cardiology 1990. Los Alamitos, CA: IEEE Comput. Soc. Press, 1991, pp. 177-180. [The European ST-T Database is available from: Dept. of Bioengineering and Medical Informatics, National Research Council (CNR) Institute of Clinical Physiology, via Trieste, 41, 56126 Pisa, Italy; Contact: Alessandro Taddei, taddei@ifc.pi.cnr.it.]

[14] F. Jager, G.B. Moody, A. Taddei et al., "A long-term ST database for development and evaluation of ischemia detectors," in Computers in Cardiology 1998. Piscataway, NJ: IEEE Press, 1998, pp. 301-304.

IEEE ENGINEERING IN MEDICINE AND BIOLOGY

May/June 2001
