SVM

Uploaded by ameer405

Solaris Volume Manager

What matters most is that active data stays available even after a hardware failure, such as a failed disk.

RAID (Redundant Array of Independent Disks) levels:
1. RAID 0 (stripes and concatenations): no redundancy. Striping spreads I/O across disks and can make it faster.
2. RAID 1 (mirroring): data is mirrored on two or more disks, and reads can be served by several drives at the same time. SVM also supports RAID 1+0 and RAID 0+1.
3. RAID 5 (striping with parity): each disk holds both data and parity stripes. If possible, use hot spares with RAID 5.
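The RAID 5 parity idea fits in a few lines of shell arithmetic. In this toy sketch, small integers stand in for the data blocks of one stripe: the parity block is the XOR of the data blocks, so any single lost block can be rebuilt by XOR-ing the parity with the surviving blocks. The function names are hypothetical; SVM does the real work inside its driver and rotates which disk holds the parity from stripe to stripe.

```shell
#!/bin/sh
# Toy model of RAID 5 parity: small integers stand in for data blocks.

# xor_parity BLOCK...  -> prints the XOR of all arguments
xor_parity() {
    p=0
    for b in "$@"; do
        p=$(( p ^ b ))
    done
    echo "$p"
}

# rebuild_block PARITY SURVIVOR...  -> prints the missing block
# XOR-ing the parity with every surviving block leaves only the lost block.
rebuild_block() {
    xor_parity "$@"
}

# One stripe: three data blocks plus one parity block.
d0=165; d1=60; d2=15
parity=$(xor_parity "$d0" "$d1" "$d2")
echo "parity=$parity"                                        # prints parity=150
# Pretend the disk holding d1 failed and rebuild it from the rest.
echo "rebuilt d1=$(rebuild_block "$parity" "$d0" "$d2")"    # prints rebuilt d1=60
```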

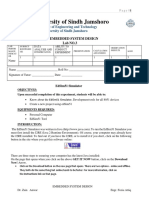

| Requirement | RAID 0 Concatenation | RAID 0 Stripe | RAID 1 Mirror | RAID 5 | Soft Partition |
|---|---|---|---|---|---|
| Redundancy | No | No | Yes | Yes | No |
| Improved read performance | No | Yes | Yes | Yes | No |
| Improved write performance | No | Yes | No | No | No |
| More than 8 slices per device | No | No | No | No | Yes |
| Larger available storage space | Yes | Yes | No | Yes | No |

A RAID 0 concatenation writes data to the first disk until it is full, then moves to the next one. A RAID 0 stripe spreads data equally across all disks. RAID 5 is a stripe set with parity. A soft partition divides a volume into several smaller volumes.

SVM uses virtual disks to manage physical ones. A virtual disk is called a volume or metadevice.
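The "Larger available storage space" row follows from simple capacity arithmetic. A rough sketch, assuming the usual rules of thumb and ignoring metadata overhead (the block counts metastat reports will differ slightly): a concatenation uses every block of every component, a stripe is limited by its smallest component times the number of components, a mirror is as large as its smallest submirror, and RAID 5 (at least three components) gives one component's worth of space to parity. The function name and sizes are hypothetical.

```shell
#!/bin/sh
# usable_size LAYOUT SIZE...  -> usable capacity, in the same unit as the SIZE arguments
# LAYOUT: concat | stripe | mirror | raid5 (hypothetical helper, not an SVM command)
# Rough model only: metadata overhead is ignored.
usable_size() {
    layout=$1; shift
    [ "$#" -gt 0 ] || { echo "need at least one size" >&2; return 1; }
    n=$#
    sum=0
    min=$1
    for s in "$@"; do
        sum=$(( sum + s ))
        if [ "$s" -lt "$min" ]; then min=$s; fi
    done
    case $layout in
        concat) echo "$sum" ;;                # every block of every component is used
        stripe) echo $(( min * n )) ;;        # limited by the smallest component
        mirror) echo "$min" ;;                # each SIZE is one submirror
        raid5)
            [ "$n" -ge 3 ] || { echo "RAID 5 needs at least 3 components" >&2; return 1; }
            echo $(( min * (n - 1) )) ;;      # one component's worth holds parity
        *) echo "unknown layout: $layout" >&2; return 1 ;;
    esac
}

# Four 4 GB slices plus one 8 GB slice: concat 24, stripe 20, raid5 16
for l in concat stripe raid5; do
    echo "$l: $(usable_size "$l" 4 4 4 4 8) GB"
done
# Two-way mirror of 4 GB submirrors: 4
echo "mirror: $(usable_size mirror 4 4) GB"
```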

The file system sees a volume as a physical disk. SVM converts I/O directed to or from a volume into I/O to or from the physical member disks. In other words, SVM is a layer of software that sits between the physical disks and the file system. SVM volumes are built from disk slices or from other volumes. SVM is integrated into SMF:

    # svcs -a | egrep "md|meta"
    disabled       Jun_10   svc:/network/rpc/mdcomm:default
    disabled       Jun_10   svc:/network/rpc/metamed:default
    disabled       Jun_10   svc:/network/rpc/metamh:default
    online         Jun_10   svc:/system/metainit:default
    online         Jun_10   svc:/network/rpc/meta:default
    online         Jun_10   svc:/system/mdmonitor:default
    online         Jun_10   svc:/system/fmd:default

State Database and Replicas

The SVM state database holds configuration and status information for all volumes, hot spares and disk sets. For redundancy, multiple replicas (copies) of the database are maintained. At least 3 replicas are needed: when some of them fail, SVM uses a majority consensus algorithm to decide which replicas are valid, and a majority is half + 1.

Replica facts:
1. The maximum number of replicas per disk set is 50.
2. By default, a replica is 4 MB (8192 disk blocks of 512 bytes).
3. Do not store replicas on external storage.
4. Replicas cannot be placed on the root (/), /usr or swap slices.
5. Even if some replicas fail, the system keeps running as long as at least half of them are available. If fewer than half are available, the system panics. To reboot into multiuser mode, a majority (half + 1) must be available.

Creating State Database Replicas

A Solaris disk label is traditionally limited to 8 slices (partitions), numbered 0-7. Use slice 7 for replicas. Use format to check how the disks are partitioned.

    # metadb -afc 3 c1t1d0s7

-a = add replicas
-f = force creation of the initial replicas
-c = number of replicas to create on each slice

    # metadb -i
            flags           first blk       block count
         a    u  r          16              8192            /dev/dsk/c1t1d0s7
         a    u  r          8208            8192            /dev/dsk/c1t1d0s7
         a    u  r          16400           8192            /dev/dsk/c1t1d0s7

(8208 = 16 + 8192, 16400 = 8208 + 8192)

    r - replica does not have device relocation information
    o - replica active prior to last mddb configuration change
    u - replica is up to date
    l - locator for this replica was read successfully
    c - replica's location was in /etc/lvm/mddb.cf
    p - replica's location was patched in kernel
    m - replica is master, this is replica selected as input
    W - replica has device write errors
    a - replica is active, commits are occurring to this replica
    M - replica had problem with master blocks
    D - replica had problem with data blocks
    F - replica had format problems
    S - replica is too small to hold current data base
    R - replica had device read errors

Deleting replicas

There are 8 replicas now:

    # metadb
            flags           first blk       block count
         a    u  r          16              8192            /dev/dsk/c1t1d0s7
         a    u  r          8208            8192            /dev/dsk/c1t1d0s7
         a    u  r          16400           8192            /dev/dsk/c1t1d0s7
         a    u  r          16              200             /dev/dsk/c1t0d0s7
         a    u  r          216             200             /dev/dsk/c1t0d0s7
         a    u  r          416             200             /dev/dsk/c1t0d0s7
         a    u  r          616             200             /dev/dsk/c1t0d0s7
         a    u  r          816             200             /dev/dsk/c1t0d0s7

Delete the five replicas on c1t0d0s7:

    # metadb -d -f /dev/dsk/c1t0d0s7

Now there are 3:

    # metadb
            flags           first blk       block count
         a    u  r          16              8192            /dev/dsk/c1t1d0s7
         a    u  r          8208            8192            /dev/dsk/c1t1d0s7
         a    u  r          16400           8192            /dev/dsk/c1t1d0s7

Tips:
1. Take good care of (back up) /etc/lvm/mddb.cf (the SVM database configuration file) and /etc/lvm/md.cf (the metadevice configuration file).
2. Back up disk and partition information: keep a copy of the output of prtvtoc and metastat -p.

@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@

SVM - RAID 0 - Concatenation and Stripe Volumes

RAID 0 does not provide any redundancy, but it lets you expand storage capacity. There are three types:
1. RAID-0 Stripe spreads data equally across all components (disks, slices or soft partitions).
2. RAID-0 Concatenation writes data to the first available component and then moves on to the next one.
3. RAID-0 Concatenated Stripe is a stripe that has been expanded with additional components.

You will probably use these volumes to build submirrors (in order to create mirrors later). A RAID-0 volume with more than one component cannot be used for file systems that are used during an OS upgrade or installation: /, /usr, /var, /opt and swap.

The logical data segment of a RAID-0 stripe (its size is given in Kbytes, Mbytes or blocks) is called the interlace. Different values can be used to tune application I/O performance. The default is 16 KB (32 blocks).

Creating RAID-0

First you need state database replicas (here created on 2 slices, 6 replicas in total):

    # metadb -afc 3 c1t0d0s7 c1t1d0s7
    # metadb
            flags           first blk       block count
         a    u  r          16              8192            /dev/dsk/c1t0d0s7
         a    u  r          8208            8192            /dev/dsk/c1t0d0s7
         a    u  r          16400           8192            /dev/dsk/c1t0d0s7
         a    u  r          16              8192            /dev/dsk/c1t1d0s7
         a    u  r          8208            8192            /dev/dsk/c1t1d0s7
         a    u  r          16400           8192            /dev/dsk/c1t1d0s7

Also get familiar with the metainit command (read its man page).

Creating Stripe volume - example

    # metainit d40 1 2 c1t1d0s4 c1t0d0s4
    d40: Concat/Stripe is setup

d40 = volume/metadevice name
1 = number of stripes
2 = number of components in the stripe

    # metastat
    d40: Concat/Stripe
        Size: 8388252 blocks (4.0 GB)
        Stripe 0: (interlace: 32 blocks)
            Device     Start Block  Dbase  Reloc
            c1t1d0s4          0     No     No
            c1t0d0s4          0     No     No

    Device Relocation Information:
    Device   Reloc  Device ID
    c1t1d0   No
    c1t0d0   No

Creating Concatenation Volume - example

    # metainit d40 2 1 c1t1d0s4 1 c1t0d0s4
    d40: Concat/Stripe is setup

2 = number of stripes
1 = one component per stripe

    # metastat
    d40: Concat/Stripe
        Size: 8391888 blocks (4.0 GB)
        Stripe 0:
            Device     Start Block  Dbase  Reloc
            c1t1d0s4          0     No     No
        Stripe 1:
            Device     Start Block  Dbase  Reloc
            c1t0d0s4          0     No     No

    Device Relocation Information:
    Device   Reloc  Device ID
    c1t1d0   No
    c1t0d0   No

Creating a new FS

Once you have a metadevice, create a file system on it:

    # newfs /dev/md/rdsk/d40

Add a line to /etc/vfstab (the table of file system defaults) and mount /.0:

    /dev/md/dsk/d40  /dev/md/rdsk/d40  /.0  ufs  2  yes  -

    # mount /.0

Expanding storage capacity for existing data

If you have a RAID-0 volume (concatenation or stripe), you can expand it (which turns it into a concatenated stripe) without rebooting or unmounting the FS. Check what you have:

    # metastat
    d40: Concat/Stripe
        Size: 8388252 blocks (4.0 GB)
        Stripe 0: (interlace: 32 blocks)
            Device     Start Block  Dbase  Reloc
            c1t1d0s4          0     No     No
            c1t0d0s4          0     No     No

    Device Relocation Information:
    Device   Reloc  Device ID
    c1t1d0   No
    c1t0d0   No

Say the stripe is mounted on the /.0 file system:

    /dev/md/dsk/d40        3.9G   4.0M   3.9G     1%    /.0

Attach a new slice to the existing metadevice/volume:

    # metattach d40 c1t1d0s5
    d40: component is attached

Check what you have now; the size is bigger:

    # metastat
    d40: Concat/Stripe
        Size: 18201816 blocks (8.7 GB)
        Stripe 0: (interlace: 32 blocks)
            Device     Start Block  Dbase  Reloc
            c1t0d0s4          0     No     No
            c1t1d0s4          0     No     No
        Stripe 1:
            Device     Start Block  Dbase  Reloc
            c1t1d0s5          0     No     No

    Device Relocation Information:
    Device   Reloc  Device ID
    c1t0d0   No
    c1t1d0   No

But the FS still shows the same size:

    # df -h /.0
    Filesystem             size   used  avail capacity  Mounted on
    /dev/md/dsk/d40        3.9G   4.0M   3.9G     1%    /.0

Grow the FS:

    # growfs -M /.0 /dev/md/rdsk/d40

-M = grow a mounted FS; growfs write-locks the FS while it expands it.

Now you have a bigger FS:

    # df -h /.0
    Filesystem             size   used  avail capacity  Mounted on
    /dev/md/dsk/d40        8.5G   8.7M   8.5G     1%    /.0

Deleting/Clearing a volume (and its data)

    # umount /.0

    # metaclear d40
    d40: Concat/Stripe is cleared

SVM - RAID 1 (Mirror) (the boot-related steps below are SPARC-only)

Now we can make full use of RAID-0 volumes, since they can be used as submirrors. The maximum number of submirrors is 4; most people use 2 of them in a two-way mirror.

If you have a three-way mirror, you can take one submirror offline and back it up (the offline submirror is read-only). You still have redundancy, because the other 2 submirrors stay online. Don't confuse offline and detach: taking a submirror offline is done for maintenance, while detaching it is done to remove it (permanently).

Basically, you start by creating a one-way mirror (with only one submirror) and later attach the second one. You can also build RAID 0+1 (stripes that are mirrored) or RAID 1+0 (mirrors that are striped).

Creating RAID-1 (from unused slices) - example

0. First you need state database replicas (created on 2 slices, 6 replicas in total):

    # metadb -afc 3 c1t0d0s7 c1t1d0s7
    # metadb
            flags           first blk       block count
         a    u  r          16              8192            /dev/dsk/c1t0d0s7
         a    u  r          8208            8192            /dev/dsk/c1t0d0s7
         a    u  r          16400           8192            /dev/dsk/c1t0d0s7
         a    u  r          16              8192            /dev/dsk/c1t1d0s7
         a    u  r          8208            8192            /dev/dsk/c1t1d0s7
         a    u  r          16400           8192            /dev/dsk/c1t1d0s7

1. Create two submirrors (stripes with one component):

    # metainit d15 1 1 c1t0d0s5
    # metainit d25 1 1 c1t1d0s5

2. Create a one-way mirror (from one stripe):

    # metainit d5 -m d15

3. Attach the second submirror to the mirror:

    # metattach d5 d25

Resynchronization starts automatically.

Note: instead of steps 2 and 3, you could create a two-way mirror in one command:

    # metainit d5 -m d15 d25

But this does NOT start resynchronization, so the two submirrors may hold different data, which means trouble in the future!

4. Create a new FS:

    # newfs /dev/md/rdsk/d5

5. Add a line to /etc/vfstab (the table of file system defaults) and mount the FS, for example on /myfs:

    /dev/md/dsk/d5  /dev/md/rdsk/d5  /myfs  ufs  2  yes  -

    # mount /myfs
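Every /etc/vfstab entry needs seven whitespace-separated fields (device to mount, device to fsck, mount point, FS type, fsck pass, mount at boot, mount options), with "-" as the placeholder for an empty field; a missing trailing "-" is an easy slip. A small sketch that flags malformed entries before you mount or reboot; the function name is hypothetical and it only reads the file it is given.

```shell
#!/bin/sh
# check_vfstab FILE -> prints entries that do not have exactly 7 fields;
# returns 1 if any were found (hypothetical helper; it only reads FILE)
check_vfstab() {
    awk '
        /^[ \t]*#/ || NF == 0 { next }    # skip comments and blank lines
        NF != 7 { printf "line %d: %d fields: %s\n", NR, NF, $0; bad = 1 }
        END { exit bad }
    ' "$1"
}

# Example with a scratch file (the second entry lacks the trailing "-"):
tmp=$(mktemp)
cat > "$tmp" <<'EOF'
#device         device           mount   FS      fsck    mount   mount
#to mount       to fsck          point   type    pass    at boot options
/dev/md/dsk/d5  /dev/md/rdsk/d5  /myfs   ufs     2       yes     -
/dev/md/dsk/d40 /dev/md/rdsk/d40 /.0     ufs     2       yes
EOF
check_vfstab "$tmp" || echo "fix the entries above before rebooting"
rm -f "$tmp"
```

On a live system you would point it at /etc/vfstab itself.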

Check what you have now:

    # metastat
    d5: Mirror
        Submirror 0: d15
          State: Okay
        Submirror 1: d25
          State: Resyncing
        Resync in progress: 10 % done
        Pass: 1
        Read option: roundrobin (default)
        Write option: parallel (default)
        Size: 4195944 blocks (2.0 GB)

    d15: Submirror of d5
        State: Okay
        Size: 4195944 blocks (2.0 GB)
        Stripe 0:
            Device     Start Block  Dbase  State  Reloc  Hot Spare
            c1t0d0s5          0     No     Okay   No

    d25: Submirror of d5
        State: Okay
        Size: 4195944 blocks (2.0 GB)
        Stripe 0:
            Device     Start Block  Dbase  State  Reloc  Hot Spare
            c1t1d0s5          0     No     Okay   No

The resync is in progress, which is exactly what we want.

SVM has different read and write policies for RAID-1; the defaults are marked "(default)" in the output above. Read more about the other options in Sun doc 816-4520-12, page 100. The pass number (0-9) sets the order in which mirrors are resynchronized at boot time: lower numbers are resynchronized first, and 0 means resynchronization is skipped (use it for a mirror mounted read-only).
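To illustrate how the read options differ, here is a toy model that only decides which submirror would serve a given read: roundrobin cycles through the submirrors, geometric splits the mirror's logical block range into equal parts (one per submirror), and first always reads from the first submirror. This is a simplified model of the policy descriptions, not SVM's driver code; the function and its arguments are hypothetical.

```shell
#!/bin/sh
# pick_submirror POLICY BLOCK NSUB SIZE SEQ   (hypothetical helper)
#   POLICY: roundrobin | geometric | first
#   BLOCK:  logical block being read
#   NSUB:   number of submirrors
#   SIZE:   mirror size in blocks
#   SEQ:    sequence number of this read (used by roundrobin only)
# Prints the 0-based index of the submirror that would serve the read.
pick_submirror() {
    policy=$1 block=$2 nsub=$3 size=$4 seq=$5
    case $policy in
        roundrobin) echo $(( seq % nsub )) ;;
        geometric)
            # equal logical address ranges, one per submirror (ceiling division)
            chunk=$(( (size + nsub - 1) / nsub ))
            echo $(( block / chunk )) ;;
        first) echo 0 ;;
        *) echo "unknown policy: $policy" >&2; return 1 ;;
    esac
}

# Two-way mirror d5 of 4195944 blocks; the 7th read, of block 3000000.
# Prints submirror 1 for roundrobin and geometric, submirror 0 for first.
for p in roundrobin geometric first; do
    echo "$p -> submirror $(pick_submirror "$p" 3000000 2 4195944 7)"
done
```

Geometric keeps each submirror's reads within one address range, which can shorten seeks; roundrobin simply balances the load.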

Example of changing these parameters:

    # metaparam -w serial -r geometric -p 4 d5

See what you have after the change:

    # metaparam d5
    d5: Mirror current parameters are:
        Pass: 4
        Read option: geometric (-g)
        Write option: serial (-S)

    # metastat d5
    d5: Mirror
        Submirror 0: d15
          State: Okay
        Submirror 1: d25
          State: Okay
        Pass: 4
        Read option: geometric (-g)
        Write option: serial (-S)

Creating RAID-1 (from existing file systems) - example

No reboot is required if the FS can be unmounted; if you deal with /, /usr or swap, you do need to reboot. The root FS needs some extra work: installing a boot block on the second disk and setting that disk up as an alternate boot device. Let's put the steps in a table.

| Action \ FS type | FS can be unmounted (/myfs) | FS cannot be unmounted (/usr) | root FS (/) |
|---|---|---|---|
| Create 2 submirrors | # metainit -f d14 1 1 c1t0d0s4 (-f forces it, since the FS is mounted on this slice); # metainit d24 1 1 c1t1d0s4 | # metainit -f d13 1 1 c1t0d0s3 (-f forces it, since the FS is mounted on this slice); # metainit d23 1 1 c1t1d0s3 | # metainit -f d10 1 1 c1t0d0s0 (-f forces it, since the FS is mounted on this slice); # metainit d20 1 1 c1t1d0s0 |
| Create one-way mirror | # metainit d4 -m d14 | # metainit d3 -m d13 | # metainit d0 -m d10 |
| Unmount FS | # umount /myfs | Do nothing, since you cannot unmount it | Do nothing, since you cannot unmount it |
| Edit /etc/vfstab so the FS points to the mirror | Have this line: /dev/md/dsk/d4 /dev/md/rdsk/d4 /myfs ufs 2 yes - | Have this line: /dev/md/dsk/d3 /dev/md/rdsk/d3 /usr ufs 1 yes - | # metaroot d0 (this edits /etc/vfstab and /etc/system). This line shows up in /etc/vfstab: /dev/md/dsk/d0 /dev/md/rdsk/d0 / ufs 1 no - ; these lines show up in /etc/system: * Begin MDD root info (do not edit) ; rootdev:/pseudo/md@0:0,0,blk ; * End MDD root info (do not edit) |
| Mount FS | # mount /myfs | n/a | n/a |
| Reboot | No need to reboot here | Reboot | Reboot |
| Attach second submirror (resync starts) | # metattach d4 d24 | # metattach d3 d23 | # metattach d0 d20 |
| Install boot block on second slice | n/a | n/a | # installboot /usr/platform/SUNW,UltraAX-i2/lib/fs/ufs/bootblk /dev/rdsk/c1t1d0s0 (use uname -i to find the platform directory, here SUNW,UltraAX-i2) |
| Set up alternate boot path | n/a | n/a | See the steps below |

Let's find the physical path of the alternate root device, the slice we attached to the one-way mirror:

    # ls -l /dev/dsk/c1t1d0s0
    /dev/dsk/c1t1d0s0 -> ../../devices/pci@1f,0/pci@1/scsi@8/sd@1,0:a

Write down the physical path (the part after the /devices directory):

    /pci@1f,0/pci@1/scsi@8/sd@1,0:a

Replace sd with disk, and you have:

    /pci@1f,0/pci@1/scsi@8/disk@1,0:a
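The two manual edits above (keep what follows /devices, then change sd to disk) can be scripted. A minimal sketch, assuming the link target has the same shape as the c1t1d0s0 example and that the disk node is named sd@ as there; the function name is hypothetical, and it is worth comparing the result with the aliases the OBP lists (devalias with no arguments) before relying on it.

```shell
#!/bin/sh
# obp_path LINK_TARGET -> prints an OpenBoot path for a /dev/dsk link target
# (hypothetical helper; assumes the sd@ disk node naming shown above)
obp_path() {
    # 1. keep only what follows the devices directory
    # 2. rename the Solaris driver node "sd@" to the OBP node name "disk@"
    printf '%s\n' "$1" | sed -e 's|^.*/devices||' -e 's|/sd@|/disk@|'
}

# On the live system you could feed it the real link target, for example:
#   obp_path "$(ls -l /dev/dsk/c1t1d0s0 | awk '{print $NF}')"
obp_path "../../devices/pci@1f,0/pci@1/scsi@8/sd@1,0:a"
# prints /pci@1f,0/pci@1/scsi@8/disk@1,0:a
```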

Go to the OpenBoot PROM with init 0:

    # init 0

Define an alias for the mirror boot disk. The alias points to the physical path:

    ok devalias mirrdisk /pci@1f,0/pci@1/scsi@8/disk@1,0:a

Define the order of the boot devices:

    ok setenv boot-device disk mirrdisk
    boot-device =         disk mirrdisk

Check that everything is okay:

    ok printenv

Copy the contents of the temporary (nvedit) buffer to NVRAMRC and discard the temporary buffer:

    ok nvstore

Keep in mind that an alias defined with devalias is NOT persistent across a reset; nvstore saves only what was edited with nvedit, not a devalias.

Reset the OpenBoot PROM to verify that the new settings have been saved:

    ok reset        (or reset-all)

If nvalias is used instead of devalias, the alias is stored in NVRAMRC and persists across reboots. Just make sure that use-nvramrc? is true:

    ok setenv use-nvramrc? true
    use-nvramrc? =        true
    ok nvalias mirrdisk /pci@1f,0/pci@1/scsi@8/disk@1,0:a

Creating RAID-1 (from swap)

1. Create two submirrors (stripes with one component); -f is needed because the slice is in use as swap:

    # metainit -f d11 1 1 c1t0d0s1
    # metainit d21 1 1 c1t1d0s1

2. Create a one-way mirror (from one stripe):

    # metainit d1 -m d11

3. Edit the "swap" line in /etc/vfstab:

    /dev/md/dsk/d1  -  -  swap  -  no  -

4. Reboot.

5. Attach the second submirror to the mirror:

    # metattach d1 d21

6. Your dump device is still /dev/dsk/c1t0d0s1. Change it to the metadevice:

    # dumpadm -d swap
          Dump content: kernel pages
           Dump device: /dev/md/dsk/d1 (swap)
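The same moves repeat for every slice mirrored in this document (/myfs, /usr, root, swap): build two single-slice submirrors, build a one-way mirror, switch /etc/vfstab (metaroot for root) and reboot if the slice is in use, then attach the second submirror. A dry-run sketch that only prints the commands, assuming the dS / d1S / d2S naming used above (S = slice number); the function name is hypothetical and nothing is executed.

```shell
#!/bin/sh
# mirror_plan SLICE DISK1 DISK2 -> prints the SVM commands to mirror one slice
# Naming convention (as in the examples): mirror dS, submirrors d1S and d2S,
# where S is the slice number. Dry run only: commands are printed, not run.
mirror_plan() {
    s=$1 disk1=$2 disk2=$3
    echo "metainit -f d1$s 1 1 ${disk1}s$s"    # -f as in the examples: slice in use
    echo "metainit d2$s 1 1 ${disk2}s$s"
    echo "metainit d$s -m d1$s"
    if [ "$s" -eq 0 ]; then
        echo "metaroot d$s"
    else
        echo "# edit /etc/vfstab: point the entry at /dev/md/dsk/d$s"
    fi
    echo "# reboot here if the slice could not be unmounted"
    echo "metattach d$s d2$s"
    if [ "$s" -eq 0 ]; then
        # root only: make the second disk bootable (SPARC, see the table above)
        echo "installboot /usr/platform/\$(uname -i)/lib/fs/ufs/bootblk /dev/rdsk/${disk2}s0"
    fi
}

# The swap example above (slice 1):
mirror_plan 1 c1t0d0 c1t1d0
```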

Postscript: before creating the state database replicas, you may need to copy the VTOC from the current disk to the mirror disk:

    # prtvtoc /dev/rdsk/c1t0d0s2 | fmthard -s - /dev/rdsk/c1t1d0s2

prtvtoc - shows disk geometry and partitioning
fmthard - writes a label (VTOC) to a hard disk

DISK REPLACEMENT
==============

    # Array was d0 with d10 and d20 as submirrors
    # Detach the faulty disk
    root@server:# metadetach -f d0 d10
    # and remove the disk from the array
    root@server:# metaclear d10
    # delete the state database replicas (SDR) from the disk
    root@server:# metadb -f -d c?t?d?s?
    # If hot swap, then replace the disk
    # Copy the vtoc from the good disk to a file
    root@server:# prtvtoc /dev/rdsk/c?t?d?s? > vtoc.c?t?d?s?
    # and copy it to the new disk
    root@server:# fmthard -s vtoc.c?t?d?s? /dev/rdsk/c?t?d?s?
    # Add the new disk to the array
    root@server:# metainit d10 1 1 c?t?d?s?
    # and attach it to the array. It will sync to the existing drive
    root@server:# metattach d0 d10
    # add an SDR to the new disk
    root@server:# metadb -a -c 1 c?t?d?s?

Root disk mirroring in SVM
==================

1. Copy the partition table of the first disk to its mirror.

    # prtvtoc /dev/rdsk/c0t0d0s2 | fmthard -s - /dev/rdsk/c0t1d0s2

2. Create at least 2 DiskSuite state database replicas on each disk. A state database replica contains the DiskSuite configuration and state information.

    # metadb -a -f -c2 /dev/dsk/c0t0d0s3 /dev/dsk/c0t1d0s3

Description of metadb flags:
-a -- add replicas
-f -- force (needed the first time the databases are created)
-c2 -- create 2 replicas in each slice

3. Mirror the root slice.

    # metainit -f d10 1 1 c0t0d0s0
    # metainit -f d20 1 1 c0t1d0s0
    # metainit d0 -m d10
    # metaroot d0    (use this command only on the root slice!)

4. Mirror the swap slice.

    # metainit -f d11 1 1 c0t0d0s1
    # metainit -f d21 1 1 c0t1d0s1
    # metainit d1 -m d11

5. Mirror the var slice.

    # metainit -f d14 1 1 c0t0d0s4
    # metainit -f d24 1 1 c0t1d0s4
    # metainit d4 -m d14

6. Mirror the usr slice.

    # metainit -f d15 1 1 c0t0d0s5
    # metainit -f d25 1 1 c0t1d0s5
    # metainit d5 -m d15

7. Mirror the opt slice.

    # metainit -f d16 1 1 c0t0d0s6
    # metainit -f d26 1 1 c0t1d0s6
    # metainit d6 -m d16

8. Mirror the home slice.

    # metainit -f d17 1 1 c0t0d0s7
    # metainit -f d27 1 1 c0t1d0s7
    # metainit d7 -m d17

9. Update /etc/vfstab to mount the mirrors after boot.

10. Reboot the system.

    # lockfs -fa
    # init 6

11. Attach the second submirror to each mirror. This synchronizes the data from the boot disk to the mirror drive.

    # metattach d0 d20
    # metattach d1 d21
    # metattach d4 d24
    # metattach d5 d25
    # metattach d6 d26
    # metattach d7 d27

SVM - soft partition

If you need to divide a disk or volume into more than 8 partitions, soft partitions are what you need. As a best practice, place a soft partition either directly on a disk slice or on top of a mirror, stripe or RAID-5 volume (a volume built from slices); avoid other combinations. Each soft partition can hold a separate file system. Say you have 100 users: you can create 100 soft partitions on a RAID-1 or RAID-5 volume and give each user a home directory on a separate FS.

Example 1: Creating a 16 GB soft partition on the whole disk (slice 2).

-p = a soft partition is specified.

    # metainit d10 -p c1t1d0s2 16g
    d10: Soft Partition is setup
    # metastat d10
    d10: Soft Partition
        Device: c1t1d0s2
        State: Okay
        Size: 33554432 blocks (16 GB)
            Device     Start Block  Dbase  Reloc
            c1t1d0s2       3636     No     No

            Extent              Start Block              Block count
                 0                     3637                 33554432

    Device Relocation Information:
    Device   Reloc  Device ID
    c1t1d0   No
    # metastat -p
    d10 -p c1t1d0s2 -o 3637 -b 33554432

Create a new FS:

    # newfs -v /dev/md/rdsk/d10

Mount the FS. Add a line to /etc/vfstab:

    /dev/md/dsk/d10  /dev/md/rdsk/d10  /.1  ufs  2  yes  -

    # mount /.1

Check the FS:

    # df -h /.1
    Filesystem             size   used  avail capacity  Mounted on
    /dev/md/dsk/d10         16G    16M    16G     1%    /.1

Example 2: Create 11 soft partitions on one disk (a disk can have at most 8 traditional partitions). This assumes the d10 from Example 1 has been cleared first. The loops use csh syntax:

    # foreach i ( 1 2 3 4 5 6 7 8 9 10 11 )
    foreach? metainit d${i}0 -p c1t1d0s2 1g
    foreach? end

    # metastat -p
    d110 -p c1t1d0s2 -o 20975167 -b 2097152
    d100 -p c1t1d0s2 -o 18878014 -b 2097152
    d90 -p c1t1d0s2 -o 16780861 -b 2097152
    d80 -p c1t1d0s2 -o 14683708 -b 2097152
    d70 -p c1t1d0s2 -o 12586555 -b 2097152
    d60 -p c1t1d0s2 -o 10489402 -b 2097152
    d50 -p c1t1d0s2 -o 8392249 -b 2097152
    d40 -p c1t1d0s2 -o 6295096 -b 2097152
    d30 -p c1t1d0s2 -o 4197943 -b 2097152
    d20 -p c1t1d0s2 -o 2100790 -b 2097152
    d10 -p c1t1d0s2 -o 3637 -b 2097152

Create the new FS(s):

    # foreach i ( 1 2 3 4 5 6 7 8 9 10 11 )
    foreach? newfs /dev/md/rdsk/d${i}0
    foreach? end

Mount the new FS(s): update /etc/vfstab (the mount points /.1 through /.11 must exist):

    # foreach i ( 1 2 3 4 5 6 7 8 9 10 11 )
    foreach? echo "/dev/md/dsk/d${i}0 /dev/md/rdsk/d${i}0 /.${i} ufs 2 yes -" >> /etc/vfstab
    foreach? end
    # mountall

Let's say you want to add 2 GB to a soft partition (which has 1 GB):

    # metattach d110 2g
    d110: Soft Partition has been grown

You can see that the soft partition now has 3 GB:

    # metastat d110
    d110: Soft Partition
        Device: c1t1d0s2
        State: Okay
        Size: 6291456 blocks (3.0 GB)
            Device     Start Block  Dbase  Reloc
            c1t1d0s2       3636     No     No

But the FS is still 1 GB, and you need to grow it:

    # df -h /.11
    Filesystem             size   used  avail capacity  Mounted on
    /dev/md/dsk/d110       962M   1.0M   904M     1%    /.11

Use:

    # growfs -M /.11 /dev/md/rdsk/d110

With -M, growfs works on the mounted FS, so it does not have to be unmounted. You are good to go now; all data is still there on /.11:

    # df -h /.11
    Filesystem             size   used  avail capacity  Mounted on
    /dev/md/dsk/d110       2.8G   3.0M   2.8G     1%    /.11
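The -o offsets in the metastat -p output of Example 2 follow a simple pattern: each 1 GB extent (2097152 blocks of 512 bytes) starts one block after the previous one ends, which matches the one-block extent header (watermark) SVM writes in front of each extent. A small sketch that reproduces those offsets, assuming equal-size soft partitions allocated back to back from the first extent at block 3637; the function name is hypothetical.

```shell
#!/bin/sh
# sp_offsets FIRST SIZE COUNT -> prints the -o offset of each soft partition
#   FIRST: start block of the first extent (3637 above)
#   SIZE:  blocks per soft partition (1g = 2097152 blocks of 512 bytes)
#   COUNT: number of soft partitions created back to back
sp_offsets() {
    off=$1 size=$2 count=$3
    i=1
    while [ "$i" -le "$count" ]; do
        echo "d${i}0 -o $off -b $size"
        off=$(( off + size + 1 ))   # +1 block for the next extent header
        i=$(( i + 1 ))
    done
}

# Reproduces the Example 2 layout; the last line is d110 -o 20975167 -b 2097152
sp_offsets 3637 2097152 11
```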

SVM Maintenance

Attaching/Detaching a submirror

As you saw in the section on creating SVM RAID-1, the metattach command works like this:

    # metattach d4 d24          (attach a submirror to a mirror)

or

    # metattach d40 c1t1d0s5    (attach a slice to a stripe)

With metattach, resynchronization of the new submirror starts automatically. Sometimes you need to do the opposite and detach a submirror:

    # metadetach d4 d24

This breaks the mirror permanently.

Placing a submirror offline/online

This is a different story, since taking a submirror offline does not break the mirror. With metaoffline, SVM stops reading from and writing to the offlined submirror. During this time all writes are tracked, and once the submirror is back online, the data is resynchronized.

    # metaoffline d4 d24
    d4: submirror d24 is offlined

An offline submirror can still be mounted read-only (here on /.1). You can use this to back up the data from the submirror (/.1), but note that d4 has no redundancy during this time.

    /dev/md/dsk/d4     2055643   2195  1991779   1%   /.0
    /dev/md/dsk/d24    2055643   2195  1991779   1%   /.1  (read-only)

An offline submirror is brought back online with the metaonline command or by rebooting the machine.

    # metaonline d4 d24
    d4: submirror d24 is onlined

Replacing components of a submirror or of a RAID-5 metadevice

Two component states require replacement: Last Erred and Maintenance. Always replace components in the Maintenance state first.

Let's try an example. Check your mirror:

    # metastat d4
    d4: Mirror
        Submirror 0: d14
          State: Okay
        Submirror 1: d24
          State: Okay
        Pass: 1
        Read option: roundrobin (default)
        Write option: parallel (default)
        Size: 4195944 blocks (2.0 GB)

    d14: Submirror of d4
        State: Okay
        Size: 4195944 blocks (2.0 GB)
        Stripe 0:
            Device     Start Block  Dbase  State  Reloc  Hot Spare
            c1t0d0s4          0     No     Okay   No

    d24: Submirror of d4
        State: Okay
        Size: 4195944 blocks (2.0 GB)
        Stripe 0:
            Device     Start Block  Dbase  State  Reloc  Hot Spare
            c1t1d0s4          0     No     Okay   No

    Device Relocation Information:
    Device   Reloc  Device ID
    c1t0d0   No
    c1t1d0   No

Say we want to replace the slice in submirror d24:

    # metareplace d4 c1t1d0s4 c1t1d0s5
    d4: device c1t1d0s4 is replaced with c1t1d0s5

See what we have now; a resync is in progress:

    # metastat d4
    d4: Mirror
        Submirror 0: d14
          State: Okay
        Submirror 1: d24
          State: Resyncing
        Resync in progress: 3 % done
        Pass: 1
        Read option: roundrobin (default)
        Write option: parallel (default)
        Size: 4195944 blocks (2.0 GB)

    d14: Submirror of d4
        State: Okay
        Size: 4195944 blocks (2.0 GB)
        Stripe 0:
            Device     Start Block  Dbase  State      Reloc  Hot Spare
            c1t0d0s4          0     No     Okay       No

    d24: Submirror of d4
        State: Resyncing
        Size: 4195944 blocks (2.0 GB)
        Stripe 0:
            Device     Start Block  Dbase  State      Reloc  Hot Spare
            c1t1d0s5          0     No     Resyncing  No

    Device Relocation Information:
    Device   Reloc  Device ID
    c1t0d0   No
    c1t1d0   No
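Because components in the Maintenance state must be replaced before those in Last Erred, it can help to list them in that order. A rough sketch that scans metastat output for component lines whose State column reads Maintenance or Last Erred; it assumes the column layout shown above (Device, Start Block, Dbase, State, Reloc, Hot Spare), the helper name is hypothetical, and the sample input below is invented for the demo. On a real system you would pipe metastat into it.

```shell
#!/bin/sh
# replace_order -> reads metastat output on stdin and prints the components to
# replace, Maintenance first, then Last Erred (hypothetical helper; assumes the
# Device / Start Block / Dbase / State / Reloc column layout shown above)
replace_order() {
    awk '
        $1 ~ /^c[0-9]+(t[0-9]+)?d[0-9]+s[0-9]+$/ {
            if ($4 == "Maintenance")                maint = maint $1 " Maintenance\n"
            else if ($4 == "Last" && $5 == "Erred") last  = last  $1 " Last Erred\n"
        }
        END { printf "%s%s", maint, last }
    '
}

# Invented sample modelled on the listing above (states changed for the demo).
# Prints "c1t1d0s4 Maintenance" first, then "c1t0d0s4 Last Erred".
replace_order <<'EOF'
d14: Submirror of d4
    State: Needs maintenance
        c1t0d0s4          0     No     Last Erred   No
d24: Submirror of d4
    State: Needs maintenance
        c1t1d0s4          0     No     Maintenance  No
EOF
```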


Unencapsulate SVM root mirror

Administrators often have to boot Solaris from alternate media in single-user mode (failsafe, CD/DVD-ROM, or a network image) to repair the installed OS. In this environment no Solaris Volume Manager (SVM) module is loaded, so if the OS is mirrored, it is impossible to work on the installed OS without desynchronizing the mirrors. Booting or running Solaris on desynchronized mirrors is extremely unstable and may cause kernel panics or, worse, data corruption. The supported method is to make changes to only one side of the mirror, then unencapsulate the root mirror before rebooting and resynchronizing.

1. Mount the root slice of one side of the mirror and make the necessary repairs.

    # mount /dev/dsk/c0t0d0s0 /a

2. Back up the SVM configuration.

    # cp /a/etc/vfstab /a/etc/vfstab.svm
    # cp /a/etc/system /a/etc/system.svm

3. Update /etc/vfstab and /etc/system to boot from plain slices on the repaired side of the mirror.

    # vi /a/etc/vfstab      (return all md devices to plain slices)
    # vi /a/etc/system      (comment out the rootdev line)

4. Reboot.

    # init 0
    ok boot -r

5. Once the system is up, split the metadevice.

    # metadetach <mirror device> <secondary submirror>      (repeat for all mirrored slices)

6. Restore the configuration files in order to re-encapsulate, so the system boots on single-disk (single-submirror) mirror metadevices.

    # mv /etc/system.svm /etc/system
    # mv /etc/vfstab.svm /etc/vfstab

7. Reboot.

    # init 6

8. After the reboot, sync the mirrors.

    # metattach <mirror device> <secondary submirror>      (repeat for all mirrored slices)
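Step 3 (returning /a/etc/vfstab to plain slices and commenting out the rootdev line in /a/etc/system) can also be done non-interactively. A minimal sketch, assuming the metaroot-generated MDD lines shown in the RAID-1 table and that you supply each mirror/slice pair yourself (d0 c0t0d0s0 and so on are example values); the function name is hypothetical, it edits the files under whatever root you pass (such as /a), and it relies on the .svm backups from step 2. In /etc/system, lines starting with * are comments.

```shell
#!/bin/sh
# unencap ROOT MIRROR SLICE [MIRROR SLICE ...]   (hypothetical helper)
#   ROOT:  where the repaired root slice is mounted (/a in step 1)
#   pairs: a mirror and the plain slice to boot from instead, e.g. d0 c0t0d0s0
# Rewrites ROOT/etc/vfstab and ROOT/etc/system in place. Take the .svm backups first.
unencap() {
    root=$1; shift
    vfstab=$root/etc/vfstab
    while [ "$#" -ge 2 ]; do
        # exact field comparison, so d1 does not also rewrite d10 or d11
        awk -v m="$1" -v s="$2" '
            $1 == ("/dev/md/dsk/" m)  { $1 = "/dev/dsk/" s }
            $2 == ("/dev/md/rdsk/" m) { $2 = "/dev/rdsk/" s }
            { print }
        ' "$vfstab" > "$vfstab.tmp" && cat "$vfstab.tmp" > "$vfstab" && rm -f "$vfstab.tmp"
        shift 2
    done
    # lines starting with "*" are comments in /etc/system
    sed 's/^rootdev:/* rootdev:/' "$root/etc/system" > "$root/etc/system.tmp" &&
        cat "$root/etc/system.tmp" > "$root/etc/system" && rm -f "$root/etc/system.tmp"
}

# Trial run against a scratch directory instead of /a:
demo=$(mktemp -d)
mkdir -p "$demo/etc"
printf '%s\n' '/dev/md/dsk/d0 /dev/md/rdsk/d0 / ufs 1 no -' \
    '/dev/md/dsk/d1 - - swap - no -' > "$demo/etc/vfstab"
printf '%s\n' '* Begin MDD root info (do not edit)' 'rootdev:/pseudo/md@0:0,0,blk' \
    '* End MDD root info (do not edit)' > "$demo/etc/system"
unencap "$demo" d0 c0t0d0s0 d1 c0t0d0s1
cat "$demo/etc/vfstab" "$demo/etc/system"
rm -rf "$demo"
```

Writing through cat rather than mv keeps the original file's owner and permissions.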
