Software Quality - Wikipedia, The Free Encyclopedia


Software quality

From Wikipedia, the free encyclopedia

In the context of software engineering, software quality measures how well software is designed (quality of design), and how well the software conforms to that design (quality of conformance)[1], although there are several different definitions.

Whereas quality of conformance is concerned with implementation (see Software Quality Assurance), quality of design measures how valid the design and requirements are in creating a worthwhile product[2].

Contents

1 Definition

2 History

2.1 Software product quality

2.2 Source code quality

3 Software reliability

3.1 History

3.2 The goal of reliability

3.3 The challenge of reliability

3.4 Reliability in program development

3.4.1 Requirements

3.4.2 Design

3.4.3 Programming

3.4.4 Software Build and Deployment

3.4.5 Testing

3.4.6 Runtime

4 Software Quality Factors

4.1 Measurement of software quality factors

4.1.1 Understandability

4.1.2 Completeness

4.1.3 Conciseness

4.1.4 Portability

4.1.5 Consistency

4.1.6 Maintainability

4.1.7 Testability

4.1.8 Usability

4.1.9 Reliability

4.1.10 Structuredness

4.1.11 Efficiency

4.1.12 Security

5 User's perspective

6 See also

7 Bibliography

Definition

One of the problems with software quality is that "everyone feels they understand it." [3] In addition to the definition above by Dr. Roger S. Pressman, other software engineering experts have given several definitions.

A definition in Steve McConnell's Code Complete similarly divides software quality into two pieces: internal and external quality characteristics. External quality characteristics are those parts of a product that face its users, whereas internal quality characteristics are those that do not. [4]

Another definition by Dr. Tom DeMarco says "a product's quality is a function of how much it changes the world for the better." [5] This can be interpreted as meaning that user satisfaction is more important than anything else in determining software quality.[1]

Another definition, coined by Gerald Weinberg in Quality Software Management: Systems Thinking, is "Quality is value to some person." This definition stresses that quality is inherently subjective: different people will experience the quality of the same software very differently. One strength of this definition is the questions it invites software teams to consider, such as "Who are the people we want to value our software?" and "What will be valuable to them?"

http://en.wikipedia.org/wiki/Software_quality 18/01/1430 12:39:01 ص

History

Software product quality

Product quality

conformance to requirements or program specification; related to Reliability

Scalability

Correctness

Completeness

Absence of bugs

Fault-tolerance

Extensibility

Maintainability

Documentation

Source code quality

A computer has no concept of "well-written" source code. However, from a human point of view, source code can be written in a way that affects the effort needed to comprehend its behavior. Many source code programming style guides, which often stress readability and usually language-specific conventions, are aimed at reducing the cost of source code maintenance. Some of the issues that affect code quality include:

Readability

Ease of maintenance, testing, debugging, fixing, modification and portability

Low complexity

Low resource consumption: memory, CPU

Number of compilation or lint warnings

Robust input validation and error handling, established by software fault injection

Methods to improve the quality:

Refactoring

Documenting code
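Below is a minimal sketch of refactoring. The pricing rule is hypothetical, and so are the function names, the threshold and the discount factor. The duplicated logic moves into one function. Behaviour stays the same, but the rule and its input validation now live in a single place.

```python
# Hypothetical business rule: 10% off subtotals above 100.
DISCOUNT_THRESHOLD = 100.0
DISCOUNT_FACTOR = 0.9


# Before refactoring: the same rule is copied into two functions.
def invoice_total_before(prices, shipping):
    subtotal = sum(prices)
    if subtotal > DISCOUNT_THRESHOLD:
        subtotal = subtotal * DISCOUNT_FACTOR
    return round(subtotal, 2) + shipping


def quote_total_before(prices):
    subtotal = sum(prices)
    if subtotal > DISCOUNT_THRESHOLD:
        subtotal = subtotal * DISCOUNT_FACTOR
    return round(subtotal, 2)


# After refactoring: one function owns the rule and validates its input.
def apply_discount(subtotal: float) -> float:
    if subtotal < 0:
        raise ValueError("subtotal must be non-negative")
    if subtotal > DISCOUNT_THRESHOLD:
        subtotal = subtotal * DISCOUNT_FACTOR
    return round(subtotal, 2)


def invoice_total(prices: list[float], shipping: float) -> float:
    return apply_discount(sum(prices)) + shipping


def quote_total(prices: list[float]) -> float:
    return apply_discount(sum(prices))
```

A change to the discount rule now touches one function instead of two. That is the maintenance-cost reduction this section describes.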

Software reliability

Software reliability is an important facet of software quality. It is defined as "the probability of failure-free operation of a computer program in a specified environment for a specified time" [6].
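The definition above does not prescribe any particular model. A common simplifying assumption is a constant failure rate λ, the exponential model. Under it, the probability of failure-free operation for a time t is R(t) = exp(-λt). A minimal sketch, using hypothetical figures:

```python
import math


def reliability(failure_rate: float, duration: float) -> float:
    """Probability of failure-free operation for `duration` time units,
    assuming a constant failure rate (exponential model).

    failure_rate is in failures per time unit, e.g. per hour.
    """
    if failure_rate < 0 or duration < 0:
        raise ValueError("failure rate and duration must be non-negative")
    return math.exp(-failure_rate * duration)


# Hypothetical figures: 0.001 failures per hour over a 100-hour mission.
print(f"R(100 h) = {reliability(0.001, 100):.4f}")  # R(100 h) = 0.9048
```

Under this model the mean time to failure is 1/λ. The same figures therefore correspond to an MTTF of 1000 hours.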

One of reliability's distinguishing characteristics is that it is objective, measurable, and can be estimated, whereas much of software quality rests on subjective criteria[7]. This distinction is especially important in the discipline of Software Quality Assurance. These measured criteria are typically called software metrics.

History

With software embedded into many devices today, software failure has caused more than inconvenience. Software errors have even caused human fatalities. The causes have ranged from poorly designed user interfaces to direct programming errors. An example of a programming error that led to multiple deaths, in the Therac-25 radiation therapy machine, is discussed in Dr. Leveson's paper [1] (http://sunnyday.mit.edu/papers/therac.pdf) (PDF). Such incidents have resulted in requirements for the development of some types of software. In the United States, both the Food and Drug Administration (FDA) and the Federal Aviation Administration (FAA) have requirements for software development.

The goal of reliability

The need for a means to objectively determine software quality comes from the desire to apply the techniques of contemporary engineering fields to the development of software. That desire is a result of the common observation, by both laypersons and specialists, that computer software does not work the way it ought to. In other words, software is seen to exhibit undesirable behaviour, up to and including outright failure. This has consequences for the data that is processed and for the machinery on which the software runs. By extension, it also affects the people and materials that those machines might harm. The more critical the application of the software to economic and production processes, or to life-sustaining systems, the more important the need to assess the software's reliability.


Regardless of the criticality of any single software application, it is increasingly observed that software has penetrated deeply into almost every aspect of modern life through the technology we use. This infiltration is expected to continue, along with an accompanying dependency on software by the systems that maintain our society. The argument goes that as software becomes more and more crucial to the operation of the systems on which we depend, it should offer a concomitant level of dependability. In other words, the software should behave in the way it is intended, or even better, in the way it should.

The challenge of reliability

The circular logic of the preceding sentence is not accidental: it is meant to illustrate a fundamental problem in measuring software reliability, which is the difficulty of determining, in advance, exactly how the software is intended to operate. The problem seems to stem from a common conceptual error in the consideration of software, which is that software in some sense takes on a role that would otherwise be filled by a human being. This is a problem on two levels. First, most modern software performs work that a human could never perform, especially at the high level of reliability that is often expected from software in comparison to humans. Second, software is fundamentally incapable of most of the mental capabilities that separate humans from mere mechanisms: qualities such as adaptability, general-purpose knowledge, a sense of conceptual and functional context, and common sense.

Nevertheless, most software programs could safely be considered to have a particular, even singular, purpose. If we allow that this purpose can be well or even completely defined, we have a means of at least considering objectively whether the software is, in fact, reliable. We compare the expected outcome to the actual outcome of running the software in a given environment with given data. Unfortunately, it is still not known whether it is possible to exhaustively determine either the expected outcome or the actual outcome for the entire set of possible environments and input data of a given program. Without that, it is probably impossible to determine the program's reliability with any certainty.
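Comparing expected and actual outcomes is usually automated with a test oracle. The sketch below checks a hypothetical function under test against a simple reference specification on randomly sampled inputs. Both functions are illustrative assumptions. Even for two 32-bit integer arguments there are 2^64 input pairs. As argued above, sampling of this kind can raise confidence but cannot establish reliability with certainty.

```python
import random

INT32_MIN, INT32_MAX = -2**31, 2**31 - 1


def expected_abs_diff(a: int, b: int) -> int:
    """Reference specification: the expected outcome."""
    return abs(a - b)


def actual_abs_diff(a: int, b: int) -> int:
    """Hypothetical implementation under test."""
    return a - b if a >= b else b - a


def count_mismatches(trials: int, seed: int = 0) -> int:
    """Compare expected and actual outcomes on random 32-bit inputs."""
    rng = random.Random(seed)  # seeded so that any failure is reproducible
    mismatches = 0
    for _ in range(trials):
        a = rng.randint(INT32_MIN, INT32_MAX)
        b = rng.randint(INT32_MIN, INT32_MAX)
        if actual_abs_diff(a, b) != expected_abs_diff(a, b):
            mismatches += 1
    return mismatches


TRIALS = 10_000
INPUT_SPACE = (INT32_MAX - INT32_MIN + 1) ** 2  # 2**64 possible input pairs
print(count_mismatches(TRIALS), "mismatches")
# Sampling is with replacement, so this is an upper bound on coverage.
print(f"at most {TRIALS / INPUT_SPACE:.1e} of the input space was sampled")
```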

However, various efforts are under way to rein in the vastness of the space of software's environmental and input variables, both for actual programs and for theoretical descriptions of programs. For real software, such attempts to improve reliability can be applied at different stages of a program's development. These stages principally include requirements, design, programming, testing, and runtime evaluation. The study of theoretical software reliability is predominantly concerned with the concept of correctness, a mathematical field of computer science that is an outgrowth of language and automata theory.

Reliability in program development

Requirements

A program cannot be expected to work as desired if its developers do not, in fact, know the program's desired behaviour in advance. The same holds if they cannot at least determine that behaviour in sufficient detail in parallel with development. What level of detail counts as sufficient is hotly debated. The idea of perfect detail is attractive, but may be impractical, if not impossible, in practice. This is because the desired behaviour tends to change as its possible range is discovered through actual attempts, or more accurately, failed attempts, to achieve it.

Whether a program's desired behaviour can be successfully specified in advance is a moot point if the behaviour cannot be specified at all, and this is the focus of attempts to formalize the process of creating requirements for new software projects. Alongside the formalization effort is an attempt to inform non-specialists, particularly non-programmers, who commission software projects without sufficient knowledge of what computer software is in fact capable of. Communicating this knowledge is made more difficult by the fact that, as hinted above, even programmers cannot always know what is actually possible for software before trying.

Design

While requirements are meant to specify what a program should do, design is meant, at least at a high level, to specify how the program should do it. The usefulness of design is also questioned by some, but those who seek to formalize the process of ensuring reliability often offer good software design processes as the most significant means to accomplish it. Software design usually involves more abstract and general means of specifying the parts of the software and what they do. As such, it can be seen as a way to break a large program down into many smaller programs, such that those smaller pieces together do the work of the whole program.

The purposes of high-level design are as follows. It separates problems of architecture, or overall program concept and structure, from problems of actual coding, which deal with actual data processing. It applies additional constraints to the development process by narrowing the scope of the smaller software components, thereby, it is hoped, removing variables that could increase the likelihood of programming errors. It provides a program template, including the specification of interfaces, which can be shared by different teams of developers working on disparate parts, so that they know in advance how each of their contributions will interface with those of the other teams. Finally, and perhaps most controversially, it specifies the program independently of the implementation language or languages. This removes language-specific biases and limitations that would otherwise creep into the design, perhaps unwittingly on the part of programmer-designers.
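A design-level interface specification can be sketched in Python with typing.Protocol. One team codes against the interface while another team supplies an implementation. RateSource, convert and FixedRates are hypothetical names chosen for illustration.

```python
from typing import Protocol


class RateSource(Protocol):
    """Interface agreed during high-level design (hypothetical)."""

    def rate(self, currency: str) -> float:
        """Return the exchange rate from the base currency to `currency`."""
        ...


# Team A writes code against the interface only.
def convert(amount: float, currency: str, rates: RateSource) -> float:
    return round(amount * rates.rate(currency), 2)


# Team B supplies an implementation; it matches the interface structurally,
# without inheriting from RateSource.
class FixedRates:
    def __init__(self, table: dict[str, float]) -> None:
        self._table = dict(table)

    def rate(self, currency: str) -> float:
        if currency not in self._table:
            raise KeyError(f"unknown currency: {currency}")
        return self._table[currency]


print(convert(10.0, "EUR", FixedRates({"EUR": 0.5})))
```

Python does not enforce the Protocol at runtime. A static type checker such as mypy can, however, report a mismatch between FixedRates and RateSource before the two teams' code is ever run together.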

Programming

The history of programming language development can often be best understood in light of attempts to master the complexity of computer programs. Without such efforts, programs become more difficult to understand in proportion (perhaps exponentially) to their size. (Another way of looking at the evolution of programming languages is simply as a way of getting the computer to do more and more of the work, but this may be a different way of saying the same thing.) Lack of understanding of a program's overall structure and functionality is a sure way to fail to detect errors in the program. Conversely, the use of better languages should reduce the number of errors by enabling a better understanding.

Improvements in languages tend to provide incrementally what software design has attempted to do in one fell swoop: consider the software at ever greater levels of abstraction. Inventions such as the statement, subroutine, file, class, template, library and component have allowed the arrangement of a program's parts to be specified using abstractions such as layers, hierarchies and modules. These provide structure at different granularities, so that from any point of view the program's code can be imagined to be orderly and comprehensible.

In addition, improvements in languages have enabled more exact control over the shape and use of data elements, culminating in the abstract data type. These data types can be specified to a very fine degree, including how and when they are accessed, and even the state of the data before and after it is accessed.
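Below is a minimal sketch of an abstract data type whose access rules and before/after states are specified explicitly. The example is a hypothetical fixed-capacity stack with checked preconditions and an asserted postcondition. Python marks the internal list as private only by the leading-underscore convention. Languages with access modifiers can enforce it.

```python
class BoundedStack:
    """A stack ADT with a fixed capacity (hypothetical example).

    Invariant: 0 <= len(stack) <= capacity.
    """

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self._capacity = capacity
        self._items: list[int] = []  # private by convention only

    def __len__(self) -> int:
        return len(self._items)

    def push(self, item: int) -> None:
        # Precondition: the stack must not be full.
        if len(self._items) >= self._capacity:
            raise OverflowError("stack is full")
        size_before = len(self._items)
        self._items.append(item)
        # Postcondition: exactly one element added, and it is on top.
        assert len(self._items) == size_before + 1 and self._items[-1] == item

    def pop(self) -> int:
        # Precondition: the stack must not be empty.
        if not self._items:
            raise IndexError("pop from empty stack")
        return self._items.pop()
```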

Software Build and Deployment

Many programming languages, such as C and Java, require the program source code to be translated into a form that can be executed by a computer. This translation is done by a program called a compiler. Additional operations may be involved to associate, bind, link or package files together in order to create a usable runtime configuration of the software application. The totality of the compiling and assembly process is generically called "building" the software.

The software build is critical to software quality because if any of the generated files are incorrect, the software build is likely to fail. And if an incorrect version of a program is inadvertently used, testing can lead to false results.
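One safeguard against testing the wrong version is to verify each artifact before testing or deployment. This sketch assumes the build records a SHA-256 digest for each artifact. The digest-recording convention, the file name and the function names are hypothetical.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hex SHA-256 digest of a file, read in chunks to bound memory use."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path: Path, expected_sha256: str) -> None:
    """Refuse to test or deploy an artifact that differs from the recorded build."""
    actual = sha256_of(path)
    if actual != expected_sha256.lower():
        raise RuntimeError(
            f"{path.name}: digest {actual} does not match recorded {expected_sha256}"
        )


# Usage: simulate a build output and the digest recorded at build time.
with tempfile.TemporaryDirectory() as tmp:
    artifact = Path(tmp) / "app.jar"  # hypothetical build output
    artifact.write_bytes(b"build 42 contents")
    recorded = hashlib.sha256(b"build 42 contents").hexdigest()
    verify_artifact(artifact, recorded)  # passes silently when they match
```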

Software builds are typically done in a work area unrelated to the runtime area, such as the application server. For this reason, a deployment step is needed to physically transfer the software build products to the runtime area. The deployment procedure may also involve technical parameters which, if set incorrectly, can prevent software testing from beginning. For example, a Java application server may have options for parent-first or parent-last class loading. Using the incorrect parameter can cause the application to fail to execute on the application server.

The technical activities supporting software quality, including build, deployment, change control and reporting, are collectively known as software configuration management. A number of software tools have arisen to help meet the challenges of configuration management, including file control tools and build control tools.

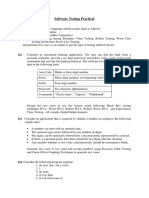

Testing

Software testing, when done correctly, can increase overall software quality of conformance by testing that the product conforms to its requirements. Testing includes, but is not limited to:

1. Unit Testing

2. Functional Testing

3. Regression Testing

4. Performance Testing

5. Failover Testing

6. Usability Testing

A number of agile methodologies use testing early in the development cycle to ensure quality in their products. For example, the test-driven development practice, where tests are written before the code they will test, is used in Extreme Programming to ensure quality.
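Below is a minimal sketch of the test-first rhythm using Python's standard unittest module. The tests are written before the function they exercise. is_leap_year is a hypothetical example, implemented afterward with just enough code to make the tests pass.

```python
import unittest


# Written first, before the code under test exists (test-driven development).
class LeapYearTest(unittest.TestCase):
    def test_ordinary_years(self):
        self.assertTrue(is_leap_year(2024))
        self.assertFalse(is_leap_year(2023))

    def test_century_years(self):
        self.assertFalse(is_leap_year(1900))
        self.assertTrue(is_leap_year(2000))


# Written next, with just enough code to make the tests pass
# (Gregorian rule: divisible by 4, except centuries not divisible by 400).
def is_leap_year(year: int) -> bool:
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


if __name__ == "__main__":
    unittest.main(exit=False)
```

The tests call is_leap_year only when they run, so defining the function after the test class is valid Python. That ordering mirrors the practice.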

Runtime

Runtime reliability determinations are similar to tests, but go beyond simple confirmation of behaviour to the evaluation of qualities such as performance and interoperability with other code or particular hardware configurations.
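Below is a minimal sketch of one runtime determination that goes beyond checking behaviour. It times a hypothetical operation on the actual machine and compares the median against an assumed latency budget. The median is used because individual timings are easily disturbed by other activity on the system.

```python
import statistics
import time


def median_latency(operation, repeats: int = 50) -> float:
    """Median wall-clock duration of operation(), in seconds."""
    if repeats < 1:
        raise ValueError("repeats must be at least 1")
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        operation()
        samples.append(time.perf_counter() - start)
    return statistics.median(samples)


def workload():
    # Hypothetical operation under evaluation.
    return sorted(range(10_000, 0, -1))


LATENCY_BUDGET_S = 0.05  # assumed requirement for this example
latency = median_latency(workload)
print(f"median {latency * 1000:.2f} ms; within budget: {latency <= LATENCY_BUDGET_S}")
```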

Software Quality Factors

A software quality factor is a non-functional requirement for a software program that is not explicitly required by the customer's contract, but is nevertheless a desirable requirement that enhances the quality of the software program. Note that none of these factors are binary; that is, they are not “either you have it or you don’t” traits. Rather, they are characteristics that one seeks to maximize in one’s software to optimize its quality. So rather than asking whether a software product “has” factor x, ask instead the degree to which it does (or does not).

Some software quality factors are listed here:

Understandability–clarity of purpose. This goes further than just a statement of purpose; all of the design and user documentation must be clearly written so that it is easily understandable. This is obviously subjective in that the user context must be taken into account: for instance, if the software product is to be used by software engineers, it is not required to be understandable to the layman.


Completeness–presence of all constituent parts, with each part fully developed. This means that if the code calls a subroutine from an external library, the software package must provide reference to that library and all required parameters must be passed. All required input data must also be available.

Conciseness–minimization of excessive or redundant information or processing. This is important where memory capacity is limited, and it is generally considered good practice to keep lines of code to a minimum. It can be improved by replacing repeated functionality with one subroutine or function that achieves that functionality. It also applies to documents.

Portability–ability to be run well and easily on multiple computer configurations. Portability can mean both between different hardware, such as running on a PC as well as a smartphone, and between different operating systems, such as running on both Mac OS X and GNU/Linux.

Consistency–uniformity in notation, symbology, appearance, and terminology within itself.

Maintainability–propensity to facilitate updates to satisfy new requirements. Thus a maintainable software product should be well-documented, should not be complex, and should have spare capacity for memory, storage, processor utilization and other resources.

Testability–disposition to support acceptance criteria and evaluation of performance. Such a characteristic must be built in during the design phase if the product is to be easily testable; a complex design leads to poor testability.

Usability–convenience and practicality of use. This is affected by such things as the human-computer interface. The component of the software that has most impact on this is the user interface (UI), which for best usability is usually graphical (i.e. a GUI).

Reliability–ability to be expected to perform its intended functions satisfactorily. This implies a time factor in that a reliable product is expected to perform correctly over a period of time. It also encompasses environmental considerations in that the product is required to perform correctly in whatever conditions it finds itself (sometimes termed robustness).

Structuredness–organisation of constituent parts in a definite pattern. A software product written in a block-structured language such as Pascal is likely to satisfy this characteristic.

Efficiency–fulfillment of purpose without waste of resources, such as memory, space and processor utilization, network bandwidth, time, etc.

Security–ability to protect data against unauthorized access and to withstand malicious or inadvertent interference with its operations. Besides the presence of appropriate security mechanisms such as authentication, access control and encryption, security also implies resilience in the face of malicious, intelligent and adaptive attackers.

Measurement of software quality factors

There are varied perspectives within the field on measurement. Many measures are valued by some professionals, or in some contexts, and decried as harmful by others. Some believe that quantitative measures of software quality are essential. Others believe that contexts where quantitative measures are useful are quite rare, and so prefer qualitative measures. Several leaders in the field of software testing have written about the difficulty of measuring well what we truly want to measure, including Dr. Cem Kaner [2] (http://www.kaner.com/pdfs/metrics2004.pdf) (PDF) and Douglas Hoffman [3] (http://www.softwarequalitymethods.com/Papers/DarkMets%20Paper.pdf) (PDF).

One example of a popular metric is the number of faults encountered in the software. Software that contains few faults is considered by some to have higher quality than software that contains many faults. Questions that can help determine the usefulness of this metric in a particular context include:

1. What constitutes “many faults”? Does this differ depending upon the purpose of the software (e.g., blogging software vs. navigational software)? Does this take into account the size and complexity of the software?

2. Does this account for the importance of the bugs (and the importance to the stakeholders of the people those bugs affect)? Does one try to weight this metric by the severity of the fault, or by the number of users it affects? If so, how? And if not, how does one know that 100 faults discovered is better than 1000?

3. If the count of faults being discovered is shrinking, how does one know what that means? For example, does that mean that the product is now higher quality than it was before? Or that this is a smaller or less ambitious change than before? Or that fewer tester-hours have gone into the project than before? Or that this project was tested by less skilled testers than before? Or that the team has discovered that fewer faults reported is in their interest?

This last question points to an especially difficult problem to manage. All software quality metrics are in some sense measures of human behavior, since humans create software[4] (http://www.kaner.com/pdfs/metrics2004.pdf) (PDF). If a team discovers that they will benefit from a drop in the number of reported bugs, there is a strong tendency for the team to start reporting fewer defects. That may mean that email begins to circumvent the bug tracking system, or that four or five bugs get lumped into one bug report, or that testers learn not to report minor annoyances. The difficulty is measuring what we mean to measure without creating incentives for software programmers and testers to consciously or unconsciously “game” the measurements.
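Question 2 above asks whether a fault count should be weighted by severity. Question 1 asks whether it accounts for size. One possible answer is a severity-weighted fault density per thousand lines of code (KLOC), sketched here with hypothetical weights and line counts. As the paragraph above warns, any such number still measures human reporting behaviour and can be gamed.

```python
# Hypothetical weights: how much a fault of each severity counts.
SEVERITY_WEIGHTS = {"critical": 10, "major": 3, "minor": 1}


def weighted_fault_density(faults: list[str], kloc: float) -> float:
    """Severity-weighted faults per thousand lines of code (KLOC)."""
    if kloc <= 0:
        raise ValueError("kloc must be positive")
    score = sum(SEVERITY_WEIGHTS[severity] for severity in faults)
    return score / kloc


# Two hypothetical releases with the same raw fault count (4) and size.
release_a = ["minor", "minor", "minor", "minor"]
release_b = ["critical", "major", "minor", "minor"]
print(weighted_fault_density(release_a, kloc=20))  # 0.2
print(weighted_fault_density(release_b, kloc=20))  # 0.75
```

A raw count would rate both releases equally. The weighted figure separates them, but only according to whatever weights the team chooses.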

Software quality factors cannot be measured directly because of their vague definitions. It is necessary to find measurements, or metrics, which can be used to quantify them as non-functional requirements. For example, reliability is a software quality factor, but cannot be evaluated in its own right. However, there are related attributes of reliability that can indeed be measured. Some such attributes are mean time to failure, rate of failure occurrence, and availability of the system. Similarly, an attribute of portability is the number of target-dependent statements in a program.
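The measurable attributes named above can be computed from an operational record. This minimal sketch assumes a hypothetical log that gives the operating time before each failure and the repair time after it. Availability uses the common steady-state formula MTTF / (MTTF + MTTR), where MTTR is the mean time to repair.

```python
from statistics import mean


def reliability_attributes(uptimes_h: list[float], repair_times_h: list[float]) -> dict[str, float]:
    """Compute MTTF, rate of failure occurrence (ROCOF) and availability.

    uptimes_h[i]: hours of operation before failure i.
    repair_times_h[i]: hours needed to restore service after failure i.
    """
    if not uptimes_h or len(uptimes_h) != len(repair_times_h):
        raise ValueError("need at least one failure and one repair time per failure")
    mttf = mean(uptimes_h)
    mttr = mean(repair_times_h)
    rocof = len(uptimes_h) / sum(uptimes_h)  # failures per operating hour
    availability = mttf / (mttf + mttr)
    return {"mttf": mttf, "rocof": rocof, "availability": availability}


# Hypothetical log: three failures.
attrs = reliability_attributes([90.0, 110.0, 100.0], [1.0, 2.0, 3.0])
print(f"MTTF {attrs['mttf']:.1f} h, ROCOF {attrs['rocof']:.3f}/h, "
      f"availability {attrs['availability']:.3f}")
# MTTF 100.0 h, ROCOF 0.010/h, availability 0.980
```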

A scheme that could be used for evaluating software quality factors is given below. For every characteristic, there is a set of questions relevant to that characteristic. Some type of scoring formula could be developed based on the answers to these questions, from which a measurement of the characteristic can be obtained.

Understandability

Are variable names descriptive of the physical or functional property represented? Do uniquely recognisable functions contain adequate comments so that their purpose is clear? Are deviations from forward logical flow adequately commented? Are all elements of an array functionally related?

Completeness

Are all necessary components available? Does any process fail for lack of resources or programming? Are all potential pathways through the code accounted for, including proper error handling?

Conciseness

Is all code reachable? Is any code redundant? How many statements within loops could be placed outside the loop, thus reducing computation time? Are branch decisions too complex?
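The question about statements that could be placed outside a loop refers to what compilers call loop-invariant code motion. Here is a small sketch with hypothetical functions. The square root does not depend on the loop variable, so it can be computed once before the loop without changing the result. Optimizing compilers often do this automatically; the standard CPython interpreter does not.

```python
import math


def normalise_before(values: list[float], variance: float) -> list[float]:
    result = []
    for v in values:
        std_dev = math.sqrt(variance)  # recomputed on every iteration
        result.append(v / std_dev)
    return result


def normalise_after(values: list[float], variance: float) -> list[float]:
    std_dev = math.sqrt(variance)  # loop-invariant: hoisted out of the loop
    return [v / std_dev for v in values]
```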

Portability

Does the program depend upon system or library routines unique to a particular installation? Have machine-dependent statements been flagged and commented? Has dependency on internal bit representation of alphanumeric or special characters been avoided? How much effort would be required to transfer the program from one hardware/software system or environment to another?

Consistency

Is one variable name used to represent different logical or physical entities in the program? Does the program contain only one representation for any given physical or mathematical constant? Are functionally similar arithmetic expressions similarly constructed? Is a consistent scheme used for indentation, nomenclature, the color palette, fonts and other visual elements?

Maintainability

Has some memory capacity been reserved for future expansion? Is the design cohesive, i.e., does each module have distinct, recognisable functionality? Does the software allow for a change in data structures (object-oriented designs are more likely to allow for this)? If the code is procedure-based (rather than object-oriented), is a change likely to require restructuring the main program, or just a module?

Testability

Are complex structures employed in the code? Does the detailed design contain clear pseudo-code? Is the pseudo-code at a higher level of abstraction than the code? If tasking is used in concurrent designs, are schemes available for providing adequate test cases?

Usability

Is a GUI used? Is there adequate on-line help? Is a user manual provided? Are meaningful error messages provided?

Reliability

Are loop indexes range-tested? Is input data checked for range errors? Is divide-by-zero avoided? Is exception handling provided?
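The Reliability questions translate directly into defensive code. The sketch below uses a hypothetical averaging helper that checks input ranges, avoids divide-by-zero and reports failures through exceptions. The default sensor range is an assumption for illustration.

```python
def average_reading(readings: list[float], low: float = -50.0, high: float = 150.0) -> float:
    """Mean of sensor readings, rejecting out-of-range values.

    The default range is a hypothetical sensor specification.
    """
    if not readings:
        # Avoid divide-by-zero: an empty list has no meaningful average.
        raise ValueError("no readings supplied")
    for index, value in enumerate(readings):
        # Range-check every input value; NaN also fails this comparison.
        if not low <= value <= high:
            raise ValueError(f"reading {index} out of range: {value}")
    return sum(readings) / len(readings)


# Exception handling at the call site: report the problem instead of crashing.
try:
    average_reading([20.0, 999.0])
except ValueError as err:
    print("rejected:", err)
```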

Structuredness

Is a block-structured programming language used? Are modules limited in size? Have the rules for transfer of control between modules been established and followed?

Efficiency


Have functions been optimized for speed? Have repeatedly used blocks of code been formed into subroutines? Has the program been checked for memory leaks or overflow errors?

Security

Does the software protect itself and its data against unauthorized access and use? Does it allow its operator to enforce security policies? Are security mechanisms appropriate, adequate and correctly implemented? Can the software withstand attacks that can be anticipated in its intended environment? Is the software free of errors that would make it possible to circumvent its security mechanisms? Does the architecture limit the potential impact of as yet unknown errors?
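The scheme above leaves the scoring formula open. One possible form, offered only as an illustration, records a yes/no answer per question and reports the fraction answered favourably. Equal weighting of the questions is an assumption. The questions are taken from the Reliability list above.

```python
# Taken from the Reliability questions (equal weights assumed).
RELIABILITY_CHECKLIST = [
    "Are loop indexes range-tested?",
    "Is input data checked for range errors?",
    "Is divide-by-zero avoided?",
    "Is exception handling provided?",
]


def checklist_score(answers: dict[str, bool], questions: list[str]) -> float:
    """Fraction of questions answered 'yes', from 0.0 to 1.0.

    Unanswered questions count as 'no'.
    """
    if not questions:
        raise ValueError("no questions to score")
    favourable = sum(1 for q in questions if answers.get(q, False))
    return favourable / len(questions)


# Hypothetical review: one question answered 'no', one left unanswered.
review = {
    "Are loop indexes range-tested?": True,
    "Is input data checked for range errors?": True,
    "Is divide-by-zero avoided?": False,
}
print(checklist_score(review, RELIABILITY_CHECKLIST))  # 0.5
```

Treating unanswered questions as "no" is a deliberately conservative choice. A real scheme might instead weight questions by their importance to the product.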

User's perspective

In addition to the technical qualities of software, the end user's experience also determines the quality of software. This aspect of software quality is called usability. It is hard to quantify the usability of a given software product. Some important questions to be asked are:

Is the user interface intuitive (self-explanatory/self-documenting)?

Is it easy to perform simple operations?

Is it feasible to perform complex operations?

Does the software give sensible error messages?

Do widgets behave as expected?

Is the software well documented?

Is the user interface responsive or too slow?

Also, the availability of (free or paid) support may factor into the usability of the software.

See also

ISO 9126

Software Process Improvement and Capability Determination - ISO 15504

Software testing

Quality: Quality control, Total Quality Management

Capability Maturity Model

Software Engineering

Performance Engineering

Assertion (computing)

Splint (programming tool)

Optimization (computer science)

Algorithmic efficiency

Performance analysis

Order

Programming paradigm

Programming style

Software architecture

Software metrics

Cyclomatic complexity

Cohesion and Coupling

Standards (software)

Software reusability

Ilities

Accessibility

Availability

Dependability

Security

Security engineering

bugs

anomaly in software

Software Quality Model

Software Quality Assurance

Software Quality Observatory for Open Source Software

Bibliography

International Organization for Standardization. Software Engineering - Product Quality - Part 1: Quality Model. ISO, Geneva, Switzerland, 2001. ISO/IEC 9126-1:2001(E).

Diomidis Spinellis. Code Quality: The Open Source Perspective (http://www.spinellis.gr/codequality). Addison Wesley, Boston, MA, 2006.

Ho-Won Jung, Seung-Gweon Kim, and Chang-Sin Chung. Measuring software product quality: A survey of ISO/IEC 9126 (http://doi.ieeecomputersociety.org/10.1109/MS.2004.1331309). IEEE Software, 21(5):10–13, September/October 2004.

Stephen H. Kan. Metrics and Models in Software Quality Engineering. Addison-Wesley, Boston, MA, second edition, 2002.

Robert L. Glass. Building Quality Software. Prentice Hall, Upper Saddle River, NJ, 1992.

1. Pressman, Roger S. Software Engineering: A Practitioner's Approach, Sixth Edition, International, p. 746. McGraw-Hill Education, 2005.

2. Pressman, Roger S. Software Engineering: A Practitioner's Approach, Sixth Edition, International, p. 388. McGraw-Hill Education, 2005.

3. Crosby, P. Quality is Free. McGraw-Hill, 1979.

4. McConnell, Steve. Code Complete, First Edition, p. 558. Microsoft Press, 1993.

5. DeMarco, T. "Management Can Make Quality (Im)possible," Cutter IT Summit, Boston, April 1999.

6. Musa, J. D., A. Iannino, and K. Okumoto. Engineering and Managing Software with Reliability Measures. McGraw-Hill, 1987.

7. Pressman, Roger S. Software Engineering: A Practitioner's Approach, Sixth Edition, International, p. 762. McGraw-Hill International, 2005.

Retrieved from "http://en.wikipedia.org/wiki/Software_quality"

Categories: Anticipatory thinking | Software testing | Source code

Hidden categories: Cleanup from April 2008 | All pages needing cleanup | Wikipedia references cleanup | Articles needing expert attention | Uncategorized articles needing expert attention

This page was last modified on 7 January 2009, at 01:54.

All text is available under the terms of the GNU Free Documentation License. (See Copyrights for details.)

Wikipedia® is a registered trademark of the Wikimedia Foundation, Inc., a U.S. registered 501(c)(3) tax-deductible nonprofit charity.


- The Emperor of All Maladies: A Biography of CancerDari EverandThe Emperor of All Maladies: A Biography of CancerPenilaian: 4.5 dari 5 bintang4.5/5 (271)

- Acceptance Testing of An Utsourced ApplicationDokumen10 halamanAcceptance Testing of An Utsourced ApplicationMuhammad Siddig HassanBelum ada peringkat

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaDari EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaPenilaian: 4.5 dari 5 bintang4.5/5 (266)

- دعاءDokumen1 halamanدعاءMuhammad Siddig HassanBelum ada peringkat

- Never Split the Difference: Negotiating As If Your Life Depended On ItDari EverandNever Split the Difference: Negotiating As If Your Life Depended On ItPenilaian: 4.5 dari 5 bintang4.5/5 (838)

- Delta C V200 User Interface GuideDokumen16 halamanDelta C V200 User Interface GuideDIEGO GIOVANNY MURILLO RODRIGUEZBelum ada peringkat

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryDari EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryPenilaian: 3.5 dari 5 bintang3.5/5 (231)

- Opos GuideDokumen111 halamanOpos GuideKhaled Salahul DenBelum ada peringkat

- The Shopify Development Handbook.4.0.0-PreviewDokumen106 halamanThe Shopify Development Handbook.4.0.0-Previewblackquetzal100% (1)

- On Fire: The (Burning) Case for a Green New DealDari EverandOn Fire: The (Burning) Case for a Green New DealPenilaian: 4 dari 5 bintang4/5 (73)

- Difference Between Structure and UnionDokumen5 halamanDifference Between Structure and UnionMandeep SinghBelum ada peringkat

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureDari EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FuturePenilaian: 4.5 dari 5 bintang4.5/5 (474)

- Ly CNC 6040z s65j GuideDokumen15 halamanLy CNC 6040z s65j GuideAnonymous IXswcnW100% (1)

- Team of Rivals: The Political Genius of Abraham LincolnDari EverandTeam of Rivals: The Political Genius of Abraham LincolnPenilaian: 4.5 dari 5 bintang4.5/5 (234)

- 1word 1introDokumen72 halaman1word 1introYsmech SalazarBelum ada peringkat

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyDari EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyPenilaian: 3.5 dari 5 bintang3.5/5 (2259)

- MSI Lab ContinusDokumen7 halamanMSI Lab ContinusMirza Riyasat AliBelum ada peringkat

- Merit LILIN CMX Software HD 3.6 User ManualDokumen66 halamanMerit LILIN CMX Software HD 3.6 User ManualMahmoud AhmedBelum ada peringkat

- Rise of ISIS: A Threat We Can't IgnoreDari EverandRise of ISIS: A Threat We Can't IgnorePenilaian: 3.5 dari 5 bintang3.5/5 (137)

- UNIT1 - FcpskarelDokumen61 halamanUNIT1 - Fcpskarelbrapbrap1Belum ada peringkat

- Lenovo ThinkPad R61 DAV3 GENBU5W Rev5.13 SchematicDokumen98 halamanLenovo ThinkPad R61 DAV3 GENBU5W Rev5.13 SchematicAhmar Hayat KhanBelum ada peringkat

- HP LaserJet Pro 4202Dokumen4 halamanHP LaserJet Pro 4202MihaiBelum ada peringkat

- The Unwinding: An Inner History of the New AmericaDari EverandThe Unwinding: An Inner History of the New AmericaPenilaian: 4 dari 5 bintang4/5 (45)

- Solid PrincipleDokumen26 halamanSolid PrincipleManish RanjanBelum ada peringkat

- 6.stored Functions (Main Notes)Dokumen5 halaman6.stored Functions (Main Notes)Lakshman KumarBelum ada peringkat

- t24 Close of Business Cob t24 HelperDokumen17 halamant24 Close of Business Cob t24 HelperAswani MucharlaBelum ada peringkat

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreDari EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You ArePenilaian: 4 dari 5 bintang4/5 (1090)

- Configure FTD High Availability On Firepower Appliances: RequirementsDokumen33 halamanConfigure FTD High Availability On Firepower Appliances: RequirementsShriji SharmaBelum ada peringkat

- CV - Linus Antonio Ofori AGYEKUMDokumen4 halamanCV - Linus Antonio Ofori AGYEKUMLinus AntonioBelum ada peringkat

- How I Learned To Stop Worrying and Write My Own ORMDokumen24 halamanHow I Learned To Stop Worrying and Write My Own ORMJocildo KialaBelum ada peringkat

- Simple RAID Cheat SheetDokumen4 halamanSimple RAID Cheat Sheetdeserki20Belum ada peringkat

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)Dari EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Penilaian: 4.5 dari 5 bintang4.5/5 (120)

- Brochure Rugged Railway COTS Solutions Rev20180904Dokumen7 halamanBrochure Rugged Railway COTS Solutions Rev20180904Nguyen Hoang AnhBelum ada peringkat

- Python Regex Cheatsheet With Examples: Re Module FunctionsDokumen1 halamanPython Regex Cheatsheet With Examples: Re Module FunctionsAnonymous jyMAzN8EQBelum ada peringkat

- DGFT IndiaDokumen33 halamanDGFT IndiaKarthik RedBelum ada peringkat

- Problem Solving and Programming With Python: Ltpac20 0 3.5Dokumen4 halamanProblem Solving and Programming With Python: Ltpac20 0 3.5NAMBURUNITHINBelum ada peringkat

- Software Testing Practical QuestionsDokumen3 halamanSoftware Testing Practical QuestionsGeetanjli Garg100% (1)

- School of Management Business Communication: Ibm India Pvt. LTDDokumen11 halamanSchool of Management Business Communication: Ibm India Pvt. LTD21MBA BARATH M.Belum ada peringkat

- USB To RS232 ConverterDokumen2 halamanUSB To RS232 ConverterPrasadPurohitBelum ada peringkat

- SPS 6.0 AdministrationGuideDokumen964 halamanSPS 6.0 AdministrationGuidemohammad fattahiBelum ada peringkat

- Operating Systems and Linux IDokumen41 halamanOperating Systems and Linux Idivyarai12345Belum ada peringkat

- Interview Question and AnswerDokumen14 halamanInterview Question and AnswerTarik A R BiswasBelum ada peringkat

- Oxygen Forensic Detective Getting Started PDFDokumen77 halamanOxygen Forensic Detective Getting Started PDFjmla69Belum ada peringkat

- Her Body and Other Parties: StoriesDari EverandHer Body and Other Parties: StoriesPenilaian: 4 dari 5 bintang4/5 (821)

- AD9852 DDS ModuleDokumen10 halamanAD9852 DDS ModuleHitesh MehtaBelum ada peringkat