Cumulative Distribution Function of a Geometric Poisson Distribution

Abstract

The geometric Poisson distribution (also called Pólya-Aeppli) is a particular case of the compound Poisson distribution. We propose to express the general term of this distribution through a recurrence formula leading to a linear algorithm for the computation of its cumulative distribution function. A practical implementation that takes special care of numerical issues is proposed and validated. The paper ends with an application of these results to the computation of pattern statistics in biological sequences modelled by a Markov model.

Keywords: compound Poisson, Pólya-Aeppli, confluent hypergeometric function, Kummer

1 INTRODUCTION

The geometric Poisson distribution (or Pólya-Aeppli distribution) is a particular case of the classical compound Poisson distribution in which the contribution of each term is distributed according to the geometric distribution. Johnson et al. (1992) give a formula that computes the general term of a compound Poisson distribution in linear time, and which can be simplified (while remaining linear) in the geometric Poisson case. Since each term costs linear time, this formula results in a quadratic complexity for the computation of the cumulative distribution function (cdf), which is too slow for many problems.

We give here a direct and straightforward proof of this formula and, rewriting the result with Kummer's confluent hypergeometric function, derive from it a recurrence relation that leads to a linear computation of the cdf.

In a first part we recall some definitions and elementary results; the recurrence relation is then established. The practical implementation of the resulting algorithm is then discussed and, finally, an application to the computation of pattern statistics on random text generated by a Markov chain is presented.

2 RECALLS

We first recall the

Definition 1 (Poisson distribution) The random variable $M$ is distributed according to $\mathcal{P}(\lambda)$, the Poisson distribution of parameter $\lambda > 0$, if

$$P(M = k) = e^{-\lambda} \frac{\lambda^k}{k!} \qquad \forall k \in \mathbb{N}$$

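and the

Definition 2 (compound Poisson distribution) The random variable $N$ is distributed according to $\mathcal{CP}((\lambda_k)_{k \in \mathbb{N}^*})$, the compound Poisson distribution of parameter $(\lambda_k)_{k \in \mathbb{N}^*}$ such that all $\lambda_k > 0$ and $\sum_{k=1}^{\infty} \lambda_k = \lambda < \infty$, if

$$N = \sum_{m=1}^{M} K_m$$

where $M \sim \mathcal{P}(\lambda)$ is independent of the $K_m$, which are independent and identically distributed according to

$$P(K = k) = \frac{\lambda_k}{\lambda} \qquad \forall k \in \mathbb{N}^*$$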

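This last definition can easily be rewritten as follows:

Lemma 3 If $N \sim \mathcal{CP}((\lambda_k)_{k \in \mathbb{N}^*})$ with $\sum_{k=1}^{\infty} \lambda_k = \lambda$ then we have, $\forall n \in \mathbb{N}^*$,

$$P(N = n) = \sum_{m=1}^{n} e^{-\lambda} \frac{\lambda^m}{m!} \sum_{k_1, \ldots, k_m \in \mathbb{N}^*} \mathbb{I}_{\{k_1 + \ldots + k_m = n\}} \frac{\lambda_{k_1} \cdots \lambda_{k_m}}{\lambda^m} \qquad (1)$$

and $P(N = 0) = e^{-\lambda}$.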

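We are now ready to introduce the

Definition 4 (geometric Poisson distribution) The random variable $N$ is distributed according to $\mathcal{GP}(\lambda, \theta)$, the geometric Poisson distribution with parameter $\theta \in ]0, 1]$ for the geometric part and parameter $\lambda > 0$ for the Poisson part, if $N \sim \mathcal{CP}((\lambda_k)_{k \in \mathbb{N}^*})$ with

$$\lambda_k = \lambda \theta (1 - \theta)^{k-1} \qquad \forall k \in \mathbb{N}^* \qquad (2)$$

(in the degenerate case where $\theta = 1$, we simply fall back to the Poisson distribution) and we recall that

$$E[N] = \frac{\lambda}{\theta} \qquad \text{and} \qquad V[N] = \frac{\lambda (2 - \theta)}{\theta^2}$$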

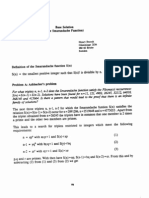

Fig. 1 shows the probability distribution function of $N$ for several choices of the parameter $\theta$, with $\lambda$ chosen such that the expectation $\lambda / \theta = 10$. As pointed out in the definition, $\theta = 1$ is the Poisson case. When the aggregative parameter $\theta$ decreases, the tail of the distribution becomes heavier.

As stated by Johnson et al. (1992, p. 378), the geometric Poisson distribution is also defined by

Proposition 5 If $N \sim \mathcal{GP}(\lambda, \theta)$ then for all $n \geq 1$ we have

$$P(N = n) = \sum_{k=1}^{n} e^{-\lambda} \frac{\lambda^k}{k!} (1 - \theta)^{n-k} \theta^k \binom{n-1}{k-1}$$

where $\binom{n}{k}$ is the binomial coefficient, and $P(N = 0) = e^{-\lambda}$.
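The direct sum of Proposition 5 can be sketched in a few lines of Python. This is only an illustrative sketch: the function name `gp_pmf_direct` and the test parameters $\lambda = 2$, $\theta = 0.5$ are assumptions made here, not part of the paper. Each term costs $O(n)$ operations, which is why summing terms into a cdf this way is quadratic.

```python
import math

def gp_pmf_direct(n, lam, theta):
    """P(N = n) for N ~ GP(lam, theta), by the direct sum of Proposition 5."""
    if n == 0:
        return math.exp(-lam)
    total = 0.0
    for k in range(1, n + 1):
        total += (lam ** k / math.factorial(k)
                  * (1.0 - theta) ** (n - k) * theta ** k
                  * math.comb(n - 1, k - 1))
    return math.exp(-lam) * total

# Illustrative parameters (assumed): the first 80 terms carry almost all the mass.
lam, theta = 2.0, 0.5
probs = [gp_pmf_direct(n, lam, theta) for n in range(80)]
mass = sum(probs)
mean = sum(n * p for n, p in enumerate(probs))
var = sum(n * n * p for n, p in enumerate(probs)) - mean ** 2
```

With these parameters the truncated mass, mean and variance should be close to 1, $\lambda / \theta = 4$ and $\lambda (2 - \theta) / \theta^2 = 12$ respectively.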

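Proof. Whenever $k_1 + \ldots + k_m = n$, relation (2) gives $\lambda_{k_1} \cdots \lambda_{k_m} / \lambda^m = \theta^m (1 - \theta)^{n-m}$. Using relations (1) and (2) we therefore get, $\forall n \in \mathbb{N}^*$,

$$P(N = n) = \sum_{m=1}^{n} e^{-\lambda} \frac{\lambda^m}{m!} (1 - \theta)^{n-m} \theta^m \underbrace{\sum_{k_1, \ldots, k_m \in \mathbb{N}^*} \mathbb{I}_{\{k_1 + \ldots + k_m = n\}}}_{A(n,m)}$$

so we only have to compute $A(n, m)$, the number of ways to write $n$ as a sum of $m$ positive integers (where the order matters). Let us consider the list $1, 2, \ldots, n$. This list contains $n - 1$ commas, and selecting $m - 1$ of these commas cuts the list into $m$ consecutive non-empty blocks whose sizes sum to $n$, that is, one of the ordered sums counted by $A(n, m)$. It is therefore clear that $A(n, m) = \binom{n-1}{m-1}$, and the proposition is proved (with $m$ renamed $k$).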
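The counting argument of the proof can be checked by brute force for small values. The sketch below (the helper name `count_compositions` is an assumption introduced here) enumerates every ordered tuple of positive integers with a given number of parts and compares the count with the binomial coefficient.

```python
import math
from itertools import product

def count_compositions(n, parts):
    """Brute force: ordered tuples of `parts` positive integers summing to n."""
    return sum(1 for combo in product(range(1, n + 1), repeat=parts)
               if sum(combo) == n)

# (n, parts, brute-force count, binomial coefficient) for all small cases.
checks = [(n, parts, count_compositions(n, parts), math.comb(n - 1, parts - 1))
          for n in range(1, 7) for parts in range(1, n + 1)]
all_match = all(brute == binom for _, _, brute, binom in checks)
```

The enumeration is exponential in the number of parts, so it is only meant as a sanity check of the closed form, not as a way to compute it.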

3 MAIN RESULTS

From now on, $N \sim \mathcal{GP}(\lambda, \theta)$ with $\lambda > 0$ and $\theta \in ]0, 1[$. It is then possible to rewrite Proposition 5 using Kummer's confluent hypergeometric function $M$ (see Abramowitz and Stegun (1972, chapter 13); all further x.x.x references refer to this book).

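Proposition 6 $\forall n \in \mathbb{N}^*$ we have

$$P(N = n) = e^{-\lambda} (1 - \theta)^n z e^{-z} M(n + 1, 2, z) \qquad (3)$$

where $M$ is Kummer's confluent hypergeometric function and

$$z = \frac{\lambda \theta}{1 - \theta} \qquad (4)$$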

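Proof. We start by considering $e^{-z} M(n + 1, 2, z) = M(1 - n, 2, -z)$ (thanks to 13.1.27). The definition (13.1.2) easily gives that

$$M(1 - n, 2, -z) = 1 + \frac{(n - 1)}{2} \frac{z^1}{1!} + \frac{(n - 1)(n - 2)}{2 \times 3} \frac{z^2}{2!} + \ldots$$

$$= \sum_{k=1}^{n} \frac{(n - 1) \cdots (n - k + 1)}{k!} \frac{z^{k-1}}{(k - 1)!} = \sum_{k=1}^{n} \binom{n-1}{k-1} \frac{z^{k-1}}{k!}$$

and so the right-hand side of (3) is

$$e^{-\lambda} (1 - \theta)^n z \, M(1 - n, 2, -z) = \sum_{k=1}^{n} e^{-\lambda} (1 - \theta)^n \frac{z^k}{k!} \binom{n-1}{k-1}$$

which gives the result by replacing $z$ by its value, since $(1 - \theta)^n z^k = \lambda^k \theta^k (1 - \theta)^{n-k}$ is exactly the weight appearing in Proposition 5.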
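Relation (3) can be checked numerically against the direct sum of Proposition 5. The sketch below is an illustration under stated assumptions: `kummer_M` is a plain truncated power series (definition 13.1.2) written for this check, adequate only for moderate positive arguments, and the parameters $\lambda = 3$, $\theta = 0.4$ are arbitrary.

```python
import math

def kummer_M(a, b, z, terms=500):
    """Truncated series sum_j (a)_j z^j / ((b)_j j!) for Kummer's M(a, b, z)."""
    total, term = 0.0, 1.0
    for j in range(terms):
        total += term
        term *= (a + j) * z / ((b + j) * (j + 1))
    return total

def gp_pmf_kummer(n, lam, theta):
    """P(N = n) through relation (3), for n >= 1 and 0 < theta < 1."""
    z = lam * theta / (1.0 - theta)
    return (math.exp(-lam) * (1.0 - theta) ** n * z * math.exp(-z)
            * kummer_M(n + 1, 2, z))

def gp_pmf_direct(n, lam, theta):
    """P(N = n) through the direct sum of Proposition 5, for n >= 1."""
    return math.exp(-lam) * sum(
        lam ** k / math.factorial(k) * (1.0 - theta) ** (n - k) * theta ** k
        * math.comb(n - 1, k - 1) for k in range(1, n + 1))

lam, theta = 3.0, 0.4   # illustrative values (assumed)
rel_gaps = [abs(gp_pmf_kummer(n, lam, theta) / gp_pmf_direct(n, lam, theta) - 1.0)
            for n in range(1, 16)]
```

A production implementation would more likely call a library routine for $M$; the hand-written series is used here only to keep the check self-contained.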

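We can now establish our recurrence relation.

Proposition 7 (recurrence) We have

$$P(N = 0) = e^{-\lambda} \qquad \text{and} \qquad P(N = 1) = e^{-\lambda} (1 - \theta) z$$

and, $\forall n \in \mathbb{N}$, $n \geq 2$,

$$P(N = n) = \frac{(2n - 2 + z)}{n} (1 - \theta) P(N = n - 1) + \frac{(2 - n)}{n} (1 - \theta)^2 P(N = n - 2)$$

where $z$ is defined in equation (4).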
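A minimal Python sketch of this recurrence follows, compared against the direct sum of Proposition 5. The function names and the parameters $\lambda = 10$, $\theta = 0.75$ are assumptions chosen for illustration. Each new term costs $O(1)$, so all terms up to $n_0$ are obtained in $O(n_0)$.

```python
import math

def gp_pmf_recurrence(n0, lam, theta):
    """[P(N = 0), ..., P(N = n0)] by the three-term recurrence (0 < theta < 1)."""
    z = lam * theta / (1.0 - theta)
    p = [math.exp(-lam), math.exp(-lam) * (1.0 - theta) * z]
    for n in range(2, n0 + 1):
        p.append((2 * n - 2 + z) / n * (1.0 - theta) * p[n - 1]
                 + (2 - n) / n * (1.0 - theta) ** 2 * p[n - 2])
    return p[:n0 + 1]

def gp_pmf_direct(n, lam, theta):
    """Reference value: direct sum of Proposition 5 (O(n) per term)."""
    if n == 0:
        return math.exp(-lam)
    return math.exp(-lam) * sum(
        lam ** k / math.factorial(k) * (1.0 - theta) ** (n - k) * theta ** k
        * math.comb(n - 1, k - 1) for k in range(1, n + 1))

lam, theta, n0 = 10.0, 0.75, 40   # illustrative values (assumed)
by_recurrence = gp_pmf_recurrence(n0, lam, theta)
worst_gap = max(abs(by_recurrence[n] / gp_pmf_direct(n, lam, theta) - 1.0)
                for n in range(n0 + 1))
```

Note that the coefficient $(2 - n)/n$ is negative for $n \geq 3$, so each step is a difference of two positive quantities rather than a sum.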

Proof. Using the definition 13.1.2, it is easy to show that $M(2, 2, z) = e^z$, and this immediately gives $P(N = 1)$. 13.4.1 also implies the following relation, $\forall a, b \in \mathbb{R}$:

$$M(a + 1, b, z) = \frac{1}{a} \left[ (2a - b + z) M(a, b, z) + (b - a) M(a - 1, b, z) \right]$$

We simply apply this result to $a = n$ and $b = 2$ to obtain

$$M(n + 1, 2, z) = \frac{(2n - 2 + z)}{n} M(n, 2, z) + \frac{(2 - n)}{n} M(n - 1, 2, z)$$

and thanks to relation (3) the proposition is proved.
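As a quick sanity check of the recurrence (a worked instance added for illustration, not part of the original proof), take $n = 2$, for which the coefficient of $P(N = n - 2)$ vanishes:

$$P(N = 2) = \frac{2 + z}{2} (1 - \theta) P(N = 1) = e^{-\lambda} (1 - \theta)^2 \left( z + \frac{z^2}{2} \right)$$

With $z = \lambda \theta / (1 - \theta)$ this equals $e^{-\lambda} \lambda \theta (1 - \theta) + e^{-\lambda} \frac{\lambda^2}{2} \theta^2$, which are exactly the $k = 1$ and $k = 2$ terms of the direct sum in Proposition 5.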

4 ALGORITHMS

In this part, we want to compute, for all $n_0 \in \mathbb{N}$, the following functions:

$$F(n_0) = \sum_{n=0}^{n_0} P(N = n) \qquad \text{and} \qquad G(n_0) = 1 - F(n_0 - 1) = \sum_{n=n_0}^{\infty} P(N = n)$$

Thanks to Proposition 7, we can compute all $(P(N = n))_{n \leq n_0}$ in $O(n_0)$, but we must face two numerical issues:

1. some terms could be out of the machine range and set to zero;

2. the relative error in the computation of an $F$ close to one becomes an absolute error for the corresponding $G$ computed through the relation $G = 1 - F$.
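Both issues are easy to reproduce in double precision. The values below are arbitrary illustrations chosen here, not figures from the paper.

```python
import math

# Issue 1: a term whose true value is about 1e-347 is below the double range
# and is silently flushed to zero, while its logarithm is perfectly ordinary.
tiny_term = math.exp(-800.0)
log_term = -800.0

# Issue 2: an upper tail far below the relative precision of a double (about
# 1e-16) is lost entirely when it is recovered as 1 - F.
g_true = 1e-20
f = 1.0 - g_true        # rounds to exactly 1.0
g_from_f = 1.0 - f      # the tail has vanished
```

Here `tiny_term` and `g_from_f` both evaluate to `0.0`, which motivates working with logarithms and summing the upper tail directly.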

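A simple solution for the first point is to compute the $L_n = \ln P(N = n)$ by adapting Proposition 7 to obtain

Proposition 8 (log recurrence)

$$L_0 = -\lambda \qquad \text{and} \qquad L_1 = -\lambda + \ln((1 - \theta) z)$$

and, $\forall n \in \mathbb{N}$, $n \geq 2$,

$$L_n = L_{n-1} + \ln\left[ \frac{(2n - 2 + z)}{n} (1 - \theta) + \frac{(2 - n)}{n} (1 - \theta)^2 \exp(L_{n-2} - L_{n-1}) \right]$$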
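The log recurrence translates almost literally into code. In this sketch the function name `gp_log_pmf` and the parameter choice $\lambda = 2000$, $\theta = 0.5$ are assumptions; the point is that $e^{-2000}$ underflows to zero in double precision while its logarithm does not.

```python
import math

def gp_log_pmf(n0, lam, theta):
    """[L_0, ..., L_n0] with L_n = ln P(N = n), for 0 < theta < 1."""
    z = lam * theta / (1.0 - theta)
    logs = [-lam, -lam + math.log((1.0 - theta) * z)]
    for n in range(2, n0 + 1):
        bracket = ((2 * n - 2 + z) / n * (1.0 - theta)
                   + (2 - n) / n * (1.0 - theta) ** 2
                   * math.exp(logs[n - 2] - logs[n - 1]))
        logs.append(logs[n - 1] + math.log(bracket))
    return logs[:n0 + 1]

# P(N = 0) = exp(-2000) is not representable as a double, but L_0 = -2000 is.
logs = gp_log_pmf(50, 2000.0, 0.5)
```

Only the difference $L_{n-2} - L_{n-1}$ is ever exponentiated, and this log-ratio of consecutive terms stays moderate even when the terms themselves are far outside the machine range.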

A simple application of this proposition leads to the

Algorithm for lower tail

initialization: $L_0 = -\lambda$, $L_1 = -\lambda + \ln((1 - \theta) z)$, $A_1 = -\lambda$ and $S_1 = 1 + (1 - \theta) z$

main loop: for $n = 2 \ldots n_0$ do

compute $L_n$ from $L_{n-1}$ and $L_{n-2}$ through Proposition 8

check the range of the sum $S = S_{n-1} + \exp(L_n - A_{n-1})$

if $S \in ]0, \infty[$ then $A_n = A_{n-1}$ and $S_n = S$

else $A_n = A_{n-1} + \ln(S_{n-1})$ and $S_n = 1 + \exp(L_n - A_n)$

end: $F(n_0) = \exp(A_{n_0}) S_{n_0}$

(where $A_i$ and $S_i$ are auxiliary variables used to compute the cumulative sum).
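The lower tail algorithm can be sketched as follows. This is an interpretation under stated assumptions rather than the author's code: `safe_exp` is a helper introduced here because Python raises `OverflowError` instead of returning infinity, the function returns $\ln F(n_0)$ rather than $F(n_0)$ so that very small values remain representable, and `gp_pmf_direct` is only a brute-force reference.

```python
import math

def safe_exp(x):
    """exp(x), returning inf instead of raising OverflowError."""
    try:
        return math.exp(x)
    except OverflowError:
        return math.inf

def gp_log_cdf(n0, lam, theta):
    """ln P(N <= n0) for N ~ GP(lam, theta), with 0 < theta < 1."""
    if n0 == 0:
        return -lam
    z = lam * theta / (1.0 - theta)
    l_prev, l_cur = -lam, -lam + math.log((1.0 - theta) * z)   # L_0, L_1
    a, s = -lam, 1.0 + (1.0 - theta) * z                        # A_1, S_1
    for n in range(2, n0 + 1):
        bracket = ((2 * n - 2 + z) / n * (1.0 - theta)
                   + (2 - n) / n * (1.0 - theta) ** 2 * math.exp(l_prev - l_cur))
        l_prev, l_cur = l_cur, l_cur + math.log(bracket)        # L_{n-1}, L_n
        new_s = s + safe_exp(l_cur - a)
        if 0.0 < new_s < math.inf:
            s = new_s                      # in range: A_n = A_{n-1}, S_n = S
        else:
            a += math.log(s)               # out of range: fold S_{n-1} into A_n
            s = 1.0 + safe_exp(l_cur - a)
    return a + math.log(s)

def gp_pmf_direct(n, lam, theta):
    """Brute-force reference (Proposition 5), usable for small n only."""
    if n == 0:
        return math.exp(-lam)
    return math.exp(-lam) * sum(
        lam ** k / math.factorial(k) * (1.0 - theta) ** (n - k) * theta ** k
        * math.comb(n - 1, k - 1) for k in range(1, n + 1))

lam, theta, n0 = 10.0, 0.75, 20   # illustrative values (assumed)
f_log = math.exp(gp_log_cdf(n0, lam, theta))
f_ref = sum(gp_pmf_direct(n, lam, theta) for n in range(n0 + 1))

# Parameters of the gggg example of section 5; the result is a log10 p-value.
log10_p = gp_log_cdf(8704, 10961.53, 0.7704888) / math.log(10.0)
```

Only the running values of $L_{n-1}$, $L_n$, $A_n$ and $S_n$ are kept, so the memory cost is constant.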

The second problem is illustrated by Fig. 2. As one can see, using $F$ to compute $G = 1 - F$ is acceptable so long as the value of $G$ is not too small ($> 10^{-12}$), but when its value comes closer to the relative error of the computations ($10^{-16}$ for double precision on a 32-bit machine), the results are no longer reliable.

The solution to this problem is obviously to compute $G$ directly using the series but, doing so, how does one know when the series has converged? One could think of using a rough Gaussian approximation to test the convergence. Unfortunately, the geometric Poisson distribution differs so much from the Gaussian one (for example, with $\lambda = 10.0$ and $\theta = 0.1$, $P(N > 1000) = 8.765 \times 10^{-24}$ while the Gaussian approximation gives $5.161 \times 10^{-95}$ for the same value) that this option must be abandoned.
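The order of magnitude of the Gaussian figure can be reproduced from the mean and variance recalled with Definition 4. The sketch assumes $\lambda = 10.0$ and $\theta = 0.1$, and assumes a plain normal tail at $x = 1000$ without continuity correction; the paper does not say exactly how its value was obtained.

```python
import math

lam, theta, x = 10.0, 0.1, 1000.0
mean = lam / theta                              # E[N] = 100
sd = math.sqrt(lam * (2.0 - theta)) / theta     # sqrt(V[N]) = sqrt(1900)
zscore = (x - mean) / sd                        # about 20.6 standard deviations
gauss_tail = 0.5 * math.erfc(zscore / math.sqrt(2.0))
```

The result is of order $10^{-95}$, some seventy orders of magnitude below the geometric Poisson tail quoted above, which is why a Gaussian stopping rule would be useless here.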

Without any better criterion, we add terms until we reach numerical convergence. We first choose a small $\varepsilon$ (typically $\varepsilon = 10^{-16}$, depending on the relative precision of the computations) and we get the

Algorithm for upper tail

initialization: $L_0 = -\lambda$ and $L_1 = -\lambda + \ln((1 - \theta) z)$

first loop: for $n = 2 \ldots n_0$ do

compute $L_n$ from $L_{n-1}$ and $L_{n-2}$ through Proposition 8

sum initialization: $A_{n_0} = L_{n_0}$ and $S_{n_0} = 1$

main loop: while the series has not converged do

$n = n + 1$

compute $L_n$ from $L_{n-1}$ and $L_{n-2}$ through Proposition 8

check convergence: if $L_n < \ln(\varepsilon) + A_{n-1} + \ln(S_{n-1})$ then the series has converged and $n_1 = n - 1$, go to end; else the series has not yet converged

check the range of the sum $S = S_{n-1} + \exp(L_n - A_{n-1})$

if $S \in ]0, \infty[$ then $A_n = A_{n-1}$ and $S_n = S$

else $A_n = A_{n-1} + \ln(S_{n-1})$ and $S_n = 1 + \exp(L_n - A_n)$

end: $G(n_0) = \exp(A_{n_1}) S_{n_1}$

(where, as in the previous algorithm, $A_i$ and $S_i$ are auxiliary variables).
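A possible Python rendering of the upper tail algorithm is given below. It is a sketch under assumptions: the helper names, the `max_terms` safety cap and the choice to return $\ln G(n_0)$ are introduced here and are not specified in the paper.

```python
import math

def safe_exp(x):
    """exp(x), returning inf instead of raising OverflowError."""
    try:
        return math.exp(x)
    except OverflowError:
        return math.inf

def next_log_term(n, l_prev, l_cur, z, theta):
    """L_n from L_{n-2} (l_prev) and L_{n-1} (l_cur) via the log recurrence."""
    bracket = ((2 * n - 2 + z) / n * (1.0 - theta)
               + (2 - n) / n * (1.0 - theta) ** 2 * math.exp(l_prev - l_cur))
    return l_cur + math.log(bracket)

def gp_log_sf(n0, lam, theta, eps=1e-16, max_terms=10_000_000):
    """ln P(N >= n0) for N ~ GP(lam, theta), with 0 < theta < 1."""
    if n0 == 0:
        return 0.0
    z = lam * theta / (1.0 - theta)
    l_prev, l_cur = -lam, -lam + math.log((1.0 - theta) * z)     # L_0, L_1
    for n in range(2, n0 + 1):                                    # first loop
        l_prev, l_cur = l_cur, next_log_term(n, l_prev, l_cur, z, theta)
    a, s = l_cur, 1.0                                             # A_n0, S_n0
    for n in range(n0 + 1, n0 + 1 + max_terms):                   # main loop
        l_prev, l_cur = l_cur, next_log_term(n, l_prev, l_cur, z, theta)
        if l_cur < math.log(eps) + a + math.log(s):
            break                                                 # converged
        new_s = s + safe_exp(l_cur - a)
        if 0.0 < new_s < math.inf:
            s = new_s
        else:
            a += math.log(s)
            s = 1.0 + safe_exp(l_cur - a)
    return a + math.log(s)

def gp_pmf_direct(n, lam, theta):
    """Brute-force reference (Proposition 5), usable for small n only."""
    if n == 0:
        return math.exp(-lam)
    return math.exp(-lam) * sum(
        lam ** k / math.factorial(k) * (1.0 - theta) ** (n - k) * theta ** k
        * math.comb(n - 1, k - 1) for k in range(1, n + 1))

lam, theta, n0 = 10.0, 0.75, 20   # illustrative values (assumed)
g_tail = math.exp(gp_log_sf(n0, lam, theta))
g_ref = 1.0 - sum(gp_pmf_direct(n, lam, theta) for n in range(n0))
```

For a far tail one would call, for instance, `gp_log_sf(1001, 10.0, 0.1)` and compare `exp` of the result with the value of $P(N > 1000)$ quoted earlier in this section.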

Of course, such a naive approach could lead to errors if the convergence rate of the series is too low but, as we can see in Fig. 3, the algorithm works very well with, in the worst case, a relative error around $10^{-12}$.

Finally, let us point out that the complexity of these two algorithms is $O(n_0)$ in time and $O(1)$ in memory (as only the last two values of $L_n$ and the last value of $A_n$ and $S_n$ are needed at each step).

5 APPLICATION

We consider a homogeneous stationary Markov chain $X$ of length $\ell$ on the finite alphabet $\mathcal{A}$ (e.g. $\mathcal{A} = \{a, c, g, t\}$ when DNA sequences are considered). We denote by $N$ the number of occurrences of a given (non-degenerate) pattern (e.g. the pattern aacaa or the pattern gctgtctg).

Chrysaphinou and Papastavridis (1988) first introduced the geometric Poisson approximation for $N$ in the independent case. Arratia et al. (1990) then proposed to use the Chen-Stein method to get a compound Poisson approximation in the Markov case. This idea was then studied in detail by Schbath (1995) and, more recently, Robin (2002) pointed out that the compound Poisson distribution is in fact a simple geometric Poisson one in the non-degenerate case.

According to these references, $N$ is approximately distributed according to a $\mathcal{GP}(\lambda, 1 - a)$ distribution where

$$a = P(\text{pattern self-overlaps})$$

and

$$\lambda = \ell (1 - a) \times P(\text{pattern appears at a given position in } X)$$

If $a = 0$, then $\theta = 1 - a = 1.0$ and the distribution is a simple Poisson one (and its cumulative distribution function is easily computed with the incomplete gamma function; see Press et al. (1992), chapter 6, for more details on this point). From now on, we therefore consider only cases where $a > 0$.

Let us consider the complete genome of the bacterium Escherichia coli K12 (of length $\ell = 4\,639\,221$). We estimate an order 1 Markov model on its sequence to get

$$\pi = \begin{pmatrix} 0.2957922699 & 0.2247175468 & 0.2082510314 & 0.2712391519 \\ 0.2756564177 & 0.2303218838 & 0.2939007929 & 0.2001209057 \\ 0.2270901404 & 0.3262008455 & 0.2295111640 & 0.2171978501 \\ 0.1857763808 & 0.2342592584 & 0.2824187007 & 0.2975456600 \end{pmatrix}$$

the transition matrix between the states $\{a, c, g, t\}$ (in this order), such that $P(g|c) = \pi(c, g) = \pi(2, 3)$. The corresponding stationary distribution $\mu$ (such that $\mu \pi = \mu$) is therefore given by

$$\mu = \begin{pmatrix} 0.2461911663 & 0.2542308865 & 0.2536579603 & 0.2459199869 \end{pmatrix}$$
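The stationary distribution can be recovered from the transition matrix by repeatedly applying the matrix to a row vector. The sketch below uses plain power iteration, which is a choice made here for illustration; the paper does not say how its stationary distribution was computed.

```python
# Transition matrix between the states a, c, g, t (rows: current state).
PI = [
    [0.2957922699, 0.2247175468, 0.2082510314, 0.2712391519],
    [0.2756564177, 0.2303218838, 0.2939007929, 0.2001209057],
    [0.2270901404, 0.3262008455, 0.2295111640, 0.2171978501],
    [0.1857763808, 0.2342592584, 0.2824187007, 0.2975456600],
]

def stationary(matrix, iterations=200):
    """Row vector mu with mu * matrix = mu, by power iteration from uniform."""
    size = len(matrix)
    mu = [1.0 / size] * size
    for _ in range(iterations):
        mu = [sum(mu[i] * matrix[i][j] for i in range(size)) for j in range(size)]
        total = sum(mu)                 # renormalize against rounding drift
        mu = [m / total for m in mu]
    return mu

mu = stationary(PI)
```

The resulting vector can be compared entry by entry with the stationary distribution printed in the text.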

For any given pattern, we can use $\pi$ and $\mu$ to compute $a$ and $\lambda$. For example, for the pattern gggg we get

$$a(\text{gggg}) = \pi(g, g) = 0.2295111640$$

and

$$\lambda(\text{gggg}) = \ell \times \mu(g) \times \pi(g, g)^3 \times (1 - a(\text{gggg})) = 10961.53$$

So $N(\text{gggg})$ is approximately distributed according to $\mathcal{GP}(10961.53, 0.7704888)$ and hence $P(N(\text{gggg}) \leq 8704) \simeq 10^{-356.942033} \simeq 1.142791496 \times 10^{-357}$.
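The parameters of this example follow from a few multiplications, sketched here with variable names chosen for illustration. The sketch assumes, as the numerical values suggest, that the expected count is the sequence length times the stationary probability of the first letter times the three transition probabilities.

```python
ell = 4_639_221            # genome length
mu_g = 0.2536579603        # stationary probability of g
pi_gg = 0.2295111640       # transition probability g -> g

a = pi_gg                                  # self-overlap probability of gggg
expected_count = ell * mu_g * pi_gg ** 3   # E[N], about 14226.7
lam = expected_count * (1.0 - a)           # Poisson parameter, about 10961.5
theta = 1.0 - a                            # geometric parameter
```

The value of `expected_count` agrees with the $E[N]$ column of the gggg row in Table 1, and `lam / theta` gives it back, as expected from $E[N] = \lambda / \theta$.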

Table 1 gives the ten smallest p-values computed with the lower tail algorithm for the self-overlapping patterns of size 4 and 8 that are seen less often than expected. Table 2 gives the p-values computed with the upper tail algorithm for the self-overlapping patterns seen more often than expected. In both cases, these p-values are compared with the ones obtained through precise large deviations approximations which are, according to Nuel (2006), very close to the exact p-values (the usual relative error is smaller than 0.5%).

In both tables, one should note that p-values smaller than $10^{-300}$ are computed and, especially for the size 4 patterns, that large $n_0$ are considered (the corresponding cdf would have taken a very long time to compute with the original quadratic algorithm).

6 CONCLUSION

We have presented here a simple and efficient way to compute the cumulative distribution function of a geometric Poisson distribution. As its complexity is linear, this new algorithm makes it possible to deal with cases where the usual quadratic algorithm was not usable. Thanks to the logarithmic version of our recurrence, this algorithm can also efficiently compute very small p-values such as those that usually arise when studying pattern statistics on biological sequences.

One should note that the geometric assumption is a key ingredient of the proposed method, since it allows us to rewrite the complex sum over $k_1, \ldots, k_m \in \mathbb{N}^*$ in Lemma 3 as the much more convenient formula of Proposition 5, and then to introduce our recurrence relation. For a general compound Poisson distribution, however, the situation is much more complicated. Some preliminary work indicates that similar linear algorithms are very unlikely to exist in this general case. Nevertheless, a paper should soon complete the work presented here by proposing efficient computations for more general compound Poisson distributions.

REFERENCES

Abramowitz M. and Stegun I. A. (1972) Handbook of Mathematical Functions. Wiley-Interscience Publication.

Arratia R., Goldstein L. and Gordon L. (1990) Poisson approximation and the Chen-Stein method. Stat. Sci., 5(4), 403-434.

Chrysaphinou O. and Papastavridis S. (1988) A limit theorem on the number of overlapping appearances of a pattern in a sequence of independent trials. Proba. Theory Relat. Fields, 79(1), 129-143.

Johnson N. L., Kotz S. and Kemp A. W. (1992) Univariate discrete distributions. Wiley, New York.

Nuel G. (2006) Numerical solutions for patterns statistics on Markov chains. Statistical Applications in Genetics and Molecular Biology. In revision.

Press W. H., Teukolsky S. A., Vetterling W. T. and Flannery B. P. (1992) Numerical Recipes in C. Cambridge University Press.

Robin S. (2002) A compound Poisson model for word occurrences in DNA sequences. J. Roy. Stat. Soc. Ser. C, 51, 437-451.

Schbath S. (1995) Compound Poisson approximation of word count in DNA sequences. ESAIM, Proba. Stat., 1, 1-16.

| pattern | $n_0$ | $E[N]$ | $a$ | geometric Poisson | large deviations |
|---|---|---|---|---|---|
| cggcgccg | 7 | 168.42 | 4.86e-04 | -61.209046 | -60.881043 |
| cgcatgcg | 30 | 228.91 | 6.60e-04 | -60.936502 | -60.817153 |
| gcgccggc | 14 | 186.50 | 4.86e-04 | -60.081156 | -59.868326 |
| gcgcatgc | 47 | 253.49 | 6.60e-04 | -56.379357 | -56.307102 |
| ggcgccgg | 1 | 131.22 | 5.97e-04 | -54.834258 | -53.992639 |
| cggcgccc | 2 | 131.98 | 1.12e-04 | -53.366761 | -52.783797 |
| ggcgcccg | 2 | 131.69 | 1.12e-04 | -53.239547 | -52.657075 |
| cgcggccg | 15 | 168.42 | 4.86e-04 | -51.793270 | -51.604176 |
| gcatgcgc | 54 | 253.49 | 6.60e-04 | -51.490606 | -51.431959 |
| gggcgccg | 3 | 131.22 | 1.12e-04 | -51.397185 | -50.924344 |
| caca | 13000 | 20139.39 | 6.19e-02 | -565.813732 | -583.968289 |
| tgtg | 12830 | 19764.28 | 6.13e-02 | -543.598735 | -560.747716 |
| gagg | 8091 | 12772.69 | 1.09e-02 | -422.828743 | -430.110448 |
| cctc | 8062 | 12734.96 | 1.08e-02 | -422.619815 | -429.872555 |
| gggg | 8704 | 14226.72 | 2.30e-01 | -356.942033 | -362.994999 |
| cccc | 8853 | 14410.49 | 2.30e-01 | -355.737769 | -361.846613 |
| acac | 10964 | 15898.62 | 6.19e-02 | -335.990058 | -344.540576 |
| gtgt | 10843 | 15678.26 | 6.13e-02 | -327.234105 | -335.456416 |
| acta | 6524 | 9541.95 | 8.35e-03 | -231.776187 | -234.561629 |
| tagt | 6723 | 9586.76 | 8.40e-03 | -206.324250 | -208.824845 |

Table 1: The ten smallest p-values for the self-overlapping patterns of size 4 and 8 seen less often in the Escherichia coli K12 complete genome than expected under an order one Markov model. From left to right the columns give: the considered pattern, its observed number of occurrences ($n_0$), its expected number of occurrences $E[N]$ ($N$ is the random count of the pattern), its probability to overlap itself ($a$), its geometric Poisson log scale p-value $\log_{10} P(N \leq n_0)$ computed with the lower tail algorithm (using $N \sim \mathcal{GP}(\lambda, \theta)$ with $\lambda = E(N) \times (1 - a)$ and $\theta = 1 - a$) and the same p-value using precise large deviations approximations.

| pattern | $n_0$ | $E[N]$ | $a$ | geometric Poisson | large deviations |
|---|---|---|---|---|---|
| ccagcgcc | 726 | 112.32 | 5.09e-04 | -322.902186 | -323.168145 |
| cgctggcg | 774 | 140.62 | 4.06e-04 | -299.487521 | -299.756283 |
| ggcgctgg | 661 | 109.56 | 4.99e-04 | -277.585055 | -277.800101 |
| ccgccagc | 663 | 112.32 | 9.52e-05 | -273.664407 | -273.879114 |
| cgccagcg | 733 | 143.33 | 4.13e-04 | -264.740215 | -264.974535 |
| ccaccagc | 510 | 72.58 | 6.15e-05 | -243.503618 | -243.630897 |
| gctggcgg | 613 | 109.56 | 9.31e-05 | -241.376886 | -241.555145 |
| gctggtgg | 499 | 70.10 | 5.96e-05 | -240.683818 | -240.814004 |
| cttccagc | 485 | 70.96 | 6.02e-05 | -226.647714 | -226.768893 |
| gctggaag | 481 | 69.65 | 5.92e-05 | -226.622118 | -226.741737 |
| cagc | 37475 | 22085.82 | 1.87e-02 | -1839.634208 | -1916.815507 |
| gctg | 36516 | 21695.23 | 1.84e-02 | -1744.022252 | -1815.665597 |
| tggt | 23904 | 16061.70 | 1.41e-02 | -701.021635 | -719.565205 |
| acca | 23981 | 16295.12 | 1.43e-02 | -666.399192 | -684.261519 |
| cgcc | 35156 | 26043.19 | 2.21e-02 | -595.751439 | -622.816682 |
| tgat | 27542 | 19846.44 | 1.74e-02 | -557.486921 | -575.279672 |
| atca | 27594 | 20004.81 | 1.75e-02 | -539.060422 | -556.415209 |
| ggcg | 34480 | 25893.03 | 2.20e-02 | -535.115609 | -559.163108 |
| ggtg | 23256 | 16567.08 | 1.41e-02 | -506.236812 | -520.965865 |
| cacc | 23467 | 16827.26 | 1.43e-02 | -492.183339 | -506.690325 |

Table 2: The ten smallest p-values for the self-overlapping patterns of size 4 and 8 seen more often in the Escherichia coli K12 complete genome than expected under an order one Markov model. From left to right the columns give: the considered pattern, its observed number of occurrences ($n_0$), its expected number of occurrences $E[N]$ ($N$ is the random count of the pattern), its probability to overlap itself ($a$), its geometric Poisson log scale p-value $\log_{10} P(N \geq n_0)$ computed with the upper tail algorithm (using $N \sim \mathcal{GP}(\lambda, \theta)$ with $\lambda = E(N) \times (1 - a)$ and $\theta = 1 - a$) and the same p-value using precise large deviations approximations.

Figure 1: Probability distribution function of $N \sim \mathcal{GP}(\lambda, \theta)$ with $\lambda / \theta = 10.0$ and $\theta$ taking several values. From top to bottom at abscissa $x = 10.0$, $\theta$ equals 1.0 (simple Poisson case), 0.9, 0.8, ..., 0.1.

Figure 2: We consider here the distribution of $N \sim \mathcal{GP}(10.0, 0.75)$ ($0 \leq k \leq 75$). The figure plots the relative error made when computing $P(N \geq k)$ through $1 - P(N < k)$ using double precision on a 32-bit machine ($10^{-16}$ relative precision). The value of $P(N \geq k)$ is computed with Maple with the same method but using an arbitrary precision of 1 000 digits.

Figure 3: We consider here the distribution of $N \sim \mathcal{GP}(10.0, 0.75)$ ($0 < k \leq 1\,000$). The figure plots the relative error (in log scale) made when computing $P(N \geq k)$ through the upper tail algorithm (with $\varepsilon = 10^{-13}$) using double precision on a 32-bit machine (computational time: 0.13 s). The values of $P(N \geq k)$ are computed with Maple with the same method but using an arbitrary precision of 1 000 digits (computational time: 112 s). Convergence of the series is achieved with 67 terms (on the left of the plot) to 28 terms (on the right).
