Stability of GEPP and GECP

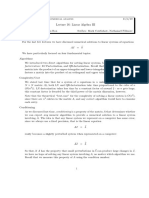

Is Gaussian elimination backward stable?

Why pivot?

Consider the following example:

$$
A = \begin{bmatrix} 10^{-16} & 1 \\ 1 & 1 \end{bmatrix} \qquad (1)
$$

If we compute the LU factorization of A in double-precision arithmetic but without pivoting (swapping rows), then we obtain the following:

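$$
\tilde L = \begin{bmatrix} 1 & 0 \\ 1/10^{-16} & 1 \end{bmatrix} = \begin{bmatrix} 1 & 0 \\ 10^{16} & 1 \end{bmatrix}, \qquad
\tilde U = \begin{bmatrix} 10^{-16} & 1 \\ 0 & 1 - (10^{16}\cdot 1) \end{bmatrix} = \begin{bmatrix} 10^{-16} & 1 \\ 0 & -10^{16} \end{bmatrix}
$$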

Note that

$$
A - \tilde L\tilde U = \begin{bmatrix} 0 & 0 \\ 0 & 1 \end{bmatrix}
$$

in both floating-point and exact arithmetic. The norm of this error (or, more precisely, the residual) is roughly the same size as the norm of the input A.
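The unpivoted computation above can be reproduced with a minimal Python sketch; Python floats are IEEE double precision, so the rounding matches the text. The helpers `lu_nopivot` and `residual` are hypothetical names introduced for illustration, and the residual is formed in floating point.

```python
def lu_nopivot(A):
    """Gaussian elimination without pivoting; returns (L, U) as lists of lists."""
    m = len(A)
    U = [row[:] for row in A]                          # working copy that becomes U
    L = [[float(i == j) for j in range(m)] for i in range(m)]
    for j in range(m - 1):
        for i in range(j + 1, m):
            L[i][j] = U[i][j] / U[j][j]                # huge when the pivot is tiny
            for k in range(j, m):
                U[i][k] -= L[i][j] * U[j][k]
    return L, U


def residual(A, L, U):
    """Entrywise A - L*U, with the product formed in floating point."""
    m = len(A)
    return [[A[i][j] - sum(L[i][k] * U[k][j] for k in range(m))
             for j in range(m)] for i in range(m)]


A = [[1e-16, 1.0],
     [1.0, 1.0]]
L, U = lu_nopivot(A)
print(L)                  # [[1.0, 0.0], [1e+16, 1.0]]
print(U)                  # [[1e-16, 1.0], [0.0, -1e+16]]
print(residual(A, L, U))  # [[0.0, 0.0], [0.0, 1.0]]
```

The single unit entry in the residual is the information about $a_{22} = 1$ that was swamped when $1 - 10^{16}$ was rounded to $-10^{16}$.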

On the other hand, if we use partial pivoting we obtain the following:

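$$
PA = \begin{bmatrix} 1 & 1 \\ 10^{-16} & 1 \end{bmatrix}, \qquad
\tilde L = \begin{bmatrix} 1 & 0 \\ 10^{-16}/1 & 1 \end{bmatrix} = \begin{bmatrix} 1 & 0 \\ 10^{-16} & 1 \end{bmatrix}, \qquad
\tilde U = \begin{bmatrix} 1 & 1 \\ 0 & 1 - (10^{-16}\cdot 1) \end{bmatrix} \approx \begin{bmatrix} 1 & 1 \\ 0 & 1 \end{bmatrix}
$$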

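Now we have $A = (P^T\tilde L)\tilde U$ when the product is formed in floating-point arithmetic (but not in exact arithmetic!). Even in exact arithmetic the residual is tiny, at most about $10^{-16}$, compared with $\|A\|$.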
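A minimal Python sketch of the pivoted computation, again relying on Python floats being IEEE doubles. It forms the residual twice: once in floating point and once exactly, using the standard-library `fractions.Fraction`, which converts each double to its exact rational value. The helper name `residual` is hypothetical. In IEEE round-to-nearest, $1 - 10^{-16}$ actually rounds to $1 - 2^{-53}$ (the double just below 1) rather than to exactly 1; the residual is tiny either way.

```python
from fractions import Fraction

A = [[1e-16, 1.0],
     [1.0, 1.0]]
PA = [A[1], A[0]]                  # partial pivoting swaps rows 1 and 2
l21 = PA[1][0] / PA[0][0]          # multiplier 1e-16, so |l21| <= 1
u22 = PA[1][1] - l21 * PA[0][1]    # 1 - 1e-16 rounds to 1 - 2**-53
L = [[1.0, 0.0], [l21, 1.0]]
U = [[PA[0][0], PA[0][1]], [0.0, u22]]


def residual(M, L, U, exact=False):
    """Entrywise M - L*U; with exact=True every double is converted to a Fraction."""
    conv = Fraction if exact else float
    n = len(M)
    return [[conv(M[i][j]) - sum(conv(L[i][k]) * conv(U[k][j]) for k in range(n))
             for j in range(n)] for i in range(n)]


print(residual(PA, L, U))          # [[0.0, 0.0], [0.0, 0.0]] in floating point
exact = residual(PA, L, U, exact=True)
print(float(max(abs(r) for row in exact for r in row)))   # nonzero, roughly 1.1e-17
```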

Code for GEPP and GECP

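There are two main strategies for pivoting. The first method, called partial pivoting, swaps rows to ensure that the entry in the pivot position has the greatest absolute value in that column on or below that row. Stripped to its essence, here is GEPP in MATLAB/Octave (A is overwritten with the multipliers of L below the diagonal and with U on and above it; the row permutation is not recorded):

    m = size(A,1);
    for j = 1:m-1
      [~, p] = max(abs(A(j:m,j)));  i = j - 1 + p;   % row of largest |A(i,j)|, i >= j
      A([j i],:) = A([i j],:);                        % swap rows j and i
      A(j+1:m,j) = A(j+1:m,j) / A(j,j);               % multipliers: column j of L
      A(j+1:m,j+1:m) = A(j+1:m,j+1:m) - A(j+1:m,j)*A(j,j+1:m);
    end

(A full version is posted.) Note that this algorithm keeps the entries of L small: every multiplier satisfies $|l_{ij}| \le 1$.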
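For readers without MATLAB, here is a minimal pure-Python sketch of the same algorithm that also records the row permutation, so that $PA = LU$ can be checked. The function name `gepp` and the random test matrix (uniform entries in $[-1, 1]$) are illustrative assumptions, not taken from the posted full version.

```python
import random


def gepp(A):
    """Gaussian elimination with partial pivoting on a square list-of-lists A.

    Returns (perm, L, U): L is unit lower triangular, U is upper triangular,
    and row i of L*U reproduces row perm[i] of A up to rounding (PA = LU).
    Assumes A is nonsingular, so no pivot is exactly zero.
    """
    m = len(A)
    U = [[float(x) for x in row] for row in A]   # working copy, becomes U
    L = [[0.0] * m for _ in range(m)]
    perm = list(range(m))
    for j in range(m - 1):
        # pivot row: largest |U[i][j]| on or below the diagonal (first one on ties)
        p = max(range(j, m), key=lambda i: abs(U[i][j]))
        U[j], U[p] = U[p], U[j]
        L[j], L[p] = L[p], L[j]                  # carry earlier multipliers along
        perm[j], perm[p] = perm[p], perm[j]
        for i in range(j + 1, m):
            L[i][j] = U[i][j] / U[j][j]          # |L[i][j]| <= 1 by choice of pivot
            U[i][j] = 0.0
            for k in range(j + 1, m):
                U[i][k] -= L[i][j] * U[j][k]
    for i in range(m):
        L[i][i] = 1.0
    return perm, L, U


random.seed(1)
m = 6
A = [[random.uniform(-1.0, 1.0) for _ in range(m)] for _ in range(m)]
perm, L, U = gepp(A)
print(max(abs(L[i][j]) for i in range(m) for j in range(i)))   # never exceeds 1
print(max(abs(A[perm[i]][j] - sum(L[i][k] * U[k][j] for k in range(m)))
          for i in range(m) for j in range(m)))                # tiny, near machine epsilon
```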

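The second main strategy for pivoting is called complete pivoting. GECP swaps both rows and columns to ensure that the entry in the pivot position has the greatest absolute value among all entries of the trailing submatrix A(j:m,j:m), that is, on or below and to the right of the pivot position. Stripped to its essence, here is GECP in MATLAB/Octave (again overwriting A, without recording the permutations):

    m = size(A,1);
    for j = 1:m-1
      [~, idx] = max(reshape(abs(A(j:m,j:m)), [], 1));   % largest |entry| in trailing block
      [p, q] = ind2sub([m-j+1, m-j+1], idx);  i = j-1+p;  k = j-1+q;
      A([j i],:) = A([i j],:);                            % swap rows j and i
      A(:,[j k]) = A(:,[k j]);                            % swap columns j and k
      A(j+1:m,j) = A(j+1:m,j) / A(j,j);                   % multipliers: column j of L
      A(j+1:m,j+1:m) = A(j+1:m,j+1:m) - A(j+1:m,j)*A(j,j+1:m);
    end

This is a vastly more expensive search operation at each stage: stage j examines all $(m-j+1)^2$ entries of the trailing submatrix rather than the $m-j+1$ entries of a single column. Fortunately, as we shall see, GECP is rarely necessary. In practice partial pivoting is stable.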
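A pure-Python sketch of complete pivoting makes the extra search concrete: the pivot search at (0-based) stage j scans $(m-j)^2$ entries, which adds up to roughly $m^3/3$ comparisons over the whole factorization, versus about $m^2/2$ for partial pivoting. The function name `gecp` and the random test matrix are illustrative assumptions.

```python
import random


def gecp(A):
    """Gaussian elimination with complete pivoting on a square list-of-lists A.

    Returns (rp, cp, L, U) with row i of L*U reproducing A[rp[i]][cp[j]] for each j,
    up to rounding (PAQ = LU). Assumes A is nonsingular.
    """
    m = len(A)
    U = [[float(x) for x in row] for row in A]
    L = [[0.0] * m for _ in range(m)]
    rp, cp = list(range(m)), list(range(m))
    for j in range(m - 1):
        # search the whole trailing block: (m - j)**2 entries at this stage
        p, q = max(((i, k) for i in range(j, m) for k in range(j, m)),
                   key=lambda ik: abs(U[ik[0]][ik[1]]))
        U[j], U[p] = U[p], U[j]                  # swap rows j and p
        L[j], L[p] = L[p], L[j]
        rp[j], rp[p] = rp[p], rp[j]
        for row in U:                            # swap columns j and q
            row[j], row[q] = row[q], row[j]
        cp[j], cp[q] = cp[q], cp[j]
        for i in range(j + 1, m):
            L[i][j] = U[i][j] / U[j][j]
            U[i][j] = 0.0
            for k in range(j + 1, m):
                U[i][k] -= L[i][j] * U[j][k]
    for i in range(m):
        L[i][i] = 1.0
    return rp, cp, L, U


random.seed(2)
m = 6
A = [[random.uniform(-1.0, 1.0) for _ in range(m)] for _ in range(m)]
rp, cp, L, U = gecp(A)
# each pivot dominates the rest of its row of U
print(all(abs(U[j][j]) >= abs(U[j][k]) for j in range(m) for k in range(j, m)))
print(max(abs(A[rp[i]][cp[j]] - sum(L[i][k] * U[k][j] for k in range(m)))
          for i in range(m) for j in range(m)))
```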


Growth factors

To understand the stability of GEPP and GECP, we can assume that all of the pivoting (row swapping, and for GECP also column swapping) has been done beforehand. With this in mind, let's look at the key theorem.

Theorem 1. If A = LU, and if $\tilde L$ and $\tilde U$ are the factors computed by Gaussian elimination without pivoting on an IEEE-compliant machine, then there exists a matrix $\delta A$ such that

$$
\tilde L\tilde U = A + \delta A, \qquad \text{where } \|\delta A\| \le C\,\epsilon_{\text{machine}}\,\|L\|\,\|U\|, \qquad (2)
$$

and $C$ is a constant which depends on the dimension $m$, but not on the matrix entries.

Note that we make no claim about the errors $\tilde L - L$ or $\tilde U - U$, only about the residual $A - \tilde L\tilde U$. We will see more and more theorems of this flavor. Also, note that this does not give backward stability, since the factor on the right is $\|L\|\,\|U\|$, not $\|A\|$. Thus, the question of stability comes down to the question of how big this factor is compared to $\|A\|$.
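To see that (2) can hold while backward stability fails, a minimal Python sketch can plug the computed factors from the unpivoted example (1) into both ratios. The infinity norm (largest absolute row sum) is an assumed choice of norm, and the computed $\tilde L, \tilde U$ stand in for the exact $L, U$, which for this matrix agree with them up to rounding. The helpers `matmul` and `inf_norm` are hypothetical names.

```python
def matmul(X, Y):
    """Product of two list-of-lists matrices, formed in floating point."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]


def inf_norm(M):
    """Infinity norm: the largest absolute row sum."""
    return max(sum(abs(x) for x in row) for row in M)


A = [[1e-16, 1.0], [1.0, 1.0]]
Lt = [[1.0, 0.0], [1e16, 1.0]]      # computed factors from the unpivoted example (1)
Ut = [[1e-16, 1.0], [0.0, -1e16]]
LU = matmul(Lt, Ut)
dA = [[LU[i][j] - A[i][j] for j in range(2)] for i in range(2)]   # L~U~ = A + dA

print(inf_norm(dA) / (inf_norm(Lt) * inf_norm(Ut)))   # about 1e-32: consistent with (2)
print(inf_norm(dA) / inf_norm(A))                      # 0.5: dA is not small relative to A
```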

Since in both GEPP and GECP the factor $\|L\|$ stays bounded (never bigger than $m$, depending on the norm used), the question boils down to this: how big can the largest absolute value in U be compared to the largest absolute value in A? This leads us to define the growth factor for GEPP:

$$
g_{PP}(A) = \frac{|U|_{\max}}{|A|_{\max}}, \qquad \text{where } |M|_{\max} = \max_{i,j} |m_{ij}|. \qquad (3)
$$
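Applied to the $2 \times 2$ example (1), the same ratio shows why growth is the quantity to watch. The sketch computes it for both the unpivoted and the pivoted $\tilde U$ (strictly, $g_{PP}$ refers to the pivoted one); the $\tilde U$ entries are typed in from the example rather than recomputed, and the function name `growth_factor` is hypothetical.

```python
def growth_factor(A, U):
    """|U|_max / |A|_max: largest absolute entry of U over that of A."""
    def biggest(M):
        return max(abs(x) for row in M for x in row)
    return biggest(U) / biggest(A)


A = [[1e-16, 1.0], [1.0, 1.0]]
U_nopivot = [[1e-16, 1.0], [0.0, -1e16]]     # computed U without pivoting, from (1)
U_pivot = [[1.0, 1.0], [0.0, 1.0 - 1e-16]]   # computed U with partial pivoting
print(growth_factor(A, U_nopivot))           # 1e+16
print(growth_factor(A, U_pivot))             # 1.0
```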

How bad can it be?

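Consider the following $m \times m$ example:

$$
A = \begin{bmatrix}
1 & 0 & 0 & \cdots & 0 & 1 \\
-1 & 1 & 0 & \cdots & 0 & 1 \\
-1 & -1 & 1 & \cdots & 0 & 1 \\
\vdots & \vdots & \vdots & \ddots & \vdots & \vdots \\
-1 & -1 & -1 & \cdots & -1 & 1
\end{bmatrix}
=
\begin{bmatrix}
1 & 0 & 0 & \cdots & 0 & 0 \\
-1 & 1 & 0 & \cdots & 0 & 0 \\
-1 & -1 & 1 & \cdots & 0 & 0 \\
\vdots & \vdots & \vdots & \ddots & \vdots & \vdots \\
-1 & -1 & -1 & \cdots & -1 & 1
\end{bmatrix}
\begin{bmatrix}
1 & 0 & 0 & \cdots & 0 & 1 \\
0 & 1 & 0 & \cdots & 0 & 2 \\
0 & 0 & 1 & \cdots & 0 & 4 \\
\vdots & \vdots & \vdots & \ddots & \vdots & \vdots \\
0 & 0 & 0 & \cdots & 0 & 2^{m-1}
\end{bmatrix}
\qquad (4)
$$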

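At each stage of Gaussian elimination we add the pivot row to all of the rows below it. (Partial pivoting performs no swaps here, assuming ties are broken in favor of the row already in the pivot position: every candidate pivot has absolute value 1.) Each stage therefore doubles all the entries in the last column below the pivot row, and the entry in the lower right corner is doubled $m - 1$ times. In this case, therefore, $g_{PP}(A) = 2^{m-1}$.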
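The example (4) can be checked with a short Python sketch, assuming ties in the pivot search are broken in favor of the first (topmost) candidate row, as Python's built-in `max` does. The names `wilkinson_example`, `gepp_upper`, and `growth_factor` are hypothetical helpers.

```python
def wilkinson_example(m):
    """The m-by-m matrix in (4): 1 on the diagonal, -1 below it, 1 in the last column."""
    return [[1.0 if j == i or j == m - 1 else (-1.0 if j < i else 0.0)
             for j in range(m)] for i in range(m)]


def gepp_upper(A):
    """Partial pivoting elimination; returns U and the number of row swaps made."""
    m = len(A)
    U = [row[:] for row in A]
    swaps = 0
    for j in range(m - 1):
        p = max(range(j, m), key=lambda i: abs(U[i][j]))   # first maximal row wins ties
        if p != j:
            U[j], U[p] = U[p], U[j]
            swaps += 1
        for i in range(j + 1, m):
            mult = U[i][j] / U[j][j]                         # here always -1
            U[i][j] = 0.0
            for k in range(j + 1, m):
                U[i][k] -= mult * U[j][k]
    return U, swaps


def growth_factor(A, U):
    """|U|_max / |A|_max."""
    return max(abs(x) for row in U for x in row) / max(abs(x) for row in A for x in row)


for m in (2, 5, 10, 20):
    A = wilkinson_example(m)
    U, swaps = gepp_upper(A)
    print(m, swaps, growth_factor(A, U), 2.0 ** (m - 1))   # growth equals 2**(m-1)
```

All entries involved are small integers or powers of two, so the floating-point run is exact here.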

This example is as bad as it gets: with GEPP (or GECP) the growth factor never exceeds $2^{m-1}$. This is because at each step we are adding a multiple of one row to another, and that multiplier is at most 1 in absolute value. Thus, after each type III operation, each new entry is at most twice the largest absolute value among the previous matrix entries. Since each entry is modified at most $m - 1$ times, the largest absolute entry is at worst multiplied by $2^{m-1}$.
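The doubling argument can be written out as a short derivation. The superscript notation $a^{(k)}_{ij}$ for the entries at the start of stage $k$ (after that stage's swap) is introduced here for convenience and is not notation from the notes; swaps only permute entries, so they leave the maximum unchanged.

```latex
% a^{(k)}_{ij}: entries at the start of stage k, after the swap; A^{(1)} = A, A^{(m)} = U
% l_{ik} = a^{(k)}_{ik} / a^{(k)}_{kk}, with |l_{ik}| \le 1 under partial (or complete) pivoting
\begin{aligned}
|a^{(k+1)}_{ij}| &= |a^{(k)}_{ij} - l_{ik}\, a^{(k)}_{kj}|
  \le |a^{(k)}_{ij}| + |a^{(k)}_{kj}|
  \le 2 \max_{r,s} |a^{(k)}_{rs}|, \qquad i, j > k, \\
\max_{i,j} |u_{ij}| &\le 2^{m-1} \max_{i,j} |a_{ij}|
  \quad\Longrightarrow\quad g_{PP}(A) \le 2^{m-1}.
\end{aligned}
```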

Of course, we wouldn't expect such continual doubling, if for no other reason than that the signs of the entries will not all be the same, so cancellation is as likely as doubling. Moreover, since the entries vary in size and we always take the biggest entry in a given column as the pivot, the multipliers will usually be strictly less than one in absolute value.

But these are heuristic arguments. Are they valid in practice? If so, can we give a more precise explanation of why this is so? These are the questions we will explore next.
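One way to start probing the first question empirically is to measure $g_{PP}$ on random matrices. The sketch below assumes entries drawn independently from a standard normal distribution (an assumption about what "typical" means, not something the notes specify) and reports the largest growth factor seen over a few trials; the function name `gepp_growth` is hypothetical. One expects the observed values to sit far below the $2^{m-1}$ bound, but an experiment like this says nothing about why.

```python
import random


def gepp_growth(A):
    """Growth factor |U|_max / |A|_max of Gaussian elimination with partial pivoting."""
    m = len(A)
    U = [row[:] for row in A]
    for j in range(m - 1):
        p = max(range(j, m), key=lambda i: abs(U[i][j]))  # pivot row for column j
        U[j], U[p] = U[p], U[j]
        for i in range(j + 1, m):
            mult = U[i][j] / U[j][j]                       # |mult| <= 1
            U[i][j] = 0.0
            for k in range(j + 1, m):
                U[i][k] -= mult * U[j][k]
    return (max(abs(x) for row in U for x in row)
            / max(abs(x) for row in A for x in row))


random.seed(3)
trials = 10
for m in (8, 16, 32, 64):
    worst = max(gepp_growth([[random.gauss(0.0, 1.0) for _ in range(m)]
                             for _ in range(m)])
                for _ in range(trials))
    print(f"m = {m:2d}: largest g_PP in {trials} trials = {worst:.2f}; "
          f"worst-case bound 2^(m-1) = {2.0 ** (m - 1):.1e}")
```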

Homework problems: First version due Friday, 17 February

Final version due Friday, 3 March

Do two of the following four problems.

1. In theory the function defined by the rule

$$
f(x) = \begin{cases} \dfrac{\log(1+x)}{x}, & \text{if } x \neq 0, \\[1ex] 1, & \text{if } x = 0, \end{cases}
$$


is smooth everywhere, even when x = 0. [Why? Explain!] Define this function in Matlab or Octave, then plot it, first over the range $[-1, 1]$ and then over the range $[-10\epsilon, 10\epsilon]$, where $\epsilon$ is the machine epsilon. Is the picture smooth?

Now define a new algorithm to compute y = f(x):

    s = 1 + x;
    if s == 1
      y = 1;
    else
      y = log(s)/(s-1);
    end

Graph the output of this new algorithm over the same intervals. Compare the two sets of graphs. What do you find? How do you explain it?

2. Consider the problem of solving AX = B, where A is $n \times n$ and both X and B are $n \times m$. I want you to compare two algorithms:

a. Use Gaussian elimination to find the factorization PA = LU, then use forward and backward substitution to solve for X.

b. Use Gaussian elimination to find $A^{-1}$, then compute $A^{-1}B$.

Count the maximum number of flops needed for both. (This is the theoretical maximum, which is a function of m and n.) Which algorithm is faster? Use Matlab or Octave to verify your prediction.

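3. Given a nonsingular matrix A and a vector b, prove that for all sufficiently small positive $\epsilon$ there are choices $\delta A$ and $\delta b$ such that

$$
\|\delta x\|_2 = \|A^{-1}\|_2 \left( \|\delta A\|_2\, \|\tilde x\|_2 + \|\delta b\|_2 \right),
$$

where (as before) $Ax = b$, $\delta x = \tilde x - x$, and $(A + \delta A)\tilde x = b + \delta b$.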

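4. Consider the problem of solving a $2 \times 2$ system:

$$
\begin{bmatrix} a & b \\ c & d \end{bmatrix} \begin{bmatrix} x \\ y \end{bmatrix} = \begin{bmatrix} e \\ f \end{bmatrix}.
$$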

I want you to compare two algorithms:

a. GEPP.

b. Cramer's Rule:

    det = a*d - b*c
    x = (d*e - b*f)/det
    y = (-c*e + a*f)/det

Show by means of a numerical example that Cramer's Rule is not backward stable. What does backward stability imply about the size of the residual?
