CTT Editorial28nov09

Classical and modern measurement theories, patient reports, and clinical outcomes

Rochelle E. Tractenberg, Ph.D., M.P.H.

Director, Collaborative for Research on Outcomes and Metrics
Departments of Neurology; Biostatistics, Bioinformatics & Biomathematics; and Psychiatry, Georgetown University Medical Center, Washington, D.C.

Address for correspondence and reprint requests:
Rochelle E. Tractenberg
Building D, Suite 207
Georgetown University Medical Center
4000 Reservoir Rd. NW
Washington, DC 20057
TEL: 202.444.8748
FAX: 202.444.4114
ret7@georgetown.edu

RUNNING HEAD: Psychometric theories and potential pitfalls for clinical outcomes


Abstract

Classical test theory (CTT) has been extremely popular in the development, characterization, and sometimes selection of outcome measures in clinical trials. That is, qualities of outcomes, whether administered by clinicians or representing patient reports, are often described in terms of validity and reliability, two features of assessments that are derived from, and dependent upon the assumptions in, classical test theory. Psychometrics embodies many theories. In PubMed, CTT is more generally represented; modern measurement, or item response theory (IRT), is becoming more common. This editorial seeks only to orient the reader briefly to the differences between CTT and IRT, and to highlight a very important, virtually unrecognized, difficulty in the utility of IRT for clinical outcomes.


Classical test theory (CTT) has been extremely popular in the development, characterization, and sometimes selection of outcome measures in clinical trials. That is, qualities of outcomes, whether administered by clinicians or representing patient reports, are often described in terms of validity and reliability, two features of assessments that are derived from, and dependent upon the assumptions in, classical test theory There are many different types of validity (e.g., Sechrest, 2005; Kane, 2006; Zumbo 2007), and while there are many different methods for estimating reliability (e.g., Haertel, 2006), it is defined, within classical test theory, as the fidelity of the observed score to the true score. The fundamental feature of classical test theory is the formulation of every observed score (X) as a function of the individuals true score (T) and some random measurement error (e) (Haertel, 2006, p. 68): X=T+e CTT focuses on total test score - individual items are not considered but their summary (sum of responses, average response, or other quantification of overall level) is the datum on which classical test theoretic constructs operate. An exception could be the item-total correlation or split-half versions of these (e.g., Cronbachs alpha). Because of the total-score emphasis of classical test theoretic constructs, when an outcome measure is established, characterized or selected on the basis of its reliability (howsoever that might be estimated), tailoring the assessment is not possible, and in fact, the items in the assessment must be considered exchangeable. Every score of 10 is assumed to be the same. Another feature of CTT-based characterizations is that they are best when a single factor underlies the total score. This can be addressed, in multi-factorial assessments, with testlet reliability (i.e. the breaking up of the whole assessment into unidimensional bits, each of which has some reliability estimate (Wainer, Bradlow and Wang, 2007, especially pp. 
55-57). In all cases, wherever CTT is used, constant error (for all examinees) is assumed, that is, the measurement error of the instrument must be independent of true score (ability). This means that an outcome that is less reliable for individuals with lower or higher overall performance does not meet the assumptions required for the application and interpretation of CTT-derived formulae. CTT offers several ways to estimate reliability, and assumptions for CTT may frequently be met but all estimations make assumptions that cannot be tested within the CTT framework. If CTT assumptions are not met, then reliability may be estimated, but the result is not meaningful. The formulae themselves will work; it is the interpretation of these values that cannot be supported. Item response theory (IRT) can be contrasted with classical test theory in several different ways (Embretsen and Reise, 2000, ch. 1); often IRT is referred to as modern test theory, which contrasts it with classical test theory. IRT is NOT psychometrics. The impetus of psychometrics (& limitations of CTT) led to the development of IRT (Jones and Thissen, 2007). IRT is a probabilistic (statistical, logistic) model of how examinees respond to any given item(s). CTT is not a probabilistic model of response. Both the classical and modern theoretical approaches to test development are useful in understanding, and possibly measuring, psychological phenomena and constructs (i.e., both are subsumed under psychometrics). IRT has great potential for the development and characterization of outcomes for clinical trials because it provides a statistical model of how/why individuals respond as they do to an item

Psychometric theories and potential pitfalls for clinical outcomes

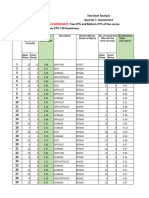

and independently, about the items themselves. IRT-derived characterizations of tests, their constituent items, and individuals are general for the entire population of items or individuals, while CTT-derived characterizations pertain only to total tests and are specific to the sample from which they are derived. This is another feature of modern methods that is important and attractive in clinical settings. Further, under IRT, the reliability of an outcome measure has a different meaning than for CTT: if and only if the IRT model fits, then the items always measure the same thing the same way essentially like inches on a ruler. This invariance property of IRT is its key feature. Under IRT, the items themselves are characterized; test or outcome characteristics are simply derived from those of the items. These characteristics include difficulty (level of ability (or underlying construct) required for correct answer/endorsement of that item) discrimination (power of item to discriminate individuals with different (adjacent) ability levels) ( Unlike CTT, if and only if the model fits then item parameters are invariant across any population, and the reverse is also true. Also unlike CTT, if the IRT model fits, then item characteristics can depend on your ability level (easier/harder items can have less/more variability). Items can be designed, improved with respect to the amount of information they provide about the ability level(s) of interest. Within a CTT framework, information about the performance of particular items across populations is not available. This has great implications for the utility and generalizability of clinical trial results when an IRT-derived outcome is used; and computerized adaptive testing (CAT) obtains responses to only those items focusing increasingly on a given individuals ability (or construct) level (e.g., Wainer et al. 2007, p. 10). 
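The roles of difficulty, discrimination, and item information described above can be made concrete with a small sketch. It assumes a two-parameter logistic (2PL) model, which is one common IRT model and not necessarily the one any particular study uses. The item bank, its parameter values, and the function names (`p_endorse`, `item_information`, `most_informative_item`) are hypothetical and chosen only for illustration.

```python
import math

def p_endorse(theta, a, b):
    """2PL probability of a correct answer/endorsement.

    theta: ability (construct) level, a: discrimination, b: difficulty.
    """
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))

def item_information(theta, a, b):
    """Fisher information of a 2PL item at theta: a^2 * P * (1 - P)."""
    p = p_endorse(theta, a, b)
    return a * a * p * (1.0 - p)

# Hypothetical item bank: name -> (discrimination a, difficulty b).
ITEM_BANK = {
    "easy": (1.0, -1.5),
    "medium": (1.5, 0.0),
    "hard": (1.2, 1.5),
}

def most_informative_item(theta, bank=ITEM_BANK, administered=()):
    """One adaptive-testing step: pick the unused item with maximal
    information at the current ability estimate."""
    candidates = {k: v for k, v in bank.items() if k not in administered}
    return max(candidates, key=lambda k: item_information(theta, *candidates[k]))

if __name__ == "__main__":
    for theta in (-1.5, 0.0, 1.5):
        print(theta, most_informative_item(theta))
```

Under these assumed parameters, each item is most informative near its own difficulty, which is why an adaptive procedure moves toward items located at the individual's estimated level. A real CAT would also re-estimate the ability level after every response, which this sketch omits.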
CAT has the potential to precisely estimate what the outcome seeks to assess while minimizing the number of responses required by any study participant. Tests can be tailored (CAT), or global tests can be developed with precision in the target range of the underlying construct that the inclusion criteria emphasize or for which FDA labeling is approved. IRT is powerful and offers options for clinical outcomes that CTT does not provide. However, IRT modeling is complex.

The Patient-Reported Outcomes Measurement Information System (PROMIS, http://www.nihpromis.org) is an example of clinical trial outcomes that are being characterized using IRT. All items for a content area are pooled together for evaluation (DeWalt et al., 2007). Content experts identify the best representation of the content area, supporting face and content validity. IRT models are fit by expert IRT modeling teams for all existing data, so that large enough sample sizes are used in the estimation of item parameters. Items that do not fit the content, or statistical, models are dropped. The purpose of PROMIS is "to create valid, reliable & generalizable measures of clinical outcomes of interest to patients" (http://www.nihpromis.org/default.aspx).

Unevaluated in PROMIS, and in many other protocols, is the direction of causality, as shown in Figure 1. Using the construct "quality of life" (QOL), Figure 1 shows that causality flows from the items (qol 1, qol 2, qol 3) to the construct (QOL). That is, in this example QOL is a construct that arises from the responses that individuals give on QOL inventory items (3 are shown in Figure 1 for clarity/simplicity). The level of QOL is not causing those responses to vary; variability in the responses is causing the construct of QOL to vary (e.g., Fayers and Hand, 1997). This type of construct is called emergent (e.g., Bollen, 1989) and is common (e.g., Bollen and Ting, 2000; Kline, 2006).
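The direction of causality has observable statistical consequences, which a small simulation can illustrate. The sketch below rests on several simplifying assumptions that are not in the original: responses are continuous and Gaussian (so a partial correlation near zero stands in for conditional independence), the emergent items are generated independently of one another, and all function and variable names are hypothetical.

```python
import math
import random

def pearson(x, y):
    """Plain Pearson correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def partial_corr(x, y, z):
    """Correlation of x and y after linearly conditioning on z."""
    rxy, rxz, ryz = pearson(x, y), pearson(x, z), pearson(y, z)
    return (rxy - rxz * ryz) / math.sqrt((1 - rxz ** 2) * (1 - ryz ** 2))

def simulate(n=20000, seed=0):
    rng = random.Random(seed)
    # Causal construct: a latent factor drives both items.
    factor = [rng.gauss(0, 1) for _ in range(n)]
    c1 = [f + rng.gauss(0, 1) for f in factor]
    c2 = [f + rng.gauss(0, 1) for f in factor]
    # Emergent construct: the composite is built from the items
    # (items assumed independent here, purely for simplicity).
    e1 = [rng.gauss(0, 1) for _ in range(n)]
    e2 = [rng.gauss(0, 1) for _ in range(n)]
    e3 = [rng.gauss(0, 1) for _ in range(n)]
    qol = [a + b + c for a, b, c in zip(e1, e2, e3)]
    return {
        "causal_marginal": pearson(c1, c2),
        "causal_given_factor": partial_corr(c1, c2, factor),
        "emergent_marginal": pearson(e1, e2),
        "emergent_given_qol": partial_corr(e1, e2, qol),
    }

if __name__ == "__main__":
    for name, value in simulate().items():
        print(f"{name}: {value:+.3f}")
```

In this setup the two items driven by a common factor are correlated, and that correlation vanishes once the factor is held constant. The items that merely add up to the composite start out uncorrelated and become negatively related once the composite is held constant. Real patient-report items are categorical and typically correlated, so this is an illustration of the logic rather than a model of any actual instrument.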
The problem for PROMIS (and similar applications of IRT models) arises from the fact that IRT models require a causal factor underlying observed responses, because conditioning on the cause must yield conditional independence in the items (e.g., Bock and Moustaki, 2007, p. 470). This conditional independence (i.e., when the underlying cause is held constant, the previously correlated variables become statistically independent) is a critical assumption of IRT. QOL and PROMIS are only exemplars of when this causal directionality is an impediment to interpretability.

If one finds that an IRT model does fit the items (qol 1-3 in Figure 1), then the conditional independence in those observed items must be coming from a causal factor; this is represented in Figure 1 by the latent factor F. Conditioning on the factor that emerges from observed items induces dependence, not independence (Pearl, 2000, p. 17). Therefore, if conditional independence is obtained, which is required for an IRT model to fit, and if the construct (QOL in Figure 1) is not causal, then there must be another causal factor in the system (F in Figure 1). The implication is that the factor of interest (e.g., QOL) is not the construct being measured in an IRT model such as that shown in Figure 1 (in fact, it is F). This problem exists, acknowledged or not, for any emergent construct, such as QOL is shown to be in Figure 1.

Many investigations into factor structure assume a causal model (Kline, 2006); all IRT analyses assume this. Figure 1 shows that, if the construct is not causal, then that which the IRT model is measuring is not only not the construct of interest, it will also mislead the investigator into believing that the IRT model is describing the construct of interest. Efforts such as PROMIS, if inadvertently directed at constructs like F rather than QOL, waste time and valuable resources and give a false sense of propriety, reliability, and generalizability for their results.

CTT and IRT differ in many respects.
A crucial similarity is that both are models of performance; if the model assumptions are not met, conclusions and interpretations will not be supportable and the investigator will not necessarily be able to test the assumptions. In the case of IRT, however, there are statistical tests to help determine whether the construct is causal or emergent (e.g., Bollen & Ting, 2000). Whether tested from a theoretical or a statistical perspective, IRT modeling should include the careful consideration of whether the construct is causal or emergent.

REFERENCES:

1. Bock RD & Moustaki I. (2007). Item response theory in a general framework. In CR Rao & S Sinharay (Eds), Handbook of Statistics, Vol. 26: Psychometrics. The Netherlands: Elsevier. Pp. 469-513.
2. Bollen KA. (1989). Structural equations with latent variables. New York: Wiley.
3. Bollen KA & Ting K. (2000). A tetrad test for causal indicators. Psychological Methods 5: 605-634.
4. DeWalt DA, Rothrock N, Yount S, Stone AA. (2007). Evaluation of item candidates: the PROMIS qualitative item review. Medical Care 45(5, suppl 1): S12-S21.
5. Embretson SE & Reise SP. (2000). Item response theory for psychologists. LEA.
6. Fayers PM & Hand DJ. (1997). Factor analysis, causal indicators, and quality of life. Quality of Life Research 6(2): 139-150.
7. Haertel EH. (2006). Reliability. In RL Brennan (Ed), Educational Measurement, 4E. Washington, DC: American Council on Education and Praeger Publishers. Pp. 65-110.
8. Jones LV & Thissen D. (2007). A history and overview of psychometrics. In CR Rao & S Sinharay (Eds), Handbook of Statistics, Vol. 26: Psychometrics. The Netherlands: Elsevier. Pp. 1-27.
9. Kane MT. (2006). Validation. In RL Brennan (Ed), Educational Measurement, 4E. Washington, DC: American Council on Education and Praeger Publishers. Pp. 17-64.
10. Kline RB. (2006). Formative measurement and feedback loops. In GR Hancock & RO Mueller (Eds), Structural equation modeling: a second course. Charlotte, NC: Information Age Publishing. Pp. 43-68.
11. Pearl J. (2000). Causality: Models, reasoning and inference. Cambridge, UK: Cambridge University Press.
12. Sechrest L. (2005). Validity of measures is no simple matter. Health Services Research 40(5), part II: 1584-1604.
13. Wainer H, Bradlow ET, Wang X. (2007). Testlet response theory and its applications. Cambridge, UK: Cambridge University Press.
