IRDM Assignment-I PDF

Uploaded by Piyush Aggarwal


Dated: 31st July, 2013

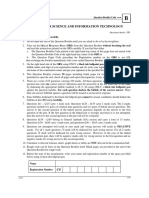

Jaypee Institute of Information Technology, Noida, Sector-128

Department of CSE & IT

Information Retrieval and Data Mining (10B1NCI736)

Assignment-I

Q.1 The following list of Rs and Ns represents relevant (R) and nonrelevant (N) returned documents in a ranked list of 20 documents retrieved in response to a query from a collection of 10,000 documents. The top of the ranked list (the document the system thinks is most likely to be relevant) is on the left of the list. This list shows 6 relevant documents. Assume that there are 8 relevant documents in total in the collection.

RRNNN NNNRN RNNNR NNNNR

a. What is the precision of the system on the top 20?

b. What is the F1 on the top 20?

c. What is the uninterpolated precision of the system at 25% recall?

d. What is the interpolated precision at 33% recall?

e. Assume that these 20 documents are the complete result set of the system. What is the MAP for the query?

Assume, now, instead, that the system returned the entire 10,000 documents in a ranked list, and these are the first 20 results returned.

f. What is the largest possible MAP that this system could have?

g. What is the smallest possible MAP that this system could have?

h. In a set of experiments, only the top 20 results are evaluated by hand. The result in (e) is used to approximate the range (f) to (g). For this example, how large (in absolute terms) can the error for the MAP be by calculating (e) instead of (f) and (g) for this query?

(Answer: (a.) Precision = 0.3 (b.) F-measure = 0.43 (c.) 1, 2/3, 2/4, 2/5, 2/6, 2/7, 2/8 (d.) 4/11 = 0.364 (e.) MAP = 0.555 (f.) MAP largest = 0.503 (g.) MAP smallest = 0.417 (h.) The error is in [0.052, 0.138].)
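The figures in the Q.1 answer can be checked with a short script. This is only a sketch: the function and variable names are illustrative, and it assumes, as the answer key appears to, that (e) averages over the 6 relevant documents actually retrieved while (f) and (g) average over all 8.

```python
# Ranked list from Q.1, leftmost document first ("R" = relevant, "N" = nonrelevant).
RANKING = "RRNNNNNNRNRNNNRNNNNR"
TOTAL_RELEVANT = 8        # relevant documents in the whole collection
COLLECTION_SIZE = 10_000


def precisions_at_relevant(ranking):
    """Precision measured at each rank where a relevant document appears."""
    hits, out = 0, []
    for rank, label in enumerate(ranking, start=1):
        if label == "R":
            hits += 1
            out.append(hits / rank)
    return out


def interpolated_precision(ranking, total_relevant, recall_level):
    """Highest precision at any rank whose recall is >= recall_level."""
    hits, best = 0, 0.0
    for rank, label in enumerate(ranking, start=1):
        hits += label == "R"
        if hits / total_relevant >= recall_level:
            best = max(best, hits / rank)
    return best


found = RANKING.count("R")
precision = found / len(RANKING)                      # (a)
recall = found / TOTAL_RELEVANT
f1 = 2 * precision * recall / (precision + recall)    # (b)
interp_33 = interpolated_precision(RANKING, TOTAL_RELEVANT, 0.33)  # (d)

seen = sum(precisions_at_relevant(RANKING))
# (e) assumption: average over the 6 relevant documents that were retrieved.
map_top20 = seen / found
# (f) best case: the 2 missing relevant documents sit at ranks 21 and 22.
map_largest = (seen + 7 / 21 + 8 / 22) / TOTAL_RELEVANT
# (g) worst case: they sit at the last two ranks, 9,999 and 10,000.
map_smallest = (seen + 7 / (COLLECTION_SIZE - 1) + 8 / COLLECTION_SIZE) / TOTAL_RELEVANT

print(precision, round(f1, 2), round(interp_33, 3))
print(round(map_top20, 3), round(map_largest, 3), round(map_smallest, 4))
```

Under these assumptions the worst-case MAP evaluates to about 0.4165, which the answer key reports as 0.417.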

Q.2 Below is a table showing how two human judges rated the relevance of a set of 12 documents to a particular information need (0 = nonrelevant, 1 = relevant). Let us assume that you've written an IR system that for this query returns the set of documents {4, 5, 6, 7, 8}.

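| docID | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Judge 1 | 0 | 0 | 1 | 1 | 1 | 1 | 1 | 1 | 0 | 0 | 0 | 0 |
| Judge 2 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 1 |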

a. Calculate the kappa measure between the two judges.

b. Calculate precision, recall, and F1 of your system if a document is considered relevant only if the two judges agree.

c. Calculate precision, recall, and F1 of your system if a document is considered relevant if either judge thinks it is relevant.

(Answer: (a.) Kappa = -1/3 (b.) P = 0.2, R = 0.5, and F-measure (F1) = 0.286 (c.) P = 1, R = 0.5, and F1 = 0.667)

Q.3 Consider the following two queries (Query 1 and Query 2). Calculate the MAP for these results.

(Answer: MAP = 0.594)
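Returning to Q.2, the kappa value and the two precision/recall/F1 triples can be reproduced with the sketch below. It assumes the chance-agreement term uses marginals pooled across both judges, which is the convention that yields the -1/3 in the answer; the helper names `kappa` and `prf` are illustrative.

```python
# Judgments from the Q.2 table, index 0 corresponds to docID 1.
judge1 = [0, 0, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0]
judge2 = [0, 0, 1, 1, 0, 0, 0, 0, 1, 1, 1, 1]
returned = {4, 5, 6, 7, 8}  # docIDs returned by the system


def kappa(a, b):
    """Kappa with chance agreement from pooled marginals (assumed convention)."""
    n = len(a)
    p_agree = sum(x == y for x, y in zip(a, b)) / n
    p_rel = (sum(a) + sum(b)) / (2 * n)       # pooled P(relevant)
    p_chance = p_rel ** 2 + (1 - p_rel) ** 2  # both say 1, or both say 0
    return (p_agree - p_chance) / (1 - p_chance)


def prf(retrieved, relevant):
    """Precision, recall and F1 of a retrieved set against a relevant set."""
    tp = len(retrieved & relevant)
    p = tp / len(retrieved)
    r = tp / len(relevant)
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f1


pairs = list(enumerate(zip(judge1, judge2), start=1))
both = {doc for doc, (x, y) in pairs if x and y}     # (b) both judges say relevant
either = {doc for doc, (x, y) in pairs if x or y}    # (c) at least one judge does

print(round(kappa(judge1, judge2), 3))
print([round(v, 3) for v in prf(returned, both)])
print([round(v, 3) for v in prf(returned, either)])
```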

Q.4 Consider the table of term frequencies for 3 documents denoted Doc1, Doc2, Doc3 in Figure 6.9. Compute the tf-idf weights for the terms "car", "auto", "insurance", and "best" for each document, using the idf values from Figure 6.8.

(Answer:

| | car | auto | insurance | best |
|---|---|---|---|---|
| Doc1 | 44.55 | 6.24 | 0 | 21 |
| Doc2 | 6.6 | 68.64 | 53.46 | 0 |
| Doc3 | 39.6 | 0 | 46.98 | 25.5 |

)
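The Q.4 weights are plain products tf × idf. Figures 6.8 and 6.9 are not reproduced in this assignment, so the `TF` and `IDF` values in the sketch below are an assumption: they are taken to be the values of the cited figures, and they do reproduce every entry of the answer above.

```python
TERMS = ["car", "auto", "insurance", "best"]

# Assumed idf values (Figure 6.8 is not reproduced in this document).
IDF = {"car": 1.65, "auto": 2.08, "insurance": 1.62, "best": 1.5}

# Assumed raw term frequencies (Figure 6.9 is not reproduced in this document).
TF = {
    "Doc1": {"car": 27, "auto": 3, "insurance": 0, "best": 14},
    "Doc2": {"car": 4, "auto": 33, "insurance": 33, "best": 0},
    "Doc3": {"car": 24, "auto": 0, "insurance": 29, "best": 17},
}


def tf_idf(tf, idf, terms):
    """Return {doc: [tf * idf for each term]}, rounded to two decimals."""
    return {
        doc: [round(counts[t] * idf[t], 2) for t in terms]
        for doc, counts in tf.items()
    }


weights = tf_idf(TF, IDF, TERMS)
for doc, row in weights.items():
    print(doc, row)
```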

Q.5 How does the base of the logarithm in the computation of idf affect the score calculation in Q.4? How does the base of the logarithm affect the relative scores of two documents on a given query?

Q.6 Use the tf-idf weights computed in Q.4. Compute the Euclidean normalized document vectors for each of the documents, where each vector has four components, one for each of the four terms.

(Answer: Doc1 = [0.8974, 0.1257, 0, 0.4230], Doc2 = [0.0756, 0.7867, 0.6127, 0], and Doc3 = [0.5953, 0, 0.7062, 0.3833])
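Euclidean normalization in Q.6 divides each component by the vector's length. A minimal sketch, starting from the tf-idf weights given in the Q.4 answer (the names `WEIGHTS` and `euclidean_normalize` are illustrative):

```python
import math

# tf-idf weights from the Q.4 answer, component order: car, auto, insurance, best.
WEIGHTS = {
    "Doc1": [44.55, 6.24, 0.0, 21.0],
    "Doc2": [6.6, 68.64, 53.46, 0.0],
    "Doc3": [39.6, 0.0, 46.98, 25.5],
}


def euclidean_normalize(vec):
    """Scale vec to unit length (L2 norm equal to 1)."""
    norm = math.sqrt(sum(w * w for w in vec))
    return [w / norm for w in vec]


normalized = {
    doc: [round(x, 4) for x in euclidean_normalize(vec)]
    for doc, vec in WEIGHTS.items()
}
for doc, vec in normalized.items():
    print(doc, vec)
```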

Q.7 Using the term weights computed in Q.6, rank the three documents by computed score for the query "car insurance", for each of the following cases of term weighting in the query: (a.) The weight of a term is 1 if present in the query, 0 otherwise. (b.) Euclidean normalized idf.

(Answer: (a.) Ranking is Doc3, Doc1, Doc2 as score(q, doc1) = 0.8974, score(q, doc2) = 0.6883, score(q, doc3) = 1.3015 (b.) Ranking is Doc2, Doc3, Doc1 as score(q, doc1) = 0.6883, score(q, doc2) = 0.7975, score(q, doc3) = 0.7823)

Q.8 Compute the vector space similarity between the query "digital cameras" and the document "digital cameras and video cameras" by filling out the empty columns in Table 6.1. Assume N = 10,000,000, logarithmic term weighting (wf columns) for query and document, idf weighting for the query only, and cosine normalization for the document only. Treat "and" as a stop word. Enter term counts in the tf columns. What is the final similarity score?

Table 6.1

(Answer: Similarity score is 3.12)

Q.9 Refer to the tf and idf values for four terms and three documents in Q.4. Compute the two top scoring documents on the query "best car insurance" for each of the following weighting schemes: (i.) ntc.atc (ii.) lnc.ltn (iii.) lnc.ltc

(Answer: Top two scoring documents are doc3, doc1 as score(q, doc1) = 0.762, score(q, doc2) = 0.657, score(q, doc3) = 0.916)
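Both Q.7(a) and Q.8 reduce to a dot product between a query vector and a length-normalized document vector. The sketch below checks those two answers only. The document vectors for Q.7 come from the Q.6 answer; the document frequencies in `DF` are an assumption, since Table 6.1 is not reproduced in this assignment, and they are chosen as the values that yield the stated score of 3.12.

```python
import math


def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


# Q.7(a): binary query weights against the normalized vectors from Q.6.
TERMS = ["car", "auto", "insurance", "best"]
DOCS = {
    "Doc1": [0.8974, 0.1257, 0.0, 0.4230],
    "Doc2": [0.0756, 0.7867, 0.6127, 0.0],
    "Doc3": [0.5953, 0.0, 0.7062, 0.3833],
}
query = [1 if t in {"car", "insurance"} else 0 for t in TERMS]
scores_q7a = {doc: dot(query, vec) for doc, vec in DOCS.items()}
ranking_q7a = sorted(scores_q7a, key=scores_q7a.get, reverse=True)

# Q.8: log tf and idf on the query side, log tf and cosine normalization
# on the document side.
N = 10_000_000
DF = {"digital": 10_000, "video": 100_000, "cameras": 50_000}  # assumed df values
q_tf = {"digital": 1, "video": 0, "cameras": 1}  # query "digital cameras"
d_tf = {"digital": 1, "video": 1, "cameras": 2}  # document, "and" removed


def wf(tf):
    """Logarithmic term weighting: 1 + log10(tf) for tf > 0, else 0."""
    return 1 + math.log10(tf) if tf > 0 else 0.0


q_vec = [wf(q_tf[t]) * math.log10(N / DF[t]) for t in DF]
d_raw = [wf(d_tf[t]) for t in DF]
d_len = math.sqrt(dot(d_raw, d_raw))
d_vec = [w / d_len for w in d_raw]
similarity = dot(q_vec, d_vec)

print(ranking_q7a, {d: round(s, 4) for d, s in scores_q7a.items()})
print(round(similarity, 2))
```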

Note: Last date of submission is 30th August, 2013 by 5:00 p.m.
