DiNuovo 2012 LearningMentalImagery, DAVID Cota

Autonomous learning in humanoid robotics through mental imagery

Abstract

In this paper we focus on modeling autonomous learning to improve the performance of a humanoid robot through a modular artificial neural network architecture. A model of a neural controller is presented, which allows the iCub humanoid robot to autonomously improve its sensorimotor skills. This is achieved by endowing the neural controller with a secondary neural system that, by exploiting the sensorimotor skills already acquired by the robot, is able to generate additional imaginary examples that can be used by the controller itself to improve its performance through a simulated mental training. Results and analysis presented in the paper provide evidence of the viability of the proposed approach and help to clarify the rationale behind the chosen model and its implementation.

1. Introduction

Despite the wide range of potential applications, the fast-growing field of humanoid robotics still poses several interesting challenges, both in terms of mechanics and autonomous control. Indeed, in humanoid robots, sensor and actuator arrangements determine a highly redundant system which is traditionally difficult to control, and hard-coded solutions often do not allow further improvement and flexibility of controllers. Letting those robots learn freely from their own experience is very often regarded as the only real solution that will allow the creation of flexible and autonomous controllers for humanoid robots in the future.

In this paper we aim to present a model of a controller based on neural networks that allows the humanoid robot iCub to autonomously improve its sensorimotor skills. This is achieved by endowing the controller with two interconnected neural networks: a feedforward neural network that directly controls the robot's joints and a recurrent neural network that is able to generate additional imaginary training data by exploiting the sensorimotor skills acquired by the robot. Those new data, in turn, can be used to further improve the performance of the feedforward controller through a simulated mental training.

We use the term imaginary on purpose here, as we take inspiration from the concept of mental imagery in humans. Indeed, we think that among the many hypotheses and models already tested in the field of robot control, the use of mental imagery as a cognitive tool capable of enhancing a robot's performance is both innovative and well grounded in experimental data.
As a matter of fact, much psychological research to date clearly demonstrates the tight connection between mental training and motor performance improvement, as well as the primary role of mental imagery in motor performance and in supporting human high-level cognitive capabilities involved in complex motor control and the anticipation of events.

In psychology, a mental image is defined as an internal experience that resembles a real perceptual experience and that occurs in the absence of appropriate external stimuli, while motor imagery has been defined as mentally simulating a given action without actual execution. The role of mental imagery has been researched extensively over the past 50 years in areas of motor learning and psychology. Nevertheless, although the effects of mental practice on physical performance have been well established, the processes involved and explanations are still largely unclear. Therefore, understanding the process behind the human ability to create mental images and motor simulation is still a crucial issue, both from a scientific and a computational point of view.

The rest of the paper is organized as follows: Section 2 reviews some previous work about performance improvement in human and humanoids through mental imagery. Section 3 presents materials and methods used in this work by describing in detail the humanoid robot platform, the control systems, and the experimental scenario. Section 4 shows experimental results for two case studies where we studied and analyzed the performance of two different neural architectures and tested the effect of the artificial mental practice to enhance motor performance in the robotic scenario. In Section 4.3 we discuss the results obtained and the impact of using artificial mental practice for improving motor performance of robots and humans.

2. Mental imagery and performance improvements in human and humanoids

The processes behind the human ability to create mental images have recently become an object of renewed interest in cognitive science, and much evidence from empirical studies demonstrates the relationship between bodily experiences and mental processes that actively involve body representations. Neuropsychological research has widely shown that the same brain areas are activated when seeing or recalling images (Ishai, Ungerleider, & Haxby, 2000) and that areas controlling perception are also recruited for generating and maintaining mental images in the working memory. Jeannerod (1994) observed that the primary motor cortex M1 is activated during the production of motor images, as well as during the production of active movements. This fact can explain some interesting psychological results, such as the fact that the time needed for an imaginary and mentally simulated walk is proportional to the time spent in performing the real action (Decety, Jeannerod, & Prablanc, 1989). Indeed, as empirical evidence suggests, the imagined movements of body parts rely strongly on mechanisms associated with the actual execution of the same movements (Jeannerod, 2001).

These studies, together with many others (Markman, Klein, & Suhr, 2009), demonstrate how the images that we have in mind might influence movement execution and the acquisition and refinement of motor skills. For this reason, understanding the relationship that exists between mental imagery and motor activities has become a relevant topic in domains in which improving motor skills is crucial for obtaining better performance, such as in sport and rehabilitation. Therefore, it is also possible to exploit mental imagery for improving a human's motor performance, and this is achieved thanks to special mental training techniques.
Mental training is widely used among professional athletes, and many researchers began to study its beneficial effects for the rehabilitation of patients, in particular after cerebral lesions. In Munzert, Lorey, and Zentgraf (2009), for example, the authors analyzed motor imagery during mental training procedures in patients and athletes. Their findings support the notion that mental training procedures can be applied as a therapeutic tool in rehabilitation and in applications for empowering standard training methodologies.

Other studies have shown that motor skills can be improved through mental imagery techniques. In Jeannerod and Decety (1995), for example, the authors discuss how training based on mental simulation can influence motor performance in terms of muscular strength and reduction of variability. In Yue and Cole (1992), a convincing result is presented, showing that imaginary fifth finger abductions led to an increased level of muscular strength. The authors note that the observed increment in muscle strength is not due to a gain in muscle mass. Rather, it is based on higher-level changes in cortical maps, presumably resulting in a more efficient recruitment of motor units. Those findings are in line with other studies, specifically focused on motor imagery, which have shown enhancement of mobility range (Mulder, Zijlstra, Zijlstra, & Hochstenbach, 2004) or increased accuracy (Yágüez et al., 1998) after mental training. Interestingly, such effects operate both ways: mental imagery can influence motor performance, and the extent of physical practice can change the areas activated by mental imagery (e.g. Takahashi et al., 2005).

As a result of these studies, new opportunities for the use of mental training have opened up in collateral fields, such as medical and orthopedic-traumatologic rehabilitation.
For instance, mental practice has been used to rehabilitate motor deficits in a variety of neurological disorders (Jackson, Lafleur, Malouin, Richards, & Doyon, 2001). Mental training can be successfully applied in helping a person to regain lost movement patterns after joint operations or joint replacements and in neurological rehabilitation. Mental practice has also been used in combination with actual practice to rehabilitate motor deficits in a patient with sub-acute stroke (Verbunt et al., 2008), and several studies have also shown improvement in strength, function, and use of both upper and lower extremities in chronic stroke patients (Nilsen, Gillen, & Gordon, 2010; Page, Levine, & Leonard, 2007).

In sport, the beneficial effects of mental training for the performance enhancement of athletes are well established, and several works focus on this topic with tests, analyses and new training principles, e.g. Morris, Spittle, and Watt (2005), Rushall and Lippman (1998), Sheikh and Korn (1994) and Weinberg (2008). In Hamilton and Fremouw (1985), for example, a cognitive-behavioral training program was implemented to improve the free-throw performance of college basketball players, finding improvements of over 50%. Furthermore, the trial in Smith, Holmes, Whitemore, Collins, and Devonport (2001), where mental imagery is used to enhance the training phase of hockey athletes to score a goal, showed that imaginary practice allowed athletes to achieve better performance. Despite the fact that there is ample evidence that mental imagery, and in particular motor imagery, contributes to enhancing motor performance, the topic still attracts new research, such as Guillot, Nadrowska, and Collet (2009), who investigated the effect of mental practice to improve game plans or strategies of play in open skills in a trial with 10 female pickers. Results of the trial support the assumption that motor imagery may lead to improved motor performance in open skills when compared to the no-practice condition. Another recent paper (Wei & Luo, 2010) demonstrated that sports experts showed more focused activation patterns in pre-frontal areas while performing imagery tasks than novices.

Interestingly, when it comes to robotics and artificial systems, the fact that mental training and mental imagery techniques are widening their application potential in humans has only recently attracted the attention of researchers in humanoid robotics. A model-based learning approach for mobile robot navigation was presented in Tani (1996), where it is discussed how a behavior-based robot can construct a symbolic process that accounts for its deliberative thinking processes using internal models of the environment.
The approach is based on a forward modeling scheme using recurrent neural learning, and results show that the robot is capable of learning grammatical structure hidden in the geometry of the workspace from the local sensory inputs through its navigational experiences.

Experiments on internal simulation of perception using ANN robot controllers are presented in Ziemke, Jirenhed, and Hesslow (2005). The paper focuses on a series of experiments in which FFNNs were evolved to control collision-free corridor following behavior in a simulated Khepera robot and predict the next time step's sensory input as accurately as possible. The trained robot is in some cases actually able to move blindly in a simple environment for hundreds of time steps, successfully handling several multi-step turns.

In Nishimoto and Tani (2009), the authors present a neuro-robotics experiment in which developmental learning processes of the goal-directed actions of a robot were examined. The robot controller was implemented with a Multiple Timescales RNN model, which is characterized by the co-existence of slow and fast dynamics in generating anticipatory behaviors. Through the iterative tutoring of the robot for multiple goal-directed actions, interesting developmental processes emerged. It was observed that behavior primitives are self-organized in the fast context network part earlier and sequencing of them appears later in the slow context part. It was also observed that motor images are generated in the early stage of development.

A recent paper (Gigliotta, Pezzulo, & Nolfi, 2011) shows how simulated robots evolved for the ability to display a context-dependent periodic behavior can spontaneously develop an internal model and rely on it to fulfill their task when sensory stimulation is temporarily unavailable. Results suggest that internal models might have arisen for behavioral reasons and successively adapted for other cognitive functions.
Moreover, the obtained results suggest that self-generated internal states should not necessarily match in detail the corresponding sensory states and might rather encode more abstract and motor-oriented information.

In a previous paper (Di Nuovo, Marocco, Di Nuovo, & Cangelosi, 2011), the authors presented preliminary results on the use of artificial Recurrent Neural Networks (RNN) to model spatial imagery in cognitive robotics. In a soccer scenario, in which the task was to acquire the goal and shoot, the robot showed the capability to estimate the position in the field from its proprioceptive and visual information. In another recent work (Di Nuovo, De La Cruz, Marocco, Di Nuovo, & Cangelosi, 2012), the authors present new results showing that the robot used is able to imagine or mentally recall and accurately execute movements learned in previous training phases, strictly on the basis of the verbal commands. Further tests show that data obtained with imagination could be used to simulate mental training processes, such as those that have been employed with human subjects in sports training, in order to enhance precision in the performance of new tasks through the association of different verbal commands.

3. Modeling autonomous learning through mental imagery

In this paper, we present an implementation of a general model for robot control that allows autonomous learning through mental imagery. This model is based on a modular architecture in which each module has a neural network performing different tasks. The two networks in the proposed architecture are trained simultaneously using the same information, but only one acts directly as a robot controller, while the other is used to generate imagined data in order to build an additional training set, which allows mental training for the controller network.
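The data flow just described (real examples train an imagery module, which then produces imagined examples that extend the controller's training set) can be illustrated with a deliberately simplified sketch. Everything here is an assumption made for illustration: a least-squares fit of duration against inverse velocity stands in for the recurrent imagery network, the function names are hypothetical, and the "real" examples are the slow-range benchmark values reported in Table 1. It is not the networks used in the paper.

```python
# Real experience: (angular velocity in deg/s, duration in s) for the slow range,
# taken from the benchmark values in Table 1.
SLOW_EXAMPLES = [(160, 0.755), (200, 0.605), (240, 0.505), (280, 0.433),
                 (320, 0.380), (360, 0.338), (400, 0.305)]


def fit_imagery_model(examples):
    """Least-squares fit of duration = a / velocity + b.

    This simple regression is only a stand-in for the recurrent imagery network.
    """
    xs = [1.0 / v for v, _ in examples]
    ys = [d for _, d in examples]
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    a = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
         / sum((x - mean_x) ** 2 for x in xs))
    b = mean_y - a * mean_x
    return lambda velocity: a / velocity + b


def imagine_examples(model, velocities):
    """Generate imagined (velocity, duration) pairs for velocities never experienced."""
    return [(v, model(v)) for v in velocities]


imagery = fit_imagery_model(SLOW_EXAMPLES)
imagined = imagine_examples(imagery, range(440, 841, 40))   # fast range only
augmented_training_set = SLOW_EXAMPLES + imagined            # used for "mental training"
```

The controller would then be retrained on `augmented_training_set`, which now covers velocities outside the range it actually experienced.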
To this end, the former network should have the best performance in controlling the robot, while the latter should be the best suited to build new data from conditions not experienced before. In other words, the first network should be able to use the available information to obtain the values of certain system parameters with the lowest possible error, while the second network should be able to use real information to generalize and create new imagined information, such as for data ranges not present in the real learning set, as in the case discussed in this paper. Fig. 1 presents the proposed modular architecture, which comprises two neural networks to model action control and autonomous learning through mental imagery.

To test the model, we built an implementation of it and tested it by controlling the iCub robot platform. The experimental scenario is the realization of a ballistic movement of the right arm, with the aim to throw a small object as far as possible according to an externally given velocity for the movement. Ballistic movements can be defined as rapid movements initiated by muscular contraction and continued by momentum (Bartlett, 2007). These movements are typical in sport actions, such as throwing and jumping (e.g. a soccer kick, a tennis serve or a boxing punch). From the brain control point of view, definitions of ballistic movements vary according to different fields of study. As Gan and Hoffmann (1988) noted, some psychologists refer to ballistic movements as rapid movements where there is no visual feedback (e.g. Welford, 1968), while some neurophysiologists classify them as rapid movements in the absence of corrective feedback of exteroceptive origin, such as visual and auditory (e.g. Denier Van Der Gon & Wadman, 1977).
In this article, we focus on two main features that characterize a ballistic movement: (1) it is executed by the brain with a pre-defined order which is fully programmed before the actual movement realization and (2) it is executed as a whole and will not be subject to interference or modification until its completion. This definition of ballistic movement implies that proprioceptive feedback is not needed to control the movement and that its development is only based on starting conditions (Zehr & Sale, 1994).

3.1. The humanoid robot platform and the experimental scenario

The robotic model used for the experiments presented here is the iCub humanoid robot controlled by artificial neural networks. The iCub is an open-source humanoid robot platform designed to facilitate developmental robotics research, e.g. Sandini, Metta, and Vernon (2007). This platform is a child-like humanoid robot 1.05 m tall, with 53 degrees of freedom distributed in the head, arms, hands and legs. A computer simulation model of the iCub has also been developed (Tikhanoff et al., 2008) with the aim to reproduce the physics and the dynamics of the physical iCub as accurately as possible. The simulator allows the creation of realistic physical scenarios in which the robot can interact with a virtual environment. Physical constraints and interactions that occur between the environment and the robot are simulated using a software library that provides an accurate simulation of rigid body dynamics and collisions.

For the present experiments we used the iCub simulator to run all the experiments and testing, as it is currently dangerous for the robot's mechanics to perform movements at the speed required by the present scenario. As stated earlier, the task of the robot was to throw a small cube, with 2 cm sides and a weight of 40 g, to a given distance indicated by the experimenter.
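Since the task is to throw the object as far as possible, textbook projectile motion gives a useful point of reference. The sketch below is a simplification under stated assumptions: no air drag, launch and landing at the same height, and a hypothetical release speed of 5 m/s that does not come from the paper. Under those assumptions the horizontal range is v² sin(2θ) / g, which is largest at a 45° launch angle.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2


def flight_range(speed, angle_deg, g=G):
    """Horizontal range of a drag-free projectile launched and landing at the same height."""
    theta = math.radians(angle_deg)
    return speed ** 2 * math.sin(2.0 * theta) / g


# Scan whole-degree launch angles for a hypothetical 5 m/s release speed.
best_angle = max(range(0, 91), key=lambda a: flight_range(5.0, a))
```

In the simulated robot the object is released above the ground and the arm geometry matters, so this idealized formula only motivates the choice of release angle; it does not predict the distances measured in the experiments.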
As the distance is directly related to the angular velocity of the shoulder pitch, we decided to use the angular velocity as the external input provided to the robot to indicate the distance. During the throwing movement, joint angle information over time was sampled in order to build 25 input-output sequences corresponding to different velocities of the movement, that is, different distances. For modeling purposes and to simplify the motor implementation of the ballistic movement, we used only two of the available degrees of freedom in this work: the shoulder pitch and the hand wrist pitch. In addition, in order to model the throw of the object, a primitive action already available in the iCub simulator was used that allows the robot to grab and release an object attached to its palm. This primitive produces a non-realistic behavior, as the object is virtually glued [...] behavior by reducing the computation of the physical movements required in closing and opening the hand and the computation of the numerous collisions between the object and the bodies that constitute the robot's hand: a computational limitation which is obviously not relevant in a hypothetical implementation of the task with the real robot.

Fig. 2 presents the throwing movement, which can be divided into three main phases:

1. Preparation. In this phase the object is grabbed and the shoulder pitch is positioned 90° backward. The right hand wrist is also moved up to the 90° position.
2. Acceleration. The shoulder pitch motor accelerates until a given angular velocity is reached. Meanwhile, the right hand wrist rotates down with an angular velocity that is half that of the shoulder pitch.
3. Release. In this phase the object is released and the movement is stopped. It is crucial for the task that the release point is as close as possible to the ideal point, so as to maximize the distance covered by the object before hitting the ground.

According to the iCub platform reference axes, the positive direction of the shoulder pitch is backward. For this reason, since the movement is done forward, angular velocities and release points are negative. According to ballistics theory, the ideal release angle is 45°. However, the elbow and wrist positions, which account for 15°, must be taken into account, so the ideal release point is when the shoulder pitch is at 30° and the wrist pitch at 15°. It should be noted here that, since ballistic movements are by definition not affected by external interferences, the training can be performed without considering the surrounding environment, as well as vision and auditory information.

Fig. 2. Three phases of the movement: (left) Preparation phase: the object is grabbed and shoulder and wrist joints are positioned at 90°; (center) Acceleration phase: the shoulder joint accelerates until a given angular velocity is reached, while the wrist rotates down; (right) Release phase: the object is released and thrown away.

3.2. Simulating mental training

As said above, the robot's cognitive system is made of a feedforward neural network (FFNN) that controls the actual robot's joints, which is coupled with a sort of imagery module modeled through a particular implementation of a recurrent neural network. The choice of the FFNN architecture as a controller was made according to the nature of the problem, which does not need proprioceptive feedback during movement execution. Therefore, the FFNN sets the duration of the entire movement according to the given speed in input, and the robot's arm is activated according to the duration indicated by the FFNN. On the other hand, the RNN was chosen because of its capability to predict and extract new information from previous experiences, so as to produce new imaginary training data which are used to integrate the training set of the FFNN.

3.2.1. Feedforward neural network for robot control

The robot is controlled by software that runs in parallel with the simulator. The controller is a FFNN trained to predict the duration of the movement for a given angular velocity in input. The FFNN comprises one neuron in the input layer, which is fed with the desired angular velocity of the shoulder by the experimenter, four neurons in the hidden layer and one neuron in the output layer. The output unit encodes the duration of the movement given the velocity in input. Activations $y_i$ of hidden and output neurons are calculated by passing the net input $u_i$ to the sigmoid function, as described in Eqs. (1) and (2), where $w_{ij}$ is the synaptic weight that connects neuron i with neuron j and $k_i$ is the bias of neuron i.

We used a classic back-propagation algorithm as the learning process (Rumelhart, Hinton, & Williams, 1988), the goal of which is to find optimal values of synaptic weights that minimize the error E, defined as the error between the training set and the actual output generated by the network. The error function E is calculated as follows:

\[ E = \frac{1}{2} \sum_{i=1}^{p} (t_i - y_i)^2 \qquad (3) \]

where p is the number of outputs, $t_i$ is the desired activation value of the output neuron i and $y_i$ is the actual activation of the same neuron produced by the neural network, calculated using Eqs. (1) and (2).
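A minimal sketch of a controller of this shape (one input, four hidden sigmoid units, one sigmoid output) together with the squared-error measure E and one gradient-descent weight update of the kind classic back-propagation performs. The class and function names are hypothetical, and the exact net-input form (weighted sum minus bias) is an assumption, since only the sigmoid and the roles of the weights and biases are stated in the text.

```python
import math
import random


def sigmoid(u):
    """Logistic activation applied to a net input u."""
    return 1.0 / (1.0 + math.exp(-u))


def squared_error(targets, outputs):
    """E = 1/2 * sum_i (t_i - y_i)^2 over the output units."""
    return 0.5 * sum((t - y) ** 2 for t, y in zip(targets, outputs))


class TinyFFNN:
    """1-4-1 feedforward net: one input, four hidden units, one output."""

    def __init__(self, n_hidden=4, seed=0):
        rng = random.Random(seed)
        self.w_hid = [rng.uniform(-0.1, 0.1) for _ in range(n_hidden)]  # input -> hidden
        self.k_hid = [rng.uniform(-0.1, 0.1) for _ in range(n_hidden)]  # hidden biases
        self.w_out = [rng.uniform(-0.1, 0.1) for _ in range(n_hidden)]  # hidden -> output
        self.k_out = rng.uniform(-0.1, 0.1)                             # output bias

    def forward(self, x):
        # ASSUMPTION: net input = weighted sum of incoming activations minus the bias.
        hidden = [sigmoid(w * x - k) for w, k in zip(self.w_hid, self.k_hid)]
        u_out = sum(w * h for w, h in zip(self.w_out, hidden)) - self.k_out
        return hidden, sigmoid(u_out)

    def train_step(self, x, target, eta=0.1):
        """One gradient-descent update of E on a single (input, target) pair."""
        hidden, y = self.forward(x)
        delta_out = (target - y) * y * (1.0 - y)
        # Hidden deltas use the output weights as they were before this update.
        delta_hid = [h * (1.0 - h) * delta_out * w
                     for w, h in zip(self.w_out, hidden)]
        for i, h in enumerate(hidden):
            self.w_out[i] += eta * delta_out * h
            self.w_hid[i] += eta * delta_hid[i] * x
            self.k_hid[i] -= eta * delta_hid[i]  # bias enters the net input with a minus sign
        self.k_out -= eta * delta_out


# Usage: a normalized velocity of 0.5 mapped to a normalized duration of 0.3.
net = TinyFFNN()
error_before = squared_error([0.3], [net.forward(0.5)[1]])
for _ in range(200):
    net.train_step(0.5, 0.3)
error_after = squared_error([0.3], [net.forward(0.5)[1]])
```

Because the output unit is a sigmoid, targets have to lie in (0, 1), which is why inputs and targets are assumed to be normalized before training.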
During the training phase, the synaptic weights at learning step n+1 are updated using the error calculated at the previous learning step n, which in turn depends on the error E. Synaptic weights and biases were updated according to Eq. (4):

\[ \Delta w_{ij}(n+1) = \eta \, \delta_i \, y_j(n) \qquad (4) \]

where $w_{ij}$ is the synaptic weight that connects neuron i with neuron j, $y_j$ is the activation of neuron j, $\eta$ is the learning rate, and $\delta_i$ is calculated by using Eq. (5) for the output neurons:

\[ \delta_i = (t_i - y_i) \, y_i \, (1 - y_i) \qquad (5) \]

and Eq. (6) for the hidden neurons:

\[ \delta_i = y_i \, (1 - y_i) \sum_{j=1}^{M} \delta_j \, w_{ji} \qquad (6) \]

where M is the number of output neurons. The learning phase lasts one million ($10^6$) epochs with a learning rate of 0.1 and without a momentum factor, as better results were obtained this way. The network parameters are initialized with randomly chosen values in the range [−0.1, 0.1]. The FFNN is trained by providing a desired angular velocity of the shoulder joint as input and the movement duration as output. After the duration is set by the FFNN, the arm is activated according to the desired velocity and for the duration indicated by the FFNN output. Therefore, the duration is not updated during the acceleration phase. In this way, following the current neuro-biological findings on ballistic movement in humans (Zehr & Sale, 1994), the duration of the movement is completely set in the preparation phase and the release will happen when the time elapsed is equal to the predicted duration.

3.2.2. Dual recurrent neural network for artificial mental imagery

The artificial neural network architecture we used to model mental imagery is depicted in Fig. 3. It is a three-layer Dual Recurrent Neural Network (DRNN) with two recurrent sets of connections, one from the hidden layer and one from the output layer, which feed directly to the hidden layer through a set of context units added to the input layer. At each iteration of the neural network, the context units hold a copy of the previous values of the hidden and output units. This creates an internal memory of the network, which allows it to exhibit dynamic temporal behavior (Botvinick & Plaut, 2006). It should be noted that, in preliminary experiments, this architecture showed better performance and improved stability with respect to classical Elman (1990) or Jordan (1986) architectures. Those architectures only include one recurrent set of connections, respectively from the hidden layer or from the output layer (results not shown).

The DRNN comprises 5 output neurons, 20 neurons in the hidden layer, and 31 neurons in the input layer (6 of them encode the proprioceptive inputs from the robot's joints and 25 are the context units). Neuron activations are computed according to Eqs. (1) and (2). The six proprioceptive inputs encode, respectively: the shoulder pitch angular velocity (constant during the movement), positions of shoulder pitch and hand wrist pitch (at time t), elapsed time, expected duration time (at time t) and the grab/release command (0 if the object is grabbed, 1 otherwise).

Fig. 3. Recurrent neural network architecture. In imaginary mode proprioceptive input is obtained from previous motor outputs through the dotted link, while links with real proprioceptive input and motor/actuators (hatched boxes) are inhibited.
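The layer sizes and the role of the context units can be made concrete with a small sketch of one activation step. This is an illustrative reading of the architecture, not the authors' code: the names are hypothetical, the weights are random rather than trained, the context is assumed to start at zero, and the net-input form (weighted sum minus bias) is an assumption.

```python
import math
import random

N_PROPRIO, N_HIDDEN, N_OUT = 6, 20, 5
N_CONTEXT = N_HIDDEN + N_OUT      # 25 context units: copies of hidden and output units
N_IN = N_PROPRIO + N_CONTEXT      # 31 units in the input layer


def sigmoid(u):
    return 1.0 / (1.0 + math.exp(-u))


def make_weights(n_rows, n_cols, rng):
    """n_rows x n_cols matrix of small random weights (untrained, for illustration)."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(n_cols)] for _ in range(n_rows)]


def layer(weights, biases, inputs):
    # ASSUMPTION: net input = weighted sum minus bias, passed through the sigmoid.
    return [sigmoid(sum(w * x for w, x in zip(row, inputs)) - k)
            for row, k in zip(weights, biases)]


class DualRecurrentNet:
    def __init__(self, seed=0):
        rng = random.Random(seed)
        self.w_hid = make_weights(N_HIDDEN, N_IN, rng)
        self.k_hid = [0.0] * N_HIDDEN
        self.w_out = make_weights(N_OUT, N_HIDDEN, rng)
        self.k_out = [0.0] * N_OUT
        self.context = [0.0] * N_CONTEXT   # ASSUMPTION: empty memory at sequence start

    def step(self, proprio):
        """One activation step: 6 proprioceptive values in, 5 predictions out."""
        if len(proprio) != N_PROPRIO:
            raise ValueError("expected 6 proprioceptive inputs")
        hidden = layer(self.w_hid, self.k_hid, list(proprio) + self.context)
        output = layer(self.w_out, self.k_out, hidden)
        # The context units keep a copy of this step's hidden and output activations
        # and feed them to the hidden layer at the next step.
        self.context = hidden + output
        return output


drnn = DualRecurrentNet()
first = drnn.step([0.5] * N_PROPRIO)
second = drnn.step([0.5] * N_PROPRIO)   # same input, but the context now differs
```

Feeding the same input twice generally gives different outputs, because the second step also sees the stored hidden and output activations from the first.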
The five outputs encode the predictions of shoulder and wrist positions at the next time step, the estimation of the elapsed time, the estimation of the movement duration and the grab/release state. The DRNN retrieves information from joint encoders to predict the movement duration during the acceleration phase. The activation time step of the DRNN is 30 ms. The object is released when, at time $t_1$, the predicted time to release, $t_p$, is lower than the next step time $t_2$.

The error for training purposes is calculated as in Eq. (3) and the back-propagation algorithm described above is used to update synaptic weights and neuron biases, with a learning rate ($\eta$) of 0.2 and a momentum factor ($\alpha$) of 0.6, according to the following Eq. (7):

\[ \Delta w_{ij}(n+1) = \eta \, \delta_i \, y_j + \alpha \, \Delta w_{ij}(n) \qquad (7) \]

where $\delta_i$ is the error of the unit i calculated at the previous learning step n. To calculate the error at the first step of the back-propagation algorithm, network parameters are initialized with randomly chosen values in the range [−0.1, 0.1]. The training lasts 10 000 epochs.

The main difference between the FFNN and the DRNN is that, in the latter case, the training set consists of a series of input-output sequences. To model mental imagery, the outputs related to the motor activities are redirected to the corresponding inputs with an additional recurrent connection (the dotted one in Fig. 3), which is only activated when we ask the robot to mentally simulate the throwing of the object according to a certain angular velocity, i.e. when the network is used to model the imaginative process. In this particular imaginary mode, external connections from and to joint encoders and motor controllers are inhibited. These connections are shown in hatched boxes in Fig. 3. When the DRNN is used to create new training data for the FFNN, only the last element of the imagined series is taken into account, as the duration output is maintained constant after the throw event.

3.2.3. Training data structure and generation

In order to generate the data for training the networks, a Reference Benchmark Controller (RBC) was implemented. This controller plays the role of an expert tutor that provides examples for the neural controller. The algorithm works as follows. During the acceleration phase the controller calculates and updates the duration by checking the shoulder pitch position with a refresh rate of 30 ms (benchmark timestep). In this way, it is able to predict the exact time to send the release command and throw the object. To deal with the uncertainty determined by the 30 ms refresh rate, if the predicted time to release $t_p$ falls between two benchmark timesteps $t_1$ and $t_2$, the update is stopped at $t_1$ and the release command is sent at $t_p$. This controller was used both to collect datasets to train the neural controller and to test the performance when the FFNN was autonomously controlling the robot in the simulator. In the preparation phase, the RBC, as well as the DRNN, sets the angular velocity of the shoulder pitch, then in the acceleration phase retrieves information from the joints to predict when the object should be released to reach the maximum distance. The simulator timestep was set to 10 ms.

The dataset used during the learning phase of both the FFNN and the DRNN was built with data collected during the execution of the RBC with angular velocity of the shoulder pitch ranging from 150 to 850°/s and using a step of 25°/s. Thus the learning dataset comprises 22 input/output series. Similarly, the testing dataset was built in the range from 160 to 840°/s and using a step of 40°/s, and it comprises 18 input/output series. Each example in the learning and testing datasets for the FFNN is one pair of data: the angular velocity of the shoulder as input and the execution time, i.e. duration, as desired output. The learning and testing datasets for the DRNN comprise sequences of 25 elements collected using a timestep of 0.03 s.
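The release rule tied to the 30 ms benchmark timestep, and the size of the testing grid, can be checked with a few lines. This is a minimal sketch with hypothetical function names. Times are handled as integer milliseconds, which is a choice made here to avoid floating-point rounding when dividing by the timestep, not something stated in the paper.

```python
BENCHMARK_STEP_MS = 30   # refresh rate of the benchmark controller


def release_window(tp_ms, step_ms=BENCHMARK_STEP_MS):
    """Return (t1, t2): the benchmark timesteps that bracket the predicted release time."""
    t1 = (tp_ms // step_ms) * step_ms
    return t1, t1 + step_ms


def should_release(now_ms, tp_ms, step_ms=BENCHMARK_STEP_MS):
    """True when the predicted release time is lower than the next step time.

    At that point the update loop stops and the release is scheduled for tp.
    """
    return tp_ms < now_ms + step_ms


# Testing grid of shoulder-pitch velocities: 160 to 840 deg/s in steps of 40 deg/s.
test_velocities = list(range(160, 841, 40))
```

For example, a predicted release time of 305 ms falls between the timesteps at 300 ms and 330 ms, so the controller would stop updating at 300 ms and send the release command at 305 ms. The grid contains 18 velocities, matching the 18 testing series.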
All data in both learning and testing datasets are normalized in the range [0, 1]. For the purpose of the present work, we split both the learning and testing datasets into two subsets according to the duration of the movement:

- Slow range subset, which comprises examples of movements with duration greater than 0.3 s.
- Fast range subset, which comprises examples of movements with duration less than 0.3 s.

Both learning and testing datasets have 7 examples in the slow subset and 11 examples in the fast subset. The labels slow and fast are assigned on the basis of the angular velocity of the shoulder pitch: its absolute value is less than 400°/s for the slow subset and more than 400°/s for the fast subset. It should be noted that the labels slow and fast are only given to better differentiate the two subsets with respect to the whole set. Indeed, movements in the slow range are also fast movements from a broader point of view. The slow subset in the test set is smaller than the fast subset because we want to study the performance of the proposed model with fast range cases, which end with an angular velocity more than double the maximum in the slow subset.

Table 1 Test cases and results with reference benchmark algorithm.

| Test case | Type | Angular velocity (°/s) | Benchmark duration (s) | Benchmark release point (°) | Benchmark distance (m) |
|---|---|---|---|---|---|
| 1 | Slow | 160 | 0.755 | 29.994 | 0.506 |
| 2 | Slow | 200 | 0.605 | 29.989 | 0.609 |
| 3 | Slow | 240 | 0.505 | 29.983 | 0.684 |
| 4 | Slow | 280 | 0.433 | 30.377 | 0.818 |
| 5 | Slow | 320 | 0.380 | 31.568 | 0.959 |
| 6 | Slow | 360 | 0.338 | 32.360 | 1.094 |
| 7 | Slow | 400 | 0.305 | 29.951 | 1.233 |
| 8 | Fast | 440 | 0.277 | 28.743 | 1.584 |
| 9 | Fast | 480 | 0.255 | 29.931 | 1.572 |
| 10 | Fast | 520 | 0.235 | 34.712 | 2.017 |
| 11 | Fast | 560 | 0.219 | 33.102 | 2.030 |
| 12 | Fast | 600 | 0.205 | 29.889 | 2.191 |
| 13 | Fast | 640 | 0.192 | 31.472 | 2.476 |
| 14 | Fast | 680 | 0.181 | 32.254 | 2.710 |
| 15 | Fast | 720 | 0.171 | 32.237 | 3.007 |
| 16 | Fast | 760 | 0.163 | 31.421 | 3.308 |
| 17 | Fast | 800 | 0.155 | 29.802 | 3.880 |
| 18 | Fast | 840 | 0.148 | 27.390 | 4.210 |
| Average | Slow range | | 0.474 | 30.603 | 0.843 |
| Average | Fast range | | 0.200 | 30.996 | 2.635 |
| Average | Full range | | 0.307 | 30.843 | 1.938 |

Table 2 Full range training: comparison of average results of feedforward and recurrent artificial neural nets.

| Test range type | Feedforward net duration (s) | Error (%) | Feedforward net release point (°) | Error (%) | Recurrent net duration (s) | Error (%) | Recurrent net release point (°) | Error (%) |
|---|---|---|---|---|---|---|---|---|
| Slow | 0.472 | 1.75 | 30.718 | 6.46 | 0.482 | 3.60 | 33.345 | 11.96 |
| Fast | 0.202 | 0.87 | 31.976 | 6.34 | 0.194 | 5.67 | 28.088 | 22.18 |
| Full | 0.307 | 1.21 | 31.486 | 6.39 | 0.306 | 4.86 | 30.132 | 18.21 |

Table 3 FFNN: comparison of average performance improvement with artificial mental training.

| Test range type | Slow range only training: duration (s) | Error (%) | Slow range only training: release point (°) | Error (%) | Slow range plus mental training: duration (s) | Error (%) | Slow range plus mental training: release point (°) | Error (%) |
|---|---|---|---|---|---|---|---|---|
| Slow | 0.474 | 1.38 | 30.603 | 7.88 | 0.471 | 1.74 | 30.774 | 8.18 |
| Fast | 0.247 | 26.92 | 64.950 | 111.72 | 0.188 | 7.12 | 20.901 | 35.89 |
| Full | 0.335 | 16.99 | 51.593 | 71.34 | 0.298 | 5.03 | 24.741 | 25.11 |

4. Performance improvement through mental imagery

In this section we present and compare results of experiments carried out with the three iCub controllers described in the previous sections, that is the RBC, the FFNN and the DRNN. In particular, in Section 4.1 we compare the performance of the two neural network architectures (FFNN and DRNN) with respect to the reference benchmark algorithm, in order to study the characteristics of both architectures when they are trained with examples that cover the whole input range. In Section 4.2, we show results obtained by training both the FFNN and the DRNN using only the slow training subset. Moreover, the DRNN is used to model the mental training to improve the performance of the FFNN in situations not previously experienced. The results of the experiments are discussed in Section 4.3.

To give greater detail on our experiments, Table 1 shows results on test cases with the reference benchmark algorithm. These data are used for error evaluation in the comparisons presented in Tables 2 and 3. It should be noted that even for the benchmark algorithm it is difficult to release the object at the ideal point, that is when the shoulder pitch is at 30°. This difficulty arises because, after the release command is issued to the iCub simulator port from the control software, there is a variable latency before the command is executed. Since the control software tries to compensate for this delay using its average value, and it stops the movement only when it receives the confirmation that the release command has been executed, a slightly different release point can be observed in all cases. It should be stated that this latency problem is negligible with the simulator, as confirmed by results in Table 1, but its effect would be much more evident with the real robot implementation. However, similar problems can also be found in humans (Rossini, Di Stefano, & Stanzione, 1985); for this reason we believe that the use of a robotic platform helps to collect more realistic data, especially in this work.

4.1. Ballistic movement control

In this subsection we studied, separately, the performance of both of the artificial neural network architectures presented to control the throwing action with respect to the reference benchmark algorithm. Table 2 presents numerical results obtained controlling the iCub robot with the reference benchmark algorithm and with the two artificial neural networks trained using the complete training set, i.e. both slow and fast subsets. Error columns in Table 2 show a comparison of the results obtained with the two neural nets with respect to the benchmark algorithm. The FFNN, despite its simplicity, shows very good performance in the whole range, with an average error of 1.21% in duration prediction. In particular, it is very effective in the fast range, where it achieved just a 0.87% error. As expected, the DRNN performance is worse than that of the FFNN. Indeed, it averages 4.86% error in duration prediction, but this result is mainly due to the fast range, in which the DRNN architecture has problems because the timestep may be too coarse to adequately cover the duration of the fast movements.

The different performance of the two architectures could be used to model different expertise levels in mental simulation of action. In fact, the FFNN could be seen as an expert which is able to predict the right duration before the movement starts and therefore to control the movement efficiently in the whole range of velocity. On the other hand, the DRNN could be seen as a novice that is able to perform well in easy cases, when there is enough time to collect proprioceptive information to efficiently predict the duration of the movement, but whose performance worsens when proprioceptive information starts to degrade.
This result shows that the architecture composed of both networks is able to replicate the behaviors shown in Beilock and Lyons (2009), where the performance of novice and expert golfers was compared against the time needed to perform a swing movement, both with and without imagining the movement. Indeed, when novices are given time to mentally rehearse and imagine the swing movement by focusing on the body movements involved, their performance increases, while the experts' performance is impaired. Conversely, when subjects were asked to perform the movement as fast as possible, experts greatly outperformed novices, who tended to perform worse than normal under time pressure.

Fig. 4 shows in detail the errors on the test set obtained in experiments with the simulator. When trained on the whole training set, the FFNN performs well in both the fast and slow ranges, with an error always lower than 2% (except for the slowest example), while the DRNN has only a few cases in which the error is lower than 2%.

Fig. 4. Error comparison: the error on movement duration on the test set of ballistic movements with different architectures. Dashed lines represent mean values.
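The subset averages used as the reference in Tables 2 and 3 can be recomputed from the per-case benchmark durations of Table 1. The short sketch below does so. The threshold of 400°/s follows the labelling of Table 1, where the 400°/s case is marked as slow, and the variable names are illustrative.

```python
# Benchmark durations (s) for the 18 test cases, copied from Table 1.
velocities = list(range(160, 841, 40))  # 160, 200, ..., 840 deg/s
durations = [
    0.755, 0.605, 0.505, 0.433, 0.380, 0.338, 0.305,  # slow cases 1-7
    0.277, 0.255, 0.235, 0.219, 0.205, 0.192,          # fast cases 8-13
    0.181, 0.171, 0.163, 0.155, 0.148,                 # fast cases 14-18
]

SLOW_MAX_VELOCITY = 400  # Table 1 labels the 400 deg/s case as slow


def mean(values):
    return sum(values) / len(values)


slow = [d for v, d in zip(velocities, durations) if v <= SLOW_MAX_VELOCITY]
fast = [d for v, d in zip(velocities, durations) if v > SLOW_MAX_VELOCITY]

averages = {
    "slow": round(mean(slow), 3),
    "fast": round(mean(fast), 3),
    "full": round(mean(durations), 3),
}
print(averages)  # {'slow': 0.474, 'fast': 0.2, 'full': 0.307}
```

The velocity-based split and the duration-based split (above or below 0.3 s) select the same cases on this test set.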

Therefore, we can conclude that the FFNN outperforms the DRNN when appropriately trained. On the other hand, the DRNN is better than the FFNN at approximating the benchmark curve when both networks are trained solely on the slow range set. Fig. 5 shows that the DRNN curve is very close to the benchmark, while the curves of the FFNN and DRNN start to diverge at 480°/s, i.e. right after the limit we imposed for the slow range, and the difference constantly increases with the angular velocity. This fact indicates that the DRNN is more capable of predicting the temporal dynamics of the movement than the FFNN.

Fig. 5. Duration of movements at different velocities for FFNN and DRNN, in the case in which both networks are trained only on the slow range training set.

Fig. 6. FFNN: comparison of duration of the movement with different training. Blue bars represent error after a full range training, orange bars show error for the network trained with slow range only, yellow bars show error after mental practice training. (For interpretation of the references to colour in this figure legend, the reader is referred to the web version of this article.)

4.2. Artificial mental training for performance improvement

In this section we compare results, reported in Table 3, for the FFNN on three different case studies:

- Full range: the performance obtained by the FFNN when it is trained using the full range of examples, i.e., both slow and fast.
- Slow range only training: the performance obtained by the FFNN when it is trained using only the slow range subset. This case stresses the generalization capability of the controller when it is tested with the fast range subset, which is never experienced during the training.
- Slow range plus mental training: the performance obtained by the FFNN when it is trained on the slow range only and the training set is then integrated with additional data generated by the DRNN.
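The third case follows a three-step pattern: train both models on slow data, let the second model generate imagined fast examples, and retrain the first model on real plus imagined data. The toy sketch below illustrates only this data flow. Both models are deliberately simple stand-ins chosen for this illustration and are assumptions, not the networks of the paper: a straight line plays the role of the FFNN, and a reciprocal model (duration = a/v + b) plays the role of the DRNN. The benchmark durations of Table 1 are used as the real data, and the fast ones are used for evaluation only.

```python
import numpy as np

# Test velocities (deg/s) and benchmark durations (s) from Table 1.
velocities = np.arange(160, 841, 40, dtype=float)
durations = np.array([
    0.755, 0.605, 0.505, 0.433, 0.380, 0.338, 0.305, 0.277, 0.255,
    0.235, 0.219, 0.205, 0.192, 0.181, 0.171, 0.163, 0.155, 0.148,
])
slow = velocities <= 400
fast = ~slow


def fit_controller(v, d):
    """Stand-in for the FFNN (assumption): straight line d = m*v + q."""
    return np.polyfit(v, d, deg=1)


def fit_imagery(v, d):
    """Stand-in for the DRNN (assumption): reciprocal model d = a/v + b."""
    return np.polyfit(1.0 / v, d, deg=1)


# Step 1: train both models on the slow subset only.
controller_slow = fit_controller(velocities[slow], durations[slow])
imagery = fit_imagery(velocities[slow], durations[slow])

# Step 2: "imagine" durations for fast velocities never seen in training.
imagined_fast = np.polyval(imagery, 1.0 / velocities[fast])

# Step 3: retrain the controller on real slow data plus imagined fast data.
v_aug = np.concatenate([velocities[slow], velocities[fast]])
d_aug = np.concatenate([durations[slow], imagined_fast])
controller_mental = fit_controller(v_aug, d_aug)


def fast_error(coeffs):
    """Mean absolute duration error (s) on the fast benchmark cases."""
    pred = np.polyval(coeffs, velocities[fast])
    return float(np.mean(np.abs(pred - durations[fast])))


err_slow_only = fast_error(controller_slow)
err_mental = fast_error(controller_mental)
print(f"slow only: {err_slow_only:.3f} s, with imagined data: {err_mental:.3f} s")
```

Because the stand-ins differ from the actual networks, the numbers this sketch produces are not comparable with Table 3. It only shows why imagined examples from a model that extrapolates well can help a model that does not.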
In the third case, the training set is built by adding to the real slow range dataset a new imagined dataset for the fast range obtained with mental training, i.e. from outputs of the DRNN operating in mental imagery mode. In other words, in this case the two architectures operate together as a single hierarchical architecture, in which first both nets are trained with the slow range subset, then the DRNN runs in mental imagery mode to build a new dataset of fast examples for the FFNN, and finally weights and biases of both nets are updated with an additional training using both the slow range subset and the new imagined one.

Looking at Table 3 we can observe that the additional mental training improved the performance in controlling fast movements. The improvement is impressive (on average about 20% for duration, from 26.92% to 7.12% error in the fast range) and it is very significant for all cases in the fast subset (see Fig. 6). Results show that the generalization capability of the DRNN is better than that of the FFNN, as reported in Fig. 4. This feature helps to feed the FFNN with new data to cover the fast range, simulating mental training. In fact, as can be seen in Fig. 6, the FFNN trained only with the slow subset is not able to foresee the trend of duration in the fast range. This implies that fast movements last longer than needed and, because the inclination angle is over 90°, the object falls backward (Fig. 7). Note that the release point shown in the tables only refers to the position of the shoulder pitch, but, as we said, an additional 15° should be taken into account because of the elbow and hand positions. Thus, a release point over 75° means an inclination angle over 90°.

Fig. 7. Distance reached by the object after throwing movements of varying velocities. Negative values represent the object falling backward.

4.3. Discussion

From the results presented in previous sections we are able to draw some general remarks on the model and on the most appropriate way of taking advantage of artificial mental training in the neural network framework we have chosen for this work.

First of all, the results shown in Fig. 4 suggest that the FFNN appears to be the best architecture to model ballistic movement control, as it outperforms the DRNN in most of the cases when trained on the full training set. Indeed, the DRNN seems to experience problems related to the speed needed to perform fast range movements, since the input-output flow required to compute the joints' next positions is increasingly affected by (a) communication delays between the robot and the controller, and (b) the timestep that regulates the activation of the DRNN, which at fast speeds may be too coarse to correctly sample the duration of the movement.

Secondly, Fig. 5 indicates that the DRNN architecture is better suited for generalization and, therefore, for modeling artificial mental imagery.
In the case in which both networks are trained only on the slow training set, the DRNN outperforms the FFNN in the ability to predict the dynamics of the movement, i.e., the duration, with respect to increasing angular velocities that are completely unknown to the neural network. It is worth noting here that the ability of a DRNN architecture to model temporal dynamics is a general characteristic of this type of neural network. Indeed, in both Di Nuovo et al. (2012) and Di Nuovo et al. (2011) the authors applied a similar neural architecture to different problems: spatial mental imagery in a soccer field, where the robot has to mentally figure out the position of the goal, and mental imagery used to improve the performance of combined torso/arm movements mediated by language. In both cases a DRNN was able to correctly predict the body dynamics of the robot on the basis of incomplete training examples.

The FFNN's failure in predicting temporal dynamics can be explained by the simplistic information used to train it, which seems to be insufficient to reliably predict the duration in a faster range never experienced before. On the contrary, the greater amount of information that comes from proprioception, and the fact that the DRNN has to integrate that information over time in order to perform the movement, make the DRNN able to create a sort of internal model of the robot's body behavior. This allows the DRNN to generalize better and, therefore, to guide the FFNN in enhancing its performance.

This interesting aspect of the DRNN can be partially unveiled by analyzing the internal dynamics of the neural network, which can be done by reducing the complexity of the hidden neuron activations through a Principal Component Analysis. Fig. 8, for example, presents the values of the first principal component at the last timestep, i.e., after the neural network has finished the throwing movement, for all the test cases, both slow and fast, showing that the internal representations are very similar, even when the DRNN is trained with the slow range only. Interestingly, the series shown in Fig. 8 are highly correlated with the duration, which is never explicitly given to the neural network. The correlation is 97.50 for the full range training, 99.41 for slow only and 99.37 for slow plus mental training. This result demonstrates that the DRNN is able to extrapolate the duration from the input sequences and to generalize when operating with new sequences never experienced before.

Similarly, Fig. 9 shows the first principal component of the DRNN in relation to different angular velocities: slow being the slowest test velocity, medium the fastest within the slow range, and fast the fastest velocity tested in the experiment. As can be seen, the DRNN is able to uncover the similarities in the temporal dynamics that link slow and fast cases. Hence, it is able to better approximate the correct trajectory of joint positions even in a situation not experienced before.
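The kind of analysis described above, projecting hidden activations onto their first principal component and correlating the result with the movement duration, can be sketched as follows. The hidden activations here are synthetic placeholders (18 test cases, 10 hidden units, a linear dependence on duration plus noise); these sizes and the data are assumptions made for illustration and do not come from the paper. Only the durations are taken from Table 1.

```python
import numpy as np


def first_principal_component(activations):
    """Project samples (rows) onto their first principal component."""
    centered = activations - activations.mean(axis=0)
    # Rows of vt are the principal directions, ordered by explained variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[0]


# Benchmark durations (s) of the 18 test cases, from Table 1.
durations = np.array([
    0.755, 0.605, 0.505, 0.433, 0.380, 0.338, 0.305, 0.277, 0.255,
    0.235, 0.219, 0.205, 0.192, 0.181, 0.171, 0.163, 0.155, 0.148,
])

# Synthetic stand-in for final-timestep hidden activations (assumption).
rng = np.random.default_rng(0)
weights = rng.normal(size=10)
hidden = np.outer(durations, weights) + 0.001 * rng.normal(size=(18, 10))

pc1 = first_principal_component(hidden)
# The sign of a principal component is arbitrary, so compare magnitudes.
correlation = abs(np.corrcoef(pc1, durations)[0, 1])
print(f"|correlation| between PC1 and duration: {correlation:.4f}")
```

With real recordings, `hidden` would hold one row per test case with the hidden unit values logged at the last timestep of the movement.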

Fig. 9. DRNN hidden units activation analysis. Lines represent the values of the first principal component for a slow velocity (blue), a medium velocity (green) and a fast velocity (red). (For interpretation of the references to colour in this figure legend, the reader is referred to the web version of this article.)

5. Conclusion and future research directions

In this paper we presented a model of a humanoid robot controller based on neural networks that allows the iCub robot to autonomously improve its sensorimotor skills. This was achieved by endowing the neural controller with a secondary neural system that, by exploiting the sensorimotor skills already acquired by the robot, is able to generate additional imaginary examples that can be used by the controller itself to improve its performance through a simulated mental training. An implementation of this model was presented and tested in controlling the ballistic movement required to throw an object as far as possible for a given velocity of the movement itself. Experimental results with the iCub platform simulator showed that the use of simulated mental training can significantly improve the performance of the robot in ranges not experienced before.

The results presented in this work, in conclusion, allow us to imagine the creation of novel algorithms and cognitive systems that implement the concept of artificial mental training even more effectively. Such a concept appears very useful in robotics, for at least two reasons. First, it helps to speed up the learning process in terms of time resources by reducing the number of real examples and real movements performed by the robot. Second, besides the time issue, the reduction of real examples is also beneficial in terms of costs, because it similarly reduces the probability of failures and damage to the robot while the robot keeps improving its performance through mental simulations.
In the future, we speculate that imagery techniques might be applied in robotics not only for performance improvement, but also for the creation of safety algorithms capable of predicting dangerous joint positions and of stopping the robot's movements before those critical situations actually occur.

Anda mungkin juga menyukai

- Functional Rehabilitation of Some Common Neurological Conditions: A Physical Management Strategy to Optimise Functional Activity LevelDari EverandFunctional Rehabilitation of Some Common Neurological Conditions: A Physical Management Strategy to Optimise Functional Activity LevelBelum ada peringkat

- Psychophysiological assessment of human cognition and its enhancement by a non-invasive methodDari EverandPsychophysiological assessment of human cognition and its enhancement by a non-invasive methodBelum ada peringkat

- Mental PracticeDokumen3 halamanMental PracticeBdk NakalBelum ada peringkat

- MOTOR IMAGERY Tarek Abo Ayoub-1 PDFDokumen5 halamanMOTOR IMAGERY Tarek Abo Ayoub-1 PDFTarek Abo ayoubBelum ada peringkat

- Doyon 2005. Reorganization and Plasticity in The Adult Brain During Learning of Motor SkillsDokumen7 halamanDoyon 2005. Reorganization and Plasticity in The Adult Brain During Learning of Motor SkillsenzoverdiBelum ada peringkat

- Gentili 2015Dokumen12 halamanGentili 2015sunithaBelum ada peringkat

- Neural plasticity during motor learning with motor imagery practiceDokumen18 halamanNeural plasticity during motor learning with motor imagery practiceLaura RugeBelum ada peringkat

- Relationship Between Corticospinal Excitability WHDokumen10 halamanRelationship Between Corticospinal Excitability WHanaBelum ada peringkat

- Bai 2014Dokumen9 halamanBai 2014Karen GuzmanBelum ada peringkat

- Carr & ShepherdDokumen100 halamanCarr & ShepherdСергій Черкасов100% (1)

- Department of Exercise and Sport Science Manchester Metropolitan University, UKDokumen24 halamanDepartment of Exercise and Sport Science Manchester Metropolitan University, UKChristopher ChanBelum ada peringkat

- TMP 2 F22Dokumen6 halamanTMP 2 F22FrontiersBelum ada peringkat

- Neural Signatures of Motor Skill in The Resting BrainDokumen8 halamanNeural Signatures of Motor Skill in The Resting BrainAnooshdini2002Belum ada peringkat

- PsyRes ImaKawDokumen57 halamanPsyRes ImaKawadriancaraimanBelum ada peringkat

- Nre 192931Dokumen11 halamanNre 192931Fabricio CardosoBelum ada peringkat

- 10 1 1 16 1900 PDFDokumen15 halaman10 1 1 16 1900 PDFJisha KuruvillaBelum ada peringkat

- Report-8 1168891Dokumen4 halamanReport-8 1168891NeeharikaBelum ada peringkat

- Enhancing Intelligence: From The Group To The IndividualDokumen20 halamanEnhancing Intelligence: From The Group To The IndividualSebastiánsillo SánchezBelum ada peringkat

- Pzab 250Dokumen9 halamanPzab 250sneha duttaBelum ada peringkat

- Automated Derivation of Movement Primitives Using PCADokumen17 halamanAutomated Derivation of Movement Primitives Using PCAEscuela De ArteBelum ada peringkat

- Motor Imagery - If You Can't Do It, You Won't Think ItDokumen6 halamanMotor Imagery - If You Can't Do It, You Won't Think ItRandy HoweBelum ada peringkat

- HIRADokumen6 halamanHIRAAdenosine DiphosphateBelum ada peringkat

- Artificial Intelligence For Prosthetics - Challenge SolutionsDokumen49 halamanArtificial Intelligence For Prosthetics - Challenge Solutionsnessus joshua aragonés salazarBelum ada peringkat

- Effect of Mental Imagery On The Development of Skilled Motor ActionsDokumen24 halamanEffect of Mental Imagery On The Development of Skilled Motor ActionsfaridBelum ada peringkat

- Adaptive Robotic Neuro-Rehabilitation Haptic Device For Motor and Sensory Dysfunction PatientsDokumen8 halamanAdaptive Robotic Neuro-Rehabilitation Haptic Device For Motor and Sensory Dysfunction PatientssnowdewdropBelum ada peringkat

- Studyprotocol Open Access: Grecco Et Al. BMC Pediatrics 2013, 13:168Dokumen11 halamanStudyprotocol Open Access: Grecco Et Al. BMC Pediatrics 2013, 13:168Ana paula CamargoBelum ada peringkat

- Physio Theraphy by Dickstein 2007Dokumen14 halamanPhysio Theraphy by Dickstein 2007Gary Charles TayBelum ada peringkat

- Motor imagery and swallowing literature reviewDokumen10 halamanMotor imagery and swallowing literature reviewDaniela OrtizBelum ada peringkat

- Use of Robotic Devices in Post-Stroke Rehabilitation: A. A. Frolov, I. B. Kozlovskaya, E. V. Biryukova, and P. D. BobrovDokumen14 halamanUse of Robotic Devices in Post-Stroke Rehabilitation: A. A. Frolov, I. B. Kozlovskaya, E. V. Biryukova, and P. D. BobrovMonica PrisacariuBelum ada peringkat

- Acquisition and Consolidation of Implicit Motor Learning With Physical and Mental Practice Across Multiple Days of Anodal TDCSDokumen10 halamanAcquisition and Consolidation of Implicit Motor Learning With Physical and Mental Practice Across Multiple Days of Anodal TDCSCpeti CzingraberBelum ada peringkat

- Gross Motor Ability Predicts Response To Upper Extremity Rehabilitaion in Chronic StrokeDokumen9 halamanGross Motor Ability Predicts Response To Upper Extremity Rehabilitaion in Chronic StrokeFlorencia WirawanBelum ada peringkat

- Pzab 250Dokumen9 halamanPzab 250DPPT 0017 NURUL MAZIDAH BINTI MOHD ROSDIBelum ada peringkat

- Neuroscience of Exercise - Neuroplasticity and Its Behavioral ConsequencesDokumen3 halamanNeuroscience of Exercise - Neuroplasticity and Its Behavioral ConsequencesTBelum ada peringkat

- Born To Move A Review On The Impact of Physical Exercise On Brain HealthDokumen15 halamanBorn To Move A Review On The Impact of Physical Exercise On Brain HealthVerônica DuquinnaBelum ada peringkat

- Eco Approach To Cog EnhancementDokumen12 halamanEco Approach To Cog EnhancementScott_PTBelum ada peringkat

- Robotic Upper LimbDokumen23 halamanRobotic Upper LimbIlhamFatriaBelum ada peringkat

- Human Musculoskeletal Bio MechanicsDokumen254 halamanHuman Musculoskeletal Bio MechanicsJosé RamírezBelum ada peringkat

- PEM7 Chapter 1Dokumen12 halamanPEM7 Chapter 1Ivan Quin E. ParedesBelum ada peringkat

- Teque S 2017Dokumen20 halamanTeque S 2017NICOLÁS ANDRÉS AYELEF PARRAGUEZBelum ada peringkat

- A Collaborative Brain-Computer Interface For Improving Human PerformanceDokumen11 halamanA Collaborative Brain-Computer Interface For Improving Human Performancehyperion1Belum ada peringkat

- Scalable Muscle-Actuated Human Simulation and Control: Seunghwan Lee, Moonseok Park, Kyoungmin Lee, Jehee LeeDokumen13 halamanScalable Muscle-Actuated Human Simulation and Control: Seunghwan Lee, Moonseok Park, Kyoungmin Lee, Jehee Leesouaifi yosraBelum ada peringkat

- Efectos de La Realidad Virtual en La Plasticidad Neuronal en La Rehabilitacion de Ictus. Revision SistematicaDokumen19 halamanEfectos de La Realidad Virtual en La Plasticidad Neuronal en La Rehabilitacion de Ictus. Revision SistematicaÁngel OlivaresBelum ada peringkat

- HuangDokumen14 halamanHuangNathalie GodartBelum ada peringkat

- Neurofeedback Hampson2019Dokumen5 halamanNeurofeedback Hampson2019Josefina PalmesBelum ada peringkat

- A Review of Upper Limb Robot Assisted Therapy Techniques and Virtual Reality ApplicationsDokumen11 halamanA Review of Upper Limb Robot Assisted Therapy Techniques and Virtual Reality ApplicationsIAES IJAIBelum ada peringkat

- Oti 1403 PDFDokumen9 halamanOti 1403 PDFAnggelia jopa sariBelum ada peringkat

- Haptic Training Method Using A Nonlinear Joint Control: NtroductionDokumen8 halamanHaptic Training Method Using A Nonlinear Joint Control: NtroductionRamanan SubramanianBelum ada peringkat

- 9 - TechnologicalAdvancements in CerebralPalsy RehabilitationDokumen13 halaman9 - TechnologicalAdvancements in CerebralPalsy RehabilitationMariaBelum ada peringkat

- Perceptual Cognitive Training of AthletesDokumen24 halamanPerceptual Cognitive Training of AthletesSports Psychology DepartmentBelum ada peringkat

- Chapter/ Animation Thesis StatementDokumen5 halamanChapter/ Animation Thesis StatementAlfad SonglanBelum ada peringkat

- Cognitive Representation of Human Action: Theory, Applications, and PerspectivesDokumen6 halamanCognitive Representation of Human Action: Theory, Applications, and PerspectivesFederico WimanBelum ada peringkat

- Interplay of Rhythmic and Discrete Manipulation Movements During Development: A Policy-Search Reinforcement-Learning Robot ModelDokumen19 halamanInterplay of Rhythmic and Discrete Manipulation Movements During Development: A Policy-Search Reinforcement-Learning Robot ModeljuanBelum ada peringkat

- Correlation Between Videogame Mechanics and ExecutiveDokumen23 halamanCorrelation Between Videogame Mechanics and ExecutiveChristian F. VegaBelum ada peringkat

- Chapter 8 Study GuideDokumen3 halamanChapter 8 Study Guideapi-242113360Belum ada peringkat

- Gutierrez Et Al-2018-Brain Structure and FunctionDokumen17 halamanGutierrez Et Al-2018-Brain Structure and FunctionThiago CruzBelum ada peringkat

- Neuroimage: T. Marins, E.C. Rodrigues, T. Bortolini, Bruno Melo, J. Moll, F. Tovar-MollDokumen8 halamanNeuroimage: T. Marins, E.C. Rodrigues, T. Bortolini, Bruno Melo, J. Moll, F. Tovar-MollMarcelo MenezesBelum ada peringkat

- Visión Estroboscópica y RendimientoDokumen13 halamanVisión Estroboscópica y RendimientoPrapamobileBelum ada peringkat

- MotorLearning Krakauer Et Al 2019Dokumen51 halamanMotorLearning Krakauer Et Al 2019markBelum ada peringkat

- Functional Cerebral Reorganization Following Motor Sequence Learning Through Mental Practice With Motor ImageryDokumen10 halamanFunctional Cerebral Reorganization Following Motor Sequence Learning Through Mental Practice With Motor ImageryMayrita Antonio UrquidiBelum ada peringkat

- Committee History 50yearsDokumen156 halamanCommittee History 50yearsd_maassBelum ada peringkat

- Correlation Degree Serpentinization of Source Rock To Laterite Nickel Value The Saprolite Zone in PB 5, Konawe Regency, Southeast SulawesiDokumen8 halamanCorrelation Degree Serpentinization of Source Rock To Laterite Nickel Value The Saprolite Zone in PB 5, Konawe Regency, Southeast SulawesimuqfiBelum ada peringkat

- Returnable Goods Register: STR/4/005 Issue 1 Page1Of1Dokumen1 halamanReturnable Goods Register: STR/4/005 Issue 1 Page1Of1Zohaib QasimBelum ada peringkat

- HP HP3-X11 Exam: A Composite Solution With Just One ClickDokumen17 halamanHP HP3-X11 Exam: A Composite Solution With Just One ClicksunnyBelum ada peringkat

- Brochure en 2014 Web Canyon Bikes How ToDokumen36 halamanBrochure en 2014 Web Canyon Bikes How ToRadivizija PortalBelum ada peringkat

- Masteringphys 14Dokumen20 halamanMasteringphys 14CarlosGomez0% (3)

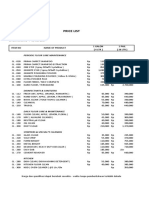

- Price List PPM TerbaruDokumen7 halamanPrice List PPM TerbaruAvip HidayatBelum ada peringkat

- Factors of Active Citizenship EducationDokumen2 halamanFactors of Active Citizenship EducationmauïBelum ada peringkat

- LSMW With Rfbibl00Dokumen14 halamanLSMW With Rfbibl00abbasx0% (1)

- Pasadena Nursery Roses Inventory ReportDokumen2 halamanPasadena Nursery Roses Inventory ReportHeng SrunBelum ada peringkat

- Pfr140 User ManualDokumen4 halamanPfr140 User ManualOanh NguyenBelum ada peringkat

- Technical specifications for JR3 multi-axis force-torque sensor modelsDokumen1 halamanTechnical specifications for JR3 multi-axis force-torque sensor modelsSAN JUAN BAUTISTABelum ada peringkat

- Production of Sodium Chlorite PDFDokumen13 halamanProduction of Sodium Chlorite PDFangelofgloryBelum ada peringkat

- Mrs. Universe PH - Empowering Women, Inspiring ChildrenDokumen2 halamanMrs. Universe PH - Empowering Women, Inspiring ChildrenKate PestanasBelum ada peringkat

- CBT For BDDDokumen13 halamanCBT For BDDGregg Williams100% (5)

- !!!Логос - конференц10.12.21 копіяDokumen141 halaman!!!Логос - конференц10.12.21 копіяНаталія БондарBelum ada peringkat

- PHY210 Mechanism Ii and Thermal Physics Lab Report: Faculty of Applied Sciences Uitm Pahang (Jengka Campus)Dokumen13 halamanPHY210 Mechanism Ii and Thermal Physics Lab Report: Faculty of Applied Sciences Uitm Pahang (Jengka Campus)Arissa SyaminaBelum ada peringkat

- United-nations-Organization-uno Solved MCQs (Set-4)Dokumen8 halamanUnited-nations-Organization-uno Solved MCQs (Set-4)SãñÂt SûRÿá MishraBelum ada peringkat

- CFO TagsDokumen95 halamanCFO Tagssatyagodfather0% (1)

- 4 Factor DoeDokumen5 halaman4 Factor Doeapi-516384896Belum ada peringkat

- How To Text A Girl - A Girls Chase Guide (Girls Chase Guides) (PDFDrive) - 31-61Dokumen31 halamanHow To Text A Girl - A Girls Chase Guide (Girls Chase Guides) (PDFDrive) - 31-61Myster HighBelum ada peringkat

- Cot 2Dokumen3 halamanCot 2Kathjoy ParochaBelum ada peringkat

- Bula Defense M14 Operator's ManualDokumen32 halamanBula Defense M14 Operator's ManualmeBelum ada peringkat

- 7 Aleksandar VladimirovDokumen6 halaman7 Aleksandar VladimirovDante FilhoBelum ada peringkat

- Week 15 - Rams vs. VikingsDokumen175 halamanWeek 15 - Rams vs. VikingsJMOTTUTNBelum ada peringkat

- Flexible Regression and Smoothing - Using GAMLSS in RDokumen572 halamanFlexible Regression and Smoothing - Using GAMLSS in RDavid50% (2)

- Form 709 United States Gift Tax ReturnDokumen5 halamanForm 709 United States Gift Tax ReturnBogdan PraščevićBelum ada peringkat

- Weone ProfileDokumen10 halamanWeone ProfileOmair FarooqBelum ada peringkat

- SDS OU1060 IPeptideDokumen6 halamanSDS OU1060 IPeptideSaowalak PhonseeBelum ada peringkat