Covariance: A Little Introduction

Uploaded by Abhijit Kar Gupta


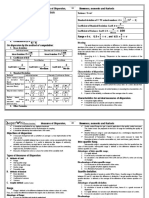

Variance, Covariance and Covariance Matrix

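The variance of a set of numbers measures how far the data points deviate (or vary) from their mean. For a set of numbers $x_1, x_2, \ldots, x_N$, the variance is

$$\sigma_x^2 = \frac{1}{N}\sum_{i=1}^{N}(x_i - \bar{x})^2, \qquad (1)$$

that is, the mean of the squares of the deviations from the mean, where the mean is $\bar{x} = \frac{1}{N}\sum_{i=1}^{N} x_i$.

Note that the square root of the variance is the standard deviation ($\sigma_x$).

The above formula can be reduced to the following:

$$\sigma_x^2 = \overline{x^2} - \bar{x}^2, \qquad (2)$$

the mean of the square minus the square of the mean. By definition, variance is never negative (it is zero only when all the numbers are equal), and it has no upper limit. We can likewise calculate the variance for another set of numbers $y_1, y_2, \ldots, y_N$:

$$\sigma_y^2 = \frac{1}{N}\sum_{i=1}^{N}(y_i - \bar{y})^2 = \overline{y^2} - \bar{y}^2.$$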
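To see that the two ways of writing the variance agree, here is a minimal Python sketch. The function names and the sample data are made up for illustration; the only assumption is that the variance divides by N (the plain mean of squared deviations), as in the text.

```python
def mean(values):
    """Arithmetic mean of a non-empty list of numbers."""
    return sum(values) / len(values)

def variance_from_deviations(values):
    """Mean of the squared deviations from the mean."""
    m = mean(values)
    return mean([(v - m) ** 2 for v in values])

def variance_from_squares(values):
    """Mean of the squares minus the square of the mean."""
    return mean([v * v for v in values]) - mean(values) ** 2

# Illustrative data only (mean is 5).
data = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]

print(variance_from_deviations(data))         # 4.0
print(variance_from_squares(data))            # 4.0
print(variance_from_deviations(data) ** 0.5)  # 2.0, the standard deviation
```

For data with a large mean and a small spread, the second form can lose precision in floating point, so the first form is usually the safer one to compute with.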

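Now we may want to know whether there is any connection between the two sets of numbers. For that we devise a formula that tells us how the variation in one is related to the variation in the other. Let us rewrite expression (2) in the following way:

$$\sigma_x^2 = \overline{x \cdot x} - \bar{x} \cdot \bar{x}.$$

Following the above, we can construct a formula:

$$\sigma_{xy} = \overline{x y} - \bar{x}\,\bar{y},$$

or, in some other notation,

$$\mathrm{Cov}(x, y) = \frac{1}{N}\sum_{i=1}^{N}(x_i - \bar{x})(y_i - \bar{y}).$$

This is COVARIANCE!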
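The construction above can be tried out numerically. The following is a small sketch, not a library routine: the function names and the paired data are hypothetical, and the covariance divides by N as in the text.

```python
def mean(values):
    """Arithmetic mean of a non-empty list of numbers."""
    return sum(values) / len(values)

def covariance(xs, ys):
    """Mean of the products of paired deviations from the two means."""
    if len(xs) != len(ys):
        raise ValueError("both sets must have the same number of values")
    mx, my = mean(xs), mean(ys)
    return mean([(x - mx) * (y - my) for x, y in zip(xs, ys)])

def covariance_shortcut(xs, ys):
    """Mean of the products minus the product of the means."""
    return mean([x * y for x, y in zip(xs, ys)]) - mean(xs) * mean(ys)

# Illustrative data: ys rises with xs, ys_reversed falls with xs.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]
ys_reversed = [8.0, 6.0, 4.0, 2.0]

print(covariance(xs, ys))           # 2.5
print(covariance_shortcut(xs, ys))  # 2.5
print(covariance(xs, ys_reversed))  # -2.5
print(covariance(xs, xs))           # 1.25, the variance of xs
```

Passing the same set twice gives back the variance, which is exactly the analogy used to build the formula.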

If we consider a probability distribution, that is, when the numbers appear according to some probability, the formula for variance is written in the following way:

$$\mathrm{Var}(X) = E\big[(X - E[X])^2\big],$$

where $E$ stands for the expectation value; the mean is now replaced by the expectation value of the variable $X$. Now, the covariance formula would be written as

$$\mathrm{Cov}(X, Y) = E\big[(X - \mu_X)(Y - \mu_Y)\big],$$

where $\mu_X = E[X]$ and $\mu_Y = E[Y]$.
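For a discrete distribution, the expectation value is just a probability-weighted sum, so the formulas can be checked by hand. The sketch below assumes a made-up joint distribution for two variables that each take the values 0 or 1; the table `joint` and the helper `expectation` are illustrative names only. Exact fractions are used so that no rounding creeps in.

```python
from fractions import Fraction

# Hypothetical joint distribution P(X = x, Y = y); the probabilities sum to 1.
joint = {
    (0, 0): Fraction(3, 8),
    (0, 1): Fraction(1, 8),
    (1, 0): Fraction(1, 8),
    (1, 1): Fraction(3, 8),
}

def expectation(pmf, g):
    """E[g(X, Y)]: sum of g(x, y) weighted by the probability of (x, y)."""
    return sum(p * g(x, y) for (x, y), p in pmf.items())

mu_x = expectation(joint, lambda x, y: x)
mu_y = expectation(joint, lambda x, y: y)
var_x = expectation(joint, lambda x, y: (x - mu_x) ** 2)
cov_xy = expectation(joint, lambda x, y: (x - mu_x) * (y - mu_y))

print(mu_x, mu_y)  # 1/2 1/2
print(var_x)       # 1/4
print(cov_xy)      # 1/8
```

The covariance comes out positive here because the table puts most of the probability on the outcomes where X and Y are equal.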

Abhijit Kar Gupta.

Email: kg.abhi@gmail.com

By definition, covariance can take any positive or negative value, including zero, without limit. This is easy to understand if we consider that the covariance is defined in terms of the product of two factors, $(x_i - \bar{x})(y_i - \bar{y})$; each of the factors can be positive or negative. To have a normalized measure, one considers the correlation formula:

$$\mathrm{Corr}(x, y) = \frac{\mathrm{Cov}(x, y)}{\sigma_x\,\sigma_y}.$$

Correlation always lies between -1 and +1.
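A short sketch may help to show what the normalization buys us: rescaling one of the sets changes the covariance but leaves the correlation alone. The function names and data below are hypothetical, the covariance divides by N as before, and the guard against zero variance is an added assumption (the ratio is undefined when either set is constant).

```python
import math

def mean(values):
    """Arithmetic mean of a non-empty list of numbers."""
    return sum(values) / len(values)

def covariance(xs, ys):
    """Mean of the products of paired deviations from the two means."""
    mx, my = mean(xs), mean(ys)
    return mean([(x - mx) * (y - my) for x, y in zip(xs, ys)])

def correlation(xs, ys):
    """Covariance divided by the product of the two standard deviations."""
    var_x, var_y = covariance(xs, xs), covariance(ys, ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when a set has zero variance")
    return covariance(xs, ys) / math.sqrt(var_x * var_y)

# Illustrative data: the same relationship measured in two different units.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]
ys_scaled = [100.0 * y for y in ys]

print(covariance(xs, ys), covariance(xs, ys_scaled))    # covariance grows with the units
print(correlation(xs, ys), correlation(xs, ys_scaled))  # correlation stays the same
```

Since ys is an exact multiple of xs, the correlation is +1 in both cases; reversing the order of ys would give -1.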

What is the meaning of covariance? Consider a single product term $(x_i - \bar{x})(y_i - \bar{y})$. If the product is positive, the two factors are either both positive or both negative. If it is negative, one of the factors is positive while the other is negative. If it is zero, one or both factors are zero. The covariance is the mean of these products, so its sign tells us which kind of term dominates.

Now, if we consider two sets of numbers (or two probability distributions for two different events), their covariance tells us whether and how they are related. If an increase in one variable goes along with an increase in the other, we say they are positively correlated. That means their covariance is positive. If an increase in one goes along with a decrease in the other (or the other way round), the covariance is negative. If the variation in one does not affect the other, the covariance is zero.

Now, we can readily see that, for two sets of variables (or numbers or probability distributions), we can construct a 2x2 matrix:

$$\begin{pmatrix} \sigma_{xx} & \sigma_{xy} \\ \sigma_{yx} & \sigma_{yy} \end{pmatrix}$$

It is easy to check that this matrix, which we call the COVARIANCE MATRIX, is a symmetric matrix with $\sigma_{xy} = \sigma_{yx}$, and that each diagonal element ($\sigma_{xx}$ or $\sigma_{yy}$) is essentially the covariance of a variable with itself (simply the variance)! We can generalize the idea to more than two variables. For 3 variables, we obtain a covariance matrix of order 3x3, and so on. When the value of any off-diagonal element is positive, we say the corresponding variables are positively correlated; the larger the value relative to the two standard deviations, the more strongly they are correlated. The above is the basis of understanding a covariance matrix.
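As a rough illustration of the generalization to three variables, the sketch below builds the matrix entry by entry from pairwise covariances. The function names and the three data sets are invented for the example, and the covariance again divides by N.

```python
def mean(values):
    """Arithmetic mean of a non-empty list of numbers."""
    return sum(values) / len(values)

def covariance(xs, ys):
    """Mean of the products of paired deviations from the two means."""
    mx, my = mean(xs), mean(ys)
    return mean([(x - mx) * (y - my) for x, y in zip(xs, ys)])

def covariance_matrix(variables):
    """Entry [i][j] is the covariance of variable i with variable j."""
    return [[covariance(a, b) for b in variables] for a in variables]

# Illustrative data: y rises with x, z falls as x rises.
x = [1.0, 2.0, 3.0, 4.0]
y = [2.0, 4.0, 6.0, 8.0]
z = [4.0, 3.0, 2.0, 1.0]

matrix = covariance_matrix([x, y, z])
for row in matrix:
    print(row)
# [1.25, 2.5, -1.25]
# [2.5, 5.0, -2.5]
# [-1.25, -2.5, 1.25]
```

The diagonal holds the three variances, the matrix equals its own transpose, and the negative entries pick out the pairs that move in opposite directions.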

