Multivariable Differential Calculus: Definition 8.1: Function of Real Variables, Vector

1999 by CRC Press LLC

Chapter 8

Multivariable Differential Calculus

1 Introduction

Up to this point we have restricted our attention to problems involving
functions of only one variable, that is, functions whose inputs and outputs
are real numbers. As we'll see, this background is essential for the proper
study of functions whose inputs consist of more than just numbers. Such
functions come up often enough to warrant the extensive treatment being
introduced in this chapter, because just about all systems we'll ever meet,
from electronic to biological and economic ones, are influenced by many
(input) factors. To list a few examples,

- The exact location of a space satellite is most strongly influenced by the sequence of thrusts provided by its engines, as well as the winds it encounters, the air densities it meets, etc.

- The weight of a person is influenced by the food consumed, exercise levels, genetic factors, etc.

- The cost of living is affected by the Federal Reserve discount rates, consumer demand, and so forth.

- To adequately predict the weather in any area requires a great deal of input, from knowledge of the trade winds and jet stream behaviors to an enormous number of other measurements of temperature, pressure, and humidity over many different regions.

There are so many factors that affect every phenomenon we want to predict
and control that we must be very selective and consider only those which seem
very important, if we expect any success in our efforts. To proceed
systematically we start by introducing the following definition.

Definition 8.1: Function of n real variables, vector

A function of n real variables is a function whose domain (Definition 1.1 on page 2) consists of sequences $(x_1, x_2, \ldots, x_n)$ of real numbers. Such sequences are also referred to as (real) n-dimensional vectors, or (real) n-tuples.

If the range (outputs) consists of real numbers, the function is called real-valued.

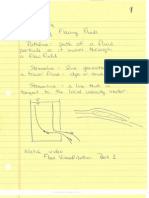

Figure 8-1 illustrates Definition 8.1.

Figure 8-1: Function of n variables. The input $(x_1, x_2, \ldots, x_n)$ is sent by $f$ to the output $f(x_1, x_2, \ldots, x_n)$.

Example 8.1: Energy as a function of current and resistance

If $I$ represents electrical current and $R$ represents electrical resistance, then the heat energy generated per unit time, $W$ (in watts), is described by the equation

$$W = f(I, R) = I^2 R.$$

We can picture a two- or three-dimensional vector either as a point in two- or three-dimensional space, or as an arrow in two or three dimensions, with a specified length and direction, as illustrated in Figure 8-2.

Figure 8-2: The vectors $P = (P_1, P_2)$ and $P = (P_1, P_2, P_3)$.

Which representation of a vector we choose depends on the particular situation we're in. Our pictures, even of three-dimensional vectors, are necessarily drawn on paper in two dimensions, but will be used to illustrate the general case and suggest results of a general nature. The proofs of any such results must be carried out algebraically; the reasoning is most often motivated by the picture, but does not rely on the picture for its validity.

The fundamental idea of our study of functions of n variables is to convert our questions and descriptions to questions and descriptions of functions of one variable, i.e., to functions we are familiar with. To see how to do this, we examine the graph of a function $f$, that is, we examine the set of points of the form

$$(x_1, \ldots, x_n, f(x_1, \ldots, x_n)).$$

Our picture will be for the case $n = 2$ (see Figure 8-3). Here the graph of the function $f$ is pictured as a surface whose height above the point $(x_1, x_2, 0)$ in the base plane is $f(x_1, x_2)$.

Figure 8-3: Graph of f, showing the base-plane point $(x_1, x_2, 0)$, the height $f(x_1, x_2)$, and the surface point $(x_1, x_2, f(x_1, x_2))$.

Before proceeding any further we'll introduce some notation to keep our expressions from looking too complicated.

Definition 8.2: Vector notation

In our work with real valued functions of n real variables (Definition 8.1 on page 261), n-dimensional vectors, which are finite sequences of numbers (Definition 8.1 on page 261), are denoted as follows:

$$x = (x_1, x_2, \ldots, x_n), \quad x_0 = (x_{01}, x_{02}, \ldots, x_{0n}), \quad h = (h_1, h_2, \ldots, h_n),$$

etc., so that a point $(x_1, x_2, \ldots, x_n, f(x_1, x_2, \ldots, x_n))$ is denoted¹ by $(x, f(x))$. We denote the standard unit length coordinate axis vectors by $e_k$, $k = 1, \ldots, n$, where the j-th coordinate of $e_k$ is given by

$$e_{kj} = \begin{cases} 1 & \text{if } j = k \\ 0 & \text{if } j \ne k. \end{cases}$$

1. There is a slight notational inconsistency, which shouldn't bother anyone, namely, $(x, f(x))$ is really $((x_1, x_2, \ldots, x_n), f(x_1, x_2, \ldots, x_n))$.

Also, if $t$ is a real number,

$$x + th = (x_1 + th_1, \ldots, x_n + th_n).$$

Actually, this was already the case, because a sequence is a function, and we already have a meaning assigned to $x + y$ and $tx$ where $t$ is a number, namely (see Definition 1.2 on page 3 and Definition 2.7 on page 35),

$$x + y = (x_1 + y_1, \ldots, x_n + y_n), \qquad cx = (cx_1, \ldots, cx_n).$$

The operations above have a geometrical interpretation that was provided in Figures 2-5 through 2-7 starting on page 24.

2 Local Behavior of Functions of n Variables

Suppose now that we want to investigate the local behavior of the function f of n real variables near the point x, that is, at points displaced just a short distance from x. As we can see from Figure 8-4, in order to follow the plan presented in the introduction, of converting such an investigation to one involving functions of one variable, we restrict the displacement from x to be in a fixed direction specified by some vector of nonzero length. For convenience we choose this vector to be of unit length, and denote it by u. We are thus restricting consideration to the function of one variable obtained by restricting inputs to be on the line through x in the direction specified by the unit length vector u.

Figure 8-4: The point x, the unit vector u, and the line of points $x + tu$; we look only at points along this line.

Extending the reasoning that generated equation 2.2 on page 25, we see that this line consists of all points of the form $x + tu$, where t may be any real number, as illustrated in Figure 8-5.

For completeness and reference purposes, we introduce Definition 8.3.

Definition 8.3: Line in n dimensions

The line through the point $x$ (see Definition 8.2 on page 263) in the direction specified by the unit length vector $u$ is the set of all points $x + tu$ for t any real number.

The function of one variable that is obtained by restricting the inputs of the real valued function, f, of n real variables to the line whose formula is $x + tu$ will be denoted by G, that is,

$$G(t) = f(x + tu). \tag{8.1}$$

The function G is illustrated in Figure 8-5, the portion of the graph of f from which it came appearing above the line $x + tu$ of Figure 8-4.

Figure 8-5: Graph of G, $G(t) = f(x + tu)$. Points on the line are labeled both by their coordinates in terms of t (0 at $x$, 1 at $x + u$) and by their representation in the n-dimensional coordinate system.

We can now make use of our one-variable results, by introducing the concept of directional derivative.

Definition 8.4: Directional derivative

If the derivative $G'(0)$ (Definition 2.5 on page 30) of the function G specified by equation 8.1 exists, it is called the directional derivative of f at x in the direction specified by the unit vector u. This directional derivative will be denoted by

$$D_u f(x).$$

Notice that if we want to approximate $f(x + h, y + k)$ in a manner similar to the fundamental approximation, equation 2.7 on page 31, we simply use

$$f(x + h, y + k) \approx f(x, y) + D_u f(x, y)\,\|(h, k)\|,$$

where $\|(h, k)\| = \sqrt{h^2 + k^2}$ is just the length of $(h, k)$ (for the general formal definition, see Definition 8.6 on page 270) and

$$u = \frac{(h, k)}{\|(h, k)\|}.$$
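Definition 8.4 can be tried out numerically. The sketch below is only an illustration, not part of the text's development: it builds $G(t) = f(x + tu)$ for a test function chosen here as an assumption, $f(x_1, x_2) = x_1 \exp(3x_2)$, estimates $G'(0)$ with a central difference, and compares the estimate with the value obtained by differentiating G by hand. The helper name `directional_derivative`, the step sizes, and the sample point are all hypothetical choices.

```python
import math

def directional_derivative(f, x, u, step=1e-6):
    """Estimate D_u f(x) = G'(0), where G(t) = f(x + t*u), by a central difference."""
    norm = math.sqrt(sum(c * c for c in u))
    u = [c / norm for c in u]  # rescale so that u has unit length

    def G(t):
        # Restriction of f to the line through x in the direction u
        return f([xi + t * ui for xi, ui in zip(x, u)])

    return (G(step) - G(-step)) / (2.0 * step)

def f(x):
    # Test function (an assumption for illustration): f(x1, x2) = x1 * exp(3 * x2)
    return x[0] * math.exp(3.0 * x[1])

x = [1.0, 0.5]
u = [3.0 / 5.0, 4.0 / 5.0]  # already of unit length

numeric = directional_derivative(f, x, u)
# Differentiating G(t) = (x1 + t*u1) * exp(3*(x2 + t*u2)) by hand and setting t = 0
exact = (u[0] + 3.0 * u[1] * x[0]) * math.exp(3.0 * x[1])

# Approximation f(x + h) ~ f(x) + D_u f(x) * ||h|| for a short step h along u
length = 0.01
h = [length * c for c in u]
approx = f(x) + numeric * length
actual = f([xi + hi for xi, hi in zip(x, h)])

print(numeric, exact)
print(approx, actual)
```

The central difference is used only because it is a simple, reasonably accurate way to estimate a one-variable derivative; any other one-variable method would serve the same purpose.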

Before developing the necessary formulas for simple mechanical computation of the directional derivative, we illustrate its computation directly from the definition.

Example 8.2: Finding $D_u f(x)$ for $f(x) = f(x_1, x_2) = x_1 \exp(3x_2)$

Here

$$G(t) = f(x + tu) = f(x_1 + tu_1, x_2 + tu_2) = (x_1 + tu_1)\exp(3(x_2 + tu_2)).$$

Noting that $x_1, x_2, u_1, u_2$ all stand for fixed constants in the current context, we see from the rules for differentiation of functions of one variable (Theorem 2.13 on page 53) that

$$G'(t) = u_1 \exp(3(x_2 + tu_2)) + 3u_2 (x_1 + tu_1)\exp(3(x_2 + tu_2)) = (u_1 + 3u_2(x_1 + tu_1))\exp(3(x_2 + tu_2)).$$

So finally we see that

$$D_u f(x) = G'(0) = (u_1 + 3u_2 x_1)\exp(3x_2).$$

Exercises 8.3

1. Find $D_u f(x)$ for $f(x_1, x_2) =$ x 1 2 ( ) cos x 1 2 x 2 + . Evaluate this for $(x_1, x_2) = (\pi/2,\, 2)$, $(u_1, u_2) = (1/\sqrt{5},\, 2/\sqrt{5})$.

2. Use the previous result to find an approximation to $f(\pi/2 + 0.02,\, 2.04)$.

3. Make up your own functions, and compute various directional derivatives of your own choosing, checking the results using Mathematica or Maple, or the equivalent.

Directional derivatives are not very much used for practical computations; rather, as we will shortly see, they will be the starting point for development of the important practical tools of multivariable calculus. So we will not place a great deal of emphasis here on routine computations with them, but will immediately continue with developing their important properties. First we will concern ourselves with finding a simpler way to compute directional derivatives. It turns out that the formula we will develop will greatly increase our understanding of what's really going on.

We start by observing that there are certain special directional derivatives which can usually be computed by inspection, namely, those for which u is

a coordinate axis vector; that is (already introduced in Definition 8.2 on page 263), u is one of the vectors $e_i$ whose j-th coordinate, $e_{ij}$, is given by

$$e_{ij} = \begin{cases} 1 & \text{if } j = i \\ 0 & \text{if } j \ne i. \end{cases} \tag{8.2}$$

In three dimensions

$$e_1 = (1, 0, 0), \quad e_2 = (0, 1, 0), \quad e_3 = (0, 0, 1).$$

The significance of being able to compute these directional derivatives almost by inspection arises because any vector can be written as a sum of vectors in the coordinate axis directions, so that we will be able to write all directional derivatives very easily in terms of these simple ones. The ease of computation of these coordinate axis directional derivatives follows because we can compute $D_{e_i} f(x)$ by treating all $x_j$ with $j \ne i$ as fixed constants, with $x_i$ being the variable of interest in the function of one variable being differentiated.

Example 8.4: $D_{e_1}\left(x_1^2 e^{x_1 x_2 x_3}\right)$

Here, to obtain the desired answer, we act as if $x_2$ and $x_3$ are fixed constants, and take the derivative of the resulting function of the one variable, $x_1$, to obtain that if

$$f(x_1, x_2, x_3) = x_1^2 e^{x_1 x_2 x_3},$$

then

$$D_{e_1} f(x_1, x_2, x_3) = 2x_1 e^{x_1 x_2 x_3} + x_1^2 x_2 x_3 e^{x_1 x_2 x_3} = (2x_1 + x_1^2 x_2 x_3)\, e^{x_1 x_2 x_3}.$$

Because they come up so frequently, the special directional derivatives, $D_{e_i} f(x)$, have special names and notations.

Definition 8.5: Partial derivatives

The directional derivative $D_{e_i} f(x)$, where the vector $e_i$ is given in Definition 8.2 on page 263, is called the partial derivative of f at x with respect to its i-th coordinate, and is denoted¹ by

$$D_i f(x).$$

1. Sometimes $D_{e_i} f(x)$ is denoted by $f_i(x)$. The most common notation for $D_{e_i} f(x)$, and by far the one worth avoiding if at all possible, is $\frac{\partial}{\partial x_i} f(x)$, since it has no redeeming virtues of any kind.

$D_i$ is called the partial derivative operator with respect to the i-th coordinate. It is a function whose domain (inputs) and range (outputs) consist of functions.

Exercises 8.5

Compute $D_i f(x)$ for the functions of Example 8.2 on page 266 and of Exercises 8.3 on page 266.

As mentioned earlier, one can always proceed from the point $x$ to the point $x + tu$ along segments parallel to the coordinate axes, as illustrated in Figure 8-6.

Figure 8-6: Paths from $x$ to $x + tu$ along segments parallel to the coordinate axes, in the domain of a function of two variables and in the domain of a function of three variables.

Now to get to the details of computing any directional derivative from the partial derivatives. We will illustrate the derivation of the general result via the case n = 3. We first rewrite the difference quotient $[f(x + tu) - f(x)]/t$ as a sum of function differences, in each of which the change in the argument is restricted to precisely one coordinate. Specifically we write

$$\begin{aligned}
\frac{f(x + tu) - f(x)}{t} &= \frac{f(x_1 + tu_1, x_2 + tu_2, x_3 + tu_3) - f(x_1, x_2, x_3)}{t} \\
&= \frac{f(x_1 + tu_1, x_2 + tu_2, x_3 + tu_3) - f(x_1 + tu_1, x_2 + tu_2, x_3)}{t} \\
&\quad + \frac{f(x_1 + tu_1, x_2 + tu_2, x_3) - f(x_1 + tu_1, x_2, x_3)}{t} \\
&\quad + \frac{f(x_1 + tu_1, x_2, x_3) - f(x_1, x_2, x_3)}{t}.
\end{aligned} \tag{8.3}$$
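The rewriting in equation 8.3 is a telescoping sum, which can be checked numerically. The following sketch is an illustration only: it uses the function of Example 8.4 as a test case, and the sample point, unit vector, and step size t are arbitrary assumptions. It confirms that the three one-coordinate ratios add up to the full difference quotient, and that the last ratio is close to $u_1$ times the partial derivative found in Example 8.4.

```python
import math

def f(x1, x2, x3):
    # Function of Example 8.4: f = x1^2 * exp(x1 * x2 * x3)
    return x1 ** 2 * math.exp(x1 * x2 * x3)

# Sample point, unit vector and step (all arbitrary choices for illustration)
x1, x2, x3 = 1.0, 0.5, -0.3
u1, u2, u3 = 2.0 / 3.0, 2.0 / 3.0, 1.0 / 3.0  # 4/9 + 4/9 + 1/9 = 1
t = 1e-3

# Full difference quotient [f(x + t u) - f(x)] / t
full = (f(x1 + t * u1, x2 + t * u2, x3 + t * u3) - f(x1, x2, x3)) / t

# The three ratios of equation 8.3; each changes exactly one coordinate
third = (f(x1 + t * u1, x2 + t * u2, x3 + t * u3)
         - f(x1 + t * u1, x2 + t * u2, x3)) / t
second = (f(x1 + t * u1, x2 + t * u2, x3)
          - f(x1 + t * u1, x2, x3)) / t
first = (f(x1 + t * u1, x2, x3) - f(x1, x2, x3)) / t

# Partial derivative with respect to x1, from Example 8.4
d1 = (2.0 * x1 + x1 ** 2 * x2 * x3) * math.exp(x1 * x2 * x3)

print(full, first + second + third)
print(first, u1 * d1)
```

Because only one coordinate changes in each ratio, each ratio is a difference quotient of a function of one variable, which is what makes the one-variable mean value theorem applicable.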

Most readers should not have much difficulty applying the mean value theorem (Theorem 3.1 on page 62) to each of the last three ratios above, to obtain the result we present shortly in equation 8.7. The precise arguments are presented in the optional segment to follow.

Look at the numerator of the second line of equations 8.3. It is

$$f(x + tu) - f(x + tu - tu_3 e_3) = f(z + tu_3 e_3) - f(z), \tag{8.4}$$

where

$$z = x + tu - tu_3 e_3.$$

To be able to legitimately apply the mean value theorem we let

$$G(\tau) = f(z + \tau e_3). \tag{8.5}$$

We see that the expression in equation 8.4 can be expressed in the simpler form

$$G(tu_3) - G(0),$$

which, from the mean value theorem, Theorem 3.1 on page 62, is equal to $tu_3\, G'(\theta_3 tu_3)$, where $\theta_3$ lies between 0 and 1 (assuming the hypotheses of the mean value theorem are satisfied). But using equation 8.5 above, we see that

$$G'(\tau) = \lim_{k \to 0} \frac{f([z + \tau e_3] + k e_3) - f(z + \tau e_3)}{k} = D_3 f(z + \tau e_3).$$

So

$$tu_3\, G'(\theta_3 tu_3) = tu_3\, D_3 f(z + \theta_3 tu_3 e_3). \tag{8.6}$$

That is, the first numerator in the final part of equation 8.3 on page 268 is given by the right side of equation 8.6. Since $z = (x_1 + tu_1, x_2 + tu_2, x_3)$, this gives the first ratio of the final part of equation 8.3 as

$$u_3 D_3 f(x_1 + tu_1,\, x_2 + tu_2,\, x_3 + \theta_3 tu_3).$$

In a similar fashion the remaining two ratios in the final part of equation 8.3 may be written as

$$u_2 D_2 f(x_1 + tu_1,\, x_2 + \theta_2 tu_2,\, x_3) \quad \text{and} \quad u_1 D_1 f(x_1 + \theta_1 tu_1,\, x_2,\, x_3),$$

respectively.

So we find that under the appropriate circumstances allowing application of the mean value theorem, Theorem 3.1 on page 62, we have

$$\begin{aligned}
\frac{f(x + tu) - f(x)}{t} &= u_1 D_1 f(x_1 + \theta_1 tu_1,\, x_2,\, x_3) \\
&\quad + u_2 D_2 f(x_1 + tu_1,\, x_2 + \theta_2 tu_2,\, x_3) \\
&\quad + u_3 D_3 f(x_1 + tu_1,\, x_2 + tu_2,\, x_3 + \theta_3 tu_3),
\end{aligned} \tag{8.7}$$

where the $\theta_i$ are numbers between 0 and 1. To obtain the desired directional derivative, $D_u f(x)$, we must take the limit as t approaches 0. Provided that the partial derivatives $D_j f$ at the indicated values above for t near 0 get close to their values at t = 0 (i.e., at x), we would expect this limit to be

$$\sum_{j=1}^{3} u_j D_j f(x).$$

A precise statement of the result just derived requires at least a minimal discussion of continuity for functions of several variables. All of the material to be presented below is a natural extension of previously introduced concepts related to continuity and closeness. We return from this detour at Theorem 8.9.

To begin we extend the definition of length and distance between vectors from the two-dimensional case, Definition 6.11 on page 200, to n-dimensional vectors in the following.

Definition 8.6: Length of vector, distance between vectors

The length (or norm), $\|x\|$, of the n-dimensional vector $x = (x_1, x_2, \ldots, x_n)$ is defined¹ by

$$\|x\| = \sqrt{\sum_{i=1}^{n} x_i^2}; \tag{8.8}$$

the (Euclidean) distance between $x = (x_1, x_2, \ldots, x_n)$ and $y = (y_1, y_2, \ldots, y_n)$ is defined to be

$$\|x - y\|. \tag{8.9}$$

1. This is motivated by the Pythagorean theorem.

Definition 8.7: Continuity of function of n variables (precise)

We say that the real valued function, g, of n real variables (Definition 8.1 on page 261) is continuous at $x_0$ in the domain of g, if, for x in the domain of g, $|g(x) - g(x_0)|$ gets close to 0 as $\|x_0 - x\|$ gets small, i.e.,

small changes to the input, $x_0$, yield small changes to the output $g(x_0)$.

More precisely, keeping in mind Definition 8.6 on page 270,

Given any positive number, $\varepsilon$, there is a positive number, $\delta$ (determined by g, $x_0$ and $\varepsilon$), such that whenever x is in the domain of g and

$$\|x_0 - x\| < \delta,$$

we have

$$|g(x_0) - g(x)| < \varepsilon.$$

The elementary properties of real valued continuous functions of n real variables are essentially the same as those of one-variable functions, and are derived in the same way. We extend the continuity theorem, Theorem 2.10 on page 38, and Theorem 2.11 on page 42 (continuity of constant and identity functions) to functions of n variables.

Theorem 8.8: Continuity of functions of n variables

Assuming the real valued functions f and g of n real variables (Definition 8.1 on page 261) have the same domain and are continuous at $x_0$ (Definition 8.7 on page 270), the sum, $f + g$, product, $fg$, and ratio, $f/g$ (assuming it is defined), are continuous at $x_0$; if the real valued function, R, of a single real variable is continuous and its domain includes the range of f, then the composition¹ $R(f)$ is continuous at $x_0$.

The coordinate functions, $c_j$, defined via

$$c_j(x) = c_j(x_1, x_2, \ldots, x_n) = x_j \tag{8.10}$$

are continuous at all x.

1. Whose value at x is $R(f(x))$.

So, as seen earlier for functions of one variable, we can often recognize continuity of a function of several variables from its structure.

Now that we have available the concept of continuity of a function of several variables, the above discussion yields the following result.

Theorem 8.9: Determination of directional derivatives

If f is a real valued function of n real variables (Definition 8.1 on page 261) all of whose partial derivatives (Definition 8.5 on page 267) exist at and near $x_0$ (i.e., for all x within some positive distance from $x_0$; see Definition 8.6 on page 270) and are continuous at $x_0$ (Definition 8.7 on page 270), then the directional derivative $D_u f(x_0)$ (Definition 8.4 on page 265) exists and is given by the formula

$$D_u f(x_0) = \sum_{j=1}^{n} u_j D_j f(x_0). \tag{8.11}$$

Exercises 8.6

Repeat Exercises 8.3 on page 266 using equation 8.11.

In the formula just developed, a very important type of expression appears, namely a number of the form

$$\sum_{j=1}^{n} x_j y_j.$$

This expression is called the dot product of the real vector $x = (x_1, x_2, \ldots, x_n)$ with the real vector $y = (y_1, y_2, \ldots, y_n)$. We see, rather generally, that the directional derivative, $D_u f(x_0)$, is the dot product of the unit vector u with the vector whose components are the partial derivatives of f at $x_0$. This latter vector and dot products are important enough to warrant the formal definitions we now present.

Definition 8.10: Gradient vector, dot product of two vectors

Let f be a real valued function of n real variables (Definition 8.1 on page 261) all of whose partial derivatives (Definition 8.5 on page 267) exist at x. The vector

$$(D_1 f(x), D_2 f(x), \ldots, D_n f(x))$$

is called the gradient of f at x. It will be denoted¹ by

$$\operatorname{grad} f(x) \quad \text{or} \quad Df(x).$$

The vector-valued function whose value at x is $Df(x)$ is denoted by $Df$ or $\operatorname{grad} f$ and is referred to as the gradient function, or, when there is no danger of ambiguity, simply as the gradient, or gradient vector.

1. It is also written $\nabla f(x)$.

The dot product, $x \cdot y$, of the real n-dimensional vectors $x = (x_1, x_2, \ldots, x_n)$ and $y = (y_1, y_2, \ldots, y_n)$

is defined by the equation¹

$$x \cdot y = \sum_{j=1}^{n} x_j y_j. \tag{8.12}$$

The result concerning directional derivative computation can now be neatly and formally stated in the following theorem.

Theorem 8.11: Computation of directional derivatives

Let f be a real valued function of n real variables (Definition 8.1 on page 261), whose gradient vector components (Definition 8.10 on page 272) exist and are continuous (Definition 8.7 on page 270) at x and at all points within some positive distance (Definition 8.6 on page 270) of x. Then

$$D_u f(x) = u \cdot Df(x), \tag{8.13}$$

also written $D_u f = (u \cdot D) f$ or $D_u = u \cdot D$.

That is, when the partial derivatives of f are continuous at and near x, the directional derivative, $D_u f(x)$, is the dot product of u with grad f(x).

This result is sometimes written

$$D_u f(x) = (u \cdot D) f(x), \quad D_u f = (u \cdot D) f, \quad \text{or} \quad D_u = u \cdot D, \tag{8.14}$$

where

$$D = (D_1, D_2, \ldots, D_n)$$

is the vector of partial derivative operators² (the gradient operator).

1. When x and y are vectors of complex numbers, this definition needs to be modified, to

$$x \cdot y = \sum_{j=1}^{n} x_j \overline{y_j},$$

where $\overline{y_j}$ is the complex conjugate of $y_j$ (see Definition 6.8 on page 199), in order that $x \cdot x = \|x\|^2$ be real and nonnegative.

2. This notation will prove extremely useful, as we will see later.

The fundamental approximation for functions of one variable, equation 2.7 on page 31,

$$f(x_0 + h) \approx f(x_0) + f'(x_0)\, h, \tag{8.15}$$

which was essential to studying functions of one variable, can now be generalized to functions of several variables in a natural way. From the meaning of directional derivative we see from approximation 8.15 that

$$f(x_0 + h) \approx f(x_0) + D_u f(x_0)\, \|h\|, \tag{8.16}$$

where u is the unit vector in the direction of h, that is

$$u = \frac{h}{\|h\|}.$$

Substituting $h/\|h\|$ for u in equation 8.13 and substituting the result into the right side of approximation 8.16 yields the desired extension of the fundamental approximation for functions of one variable, namely, the following.

Fundamental multivariate approximation

If f is a real valued function of n real variables (Definition 8.1 on page 261) whose gradient vector (Definition 8.10 on page 272) exists at $x_0$, then

$$f(x_0 + h) \approx f(x_0) + h \cdot Df(x_0) = f(x_0) + h \cdot \operatorname{grad} f(x_0) \tag{8.17}$$

(where the dot product symbol, $\cdot$, is also defined in Definition 8.10).

In this generalization, the derivative, $f'$ (also written $Df$), is replaced by the gradient vector, $Df$, and ordinary multiplication here turns into dot product multiplication.

Evidently the gradient vector and dot product occupy a central role in the study of functions of several variables, so now seems a reasonable time to seek a clear understanding of the dot product formula, equation 8.12 on page 273, and formula 8.13 on page 273 for $D_u f(x)$.

Since the formula for $D_u f(x)$ involved the dot product of a unit length vector, u, with $Df$, we'll first examine dot products of the form $u \cdot X$ in three dimensions, where $u = (u_1, u_2, u_3)$ and $X = (X_1, X_2, X_3)$.

First look at the simplest unit vector, namely, $u = (1, 0, 0)$. Then

$$u \cdot X = X_1.$$

So in this case, the dot product of X with the unit vector along the positive first coordinate axis is just the first coordinate of X.

Looking at Figure 8-7, the vector of length $|X_1|$ is the vector along the first axis (whose endpoint is) closest to (the endpoint of) the vector X. This follows because, as seen in Figure 8-8, from the definition of distance (Definition 8.6 on page 270) the distance, d, of any other vector along the first axis from the endpoint of X (here $d = \sqrt{a^2 + X_2^2 + X_3^2}$) exceeds $b = \sqrt{X_2^2 + X_3^2}$.

Figure 8-7: The vector $X = (X_1, X_2, X_3)$, the point $(X_1, X_2, 0)$, and the value $X_1$ on the first axis.

Figure 8-8: The first axis, the vector X, and any other vector along the first axis, at offset a from $X_1$, with the distances d and b.

This vector, $(X_1, 0, 0)$, which is the first-axis vector closest to X, is called the projection of X on $u = (1, 0, 0)$ (or on the first axis). What we have just seen suggests the possibility that generally $u \cdot X$ is the magnitude of the projection of the vector X along the axis specified by any unit length vector, u.

We'll investigate this conjecture, and then for reference purposes, sum up the results in a formal definition and theorem.

To determine the projection of the n-dimensional vector X along the axis specified by the unit length vector u, we must find the vector $\beta u$ closest to X. That is, we must find the real number, $\beta$, which minimizes the quantity

$\|X - \beta u\|$. To make this easier, we'll do the equivalent task of choosing $\beta$ to minimize

$$G(\beta) = \|X - \beta u\|^2 = \sum_{j=1}^{n} (X_j - \beta u_j)^2. \tag{8.18}$$

To find the desired $\beta$ we could solve the equation $G'(\beta) = 0$. We will leave this to the reader, and instead complete the square, by first writing

$$\begin{aligned}
G(\beta) = \sum_{j=1}^{n} (X_j - \beta u_j)^2 &= \sum_{j=1}^{n} X_j^2 - 2\beta \left(\sum_{j=1}^{n} X_j u_j\right) + \beta^2 \left(\sum_{j=1}^{n} u_j^2\right) \\
&= \beta^2 (u \cdot u) - 2\beta (X \cdot u) + X \cdot X \\
&= \beta^2 - 2\beta (X \cdot u) + X \cdot X \quad \text{because u is of unit length.}
\end{aligned}$$

Now we complete the square of the last expression, by adding $(X \cdot u)^2$ immediately preceding the last term in the final expression above, to obtain a perfect square, and then compensating, to preserve the equality, obtaining

$$\begin{aligned}
G(\beta) &= \beta^2 - 2\beta (X \cdot u) + (X \cdot u)^2 - (X \cdot u)^2 + X \cdot X \\
&= (\beta - X \cdot u)^2 - (X \cdot u)^2 + X \cdot X.
\end{aligned}$$

It is now evident that $G(\beta)$ is minimized by choosing

$$\beta = X \cdot u.$$

We can now supply the promised definition and theorem.

Definition 8.12: Projection of X on Y

Let X and Y be any real n-dimensional vectors (see Definition 8.1 on page 261). The projection, $P_Y(X)$, of X on Y is the vector $\beta Y$ (where $\beta$ is a real number) closest to X, i.e., minimizing $\|X - \beta Y\|$ (see Definition 8.6 on page 270). $P_Y(X)$ is sometimes referred to as the component of X in the Y direction.

Theorem 8.13: Projection of X on Y

Let $X = (X_1, X_2, \ldots, X_n)$ and $Y = (Y_1, Y_2, \ldots, Y_n)$ be any real n-dimensional vectors. If $Y = 0$ (i.e., $Y_i = 0$ for all i), then $P_Y(X) = 0$. Otherwise (see Definition 8.10 on page 272)

$$P_Y(X) = \frac{X \cdot Y}{Y \cdot Y}\, Y. \tag{8.19}$$

The proof is trivial when $Y = 0$, because in this case all vectors $\beta Y = 0$, and so the closest of these vectors to X is clearly 0, as asserted.

If $Y \ne 0$, we may write

$$Y = \|Y\| \frac{Y}{\|Y\|} = \|Y\|\, u.$$

Choosing $u = Y/\|Y\|$, so that every vector $\beta Y$ is the multiple $(\beta \|Y\|)\, u$ of the unit length vector u, we know from the previous completion of the square argument that the vector $\beta Y$ closest to X is

$$(X \cdot u)\, u = \left(X \cdot \frac{Y}{\|Y\|}\right) \frac{Y}{\|Y\|} = \frac{X \cdot Y}{\|Y\|^2}\, Y = \frac{X \cdot Y}{Y \cdot Y}\, Y,$$

establishing Theorem 8.13.

It is evident that we can write any vector X as the sum of two vectors, one of which is the component of X in the Y direction, illustrated in Figure 8-9. That is, we can write

$$X = P_Y(X) + [X - P_Y(X)]. \tag{8.20}$$

Figure 8-9: The vectors Y, X, $P_Y(X)$, and $X - P_Y(X)$.

It would appear that the second vector in this decomposition has a zero component in the Y direction, which we easily verify (using equation 8.19), via

$$\begin{aligned}
P_Y(X - P_Y(X)) &= P_Y(X) - P_Y(P_Y(X)) \\
&= P_Y(X) - P_Y(X) \\
&= 0.
\end{aligned} \tag{8.21}$$

A decomposition such as given by equation 8.20 is called an orthogonal decomposition, or an orthogonal breakup. Orthogonal breakups are extremely important, because they produce zeros. We'll see later on just how useful this is in solving systems of equations. We're just starting to investigate this concept, and we begin with a formal definition followed by a simple operational characterization.
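The projection formula 8.19 and the orthogonal breakup of equations 8.20 and 8.21 are easy to try on concrete numbers. The sketch below is an illustration under assumed names (`dot`, `project`) and arbitrarily chosen sample vectors; it computes $P_Y(X)$, forms the remainder $X - P_Y(X)$, and checks that the remainder has zero dot product with Y and that nearby multiples of Y are farther from X than the projection.

```python
import math

def dot(x, y):
    """Dot product of two real vectors of the same dimension (equation 8.12)."""
    return sum(a * b for a, b in zip(x, y))

def project(x, y):
    """Projection P_Y(X) = (X.Y / Y.Y) Y, taken to be the zero vector when Y = 0."""
    yy = dot(y, y)
    if yy == 0:
        return [0.0] * len(x)
    beta = dot(x, y) / yy
    return [beta * c for c in y]

def distance(x, y):
    """Euclidean distance between two vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))

# Sample vectors (arbitrary choices for illustration)
X = [3.0, 4.0, 5.0]
Y = [1.0, 2.0, 2.0]

P = project(X, Y)                   # component of X in the Y direction
R = [a - b for a, b in zip(X, P)]   # remainder X - P_Y(X)
beta = dot(X, Y) / dot(Y, Y)

print(P, R)
print(dot(R, Y))       # zero up to rounding: the remainder is perpendicular to Y
print(project(R, Y))   # the remainder has (numerically) zero component along Y

# Multiples of Y slightly off the minimizing beta are farther from X
nearest = distance(X, P)
off_by_plus = distance(X, [(beta + 0.1) * c for c in Y])
off_by_minus = distance(X, [(beta - 0.1) * c for c in Y])
print(nearest, off_by_plus, off_by_minus)
```

Returning the zero vector for $Y = 0$ mirrors the convention stated in Theorem 8.13 rather than dividing by zero.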

Definition 8.14: Orthogonality

The real n-dimensional vector X is said to be orthogonal (or perpendicular) to the real n-dimensional vector Y if

$$P_Y(X) = 0, \quad \text{also written } X \perp Y$$

(see Definition 8.12 on page 276).

Equation 8.21 makes it reasonable to refer to $X - P_Y(X)$ as the component of X perpendicular to Y.

An immediate consequence of Definition 8.14 and Theorem 8.13 is the following.

Theorem 8.15: Orthogonality of X and Y

$X \perp Y$ if and only if $X \cdot Y = 0$. Hence $X \perp Y$ if and only if $Y \perp X$.

We can see immediately from this result and formula 8.13 on page 273 for directional derivatives, that $D_u f(x) = 0$ precisely when $u \perp \operatorname{grad} f(x)$. This suggests that this gradient vector specifies the direction of greatest rate of change of the function f at x, a result we shall soon establish, and one which will evidently be critical in studying the behavior of functions of several variables.

From equations 8.20 and 8.21 we know that

$$X = P_Y(X) + [X - P_Y(X)]$$

is a breakup into perpendicular components. It turns out to be important that the converse also holds. We state these results in Theorem 8.16.

Theorem 8.16: Orthogonal Breakup

If X and Y are real n-dimensional vectors (see Definition 8.1 on page 261) then

$$X = P_Y(X) + [X - P_Y(X)] \tag{8.22}$$

(where $P_Y(X)$ is defined in Definition 8.12 on page 276) is an orthogonal breakup, i.e.,

$$P_Y(X) \perp X - P_Y(X) \tag{8.23}$$

(see Theorem 8.15 on page 278).

Moreover, if

$$X = V_Y + V_Y^{\perp}, \tag{8.24}$$

where $V_Y$ is a scalar multiple of Y, and $V_Y^{\perp}$ is orthogonal to Y, then this orthogonal breakup is unique, i.e., must be the one given by equation 8.22; so that we must have

$$V_Y = P_Y(X) \quad \text{and} \quad V_Y^{\perp} = X - P_Y(X). \tag{8.25}$$

We have already shown that equation 8.23 holds. So we need only prove the latter part of this theorem. We first handle the trivial case where $Y = 0$. In this case we must have

$$V_Y = 0 = P_Y(X),$$

and then from equation 8.24 it follows that

$$V_Y^{\perp} = X = X - P_Y(X).$$

This establishes equation 8.25 for the case $Y = 0$.

If $Y \neq 0$, then from equation 8.24 and the definition of $V_Y$ we may write

$$X = V_Y + V_Y^{\perp} = \alpha Y + V_Y^{\perp}. \tag{8.26}$$

Now take the dot product of the left and right sides of this equation with Y, and use the assumed perpendicularity of $V_Y^{\perp}$ and Y, to obtain

$$X \cdot Y = \alpha (Y \cdot Y),$$

from which it follows that $\alpha = \dfrac{X \cdot Y}{Y \cdot Y}$, and hence

$$\alpha Y = P_Y(X).$$

Now substituting this into equation 8.26, we obtain the desired equation 8.25, proving Theorem 8.16. To state this theorem in English, any vector, X, can be written in one and only one way as the sum of two vectors, one of which is in the direction of a specified vector Y and the other perpendicular to Y. The component in the direction of Y is the projection of X on Y. All of this is suggested by Figure 8-9 on page 277, but established algebraically using the definitions of dot product and projection.

We never used the Pythagorean theorem directly in our proofs, but rather, used it to suggest the proper definition of the length of a vector. With the machinery we have built up, it is a natural corollary.

Theorem 8.17: Corollary (Pythagorean theorem)

For any real n-dimensional vectors, X and Y,

$$\|X\|^2 = \|P_Y(X)\|^2 + \|X - P_Y(X)\|^2 \tag{8.27}$$

(see Definition 8.12 on page 276 and Definition 8.6 on page 270).


Furthermore

$$\|P_Y(X)\| \le \|X\|, \tag{8.28}$$

equality always holding in the trivial case X = 0, and in the nontrivial case, $X \neq 0$, if and only if Y is a nonzero scalar multiple of X.

To prove these results we write

$$\|X\|^2 = X \cdot X = \bigl(P_Y(X) + [X - P_Y(X)]\bigr) \cdot \bigl(P_Y(X) + [X - P_Y(X)]\bigr).$$

Now expand out¹ and use the orthogonality of $P_Y(X)$ and $X - P_Y(X)$ (result 8.23 on page 278 and Theorem 8.15 on page 278) to obtain equation 8.27. Inequality 8.28 follows immediately from equation 8.27.

1. Using the distributivity of dot products, i.e., $(u + v) \cdot w = u \cdot w + v \cdot w$, etc.

Finally, if Y is a nonzero scalar multiple of X, we know from Theorem 8.13 on page 276 that $\|X\| = \|P_Y(X)\|$. On the other hand, if $\|X\| = \|P_Y(X)\|$, we see from the Pythagorean theorem equality, 8.27, that

$$\|X - P_Y(X)\|^2 = 0,$$

which implies that

$$X = P_Y(X).$$

In the nontrivial case we are considering, $X \neq 0$, the projection formulas from Theorem 8.13 on page 276 then show that X must be a nonzero scalar multiple of Y, and Y must be a nonzero scalar multiple of X. These arguments establish Theorem 8.17.

Theorem 8.17 allows us to establish our earlier conjecture, that a nonzero gradient vector specifies the direction of greatest change of the associated function. So suppose f is a real valued function of n real variables which allows application of formula 8.13 on page 273 for directional derivatives. Let u be any unit length n-dimensional vector which is not a scalar multiple of grad f(x). Then

$$D_u f(x) = u \cdot \operatorname{grad} f(x) = u_g \cdot \operatorname{grad} f(x) + u_g^{\perp} \cdot \operatorname{grad} f(x),$$

where $u_g = P_{\operatorname{grad} f(x)}(u)$, $u_g^{\perp} = u - u_g \perp \operatorname{grad} f(x)$ and $\|u_g\| < 1$, justified by Theorem 8.16 on page 278 and Theorem 8.17 on page 279.


Hence

$$D_u f(x) = u_g \cdot \operatorname{grad} f(x).$$

But because $u_g$ is in the $\operatorname{grad} f(x)$ direction, but has length < 1, we can write $u_g = c\,u_G$, where $u_G$ is the unit length vector in the direction of $\operatorname{grad} f(x)$ and $|c| < 1$.

Hence

$$D_u f(x) = c\,u_G \cdot \operatorname{grad} f(x) = c\,D_{u_G} f(x) < D_{u_G} f(x).$$

That is, if u is any unit vector not parallel to the nonzero gradient vector, $\operatorname{grad} f(x)$, and $u_G$ is the unit vector in the direction of $\operatorname{grad} f(x)$, then

$$D_u f(x) < D_{u_G} f(x),$$

establishing that under the given conditions, the gradient specifies the direction of greatest rate of change of f. Note that $-\operatorname{grad} f(x)$ is the direction of the most negative rate of change of f. We summarize the various properties that we have established about the gradient vector as follows.

Theorem 8.18: Gradient vector properties

Suppose the real valued function, f, of n real variables (Definition 8.1 on page 261) has partial derivatives (Definition 8.5 on page 267) defined for all values within some positive distance (Definition 8.6 on page 270) of the vector x, and these partial derivatives are continuous (Definition 8.7 on page 270) at x. If the unit vector $u \perp \operatorname{grad} f(x)$ (Definition 8.14 on page 278 and Definition 8.10 on page 272) then $D_u f(x) = 0$ (see Definition 8.4 on page 265). If $\operatorname{grad} f(x) \neq 0$, $D_u f(x)$ is a maximum for

$$u = u_G = \frac{\operatorname{grad} f(x)}{\|\operatorname{grad} f(x)\|}$$

and a minimum for

$$u = -u_G = -\frac{\operatorname{grad} f(x)}{\|\operatorname{grad} f(x)\|}.$$

From this result it should be evident that the principal nice places to hunt for maxima and minima of functions of several variables are those values of x at which $\operatorname{grad} f(x) = 0$. Finding such x involves solving a system of (usually nonlinear) equations, and we have not yet developed the tools for doing this. Furthermore, once we have solved these equations, to handle this subject properly, sufficient conditions to determine whether the solution corresponds to a maximum, minimum or neither must be developed. Since all


of this depends on the linear algebra to be introduced in the next chapters,

we postpone any further discussion of this subject until the necessary tools are

available.
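Although the search for maxima and minima is postponed, the gradient properties of Theorem 8.18 are easy to check numerically at a single point. The following is a minimal sketch and not part of the original text: the sample function f(x, y) = x² + 3xy, the point (1, 2), the test direction u = (0, 1) and all helper names are illustrative assumptions. It evaluates D_u f(x) = u · grad f(x) for unit vectors u around the circle and compares the results with the values at u_G and −u_G.

```python
import math

def grad_f(x, y):
    """Gradient of the hypothetical sample function f(x, y) = x**2 + 3*x*y."""
    return (2.0 * x + 3.0 * y, 3.0 * x)

def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))

def norm(a):
    return math.sqrt(dot(a, a))

point = (1.0, 2.0)                      # assumed evaluation point
g = grad_f(*point)                      # grad f at the point
u_G = tuple(gi / norm(g) for gi in g)   # unit vector in the gradient direction

# Directional derivatives D_u f = u . grad f for 360 unit vectors u.
units = [(math.cos(2.0 * math.pi * k / 360), math.sin(2.0 * math.pi * k / 360))
         for k in range(360)]
derivs = [dot(u, g) for u in units]

d_max = dot(u_G, g)                     # D_{u_G} f, equal to ||grad f||
d_min = dot(tuple(-c for c in u_G), g)  # D_{-u_G} f, equal to -||grad f||
u_perp = (-u_G[1], u_G[0])              # unit vector orthogonal to grad f
d_perp = dot(u_perp, g)                 # zero up to rounding

# One direction that is not parallel to grad f: its projection on grad f
# is c * u_G with |c| < 1, and D_u f = c * D_{u_G} f.
u = (0.0, 1.0)
c = dot(u, u_G)

print("largest sampled D_u f :", max(derivs), " D_{u_G} f :", d_max)
print("smallest sampled D_u f:", min(derivs), " D_{-u_G} f:", d_min)
print("D_u f for u orthogonal to grad f:", d_perp)
print("c =", c, " D_u f =", dot(u, g), " c * D_{u_G} f =", c * d_max)
```

No sampled direction exceeds the value obtained at u_G, and the orthogonal direction gives a directional derivative of zero up to rounding error.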

A useful corollary to the Pythagorean theorem, Theorem 8.17 on page 279, is the Schwartz inequality for vectors.

Theorem 8.19: The Schwartz inequality for vectors

Recall Definition 8.10 on page 272 of dot product of two vectors. If X and Y are real n-dimensional vectors, then

$$(X \cdot Y)^2 \le (X \cdot X)(Y \cdot Y) \tag{8.29}$$

with equality only if one of these vectors is a scalar multiple of the other.

To establish this version of the Schwartz inequality, we note that if $Y = 0$ then inequality 8.29 is certainly true. Thus we need only consider the case $Y \neq 0$. For this we note that inequality 8.28 on page 280 is equivalent to

$$\|P_Y(X)\|^2 \le \|X\|^2. \tag{8.30}$$

But when $Y \neq 0$

$$\|P_Y(X)\|^2 = \left\| \frac{X \cdot Y}{Y \cdot Y}\, Y \right\|^2 = \left( \frac{X \cdot Y}{Y \cdot Y}\, Y \right) \cdot \left( \frac{X \cdot Y}{Y \cdot Y}\, Y \right) = \frac{(X \cdot Y)(X \cdot Y)}{Y \cdot Y}. \tag{8.31}$$

Substituting $\|X\|^2 = X \cdot X$ and the last expression from equation 8.31 into inequality 8.30 yields the asserted inequality 8.29, proving the Schwartz inequality for vectors.

We may think of the Schwartz inequality as the assertion that the magnitude

$$|\cos(X, Y)| = \frac{\|P_Y(X)\|}{\|X\|} \tag{8.32}$$

of the cosine¹ determined by the vectors X and Y does not exceed 1. Here, writing $P_Y(X) = \alpha Y$ (see equation 8.19 on page 276, yielding the formula $\alpha = (X \cdot Y)/(Y \cdot Y)$), we provide a sign for $\cos(X, Y)$ by requiring $\cos(X, Y) < 0$ if $\alpha < 0$ and $\cos(X, Y) > 0$ if $\alpha > 0$, as illustrated in Figure 8-10; this yields the property that the cosine is negative when the vector Y and the projection of X on Y point in opposite directions (i.e., are negative multiples of each other).

1. Note that here we are not referring to the cosine of an angle, but the ratio of the signed leg (projection) to the hypotenuse length, a geometric configuration not requiring consideration of angles.
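The signed ratio just described can be computed directly from the projection. The following is a minimal sketch, assuming the projection formula P_Y(X) = ((X · Y)/(Y · Y)) Y cited above; the function names and the sample vectors are illustrative and do not come from the text.

```python
import math

def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))

def norm(a):
    return math.sqrt(dot(a, a))

def projection(x, y):
    """Return (alpha, P_Y(X)) with P_Y(X) = alpha * Y; requires Y != 0."""
    alpha = dot(x, y) / dot(y, y)
    return alpha, tuple(alpha * yi for yi in y)

def signed_cosine(x, y):
    """Signed leg ||P_Y(X)|| over hypotenuse ||X||; requires X != 0 and Y != 0."""
    alpha, p = projection(x, y)
    magnitude = norm(p) / norm(x)
    if alpha > 0:
        return magnitude      # projection points along Y
    if alpha < 0:
        return -magnitude     # projection points opposite to Y
    return 0.0                # projection is the zero vector

c_pos = signed_cosine((3.0, 4.0), (1.0, 0.0))
c_neg = signed_cosine((-3.0, 4.0), (1.0, 0.0))
print(c_pos, c_neg)
```

For these sample vectors the leg has length 3 and the hypotenuse length 5, so the two ratios have magnitude 0.6 and opposite signs, and neither magnitude exceeds 1, as the Schwartz inequality requires.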

Figure 8-10: Positive cosine and negative cosine (two sketches of the vectors X and Y).

It's useful to introduce the signum function at this point to write down a convenient representation of the dot product.

Definition 8.20: sgn(a)

We define the signum function, sgn, of a real variable, by

$$\operatorname{sgn}(a) = \begin{cases} 1 & \text{if } a > 0 \\ 0 & \text{if } a = 0 \\ -1 & \text{if } a < 0. \end{cases}$$

From the arguments above, in particular equation 8.31, we see that

$$\cos(X, Y) = \operatorname{sgn}(X \cdot Y)\, \frac{\|P_Y(X)\|}{\|X\|} = \operatorname{sgn}(X \cdot Y)\, \frac{|X \cdot Y|}{\|X\|\,\|Y\|} = \frac{X \cdot Y}{\|X\|\,\|Y\|}.$$

That is, for neither X nor Y the 0 vector

$$\cos(X, Y) = \frac{X \cdot Y}{\|X\|\,\|Y\|} \quad \text{or equivalently} \quad X \cdot Y = \|X\|\,\|Y\| \cos(X, Y). \tag{8.33}$$

We should think of equation 8.33 as the definition of the cosine defined by two vectors, representing the ratio of the signed leg (projection) to the hypotenuse length; i.e., the algebraic realization of a geometric concept.

The Schwartz inequality may be extended to integrals, which is the setting where it finds most of its application. The precise statement is as follows:


Theorem 8.21: Schwartz inequality for integrals

If f and g are real valued functions of a real variable (Definition 8.1 on page 261) continuous at each x (see Definition 7.2 on page 213), in the closed bounded interval [a; b] (Definition 3.11 on page 88) then (Definition 4.2 on page 102)

$$\left( \int_a^b f g \right)^2 \le \left( \int_a^b f^2 \right) \left( \int_a^b g^2 \right). \tag{8.34}$$

This follows by applying the Schwartz inequality for vectors (Theorem 8.19 on page 282) to

$$X = (f_0, f_1, \ldots, f_{n-1}) \quad \text{and} \quad Y = (g_0, g_1, \ldots, g_{n-1}),$$

where

$$f_k = f(a + kh), \quad g_k = g(a + kh), \quad \text{and} \quad h = \frac{b - a}{n},$$

which yields

$$\left( \sum_{k=0}^{n-1} h\, f(a + kh)\, g(a + kh) \right)^2 \le \left( \sum_{k=0}^{n-1} h\, f^2(a + kh) \right) \left( \sum_{k=0}^{n-1} h\, g^2(a + kh) \right).$$

Since these sums are Riemann approximating sums (Definition 4.1 on page 101) for the integrals of inequality 8.34, letting n grow arbitrarily large in the inequality above leads to the sought-after establishment of inequality 8.34. As before, because of the assumed continuity, equality holds only if one of the functions involved is a scalar multiple of the other.

This version of the Schwartz inequality can sometimes be applied to obtain simple useful bounds for integrals which are hard to evaluate.

Example 8.7: Upper bound for $\dfrac{1}{\sqrt{2\pi}} \displaystyle\int_1^2 e^{-x^2/2}\, dx$

Write the integrand as

$$\frac{1}{\sqrt{2\pi x}} \cdot \sqrt{x}\, e^{-x^2/2}.$$

So

$$\left( \frac{1}{\sqrt{2\pi}} \int_1^2 e^{-x^2/2}\, dx \right)^2 \le \frac{1}{2\pi} \left( \int_1^2 \frac{dx}{x} \right) \left( \int_1^2 x\, e^{-x^2}\, dx \right) = \frac{\ln(2)}{2\pi} \left( \frac{1}{2} \left[ e^{-1} - e^{-4} \right] \right) \approx (0.1389)^2.$$

The actual value of the integral of interest is 0.1359.


Note that the more nearly parallel the two factors you choose, the better

the bound you get.
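The numbers in Example 8.7, and the remark about nearly parallel factors, can be reproduced with a few lines of code. This is a sketch under stated assumptions rather than part of the original example: the exact value is obtained from the standard error function via the identity Φ(z) = ½(1 + erf(z/√2)) for the standard normal distribution function, and the second, cruder factorization (the factors 1 and the whole integrand) is chosen here only for comparison. All variable names are illustrative.

```python
import math

def phi(z):
    """Standard normal distribution function, expressed through math.erf."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

# Exact value of (1/sqrt(2*pi)) * integral from 1 to 2 of exp(-x**2/2) dx.
exact = phi(2.0) - phi(1.0)

# Factors used in Example 8.7: 1/sqrt(2*pi*x) and sqrt(x)*exp(-x**2/2).
int_f2 = math.log(2.0) / (2.0 * math.pi)          # integral of 1/(2*pi*x) over [1, 2]
int_g2 = 0.5 * (math.exp(-1.0) - math.exp(-4.0))  # integral of x*exp(-x**2) over [1, 2]
bound_example = math.sqrt(int_f2 * int_g2)

# Assumed comparison factors: 1 and exp(-x**2/2)/sqrt(2*pi).
# The integral of exp(-x**2) over [1, 2] is (sqrt(pi)/2) * (erf(2) - erf(1)).
int_one = 1.0                                     # integral of 1**2 over [1, 2]
int_sq = (math.sqrt(math.pi) / 2.0) * (math.erf(2.0) - math.erf(1.0)) / (2.0 * math.pi)
bound_crude = math.sqrt(int_one * int_sq)

print(f"exact value       : {exact:.4f}")
print(f"Example 8.7 bound : {bound_example:.4f}")
print(f"cruder bound      : {bound_crude:.4f}")
```

Both bounds lie above the exact value, and the factorization of Example 8.7 gives the tighter of the two.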

Exercises 8.8

Use the Schwartz inequality for integrals, Theorem 8.21, to find upper bounds for the following. If possible, use some numerical integration scheme to determine how good these bounds are.

1. $\displaystyle\int_1^2 e^{x}\, dx$

2. $\displaystyle\int_1^{1.5} \sin(x^3)\, dx$

3. $\displaystyle\int_1^2 \frac{dx}{x^{0.5} + 1}$
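For the numerical check these exercises ask for, one option is to reuse the Riemann approximating sums from the proof of Theorem 8.21. The sketch below is illustrative only: the helper names, the choice n = 2000, and the deliberately crude factorization of the integrand sin(x³) on [1, 1.5] into f(x) = 1 and g(x) = sin(x³) are assumptions made here, not a worked solution from the text.

```python
import math

def riemann_sum(func, a, b, n):
    """Left-endpoint Riemann sum: sum of h * func(a + k*h) for k = 0..n-1, h = (b - a)/n."""
    h = (b - a) / n
    return sum(h * func(a + k * h) for k in range(n))

def schwartz_sides(f, g, a, b, n=2000):
    """Return both sides of the discrete inequality used to prove Theorem 8.21."""
    left = riemann_sum(lambda x: f(x) * g(x), a, b, n) ** 2
    right = (riemann_sum(lambda x: f(x) ** 2, a, b, n)
             * riemann_sum(lambda x: g(x) ** 2, a, b, n))
    return left, right

def f(x):
    return 1.0                 # assumed first factor

def g(x):
    return math.sin(x ** 3)    # assumed second factor

left, right = schwartz_sides(f, g, 1.0, 1.5)
estimate = math.sqrt(left)     # magnitude of the Riemann estimate of the integral
bound = math.sqrt(right)       # Schwartz upper bound for that magnitude

print(f"Riemann estimate of the integral: {estimate:.4f}")
print(f"Schwartz bound                  : {bound:.4f}")
```

Trying other factorizations f · g of the same integrand shows how the gap between the estimate and the bound changes with the choice of factors.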
