CS545-Contents IX: Inverse Kinematics

CS545Contents IX

!!

Inverse Kinematics

!! !!

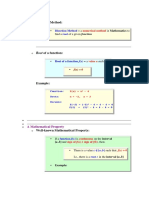

Analytical Methods Iterative (Differential) Methods

"! "! "! "! "!

Geometric and Analytical Jacobian Jacobian Transpose Method Pseudo-Inverse Pseudo-Inverse with Optimization Extended Jacobian Method See http://www-clmc.usc.edu/~cs545

!!

Reading Assignment for Next Class

"!

The Inverse Kinematics Problem

- Direct Kinematics: $x = f(\theta)$
- Inverse Kinematics: $\theta = f^{-1}(x)$
- Possible Problems of Inverse Kinematics
  - Multiple solutions
  - Infinitely many solutions
  - No solutions
  - No closed-form (analytical) solution

Analytical (Algebraic) Solutions

- Analytically invert the direct kinematics equations and enumerate all solution branches
  - Note: this only works if the number of constraints is the same as the number of degrees of freedom of the robot
- What if not?
  - Iterative solutions
  - Invent artificial constraints
- Examples
  - 2-DOF arm
  - See S&S textbook 2.11 ff

Analytical Inverse Kinematics of a 2 DOF Arm

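[Figure: planar 2-DOF arm in the x-y plane with link lengths $l_1$, $l_2$ and the angles $\gamma$, $\epsilon$]

$$x = l_1 \cos\theta_1 + l_2 \cos(\theta_1 + \theta_2)$$

$$y = l_1 \sin\theta_1 + l_2 \sin(\theta_1 + \theta_2)$$

$$l = \sqrt{x^2 + y^2}$$

$$l_2^2 = l_1^2 + l^2 - 2 l_1 l \cos\gamma \;\Rightarrow\; \gamma = \arccos\left(\frac{l^2 + l_1^2 - l_2^2}{2 l_1 l}\right)$$

$$\frac{y}{x} = \tan\epsilon$$

Inverse Kinematics:

$$\theta_1 = \arctan\left(\frac{y}{x}\right) - \gamma$$

$$\theta_2 = \arctan\left(\frac{y - l_1 \sin\theta_1}{x - l_1 \cos\theta_1}\right) - \theta_1$$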
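The closed-form solution above can be sketched in a few lines of Python. This is a minimal illustration, not the course's reference code: the function names are made up for this example, and `math.atan2` is swapped in for the plain arctangent of the slide so that all four quadrants are handled.

```python
import math

def forward_kinematics(theta1, theta2, l1, l2):
    """Direct kinematics of the planar 2-DOF arm."""
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y

def inverse_kinematics(x, y, l1, l2):
    """One solution branch of the analytical inverse kinematics."""
    l = math.hypot(x, y)  # distance from the base to the target
    if l == 0.0:
        raise ValueError("target at the base: direction is undefined")
    cos_gamma = (l * l + l1 * l1 - l2 * l2) / (2.0 * l1 * l)
    if abs(cos_gamma) > 1.0:
        raise ValueError("target out of reach: no solution")
    gamma = math.acos(cos_gamma)
    # atan2 replaces arctan(y / x) to keep the correct quadrant (assumption of this sketch)
    theta1 = math.atan2(y, x) - gamma
    theta2 = math.atan2(y - l1 * math.sin(theta1),
                        x - l1 * math.cos(theta1)) - theta1
    return theta1, theta2

# Round trip: solve for a target, then check it with the direct kinematics.
theta1, theta2 = inverse_kinematics(1.0, 1.0, 1.0, 1.0)
x_check, y_check = forward_kinematics(theta1, theta2, 1.0, 1.0)
```

Using $\theta_1 = \arctan(y/x) + \gamma$ instead gives the mirrored solution branch, which is one way the "multiple solutions" case shows up for this arm.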

Iterative Solutions of Inverse Kinematics

- Resolved Motion Rate Control

$$\dot{x} = J(\theta)\,\dot{\theta} \;\Rightarrow\; \dot{\theta} = J(\theta)^{\#}\,\dot{x}$$

- Properties
  - Only holds for high sampling rates or low Cartesian velocities
  - Is a local solution that may be globally inappropriate
  - Problems with singular postures
  - Can be used in two ways:
    - As an instantaneous solution of which way to take
    - As a batch iteration method to find the correct configuration at a target

Essential in Resolved Motion Rate Methods: The Jacobian

- Jacobian of direct kinematics (analytical Jacobian):

$$x = f(\theta) \;\Rightarrow\; \frac{\partial x}{\partial \theta} = \frac{\partial f(\theta)}{\partial \theta} = J(\theta)$$
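As a concrete instance, the analytical Jacobian of the planar 2-DOF arm can be written out and checked against central finite differences. This is only a sketch: the function names, the unit link lengths, and the step size `eps` are assumptions made for the example.

```python
import math

def forward_kinematics(theta, l1=1.0, l2=1.0):
    """x = f(theta) for the planar 2-DOF arm (link lengths are assumed values)."""
    t1, t2 = theta
    return [l1 * math.cos(t1) + l2 * math.cos(t1 + t2),
            l1 * math.sin(t1) + l2 * math.sin(t1 + t2)]

def analytical_jacobian(theta, l1=1.0, l2=1.0):
    """J(theta) = df/dtheta, differentiated by hand."""
    t1, t2 = theta
    s1, c1 = math.sin(t1), math.cos(t1)
    s12, c12 = math.sin(t1 + t2), math.cos(t1 + t2)
    return [[-l1 * s1 - l2 * s12, -l2 * s12],
            [l1 * c1 + l2 * c12, l2 * c12]]

def numerical_jacobian(f, theta, eps=1e-6):
    """Central-difference approximation of df/dtheta."""
    m, n = len(f(theta)), len(theta)
    jac = [[0.0] * n for _ in range(m)]
    for j in range(n):
        plus, minus = list(theta), list(theta)
        plus[j] += eps
        minus[j] -= eps
        f_plus, f_minus = f(plus), f(minus)
        for i in range(m):
            jac[i][j] = (f_plus[i] - f_minus[i]) / (2.0 * eps)
    return jac

theta = [0.3, 0.5]
J_exact = analytical_jacobian(theta)
J_approx = numerical_jacobian(forward_kinematics, theta)
max_error = max(abs(J_exact[i][j] - J_approx[i][j])
                for i in range(2) for j in range(2))
```

A finite-difference check like this is a cheap way to catch sign errors in a hand-derived Jacobian before using it in any of the iterative methods.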

- In general, the Jacobian (for Cartesian positions and orientations) has the following form (geometrical Jacobian):

$p_i$ is the vector from the origin of the world coordinate system to the origin of the $i$-th link coordinate system, $p$ is the vector from the origin to the end of the end-effector, and $z$ is the $i$-th joint axis (p. 72, S&S).
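For reference, and assuming the slide follows the cited textbook, the geometric Jacobian in Sciavicco & Siciliano is assembled column by column, one column per joint. The textbook indexes the joint axis and the frame origin with $i-1$, which may differ from the indexing used on the slide:

$$J = \begin{bmatrix} J_{P1} & \cdots & J_{Pn} \\ J_{O1} & \cdots & J_{On} \end{bmatrix}, \qquad \begin{bmatrix} J_{Pi} \\ J_{Oi} \end{bmatrix} = \begin{cases} \begin{bmatrix} z_{i-1} \\ 0 \end{bmatrix} & \text{for a prismatic joint} \\[2ex] \begin{bmatrix} z_{i-1} \times (p - p_{i-1}) \\ z_{i-1} \end{bmatrix} & \text{for a revolute joint} \end{cases}$$

The upper block maps joint velocities to the linear velocity of the end-effector, the lower block to its angular velocity.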

The Jacobian Transpose Method

$$\Delta\theta = \alpha\, J^T(\theta)\, \Delta x$$

- Operating Principle:
  - Project the difference vector $\Delta x$ onto those dimensions of $\theta$ which can reduce it the most
- Advantages:
  - Simple computation (numerically robust)
  - No matrix inversions
- Disadvantages:
  - Needs many iterations until convergence in certain configurations (e.g., when the Jacobian has very small coefficients)
  - Unpredictable joint configurations
  - Non-conservative

Jacobian Transpose Derivation

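Minimize the cost function

$$F = \frac{1}{2}\left(x_{\mathrm{target}} - x\right)^T \left(x_{\mathrm{target}} - x\right) = \frac{1}{2}\left(x_{\mathrm{target}} - f(\theta)\right)^T \left(x_{\mathrm{target}} - f(\theta)\right)$$

with respect to $\theta$ by gradient descent:

$$\Delta\theta = -\alpha \left(\frac{\partial F}{\partial \theta}\right)^T = \alpha \left(\left(x_{\mathrm{target}} - x\right)^T \frac{\partial f(\theta)}{\partial \theta}\right)^T = \alpha\, J^T(\theta)\left(x_{\mathrm{target}} - x\right) = \alpha\, J^T(\theta)\, \Delta x$$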
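The gradient-descent update derived above can be run as a batch iteration. The sketch below does this for the planar 2-DOF arm; the link lengths, the step size `alpha`, the tolerance, and the start posture are illustrative assumptions, not values from the course.

```python
import math

L1, L2 = 1.0, 1.0  # assumed link lengths of the planar 2-DOF arm

def forward_kinematics(theta):
    """Direct kinematics x = f(theta)."""
    t1, t2 = theta
    return [L1 * math.cos(t1) + L2 * math.cos(t1 + t2),
            L1 * math.sin(t1) + L2 * math.sin(t1 + t2)]

def jacobian(theta):
    """Analytical Jacobian J(theta) = df/dtheta (2 x 2)."""
    t1, t2 = theta
    s1, c1 = math.sin(t1), math.cos(t1)
    s12, c12 = math.sin(t1 + t2), math.cos(t1 + t2)
    return [[-L1 * s1 - L2 * s12, -L2 * s12],
            [L1 * c1 + L2 * c12, L2 * c12]]

def jacobian_transpose_ik(x_target, theta_start, alpha=0.1, tol=1e-6, max_iter=10000):
    """Iterate delta_theta = alpha * J^T(theta) * delta_x until the error is small."""
    theta = list(theta_start)
    for iteration in range(max_iter):
        x = forward_kinematics(theta)
        dx = [x_target[0] - x[0], x_target[1] - x[1]]
        if math.hypot(dx[0], dx[1]) < tol:
            return theta, iteration
        J = jacobian(theta)
        for j in range(2):
            # j-th component of J^T * dx, scaled by the step size
            theta[j] += alpha * (J[0][j] * dx[0] + J[1][j] * dx[1])
    raise RuntimeError("no convergence within max_iter iterations")

theta_solution, iterations = jacobian_transpose_ik([1.0, 1.0], [0.3, 0.5])
x_reached = forward_kinematics(theta_solution)
```

No matrix is inverted anywhere in the loop, which is the main attraction of the method; the price is the large number of small steps needed to reach the tolerance.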

Jacobian Transpose Geometric Intuition

[Figure: end-effector position $x$ and Target]

The Pseudo Inverse Method

$$\Delta\theta = \alpha\, J^T(\theta)\left(J(\theta)\, J^T(\theta)\right)^{-1} \Delta x = \alpha\, J^{\#} \Delta x$$

- Operating Principle:
  - Shortest path in $\theta$-space
- Advantages:
  - Computationally fast (second order method)
- Disadvantages:
  - Matrix inversion necessary (numerical problems)
  - Unpredictable joint configurations
  - Non-conservative

Pseudo Inverse Method Derivation

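For a small step $\Delta x$, minimize with respect to $\Delta\theta$ the cost function:

$$F = \frac{1}{2}\Delta\theta^T \Delta\theta + \lambda^T\left(\Delta x - J(\theta)\,\Delta\theta\right)$$

where $\lambda^T$ is a vector of Lagrange multipliers. Solution:

$$(1)\quad \frac{\partial F}{\partial \lambda} = 0 \;\Rightarrow\; \Delta x = J\,\Delta\theta$$

$$(2)\quad \frac{\partial F}{\partial \Delta\theta} = 0 \;\Rightarrow\; \Delta\theta = J^T \lambda \;\Rightarrow\; J\,\Delta\theta = J J^T \lambda \;\Rightarrow\; \lambda = \left(J J^T\right)^{-1} J\,\Delta\theta$$

Insert (1) into (2):

$$(3)\quad \lambda = \left(J J^T\right)^{-1} \Delta x$$

Insertion of (3) into (2) gives the final result:

$$\Delta\theta = J^T \lambda = J^T \left(J J^T\right)^{-1} \Delta x$$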
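The final formula can be tried on a redundant arm, where it picks the smallest joint step that produces the requested Cartesian step. The sketch below assumes a planar 3-link arm with unit link lengths (so $J$ is $2 \times 3$) and inverts the $2 \times 2$ matrix $J J^T$ by hand; all names are made up for this example.

```python
import math

LINKS = (1.0, 1.0, 1.0)  # assumed link lengths of a planar 3-link arm

def jacobian(theta):
    """2 x n Jacobian of the tip position of a planar serial arm."""
    n = len(theta)
    cumulative, total = [], 0.0
    for t in theta:
        total += t
        cumulative.append(total)  # absolute angle of each link
    row_x = [-sum(LINKS[k] * math.sin(cumulative[k]) for k in range(j, n))
             for j in range(n)]
    row_y = [sum(LINKS[k] * math.cos(cumulative[k]) for k in range(j, n))
             for j in range(n)]
    return [row_x, row_y]

def pseudo_inverse_step(J, dx):
    """delta_theta = J^T (J J^T)^{-1} dx for a 2 x n Jacobian."""
    a = sum(v * v for v in J[0])
    b = sum(u * v for u, v in zip(J[0], J[1]))
    d = sum(v * v for v in J[1])
    det = a * d - b * b  # determinant of J J^T
    if abs(det) < 1e-12:
        raise ValueError("J J^T is singular: posture is at or near a singularity")
    # lambda = (J J^T)^{-1} dx, using the closed-form 2 x 2 inverse
    lam0 = (d * dx[0] - b * dx[1]) / det
    lam1 = (-b * dx[0] + a * dx[1]) / det
    return [J[0][j] * lam0 + J[1][j] * lam1 for j in range(len(J[0]))]

theta = [0.3, 0.5, 0.4]
dx = [0.01, -0.02]
J = jacobian(theta)
dtheta = pseudo_inverse_step(J, dx)
# The step reproduces the requested Cartesian displacement: J * dtheta = dx
achieved_dx = [sum(J[i][j] * dtheta[j] for j in range(3)) for i in range(2)]
```

The explicit singularity check mirrors the "numerical problems" listed as a disadvantage: near a singular posture the determinant of $J J^T$ approaches zero and the step blows up.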

Pseudo Inverse Geometric Intuition

[Figure: end-effector position $x$ and Target; start posture = desired posture for optimization]

Pseudo Inverse with explicit Optimization Criterion

$$\Delta\theta = \alpha\, J^{\#} \Delta x + \left(I - J^{\#} J\right)\left(\theta_0 - \theta\right)$$

- Operating Principle:
  - Optimization in null-space of Jacobian using a kinematic cost function $F = g(\theta)$, e.g., $F = \sum_{i=1}^{d} \left(\theta_i - \theta_{i,0}\right)^2$
- Advantages:
  - Computationally fast
  - Explicit optimization criterion provides control over arm configurations
- Disadvantages:
  - Numerical problems at singularities
  - Non-conservative

Pseudo Inverse Method & Optimization Derivation

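For a small step $\Delta x$, minimize with respect to $\Delta\theta$ the cost function:

$$F = \frac{1}{2}\left(\Delta\theta + \theta - \theta_0\right)^T \left(\Delta\theta + \theta - \theta_0\right) + \lambda^T\left(\Delta x - J(\theta)\,\Delta\theta\right)$$

where $\lambda^T$ is a vector of Lagrange multipliers. Solution:

$$(1)\quad \frac{\partial F}{\partial \lambda} = 0 \;\Rightarrow\; \Delta x = J\,\Delta\theta$$

$$(2)\quad \frac{\partial F}{\partial \Delta\theta} = 0 \;\Rightarrow\; \Delta\theta = J^T \lambda - \left(\theta - \theta_0\right) \;\Rightarrow\; J\,\Delta\theta = J J^T \lambda - J\left(\theta - \theta_0\right)$$

$$\Rightarrow\; \lambda = \left(J J^T\right)^{-1} J\,\Delta\theta + \left(J J^T\right)^{-1} J\left(\theta - \theta_0\right)$$

Insert (1) into (2):

$$(3)\quad \lambda = \left(J J^T\right)^{-1} \Delta x + \left(J J^T\right)^{-1} J\left(\theta - \theta_0\right)$$

Insertion of (3) into (2) gives the final result:

$$\Delta\theta = J^T \lambda - \left(\theta - \theta_0\right) = J^T \left(J J^T\right)^{-1} \Delta x + J^T \left(J J^T\right)^{-1} J\left(\theta - \theta_0\right) - \left(\theta - \theta_0\right) = J^{\#} \Delta x + \left(I - J^{\#} J\right)\left(\theta_0 - \theta\right)$$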
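A short sketch of this update, again for an assumed planar 3-link arm with unit link lengths. The null-space term is computed as $v - J^{\#}(J v)$ with $v = \theta_0 - \theta$, which avoids forming the matrix $I - J^{\#} J$ explicitly; the function names and the chosen postures are illustrative only.

```python
import math

LINKS = (1.0, 1.0, 1.0)  # assumed link lengths of a planar 3-link arm

def jacobian(theta):
    """2 x n Jacobian of the tip position of a planar serial arm."""
    n = len(theta)
    cumulative, total = [], 0.0
    for t in theta:
        total += t
        cumulative.append(total)
    row_x = [-sum(LINKS[k] * math.sin(cumulative[k]) for k in range(j, n))
             for j in range(n)]
    row_y = [sum(LINKS[k] * math.cos(cumulative[k]) for k in range(j, n))
             for j in range(n)]
    return [row_x, row_y]

def mat_vec(J, w):
    """Matrix-vector product J * w."""
    return [sum(Jij * wj for Jij, wj in zip(row, w)) for row in J]

def pseudo_inverse_apply(J, v):
    """J^# v = J^T (J J^T)^{-1} v for a 2 x n Jacobian."""
    a = sum(x * x for x in J[0])
    b = sum(x * y for x, y in zip(J[0], J[1]))
    d = sum(y * y for y in J[1])
    det = a * d - b * b
    if abs(det) < 1e-12:
        raise ValueError("J J^T is singular")
    lam0 = (d * v[0] - b * v[1]) / det
    lam1 = (-b * v[0] + a * v[1]) / det
    return [J[0][j] * lam0 + J[1][j] * lam1 for j in range(len(J[0]))]

def null_space_step(theta, dx, theta_0):
    """delta_theta = J^# dx + (I - J^# J)(theta_0 - theta)."""
    J = jacobian(theta)
    task_term = pseudo_inverse_apply(J, dx)
    posture_error = [t0 - t for t0, t in zip(theta_0, theta)]
    # (I - J^# J) v computed as v - J^# (J v)
    projected = pseudo_inverse_apply(J, mat_vec(J, posture_error))
    null_term = [v - p for v, p in zip(posture_error, projected)]
    return [a + b for a, b in zip(task_term, null_term)]

theta = [0.3, 0.5, 0.4]
theta_0 = [0.6, 0.2, 0.4]  # assumed desired posture
dx = [0.01, -0.02]
dtheta = null_space_step(theta, dx, theta_0)
achieved_dx = mat_vec(jacobian(theta), dtheta)

# With dx = 0 the arm only moves in the null space, toward theta_0.
dtheta_null = null_space_step(theta, [0.0, 0.0], theta_0)
cost_before = sum((t - t0) ** 2 for t, t0 in zip(theta, theta_0))
cost_after = sum((t + dt - t0) ** 2 for t, dt, t0 in zip(theta, dtheta_null, theta_0))
```

Because $J(I - J^{\#} J) = 0$, the posture term does not disturb the Cartesian step to first order, which is why the two goals can be combined in a single update.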

The Extended Jacobian Method

$$\Delta\theta = \alpha \left(J^{ext.}(\theta)\right)^{-1} \Delta x^{ext.}$$

- Operating Principle:
  - Optimization in null-space of Jacobian using a kinematic cost function $F = g(\theta)$, e.g., $F = \sum_{i=1}^{d} \left(\theta_i - \theta_{i,0}\right)^2$
- Advantages:
  - Computationally fast (second order method)
  - Explicit optimization criterion provides control over arm configurations
  - Numerically robust
  - Conservative
- Disadvantages:
  - Computationally expensive matrix inversion necessary (singular value decomposition)
  - Note: new and better extended Jacobian algorithms exist

Extended Jacobian Method Derivation

The forward kinematics $x = f(\theta)$ is a mapping $\Re^n \rightarrow \Re^m$, e.g., from an $n$-dimensional joint space to an $m$-dimensional Cartesian space. The singular value decomposition of the Jacobian of this mapping is:

$$J(\theta) = U S V^T$$

The columns $[V]_i$ of $V$ (i.e., the rows of $V^T$) whose corresponding entry in the diagonal matrix $S$ is zero are the vectors which span the Null space of $J(\theta)$. There must be (at least) $n-m$ such vectors ($n \ge m$). Denote these vectors $n_i$, $i \in [1, n-m]$.

The goal of the extended Jacobian method is to augment the rank-deficient Jacobian such that it becomes properly invertible. In order to do this, a cost function $F = g(\theta)$ has to be defined which is to be minimized with respect to $\theta$ in the Null space. Minimization of $F$ must always yield:

$$\frac{\partial F}{\partial \theta} = \frac{\partial g}{\partial \theta} = 0$$

Since we are only interested in zeroing the gradient in Null space, we project this gradient onto the Null space basis vectors:

$$G_i = \frac{\partial g}{\partial \theta}\, n_i$$

If all $G_i$ equal zero, the cost function $F$ is minimized in Null space. Thus we obtain the following set of equations which are to be fulfilled by the inverse kinematics solution:

$$\begin{pmatrix} f(\theta) \\ G_1 \\ \vdots \\ G_{n-m} \end{pmatrix} = \begin{pmatrix} x \\ 0 \\ \vdots \\ 0 \end{pmatrix}$$

For an incremental step $\Delta x$, this system can be linearized:

$$\begin{pmatrix} J(\theta) \\ \partial G_1 / \partial \theta \\ \vdots \\ \partial G_{n-m} / \partial \theta \end{pmatrix} \Delta\theta = \begin{pmatrix} \Delta x \\ 0 \\ \vdots \\ 0 \end{pmatrix} \quad \text{or} \quad J^{ext.}\, \Delta\theta = \Delta x^{ext.}$$

The unique solution of these equations is:

$$\Delta\theta = \left(J^{ext.}\right)^{-1} \Delta x^{ext.}$$
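A single extended-Jacobian step can be sketched for an assumed planar 3-link arm ($n = 3$, $m = 2$, so there is one null-space vector). Two simplifications are made here and are not from the slides: the null-space vector is taken as the unnormalized cross product of the two Jacobian rows instead of coming from an SVD, and $\partial G / \partial \theta$ is approximated by central differences. All names and numeric values are illustrative.

```python
import math

LINKS = (1.0, 1.0, 1.0)    # assumed link lengths (planar 3-link arm)
THETA_0 = (0.0, 0.0, 0.0)  # assumed reference posture in the cost g(theta)

def jacobian(theta):
    """2 x 3 Jacobian of the tip position."""
    cumulative, total = [], 0.0
    for t in theta:
        total += t
        cumulative.append(total)
    row_x = [-sum(LINKS[k] * math.sin(cumulative[k]) for k in range(j, 3)) for j in range(3)]
    row_y = [sum(LINKS[k] * math.cos(cumulative[k]) for k in range(j, 3)) for j in range(3)]
    return [row_x, row_y]

def null_vector(theta):
    """Vector spanning the null space of J: cross product of its two rows."""
    a, b = jacobian(theta)
    return [a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0]]

def projected_gradient(theta):
    """G = (dg/dtheta) . n for g(theta) = sum_i (theta_i - theta_i0)^2."""
    grad_g = [2.0 * (t - t0) for t, t0 in zip(theta, THETA_0)]
    return sum(g * n for g, n in zip(grad_g, null_vector(theta)))

def extended_jacobian(theta, eps=1e-6):
    """Stack J(theta) on top of dG/dtheta (central differences): 3 x 3."""
    grad_G = []
    for j in range(3):
        plus, minus = list(theta), list(theta)
        plus[j] += eps
        minus[j] -= eps
        grad_G.append((projected_gradient(plus) - projected_gradient(minus)) / (2.0 * eps))
    return jacobian(theta) + [grad_G]

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [list(row) + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("extended Jacobian is singular")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x

theta = [0.3, 0.5, 0.4]
dx_ext = [0.01, -0.02, 0.0]  # [delta_x; 0]
J_ext = extended_jacobian(theta)
dtheta = solve(J_ext, dx_ext)
residual = [sum(J_ext[i][j] * dtheta[j] for j in range(3)) - dx_ext[i] for i in range(3)]
```

The zero in the last entry of `dx_ext` asks that $G$ not change to first order, so the step keeps the posture optimal only if the arm already starts from a posture where $G = 0$.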

Extended Jacobian Geometric Intuition

[Figure: end-effector position $x$ and Target; start posture and desired posture for optimization]
