NLP Nctu

College of Management, NCTU

Operation Research II

Spring, 2009

Chap12 Nonlinear Programming

• General Form of Nonlinear Programming Problems

$$
\begin{aligned}
\text{Max } \; & f(x) \\
\text{S.T. } \; & g_i(x) \le b_i, \quad i = 1, \dots, m \\
& x \ge 0
\end{aligned}
$$

  • No algorithm that will solve every specific problem fitting this format is available.
• An Example: The Product-Mix Problem with Price Elasticity
  • The amount of a product that can be sold has an inverse relationship to the price charged. That is, the relationship between demand and price is an inverse curve.
  • The firm's profit from producing and selling $x$ units is the sales revenue $x\,p(x)$ minus the production costs. That is, $P(x) = x\,p(x) - cx$.
  • If each of the firm's products has a similar profit function, say $P_j(x_j)$ for producing and selling $x_j$ units of product $j$, then the overall objective function is $f(x) = \sum_{j=1}^{n} P_j(x_j)$, a sum of nonlinear functions.
  • Nonlinearities also may arise in the $g_i(x)$ constraint functions.

Jin Y. Wang


• An Example: The Transportation Problem with Volume Discounts
  • Determine an optimal plan for shipping goods from various sources to various destinations, given supply and demand constraints.
  • In actuality, the shipping costs may not be fixed. Volume discounts sometimes are available for large shipments, which leads to a piecewise linear cost function.
• Graphical Illustration of Nonlinear Programming Problems

$$
\begin{aligned}
\text{Max } \; & Z = 3x_1 + 5x_2 \\
\text{S.T. } \; & x_1 \le 4 \\
& 9x_1^2 + 5x_2^2 \le 216 \\
& x_1, x_2 \ge 0
\end{aligned}
$$

  • The optimal solution is no longer guaranteed to be a CPF (corner-point feasible) solution. (Sometimes it is; sometimes it isn't.) Here it still lies on the boundary of the feasible region.
  • We no longer have the tremendous simplification used in LP of limiting the search for an optimal solution to just the CPF solutions.
  • What if the constraints are linear but the objective function is not?


$$
\begin{aligned}
\text{Max } \; & Z = 126x_1 - 9x_1^2 + 182x_2 - 13x_2^2 \\
\text{S.T. } \; & x_1 \le 4 \\
& 2x_2 \le 12 \\
& 3x_1 + 2x_2 \le 18 \\
& x_1, x_2 \ge 0
\end{aligned}
$$

  • What if we change the objective function to $54x_1 - 9x_1^2 + 78x_2 - 13x_2^2$?
  • The optimal solution then lies inside the feasible region.
  • That means we cannot focus only on the boundary of the feasible region. We need to look at the entire feasible region.
• A local optimum need not be a global optimum, which complicates matters further.
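The contrast between a boundary optimum and an interior optimum can be checked numerically. The following is a minimal sketch, assuming a brute-force grid search is acceptable for a two-variable illustration; the function name `grid_search` and the grid resolution are choices made here, not part of the lecture.

```python
def grid_search(objective, constraints, upper, steps_per_unit=50):
    """Brute-force maximization over a grid on [0, upper[0]] x [0, upper[1]].

    Each constraint is a function g(x1, x2) that must satisfy g(x1, x2) <= 0.
    Returns (best_value, (x1, x2)). This is only an illustration, not an
    efficient nonlinear programming algorithm.
    """
    best_value, best_point = float("-inf"), None
    for i in range(upper[0] * steps_per_unit + 1):
        x1 = i / steps_per_unit
        for j in range(upper[1] * steps_per_unit + 1):
            x2 = j / steps_per_unit
            # Small tolerance so points exactly on a constraint line count as feasible.
            if all(g(x1, x2) <= 1e-9 for g in constraints):
                value = objective(x1, x2)
                if value > best_value:
                    best_value, best_point = value, (x1, x2)
    return best_value, best_point


# Linear constraints shared by both examples, written as g(x) <= 0.
constraints = [
    lambda x1, x2: x1 - 4,
    lambda x1, x2: 2 * x2 - 12,
    lambda x1, x2: 3 * x1 + 2 * x2 - 18,
]

def boundary_objective(x1, x2):
    return 126 * x1 - 9 * x1**2 + 182 * x2 - 13 * x2**2

def interior_objective(x1, x2):
    return 54 * x1 - 9 * x1**2 + 78 * x2 - 13 * x2**2

boundary_value, boundary_point = grid_search(boundary_objective, constraints, (4, 6))
interior_value, interior_point = grid_search(interior_objective, constraints, (4, 6))
print("first objective :", boundary_point, boundary_value)
print("second objective:", interior_point, interior_value)
```

With the first objective the best grid point sits on the line $3x_1 + 2x_2 = 18$ near $(8/3, 5)$, which is not a corner point. With the second objective the best point has slack in every constraint.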


• Nonlinear programming algorithms generally are unable to distinguish between a local optimum and a global optimum.
• It is therefore desirable to know the conditions under which any local optimum is guaranteed to be a global optimum.
• If a nonlinear programming problem has no constraints, the objective function being concave (convex) guarantees that a local maximum (minimum) is a global maximum (minimum).
• What is a concave (convex) function?
  • A function that is always curving downward (or not curving at all) is called a concave function.
  • A function that is always curving upward (or not curving at all) is called a convex function.
  • A function that curves upward in some places and downward in others is neither concave nor convex.
• Definition of concave and convex functions of a single variable
  • A function of a single variable $f(x)$ is a convex function if, for each pair of values of $x$, say $x'$ and $x''$ ($x' < x''$),

$$
f[\lambda x'' + (1 - \lambda) x'] \le \lambda f(x'') + (1 - \lambda) f(x')
$$

    for all values of $\lambda$ such that $0 < \lambda < 1$.
  • It is a strictly convex function if $\le$ can be replaced by $<$.
  • It is a concave function if this statement holds when $\le$ is replaced by $\ge$ ($>$ for the strictly concave case).
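The defining inequality can be probed numerically on sample points. The sketch below assumes a finite sample is good enough for illustration; passing the check does not prove convexity, but a single violation disproves it. The helper name `satisfies_convexity_inequality` is hypothetical.

```python
def satisfies_convexity_inequality(f, points, lambdas, tol=1e-12):
    """Check f[lam*x2 + (1-lam)*x1] <= lam*f(x2) + (1-lam)*f(x1) on samples.

    Returns False as soon as one sampled pair (x1 < x2) and one lam in (0, 1)
    violates the inequality. A True result is only evidence, not a proof.
    """
    for x1 in points:
        for x2 in points:
            if x1 >= x2:
                continue
            for lam in lambdas:
                left = f(lam * x2 + (1 - lam) * x1)
                right = lam * f(x2) + (1 - lam) * f(x1)
                if left > right + tol:
                    return False
    return True


sample_points = [k / 4 for k in range(-8, 9)]      # -2.0, -1.75, ..., 2.0
sample_lambdas = [k / 10 for k in range(1, 10)]    # 0.1, ..., 0.9

print(satisfies_convexity_inequality(lambda x: x**2, sample_points, sample_lambdas))
print(satisfies_convexity_inequality(lambda x: x**3, sample_points, sample_lambdas))
```

To test concavity with the same helper, pass the negated function, since $f$ is concave exactly when $-f$ is convex.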


• The geometric interpretation of concave and convex functions.
• How to judge whether a single-variable function is convex or concave?
  • Consider any function of a single variable $f(x)$ that possesses a second derivative at all possible values of $x$. Then $f(x)$ is
    • convex if and only if $\dfrac{d^2 f(x)}{dx^2} \ge 0$ for all possible values of $x$;
    • concave if and only if $\dfrac{d^2 f(x)}{dx^2} \le 0$ for all possible values of $x$.
• How to judge whether a two-variable function is convex or concave?
  • If the derivatives exist, the following table can be used to determine whether a two-variable function is concave or convex. The conditions must hold for all possible values of $x_1$ and $x_2$.

| Quantity | Convex | Concave |
|---|---|---|
| $\dfrac{\partial^2 f(x_1, x_2)}{\partial x_1^2} \dfrac{\partial^2 f(x_1, x_2)}{\partial x_2^2} - \left[ \dfrac{\partial^2 f(x_1, x_2)}{\partial x_1 \partial x_2} \right]^2$ | $\ge 0$ | $\ge 0$ |
| $\dfrac{\partial^2 f(x_1, x_2)}{\partial x_1^2}$ | $\ge 0$ | $\le 0$ |
| $\dfrac{\partial^2 f(x_1, x_2)}{\partial x_2^2}$ | $\ge 0$ | $\le 0$ |


  • Example: $f(x_1, x_2) = x_1^2 - 2x_1 x_2 + x_2^2$
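For a quadratic function the second partial derivatives are constants, so the two-variable test reduces to three numbers. The following sketch applies the test to this example; the function name `classify_two_variable` is an assumption made for illustration, and for non-quadratic functions the same conditions would have to hold at every point, not just one.

```python
def classify_two_variable(f11, f22, f12):
    """Apply the two-variable second-derivative test to one set of values.

    f11 = d2f/dx1^2, f22 = d2f/dx2^2, f12 = d2f/(dx1 dx2).
    Returns (is_convex, is_concave) for these values of the second partials.
    """
    determinant = f11 * f22 - f12**2
    is_convex = determinant >= 0 and f11 >= 0 and f22 >= 0
    is_concave = determinant >= 0 and f11 <= 0 and f22 <= 0
    return is_convex, is_concave


# f(x1, x2) = x1^2 - 2*x1*x2 + x2^2 has f11 = 2, f22 = 2, f12 = -2,
# so the determinant is 2*2 - (-2)^2 = 0.
print(classify_two_variable(2, 2, -2))
```

The determinant is exactly zero here, so the function is convex but not strictly convex, which matches the fact that $f(x_1, x_2) = (x_1 - x_2)^2$ is flat along the line $x_1 = x_2$.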

• How to judge whether a multivariable function is convex or concave?
  • The sum of convex functions is a convex function, and the sum of concave functions is a concave function.
  • Example: $f(x_1, x_2, x_3) = 4x_1 - x_1^2 - (x_2 - x_3)^2 = [4x_1 - x_1^2] + [-(x_2 - x_3)^2]$. Both bracketed terms are concave, so $f$ is concave.
• If there are constraints, then one more condition will provide the guarantee, namely, that the feasible region is a convex set.
• Convex set
  • A convex set is a collection of points such that, for each pair of points in the collection, the entire line segment joining these two points is also in the collection.
  • In general, the feasible region for a nonlinear programming problem is a convex set whenever all the $g_i(x)$ (for the constraints $g_i(x) \le b_i$) are convex.

$$
\begin{aligned}
\text{Max } \; & Z = 3x_1 + 5x_2 \\
\text{S.T. } \; & x_1 \le 4 \\
& 9x_1^2 + 5x_2^2 \le 216 \\
& x_1, x_2 \ge 0
\end{aligned}
$$


• What happens when just one of these $g_i(x)$ is a concave function instead?

$$
\begin{aligned}
\text{Max } \; & Z = 3x_1 + 5x_2 \\
\text{S.T. } \; & x_1 \le 4 \\
& 2x_2 \le 14 \\
& 8x_1 - x_1^2 + 14x_2 - x_2^2 \le 49 \\
& x_1, x_2 \ge 0
\end{aligned}
$$

  • The feasible region is not a convex set.
  • Under this circumstance, we cannot guarantee that a local maximum is a global maximum.
• Condition for local maximum = global maximum (with $g_i(x) \le b_i$ constraints)
  • To guarantee that a local maximum is a global maximum for a nonlinear programming problem with constraints $g_i(x) \le b_i$ and $x \ge 0$, the objective function $f(x)$ must be a concave function and each $g_i(x)$ must be a convex function.
  • Such a problem is called a convex programming problem.
• One-Variable Unconstrained Optimization
  • The differentiable function $f(x)$ to be maximized is concave.
  • The necessary and sufficient condition for $x = x^*$ to be optimal (a global maximum) is $\dfrac{df}{dx} = 0$ at $x = x^*$.
  • It is usually not very easy to solve the above equation analytically.
• The One-Dimensional Search Procedure
  • Find a sequence of trial solutions that leads toward an optimal solution.
  • Use the sign of the derivative to determine where to move. A positive derivative indicates that $x^*$ is greater than $x$; a negative derivative indicates that $x^*$ is less than $x$.


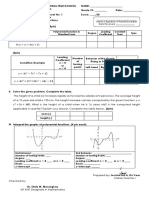

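• The Bisection Method
  • Initialization: Select $\varepsilon$ (error tolerance). Find an initial $\underline{x}$ (lower bound on $x^*$) and $\overline{x}$ (upper bound on $x^*$) by inspection. Set the initial trial solution $x' = \dfrac{\underline{x} + \overline{x}}{2}$.
  • Iteration:
    • Evaluate $\dfrac{df(x)}{dx}$ at $x = x'$.
    • If $\dfrac{df(x)}{dx} \ge 0$, reset $\underline{x} = x'$.
    • If $\dfrac{df(x)}{dx} \le 0$, reset $\overline{x} = x'$.
    • Select a new $x' = \dfrac{\underline{x} + \overline{x}}{2}$.
  • Stopping Rule: If $\overline{x} - \underline{x} \le 2\varepsilon$, so that the new $x'$ must be within $\varepsilon$ of $x^*$, stop. Otherwise, perform another iteration.
  • Example: Max $f(x) = 12x - 3x^4 - 2x^6$

| Iteration | $df(x)/dx$ | $\underline{x}$ | $\overline{x}$ | New $x'$ | $f(x')$ |
|---|---|---|---|---|---|
| 0 | | | | | |
| 1 | | | | | |
| 2 | | | | | |
| 3 | 4.09 | 0.75 | 1 | 0.875 | 7.8439 |
| 4 | -2.19 | 0.75 | 0.875 | 0.8125 | 7.8672 |
| 5 | 1.31 | 0.8125 | 0.875 | 0.84375 | 7.8829 |
| 6 | -0.34 | 0.8125 | 0.84375 | 0.828125 | 7.8815 |
| 7 | 0.51 | 0.828125 | 0.84375 | 0.8359375 | 7.8839 |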
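The procedure above can be sketched in a few lines of Python. The initial bounds $\underline{x} = 0$, $\overline{x} = 2$ and the tolerance $\varepsilon = 0.01$ are assumptions made here (the text does not state them); they were chosen because they reproduce the bounds shown in rows 3 to 7 of the table. The function name `bisection` and the history layout are also illustrative.

```python
def bisection(dfdx, lower, upper, eps):
    """Bisection search for the maximizer of a concave function.

    dfdx is the first derivative. Returns (x_trial, history), where each
    history row is (iteration, derivative at previous trial, lower, upper,
    new trial solution).
    """
    x_trial = (lower + upper) / 2
    history = [(0, None, lower, upper, x_trial)]
    iteration = 0
    while upper - lower > 2 * eps:
        iteration += 1
        slope = dfdx(x_trial)
        if slope >= 0:
            lower = x_trial      # x* lies to the right of the trial solution
        else:
            upper = x_trial      # x* lies to the left of the trial solution
        x_trial = (lower + upper) / 2
        history.append((iteration, slope, lower, upper, x_trial))
    return x_trial, history


def f(x):
    return 12 * x - 3 * x**4 - 2 * x**6

def dfdx(x):
    return 12 - 12 * x**3 - 12 * x**5

# Assumed starting interval [0, 2] and tolerance 0.01 (not given in the text).
x_best, rows = bisection(dfdx, 0.0, 2.0, 0.01)
for iteration, slope, lower, upper, x_trial in rows:
    slope_text = "" if slope is None else f"{slope:+.2f}"
    print(iteration, slope_text, lower, upper, x_trial, round(f(x_trial), 4))
```

Each iteration halves the interval, so the number of iterations grows only with the logarithm of the initial width divided by $\varepsilon$.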


• Newton's Method
  • The bisection method converges slowly.
    • It takes only first-derivative information into account.
  • The basic idea is to approximate $f(x)$ within the neighborhood of the current trial solution by a quadratic function and then to maximize (or minimize) the approximate function exactly to obtain the new trial solution.
  • This approximating quadratic function is obtained by truncating the Taylor series after the second derivative term:

$$
f(x_{i+1}) \approx f(x_i) + f'(x_i)(x_{i+1} - x_i) + \frac{f''(x_i)}{2}(x_{i+1} - x_i)^2
$$

  • This quadratic function can be optimized in the usual way by setting its first derivative to zero and solving for $x_{i+1}$. Thus,

$$
x_{i+1} = x_i - \frac{f'(x_i)}{f''(x_i)}
$$

  • Stopping Rule: If $|x_{i+1} - x_i| \le \varepsilon$, stop and output $x_{i+1}$.
  • Example: Max $f(x) = 12x - 3x^4 - 2x^6$ (same as the bisection example)

$$
x_{i+1} = x_i - \frac{f'(x_i)}{f''(x_i)} = x_i - \frac{12 - 12x_i^3 - 12x_i^5}{-36x_i^2 - 60x_i^4} = x_i + \frac{1 - x_i^3 - x_i^5}{3x_i^2 + 5x_i^4}
$$

    • Select $\varepsilon = 0.00001$, and choose $x_1 = 1$.

| Iteration $i$ | $x_i$ | $f(x_i)$ | $f'(x_i)$ | $f''(x_i)$ | $x_{i+1}$ |
|---|---|---|---|---|---|
| 1 | | | | | |
| 2 | | | | | |
| 3 | 0.84003 | 7.8838 | -0.1325 | -55.279 | 0.83763 |
| 4 | 0.83763 | 7.8839 | -0.0006 | -54.790 | 0.83762 |
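The update rule and stopping rule above can be sketched as follows, using the example's $f'(x) = 12 - 12x^3 - 12x^5$ and $f''(x) = -36x^2 - 60x^4$ with $x_1 = 1$ and $\varepsilon = 0.00001$. The function name `newton_maximize` and the iteration cap are assumptions added for safety, not part of the lecture.

```python
def newton_maximize(first_derivative, second_derivative, x_start, eps, max_iterations=50):
    """Newton's method for one-variable optimization.

    Repeats x_next = x - f'(x) / f''(x) until |x_next - x| <= eps.
    Returns (x_next, trial_solutions), where trial_solutions lists x_1, x_2, ...
    """
    x = x_start
    trial_solutions = [x]
    for _ in range(max_iterations):
        x_next = x - first_derivative(x) / second_derivative(x)
        trial_solutions.append(x_next)
        if abs(x_next - x) <= eps:
            return x_next, trial_solutions
        x = x_next
    raise RuntimeError("Newton's method did not converge within max_iterations")


def first_derivative(x):
    return 12 - 12 * x**3 - 12 * x**5

def second_derivative(x):
    return -36 * x**2 - 60 * x**4

x_star, trials = newton_maximize(first_derivative, second_derivative, 1.0, 0.00001)
for i, x in enumerate(trials, start=1):
    print(i, round(x, 5))
```

Starting from $x_1 = 1$, the second and third trial solutions agree with the $x_i$ column of the table, and only a handful of iterations are needed compared with bisection.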

• Multivariable Unconstrained Optimization
  • Usually, there is no analytical method for solving the system of equations given by setting the respective partial derivatives equal to zero.
  • Thus, a numerical search procedure must be used.


• The Gradient Search Procedure (for multivariable unconstrained maximization problems)
  • The goal is to reach a point where all the partial derivatives are 0.
  • A natural approach is to use the values of the partial derivatives to select the specific direction in which to move.
  • The gradient at the point $x = x'$ is

$$
\nabla f(x') = \left( \frac{\partial f}{\partial x_1}, \frac{\partial f}{\partial x_2}, \dots, \frac{\partial f}{\partial x_n} \right) \text{ at } x = x'.
$$

  • The direction of the gradient is interpreted as the direction of the directed line segment from the origin to the point $\left( \frac{\partial f}{\partial x_1}, \frac{\partial f}{\partial x_2}, \dots, \frac{\partial f}{\partial x_n} \right)$, which is the direction of changing $x$ that maximizes the rate at which $f(x)$ increases.
  • However, normally it would not be practical to change $x$ continuously in the direction of $\nabla f(x)$, because this series of changes would require continuously reevaluating the $\frac{\partial f}{\partial x_j}$ and changing the direction of the path.
  • A better approach is to keep moving in a fixed direction from the current trial solution, not stopping until $f(x)$ stops increasing.
  • The stopping point is the next trial solution, where the gradient is recalculated to determine the new direction in which to move.
  • Reset $x' = x' + t^* \nabla f(x')$, where $t^*$ is the positive value of $t$ that maximizes $f(x' + t \nabla f(x'))$; that is, $f(x' + t^* \nabla f(x')) = \max_{t \ge 0} f(x' + t \nabla f(x'))$.
  • The iterations continue until $\nabla f(x) = 0$ within a small tolerance $\varepsilon$.
• Summary of the Gradient Search Procedure
  • Initialization: Select $\varepsilon$ and any initial trial solution $x'$. Go first to the stopping rule.
  • Step 1: Express $f(x' + t \nabla f(x'))$ as a function of $t$ by setting $x_j = x_j' + t \left( \frac{\partial f}{\partial x_j} \right)_{x = x'}$ for $j = 1, 2, \dots, n$, and then substituting these expressions into $f(x)$.
  • Step 2: Use the one-dimensional search procedure to find $t = t^*$ that maximizes $f(x' + t \nabla f(x'))$ over $t \ge 0$.
  • Step 3: Reset $x' = x' + t^* \nabla f(x')$. Then go to the stopping rule.


  • Stopping Rule: Evaluate $\nabla f(x')$ at $x = x'$. Check if $\left| \frac{\partial f}{\partial x_j} \right| \le \varepsilon$ for all $j = 1, 2, \dots, n$. If so, stop with the current $x'$ as the desired approximation of an optimal solution $x^*$. Otherwise, perform another iteration.
• Example for multivariable unconstrained nonlinear programming

  Max $f(x) = 2x_1 x_2 + 2x_2 - x_1^2 - 2x_2^2$

$$
\frac{\partial f}{\partial x_1} = 2x_2 - 2x_1, \qquad \frac{\partial f}{\partial x_2} = 2x_1 + 2 - 4x_2
$$

  We verify that $f(x)$ is concave. Suppose we pick $x = (0, 0)$ as the initial trial solution, so that $\nabla f(0, 0) = (0, 2)$.
  • Iteration 1: $x = (0, 0) + t(0, 2) = (0, 2t)$, so $f(x' + t \nabla f(x')) = f(0, 2t) = 4t - 8t^2$.
  • Iteration 2: $x = (0, 1/2) + t(1, 0) = (t, 1/2)$
  • A table is a convenient way to organize the iterations.


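| Iteration | $x'$ | $\nabla f(x')$ | $x' + t \nabla f(x')$ | $f(x' + t \nabla f(x'))$ | $t^*$ | $x' + t^* \nabla f(x')$ |
|---|---|---|---|---|---|---|
| 1 | $(0, 0)$ | $(0, 2)$ | $(0, 2t)$ | $4t - 8t^2$ | $1/4$ | $(0, 1/2)$ |
| 2 | $(0, 1/2)$ | $(1, 0)$ | $(t, 1/2)$ | $t - t^2 + 1/2$ | $1/2$ | $(1/2, 1/2)$ |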
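The gradient search procedure can be sketched in Python for the example $f(x) = 2x_1 x_2 + 2x_2 - x_1^2 - 2x_2^2$. This is a minimal sketch under stated assumptions: the one-dimensional search for $t^*$ is done by bisection on the directional derivative, the bracket for $t$ is found by doubling, and $\varepsilon = 0.01$ is a value chosen here for illustration. The names `line_search` and `gradient_search` are hypothetical.

```python
def line_search(gradient, x, direction, tol=1e-10):
    """Find t >= 0 maximizing f(x + t*direction) for concave f.

    Uses bisection on phi'(t) = gradient(x + t*direction) . direction.
    """
    def phi_prime(t):
        point = [xj + t * dj for xj, dj in zip(x, direction)]
        return sum(gj * dj for gj, dj in zip(gradient(point), direction))

    lower, upper = 0.0, 1.0
    while phi_prime(upper) > 0:          # expand until the maximizer is bracketed
        upper *= 2
    while upper - lower > tol:
        middle = (lower + upper) / 2
        if phi_prime(middle) >= 0:
            lower = middle
        else:
            upper = middle
    return (lower + upper) / 2


def gradient_search(gradient, x_start, eps, max_iterations=1000):
    """Gradient search for maximization. Returns (x, list of trial solutions)."""
    x = list(x_start)
    trials = [tuple(x)]
    for _ in range(max_iterations):
        g = gradient(x)
        if all(abs(gj) <= eps for gj in g):      # stopping rule
            return x, trials
        t_star = line_search(gradient, x, g)      # steps 1 and 2
        x = [xj + t_star * gj for xj, gj in zip(x, g)]   # step 3
        trials.append(tuple(x))
    raise RuntimeError("gradient search did not converge within max_iterations")


def gradient(x):
    x1, x2 = x
    return [2 * x2 - 2 * x1, 2 * x1 + 2 - 4 * x2]

x_final, trials = gradient_search(gradient, [0.0, 0.0], eps=0.01)
print("first trial solutions:", trials[:3])
print("final trial solution :", x_final)
```

The first two steps reproduce the two rows of the table, and the trial solutions then zigzag toward the point $(1, 1)$, where both partial derivatives vanish.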

• For a minimization problem
  • We move in the opposite direction. That is, $x' = x' - t^* \nabla f(x')$.
  • Another change is that $t = t^*$ minimizes $f(x' - t \nabla f(x'))$ over $t \ge 0$.
• Necessary and Sufficient Conditions for Optimality (Maximization)

| Problem | Necessary Condition | Also Sufficient if: |
|---|---|---|
| One-variable unconstrained | $\dfrac{df}{dx} = 0$ | $f(x)$ concave |
| Multivariable unconstrained | $\dfrac{\partial f}{\partial x_j} = 0$ $(j = 1, 2, \dots, n)$ | $f(x)$ concave |
| General constrained problem | KKT conditions | $f(x)$ is concave and $g_i(x)$ is convex |

• The Karush-Kuhn-Tucker (KKT) Conditions for Constrained Optimization
  • Assume that $f(x), g_1(x), g_2(x), \dots, g_m(x)$ are differentiable functions. Then $x^* = (x_1^*, x_2^*, \dots, x_n^*)$ can be an optimal solution for the nonlinear programming problem only if there exist $m$ numbers $u_1, u_2, \dots, u_m$ such that all the following KKT conditions are satisfied:

  (1) $\dfrac{\partial f}{\partial x_j} - \sum_{i=1}^{m} u_i \dfrac{\partial g_i}{\partial x_j} \le 0$, at $x = x^*$, for $j = 1, 2, \dots, n$
  (2) $x_j^* \left( \dfrac{\partial f}{\partial x_j} - \sum_{i=1}^{m} u_i \dfrac{\partial g_i}{\partial x_j} \right) = 0$, at $x = x^*$, for $j = 1, 2, \dots, n$
  (3) $g_i(x^*) - b_i \le 0$, for $i = 1, 2, \dots, m$
  (4) $u_i [g_i(x^*) - b_i] = 0$, for $i = 1, 2, \dots, m$


  (5) $x_j^* \ge 0$, for $j = 1, 2, \dots, n$
  (6) $u_i \ge 0$, for $i = 1, 2, \dots, m$
• Corollary of KKT Theorem (Sufficient Conditions)
  • Note that, in general, satisfying these conditions does not guarantee that the solution is optimal.
  • Assume that $f(x)$ is a concave function and that $g_1(x), g_2(x), \dots, g_m(x)$ are convex functions. Then $x^* = (x_1^*, x_2^*, \dots, x_n^*)$ is an optimal solution if and only if all the KKT conditions are satisfied.
• An Example

$$
\begin{aligned}
\text{Max } \; & f(x) = \ln(x_1 + 1) + x_2 \\
\text{S.T. } \; & 2x_1 + x_2 \le 3 \\
& x_1, x_2 \ge 0
\end{aligned}
$$

  $n = 2$; $m = 1$; $g_1(x) = 2x_1 + x_2$ is convex; $f(x)$ is concave.

  1. ($j = 1$) $\dfrac{1}{x_1 + 1} - 2u_1 \le 0$
  2. ($j = 1$) $x_1 \left( \dfrac{1}{x_1 + 1} - 2u_1 \right) = 0$
  1. ($j = 2$) $1 - u_1 \le 0$
  2. ($j = 2$) $x_2 (1 - u_1) = 0$
  3. $2x_1 + x_2 - 3 \le 0$
  4. $u_1 (2x_1 + x_2 - 3) = 0$
  5. $x_1 \ge 0$, $x_2 \ge 0$
  6. $u_1 \ge 0$

  • Therefore, $x_1 = 0$, $x_2 = 3$, and $u_1 = 1$ satisfy the KKT conditions. The optimal solution is $(0, 3)$.
• How to solve the KKT conditions
  • Sorry, there is no easy way.
  • In the above example, there are 8 combinations for $x_1 (\ge 0)$, $x_2 (\ge 0)$, and $u_1 (\ge 0)$, since each is either zero or strictly positive. Try each one until one fits.
  • What if there are lots of variables?
  • Let's look at some easier (special) cases.
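The six conditions for this example are easy to check mechanically for any candidate $(x_1, x_2, u_1)$. The sketch below hard-codes the example's conditions; the function name `satisfies_kkt` and the numerical tolerance are assumptions made for illustration.

```python
def satisfies_kkt(x1, x2, u1, tol=1e-9):
    """Check the KKT conditions for Max ln(x1 + 1) + x2 s.t. 2*x1 + x2 <= 3, x >= 0."""
    stationarity_1 = 1 / (x1 + 1) - 2 * u1     # condition 1 (j = 1), must be <= 0
    stationarity_2 = 1 - u1                    # condition 1 (j = 2), must be <= 0
    constraint = 2 * x1 + x2 - 3               # condition 3, must be <= 0
    return (
        stationarity_1 <= tol
        and abs(x1 * stationarity_1) <= tol    # condition 2 (j = 1)
        and stationarity_2 <= tol
        and abs(x2 * stationarity_2) <= tol    # condition 2 (j = 2)
        and constraint <= tol
        and abs(u1 * constraint) <= tol        # condition 4
        and x1 >= -tol and x2 >= -tol          # condition 5
        and u1 >= -tol                         # condition 6
    )


print(satisfies_kkt(0, 3, 1))       # the solution stated in the text
print(satisfies_kkt(1.5, 0, 0.2))   # another boundary point, for comparison
```

A checker like this does not find the solution; it only confirms or rejects a candidate, which is why the case-by-case enumeration described above is still needed.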


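• Quadratic Programming

$$
\begin{aligned}
\text{Max } \; & f(x) = cx - \tfrac{1}{2} x^T Q x \\
\text{S.T. } \; & Ax \le b \\
& x \ge 0
\end{aligned}
$$

  • The objective function is $f(x) = cx - \tfrac{1}{2} x^T Q x = \sum_{j=1}^{n} c_j x_j - \tfrac{1}{2} \sum_{i=1}^{n} \sum_{j=1}^{n} q_{ij} x_i x_j$.
  • The $q_{ij}$ are elements of $Q$. If $i = j$, then $x_i x_j = x_j^2$, so $-\tfrac{1}{2} q_{jj}$ is the coefficient of $x_j^2$. If $i \ne j$, then $-\tfrac{1}{2}(q_{ij} x_i x_j + q_{ji} x_j x_i) = -q_{ij} x_i x_j$, so $-q_{ij}$ is the coefficient for the product of $x_i$ and $x_j$ (since $q_{ij} = q_{ji}$).
• An example

$$
\begin{aligned}
\text{Max } \; & f(x_1, x_2) = 15x_1 + 30x_2 + 4x_1 x_2 - 2x_1^2 - 4x_2^2 \\
\text{S.T. } \; & x_1 + 2x_2 \le 30 \\
& x_1, x_2 \ge 0
\end{aligned}
$$

• The KKT conditions for the above quadratic programming problem

  1. ($j = 1$) $15 + 4x_2 - 4x_1 - u_1 \le 0$
  2. ($j = 1$) $x_1 (15 + 4x_2 - 4x_1 - u_1) = 0$
  1. ($j = 2$) $30 + 4x_1 - 8x_2 - 2u_1 \le 0$
  2. ($j = 2$) $x_2 (30 + 4x_1 - 8x_2 - 2u_1) = 0$
  3. $x_1 + 2x_2 - 30 \le 0$
  4. $u_1 (x_1 + 2x_2 - 30) = 0$
  5. $x_1 \ge 0$, $x_2 \ge 0$
  6. $u_1 \ge 0$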


• Introduce slack variables ($y_1$, $y_2$, and $v_1$) for conditions 1 ($j = 1$), 1 ($j = 2$), and 3.

  1. ($j = 1$) $-4x_1 + 4x_2 - u_1 + y_1 = -15$
  1. ($j = 2$) $4x_1 - 8x_2 - 2u_1 + y_2 = -30$
  3. $x_1 + 2x_2 + v_1 = 30$

  Condition 2 ($j = 1$) can be reexpressed as

  2. ($j = 1$) $x_1 y_1 = 0$

  Similarly, we have

  2. ($j = 2$) $x_2 y_2 = 0$
  4. $u_1 v_1 = 0$

  • For each of these pairs, $(x_1, y_1)$, $(x_2, y_2)$, $(u_1, v_1)$, the two variables are called complementary variables, because at most one of them can be nonzero.
  • Combine them into one constraint, $x_1 y_1 + x_2 y_2 + u_1 v_1 = 0$, called the complementary constraint.
  • Rewrite the whole set of conditions:

$$
\begin{aligned}
4x_1 - 4x_2 + u_1 - y_1 &= 15 \\
-4x_1 + 8x_2 + 2u_1 - y_2 &= 30 \\
x_1 + 2x_2 + v_1 &= 30 \\
x_1 y_1 + x_2 y_2 + u_1 v_1 &= 0 \\
x_1 \ge 0, \; x_2 \ge 0, \; u_1 \ge 0, \; y_1 \ge 0, \; y_2 \ge 0, \; v_1 &\ge 0
\end{aligned}
$$

  • Except for the complementary constraint, they are all linear constraints.
  • For any quadratic programming problem, its KKT conditions have this form:

$$
\begin{aligned}
Qx + A^T u - y &= c^T \\
Ax + v &= b \\
x \ge 0, \; u \ge 0, \; y \ge 0, \; v &\ge 0 \\
x^T y + u^T v &= 0
\end{aligned}
$$

  • Assume the objective function (of a quadratic programming problem) is concave and the constraints are convex (they are all linear).
  • Thus, $x$ is optimal if and only if there exist values of $y$, $u$, and $v$ such that all four vectors together satisfy all these conditions.
  • The original problem is thereby reduced to the equivalent problem of finding a feasible solution to these constraints.
  • These constraints are really the constraints of an LP except for the complementary constraint. Why don't we just modify the Simplex Method?
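The general form $Qx + A^T u - y = c^T$, $Ax + v = b$ can be evaluated directly for a candidate pair $(x, u)$ by solving for the slacks $y$ and $v$ and then checking signs and complementarity. The sketch below does this for the example with $Q = \begin{bmatrix} 4 & -4 \\ -4 & 8 \end{bmatrix}$, $A = [1 \;\; 2]$, $c = [15 \;\; 30]$, $b = [30]$. The candidate $x = (12, 9)$, $u_1 = 3$ is an assumption supplied here for illustration (it is not stated in the text); the code itself confirms whether it satisfies the conditions. The function names are hypothetical.

```python
def kkt_slacks(Q, A, c, b, x, u):
    """Solve Qx + A^T u - y = c and Ax + v = b for the slack vectors y and v."""
    n, m = len(x), len(b)
    y = [
        sum(Q[j][k] * x[k] for k in range(n))
        + sum(A[i][j] * u[i] for i in range(m))
        - c[j]
        for j in range(n)
    ]
    v = [b[i] - sum(A[i][j] * x[j] for j in range(n)) for i in range(m)]
    return y, v


def satisfies_qp_kkt(Q, A, c, b, x, u, tol=1e-9):
    """Check nonnegativity of x, u, y, v and the complementary constraint."""
    y, v = kkt_slacks(Q, A, c, b, x, u)
    nonnegative = all(value >= -tol for value in list(x) + list(u) + y + v)
    complementarity = sum(xj * yj for xj, yj in zip(x, y)) + sum(
        ui * vi for ui, vi in zip(u, v)
    )
    return nonnegative and abs(complementarity) <= tol


Q = [[4, -4], [-4, 8]]
A = [[1, 2]]
c = [15, 30]
b = [30]

# Candidate point chosen for illustration; not taken from the lecture text.
print(kkt_slacks(Q, A, c, b, [12, 9], [3]))
print(satisfies_qp_kkt(Q, A, c, b, [12, 9], [3]))
```

Because the objective is concave and the constraint is linear, any $(x, u)$ that passes this check gives an optimal $x$ for the original problem.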


• The Modified Simplex Method
  • The complementary constraint implies that it is not permissible for both complementary variables of any pair to be basic variables.
  • The problem reduces to finding an initial BF solution to any linear programming problem that has these constraints, subject to this additional restriction on the identity of the basic variables.
  • When $c^T \le 0$ (unlikely) and $b \ge 0$, the initial solution is easy to find: $x = 0$, $u = 0$, $y = -c^T$, $v = b$.
  • Otherwise, introduce an artificial variable into each of the equations where $c_j > 0$ or $b_i < 0$, in order to use these artificial variables as initial basic variables.
    • This choice of initial basic variables will set $x = 0$ and $u = 0$ automatically, which satisfies the complementary constraint.
  • Then, use phase 1 of the two-phase method to find a BF solution for the real problem.
    • That is, apply the simplex method to (the $z_j$ are the artificial variables)

$$
\text{Min } Z = \sum_j z_j
$$

      subject to the linear programming constraints obtained from the KKT conditions, but with these artificial variables included.
    • We still need to modify the simplex method to satisfy the complementary constraint.
  • Restricted-Entry Rule:
    • Exclude from consideration as the entering variable any nonbasic variable whose complementary variable already is a basic variable.
    • Choose among the other nonbasic variables according to the usual criterion.
    • This rule keeps the complementary constraint satisfied all the time.
  • When an optimal solution $x^*, u^*, y^*, v^*, z_1 = 0, \dots, z_n = 0$ is obtained for the phase 1 problem, $x^*$ is the desired optimal solution for the original quadratic programming problem.


• A Quadratic Programming Example

$$
\begin{aligned}
\text{Max } \; & 15x_1 + 30x_2 + 4x_1 x_2 - 2x_1^2 - 4x_2^2 \\
\text{S.T. } \; & x_1 + 2x_2 \le 30 \\
& x_1, x_2 \ge 0
\end{aligned}
$$


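• Constrained Optimization with Equality Constraints
  • Consider the problem of finding the minimum or maximum of the function $f(x)$, subject to the restriction that $x$ must satisfy all the equations

$$
g_1(x) = b_1, \quad \dots, \quad g_m(x) = b_m
$$

  • Example: Max $f(x_1, x_2) = x_1^2 + 2x_2$, S.T. $g(x_1, x_2) = x_1^2 + x_2^2 = 1$
  • A classical method is the method of Lagrange multipliers.
    • The Lagrangian function is $h(x, \lambda) = f(x) - \sum_{i=1}^{m} \lambda_i [g_i(x) - b_i]$, where $(\lambda_1, \lambda_2, \dots, \lambda_m)$ are called Lagrange multipliers.
  • For the feasible values of $x$, $g_i(x) - b_i = 0$ for all $i$, so $h(x, \lambda) = f(x)$.
  • The method reduces to analyzing $h(x, \lambda)$ by the procedure for unconstrained optimization.
    • Set all partial derivatives to zero:

$$
\frac{\partial h}{\partial x_j} = \frac{\partial f}{\partial x_j} - \sum_{i=1}^{m} \lambda_i \frac{\partial g_i}{\partial x_j} = 0, \quad \text{for } j = 1, 2, \dots, n
$$

$$
\frac{\partial h}{\partial \lambda_i} = -g_i(x) + b_i = 0, \quad \text{for } i = 1, 2, \dots, m
$$

    • Notice that the last $m$ equations are equivalent to the constraints in the original problem, so only feasible solutions are considered.
  • Back to our example
    • $h(x_1, x_2, \lambda) = x_1^2 + 2x_2 - \lambda (x_1^2 + x_2^2 - 1)$.

$$
\frac{\partial h}{\partial x_1} = 2x_1 - 2\lambda x_1 = 0, \qquad
\frac{\partial h}{\partial x_2} = 2 - 2\lambda x_2 = 0, \qquad
\frac{\partial h}{\partial \lambda} = -(x_1^2 + x_2^2 - 1) = 0
$$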
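The stationarity equations of the example can be checked for a candidate $(x_1, x_2, \lambda)$, and the constrained maximum can be cross-checked by sampling the circle $x_1^2 + x_2^2 = 1$ directly. This is a sketch for illustration only; the function names and the sample count are assumptions, and the candidates $(0, 1, 1)$ and $(0, -1, -1)$ are the points obtained by solving the three equations by hand.

```python
import math


def lagrangian_partials(x1, x2, lam):
    """Partial derivatives of h = x1^2 + 2*x2 - lam*(x1^2 + x2^2 - 1)."""
    dh_dx1 = 2 * x1 - 2 * lam * x1
    dh_dx2 = 2 - 2 * lam * x2
    dh_dlam = -(x1**2 + x2**2 - 1)
    return dh_dx1, dh_dx2, dh_dlam


def best_on_circle(samples=3600):
    """Sample the unit circle and return (best f value, (x1, x2))."""
    best_value, best_point = float("-inf"), None
    for k in range(samples):
        theta = 2 * math.pi * k / samples
        x1, x2 = math.cos(theta), math.sin(theta)
        value = x1**2 + 2 * x2
        if value > best_value:
            best_value, best_point = value, (x1, x2)
    return best_value, best_point


print(lagrangian_partials(0, 1, 1))     # candidate with lambda = 1
print(lagrangian_partials(0, -1, -1))   # candidate with lambda = -1
print(best_on_circle())
```

Both candidates make all three partial derivatives vanish, so the Lagrange conditions alone do not say which one is the maximum; comparing $f$ at the candidates (or the sampling above) settles it.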


• Other Types of Nonlinear Programming Problems
• Separable Programming
  • It is a special case of convex programming with one additional assumption: the $f(x)$ and $g_i(x)$ functions are separable functions.
  • A separable function is a function where each term involves just a single variable.
  • Example: $f(x_1, x_2) = 126x_1 - 9x_1^2 + 182x_2 - 13x_2^2 = f_1(x_1) + f_2(x_2)$, where $f_1(x_1) = 126x_1 - 9x_1^2$ and $f_2(x_2) = 182x_2 - 13x_2^2$.
  • Such a problem can be closely approximated by a linear programming problem. Please refer to Section 12.8 for details.
• Geometric Programming
  • The objective and the constraint functions take the form

$$
g(x) = \sum_{i=1}^{N} c_i P_i(x), \quad \text{where } P_i(x) = x_1^{a_{i1}} x_2^{a_{i2}} \cdots x_n^{a_{in}} \text{ for } i = 1, 2, \dots, N
$$

  • When all the $c_i$ are strictly positive and the objective function is to be minimized, this geometric programming problem can be converted to a convex programming problem by setting $x_j = e^{y_j}$.
• Fractional Programming
  • Suppose that the objective function is in the form of a (linear) fraction: Maximize $f(x) = f_1(x) / f_2(x) = (cx + c_0) / (dx + d_0)$.
  • Also assume that the constraints $g_i(x)$ are linear: $Ax \le b$, $x \ge 0$.
  • We can transform the problem to an equivalent linear programming problem, for which effective solution procedures are available, by letting $y = x / (dx + d_0)$ and $t = 1 / (dx + d_0)$, so that $x = y / t$.
  • The original formulation is transformed to the linear programming problem

$$
\begin{aligned}
\text{Max } \; & Z = cy + c_0 t \\
\text{S.T. } \; & Ay - bt \le 0 \\
& dy + d_0 t = 1 \\
& y, t \ge 0
\end{aligned}
$$
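The substitution $y = x / (dx + d_0)$, $t = 1 / (dx + d_0)$ can be checked on a small example. The data below ($c$, $c_0$, $d$, $d_0$, $A$, $b$, and the point $x$) are hypothetical values invented for illustration, and the sketch assumes $dx + d_0 > 0$ at the point being transformed. Exact rational arithmetic is used so the identities hold without rounding.

```python
from fractions import Fraction


def to_lp_variables(x, d, d0):
    """Map x to (y, t) with y = x / (d.x + d0) and t = 1 / (d.x + d0)."""
    denominator = sum(Fraction(dj) * xj for dj, xj in zip(d, x)) + d0
    if denominator <= 0:
        raise ValueError("this sketch assumes d.x + d0 > 0")
    t = 1 / Fraction(denominator)
    y = [Fraction(xj) * t for xj in x]
    return y, t


# Hypothetical data, not taken from the lecture.
c, c0 = [2, 1], 1
d, d0 = [1, 3], 4
A, b = [[1, 1]], [6]
x = [3, 2]

y, t = to_lp_variables(x, d, d0)

fractional_objective = Fraction(sum(cj * xj for cj, xj in zip(c, x)) + c0,
                                sum(dj * xj for dj, xj in zip(d, x)) + d0)
lp_objective = sum(cj * yj for cj, yj in zip(c, y)) + c0 * t
normalization = sum(dj * yj for dj, yj in zip(d, y)) + d0 * t
lp_constraint = sum(aj * yj for aj, yj in zip(A[0], y)) - b[0] * t

print(fractional_objective, lp_objective, normalization, lp_constraint)
```

The two objective values coincide, the normalization row equals 1, and $Ay - bt \le 0$ holds exactly when $Ax \le b$ does, because $t > 0$ scales both sides of each constraint.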


- JN - 2013 - Li - Model and Simulation For Collaborative VRPSPDDokumen8 halamanJN - 2013 - Li - Model and Simulation For Collaborative VRPSPDAdrian SerranoBelum ada peringkat

- Mesacc - Edu-Solving Polynomial InequalitiesDokumen4 halamanMesacc - Edu-Solving Polynomial InequalitiesAva BarramedaBelum ada peringkat

- LTI Systems Impulse Response PropertiesDokumen5 halamanLTI Systems Impulse Response PropertiesCangkang SainsBelum ada peringkat

- July - , 2019 Summative Test On FACTORING ScoreDokumen4 halamanJuly - , 2019 Summative Test On FACTORING ScoreHannah MJBelum ada peringkat

- GTU Question Bank on Data Compression TechniquesDokumen7 halamanGTU Question Bank on Data Compression TechniquesMeghal PrajapatiBelum ada peringkat

- TEXTURE IMAGE SEGMENTATION USING NEURO EVOLUTIONARY METHODS-a SurveyDokumen5 halamanTEXTURE IMAGE SEGMENTATION USING NEURO EVOLUTIONARY METHODS-a Surveypurushothaman sinivasanBelum ada peringkat

- LAS 4-Polynomial FunctionsDokumen2 halamanLAS 4-Polynomial FunctionsJell De Veas TorregozaBelum ada peringkat

- Bessel Filter Crossover and Its Relation To OthersDokumen8 halamanBessel Filter Crossover and Its Relation To OthersSt ChristianBelum ada peringkat

- Binomial Theorem Class 11Dokumen13 halamanBinomial Theorem Class 11shriganesharamaa007Belum ada peringkat

- Video Compression: Dereje Teferi (PHD) Dereje - Teferi@Aau - Edu.EtDokumen26 halamanVideo Compression: Dereje Teferi (PHD) Dereje - Teferi@Aau - Edu.EtdaveBelum ada peringkat

- GTU Algorithm Analysis and Design CourseDokumen3 halamanGTU Algorithm Analysis and Design CourseLion KenanBelum ada peringkat

- Solution Manual For An Introduction To Optimization 4th Edition Edwin K P Chong Stanislaw H ZakDokumen7 halamanSolution Manual For An Introduction To Optimization 4th Edition Edwin K P Chong Stanislaw H ZakDarla Lambe100% (30)

- Time Response of Second Order Systems - GATE Study Material in PDFDokumen5 halamanTime Response of Second Order Systems - GATE Study Material in PDFAtul ChoudharyBelum ada peringkat

- Iii Part A BDokumen115 halamanIii Part A BDivya SreeBelum ada peringkat

- UT Dallas Syllabus For Ee6361.501.09s Taught by Issa Panahi (Imp015000)Dokumen1 halamanUT Dallas Syllabus For Ee6361.501.09s Taught by Issa Panahi (Imp015000)UT Dallas Provost's Technology GroupBelum ada peringkat
