Emotion-sensitive Human-Computer Interaction (HCI): State of the art - Seminar paper

University of Fribourg, 1700 Fribourg, Switzerland

Caroline Voeffray

caroline.voeffray@unifr.ch

ABSTRACT

Emotion-sensitive Human-Computer Interaction (HCI) is a hot topic. HCI is the study, planning and design of the interaction between users and computer systems, and a lot of current research is moving in this direction. To attract users, more and more developers add an emotional side to their applications. To simplify HCI, systems must be more natural, efficacious, persuasive and trustworthy. To achieve that, a system must be able to sense and respond appropriately to the user's emotional states. This paper presents a short overview of existing emotion-sensitive HCI applications. It focuses on what is done today and brings out the most important features to take into account for emotion-sensitive HCI. The most important finding is that applications can help people feel better with the technologies used in daily life.

General Terms

Emotion in HCI

Keywords

Emotion HCI, Affective HCI, Affective technology, Affective systems

1. INTRODUCTION

First, affective applications must collect emotional data through facial recognition, voice analysis and the detection of physiological signals. The system then interprets the collected data and must adapt to the user.

In the past, the study of usability and the study of emotions were separate, but the area of human-computer interaction has evolved: today emotions take more space in our life, and affective computing has appeared. Affective computing is a popular and innovative research area, mainly in artificial intelligence. It consists of recognizing, expressing, modeling, communicating and responding to emotions [4]. Research in this domain contributes to many different domains such as aircraft, jobs in scientific sectors like lawyers or police, anthropology, neurology, psychiatry or behavioral science, but this paper focuses on HCI.

In this paper we approach different elements of emotion-sensitive HCI. First, we briefly discuss the role of emotions and how they can be detected. Then we explain the research areas related to affective computing. Finally, we survey the state of the art of existing affective applications.

Seminar emotion recognition: http://diuf.unifr.ch/main/diva/teaching/seminars/emotion-recognition

2. ROLE OF EMOTIONS

Emotions are an important factor of life and play an essential role in understanding users' behavior in computer interaction [1]. In addition, emotional intelligence plays a major role in measuring aspects of success in life [2]. Recent research in human-computer interaction does not focus only on the cognitive approach, but on the emotional side too. Both approaches are very important; indeed, taking the user's emotions into account solves some important aspects of the design of HCI systems. Additionally, human-machine interaction could be better if the machine could adapt its behavior to its users; such a system is seen by users as more natural, efficacious, persuasive and trustworthy [2].

The question of how emotions and design are connected is answered in [1]: our feelings strongly influence our perceptions and often frame how we think or how we refer to our experiences at a later date; emotion is the differentiator in our experience. In our society, positive and negative emotions influence the consumption of products, and to understand the decision process it could be crucial to measure emotional expression [1].

Emotions are an important part of our life; that is why affective computing was developed. As stated in [3], affective computing aims to develop computer-based systems that recognize and express emotions in the same way humans do. In human-computer interaction, nonverbal communication plays an important role: we can identify the difficulties that users stumble upon by measuring the emotions in their facial expressions [1].

[3] also discusses a number of studies that have investigated people's reactions and responses to computers designed to be more human-like. Several studies have reported a positive impact of computers that were designed to flatter and praise users when they did something right. With these systems, users have a better opinion of themselves [3].

On the other hand, we have to be careful because the same expression of an emotion can have a different meaning in different countries and cultures. A smile in Europe is a sign of happiness, pleasure or irony. But for Japanese people, it could simply imply agreement with an applied punishment, or could be a sign of indignation toward the person applying the punishment [1]. These differences should make us aware that the same affective system may give different results in different countries.

3. EMOTIONAL CUES

To understand how emotions affect people's behavior, we must understand the relationship between different cues such as facial expressions, body language, gestures and tone of voice [3]. Before creating an affective system, we must examine how people express emotions and how we perceive other people's feelings. For an intelligent HCI system to respond appropriately to the user's affective feedback, the first step is that the system must be able to detect and interpret the user's emotional states automatically [2]. The visual channel (e.g., facial expressions) and the auditory channel (e.g., vocal reactions) are the most important features in the human recognition of affective feedback, but other elements need to be taken into account, for example body movements and physiological reactions. When a person judges the emotional state of someone else, they rely mainly on facial and vocal expressions. However, some emotions are harder to differentiate than others and require other types of signals such as gestures, posture or physiological signals.

A lot of research has been done in the field of face and gesture recognition, especially to recognize facial expressions. Facial expressions can be seen as communicative signals or as expressions of emotions [6], and they can be associated with basic emotions like happiness, surprise, fear, anger, disgust or sadness [1].

Another tool to detect emotions is emotional speech recognition. Several factors of the voice vary with emotions, such as pitch, loudness, voice quality and rhythm.

In human-computer interaction, we can also detect emotions by monitoring the nervous system, because for some feelings physiological signs are very marked [1]. We can measure blood pressure, skin conductivity, breathing rate or finger temperature. A change in physiological signals means a change in the user's behavior. Unfortunately, physiological signals play a secondary role in the human recognition of affective states. These signals are neglected because to detect someone's clamminess or heart rate, we must be in physical contact with the person. The analysis of the tactile channel is harder because the person must be wired to collect data, which is usually perceived as uncomfortable and unpleasant [2]. Several techniques are available to capture these physiological signs: the electromyogram (EMG), which evaluates and records the electrical activity produced by muscles; the electrocardiogram, which measures the activity of the heart with electrodes attached to the skin; and skin conductance sensors [6].

All these methods of collecting data are very useful, but they are not always easy to use. First, the available technologies are restrictive, and some parameters must absolutely be taken into account to obtain valid data. We must differentiate users by gender, age, socio-geographical origin, physical condition or pathology. Moreover, we need to remove noise from the collected data, such as unwanted sound, backlit images or physical characteristics like a beard, glasses or a hat. Second, to collect physiological signals we must use intrusive methods like electrodes, a chest strap or a wearable computer [6].

4. EMOTION-SENSITIVE APPLICATION

4.1 Research areas

The automatic recognition of human affective states can be used in many domains besides HCI. The assessment of emotions like annoyance, inattention or stress can be highly valuable in some situations. Affect-sensitive monitoring done by a computer could provide prompts for better performance, especially in jobs such as aircraft and air traffic control, nuclear power plant surveillance or any job that involves driving a vehicle. In these professions, attention to a crucial task is essential. In scientific sectors, for professionals like lawyers, police or security agents, monitoring and interpreting affective behavioral signs can be very useful. This information could help in critical situations, for example to assess the veracity of testimonies. Another area where the computer analysis of human emotion can be beneficial is the automatic affect-based indexing of digital visual material. Detection of pain, rage and fear in scenes could provide a good tool for violent-content-based indexing of movies, video material and digital libraries. Finally, machine analysis of human affective states would also facilitate research in anthropology, neurology, psychiatry and behavioral science. In these domains sensitivity, reliability and precision are recurring problems, and this kind of emotion recognition can help researchers to advance [2].

This paper focuses on HCI, and one of the most important goals of HCI applications is to design technologies that help people feel better, for example an affective system that can calm a crying child or prevent strong feelings of loneliness and negative emotions [3].

A domain where the recognition of emotions in HCI is widely used is the evaluation of interfaces. The design of an interface can strongly influence the emotional impact of the system on its users [3]. The appearance of an interface, such as the combination of shapes, fonts, colors, balance, white space and graphical elements, determines the user's first feeling. It can also have a positive or a negative effect on people's perception of the system's usability. With good-looking interfaces users are more tolerant, because the system is more satisfying and pleasant to use. For example, if a website takes a long time to load, a user facing a good-looking interface is prepared to wait a few more seconds. On the contrary, computer interfaces can cause user frustration and negative reactions like anger or disgust. This happens when something is too complex, when the system crashes or does not work properly, or when the appearance of the interface is not appropriate. Therefore the recognition of emotions is important to evaluate the usability and the interface of applications. The emotions felt by the user play an important role in the success of a new application.

Another big research area is the creation of intelligent robots and avatars that behave like humans [3]. The goal of this domain is to develop computer-based systems that recognize and express emotions like humans. There are two major sectors: affective interfaces for children, like dolls and animated pets, and intelligent robots developed to interact with and help people.

Safe driving and soldier training are other examples of emotion-related research areas. Sensing devices can be used to measure different physiological signals to detect stress or frustration. For drivers, panic and sleepiness levels can be measured, and if these signals are too high, the system alerts the user to be more careful or advises stopping for a break. The physiological data from soldiers can be used to design a better training plan without frustration, confusion or panic [6]. Finally, sensing devices can also be used to measure the body signals of patients receiving tele-home health care. The collected data can be sent to a doctor or a nurse for further decisions [6], [5].
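The driver-monitoring idea described above can be illustrated with a minimal sketch. It assumes the sensing layer already produces normalized panic and sleepiness levels between 0 and 1; the `DriverReading` type, the threshold values and the alert texts are hypothetical and are not taken from [6].

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DriverReading:
    """One sample of hypothetical, already-normalized driver levels in [0, 1]."""
    panic: float
    sleepiness: float


# Illustrative thresholds only; these are assumptions, not values from [6].
PANIC_LIMIT = 0.8
SLEEPINESS_LIMIT = 0.7


def driver_alerts(reading: DriverReading) -> List[str]:
    """Return the alerts a monitoring system could raise for one reading."""
    alerts = []
    if reading.panic > PANIC_LIMIT:
        alerts.append("Please drive more carefully.")
    if reading.sleepiness > SLEEPINESS_LIMIT:
        alerts.append("Consider stopping for a break.")
    return alerts


if __name__ == "__main__":
    print(driver_alerts(DriverReading(panic=0.2, sleepiness=0.9)))
```

A real system would likely smooth the signals over time before comparing them with a threshold, so that a single noisy sample does not trigger an alert.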

4.2 Applications

In this section, we survey the state of the art of different types of existing affective applications.

4.2.1 Emoticons and companion

The evolution of emotions in technology is explained in [3]. The first expressive interfaces were designed with emoticons, sounds, icons and virtual agents. For example, in the 1980s and 1990s, when an Apple computer was booted, the user saw the happy Mac icon on the screen. This smiling icon meant that the computer was working correctly. Moreover, the smile is a sign of friendliness and may encourage the user to feel good and smile back.

An existing technique to help users is the use of friendly agents at the interface. Such a companion helps users feel better and encourages them to try things out. An example is the infamous Clippy, the paper clip with human-like qualities that shipped with Microsoft Office in the Windows 98 era. Clippy appears on the user's screen when the system thinks that the user needs help with a task [3].

Later, users also found ways to express emotions through the computer by using emoticons. Combinations of keyboard symbols that simulate facial expressions allow users to express their feelings and emotions [3].

4.2.2 Online chat with animated text

Research has demonstrated that animated text is effective in conveying a speaker's tone of voice, affection and emotion. Therefore an affective chat system that uses animated text associated with emotional information is proposed in [9]. This chat application focuses only on text messages because text is simpler than video, the size of the data is smaller and it raises fewer privacy concerns. A GSR (Galvanic Skin Response) sensor is attached to the middle and index fingers of the user's non-dominant hand to collect the necessary affective information, and an animated text is created according to these data. With this method, the peaks and troughs of the GSR data are analyzed to detect emotions in real time, and the result is applied to the online chat interface. Because it is difficult to obtain valence information from physiological sensors, the user manually specifies the animation tag for the type of emotion, whereas the physiological data are used to detect the intensity of the emotion. Twenty types of animation are available to change the speed, size, color and interaction of the text. The user specifies the emotion with a tag before the message. Through this animated chat, the user can determine the affective state of their partner.

4.2.3 Interactive Control of Music

[8] presents an interface with which users mix pieces of music by moving their body in different emotional styles. In this application, the user's body is part of the interface. The system analyzes the body motions and produces a mix of music that represents the expressed emotions in real time. Machine learning is used to classify body motions into emotions, and this approach gives very satisfactory results. It begins with a training phase, during which the system observes the user moving in response to particular emotional music pieces. After training, the system is able to recognize the user's natural movements associated with each emotion. This phase is necessary because each participant expresses emotions in a very different way and body motions are very high-dimensional. There are variations even when the same user repeats the same movement several times. A database of film music classified by emotion is used: the user's detected emotions are mapped to the corresponding music in the database, and a music mix is produced.

4.2.4 Tele-home health care

Interesting affective applications are being developed in health care, for example the tele-home health care (Tele-HHC) application described in [5]. The goal of this system is to provide communication between the patient and the medical professional via multimedia and empathetic avatars when hands-on care is not required. Tele-HHC can be used to collect different vital-sign data remotely. The system is used to verify compliance with medicine regimes, to improve diet compliance or to assess mental or emotional status. Knowing the patient's emotional state can significantly improve their care.

The system monitors and responds to patients. An algorithm maps the collected physiological data to emotions. The system then synthesizes all the information for use by the medical staff. For this research, a system that observes the user via multi-sensory devices is used. A wireless, non-invasive wearable computer is employed to collect all the needed physiological signals and map them to emotional states. A library of avatars is built, and these avatars can be used in three different ways. First, avatars can help the user understand their own emotional state. Second, they can mirror the user's emotions to confirm them. Finally, they can animate a chat session by showing empathic expressions.
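The step of mapping physiological data to emotions can be sketched as follows. This is only an illustration under stated assumptions: it uses a simple nearest-centroid rule, which is not claimed to be the algorithm of [5], and the feature order, the `CENTROIDS` values and the emotion labels are invented for the example.

```python
import math

# Hypothetical reference points (centroids) in a normalized feature space:
# (skin conductance, heart rate, skin temperature).
# The numbers are invented for illustration; they are not taken from [5].
CENTROIDS = {
    "calm": (0.2, 0.3, 0.6),
    "frustration": (0.7, 0.6, 0.4),
    "fear": (0.9, 0.9, 0.2),
}


def map_to_emotion(sample):
    """Return the emotion whose centroid is closest (Euclidean) to the sample."""
    return min(CENTROIDS, key=lambda name: math.dist(sample, CENTROIDS[name]))


if __name__ == "__main__":
    # A reading with low conductance and heart rate falls nearest to "calm".
    print(map_to_emotion((0.25, 0.3, 0.55)))
```

In practice the reference points would be learned from labeled recordings, and the differences between users noted in Section 3 suggest that they may need to be calibrated per user.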

4.2.5

Arthur and D.W Aardvarks:

Systems related to children is common research area. Positive emotions play an important role in learning and mental growth of children. Therefore an aective interface can be important in achieving the learning goals. Two animated, interactive plush dolls ActiMates Arthur and D.W were developed [10]. These two characters are creations of childrens

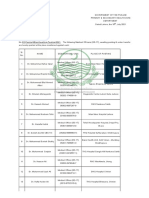

new. Not real applications are already commercialize, currently there are only prototypes of aective application. This is a topic that has a future and will be increasingly present in our technologies life because emotions play an important role in our life. Aective interfaces and applications can help people to feel better with computers and in the daily life. Stress, frustation and angry can be reduced with sensible and adaptive systems. Finally the challenge today is to improve technologies to collect data from dierent features. Existing systems must be improve to really identify emotions despite factors that can skew results. Tools to automatically recognize emotional cues must keep only key items and must throw all the noises. Figure 1: ActiMates D.W and ActiMates Arthur author Marc Brown, and are familiar to children for more than 15 years. These dolls look like animals but have a human behavior with their own personalities. They can move and speak. Seven sensors located in their body allows children to interact with them. Each sensors has one dened function. When the dolls speak three emotional interactions are used: humour, praise and aection. Arthur and D.W. can ask questions, oer opinions, share jokes and give compliments to children. Children interact with these dolls to play games or to listen joke and secret. The goal of these dolls is to promote the mental growth of children through the systematic use of social responses to positive aect during their playful learning eorts.

6.

4.2.6

Affective diary

An aective diary to express innerthoughts and collect experiences or memories was developed [7]. This diary captures the physical aspects of experiences and emotions. The most important element that diers from other traditional diaries is the addition of data collected by sensors like pulse, skin conductivity, pedometer, body movement and posture, etc. Collected data are uploaded via a mobile phone and used to express emotions. Other materials from the mobile phone like texts, MMS messages, photographs, videos, sounds, etc. can be combined and added to events or memories to create the diary. With this system, a graphical representation of the emotions of the day can be created. For that, the user must load all data into the computer, then a movie with ambiguous, abstract colourful body shap is created. This lm is consolidated by an expressive sound or music played in background. The user can modify and organize the movie, edit the content, change the body state or the color, add text, etc.

5.

CONCLUSION

We addressed the role of emotions in life and HCI. We have highlighted the fact that emotions are an important part of the human-computer interaction. The dierent features available to automatically detect emotions of users are the visual, the auditory and the physiological channels. By combining all these data the aective system must be able to determine the emotion of the current user. Several research areas like aircraft, scientic sectors or HCI used the emotions recognition. This subject is hot and attractive but relatively

[1] Irina Cristescu. Emotions in human-computer interaction: the role of non-verbal behavior in interactive systems. Revista Informatica Economica, vol. 2 na46, 110-116, 2008. [2] M. Pantic and L. J. M. Rothkrantz. Toward an aect-sensitive multimodal Human-Computer Interaction. proc. of the IEEE, vol. 91 na9, 1370-1390, September 2003. [3] Yvonne Rogers, Helen Sharp, Jenny Preece. Interaction Design : beyond human-computer interaction. Wiley; 3 edition, chapter 5, June 2011. [4] Luo Qi. Aective Emotion Processing Research and Application. International Symposiums on Information Processing, 768-769, 2008. [5] C. Lisetti, F. nasoz, C. leRouge, O. Ozyer, K. Alvarez. Developing multimodal intelligent aective interfaces for tele-home health care. International journal of Human-Computer Studies, vol.59, na46, 245-255, July 2003. [6] Christine L. Lisetti, Fatma Nasoz. MAUI: a Multimodal Aective User Interface. MULTIMEDIA 02 Proceedings of the tenth ACM international conference on Multimedia, 161-170, 2002. Zk, Petra [7] Madelene lindstrZm, Anna StNhl, kristina HZ SundstrZm, Jarmo Laaksolathi, Marco Combetto, Alex Taylor, Roberto Bresin. Aective Diary - Designing for Bodily Expressiveness and Self-Reection . CHI EA 06 CHI 06 extended abstracts on Human factors in computing systems, 1037-1042, april 2006. [8] Daniel Bernhardt, Peter Robinson. Interactive Control of Music using Emotional Body Expressions. CHI EA 08 CHI 08 extended abstracts on Human factors in computing systems, 3117-3122, april 2008. [9] Hua Wang, Helmut Prendinger, Takeo Igarash. Communicating Emotions in Online Chat using Physiological Sensors and Animated Text. CHI EA 04 CHI 04 extended abstracts on Human factors in computing systems, 1171-1174, april 2004. [10] Erik Strommen, Kristin Alexander. Emotional Interfaces for interactive Aardvarks: Designing Aect into Social Interfaces for Children. 
Proceedings of ACM CHI99 (May 1-3, 1999, Pittsburgh, PA) Conference on Human Factors in Computing Systems, 528-535, may 1999.

REFERENCES
