Detecting Facial Expressions From Face Images Using A Genetic Algorithm



Jun OHYA, Fumio KISHINO
ATR Media Integration & Communications Research Laboratories
2-2 Hikaridai, Seika-cho, Soraku-gun, Kyoto, 619-02, Japan
email: ohya@mic.atr.co.jp

Abstract

A new method to detect deformations of facial parts from a face image, regardless of changes in the position and orientation of the face, using a genetic algorithm is proposed. Facial expression parameters that are used to deform and position a 3D face model are assigned to the genes of an individual in a population. The face model is deformed and positioned according to the gene values of each individual and is observed by a virtual camera, and a face image is synthesized. The fitness, which evaluates to what extent the real and synthesized face images are similar to each other, is calculated. After this process is repeated for sufficient generations, the parameter estimates are obtained from the genes of the individual with the best fitness. Experimental results demonstrate the effectiveness of the method.

1 Introduction

Recently, automatic analysis of face images has become important for realizing a variety of applications such as model-based video coding, intelligent computer interfaces, monitoring applications and visual communication systems. In particular, facial expressions are very important cues for these applications. This paper deals with detecting facial expressions, more specifically the deformation of each facial part at a time instant, regardless of changes in the position and orientation of a face.

In Virtual Space Teleconferencing [1], proposed by the authors, facial expressions of teleconference participants need to be detected and reproduced in the participants' 3D face models in real time. In our current implementation, tape marks are pasted to the participants' faces and are tracked in the face images acquired by small CCD cameras fixed to helmets worn by the participants. This facilitates image processing, but the tape marks and helmets are not appropriate for natural human communication and should be replaced by a passive detection method.

Other related works on facial expression analysis include facial expression recognition and locating faces and facial parts. Methods that recognize facial expressions from dynamic image sequences have been developed [2, 3]. Although their recognition performance is very good, detection of the facial expression at each time instant has not yet been achieved. There are many works on locating faces and facial parts [4, 5]. If the locating processes work constantly, the detection of facial expressions is very promising, but the search algorithm is quite noise sensitive, and fatal errors could be caused by a search failure.

This paper proposes a new passive method that detects the quantitative deformation of facial parts as well as the position and orientation of the face from a face image. As catalogued in Ekman's Facial Action Coding System (FACS) [7], humans can generate a variety of facial expressions. Since facial expressions are caused by actions of facial muscles, each facial part is continuously deformed. Although each facial muscle has a limited range of actions and is not completely independent of other muscles, the number of combinations of deformations of the facial parts is infinite. Finding the set of deformations of the facial parts as well as the position and orientation of a face is a combinatorial optimization problem. To solve this type of problem, a genetic algorithm (GA) [6] is useful and is utilized in this paper.

In the proposed method, a 3D face model of the person whose expressions are to be detected is deformed and positioned according to the parameters assigned to the genes of an individual in a population. The deformed and positioned face model is observed by a virtual camera whose camera parameters are the same as those of the real camera, and a face image is synthesized. The fitness, which evaluates to what extent the real and synthesized face images are similar to each other, is calculated. After this process is repeated for sufficient generations, the parameter estimates are obtained from the genes of the individual with the best fitness. In the following, the proposed method and the experimental results are described in more detail.

*This work was done by the authors mainly at ATR Communication Systems Research Laboratories, Kyoto, Japan.

1015-4651/96 $5.00 © 1996 IEEE. Proceedings of ICPR '96

2 Detecting facial expressions

2.1 Algorithm based on GA

The proposed method for detecting a facial expression at a time instant from a face image using a GA is illustrated in Fig. 1. A face image is acquired by a video camera that observes a human face. The face image is the target (environment) for the GA process explained in the following. The 3D face model of the person whose facial expressions are to be detected is created in advance as described in Section 2.2.

As shown in Fig. 1, the face model is deformed according to the values of the genes $X_1, \ldots, X_n$ of each individual in a population; here the genes correspond to parameters representing facial expressions and to the position and orientation of the face model. Details of the deformation and the definitions of the parameters assigned to the genes are explained in Section 2.2. Each individual has one chromosome, and its genes are bit-strings. The initial values of the genes of each individual are given at random.

The following processes are carried out for each individual. A face model deformed and positioned according to the values of the genes is observed by a virtual camera, and a face image is synthesized. The virtual camera and the real camera have the same camera parameters, e.g. viewing angle, focal length and viewing direction. As in a usual GA procedure, the fitness of the individual to the environment, i.e. the face image from the real camera, is evaluated (Fig. 1). The fitness used in this paper evaluates how similar the target image and the synthesized face image are to each other. First, the error $E$ is calculated by

$$E = \frac{\sum_{i=0,\,j=0}^{W-1,\,H-1} \sum_{k=r,g,b} \left| T_{k,i,j} - S_{k,i,j} \right|}{W \cdot H} \qquad (1)$$

where $k$ denotes red, green and blue; $T_{k,i,j}$ and $S_{k,i,j}$ are the red, green and blue values of the pixel $(i, j)$ in the target and the synthesized face images, respectively; $W$ and $H$ are the width and height of the images. The error $E$ in Eq. (1) is calculated for all individuals in the population. For the subsequent processes, the error $E$ is converted to the fitness $F$, which has a large value if an individual suits the environment.

Individuals with a higher fitness survive and reproduce (can be parents) at a higher rate, and vice versa. That is, natural selection is the process that chooses the parents who can bear children in the next generation. The mechanism of natural selection is obtained from a biased roulette wheel [6], where each individual has a roulette slot sized in proportion to its fitness. According to the probabilities calculated by the biased roulette wheel, individuals to be parents are selected and entered into the mating pool, in which two individuals (parents) are mated and reproduce two new individuals (children). During the mating process, the two genetic operations of crossover and mutation [6] are performed. In this way, new individuals are born, and the same processes are repeated. After a certain number of generations (repetitions of the processes), the estimated values of the parameters are obtained from the values of the genes $X_1, \ldots, X_n$ of the individual with the best fitness in the population.
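The error of Eq. (1) and the biased roulette wheel can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function names are hypothetical, images are represented as nested lists of (r, g, b) tuples for simplicity, and the conversion from $E$ to $F$ is an assumption, since the text only states that $F$ is large when an individual suits the environment.

```python
import random


def image_error(target, synthesized):
    """Eq. (1): sum of absolute R, G, B differences over all pixels, divided by W * H.

    Both images are lists of rows; each pixel is an (r, g, b) tuple.
    """
    height = len(target)
    width = len(target[0])
    total = 0
    for row_t, row_s in zip(target, synthesized):
        for pixel_t, pixel_s in zip(row_t, row_s):
            total += sum(abs(t - s) for t, s in zip(pixel_t, pixel_s))
    return total / (width * height)


def fitness_from_error(error):
    """ASSUMPTION: 1 / (1 + E) is only one monotone choice that grows as E shrinks.

    The conversion actually used in the paper is not given in the text.
    """
    return 1.0 / (1.0 + error)


def roulette_select(fitnesses, rng):
    """Biased roulette wheel: index i is drawn with probability F_i / sum(F)."""
    spin = rng.random() * sum(fitnesses)
    cumulative = 0.0
    for index, value in enumerate(fitnesses):
        cumulative += value
        if spin < cumulative:
            return index
    return len(fitnesses) - 1  # guard against floating-point round-off


# Tiny 2 x 2 example: one synthesized image close to the target, one far from it.
target = [[(10, 20, 30), (0, 0, 0)], [(255, 255, 255), (5, 5, 5)]]
close = [[(10, 20, 30), (0, 0, 0)], [(255, 255, 255), (5, 5, 9)]]
far = [[(0, 0, 0), (0, 0, 0)], [(0, 0, 0), (0, 0, 0)]]

fitnesses = [fitness_from_error(image_error(target, img)) for img in (close, far)]
rng = random.Random(0)
parents = [roulette_select(fitnesses, rng) for _ in range(10)]
print(fitnesses, parents)
```

In this toy case the individual whose image is closer to the target receives a much larger roulette slot, so it is expected to enter the mating pool far more often.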

2.2 How to deform a 3D face model


A face model is deformed according to the values of the genes of an individual by the method developed for the real-time facial expression reproduction system [8] for Virtual Space Teleconferencing. The system consists of three main modules: (1) 3D modeling of faces, (2) real-time detection of facial expressions, and (3) real-time reproduction of the detected expressions in the 3D models. Prior to a teleconference session, 3D wire frame models (WFM) of the persons' faces are created in (1) by a 3D scanner that can acquire the color texture and 3D shape data of an object. In (2), during the teleconference, the tape marks pasted to the persons' faces are tracked in the face images acquired by the small video cameras fixed to the helmets worn by the persons. The tracking results are used to deform the face models in (3) such that the facial expressions are reproduced in the face models by mapping the color texture to the deformed model.

In this paper, the facial expression reproduction method in (3) is used to deform the face model. Since the data to be input to (3) is the 2D displacements of the tape marks in the face images, 2D vectors corresponding to the tape mark displacements are assigned to the genes as parameters representing facial expressions. In the following, although tape marks are not pasted to the faces in the proposed method, details of the deformation are explained using the tape marks to facilitate the explanation.

To convert 2D movements of tape marks to 3D displacements of vertices of the face WFM, 3D shape data of the face generating different facial expressions (reference expressions) is utilized. In general, facial skin does not have salient features except for the areas of the facial parts; thus, many small dots are painted on the facial skin in advance. Each tape mark is pasted on the position of a dot. The 3D positions of the dots for the reference expressions are measured by the 3D scanner mentioned earlier. When the face WFM is created in (1), the positions of the vertices of the WFM are adjusted so that each tape mark is positioned at a vertex, and so is each dot.

To explain the conversion, the 3D coordinate system of the scanner and the 2D coordinate system of the video camera that is used to track the tape marks are defined. As shown in Fig. 2, the coordinate system of the 3D scanner is the $X$-$Y$-$Z$ coordinate system. The CCD camera, which is fixed to the helmet worn by a person, projects the person's face to a 2D plane; this is called the face image. In Fig. 2, the face image is defined by the $x$-$y$ coordinate system. In the two coordinate systems, the $X$ and $Y$ axes are parallel to the $x$ and $y$ axes, respectively, such that a frontal view of a face is obtained from the camera. The two coordinate systems exist in the world coordinate ($X_0$, $Y_0$, $Z_0$) system.

In the position measurements of the dots painted on a face, let $D_{hj}$ and $F_h$ be the $X$-$Y$-$Z$ coordinates of dot $h$ for expression $j$ ($j = 1, 2, \ldots, N$; $N$ is the number of reference expressions, excluding the neutral expression) and for the neutral expression, respectively. Then, the 3D displacements of dot $h$ from the neutral expression are calculated from $M_{hj} = D_{hj} - F_h$. The 2D reference vector $m_{hj}$ is obtained by projecting $M_{hj}$ to the face image (the $x$-$y$ plane).

In the expression reproduction system (3), detected movements of the tape marks in the face image are used to deform the face WFM according to the following algorithm. As explained earlier, there are three types of vertices: a vertex that corresponds to (a) both a tape mark and a dot, (b) a dot (but not a tape mark), and (c) neither a tape mark nor a dot. Each type has its own process to obtain the 3D displacement of a vertex, and the algorithm is carried out in the order of (a), (b), (c).

In (a), let $u_h$ be the detected 2D displacement (from the position for the neutral expression) of the tape mark that corresponds to dot $h$, and let $m_{hi}$ ($i = 1, \ldots, N$; $N$ is the number of reference expressions) be the reference vectors of dot $h$. Let $m_{ha}$ and $m_{hb}$ be the nearest neighbors on both sides of $u_h$, where $a$ and $b$ are the reference expressions. Then, $u_h$ is represented by

$$u_h = k_h m_{ha} + l_h m_{hb} \qquad (2)$$

In Eq. (2), $k_h$ and $l_h$ are weights and can be calculated by solving Eq. (2). Let $M_{ha}$ and $M_{hb}$ denote the original 3D reference vectors of $m_{ha}$ and $m_{hb}$; then, the 3D displacement vector of the vertex corresponding to dot $h$ is obtained from

$$V_h = k_h M_{ha} + l_h M_{hb} \qquad (3)$$

Similarly, the 3D displacement vector of a type (b) vertex is calculated using the data for the type (a) vertex nearest to the (b) vertex. The displacement of a type (c) vertex is calculated using the data for the type (a) and/or (b) vertices that surround the (c) vertex.

In this way, with the detected 2D vector $u_h$ of the tape mark $h$, the face WFM is deformed. Therefore, the $x$-$y$ coordinates of the tape marks are assigned to the genes as parameters representing facial expression. As other parameters, eye openness and the $x$ directional position of the pupil in an eye are assigned to the genes. In addition, the position and orientation of the face, i.e. the translations along the $X$-$Y$-$Z$ axes and the rotations about the three axes, are assigned to the genes.
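For a type (a) vertex, Eq. (2) is a 2 x 2 linear system in the weights $k_h$ and $l_h$, and Eq. (3) reuses those weights on the 3D reference vectors. The following Python sketch shows one way this could be computed. The function names are hypothetical, the choice of the two neighboring reference expressions $a$ and $b$ is assumed to have been made already, and the projection is assumed to be orthographic along $Z$ (the text only states that the $X$, $Y$ axes are parallel to the $x$, $y$ axes).

```python
def project_to_image(vector_3d):
    """ASSUMPTION: orthographic projection onto the x-y plane (drop the Z component)."""
    return (vector_3d[0], vector_3d[1])


def solve_weights(u, m_a, m_b):
    """Solve u = k * m_a + l * m_b (Eq. (2)) for (k, l) with Cramer's rule."""
    det = m_a[0] * m_b[1] - m_a[1] * m_b[0]
    if abs(det) < 1e-12:
        raise ValueError("reference vectors are (nearly) parallel")
    k = (u[0] * m_b[1] - u[1] * m_b[0]) / det
    l = (m_a[0] * u[1] - m_a[1] * u[0]) / det
    return k, l


def displacement_3d(k, l, big_m_a, big_m_b):
    """Eq. (3): V = k * M_a + l * M_b, applied component by component."""
    return tuple(k * a + l * b for a, b in zip(big_m_a, big_m_b))


# Made-up 3D reference displacements of one dot for reference expressions a and b.
M_a = (1.0, 0.0, 0.5)
M_b = (0.0, 1.0, 0.25)
m_a = project_to_image(M_a)
m_b = project_to_image(M_b)

u = (0.3, 0.6)  # 2D displacement of the tape mark (in the GA, taken from the genes)
k, l = solve_weights(u, m_a, m_b)
print(k, l, displacement_3d(k, l, M_a, M_b))
```

The guard on the determinant reflects that the weights are not uniquely defined when the two reference vectors point in the same direction; how the original system handles that case is not stated in the text.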

3 Experimental results and discussion

The face WFM used for the experiments has 1610 vertices, to which seven tape marks and 310 dots are assigned. The positions of the seven (numbered) tape marks for the neutral expression are displayed in Fig. 3; the marks to be tracked are pasted on one half of the face, because we assume that faces are symmetrical.

In this paper, face images synthesized using the face WFM described above are used as target images to facilitate a comparison between real and estimated parameter values. The viewpoint for synthesizing face images is placed on the $Z_0$ axis of the world coordinate system (Fig. 2), where a frontal view of the face model can be obtained if the face model coordinate system ($X$-$Y$-$Z$) is a translation of the world coordinate system ($X_0$-$Y_0$-$Z_0$). The images are synthesized under orthographic projection. The size of the synthesized face images is 256 x 256 pixels. Each pixel has 8-bit red, green and blue color values.

In the GA procedure described in Section 2.1, each individual in the population has one chromosome and is asexual-haploid. The genes are 12-bit integers, and the integers are converted to real values. The population size is 100, and the number of generations is 300. The crossover and mutation rates are 0.3 and 0.003, respectively. The number of parameters to be estimated is 21: 14 for the tape marks, 2 for eye openness and the pupil, and 5 for the position and orientation parameters of the face. The computer used is a Silicon Graphics IRIS Workstation (Indigo).

An example of the synthesized face images is shown in Fig. 4. The parameters used for synthesizing the image are listed in Table 1 as the real parameter values to be estimated. In Table 1, the parameters for facial expression, i.e. the displacements of the seven tape marks from the neutral expression, are given by the $x$-$y$ coordinates in the CCD camera image (Fig. 2), whose $x$ and $y$ lengths are 640 and 480 pixels, respectively. Similarly, eyelid openness is given by the $y$ coordinate of the lower edge of the eyelid, and the position of the pupil is given by the $x$ coordinate. As for the face position parameters in Table 1, the translation parameters of the face model are given by the normalized $X_0$-$Y_0$ coordinates, which correspond to the horizontal and vertical coordinates of the synthesized face image and take values between -3.0 and 3.0. The rotations (in degrees) of the face model are about the $X_0$, $Y_0$ and $Z_0$ axes, respectively.

Experiments to detect facial expressions were carried out for the synthesized images. The estimated parameter values are listed in Table 1. The estimated face images synthesized by using the estimated parameter values are also shown in Fig. 4, in which the target and estimated faces look quite similar in each example. In all the cases, the position and orientation parameters of the face model are estimated very accurately. The parameters for facial expressions are also detected fairly accurately. Although the computation time is approximately five hours with the current implementation on an Indigo workstation, the fidelity of the reproductions obtained by using the detected parameter values is good enough for our teleconferencing application. A hardware-based implementation is necessary to accelerate the processing.

To test the robustness of the algorithm against rotations of the face, face images are synthesized by rotating the face model by 20° to 80°. The rotated (40°) face images synthesized using the real and estimated parameter values are shown in Fig. 5. The real and estimated values for each rotation value are listed in Table 2, from which it turns out that the rotations are estimated very accurately. Regarding the detection of facial expressions, eyelid estimation is not very accurate in some cases. The error $E$ in Eq. (1) is computed over the entire face image; therefore, local information on the individual facial parts could be utilized to improve such estimates. It turned out that the proposed method is globally robust against face rotations. The experimental results of this paper demonstrate the possibility that facial expressions could be detected regardless of changes in the pose of a face.
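The GA settings reported above (21 genes of 12 bits each, crossover rate 0.3, mutation rate 0.003) can be put into a short Python sketch of the gene decoding and the two genetic operators. Several points are assumptions rather than details from the paper: the linear mapping from a 12-bit integer to a real value, single-point crossover in the style of a simple GA, and treating the crossover rate as a per-mating probability and the mutation rate as a per-bit probability. All names are hypothetical.

```python
import random

GENE_BITS = 12         # each gene is a 12-bit integer
NUM_GENES = 21         # 14 tape-mark coordinates, 2 eye parameters, 5 pose parameters
CROSSOVER_RATE = 0.3   # assumed to be the probability that a pair is crossed over
MUTATION_RATE = 0.003  # assumed to be the probability of flipping each bit


def decode_gene(bits, low, high):
    """ASSUMPTION: map the 12-bit integer linearly onto the interval [low, high]."""
    n = int("".join(str(b) for b in bits), 2)
    return low + (high - low) * n / (2 ** GENE_BITS - 1)


def decode_chromosome(chromosome, ranges):
    """Split the bit list into consecutive 12-bit genes and decode each one."""
    return [
        decode_gene(chromosome[i * GENE_BITS:(i + 1) * GENE_BITS], low, high)
        for i, (low, high) in enumerate(ranges)
    ]


def crossover(parent_a, parent_b, rng):
    """Single-point crossover (assumed variant); returns two children."""
    if rng.random() < CROSSOVER_RATE:
        point = rng.randrange(1, len(parent_a))
        return (parent_a[:point] + parent_b[point:],
                parent_b[:point] + parent_a[point:])
    return parent_a[:], parent_b[:]


def mutate(chromosome, rng):
    """Flip each bit independently with probability MUTATION_RATE."""
    return [1 - b if rng.random() < MUTATION_RATE else b for b in chromosome]


rng = random.Random(0)
length = GENE_BITS * NUM_GENES  # 252 bits per chromosome
mother = [rng.randint(0, 1) for _ in range(length)]
father = [rng.randint(0, 1) for _ in range(length)]
child_1, child_2 = crossover(mother, father, rng)
child_1 = mutate(child_1, rng)

# Illustrative ranges only: every gene is mapped to [-3.0, 3.0] here, the interval
# the text gives for the normalized translation parameters.
print(decode_chromosome(child_1, [(-3.0, 3.0)] * NUM_GENES)[:3])
```

With these settings one generation of 100 individuals requires 100 image syntheses and evaluations of Eq. (1), and 300 generations require 30,000, which is consistent with the evaluation step dominating the reported computation time.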

4 Conclusions

This paper has presented a method to detect facial expressions from a face image acquired by a video camera using a genetic algorithm. Our method is a passive method and needs neither 3D reconstruction, which is generally a difficult task, nor search techniques, which are quite noise sensitive. The only image processing in our method is a pixel-by-pixel comparison of the target and synthesized images. Experiments to detect facial expressions were carried out for synthesized face images. The experimental results in this paper show that the proposed method could cope with changes in the pose of a face and achieve accurate detection of facial expressions. Although the results are promising, the robustness of the method should be tested for real face images. The proposed method is still computationally intensive. Accelerating the processing by a hardware implementation should be studied.

References

[1] J. Ohya et al., Virtual Space Teleconferencing: Real-time reproduction of 3D human images, J. of Visual Communication and Image Representation, Vol. 6, No. 1, pp. 1-25, (Mar. 1995).

[2] M.J. Black et al., Tracking and recognizing rigid and non-rigid facial motions using local parametric models of image motion, Proc. of Fifth ICCV, pp. 374-381, (Jun. 1995).

[3] I.A. Essa et al., Facial expression recognition using a dynamic model and motion energy, Proc. of Fifth ICCV, pp. 360-367, (Jun. 1995).

[4] H.P. Graf et al., Locating faces and facial parts, Proc. of International Workshop on Automatic Face- and Gesture-Recognition, pp. 41-46, (Jun. 1995).

[5] L.C. DeSilva et al., Detection and tracking of facial features, Proc. of SPIE, Visual Communication and Image Processing 95, Vol. 2501, pp. 1161-1172, (May 1995).

[6] D.E. Goldberg, Genetic Algorithms in Search, Optimization, and Machine Learning, Addison-Wesley Publishing Company, Inc., 1989.

[7] P. Ekman et al., Facial Action Coding System, Consulting Psychologists Press Inc., 1978.

[8] K. Ebihara et al., Real-time 3-D facial image reconstruction for Virtual Space Teleconferencing, The Transactions of The Institute of Electronics, Information and Communication Engineers A, Vol. J79-A, No. 2, pp. 527-536, (Feb. 1996) (in Japanese).

652

3D Face Model

t

1

Real face

Genes

I

Mating pool (Mutation, Crossover)

Pea'

camera

Fig.4 Target and estimated face images

Virtual camera

A

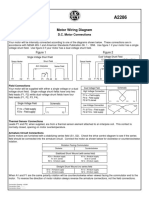

Table 1 Real and estimated vades for Fig.4 *1 z and y (pixels), *2 Normalized XO and YO, *3 Degrees about XO, I$, ZOaxes

Estimated Darameters

I

with the best fitness

1

Parameters Q2

Fig.1 Principle

Face Image

Real XlXO 1 YIYO 22 j 5 j -8 01

~

11

20

Estimated

~

- I 22.0 1 - I -6.2 1

1 XIXo

YiYO

I 20

-

5.1 j 0 . 01

'

Facial

expressions

Face

3DScanncr

Eyeli

World Coordinate System

Fig.2 Definitions of the coordinate systems

Fig.5 Rotated (40') face image Table 2 Real ,and estimated vades for Fig.5

#7

-7

,, - 1

. j -14.5

-,

.

-2.7

201

-0.75

-1

Fig.3 Positions of the tape marks

.I . 1 .

0.3 0.50

8.6 .

.0.75 ,

40.0 10.0

0.0 j 39.8 -0.1

653
