COMPUTER STUDIES

Paper 7010/01

Paper 1

General comments

The general standard of work from candidates was very similar this year to previous years. It was pleasing

to note that there was less evidence of rote learning in the questions which required definitions and

descriptions of standard processes.

There were one or two new topics in the syllabus for 2006 and these appeared to cause the candidates the

greatest challenge.

Questions which involve interpreting or writing algorithms continue to cause many candidates problems. The

last question on the paper, which requires candidates to write their own algorithm, can be answered either in

the form of pseudocode or flowchart. Weaker candidates should consider using flowcharts if their algorithmic

skills are rather limited.

Comments on specific questions

Question 1

(a) Many candidates gained one mark here for examples given or human checks/double entry. It

proved more difficult to obtain the second mark since many answers were referring to validation

checks rather than verification.

(b) This was generally well answered with most candidates aware of the hardware needed (i.e.

webcams, microphones, speakers, etc.) and the need for a network or Internet connection. There

were the usual very general answers, such as "it is a way of communication between people"; this could

refer to a telephone system or any other communication device.

(c) This question was well answered with many candidates gaining maximum marks. The only real

error was to refer to simply sending signals without mentioning that there is a need to exchange

signals for handshaking to work.

(d) Again this was generally well answered. The most common example was a flight simulator. There

was some confusion between simulations and virtual reality with many candidates not fully aware

of the differences.

(e) The majority of candidates got full marks here; most were aware of the use of batch processing in

utility billing and producing payslips.
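The distinction drawn in part (a) between verification and validation can be illustrated with a minimal sketch. The function names and the 0 to 120 age range are hypothetical, chosen only for illustration, and are not taken from the question paper.

```python
def verify_double_entry(first_entry: str, second_entry: str) -> bool:
    """Verification: the same data is keyed in twice and the two copies compared."""
    return first_entry == second_entry


def validate_age(value: str, low: int = 0, high: int = 120) -> bool:
    """Validation: the data is checked for being sensible (type and range check)."""
    # The range limits are an assumption made for this example only.
    return value.isdecimal() and low <= int(value) <= high


# A mistyped but plausible value passes validation yet fails verification.
print(validate_age("61"))               # True
print(verify_double_entry("16", "61"))  # False
```

Validation can only show that data is reasonable; verification checks that it was copied correctly.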

Question 2

In this question, the majority of candidates named a correct direct data capture device. However, they were

either unable to give a suitable application or their answer was too vague to be awarded a mark. For

example: device: bar code reader (1 mark); application: to read bar codes (0 marks, since no application

such as an automatic stock control system was mentioned). Device: camera (1 mark); application: to take

photographs (0 marks, since this is not an application; a suitable example would be a speed camera).

7010 Computer Studies November 2006


Question 3

(a) This was generally well answered. The most common error was to simply give the word "virus"

rather than say that it is the planting or sending of the virus that is the crime.

(b) Again, fairly well answered with most prevention methods, such as encryption, passwords,

anti-virus software, etc. chosen. However, it is still common to see candidates think back-ups prevent

computer crime; this simply guards against the effect of computer crime but does not prevent it.

Question 4

This question was not well answered with many candidates simply referring to computer use in general

rather than answering the question which asked about effects on society. Therefore, answers that

mentioned health risks, hacking, etc. were not valid. However, loss of jobs, less interaction between people,

town centres become deserted as shops close, etc. are all valid effects.

Question 5

This was a new topic and caused a few problems for many candidates. It was common to see references to

word processors, CAD software, etc. without actually answering the question. Computer software is used in

the film/TV industry to create animation effects, produce special effects (e.g. in science fiction/fantasy or in

morphing), synchronizing voice outputs with cartoon characters, etc.

Question 6

Many of the answers given here were too vague and consequently very few candidates gained good marks.

The design stage needs, for example, "design input forms" and NOT just "input", or "select validation rules" and

NOT just "validation". Many marks were lost for non-specific answers.

Question 7

(a) Many general answers describing what expert systems were and how they were created. The

question, however, was asking for HOW the expert system would be USED to identify mineral

deposits. This would include a question and answer session between computer and user,

searching of a knowledge base and a map of mineral deposits/probability of finding minerals.

(b) This was well answered by the majority of candidates.

Question 8

(a) (b) Generally well answered. Some candidates, however, just described general effects of introducing

computer-based systems rather than making a comparison with a manual filing system. The main

advantages are the reduction in paperwork, much faster search for information and ease of cross

referencing. The usual effects of such a system would be unemployment, need for re-training and

de-skilling.

(c) Part (i) of this question was well answered, but part (ii) caused a few problems. Some of the main

areas where parallel running would not be appropriate include control applications or POS

terminals.

Question 9

(a) Most candidates made an attempt at this question although very few spotted all 3 errors: product

set at 0, incorrect count control and output in the wrong place. The most common error found was

the initialisation of product. Several candidates thought that syntax errors were the main fault e.g.

output should be used instead of print, LET count = 0 rather than just count = 0.

(b) No real problems here except an alarming number of candidates chose IF THEN ELSE as an

example of a loop construct.
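The question paper's algorithm is not reproduced in this report, so the following is only a hypothetical sketch of the three kinds of error listed in part (a), shown in corrected form. The function name and sample values are assumptions made for illustration.

```python
def product_of_inputs(values, how_many):
    """Multiply together the first how_many values (hypothetical corrected algorithm)."""
    product = 1                 # initialising product to 0 would force every result to 0
    count = 0
    while count < how_many:     # count control: runs exactly how_many times
        product = product * values[count]
        count = count + 1
    return product              # result produced once, after the loop has finished


print(product_of_inputs([2, 3, 4], 3))  # 24
```

The WHILE loop above is a loop construct because it repeats its body; IF THEN ELSE only chooses between alternatives once, which is why it is not a valid answer to part (b).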


Question 10

This question was very well answered by the majority of candidates. The only real mistake was to write out

the instructions in full rather than use the commands supplied.

Question 11

Generally well answered. In part (b) marks were lost for brackets in the wrong place or for using formulae

such as AVERAGE(C2:F2)/4 i.e. not realising that the word AVERAGE carried out the required calculation.

Part (c) probably caused the greatest problems to candidates with very few giving a good description of the

descending sort routine.
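The AVERAGE error in part (b) can be shown with a short sketch, using Python's statistics.mean in place of the spreadsheet function. The four marks are invented stand-ins for the contents of cells C2:F2. The last line sketches a descending sort of the same values, as a loose analogue of part (c).

```python
from statistics import mean

marks = [40, 60, 80, 20]  # hypothetical contents of cells C2:F2

correct = mean(marks)          # equivalent to AVERAGE(C2:F2)
also_correct = sum(marks) / 4  # equivalent to SUM(C2:F2)/4
wrong = mean(marks) / 4        # equivalent to AVERAGE(C2:F2)/4: divides twice

descending = sorted(marks, reverse=True)  # largest value first

print(correct, also_correct, wrong)
print(descending)
```

Because the averaging function already divides by the number of values, dividing its result by 4 again gives a quarter of the true average.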

Question 12

Part (a) gave no problems. In the second part, most candidates gained 1 mark for general information about

flights. The third part caused the most problems with some candidates believing the information kiosks

would be linked into Air Traffic Control.

Question 13

(a) Many candidates gained 1 mark here for reference to a computer simulation. It is clear from this

question and Question 1(d) (description of simulation) that many candidates are not fully aware of

the differences between virtual reality and simulations.

(b) Many candidates gave the correct interface devices such as data goggles and gloves, special suits

fitted with sensors etc. Unfortunately, the usual array of devices such as printers, VDUs, and

keyboards appeared as well.

(c) Very few candidates understood the real advantages of virtual reality systems. The type of

answers expected included: it is much safer (e.g. can view inside a nuclear reactor), the feeling of

being there, ability to perform actual tasks without any risk, etc.

(d) Training aspects were the most common examples here. Many candidates just wrote down "games",

which is not enough on its own since many computer games do not make any use of virtual reality

technology.

Question 14

No real problems here although the most common error was just to give a description rather than describe

the benefits/advantages of top down design.

Question 15

Most candidates gained 1 mark for the portability aspect of laptops in part (a). In part (b) most marks were

gained for laptops being easier to damage or steal and also tend to be more expensive to buy in the first

place. However, several candidates referred to wireless connections when the question was asking for

differences between laptop computers and desktop computers.

Question 16

(a) Many general responses were given here e.g. spreadsheets are used to draw graphs or can be

used to teach students how to use spreadsheets, or DTP is used to produce leaflets. The question

was asking how these 4 packages would be used by the company to run on-line training courses.

Thus, answers expected included: use spreadsheets to keep candidate marks, use a database

program to keep candidate details, use DTP to design and produce training material and use an

authoring package to carry out website design.

(b) This part was fairly well answered with most candidates aware of how word processed documents

could be suitably modified to fit one page.


Question 17

This question proved to be a very good discriminator with marks ranging from 0 to 5 with all marks between.

The most common error was to switch items 4 (error report) and 6 (reject item) which lost 2 marks. Many

candidates quite correctly used numbers in the boxes rather than try and squeeze in the full descriptions.

Question 18

Again, this was fairly well answered by most candidates. The only real problem occurred in part (c) where

an incorrect query was supplied or an AND statement was used in error. In part (d), customer or customer

name was given rather than customer id or customer ref no.

Question 19

Part (a) caused few problems although there were a surprising number of temperature sensors given as

ways of detecting cars. The third part was not very well answered. Many answers took the form: "as a car

approaches the bridge the lights change to green and the other light turns red"; this would be an interesting

way to control traffic! Very few candidates mentioned counting cars approaching the bridge on both sides

and using the simulation results to determine the traffic light timing sequences (the number of cars in various

scenarios would be stored on file and a comparison would be made to determine the lights sequence). In

part (d), a few candidates suggested turning off the lights altogether after a computer failure or putting both sets

of lights to green; neither option would be a particularly safe method. The most likely solution would be to

put both sets of lights to red until the problem could be sorted or put both sets of lights to flashing amber and

warn drivers to approach with caution.
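The approach described above (counting cars on both sides, comparing the counts with stored simulation results and falling back to a safe state on failure) might be sketched as follows. All scenario figures, timings and function names are invented for illustration and are not taken from the question paper.

```python
# Hypothetical stored simulation results: (cars on side A, cars on side B)
# mapped to green times in seconds for (side A, side B). All figures invented.
STORED_SCENARIOS = [
    ((5, 5), (20, 20)),
    ((20, 5), (40, 15)),
    ((5, 20), (15, 40)),
    ((20, 20), (30, 30)),
]

FAIL_SAFE = ("red", "red")  # both sets of lights to red if the computer fails


def green_times(cars_a: int, cars_b: int) -> tuple:
    """Pick the timings of the stored scenario closest to the counted traffic."""
    def distance(scenario):
        (a, b), _timings = scenario
        return abs(a - cars_a) + abs(b - cars_b)

    _counts, timings = min(STORED_SCENARIOS, key=distance)
    return timings


def light_state(computer_ok: bool, side_with_green: str) -> tuple:
    """Return (light A, light B); the two sides are never green together."""
    if not computer_ok:
        return FAIL_SAFE
    return ("green", "red") if side_with_green == "A" else ("red", "green")


print(green_times(18, 4))       # (40, 15)
print(light_state(False, "A"))  # ('red', 'red')
```

Flashing amber on both sides, the other safe option mentioned above, could be substituted for the fail-safe state.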

Question 20

This caused the usual array of marks from 0 to 5. The better candidates scored good marks here with well

written algorithms. The very weak candidates continue to simply re-write the question in essay form only to

gain zero marks. Very few candidates realise that one of the best ways of answering questions of this type is

to supply a flowchart. This avoids the need to fully understand pseudocode and is also a very logical way of

approaching the problem. We would advise Centres to consider this approach with their weaker candidates

when preparing for this exam in future years.


COMPUTER STUDIES

Paper 7010/02

Project

General comments

The quality of work was of a slightly higher standard than last year. There were fewer inappropriate projects

which provided limited opportunities for development and, therefore, did not qualify for one of the higher

grades. Such projects included word-processing/DTP projects of a theoretical nature.

The majority of Centres assessed the projects accurately according to the assessment headings. In some

instances, however, marks were awarded by the Centre where there was no written evidence in the

documentation. Marks can only be awarded where there is written proof in the documentation. It is

important to realise that the project should enable the candidate to use a computer to solve a significant

problem, be fully documented and contain some sample output that matches their test plans. A significant

number of Centres failed to provide the correct documentation for external moderation purposes. Firstly, the

syllabus requires a set number of projects to be provided as a sample and full details can be found in the

syllabus. A number of Centres still send the work for all candidates; this is only required where the number

of candidates is ten or less. It is only necessary for schools to carry out internal moderation when more than

one teacher is involved in assessing the projects. A number of Centres appear to be a combination of one or

more different schools; it is vital that internal moderation takes place between such joint schools. In these

cases, schools need to adjust the sample size to reflect the joint total number of entries.

The standard of presentation and the structure of the documentation continued to improve. Many candidates

structured their documentation around the broad headings of the assessment scheme, and this is to be

commended. Candidates might find it useful to structure their documentation using the following framework.

Many of the sections correspond on a one-to-one basis to the assessment headings, some

combine assessment headings and some carry no marks but form part of a logical sequence of

documentation.

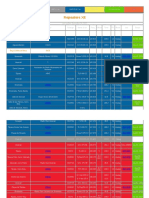

Suggested framework for Documentation of the Project

ANALYSIS

Description of the problem

List of Objectives (in computer-related terms or computer processes)

Description of Existing Solution and business objectives

Evaluation of Existing Solution

Description of Other Possible Solutions

Evaluation of Other Possible Solutions

DESIGN

Action Plan (including a time scale or Gantt chart)

Hardware Requirements (related to their own solution)

Software Requirements (related to their own solution)

IMPLEMENTATION

Method of Solution (related to the individual problem, including any algorithms, flowcharts,

top down designs or pseudocode.)


TESTING

Test strategy/plans:

Normal data (including the expected results and the objective to be tested)

Extreme data (including the expected results and the objective to be tested)

Abnormal data (including the expected results and the objective to be tested)

Test Results:

Normal data (including the objective to be tested)

Extreme data (including the objective to be tested)

Abnormal data (including the objective to be tested)

DOCUMENTATION

Technical Documentation

User Documentation/User Guide

SYSTEM EVALUATION AND DEVELOPMENT

Evaluation (must be based on actual results/output which can be assessed from

the written report and referenced to the original objectives)

Future Development/Improvements

The assessment forms for use by Centres should not allow for a deduction for the trivial nature of any

project. One of the moderator's roles is to make such a deduction. Therefore, if the Centre thinks that a

deduction should be made in this section then that particular project must be included in the sample.

Centres should note that the project work should contain an individual mark sheet for every candidate and

one or more summary mark sheets, depending on the size of entry. It is recommended that the Centre retain

a copy of the summary mark sheet(s) in case this is required by the moderator. In addition, the top copy of

the MS1 mark sheet should be sent to Cambridge International Examinations by separate means. The

carbon copy should be included with the sample projects. Although the syllabus states that disks should not

be sent with the projects, it is advisable for Centres to make back up copies of the documentation and retain

such copies until after the results query deadlines. Although disks or CDs should not be submitted with the

coursework, the moderators reserve the right to send for any available electronic version. Centres should

note that on occasions coursework may be retained for archival purposes.

The standard of marking is generally of a consistent nature and of an acceptable standard. However, there

are a few Centres where there was a significant variation from the prescribed standard, mainly for the

reasons previously outlined. It is recommended that when marking the project, teachers indicate in the

appropriate place where credit is being awarded, e.g. by writing "2,7" in the margin when awarding two

marks for section seven.

Areas of relative weakness in candidates' documentation included setting objectives, hardware, algorithms,

testing and a lack of references back to the original objectives. Centres should note that marks can only be

awarded when there is clear evidence in the documentation. A possible exception would be in the case of a

computer control project where it would be inappropriate to have hard copy evidence of any testing strategy.

In this case, it is perfectly acceptable for the teacher to certify copies of screen dumps or photographs to

prove that testing has taken place.

The mark a candidate can achieve is often linked to the problem definition. It would be in the candidates'

interest to set themselves a suitable project and not one which is too complex (for example, it is far too

complex a task for a student to attempt a problem which will computerise a hospital's administration). The

candidates needed to describe in detail the problem and, where this was done correctly, it enabled the

candidate to score highly on many other sections. This was an area for improvement by many candidates. If

the objectives are clearly stated in computer terms, then a testing strategy and the subsequent evaluation

should follow on naturally, e.g. print a membership list, perform certain calculations, etc. Candidates should

note that they should limit the number of objectives for their particular problem; it is inadvisable to set more

than 7 or 8 objectives. If candidates set themselves too many objectives, then they may not be able to

achieve all of them and this prevents them from scoring full marks.


There was evidence that some candidates appeared to be using a textbook to describe certain aspects of

the documentation. Some candidates did not attempt to write the hardware section of the documentation

with specific reference to their own problem. It is important to note that candidates should write their own

documentation to reflect the individuality of their problem and that group projects are not allowed. Where the

work of many candidates from the same centre is identical in one or more sections then the marks for these

sections will be reduced to zero, for all candidates, by the moderators. Centres are reminded of the fact that

they should supervise the candidates' work and that the candidate verifies that the project is their own work.

The hardware section often lacked sufficient detail. Full marks were scored by a full technical specification of

the required minimum hardware, together with reasons why such hardware was needed by the candidate's

solution to his/her problem. As an example of the different levels of response needed for each of the marks,

consider the following:

"I shall need a monitor" is a simple statement of fact and would be part of a list of hardware.

"I shall need a 17-inch monitor" is more descriptive and more specific.

"I shall need a 17-inch monitor because it provides a clearer image" gives a reason for the choice, but

is still not at the top mark.

"I will need a 17-inch monitor because it gives a clearer image, which is important because the user is

short sighted": NOW it has been related to the problem being solved.

Candidates need to provide a detailed specification and justify at least two hardware items in this way to

score full marks.

Often the algorithms were poorly described and rarely annotated. Candidates often produced pages and

pages of computer generated algorithms without any annotation; in these cases it was essential that the

algorithms were annotated in some way in order to show that the candidates understood their algorithm.

Candidates should ensure that any algorithm is independent of any programming language and that another

user could solve the problem by any appropriate method, either programming or using a software

application. If a candidate uses a spreadsheet to solve their problem, then full details of the formulae and

any macros should be included.

Many candidates did not produce test plans by which the success of their project could be evaluated. It is

vital that candidates include in their test strategy the expected result. This is the only way in which the actual

results can be judged to be successful. If these expected results are missing, then the candidate will

automatically score no marks in the evaluation section. The test results should include output both

before and after any test data; such printouts should be clearly labelled and linked to the test plans. This will

make it easy to evaluate the success or failure of the project in achieving its objectives. Such results must

be obtained by actually running the software and not the result of word-processing. The increasing

sophistication of software is such that it can sometimes be difficult to establish if the results are genuine

outputs which have been cut and pasted or simply a word-processed list of what the candidate expects the

output to be. Candidates need to ensure that their documentation clearly shows that the output is the result

of actually using the proposed system. The use of screen dumps to illustrate the actual sample runs

provides all the necessary evidence, especially in the case of abnormal data where the error message can

be included.
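As an illustration of a test plan that records the expected result in advance, the following minimal sketch runs normal, extreme and abnormal data against a hypothetical rule (a mark must be a whole number from 0 to 100). The rule, the test data and the function names are all assumptions made for this example.

```python
def accept_mark(mark) -> bool:
    """Hypothetical rule under test: a whole number from 0 to 100 inclusive."""
    return isinstance(mark, int) and not isinstance(mark, bool) and 0 <= mark <= 100


# Each planned test states its data type, the test data and the expected result.
TEST_PLAN = [
    ("normal", 55, True),
    ("extreme", 0, True),
    ("extreme", 100, True),
    ("abnormal", 101, False),
    ("abnormal", "abc", False),
]


def run_test_plan(plan):
    """Run every planned test and record expected against actual results."""
    results = []
    for category, data, expected in plan:
        actual = accept_mark(data)
        results.append((category, data, expected, actual, actual == expected))
    return results


for row in run_test_plan(TEST_PLAN):
    print(row)
```

Each row pairs the expected result with the actual one, which is the comparison that allows a test to be judged a success or a failure.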

An increasing number of candidates are designing websites as their project. Candidates must include site

layout and page links in their documentation. The better candidates should include external links and

possibly a facility for the user to leave an email for the webmaster; in this case the work would qualify for the

marks in the modules section.

The moderation team have also commented on the fact that only a small number of Centres appear to act on

the advice given in the Centre reports and that candidates make the same omissions from their

documentation year on year.
