Advance Computer Architecture Homework 2 Solution

Uploaded by Jahanzaib Awan

Homework 2 Solutions

B.5

A useful tool for solving this type of problem is to extract all of the available information from the problem description. It is possible that not all of the information will be necessary to solve the problem, but having it in summary form makes it easier to think about. Here is a summary:

• CPU: 1.1 GHz (0.909 ns equivalent), CPI of 0.7 (excludes memory accesses)

• Instruction mix: 75% non-memory-access instructions, 20% loads, 5% stores

• Caches: split L1 with no hit penalty (i.e., the access time is the time it takes to execute the load/store instruction)

  - L1 I-cache: 2% miss rate, 32-byte blocks (requires 2 bus cycles to fill), miss penalty is 15 ns + 2 cycles

  - L1 D-cache: 5% miss rate, write-through (no write-allocate), 95% of all writes do not stall because of a write buffer, 16-byte blocks (requires 1 bus cycle to fill), miss penalty is 15 ns + 1 cycle

• L1/L2 bus: 128-bit, 266 MHz bus between the L1 and L2 caches

• L2 (unified) cache: 512 KB, write-back (write-allocate), 80% hit rate, 50% of replaced blocks are dirty (must go to main memory), 64-byte blocks (requires 4 bus cycles to fill), miss penalty is 60 ns + 4 × 7.5 ns = 90 ns

• Memory: 128 bits (16 bytes) wide, first access takes 60 ns, subsequent accesses take 1 cycle on a 133 MHz, 128-bit bus
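All of the miss penalties summarized above follow one pattern: a fixed access latency, then one bus cycle per bus-width chunk of the block. The following is a minimal C sketch of that arithmetic, not part of the original solution; the name `fill_time_ns` is hypothetical, and it assumes the rounded bus cycle times of 3.75 ns (266 MHz) and 7.5 ns (133 MHz) and a block size that is a multiple of the bus width.

```c
#include <assert.h>

/* Time to fill one cache block: a fixed access latency, then one bus cycle
   per bus-width chunk of the block. Assumes the block size is a multiple
   of the bus width, as it is for every cache in this exercise. */
static double fill_time_ns(double access_ns, int block_bytes, int bus_bytes,
                           double bus_cycle_ns) {
    assert(bus_bytes > 0 && block_bytes % bus_bytes == 0);
    int transfers = block_bytes / bus_bytes;   /* bus cycles needed */
    return access_ns + transfers * bus_cycle_ns;
}
```

For example, `fill_time_ns(15.0, 32, 16, 3.75)` gives 22.5 ns for an L1 I-cache miss served by L2, `fill_time_ns(15.0, 16, 16, 3.75)` gives 18.75 ns for an L1 D-cache miss, and `fill_time_ns(60.0, 64, 16, 7.5)` gives 90 ns for an L2 miss served by memory.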

a. The average memory access time for instruction accesses:

• L1 (inst) miss time in L2: 15 ns access time plus two L2 cycles (two = 32 bytes in inst. cache line / 16 bytes width of L2 bus) = 15 + 2 × 3.75 = 22.5 ns. (3.75 ns is equivalent to one 266 MHz L2 cache cycle.)

• L2 miss time in memory: 60 ns plus four memory cycles (four = 64 bytes in L2 cache block / 16 bytes width of memory bus) = 60 + 4 × 7.5 = 90 ns. (7.5 ns is equivalent to one 133 MHz memory bus cycle.)

• Avg. memory access time for inst = avg. access time in L2 cache + avg. access time in memory + avg. access time for L2 write-back

= 0.02 × 22.5 + 0.02 × (1 - 0.8) × 90 + 0.02 × (1 - 0.8) × 0.5 × 90 = 0.99 ns (1.09 CPU cycles)
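The three-term average in part a weights each cost by how often it occurs: every L1 miss pays the L2 fill time, the fraction that also misses in L2 pays the memory fill time, and the dirty fraction of those pays one more memory time for the write-back. A minimal C sketch of that formula follows; the function names are hypothetical, and it assumes, as the solution does, that a dirty write-back costs one full memory fill time and that 1 GHz corresponds to one cycle per ns.

```c
#include <assert.h>

/* Average extra time per access caused by L1 misses, in ns:
     miss_rate * l1_fill_ns                                (served by L2)
   + miss_rate * l2_miss_rate * mem_fill_ns                (L2 miss, fetch from memory)
   + miss_rate * l2_miss_rate * dirty_fraction * mem_fill_ns  (dirty L2 victim written back) */
static double amat_ns(double l1_miss_rate, double l1_fill_ns,
                      double l2_miss_rate, double mem_fill_ns,
                      double dirty_fraction) {
    assert(l1_miss_rate >= 0.0 && l1_miss_rate <= 1.0);
    assert(l2_miss_rate >= 0.0 && l2_miss_rate <= 1.0);
    double to_l2     = l1_miss_rate * l1_fill_ns;
    double to_mem    = l1_miss_rate * l2_miss_rate * mem_fill_ns;
    double writeback = l1_miss_rate * l2_miss_rate * dirty_fraction * mem_fill_ns;
    return to_l2 + to_mem + writeback;
}

/* Convert ns to CPU cycles for a clock given in GHz (1 GHz = 1 cycle per ns). */
static double ns_to_cycles(double ns, double clock_ghz) {
    return ns * clock_ghz;
}
```

With the instruction-side values, `amat_ns(0.02, 22.5, 0.2, 90.0, 0.5)` returns about 0.99 ns, and `ns_to_cycles` turns that into about 1.09 cycles at 1.1 GHz. The data-read case uses the same function with the D-cache miss rate and the 18.75 ns fill time.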

b. The average memory access time for data reads

The formula is similar to the one above, with two differences: data reads miss in the L1 D-cache, whose miss rate is 5% (not the 2% of the I-cache), and the D-cache block is 16 bytes, which takes one L2 bus cycle to transfer (versus two for the inst. cache), so

• L1 (read) miss time in L2: 15 + 3.75 = 18.75 ns

• L2 miss time in memory: 90 ns

• Avg. memory access time for reads = 0.05 × 18.75 + 0.05 × (1 - 0.8) × 90 + 0.05 × (1 - 0.8) × 0.5 × 90 = 2.29 ns (2.52 CPU cycles)

c. The average memory access time for data writes

Assume that write misses are not allocated in L1, hence all writes use the write buffer. Also assume the write buffer is as wide as the L1 data cache block. Because the write buffer absorbs 95% of writes, only the remaining 5% stall and see the L2 (and possibly memory) latency.

• L1 (write) time to L2: 15 + 3.75 = 18.75 ns

• L2 miss time in memory: 90 ns

• Avg. memory access time for data writes = 0.05 × 18.75 + 0.05 × (1 - 0.8) × 90 + 0.05 × (1 - 0.8) × 0.5 × 90 = 2.29 ns (2.52 CPU cycles)

d. What is the overall CPI, including memory accesses?

• Components: base CPI, inst. fetch CPI, and read CPI or write CPI; the inst. fetch time is added to the data read or write time (for load/store instructions).

CPI = 0.7 + 1.09 + 0.2 × 2.52 + 0.05 × 2.52 = 2.42 CPI.
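Part d weights each kind of memory stall by how often it occurs per instruction: every instruction fetches, 20% also read data, and 5% also write data. A minimal C sketch of that weighting; the function name `overall_cpi` is hypothetical, and the stall cycles passed in are assumed to be the per-access figures from parts a to c.

```c
#include <assert.h>

/* CPI including memory stalls. Every instruction pays the instruction-fetch
   stall; loads and stores additionally pay the data read or write stall. */
static double overall_cpi(double base_cpi, double fetch_stall,
                          double load_frac, double read_stall,
                          double store_frac, double write_stall) {
    assert(load_frac >= 0.0 && store_frac >= 0.0);
    assert(load_frac + store_frac <= 1.0);
    return base_cpi + fetch_stall
         + load_frac * read_stall
         + store_frac * write_stall;
}
```

A call such as `overall_cpi(0.7, 1.09, 0.20, r, 0.05, w)`, with `r` and `w` the read and write stall cycles from parts b and c, gives the overall CPI.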

B.10

a. L1 cache miss behavior when the caches are organized in an inclusive hierarchy and the two caches have identical block size:

• Access L2 cache.

• If L2 cache hits, supply block to L1 from L2; the evicted L1 block can be stored in L2 if it is not already there.

• If L2 cache misses, supply block to both L1 and L2 from memory; the evicted L1 block can be stored in L2 if it is not already there.

• In both cases (hit, miss), if placing a block in L2 (the block fetched from memory on a miss, or an evicted L1 block) causes a block to be evicted from L2, then L1 has to be checked, and if the L2 block that was evicted is found in L1, it has to be invalidated.

b. L1 cache miss behavior when the caches are organized in an exclusive hierarchy and the two caches have identical block size:

• Access L2 cache.

• If L2 cache hits, supply block to L1 from L2, invalidate the block in L2, and write the evicted block from L1 to L2 (it cannot already be there).

• If L2 cache misses, supply block to L1 from memory, and write the evicted block from L1 to L2 (it cannot already be there).
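The two miss-handling procedures in parts a and b can be sketched as a toy two-level model in C. This is a minimal sketch under stated assumptions, not a full cache simulator: both levels are direct-mapped, indexed by block address with the same block size, L2 has more sets than L1, and dirty state is ignored. All names (`Hierarchy`, `access_inclusive`, `access_exclusive`, and the invariant checks) are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

/* Toy hierarchy (assumed sizes): direct-mapped L1 and L2, same block size,
   indexed by block address. Dirty state is not modeled. */
#define L1_SETS 4
#define L2_SETS 16
#define EMPTY (-1L)

typedef struct {
    long l1[L1_SETS];   /* block address held in each L1 set, or EMPTY */
    long l2[L2_SETS];   /* block address held in each L2 set, or EMPTY */
} Hierarchy;

static void hier_init(Hierarchy *h) {
    for (int i = 0; i < L1_SETS; i++) h->l1[i] = EMPTY;
    for (int i = 0; i < L2_SETS; i++) h->l2[i] = EMPTY;
}

static bool in_l1(const Hierarchy *h, long b) { return h->l1[b % L1_SETS] == b; }
static bool in_l2(const Hierarchy *h, long b) { return h->l2[b % L2_SETS] == b; }

/* Inclusive: put block b into L2; if that evicts a block L1 still holds,
   invalidate it in L1 so L1 stays a subset of L2. */
static void l2_put_inclusive(Hierarchy *h, long b) {
    long *slot = &h->l2[b % L2_SETS];
    long victim = *slot;
    *slot = b;
    if (victim != EMPTY && victim != b && in_l1(h, victim))
        h->l1[victim % L1_SETS] = EMPTY;
}

/* Part a: handle an access to block b under an inclusive hierarchy. */
static void access_inclusive(Hierarchy *h, long b) {
    assert(b >= 0);
    if (in_l1(h, b)) return;                     /* L1 hit */
    if (!in_l2(h, b)) l2_put_inclusive(h, b);    /* L2 miss: memory -> L2 */
    long l1_victim = h->l1[b % L1_SETS];         /* read after any invalidation */
    h->l1[b % L1_SETS] = b;                      /* L2 -> L1 */
    if (l1_victim != EMPTY && !in_l2(h, l1_victim))
        l2_put_inclusive(h, l1_victim);          /* store victim in L2 if absent */
}

/* Part b: handle an access to block b under an exclusive hierarchy. */
static void access_exclusive(Hierarchy *h, long b) {
    assert(b >= 0);
    if (in_l1(h, b)) return;                     /* L1 hit */
    long l1_victim = h->l1[b % L1_SETS];
    if (in_l2(h, b)) h->l2[b % L2_SETS] = EMPTY; /* L2 hit: move block up */
    h->l1[b % L1_SETS] = b;                      /* on an L2 miss: memory -> L1 only */
    if (l1_victim != EMPTY)                      /* victim -> L2; the displaced   */
        h->l2[l1_victim % L2_SETS] = l1_victim;  /* L2 block goes to memory       */
}

/* Invariants each policy is meant to preserve. */
static bool inclusion_holds(const Hierarchy *h) {
    for (int i = 0; i < L1_SETS; i++)
        if (h->l1[i] != EMPTY && !in_l2(h, h->l1[i])) return false;
    return true;
}

static bool exclusion_holds(const Hierarchy *h) {
    for (int i = 0; i < L1_SETS; i++)
        if (h->l1[i] != EMPTY && in_l2(h, h->l1[i])) return false;
    return true;
}
```

Replaying any trace of block addresses through each policy and checking `inclusion_holds` or `exclusion_holds` after every access is a quick way to confirm that the back-invalidation in part a and the move-up-and-swap in part b keep their respective invariants.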

c. When an L1 evicted block is dirty, it must be written back to L2 even if an earlier copy was there (inclusive L2). No change for the exclusive case.

2.1

a. Each element is 8B. Since a 64B cache line has 8 elements, and each column access will result in fetching a new line for the non-ideal matrix, we need a minimum of 8x8 (64 elements) for each matrix. Hence, the minimum cache size is 128 × 8B = 1KB.

b. The blocked version only has to fetch each input and output element once. The unblocked version will have one cache miss for every 64B/8B = 8 row elements. Each column requires 64B × 256 of storage, or 16KB. Thus, column elements will be replaced in the cache before they can be used again. Hence the unblocked version will have 9 misses (1 row and 8 columns) for every 2 in the blocked version.
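The sizing arithmetic in parts a and b can be checked with a few lines of C. This is a sketch assuming the parameters as used in the text (8-byte elements, 64-byte lines, 256 × 256 matrices); the constant and function names are hypothetical.

```c
#include <assert.h>

/* Exercise 2.1 parameters as used in the text (assumed). */
enum { ELEM_BYTES = 8, LINE_BYTES = 64, MATRIX_DIM = 256 };

/* Elements sharing one cache line: 64B / 8B = 8. */
static int elems_per_line(void) {
    assert(LINE_BYTES % ELEM_BYTES == 0);
    return LINE_BYTES / ELEM_BYTES;
}

/* Blocked transpose: one B x B tile of the input plus one of the output,
   with B = elems_per_line() so every fetched line is fully used. */
static int min_cache_bytes(void) {
    int b = elems_per_line();
    return 2 * b * b * ELEM_BYTES;
}

/* Unblocked: a column walk touches a different line in every row, so one
   column keeps MATRIX_DIM lines live. */
static int column_footprint_bytes(void) {
    return LINE_BYTES * MATRIX_DIM;
}

/* Misses per elems_per_line() copies: the unblocked version pays one row
   line plus one line per column element; the blocked version pays one
   input line and one output line. */
static int unblocked_misses_per_line(void) { return 1 + elems_per_line(); }
static int blocked_misses_per_line(void)   { return 2; }
```

The values come out as 8 elements per line, a 1024-byte (1KB) minimum cache, a 16384-byte (16KB) column footprint, and 9 misses versus 2.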

c. for (i = 0; i < 256; i = i + B) {        /* B: tile size; assumes 256 % B == 0 */
     for (j = 0; j < 256; j = j + B) {
       for (m = 0; m < B; m++) {            /* copy one B x B tile */
         for (n = 0; n < B; n++) {
           output[j + n][i + m] = input[i + m][j + n];
         }
       }
     }
   }

d. 2-way set associative. In a direct-mapped cache the blocks could be allocated so that they map to overlapping regions in the cache.

2.2

Since the unblocked version is too large to fit in the cache, processing eight 8B elements requires fetching one 64B row cache block and 8 column cache blocks. Since each iteration requires 2 cycles without misses, prefetches can be initiated every 2 cycles, and the number of prefetches per iteration is more than one, the memory system will be completely saturated with prefetches. Because the latency of a prefetch is 16 cycles, and one will start every 2 cycles, 16/2 = 8 will be outstanding at a time.
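The outstanding-prefetch count is an application of Little's law (requests in flight = issue rate × latency). A minimal C sketch with hypothetical function names, assuming one iteration copies one element, a prefetch can issue every 2 cycles, and each prefetch takes 16 cycles, as stated above.

```c
#include <assert.h>

/* Little's law: requests in flight = (1 / issue interval) * latency. */
static double outstanding_requests(double latency_cycles,
                                   double issue_interval_cycles) {
    assert(issue_interval_cycles > 0.0);
    return latency_cycles / issue_interval_cycles;
}

/* Prefetches demanded per iteration in the unblocked transpose: each group
   of elems_per_group elements needs one row block plus one column block
   per element. */
static double prefetches_per_iteration(int elems_per_group) {
    assert(elems_per_group > 0);
    return (1.0 + elems_per_group) / elems_per_group;
}
```

With 8 elements per group the demand is 9/8 = 1.125 prefetches per iteration, more than the one-per-iteration issue rate, so the memory system stays saturated with 16/2 = 8 prefetches in flight.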

2.8

a. The access time of the direct-mapped cache is 0.86 ns, while the 2-way and 4-way are 1.12 ns and 1.37 ns respectively. This makes the relative access times 1.12/0.86 = 1.30, or 30% more for the 2-way, and 1.37/0.86 = 1.59, or 59% more for the 4-way.

b. The access time of the 16KB cache is 1.27 ns, while the 32KB and 64KB are 1.35 ns and 1.37 ns respectively. This makes the relative access times 1.35/1.27 = 1.06, or 6% larger for the 32KB, and 1.37/1.27 = 1.08, or 8% larger for the 64KB.

c. Avg. access time = hit% × hit time + miss% × miss penalty, where miss% = misses per instruction / references per instruction = 2.2% (DM), 1.2% (2-way), 0.33% (4-way), 0.09% (8-way). Access times and the 10 ns miss penalty are rounded up to whole cycles.

Direct-mapped access time = 0.86 ns / 0.5 ns cycle time = 2 cycles

2-way set associative = 1.12 ns / 0.5 ns cycle time = 3 cycles

4-way set associative = 1.37 ns / 0.83 ns cycle time = 2 cycles

8-way set associative = 2.03 ns / 0.79 ns cycle time = 3 cycles

Miss penalty = 10/0.5 = 20 cycles for DM and 2-way; 10/0.83 = 13 cycles for 4-way; 10/0.79 = 13 cycles for 8-way.

Direct mapped: (1 - 0.022) × 2 + 0.022 × 20 = 2.396 cycles => 2.396 × 0.5 = 1.2 ns

2-way: (1 - 0.012) × 3 + 0.012 × 20 = 3.2 cycles => 3.2 × 0.5 = 1.6 ns

4-way: (1 - 0.0033) × 2 + 0.0033 × 13 = 2.036 cycles => 2.036 × 0.83 = 1.69 ns

8-way: (1 - 0.0009) × 3 + 0.0009 × 13 = 3.01 cycles => 3.01 × 0.79 = 2.38 ns

The direct-mapped cache is the best.
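The part c comparison can be reproduced in C. This is a sketch assuming, as the solution does, that the access time and the 10 ns miss penalty are each rounded up to whole cycles and that a miss costs only the miss penalty; the struct and function names are hypothetical, and the access times, cycle times and miss rates are the ones listed above.

```c
#include <assert.h>

typedef struct {
    double access_ns;   /* cache access time */
    double cycle_ns;    /* processor cycle time */
    double miss_rate;   /* misses per reference */
} CacheConfig;

/* Round a positive time up to whole cycles (avoids depending on libm's ceil). */
static int cycles_up(double ns, double cycle_ns) {
    assert(ns > 0.0 && cycle_ns > 0.0);
    double r = ns / cycle_ns;
    int whole = (int)r;
    return (r > (double)whole) ? whole + 1 : whole;
}

/* AMAT in ns = [(1 - m) * hit_cycles + m * miss_cycles] * cycle time. */
static double config_amat_ns(CacheConfig c, double miss_penalty_ns) {
    int hit_cycles  = cycles_up(c.access_ns, c.cycle_ns);
    int miss_cycles = cycles_up(miss_penalty_ns, c.cycle_ns);
    double cycles = (1.0 - c.miss_rate) * hit_cycles
                  + c.miss_rate * miss_cycles;
    return cycles * c.cycle_ns;
}
```

Evaluating the four configurations with a 10 ns miss penalty gives roughly 1.20, 1.60, 1.69 and 2.38 ns, so the direct-mapped configuration comes out lowest.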

2.9

a. The average memory access time of the current (4-way 64KB) cache is 1.69 ns. The 64KB direct-mapped cache access time = 0.86 ns / 0.5 ns cycle time = 2 cycles. The way-predicted cache has cycle time and access time similar to the direct-mapped cache and miss rate similar to the 4-way cache. The AMAT of the way-predicted cache has three components: miss, hit with way prediction correct, and hit with way prediction mispredicted. With 80% prediction accuracy, a 2-cycle correctly predicted hit and a 3-cycle mispredicted hit:

0.0033 × 20 + (0.80 × 2 + (1 - 0.80) × 3) × (1 - 0.0033) = 2.26 cycles = 1.13 ns

b. The cycle time of the 64KB 4-way cache is 0.83 ns, while the 64KB direct-mapped cache can be accessed in 0.5 ns. This provides 0.83/0.5 = 1.66, or 66% faster cache access.

c. With a 1-cycle way-misprediction penalty, the AMAT is 1.13 ns (as per part a), but with a 15-cycle misprediction penalty (a mispredicted hit now takes 15 cycles), the AMAT becomes 0.0033 × 20 + (0.80 × 2 + (1 - 0.80) × 15) × (1 - 0.0033) = 4.65 cycles, or 2.3 ns.

d. The serial access is 2.4 ns / 1.59 ns = 1.509, or 51% slower.
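The three-component AMAT used in parts a and c can be written as one C function. A minimal sketch with a hypothetical name; the 80% accuracy, 2-cycle predicted hit, 20-cycle miss and 0.33% miss rate are the values in the formulas above, and `mispredict_hit_cycles` is the total time of a hit whose way was mispredicted (3 cycles in part a, 15 in part c).

```c
#include <assert.h>

/* Way-predicted cache AMAT in cycles: misses pay miss_cycles; hits pay
   hit_cycles when the way prediction is right and mispredict_hit_cycles
   when it is wrong. */
static double way_predicted_amat_cycles(double miss_rate, double miss_cycles,
                                        double accuracy, double hit_cycles,
                                        double mispredict_hit_cycles) {
    assert(miss_rate >= 0.0 && miss_rate <= 1.0);
    assert(accuracy >= 0.0 && accuracy <= 1.0);
    double hit_time = accuracy * hit_cycles
                    + (1.0 - accuracy) * mispredict_hit_cycles;
    return miss_rate * miss_cycles + (1.0 - miss_rate) * hit_time;
}
```

Multiplying the result by the 0.5 ns cycle time converts it to ns: about 2.26 cycles (1.13 ns) for part a and about 4.65 cycles (2.3 ns) for part c.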


3.1

The baseline performance (in cycles, per loop iteration) of the code sequence in Figure 3.48, if no new instruction's execution could be initiated until the previous instruction's execution had completed, is 40. See Figure S.2. Each instruction requires one clock cycle of execution (a clock cycle in which that instruction, and only that instruction, is occupying the execution units; since every instruction must execute, the loop will take at least that many clock cycles). To that base number, we add the extra latency cycles. Don't forget the branch shadow cycle.

3.2

How many cycles would the loop body in the code sequence in Figure 3.48 require if the pipeline detected true data dependencies and only stalled on those, rather than blindly stalling everything just because one functional unit is busy? The answer is 25, as shown in Figure S.3. Remember, the point of the extra latency cycles is to allow an instruction to complete whatever actions it needs in order to produce its correct output. Until that output is ready, no dependent instructions can be executed. So the first LD must stall the next instruction for three clock cycles. The MULTD produces a result for its successor, and therefore must stall 4 more clocks, and so on.

3.17

Anda mungkin juga menyukai

- Memory Management Algorithms ExplainedDokumen5 halamanMemory Management Algorithms ExplainedMonicaP18Belum ada peringkat

- Ca Sol PDFDokumen8 halamanCa Sol PDFsukhi_digraBelum ada peringkat

- Memory Hir and Io SystemDokumen26 halamanMemory Hir and Io SystemArjun M BetageriBelum ada peringkat

- hw4 SolDokumen4 halamanhw4 SolMarah IrshedatBelum ada peringkat

- Disk ManagementDokumen27 halamanDisk ManagementramBelum ada peringkat

- Memory Management: Concept of Memory HierarchyDokumen10 halamanMemory Management: Concept of Memory HierarchyEdo LeeBelum ada peringkat

- CS704 Final Term 2015 Exam Questions and AnswersDokumen20 halamanCS704 Final Term 2015 Exam Questions and AnswersMuhammad MunirBelum ada peringkat

- Cache TLBDokumen15 halamanCache TLBAarthy Sundaram100% (1)

- Computer Architecture - Quantitative ApproachDokumen7 halamanComputer Architecture - Quantitative Approach葉浩峰Belum ada peringkat

- Multi2sim-M2s Simulation FrameworkDokumen36 halamanMulti2sim-M2s Simulation FrameworkNeha JainBelum ada peringkat

- HW4Dokumen3 halamanHW40123456789abcdefgBelum ada peringkat

- MidtermsolutionsDokumen3 halamanMidtermsolutionsRajini GuttiBelum ada peringkat

- Computer Organization PDFDokumen2 halamanComputer Organization PDFCREATIVE QUOTESBelum ada peringkat

- ExcerciseDokumen8 halamanExcerciseMinh VươngBelum ada peringkat

- Week 6: Assignment SolutionsDokumen4 halamanWeek 6: Assignment SolutionsIshan JawaBelum ada peringkat

- Question Paper Object Oriented Programming and Java (MC221) : January 2008Dokumen12 halamanQuestion Paper Object Oriented Programming and Java (MC221) : January 2008amitukumarBelum ada peringkat

- 207 Assignment 6Dokumen7 halaman207 Assignment 6CKB The ArtistBelum ada peringkat

- WEBINAR2012 03 Optimizing MySQL ConfigurationDokumen43 halamanWEBINAR2012 03 Optimizing MySQL ConfigurationLinder AyalaBelum ada peringkat

- Exam OS - Ready! PDFDokumen8 halamanExam OS - Ready! PDFCaris TchobsiBelum ada peringkat

- Exam 1 Review: Cache Performance Calculations and Address MappingDokumen6 halamanExam 1 Review: Cache Performance Calculations and Address MappingErz SeBelum ada peringkat

- Operating System Homework-3: Submitted byDokumen12 halamanOperating System Homework-3: Submitted bySuraj SinghBelum ada peringkat

- QnsDokumen3 halamanQnsAnonymous gZjDZkBelum ada peringkat

- An Introduction To Numpy and Scipy by Scott ShellDokumen24 halamanAn Introduction To Numpy and Scipy by Scott ShellmarkymattBelum ada peringkat

- CS211 Exam PDFDokumen8 halamanCS211 Exam PDFUnknown UserBelum ada peringkat

- 8.11 Given Six Memory Partitions of 300KB, 600KB, 350KB, 200KBDokumen20 halaman8.11 Given Six Memory Partitions of 300KB, 600KB, 350KB, 200KBAnshita VarshneyBelum ada peringkat

- Computer Organization Hamacher Instructor Manual Solution - Chapter 5 PDFDokumen13 halamanComputer Organization Hamacher Instructor Manual Solution - Chapter 5 PDFtheachidBelum ada peringkat

- c4029 Fall 1 12 SolDokumen8 halamanc4029 Fall 1 12 SolskBelum ada peringkat

- 4 2 2Dokumen22 halaman4 2 2joBelum ada peringkat

- Reverse EngineeringDokumen85 halamanReverse Engineeringhughpearse88% (8)

- MathsDokumen3 halamanMathssukanta majumderBelum ada peringkat

- Study Set 12 Memory Components and DRAMDokumen8 halamanStudy Set 12 Memory Components and DRAMjnubkuybBelum ada peringkat

- Dynamic Memory AllocationDokumen14 halamanDynamic Memory AllocationHemesh Jain SuranaBelum ada peringkat

- Cse410 Sp09 Final SolDokumen10 halamanCse410 Sp09 Final Soladchy7Belum ada peringkat

- Lecture 25Dokumen25 halamanLecture 25Vikas ChoudharyBelum ada peringkat

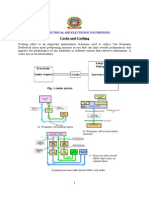

- ELECTRICAL AND ELECTRONIC ENGINEERING Cache CachingDokumen15 halamanELECTRICAL AND ELECTRONIC ENGINEERING Cache CachingEnock OmariBelum ada peringkat

- Operating System Exercises - Chapter 9 SolDokumen6 halamanOperating System Exercises - Chapter 9 SolevilanubhavBelum ada peringkat

- Answers To Final ExamDokumen4 halamanAnswers To Final ExamSyed Mohammad RizwanBelum ada peringkat

- Final 222 2009 SolDokumen6 halamanFinal 222 2009 SolNapsterBelum ada peringkat

- Operating System | Memory Management Techniques and Page Replacement AlgorithmsDokumen50 halamanOperating System | Memory Management Techniques and Page Replacement Algorithmsbasualok@rediffmail.comBelum ada peringkat

- Os + DsDokumen12 halamanOs + DsAniket AggarwalBelum ada peringkat

- Star 100 and TI-ASCDokumen5 halamanStar 100 and TI-ASCRajitha PrashanBelum ada peringkat

- Resolving Common Oracle Wait Events Using The Wait InterfaceDokumen11 halamanResolving Common Oracle Wait Events Using The Wait Interfacealok_mishra4533Belum ada peringkat

- Cache LabDokumen10 halamanCache Labarteepu37022Belum ada peringkat

- Final Exam - Fall 2008: COE 308 - Computer ArchitectureDokumen8 halamanFinal Exam - Fall 2008: COE 308 - Computer ArchitectureKhalid Kn3Belum ada peringkat

- System - No - Role Dump Related ParameterDokumen4 halamanSystem - No - Role Dump Related ParameterpapusahaBelum ada peringkat

- BaiTap Chuong4 PDFDokumen8 halamanBaiTap Chuong4 PDFtrongbang108Belum ada peringkat

- COE301 Final Solution 162Dokumen10 halamanCOE301 Final Solution 162Karim IbrahimBelum ada peringkat

- cs325 Fall10 FinalexamDokumen9 halamancs325 Fall10 FinalexamMohamed NoahBelum ada peringkat

- Algorithm: Enhanced Second Chance Best Combination: Dirty Bit Is 0 and R Bit Is 0Dokumen8 halamanAlgorithm: Enhanced Second Chance Best Combination: Dirty Bit Is 0 and R Bit Is 0SANA OMARBelum ada peringkat

- Auto Memory DefinedDokumen10 halamanAuto Memory Definedtssr2001Belum ada peringkat

- Exam OS 2 - Multiple Choice and QuestionsDokumen5 halamanExam OS 2 - Multiple Choice and QuestionsCaris Tchobsi100% (1)

- EnggRoom - Placement - HCL Campus PlacementDokumen5 halamanEnggRoom - Placement - HCL Campus PlacementChaudhari JainishBelum ada peringkat

- CidrDokumen9 halamanCidrit_expertBelum ada peringkat

- Operating - System - KCS 401 - Assignment - 2Dokumen5 halamanOperating - System - KCS 401 - Assignment - 2jdhfdsjfoBelum ada peringkat

- And M Is Address of A Memory LocationDokumen3 halamanAnd M Is Address of A Memory LocationkarimahnajiyahBelum ada peringkat

- SAP Memory ManagementDokumen16 halamanSAP Memory ManagementSurya NandaBelum ada peringkat

- Os Lab Pratices FinializedDokumen2 halamanOs Lab Pratices Finializedswathi bommisettyBelum ada peringkat

- Numerical Methods for Simulation and Optimization of Piecewise Deterministic Markov Processes: Application to ReliabilityDari EverandNumerical Methods for Simulation and Optimization of Piecewise Deterministic Markov Processes: Application to ReliabilityBelum ada peringkat

- Mathematical and Computational Modeling: With Applications in Natural and Social Sciences, Engineering, and the ArtsDari EverandMathematical and Computational Modeling: With Applications in Natural and Social Sciences, Engineering, and the ArtsRoderick MelnikBelum ada peringkat

- Marketing Plan - Evofix (Cefixime) : Saad Khan Jahangeer Khan Syeda Laila Ali JafferyDokumen46 halamanMarketing Plan - Evofix (Cefixime) : Saad Khan Jahangeer Khan Syeda Laila Ali JafferyJahanzaib Awan100% (3)

- Building Strong Pharma BrandsDokumen418 halamanBuilding Strong Pharma BrandsJahanzaib Awan100% (3)

- Introduction To MIPS ArchitectureDokumen10 halamanIntroduction To MIPS ArchitectureJahanzaib AwanBelum ada peringkat

- Computer Architecture - Quantitative ApproachDokumen7 halamanComputer Architecture - Quantitative Approach葉浩峰Belum ada peringkat

- Final Report of Islamic FinanceDokumen13 halamanFinal Report of Islamic FinanceJahanzaib AwanBelum ada peringkat

- Exercise CH - 1 CAO Hennessy PDFDokumen4 halamanExercise CH - 1 CAO Hennessy PDFJahanzaib Awan0% (1)

- Internship Report Pakistan Steel MillDokumen57 halamanInternship Report Pakistan Steel MillJahanzaib Awan67% (3)

- Latifa's Thesis PDFDokumen182 halamanLatifa's Thesis PDFنذير امحمديBelum ada peringkat

- Nist Technical Note 1297 SDokumen25 halamanNist Technical Note 1297 SRonny Andalas100% (1)

- Template Builder ManualDokumen10 halamanTemplate Builder ManualNacer AssamBelum ada peringkat

- Cambridge International Examinations: Additional Mathematics 4037/12 May/June 2017Dokumen11 halamanCambridge International Examinations: Additional Mathematics 4037/12 May/June 2017Ms jennyBelum ada peringkat

- MV Capacitor CalculationDokumen1 halamanMV Capacitor CalculationPramod B.WankhadeBelum ada peringkat

- Mari Andrew: Am I There Yet?: The Loop-De-Loop, Zigzagging Journey To AdulthoodDokumen4 halamanMari Andrew: Am I There Yet?: The Loop-De-Loop, Zigzagging Journey To Adulthoodjamie carpioBelum ada peringkat

- Critical Path Method: A Guide to CPM Project SchedulingDokumen6 halamanCritical Path Method: A Guide to CPM Project SchedulingFaizan AhmadBelum ada peringkat

- 9 Little Translation Mistakes With Big ConsequencesDokumen2 halaman9 Little Translation Mistakes With Big ConsequencesJuliany Chaves AlvearBelum ada peringkat

- This Manual Includes: Repair Procedures Fault Codes Electrical and Hydraulic SchematicsDokumen135 halamanThis Manual Includes: Repair Procedures Fault Codes Electrical and Hydraulic Schematicsrvalverde50gmailcomBelum ada peringkat

- Oracle® Fusion Middleware: Installation Guide For Oracle Jdeveloper 11G Release 1 (11.1.1)Dokumen24 halamanOracle® Fusion Middleware: Installation Guide For Oracle Jdeveloper 11G Release 1 (11.1.1)GerardoBelum ada peringkat

- Resilience Advantage Guidebook HMLLC2014Dokumen32 halamanResilience Advantage Guidebook HMLLC2014Alfred Schweizer100% (3)

- Cat DP150 Forklift Service Manual 2 PDFDokumen291 halamanCat DP150 Forklift Service Manual 2 PDFdiegoBelum ada peringkat

- Musical Instruments Speech The Chinese Philosopher Confucius Said Long Ago ThatDokumen2 halamanMusical Instruments Speech The Chinese Philosopher Confucius Said Long Ago ThatKhánh Linh NguyễnBelum ada peringkat

- C5-2015-03-24T22 29 11Dokumen2 halamanC5-2015-03-24T22 29 11BekBelum ada peringkat

- Rohtak:: ICT Hub For E-Governance in HaryanaDokumen2 halamanRohtak:: ICT Hub For E-Governance in HaryanaAr Aayush GoelBelum ada peringkat

- Nordstrom Physical Security ManualDokumen13 halamanNordstrom Physical Security ManualBopanna BolliandaBelum ada peringkat

- Thomas Calculus 13th Edition Thomas Test BankDokumen33 halamanThomas Calculus 13th Edition Thomas Test Banklovellgwynavo100% (24)

- New Microsoft Word DocumentDokumen12 halamanNew Microsoft Word DocumentMuhammad BilalBelum ada peringkat

- Pipelining VerilogDokumen26 halamanPipelining VerilogThineshBelum ada peringkat

- HCTS Fabricated Products Group Empowers High Tech MaterialsDokumen12 halamanHCTS Fabricated Products Group Empowers High Tech MaterialsYoami PerdomoBelum ada peringkat

- B1 Unit 6 PDFDokumen1 halamanB1 Unit 6 PDFMt Mt100% (2)

- Ebook Childhood and Adolescence Voyages in Development 6Th Edition Rathus Test Bank Full Chapter PDFDokumen64 halamanEbook Childhood and Adolescence Voyages in Development 6Th Edition Rathus Test Bank Full Chapter PDFolwennathan731y100% (8)

- Creep Behavior of GPDokumen310 halamanCreep Behavior of GPYoukhanna ZayiaBelum ada peringkat

- Datasheet - LNB PLL Njs8486!87!88Dokumen10 halamanDatasheet - LNB PLL Njs8486!87!88Aziz SurantoBelum ada peringkat

- Electronic Devices and Circuit TheoryDokumen32 halamanElectronic Devices and Circuit TheoryIñaki Zuriel ConstantinoBelum ada peringkat

- 1 s2.0 S1366554522001557 MainDokumen23 halaman1 s2.0 S1366554522001557 MainMahin1977Belum ada peringkat

- Corning® LEAF® Optical Fiber: Product InformationDokumen0 halamanCorning® LEAF® Optical Fiber: Product Informationhcdung18Belum ada peringkat

- Joe Armstrong (Programmer)Dokumen3 halamanJoe Armstrong (Programmer)Robert BonisoloBelum ada peringkat

- 01-02 Common Stack OperationsDokumen6 halaman01-02 Common Stack OperationsYa KrevedkoBelum ada peringkat

- Barangay Clearance2014Dokumen68 halamanBarangay Clearance2014Barangay PangilBelum ada peringkat