ZFS - Most Frequently Used Commands

Uploaded by shekhar785424


ZFS - most frequently used commands.

Below are the commands that we use on a daily basis for ZFS filesystem administration.

# Some reminders on command syntax

# a pool on a single slice:
root@server:# zpool create oradb-1 c0d0s0

# or, alternatively, a pool on a two-way mirror of two slices:
root@server:# zpool create oradb-1 mirror c0d0s3 c1d0s0

root@server:# zfs create oradb-1/oracle-10g

root@server:# zfs set mountpoint=/u01/oracle-10g oradb-1/oracle-10g

root@server:# zfs create oradb-1/home

root@server:# zfs set mountpoint=/export/home oradb-1/home

root@server:# zfs create oradb-1/home/oracle

root@server:# zfs set compression=on oradb-1/home

root@server:# zfs set quota=2g oradb-1/home/oracle

root@server:# zfs set reservation=1g oradb-1/home/oracle

root@server:# zfs set sharenfs=rw oradb-1/home

# to set the record size (the maximum block size for files in the filesystem) to 16k; only files created afterwards are affected

root@server:# zfs set recordsize=16k oradb-1/home
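The commands above can also be collected into one script. The sketch below is a hypothetical helper, not from the original post: it assumes a ZFS release whose `zfs create` accepts `-o property=value` (so each property is set at creation time instead of by a later `zfs set`), and it only prints the commands unless `DRY_RUN=0` is set, so nothing is created by accident.

```shell
#!/bin/sh
# Hypothetical helper: build the oradb-1 layout shown above in one pass.
# By default (DRY_RUN unset or 1) the commands are only printed;
# run with DRY_RUN=0, as root, to execute them.

run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

create_oradb_layout() {
    pool=${1:-oradb-1}     # pool name used in the examples above
    slice=${2:-c0d0s0}     # slice used in the examples above

    run zpool create "$pool" "$slice" || return 1
    # -o sets a property while the dataset is being created.
    run zfs create -o mountpoint=/u01/oracle-10g "$pool/oracle-10g" || return 1
    run zfs create -o mountpoint=/export/home -o compression=on \
        -o sharenfs=rw -o recordsize=16k "$pool/home" || return 1
    # The child inherits compression, sharenfs and recordsize from its parent;
    # its mountpoint defaults to /export/home/oracle.
    run zfs create "$pool/home/oracle"
}
```

For example, `DRY_RUN=0 create_oradb_layout oradb-1 c0d0s0` would run the commands for real; quotas and reservations can be passed the same way with `-o quota=...` and `-o reservation=...`.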

zpool list, zpool status and zfs list are also useful commands for filesystem monitoring.
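For routine monitoring, `zpool status -x` prints only pools that have problems (it reports "all pools are healthy" otherwise), and `zpool list -H -o name,health` prints one tab-separated line per pool with no header, which is easy to script. The filter below is a hypothetical sketch that reads that output and reports any pool whose health is not ONLINE.

```shell
#!/bin/sh
# Hypothetical filter: reads "name<TAB>health" lines, e.g. from
#     zpool list -H -o name,health
# prints every pool that is not ONLINE, and exits 1 if it found any.
check_pool_health() {
    awk -F '\t' '
        $2 != "ONLINE" { print "pool " $1 " is " $2; bad = 1 }
        END { exit bad }
    '
}

# Example (e.g. from cron):
#     zpool list -H -o name,health | check_pool_health || echo "check ZFS pools"
```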

I have lots of things to add to this; as and when I get hands-on experience, I will modify this post or add to my blog.

Thanks!

Posted by Nilesh Joshi at 7/14/2009 10:09:00 AM

=========

http://docs.oracle.com/cd/E19963-01/html/821-1448/gaynd.html

=========

Tutorials: Solaris ZFS Quick Tutorial / Examples

Posted by: mattzone on Dec 12, 2008 - 08:24 PM


First, create a pool using 'zpool'. Then use 'zfs' to make the filesystems.

Create a pool called pool1. The -m option is optional. If given, it sets the mount point of the pool's top-level filesystem, below which the ZFS filesystems created in the pool are mounted. The mount point should be empty or nonexistent. If -m is omitted, the mount point is "/pool1" (that is, "/" followed by the pool name).

# zpool create -m /export/data01 pool1 mirror c2t0d0 c4t0d0
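Because the mount point given with -m should be empty or nonexistent, a small pre-flight check can refuse to continue otherwise. This is a hypothetical sketch; `mountpoint_ok` is an illustrative name, not a ZFS command.

```shell
#!/bin/sh
# Hypothetical pre-flight check for 'zpool create -m DIR ...':
# succeeds if DIR does not exist, or is an empty directory.
mountpoint_ok() {
    dir=$1
    [ -e "$dir" ] || return 0       # nonexistent: acceptable
    [ -d "$dir" ] || return 1       # exists but is not a directory
    # ls -A lists entries other than . and ..; no output means empty.
    [ -z "$(ls -A "$dir")" ]
}

# Example:
#     mountpoint_ok /export/data01 &&
#         zpool create -m /export/data01 pool1 mirror c2t0d0 c4t0d0
```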

# zpool status
  pool: pool1
 state: ONLINE
 scrub: none requested
config:

        NAME        STATE     READ WRITE CKSUM
        pool1       ONLINE       0     0     0
          mirror    ONLINE       0     0     0
            c2t0d0  ONLINE       0     0     0
            c4t0d0  ONLINE       0     0     0

To list the pools:

# zpool list
NAME     SIZE   USED   AVAIL   CAP   HEALTH   ALTROOT
pool1    136G  18.2G    118G   13%   ONLINE   -

To create a zfs filesystem:

# zfs create pool1/fs.001

To limit the filesystem's size, set a quota:

# zfs set quota=24g pool1/fs.001

The "zfs share -a" command shares every ZFS filesystem whose "sharenfs" property is turned on. It only has to be issued once, and the sharing persists across reboots. Alternatively, one can issue individual "zfs share" commands for specific filesystems:

# zfs share -a

To make a filesystem sharable:

# zfs set sharenfs=on pool1/fs.001
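To see which filesystems currently have the sharenfs property turned on, `zfs get -r -H -o name,value sharenfs pool1` prints one tab-separated name/value line per dataset. The filter below is a hypothetical sketch that keeps only the datasets whose value is not `off`, since sharenfs can be `on` or a string of share options such as `rw`.

```shell
#!/bin/sh
# Hypothetical filter: reads "name<TAB>value" lines, e.g. from
#     zfs get -r -H -o name,value sharenfs pool1
# and prints the datasets whose sharenfs property is not "off".
list_nfs_shared() {
    awk -F '\t' '$2 != "off" { print $1 }'
}
```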

To list existing zfs filesystems:

# zfs list
NAME           USED   AVAIL   REFER   MOUNTPOINT
pool1         18.2G    116G   26.5K   /export/data01
pool1/fs.001  18.2G   5.85G   18.2G   /export/data01/fs.001

To list all properties of a specific filesystem:

# zfs get all pool1/fs.001
NAME          PROPERTY       VALUE                    SOURCE
pool1/fs.001  type           filesystem               -
pool1/fs.001  creation       Wed Sep 13 16:34 2006    -
pool1/fs.001  used           18.2G                    -
pool1/fs.001  available      5.85G                    -
pool1/fs.001  referenced     18.2G                    -
pool1/fs.001  compressratio  1.00x                    -
pool1/fs.001  mounted        yes                      -
pool1/fs.001  quota          24G                      local
pool1/fs.001  reservation    none                     default
pool1/fs.001  recordsize     128K                     default
pool1/fs.001  mountpoint     /export/data01/fs.001    inherited from pool1
pool1/fs.001  sharenfs       on                       local
pool1/fs.001  checksum       on                       default
pool1/fs.001  compression    off                      default
pool1/fs.001  atime          on                       default
pool1/fs.001  devices        on                       default
pool1/fs.001  exec           on                       default
pool1/fs.001  setuid         on                       default
pool1/fs.001  readonly       off                      default
pool1/fs.001  zoned          off                      default
pool1/fs.001  snapdir        hidden                   default
pool1/fs.001  aclmode        groupmask                default
pool1/fs.001  aclinherit     secure                   default

Here's an example of 'df':

# df -k -Fzfs
Filesystem       kbytes      used      avail  capacity  Mounted on
pool1         140378112        26  121339726        1%  /export/data01
pool1/fs.001   25165824  19036649    6129174       76%  /export/data01/fs.001
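The kbytes column for pool1/fs.001 is the 24g quota expressed in KiB: ZFS size suffixes are powers of 1024, so 24 × 1024 × 1024 = 25165824, and used plus avail (19036649 + 6129174 = 25165823) matches it to within rounding. The hypothetical helper below does that conversion for whole-number sizes with a k, m, g or t suffix (or plain bytes); fractional sizes such as 1.5g are not handled.

```shell
#!/bin/sh
# Hypothetical helper: convert a whole-number ZFS size (e.g. 24g, 190G,
# 512m) to KiB, the unit used by 'df -k'. Suffixes are powers of 1024.
size_to_kib() {
    n=${1%[kKmMgGtT]}                  # strip a single-letter suffix, if any
    case $1 in
        *[kK]) echo "$n" ;;
        *[mM]) echo $((n * 1024)) ;;
        *[gG]) echo $((n * 1024 * 1024)) ;;
        *[tT]) echo $((n * 1024 * 1024 * 1024)) ;;
        *)     echo $((n / 1024)) ;;   # no suffix: bytes, rounded down
    esac
}
```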

You can increase the size of a pool by adding a mirrored pair of disk drives:

# zpool add pool1 mirror c2t1d0 c4t1d0

# zpool status
  pool: pool1
 state: ONLINE
 scrub: none requested
config:

        NAME        STATE     READ WRITE CKSUM
        pool1       ONLINE       0     0     0
          mirror    ONLINE       0     0     0
            c2t0d0  ONLINE       0     0     0
            c4t0d0  ONLINE       0     0     0
          mirror    ONLINE       0     0     0
            c2t1d0  ONLINE       0     0     0
            c4t1d0  ONLINE       0     0     0

# zpool list
NAME     SIZE   USED   AVAIL   CAP   HEALTH   ALTROOT
pool1    272G  18.2G    254G    6%   ONLINE   -
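The pool doubled from 136G to 272G because ZFS stripes data across its top-level vdevs: each mirror contributes the capacity of its smallest disk, and the pool's capacity is roughly the sum over its vdevs. A single-parity RAID-Z vdev (as created with `zpool create ... raidz`) contributes roughly (n - 1) times its smallest disk. The arithmetic sketch below is hypothetical and ignores labels, metadata and other overhead, so real figures are somewhat lower.

```shell
#!/bin/sh
# Hypothetical capacity estimate for one top-level vdev.
# Usage: vdev_capacity mirror|raidz SIZE...   (all sizes in the same unit)
#   mirror: capacity of the smallest member
#   raidz:  (members - 1) * smallest member   (single parity)
# Overhead (labels, metadata) is ignored.
vdev_capacity() {
    type=$1; shift
    min=$1; n=0
    for size in "$@"; do
        if [ "$size" -lt "$min" ]; then min=$size; fi
        n=$((n + 1))
    done
    case $type in
        mirror) echo "$min" ;;
        raidz)  echo $(( (n - 1) * min )) ;;
        *)      echo "unknown vdev type: $type" >&2; return 1 ;;
    esac
}

# The pool above: two mirrored pairs of 136G disks, striped together.
#     echo $(( $(vdev_capacity mirror 136 136) + $(vdev_capacity mirror 136 136) ))
```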

Note that "zpool attach" is a completely different command: it attaches a device to an existing one to form or widen a mirror (for example, a three-way mirror), which increases redundancy but adds no extra space.
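To make the difference concrete, the hypothetical wrappers below print (or, with DRY_RUN=0, run) each command: `zpool add` creates a new top-level mirror and so adds space, while `zpool attach POOL EXISTING NEW` pairs a new device with one already in the pool and resilvers it, adding redundancy only. The device c3t0d0 is an assumed example; `zpool detach` reverses an attach.

```shell
#!/bin/sh
# Hypothetical wrappers contrasting 'zpool add' and 'zpool attach'.
# Commands are only printed unless DRY_RUN=0 is set.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# More space: a new mirrored pair becomes another top-level vdev.
grow_pool() {       # POOL DISK1 DISK2
    run zpool add "$1" mirror "$2" "$3"
}

# More redundancy, no new space: NEW_DISK mirrors EXISTING_DISK
# (a two-way mirror becomes three-way).
widen_mirror() {    # POOL EXISTING_DISK NEW_DISK
    run zpool attach "$1" "$2" "$3"
}

# Examples (c3t0d0 is an assumed spare disk):
#     grow_pool pool1 c2t1d0 c4t1d0
#     widen_mirror pool1 c2t0d0 c3t0d0
```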

Make some more filesystems:

# zfs create pool1/fs.002

# zfs set quota=30g pool1/fs.002

# zfs set sharenfs=on pool1/fs.002

# zfs create pool1/fs.003

# zfs set quota=20g pool1/fs.003

# zfs set sharenfs=on pool1/fs.003

# zfs create pool1/fs.004

# zfs set quota=190G pool1/fs.004

# zfs set sharenfs=on pool1/fs.004
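When several filesystems need the same treatment, a loop saves typing. The sketch below is hypothetical: each argument is NAME:QUOTA, every filesystem is created with its quota and with sharenfs=on in a single `zfs create -o ...` call (assuming a ZFS release that accepts `-o` on create), and the commands are only printed unless DRY_RUN=0 is set.

```shell
#!/bin/sh
# Hypothetical helper: create several NFS-shared filesystems with quotas.
# Usage: make_shared_filesystems POOL NAME:QUOTA...
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

make_shared_filesystems() {
    pool=$1; shift
    for spec in "$@"; do
        name=${spec%%:*}        # text before the first colon
        quota=${spec#*:}        # text after the first colon
        run zfs create -o quota="$quota" -o sharenfs=on "$pool/$name" || return 1
    done
}

# Example:
#     make_shared_filesystems pool1 fs.004:190G
```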

They show up in 'df':

# df -k -Fzfs -h
Filesystem     size   used  avail  capacity  Mounted on
pool1          268G    29K   250G        1%  /export/data01
pool1/fs.001    24G    18G   5.8G       76%  /export/data01/fs.001
pool1/fs.002    30G    24K    30G        1%  /export/data01/fs.002
pool1/fs.003    20G    24K    20G        1%  /export/data01/fs.003
pool1/fs.004   190G    24K   190G        1%  /export/data01/fs.004

But don't look for them in /etc/vfstab:

# cat /etc/vfstab
#device            device              mount             FS      fsck    mount    mount
#to_mount          to_fsck             point             type    pass    at_boot  options
#
fd                 -                   /dev/fd           fd      -       no       -
/proc              -                   /proc             proc    -       no       -
/dev/dsk/c0t0d0s1  -                   -                 swap    -       no       -
/dev/dsk/c0t0d0s0  /dev/rdsk/c0t0d0s0  /                 ufs     1       no       -
/dev/dsk/c0t0d0s3  /dev/rdsk/c0t0d0s3  /var              ufs     1       no       -
/devices           -                   /devices          devfs   -       no       -
ctfs               -                   /system/contract  ctfs    -       no       -
objfs              -                   /system/object    objfs   -       no       -
swap               -                   /tmp              tmpfs   -       yes      -

Note that they are all shared, because "zfs share -a" is in effect:

# share
-               /export/data01/fs.001   rw   ""
-               /export/data01/fs.002   rw   ""
-               /export/data01/fs.003   rw   ""
-               /export/data01/fs.004   rw   ""

You can list the ZFS filesystems with:

# zfs list
NAME           USED   AVAIL   REFER   MOUNTPOINT
pool1         18.2G    250G   29.5K   /export/data01
pool1/fs.001  18.2G   5.85G   18.2G   /export/data01/fs.001
pool1/fs.002  24.5K   30.0G   24.5K   /export/data01/fs.002
pool1/fs.003  24.5K   20.0G   24.5K   /export/data01/fs.003
pool1/fs.004  24.5K    190G   24.5K   /export/data01/fs.004

The disks are labeled and partitioned automatically by 'zpool':

# format
Searching for disks...done

AVAILABLE DISK SELECTIONS:
       0. c0t0d0 <DEFAULT cyl 8851 alt 2 hd 255 sec 63>
          /pci@0,0/pcie11,4080@1/sd@0,0
       1. c2t0d0
          /pci@6,0/pci13e9,1300@3/sd@0,0
       2. c2t1d0
          /pci@6,0/pci13e9,1300@3/sd@1,0
       3. c4t0d0
          /pci@6,0/pci13e9,1300@4/sd@0,0
       4. c4t1d0
          /pci@6,0/pci13e9,1300@4/sd@1,0
Specify disk (enter its number):

partition> p
Current partition table (original):
Total disk sectors available: 286733069 + 16384 (reserved sectors)

Part      Tag    Flag     First Sector        Size        Last Sector
  0        usr    wm                34     136.72GB         286733069
  1 unassigned    wm                 0            0                 0
  2 unassigned    wm                 0            0                 0
  3 unassigned    wm                 0            0                 0
  4 unassigned    wm                 0            0                 0
  5 unassigned    wm                 0            0                 0
  6 unassigned    wm                 0            0                 0
  8   reserved    wm         286733070       8.00MB         286749453

Note that Solaris 10 releases before the 10/08 update cannot boot from a ZFS filesystem. You may want to have a look at this tutorial on Mirroring system disks with Solaris Volume Manager / Disksuite.

=====

How can I set up and configure a ZFS storage pool under Solaris 10?

ANSWER

This is a brief example of setting up a ZFS storage pool and a couple of filesystems ("datasets") on Solaris 10.

You'll need the Solaris 10 Update 2 release (dated June 2006) or later, as ZFS was not in the earlier standard releases of Solaris 10.

Useful documentation reference:-

http://www.opensolaris.org/os/community/zfs/docs

Once you have the Solaris system up and running, this is how to proceed:-

1. You'll need a spare disk partition or two for the storage pool. You can use files (see mkfile) if you're stuck for spare hard partitions, but only for experimentation. The partitions will be OVERWRITTEN by this procedure.

2. Log in as root and create a storage pool using the spare partitions:-

# zpool create lake c0t2d0s0 c0t2d0s1

(The # indicates the root shell prompt.)

If the above partitions contain existing file systems, you may need to use the -f (force) option:-

# zpool create -f lake c0t2d0s0 c0t2d0s1

The pool (called lake) has been created.

3. Use zpool list to view your pool stats:-

# zpool list
NAME    SIZE    USED   AVAIL   CAP   HEALTH   ALTROOT
lake   38.2G   32.5K   38.2G    0%   ONLINE   -

A df listing will also show it:-

# df -h /lake
Filesystem   size   used   avail   capacity   Mounted on
lake          38G     8K     38G         1%   /lake

4. Now create a file system within the pool (Sun call these datasets):-

# zfs create lake/fa

# zfs list
NAME       USED   AVAIL   REFER   MOUNTPOINT
lake      43.0K   38.0G   8.50K   /lake
lake/fa      8K   38.0G      8K   /lake/fa

To destroy a storage pool (AND ALL ITS DATA):-

# zpool destroy lake

To destroy a dataset:-

# zfs destroy lake/fa

5. zpool can also create mirror devices:-

# zpool create lake mirror c0t2d0s0 c0t2d0s1

and something called RAID Z (similar to RAID 5):-

# zpool create lake raidz c0t2d0s0 c0t2d0s1 c0t2d0s3

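6. To add further plain devices to a pool (not to mirrored or RAID-Z pools, which should only be extended with a similar group of devices, such as another mirror; zpool add refuses a mismatched replication level unless forced with -f):-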

# zpool add lake c0t2d0s3 c0t2d0s4

# zpool status
  pool: lake
 state: ONLINE
 scrub: none requested
config:

        NAME        STATE     READ WRITE CKSUM
        lake        ONLINE       0     0     0
          c0t2d0s0  ONLINE       0     0     0
          c0t2d0s1  ONLINE       0     0     0
          c0t2d0s3  ONLINE       0     0     0
          c0t2d0s4  ONLINE       0     0     0
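As step 1 notes, plain files can stand in for spare partitions while experimenting. The sketch below is a hypothetical rehearsal of steps 2 to 6 on three files created with Solaris `mkfile`; the pool name testpool, the directory and the 128m size are assumptions (ZFS needs each device to be at least 64 MB, and file devices must be given as absolute paths). Commands are only printed unless DRY_RUN=0 is set.

```shell
#!/bin/sh
# Hypothetical rehearsal of the steps above on file-backed devices.
# Commands are only printed unless DRY_RUN=0 is set (then run as root).
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

rehearse_pool() {
    dir=${1:-/var/tmp/zfs-test}
    case $dir in
        /*) ;;                                      # zpool needs absolute paths
        *) echo "directory must be an absolute path" >&2; return 1 ;;
    esac
    run mkdir -p "$dir" || return 1
    for i in 1 2 3; do
        run mkfile 128m "$dir/disk$i" || return 1   # 64 MB is the minimum
    done
    run zpool create testpool raidz "$dir/disk1" "$dir/disk2" "$dir/disk3" || return 1
    run zfs create testpool/fa || return 1
    run zfs list -r testpool
}

# Clean up afterwards: destroying the pool destroys all its data.
cleanup_rehearsal() {
    dir=${1:-/var/tmp/zfs-test}
    run zpool destroy testpool || return 1
    run rm -f "$dir/disk1" "$dir/disk2" "$dir/disk3"
}
```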

Note that datasets are automatically mounted, so no more updating of /etc/vfstab!

You can also offline/online components, take snapshots (recursive since Update 3), clone filesystems, and apply properties such as quotas, NFS sharing, etc.
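The snapshot, clone and offline/online operations mentioned above look like this in practice. The wrappers below are a hypothetical sketch that print commands unless DRY_RUN=0; the snapshot and clone names are assumptions. A clone must live in the same pool as its snapshot, `zfs rollback` returns a filesystem to its most recent snapshot, and taking a device offline only works where the pool has redundancy, such as a mirror.

```shell
#!/bin/sh
# Hypothetical snapshot/clone/offline examples; printed unless DRY_RUN=0.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

snapshot_and_clone() {   # FS SNAPNAME CLONE
    run zfs snapshot "$1@$2" || return 1
    # A clone is a writable filesystem that starts out identical to the snapshot.
    run zfs clone "$1@$2" "$3"
}

snapshot_pool() {        # POOL SNAPNAME: every dataset in the pool (-r)
    run zfs snapshot -r "$1@$2"
}

service_device() {       # POOL DEVICE: take a device offline, then back online
    run zpool offline "$1" "$2" || return 1
    run zpool online "$1" "$2"
}

# Examples:
#     snapshot_and_clone lake/fa before-change lake/fa-test
#     zfs rollback lake/fa@before-change     # undo changes made since then
```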

Entire pools can be exported, then imported on another system, Intel or SPARC.
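Moving a pool between systems is a two-step operation. In the hypothetical sketch below (printing commands unless DRY_RUN=0), `zpool export` unmounts the pool's filesystems and marks the pool as exported on the old host; on the new host, `zpool import` with no arguments lists the pools available for import, and `zpool import NAME` imports one. A pool that was not cleanly exported may need `zpool import -f`.

```shell
#!/bin/sh
# Hypothetical export/import steps; printed unless DRY_RUN=0.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# On the old system, once nothing is using the pool:
release_pool() {    # POOL
    run zpool export "$1"
}

# On the new system, after moving the disks across:
adopt_pool() {      # POOL
    run zpool import              # list pools available for import
    run zpool import "$1"
}
```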

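There are even built-in backup and restore facilities, not to mention performance tools (this sample output comes from a pool built from two mirrored pairs, not the striped pool shown above):-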

# zpool iostat -v
                 capacity     operations    bandwidth
pool           used  avail   read  write   read  write
------------  -----  -----  -----  -----  -----  -----
lake           305M  9.39G      0      0  43.7K  74.7K
  mirror       153M  4.69G      0      0  38.6K  37.5K
    c0t2d0s0      -      -      0      0  39.1K  38.5K
    c0t2d0s1      -      -      0      1     81   114K
  mirror       152M  4.69G      0      0  5.11K  37.5K
    c0t2d0s3      -      -      0      0  5.13K  38.2K
    c0t2d0s4      -      -      0      0     27  38.2K
------------  -----  -----  -----  -----  -----  -----
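The built-in backup and restore facilities mentioned above are `zfs send`, which writes a snapshot out as a stream, and `zfs receive`, which recreates a filesystem from such a stream; `zfs send -i` sends only the changes between two snapshots. The sketch below is hypothetical (the file path and dataset names are assumptions) and prints the commands, including the redirections, unless DRY_RUN=0 is set.

```shell
#!/bin/sh
# Hypothetical backup/restore with zfs send/receive; printed unless DRY_RUN=0.
backup_fs() {       # FS SNAPNAME FILE
    if [ "${DRY_RUN:-1}" = 1 ]; then
        printf '%s\n' "zfs snapshot $1@$2" "zfs send $1@$2 > $3"
    else
        zfs snapshot "$1@$2" && zfs send "$1@$2" > "$3"
    fi
}

restore_fs() {      # FILE NEW_FS (for a full stream, NEW_FS must not exist yet)
    if [ "${DRY_RUN:-1}" = 1 ]; then
        printf '%s\n' "zfs receive $2 < $1"
    else
        zfs receive "$2" < "$1"
    fi
}

# Examples (/var/tmp/fa.zfs is an assumed location):
#     backup_fs lake/fa weekly /var/tmp/fa.zfs
#     restore_fs /var/tmp/fa.zfs lake/fa-restored
```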

For more information on ZFS, why not attend our 4-day Solaris 10 Update course? See: http://www.firstalt.co.uk/courses/s10up.html

ZFS is also included in our standard Solaris 10 Systems Administration courses.


Anda mungkin juga menyukai

- Shoe Dog: A Memoir by the Creator of NikeDari EverandShoe Dog: A Memoir by the Creator of NikePenilaian: 4.5 dari 5 bintang4.5/5 (537)

- Grit: The Power of Passion and PerseveranceDari EverandGrit: The Power of Passion and PerseverancePenilaian: 4 dari 5 bintang4/5 (587)

- Changes to Google Cloud Professional Cloud Architect examDokumen7 halamanChanges to Google Cloud Professional Cloud Architect examAman MalikBelum ada peringkat

- Studying, Working or Migrating: Ielts and OetDokumen2 halamanStudying, Working or Migrating: Ielts and OetSantosh KambleBelum ada peringkat

- GCP - SolutionsDokumen11 halamanGCP - SolutionsGirish MamtaniBelum ada peringkat

- Google Cloud Big Data Solutions OverviewDokumen17 halamanGoogle Cloud Big Data Solutions OverviewSouvik PratiherBelum ada peringkat

- Simon Ielts NotesDokumen8 halamanSimon Ielts Notesshekhar785424Belum ada peringkat

- AWS Answers To Key Compliance QuestionsDokumen12 halamanAWS Answers To Key Compliance Questionssparta21Belum ada peringkat

- MRP SolutionDokumen21 halamanMRP SolutionsabhazraBelum ada peringkat

- AzureMigrationTechnicalWhitepaperfinal 5Dokumen6 halamanAzureMigrationTechnicalWhitepaperfinal 5Anonymous PZW11xWeOoBelum ada peringkat

- Awsthe Path To The Cloud Dec2015Dokumen13 halamanAwsthe Path To The Cloud Dec2015shekhar785424Belum ada peringkat

- Pearson's Automated Scoring of Writing, Speaking, and MathematicsDokumen24 halamanPearson's Automated Scoring of Writing, Speaking, and Mathematicsshekhar785424Belum ada peringkat

- Cloudera Ref Arch AwsDokumen24 halamanCloudera Ref Arch AwsyosiaBelum ada peringkat

- Simon Ielts NotesDokumen8 halamanSimon Ielts Notesshekhar785424Belum ada peringkat

- Qualys Securing Amazon Web Services (1) Imp VVVDokumen75 halamanQualys Securing Amazon Web Services (1) Imp VVVshekhar785424Belum ada peringkat

- AWS Compliance Quick ReferenceDokumen26 halamanAWS Compliance Quick Referenceshekhar785424Belum ada peringkat

- Demart SWOTDokumen24 halamanDemart SWOTshekhar785424Belum ada peringkat

- Retail Management Strategy of D-Mart Under 39 CharactersDokumen30 halamanRetail Management Strategy of D-Mart Under 39 CharactersPriyanka AgarwalBelum ada peringkat

- Best Practices For SAS® On EMC® SYMMETRIX® VMAXTM StorageDokumen46 halamanBest Practices For SAS® On EMC® SYMMETRIX® VMAXTM Storageshekhar785424Belum ada peringkat

- Data Center Design Best PracticesDokumen2 halamanData Center Design Best Practicesshekhar785424Belum ada peringkat

- 10 1 1 459 7159Dokumen13 halaman10 1 1 459 7159shekhar785424Belum ada peringkat

- Data Center Consolidation1Dokumen2 halamanData Center Consolidation1shekhar785424Belum ada peringkat

- AgendaBook DUBLINDokumen38 halamanAgendaBook DUBLINshekhar785424Belum ada peringkat

- Australia Point System (189 - 190 - 489) - Australia Subclass 489 VIsaDokumen11 halamanAustralia Point System (189 - 190 - 489) - Australia Subclass 489 VIsashekhar785424Belum ada peringkat

- Data Migration From An Existing Storage Array To A New Storage Array IMP VVVDokumen3 halamanData Migration From An Existing Storage Array To A New Storage Array IMP VVVshekhar785424Belum ada peringkat

- 21 Functions Part 1 of 3Dokumen13 halaman21 Functions Part 1 of 3Sunil DasBelum ada peringkat

- Cisco Cloud Architecture: For The Microsoft Cloud PlatformDokumen2 halamanCisco Cloud Architecture: For The Microsoft Cloud Platformshekhar785424Belum ada peringkat

- Learn Functions from Revision Notes & Video TutorialsDokumen18 halamanLearn Functions from Revision Notes & Video Tutorialsshubham kharwalBelum ada peringkat

- PTE Repeat Sentence 4 Flashcards - QuizletDokumen2 halamanPTE Repeat Sentence 4 Flashcards - Quizletshekhar785424Belum ada peringkat

- Read AloudDokumen3 halamanRead AloudLokesh NABelum ada peringkat

- Repeat SentenceDokumen2 halamanRepeat SentenceDilip DivateBelum ada peringkat

- Read AloudDokumen3 halamanRead AloudLokesh NABelum ada peringkat

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceDari EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RacePenilaian: 4 dari 5 bintang4/5 (894)

- The Yellow House: A Memoir (2019 National Book Award Winner)Dari EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Penilaian: 4 dari 5 bintang4/5 (98)

- The Little Book of Hygge: Danish Secrets to Happy LivingDari EverandThe Little Book of Hygge: Danish Secrets to Happy LivingPenilaian: 3.5 dari 5 bintang3.5/5 (399)

- On Fire: The (Burning) Case for a Green New DealDari EverandOn Fire: The (Burning) Case for a Green New DealPenilaian: 4 dari 5 bintang4/5 (73)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeDari EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifePenilaian: 4 dari 5 bintang4/5 (5794)

- Never Split the Difference: Negotiating As If Your Life Depended On ItDari EverandNever Split the Difference: Negotiating As If Your Life Depended On ItPenilaian: 4.5 dari 5 bintang4.5/5 (838)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureDari EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FuturePenilaian: 4.5 dari 5 bintang4.5/5 (474)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryDari EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryPenilaian: 3.5 dari 5 bintang3.5/5 (231)

- The Emperor of All Maladies: A Biography of CancerDari EverandThe Emperor of All Maladies: A Biography of CancerPenilaian: 4.5 dari 5 bintang4.5/5 (271)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreDari EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You ArePenilaian: 4 dari 5 bintang4/5 (1090)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyDari EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyPenilaian: 3.5 dari 5 bintang3.5/5 (2219)

- Team of Rivals: The Political Genius of Abraham LincolnDari EverandTeam of Rivals: The Political Genius of Abraham LincolnPenilaian: 4.5 dari 5 bintang4.5/5 (234)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersDari EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersPenilaian: 4.5 dari 5 bintang4.5/5 (344)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaDari EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaPenilaian: 4.5 dari 5 bintang4.5/5 (265)

- Rise of ISIS: A Threat We Can't IgnoreDari EverandRise of ISIS: A Threat We Can't IgnorePenilaian: 3.5 dari 5 bintang3.5/5 (137)

- The Unwinding: An Inner History of the New AmericaDari EverandThe Unwinding: An Inner History of the New AmericaPenilaian: 4 dari 5 bintang4/5 (45)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)Dari EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Penilaian: 4.5 dari 5 bintang4.5/5 (119)

- Her Body and Other Parties: StoriesDari EverandHer Body and Other Parties: StoriesPenilaian: 4 dari 5 bintang4/5 (821)

- Question Forms Auxiliary VerbsDokumen10 halamanQuestion Forms Auxiliary VerbsMer-maidBelum ada peringkat

- LESSON PLAN - Kinds of WeatherDokumen4 halamanLESSON PLAN - Kinds of WeatherSittie Naifa CasanBelum ada peringkat

- The Relationship Between Psychology and LiteratureDokumen4 halamanThe Relationship Between Psychology and LiteratureWiem Belkhiria0% (2)

- 12-AS400 Schedule Job (09nov15)Dokumen5 halaman12-AS400 Schedule Job (09nov15)Mourad TimarBelum ada peringkat

- Parasmai Atmane PadaniDokumen5 halamanParasmai Atmane PadaniNagaraj VenkatBelum ada peringkat

- Updates on university exams, courses and admissionsDokumen2 halamanUpdates on university exams, courses and admissionssameeksha chiguruBelum ada peringkat

- Installation Cheat Sheet 1 - OpenCV 3 and C++Dokumen3 halamanInstallation Cheat Sheet 1 - OpenCV 3 and C++David BernalBelum ada peringkat

- Making Disciples of Oral LearnersDokumen98 halamanMaking Disciples of Oral LearnersJosé AlvarezBelum ada peringkat

- Arts G10 First QuarterDokumen9 halamanArts G10 First QuarterKilo SanBelum ada peringkat

- Kishore OracleDokumen5 halamanKishore OraclepriyankBelum ada peringkat

- SECRET KNOCK PATTERN DOOR LOCKDokumen5 halamanSECRET KNOCK PATTERN DOOR LOCKMuhammad RizaBelum ada peringkat

- Laser WORKS 8 InstallDokumen12 halamanLaser WORKS 8 InstalldavicocasteBelum ada peringkat

- Code Glosses in University State of Jakarta Using Hyland's TaxonomyDokumen25 halamanCode Glosses in University State of Jakarta Using Hyland's TaxonomyFauzan Ahmad Ragil100% (1)

- Checklist of MOVs for Proficient TeachersDokumen8 halamanChecklist of MOVs for Proficient TeachersEvelyn Esperanza AcaylarBelum ada peringkat

- Anticipation Guides - (Literacy Strategy Guide)Dokumen8 halamanAnticipation Guides - (Literacy Strategy Guide)Princejoy ManzanoBelum ada peringkat

- Unit 8 General Test: Describe The PictureDokumen12 halamanUnit 8 General Test: Describe The PictureHermes Orozco100% (1)

- English Week PaperworkDokumen6 halamanEnglish Week PaperworkYusnamariah Md YusopBelum ada peringkat

- Chemistry - Superscripts, Subscripts and Other Symbols For Use in Google DocsDokumen2 halamanChemistry - Superscripts, Subscripts and Other Symbols For Use in Google DocsSandra Aparecida Martins E SilvaBelum ada peringkat

- Editing Tools in AutocadDokumen25 halamanEditing Tools in AutocadStephanie M. BernasBelum ada peringkat

- Celine BSC 1 - 3Dokumen43 halamanCeline BSC 1 - 3Nsemi NsemiBelum ada peringkat

- LaserCAD ManualDokumen65 halamanLaserCAD ManualOlger NavarroBelum ada peringkat

- BB 7Dokumen17 halamanBB 7Bayu Sabda ChristantaBelum ada peringkat

- Learn Past Tenses in 40 StepsDokumen8 halamanLearn Past Tenses in 40 StepsGeraldine QuirogaBelum ada peringkat

- Who Is The Antichrist?Dokumen9 halamanWho Is The Antichrist?United Church of GodBelum ada peringkat

- LGUserCSTool LogDokumen1 halamanLGUserCSTool LogDávison IsmaelBelum ada peringkat

- 320 Sir Orfeo PaperDokumen9 halaman320 Sir Orfeo Paperapi-248997586Belum ada peringkat

- EVE Comm BOOK 1.07 2020 PDFDokumen165 halamanEVE Comm BOOK 1.07 2020 PDFDeepakBelum ada peringkat

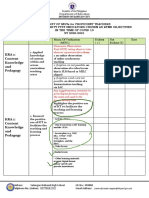

- CSTP 4 Planning Instruction & Designing Learning ExperiencesDokumen8 halamanCSTP 4 Planning Instruction & Designing Learning ExperiencesCharlesBelum ada peringkat

- LISTENING COMPREHENSION TEST TYPESDokumen15 halamanLISTENING COMPREHENSION TEST TYPESMuhammad AldinBelum ada peringkat

- Romanian Mathematical Magazine 39Dokumen169 halamanRomanian Mathematical Magazine 39Nawar AlasadiBelum ada peringkat