Big Neural Networks Waste Capacity

Yann N. Dauphin & Yoshua Bengio

Département d'informatique et de recherche opérationnelle

Université de Montréal, Montréal, QC, Canada

Abstract

This article exposes the failure of some big neural networks to leverage added capacity to reduce underfitting. Past research suggests diminishing returns when increasing the size of neural networks. Our experiments on ImageNet LSVRC-2010 show that this may be because there are highly diminishing returns for capacity in terms of training error, leading to underfitting. This suggests that the optimization method, first-order gradient descent, fails in this regime. Directly attacking this problem, either through the optimization method or the choice of parametrization, may improve the generalization error on large datasets, for which a large capacity is required.

1 Introduction

Deep learning and neural networks have achieved state-of-the-art results on vision [1], language [2], and audio-processing tasks [3]. All these cases involved fairly large datasets, but in all these cases, even larger ones could be used. One of the major challenges remains to extend neural networks to a much larger scale, and with this objective in mind, this paper asks a simple question: is there an optimization issue that prevents efficiently training larger networks?

Prior evidence of the failure of big networks in the literature can be found, for example, in Coates et al. (2011), which shows that increasing the capacity of certain neural net methods quickly reaches a point of diminishing returns on the test error. These results have since been extended to other types of auto-encoders and RBMs (Rifai et al., 2011; Courville et al., 2011). Furthermore, Coates et al. (2011) shows that while neural net methods fail to leverage added capacity, K-Means can. This has allowed K-Means to reach state-of-the-art performance on CIFAR-10 for methods that do not use artificial transformations. This is an unexpected result because K-Means is a much dumber unsupervised learning algorithm when compared with RBMs and regularized auto-encoders. Coates et al. (2011) argues that this is mainly due to K-Means making better use of added capacity, but it does not explain why the neural net methods failed to do this.

2 Experimental Setup

We will perform experiments with the well-known ImageNet LSVRC-2010 object detection dataset [4]. The subset used in the Large Scale Visual Recognition Challenge 2010 contains 1000 object categories and 1.2 million training images.

[1] Krizhevsky et al. (2012) reduced by almost one half the error rate on the 1000-class ImageNet object recognition benchmark.

[2] Mikolov et al. (2011) reduced perplexity on WSJ by 40% and speech recognition absolute word error rate by > 1%.

[3] For speech recognition, Seide et al. (2011) report relative word error rates decreasing by about 30% on datasets of 309 hours.

[4] http://www.image-net.org/challenges/LSVRC/2010/

arXiv:1301.3583v4 [cs.LG] 14 Mar 2013

This dataset has many attractive features:

1. The task is difficult enough for current algorithms that there is still much room for improvement. For instance, Krizhevsky et al. (2012) recently reduced the error by half. What's more, the state of the art is at 15.3% error. Assuming minimal error in the human labelling of the dataset, it should be possible to reach errors close to 0%.

2. Improvements on ImageNet are thought to be a good proxy for progress in object recognition (Deng et al., 2009).

3. It has a large number of examples. This is the setting commonly found in industry, where datasets reach billions of examples. Interestingly, as the amount of data increases, the training error converges to the generalization error. In other words, reducing training error is well correlated with reducing generalization error when large datasets are available. Therefore, it stands to reason that resolving underfitting problems may yield significant improvements.

We use the features provided by the Large Scale Visual Recognition Challenge 2010 [5]. The images are convolved with SIFT features, then K-Means is used to form a visual vocabulary of 1000 visual words. Following the literature, we report the Top-5 error rate only.
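As an illustration of the Top-5 metric, the sketch below counts an example as an error when its true class is not among the five highest-scoring classes. The function name `top_k_error` and the toy scores are assumptions made for this example; they are not taken from the challenge tooling.

```python
def top_k_error(scores, labels, k=5):
    """Fraction of examples whose true label is not among the k top-scoring classes."""
    misses = 0
    for row, label in zip(scores, labels):
        # Class indices ordered from highest to lowest score, truncated to k.
        top_k = sorted(range(len(row)), key=lambda c: row[c], reverse=True)[:k]
        if label not in top_k:
            misses += 1
    return misses / len(labels)


# Toy data (hypothetical): 2 examples, 6 classes.
scores = [
    [0.10, 0.30, 0.05, 0.20, 0.25, 0.10],  # true class 2 has the lowest score
    [0.50, 0.10, 0.10, 0.10, 0.10, 0.10],  # true class 0 has the highest score
]
labels = [2, 0]
error = top_k_error(scores, labels)  # one miss out of two examples
```

With 1000 classes, as in LSVRC-2010, the same function applies unchanged; only the row length differs.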

The experiments focus on the behavior of Multi-Layer Perceptrons (MLP) as capacity is increased. This is done by increasing the number of hidden units in the network. The final classification layer of the network is a softmax over possible classes ($\mathrm{softmax}(x)_j = e^{x_j} / \sum_i e^{x_i}$). The hidden layers use the logistic sigmoid activation function ($\sigma(x) = 1 / (1 + e^{-x})$). We initialize the weights of the hidden layer according to the formula proposed by Glorot and Bengio (2010). The parameters of the classification layer are initialized to 0, along with all the bias (offset) parameters of the MLP.
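The model described above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the Theano code used for the experiments: the hidden weights use the uniform bound sqrt(6 / (fan_in + fan_out)) from Glorot and Bengio (2010), and the text does not say whether any activation-specific scaling was applied on top of it. The helper names (`init_mlp`, `forward`) and the tiny layer sizes are hypothetical.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def softmax(xs):
    m = max(xs)  # subtracting the max leaves the result unchanged but avoids overflow
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def init_mlp(n_in, n_hidden, n_classes, rng):
    # Assumed variant of the Glorot-Bengio uniform initialization for the hidden layer.
    bound = math.sqrt(6.0 / (n_in + n_hidden))
    return {
        "W1": [[rng.uniform(-bound, bound) for _ in range(n_hidden)] for _ in range(n_in)],
        "b1": [0.0] * n_hidden,                              # biases start at 0
        "W2": [[0.0] * n_classes for _ in range(n_hidden)],  # classification layer starts at 0
        "b2": [0.0] * n_classes,
    }


def forward(params, x):
    hidden = [
        sigmoid(sum(x[i] * params["W1"][i][j] for i in range(len(x))) + params["b1"][j])
        for j in range(len(params["b1"]))
    ]
    logits = [
        sum(hidden[j] * params["W2"][j][c] for j in range(len(hidden))) + params["b2"][c]
        for c in range(len(params["b2"]))
    ]
    return softmax(logits)


rng = random.Random(0)
params = init_mlp(n_in=8, n_hidden=16, n_classes=4, rng=rng)
probs = forward(params, [rng.random() for _ in range(8)])
```

Because the classification layer starts at zero, the initial prediction is uniform over the classes regardless of the input.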

The hyper-parameters to tune are the learning rate and the number of hidden units. We are interested in optimization performance, so we cross-validate them based on the training error. We use a grid search with the learning rates taken from {0.1, 0.01} and the number of hidden units from {1000, 2000, 5000, 7000, 10000, 15000}. When we report the performance of a network with a given number of units, we choose the best learning rate. The learning rate is decreased by 5% every time the training error goes up after an epoch. We do not use any regularization because it would typically not help to decrease the training set error. The number of epochs is set to 300 so that it is large enough for the networks to converge.
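The grid and the learning-rate rule can be sketched as follows. The grid values come from the text; the function name `decay_on_increase` and the per-epoch training errors are made up for illustration, and the sketch assumes the comparison is against the previous epoch's training error.

```python
from itertools import product

LEARNING_RATES = [0.1, 0.01]
HIDDEN_UNITS = [1000, 2000, 5000, 7000, 10000, 15000]

# Every (learning rate, hidden units) pair visited by the grid search.
grid = list(product(LEARNING_RATES, HIDDEN_UNITS))


def decay_on_increase(train_errors, initial_lr, decay=0.05):
    """Return the learning rate used in each epoch.

    After an epoch whose training error is higher than the previous epoch's,
    the rate for the following epochs is multiplied by (1 - decay).
    """
    lr = initial_lr
    previous = None
    schedule = []
    for err in train_errors:
        schedule.append(lr)  # rate in effect during this epoch
        if previous is not None and err > previous:
            lr *= 1.0 - decay
        previous = err
    return schedule


# Hypothetical per-epoch training errors; the error rises after the third epoch.
schedule = decay_on_increase([0.90, 0.80, 0.85, 0.70], initial_lr=0.1)
```

In this toy run the first three epochs use 0.1 and the fourth uses 0.1 × 0.95.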

The experiments are run on a cluster of Nvidia Geforce GTX 580 GPUs with the help of the Theano

library (Bergstra et al., 2010). We make use of HDF5 (Folk et al., 2011) to load the dataset in a

lazy fashion because of its large size. The shortest training experiment took 10 hours to run and the

longest took 28 hours.

3 Experimental Results

Figure 1 shows the evolution of the training error as the capacity is increased. The common intuition is that this increased capacity will help fit the training set, possibly to the detriment of generalization error. For this reason, practitioners have focused mainly on the problem of overfitting the dataset when dealing with large networks, not underfitting. In fact, much research is concerned with proper regularization of such large networks (Hinton et al., 2012, 2006).

However, Figure 2 reveals a problem in the training of big networks. This figure is the derivative of the curve in Figure 1 (using the number of errors instead of the percentage). It may be interpreted as the return on investment (ROI) for the addition of capacity. The figure shows that the return on investment of additional hidden units decreases fast, whereas we would like it to be close to constant. Increasing the capacity from 1000 to 2000 units, the ROI decreases by an order of magnitude. It is harder and harder for the model to make use of additional capacity. The red line is a baseline where each additional unit is used as a template matcher for one of the training errors. In this case, the number of errors reduced per unit is at least 1. We see that the MLP does not manage to beat this baseline after 5000 units, for the given number of training iterations.
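One way to read the ROI curve is as a finite difference: training errors removed divided by hidden units added between two consecutive network sizes. The sketch below assumes that reading. The error counts are invented purely to show the arithmetic and are not the paper's measurements; `return_on_investment` is a hypothetical helper name.

```python
def return_on_investment(hidden_units, train_error_counts):
    """Training errors removed per added hidden unit between consecutive sizes."""
    roi = []
    for i in range(1, len(hidden_units)):
        removed = train_error_counts[i - 1] - train_error_counts[i]
        added = hidden_units[i] - hidden_units[i - 1]
        roi.append(removed / added)
    return roi


# Hypothetical numbers, NOT results from the paper.
hidden_units = [1000, 2000, 5000, 7000]
train_error_counts = [900000, 700000, 640000, 639000]

roi = return_on_investment(hidden_units, train_error_counts)

# Template-matcher baseline from the text: at least 1 error removed per unit.
BASELINE = 1.0
below_baseline = [r < BASELINE for r in roi]
```

In this toy series the last interval removes 0.5 errors per unit, which would fall below the template-matcher baseline.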

[5] http://www.image-net.org/challenges/LSVRC/2010/download-public

Figure 1: Training error with respect to the capacity of a 1-layer sigmoidal neural network. This

curve seems to suggest we are correctly leveraging added capacity.

Figure 2: Return on investment on the addition of hidden units for a 1-hidden layer sigmoidal neural

network. The vertical axis is the number of training errors removed per additional hidden unit, after

300 epochs. We see here that it is harder and harder to use added capacity.

For reference, we also include the learning curves of the networks used for Figures 1 and 2 in Figure 3. We see that the curves for capacities above 5000 all converge towards the same point.

4 Future directions

This rapidly decreasing return on investment for capacity in big networks seems to be a failure of first-order gradient descent.

In fact, we know that the first-order approximation fails when there are a lot of interactions between hidden units. It may be that adding units increases the interactions between units and causes the Hessian to be ill-conditioned. This reasoning suggests two research directions:

- Methods that break interactions between large numbers of units. This helps the Hessian to be better conditioned and will lead to better effectiveness for first-order descent. This type of method can be implemented efficiently. Examples of this approach are sparsity and orthogonality penalties.

- Methods that model interactions between hidden units, for example second-order methods (Martens, 2010) and natural gradient methods (Le Roux et al., 2008). Typically, these are expensive approaches and the challenge is in scaling them to large datasets, where stochastic gradient approaches may dominate. The ideal target is a stochastic natural gradient or stochastic second-order method.

Figure 3: Training error with respect to the number of epochs of gradient descent. Each line is a 1-hidden layer sigmoidal neural network with a different number of hidden units.
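The text names sparsity and orthogonality penalties without defining them. As a hedged illustration only, the sketch below uses two common forms: the squared Frobenius norm of W^T W - I, where each column of W holds one hidden unit's incoming weights, and an L1 penalty on hidden activations. Both definitions and both function names are assumptions for this example, not the authors' formulation.

```python
def orthogonality_penalty(W):
    """Squared Frobenius norm of (W^T W - I).

    W is a list of rows; column a holds the incoming weights of hidden unit a.
    The penalty is zero exactly when the columns are orthonormal.
    """
    n_in, n_units = len(W), len(W[0])
    total = 0.0
    for a in range(n_units):
        for b in range(n_units):
            dot = sum(W[i][a] * W[i][b] for i in range(n_in))
            target = 1.0 if a == b else 0.0
            total += (dot - target) ** 2
    return total


def l1_sparsity_penalty(activations):
    """Sum of absolute hidden activations; smaller means sparser."""
    return sum(abs(h) for h in activations)


orthonormal = [[1.0, 0.0], [0.0, 1.0]]  # two units with orthogonal weights
redundant = [[1.0, 1.0], [0.0, 0.0]]    # two units with identical weights
```

Either term would be added to the training loss with a small coefficient, so that units are pushed away from duplicating each other.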

The optimization failure may also be due to other reasons. For example, networks with more capacity have more local minima. Future work should investigate tests that help discriminate between ill-conditioning issues and local minima issues.
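To make the ill-conditioning hypothesis concrete, the toy sketch below runs plain gradient descent on a quadratic whose Hessian is diagonal, so its eigenvalues can be set directly. It is an illustration on a convex problem, not a test on a neural network, and it says nothing about local minima. The function name, starting point and tolerance are arbitrary choices for the example.

```python
def gd_steps_to_converge(eigenvalues, tol=1e-6, max_steps=100000):
    """Gradient descent on f(x) = 0.5 * sum(lam_i * x_i**2), starting at x = (1, ..., 1).

    The step size is 1 / max(eigenvalues), a standard safe choice for a quadratic.
    Returns the number of steps until every coordinate is below tol in magnitude.
    """
    lr = 1.0 / max(eigenvalues)
    x = [1.0] * len(eigenvalues)
    for step in range(max_steps):
        if max(abs(v) for v in x) < tol:
            return step
        # The gradient of f with respect to x_i is lam_i * x_i.
        x = [v - lr * lam * v for v, lam in zip(x, eigenvalues)]
    return max_steps


well_conditioned = gd_steps_to_converge([1.0, 1.0])   # condition number 1
ill_conditioned = gd_steps_to_converge([1.0, 100.0])  # condition number 100
```

With condition number 1 a single step reaches the minimum, while with condition number 100 the low-curvature direction shrinks by only 1% per step and needs more than a thousand steps.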

Fixing this optimization problem may be the key to unlocking better performance of deep networks.

Based on past observations (Bengio, 2009; Erhan et al., 2010), we expect this optimization problem

to worsen for deeper networks, and our experimental setup should be extended to measure the effect

of depth. As we have noted earlier, improvements on the training set error should be well correlated

with generalization for large datasets.

References

Bengio, Y. (2009). Learning deep architectures for AI. Now Publishers.

Bergstra, J., Breuleux, O., Bastien, F., Lamblin, P., Pascanu, R., Desjardins, G., Turian, J., Warde-Farley, D., and Bengio, Y. (2010). Theano: a CPU and GPU math expression compiler. In Proceedings of the Python for Scientific Computing Conference (SciPy). Oral Presentation.

Coates, A., Lee, H., and Ng, A. Y. (2011). An analysis of single-layer networks in unsupervised feature learning. In Proceedings of the Fourteenth International Conference on Artificial Intelligence and Statistics (AISTATS 2011).

Courville, A., Bergstra, J., and Bengio, Y. (2011). Unsupervised models of images by spike-and-slab RBMs. In Proceedings of the Twenty-eighth International Conference on Machine Learning (ICML'11).

Deng, J., Dong, W., Socher, R., Li, L.-J., Li, K., and Fei-Fei, L. (2009). ImageNet: A Large-Scale Hierarchical Image Database. In CVPR'09.

Erhan, D., Bengio, Y., Courville, A., Manzagol, P., Vincent, P., and Bengio, S. (2010). Why does unsupervised pre-training help deep learning? J. Machine Learning Res., 11.


Folk, M., Heber, G., Koziol, Q., Pourmal, E., and Robinson, D. (2011). An overview of the HDF5 technology suite and its applications. In Proceedings of the EDBT/ICDT 2011 Workshop on Array Databases, pages 36-47. ACM.

Glorot, X. and Bengio, Y. (2010). Understanding the difficulty of training deep feedforward neural networks. In JMLR W&CP: Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics (AISTATS 2010), volume 9, pages 249-256.

Hinton, G. E., Osindero, S., and Teh, Y. (2006). A fast learning algorithm for deep belief nets. Neural Computation, 18, 1527-1554.

Hinton, G. E., Srivastava, N., Krizhevsky, A., Sutskever, I., and Salakhutdinov, R. (2012). Improving neural networks by preventing co-adaptation of feature detectors. Technical report, arXiv:1207.0580.

Krizhevsky, A., Sutskever, I., and Hinton, G. (2012). ImageNet classification with deep convolutional neural networks. In Advances in Neural Information Processing Systems 25 (NIPS'2012).

Le Roux, N., Manzagol, P.-A., and Bengio, Y. (2008). Topmoumoute online natural gradient algorithm. In J. Platt, D. Koller, Y. Singer, and S. Roweis, editors, Advances in Neural Information Processing Systems 20 (NIPS'07), Cambridge, MA. MIT Press.

Martens, J. (2010). Deep learning via Hessian-free optimization. In L. Bottou and M. Littman, editors, Proceedings of the Twenty-seventh International Conference on Machine Learning (ICML-10), pages 735-742. ACM.

Mikolov, T., Deoras, A., Kombrink, S., Burget, L., and Cernocky, J. (2011). Empirical evaluation and combination of advanced language modeling techniques. In Proc. 12th annual conference of the international speech communication association (INTERSPEECH 2011).

Rifai, S., Mesnil, G., Vincent, P., Muller, X., Bengio, Y., Dauphin, Y., and Glorot, X. (2011). Higher order contractive auto-encoder. In European Conference on Machine Learning and Principles and Practice of Knowledge Discovery in Databases (ECML PKDD).

Seide, F., Li, G., and Yu, D. (2011). Conversational speech transcription using context-dependent deep neural networks. In Interspeech 2011, pages 437-440.

5

Anda mungkin juga menyukai

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeDari EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifePenilaian: 4 dari 5 bintang4/5 (5794)

- The Yellow House: A Memoir (2019 National Book Award Winner)Dari EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Penilaian: 4 dari 5 bintang4/5 (98)

- Astrology Bunda His HNDokumen19 halamanAstrology Bunda His HNrwshifletBelum ada peringkat

- Manuscripts of Astrological Image MagicDokumen23 halamanManuscripts of Astrological Image MagicrwshifletBelum ada peringkat

- SCT Thesis Dissertation Policies and Procedures ADokumen29 halamanSCT Thesis Dissertation Policies and Procedures ArwshifletBelum ada peringkat

- C I Is Chicago Diss 2011Dokumen18 halamanC I Is Chicago Diss 2011rwshifletBelum ada peringkat

- Request For Approval of Thesis-Dissertation CommitDokumen1 halamanRequest For Approval of Thesis-Dissertation CommitrwshifletBelum ada peringkat

- PCC Spring Class ScheduleDokumen3 halamanPCC Spring Class SchedulerwshifletBelum ada peringkat

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryDari EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryPenilaian: 3.5 dari 5 bintang3.5/5 (231)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceDari EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RacePenilaian: 4 dari 5 bintang4/5 (895)

- The Little Book of Hygge: Danish Secrets to Happy LivingDari EverandThe Little Book of Hygge: Danish Secrets to Happy LivingPenilaian: 3.5 dari 5 bintang3.5/5 (400)

- Shoe Dog: A Memoir by the Creator of NikeDari EverandShoe Dog: A Memoir by the Creator of NikePenilaian: 4.5 dari 5 bintang4.5/5 (537)

- Never Split the Difference: Negotiating As If Your Life Depended On ItDari EverandNever Split the Difference: Negotiating As If Your Life Depended On ItPenilaian: 4.5 dari 5 bintang4.5/5 (838)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureDari EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FuturePenilaian: 4.5 dari 5 bintang4.5/5 (474)

- Grit: The Power of Passion and PerseveranceDari EverandGrit: The Power of Passion and PerseverancePenilaian: 4 dari 5 bintang4/5 (588)

- The Emperor of All Maladies: A Biography of CancerDari EverandThe Emperor of All Maladies: A Biography of CancerPenilaian: 4.5 dari 5 bintang4.5/5 (271)

- On Fire: The (Burning) Case for a Green New DealDari EverandOn Fire: The (Burning) Case for a Green New DealPenilaian: 4 dari 5 bintang4/5 (74)

- Team of Rivals: The Political Genius of Abraham LincolnDari EverandTeam of Rivals: The Political Genius of Abraham LincolnPenilaian: 4.5 dari 5 bintang4.5/5 (234)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaDari EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaPenilaian: 4.5 dari 5 bintang4.5/5 (266)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersDari EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersPenilaian: 4.5 dari 5 bintang4.5/5 (344)

- Rise of ISIS: A Threat We Can't IgnoreDari EverandRise of ISIS: A Threat We Can't IgnorePenilaian: 3.5 dari 5 bintang3.5/5 (137)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyDari EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyPenilaian: 3.5 dari 5 bintang3.5/5 (2259)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreDari EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You ArePenilaian: 4 dari 5 bintang4/5 (1090)

- The Unwinding: An Inner History of the New AmericaDari EverandThe Unwinding: An Inner History of the New AmericaPenilaian: 4 dari 5 bintang4/5 (45)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)Dari EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Penilaian: 4.5 dari 5 bintang4.5/5 (121)

- Her Body and Other Parties: StoriesDari EverandHer Body and Other Parties: StoriesPenilaian: 4 dari 5 bintang4/5 (821)

- UntitledDokumen50 halamanUntitledapi-118172932Belum ada peringkat

- Accenture Global Pharmaceutical and Company Planning Forecasting With Oracle HyperionDokumen2 halamanAccenture Global Pharmaceutical and Company Planning Forecasting With Oracle HyperionparmitchoudhuryBelum ada peringkat

- Mechanics Guidelines 23 Div ST Fair ExhibitDokumen8 halamanMechanics Guidelines 23 Div ST Fair ExhibitMarilyn GarciaBelum ada peringkat

- Eaton Fuller FS-4205A Transmission Parts ManualDokumen18 halamanEaton Fuller FS-4205A Transmission Parts ManualJuan Diego Vergel RangelBelum ada peringkat

- Bomba - Weir - Warman Horizontal Pumps PDFDokumen8 halamanBomba - Weir - Warman Horizontal Pumps PDFWanderson Alcantara100% (1)

- CSCE 513: Computer Architecture: Quantitative Approach, 4Dokumen2 halamanCSCE 513: Computer Architecture: Quantitative Approach, 4BharatBelum ada peringkat

- Reservoir SimulationDokumen75 halamanReservoir SimulationEslem Islam100% (9)

- Thermal Plasma TechDokumen4 halamanThermal Plasma TechjohnribarBelum ada peringkat

- Final Drive Transmission Assembly - Prior To SN Dac0202754 (1) 9030Dokumen3 halamanFinal Drive Transmission Assembly - Prior To SN Dac0202754 (1) 9030Caroline RosaBelum ada peringkat

- 050-Itp For Installation of Air Intake Filter PDFDokumen17 halaman050-Itp For Installation of Air Intake Filter PDFKöksal PatanBelum ada peringkat

- Aspen Plus Model For Moving Bed Coal GasifierDokumen30 halamanAspen Plus Model For Moving Bed Coal GasifierAzharuddin_kfupm100% (2)

- ASTM 6365 - 99 - Spark TestDokumen4 halamanASTM 6365 - 99 - Spark Testjudith_ayala_10Belum ada peringkat

- Rahul Soni BiodataDokumen2 halamanRahul Soni BiodataRahul SoniBelum ada peringkat

- DjikstraDokumen5 halamanDjikstramanoj1390Belum ada peringkat

- What Is A BW WorkspaceDokumen8 halamanWhat Is A BW Workspacetfaruq_Belum ada peringkat

- Miller (1982) Innovation in Conservative and Entrepreneurial Firms - Two Models of Strategic MomentumDokumen26 halamanMiller (1982) Innovation in Conservative and Entrepreneurial Firms - Two Models of Strategic Momentumsam lissen100% (1)

- How McAfee Took First Step To Master Data Management SuccessDokumen3 halamanHow McAfee Took First Step To Master Data Management SuccessFirst San Francisco PartnersBelum ada peringkat

- BC-2800 - Service Manual V1.1 PDFDokumen109 halamanBC-2800 - Service Manual V1.1 PDFMarcelo Ferreira CorgosinhoBelum ada peringkat

- HR AuditDokumen5 halamanHR AuditshanumanuranuBelum ada peringkat

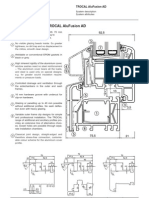

- Alufusion Eng TrocalDokumen226 halamanAlufusion Eng TrocalSid SilviuBelum ada peringkat

- Unit I Software Process and Project Management: Hindusthan College of Engineering and TechnologyDokumen1 halamanUnit I Software Process and Project Management: Hindusthan College of Engineering and TechnologyRevathimuthusamyBelum ada peringkat

- Appexchange Publishing GuideDokumen29 halamanAppexchange Publishing GuideHeatherBelum ada peringkat

- Topray Tpsm5u 185w-200wDokumen2 halamanTopray Tpsm5u 185w-200wThanh Thai LeBelum ada peringkat

- York Ylcs 725 HaDokumen52 halamanYork Ylcs 725 HaDalila Ammar100% (2)

- Practice - Creating A Discount Modifier Using QualifiersDokumen37 halamanPractice - Creating A Discount Modifier Using Qualifiersmadhu12343Belum ada peringkat

- Trafo Manual ABBDokumen104 halamanTrafo Manual ABBMarcos SebastianBelum ada peringkat

- Types and Construction of Biogas PlantDokumen36 halamanTypes and Construction of Biogas Plantadeel_jamel100% (3)

- SMILE System For 2d 3d DSMC ComputationDokumen6 halamanSMILE System For 2d 3d DSMC ComputationchanmyaBelum ada peringkat

- Regulation JigDokumen16 halamanRegulation JigMichaelZarateBelum ada peringkat

- FL Studio TutorialsDokumen8 halamanFL Studio TutorialsRoberto DFBelum ada peringkat