On The Convergence of Parallel Simulated Annealing


Stochastic Processes and their Applications 76 (1998) 99–115


Christian Meise

Fakultät für Mathematik, Universität Bielefeld, Postfach 100131, 33501 Bielefeld, Germany

Received 28 August 1997; received in revised form 20 January 1998; accepted 23 January 1998

Abstract

We consider a parallel simulated annealing algorithm that is closely related to the so-called parallel chain algorithm. Periodically, a new state is chosen from $p \in \mathbb{N}_1$ states as the initial state for simulated annealing Markov chains running independently of each other. We use selection strategies such as best-wins or worst-wins and show that, in the case of best-wins, the algorithm does not in general converge to the set of global minima. Indeed, the period length and the number of parallel chains have to be large enough. In the case of worst-wins the convergence result holds. The superiority of worst-wins over best-wins already shows up in finite-time simulations. © 1998 Elsevier Science B.V. All rights reserved.

Keywords: Simulated annealing; Nonhomogeneous Markov processes

1. Introduction

Let $X$ be a finite set and $U: X \to \mathbb{R}^+$ be a non-constant function to be minimized. We denote by $X_{\min}$ the set of global minima of $U$. Suppose that we have $p \ge 1$ processors.

The optimization algorithm at the center of our interest is described as follows. Choose any starting point $x_0 \in X$ and let each processor individually run a Metropolis Markov chain of fixed length $L \ge 1$ at inverse temperature $\beta(0)$, starting in $x_0$. After $L$ transitions the simulation is stopped and only one state $x_1$ is selected from the $p$ final states according to a selection strategy. Again each processor individually simulates a Metropolis Markov chain of length $L$, now at an updated inverse temperature $\beta(1)$, starting in $x_1$. At the end of this simulation a state $x_2$ is selected from the $p$ final states and the next simulation starts from $x_2$, and so on, cf. Fig. 1. This algorithm is closely related to the so-called parallel chain algorithm, cf. Diekmann et al. (1993). The main difference is that in our model the number $p$ of parallel chains and the length $L$ of the Markov chains are kept fixed. In the parallel chain algorithm the length $L$ is usually increased and the number of parallel Markov chains is decreased during the run, so that the parallel chain algorithm asymptotically behaves like the so-called one-chain algorithm.
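The round structure just described can be sketched in Python. This is a minimal sequential simulation under stated assumptions: states are integers `0..n-1`, `U` is a list of energies, a user-supplied `propose` function stands in for the exploration kernel $q$, and `beta(n)` gives the inverse temperature of round $n$. The names `metropolis_step`, `best_wins` and `parallel_annealing` are hypothetical, and the $p$ chains are run one after another rather than on separate processors.

```python
import math
import random
from typing import Callable, List, Sequence


def metropolis_step(x: int, beta: float, U: Sequence[float],
                    propose: Callable[[int, random.Random], int],
                    rng: random.Random) -> int:
    """One simulated annealing transition: propose y ~ q(x, .) and accept it
    with probability exp(-beta * (U(y) - U(x))^+)."""
    y = propose(x, rng)
    accept = math.exp(-beta * max(U[y] - U[x], 0.0))
    return y if rng.random() < accept else x  # downhill moves are always accepted


def best_wins(x: int, finals: List[int], U: Sequence[float],
              rng: random.Random) -> int:
    """Choose uniformly among the final states of minimal energy."""
    u_min = min(U[y] for y in finals)
    return rng.choice([y for y in finals if U[y] == u_min])


def parallel_annealing(x0: int, p: int, L: int, n_rounds: int,
                       beta: Callable[[int], float], U: Sequence[float],
                       propose, select, rng: random.Random) -> List[int]:
    """Return the selected initial states x_0, x_1, ..., x_{n_rounds}."""
    x = x0
    history = [x]
    for n in range(n_rounds):
        finals = []
        for _ in range(p):              # p independent chains, all started in x
            y = x
            for _ in range(L):          # fixed chain length L at temperature beta(n)
                y = metropolis_step(y, beta(n), U, propose, rng)
            finals.append(y)
        x = select(x, finals, U, rng)   # the selection strategy picks x_{n+1}
        history.append(x)
    return history
```

Any other strategy can be passed as `select`, provided it has the same signature as `best_wins`.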

In the sequel we specify the transition probabilities for each of the Markov chains

starting at time n(L + 1). Let q be an irreducible exploration kernel on X (i.e. a

0304-4149/98/$19.00 c 1998 Elsevier Science B.V. All rights reserved

PII: S0304- 4149( 98) 00011- 8

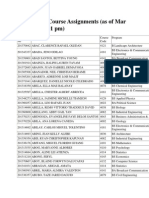

100 C. Meise / Stochastic Processes and their Applications 76 (1998) 99115

Fig. 1. Illustration of the algorithms working method.

time-independent Markov kernel), that satises q(x, x)c for all x X, where 0c1

is a xed constant, and that fullls the following quasi-reversibility condition

for all x, , X such that q(x, ,)0, q(,, x)0. (1)

Moreover, let $\beta(n)$, $n \in \mathbb{N}_0$, denote a sequence satisfying

$$\lim_{n \to \infty} \beta(n) = \infty.$$

The transition probabilities for the Markov chains simulated from time $n(L+1)$ on are given by the classical simulated annealing transition kernel. More precisely, denote by $Y^k_{n(L+1)+i}(\omega) = \omega^k_{n,i}$, $0 \le i \le L$, $1 \le k \le p$, the projections on the sequence space $\Omega = (X^p)^{L+1} \times (X^p)^{L+1} \times \cdots$. The transition probability for the $j$th Markov chain, $j \in \{1, \dots, p\}$, at time $n(L+1)+i$ ($0 \le i \le L-1$) is given by

$$P_{\beta(n)}\bigl(Y^j_{n(L+1)+i+1} = z \mid Y^j_{n(L+1)+i} = y\bigr) = \pi_{\beta(n)}(y,z) \quad \text{for } y, z \in X,$$

where

$$\pi_\beta(x,y) = \begin{cases} \exp\bigl(-\beta\,(U(y) - U(x))^+\bigr)\, q(x,y), & x \ne y,\\ 1 - \sum_{z \ne x} \pi_\beta(x,z), & x = y, \end{cases} \qquad (2)$$

denotes the classical simulated annealing transition kernel with exploration kernel $q$.
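For a small state space the kernel (2) can be assembled as a matrix. The sketch below assumes $X = \{0, \dots, n-1\}$ with $q$ and $U$ given as plain Python lists; `sa_kernel` is a hypothetical name.

```python
import math
from typing import List, Sequence


def sa_kernel(q: Sequence[Sequence[float]], U: Sequence[float],
              beta: float) -> List[List[float]]:
    """Matrix of pi_beta(x, y) from Eq. (2): Metropolis acceptance times q(x, y)
    off the diagonal, the remaining probability mass on the diagonal."""
    n = len(U)
    P = [[0.0] * n for _ in range(n)]
    for x in range(n):
        for y in range(n):
            if y != x:
                P[x][y] = math.exp(-beta * max(U[y] - U[x], 0.0)) * q[x][y]
        P[x][x] = 1.0 - sum(P[x][y] for y in range(n) if y != x)
    return P
```

Downhill proposals keep their full probability $q(x,y)$, uphill ones are damped by $e^{-\beta \Delta U}$, and every rejected proposal adds to $\pi_\beta(x,x)$, so $\pi_\beta(x,x) \ge q(x,x)$.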

By the definition of the algorithm we have $Y_{n(L+1)} \in \{(x, \dots, x) \mid x \in X\} \subset X^p$ for all $n \ge 0$. Therefore, by identifying the tuples $(x, \dots, x)$ with $x$, we obtain a Markov chain $(X_n)_n$ on $X$ by the definition $X_n := Y_{n(L+1)}$, see also Fig. 1.

The selection behavior is described by selection functions.

Definition 1.1. A function $F: \{1, \dots, p\} \times X \times X^p \to [0,1]$ is called a selection function if

$$\sum_{m=1}^{p} F(m, x; y_1, \dots, y_p) = 1 \quad \text{for } (x, y_1, \dots, y_p) \in X \times X^p.$$

Remark 1.2. The selection function is used for defining the following transition probabilities:

$$P_{\beta(n)}\bigl(Y^k_{n(L+1)} = z \,\big|\, Y^j_{n(L+1)-1} = y_j \text{ for } j = 1, \dots, p;\ X_{n-1} = x\bigr) = \sum_{m:\, z = y_m} F(m, x; y_1, \dots, y_p) \quad \text{for all } 1 \le k \le p.$$


Before we can present the selection strategies we are concerned with, we have to introduce

$$[x] := \{x_i \mid i \in \{1, \dots, p\}\},$$

$$x_* := \bigl\{x_i \bigm| U(x_i) = \min\{U(x_j) \mid j \in \{1, \dots, p\}\},\ i \in \{1, \dots, p\}\bigr\},$$

$$x^* := \bigl\{x_i \bigm| U(x_i) = \max\{U(x_j) \mid j \in \{1, \dots, p\}\},\ i \in \{1, \dots, p\}\bigr\},$$

where $x = (x_1, \dots, x_p) \in X^p$. The selection strategies are as follows.

1. First-wins. For $1 \le m \le p$,

$$F(m, x; z_1, \dots, z_p) = \begin{cases} 1, & z_1 = \dots = z_{m-1} = x,\ z_m \ne x,\\ 1/p, & z_1 = \dots = z_p = x,\\ 0, & \text{otherwise}. \end{cases}$$

If there exists $i$ with $z_i \ne x$, the state $z_j$ with the smallest such $j$ is chosen; otherwise the process stays in $x$.

2. Chance-to-anyone. $F(m, x; z_1, \dots, z_p) \ge c_0 > 0$ for all $m \in \{1, \dots, p\}$, for a fixed constant $c_0$.

3. Best-wins. The next state is chosen from the best states with uniform probability, i.e.

$$F(m, x; z_1, \dots, z_p) = \frac{1}{|z_*|}\, \mathbb{1}_{z_*}(z_m).$$

4. Worst-wins. The next state is chosen from the worst states with uniform probability, i.e.

$$F(m, x; z_1, \dots, z_p) = \frac{1}{|z^*|}\, \mathbb{1}_{z^*}(z_m).$$
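The four strategies can be written as functions returning the vector $(F(1, x; z), \dots, F(p, x; z))$. This sketch makes a few assumptions: exact fractions are used so that the normalization of Definition 1.1 can be checked exactly, chance-to-anyone is represented by the uniform choice $F \equiv 1/p$ (one admissible member of that class), and for first-wins with all components equal to $x$ the weight is spread uniformly, since any such choice keeps the chain in $x$. The function names are hypothetical.

```python
from fractions import Fraction
from typing import List, Sequence


def selection_weights(strategy: str, x: int, z: Sequence[int],
                      U: Sequence[float]) -> List[Fraction]:
    """Return [F(1, x; z), ..., F(p, x; z)] for the given strategy."""
    p = len(z)
    if strategy == "first-wins":
        for m, zm in enumerate(z):
            if zm != x:                   # first component that left x wins
                return [Fraction(int(i == m)) for i in range(p)]
        return [Fraction(1, p)] * p       # all components equal x: stay in x
    if strategy == "chance-to-anyone":
        return [Fraction(1, p)] * p       # uniform choice, F >= 1/p > 0
    values = [U[v] for v in z]
    if strategy == "best-wins":
        target = min(values)
    elif strategy == "worst-wins":
        target = max(values)
    else:
        raise ValueError(f"unknown strategy {strategy!r}")
    winners = [i for i, u in enumerate(values) if u == target]
    return [Fraction(int(i in winners), len(winners)) for i in range(p)]


def select_probability(strategy: str, x: int, z: Sequence[int], y: int,
                       U: Sequence[float]) -> Fraction:
    """Probability of selecting state y: sum of F(l, x; z) over all l with z_l = y."""
    F = selection_weights(strategy, x, z, U)
    return sum((F[l] for l in range(len(z)) if z[l] == y), Fraction(0))
```

`select_probability` implements the summation over repeated states used in Remarks 1.2 and 1.3, so a state that occurs in several components collects all of their weights.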

Remark 1.3. For the worst-wins, best-wins and chance-to-anyone strategies the selection function $F$ does not depend on the second argument, i.e. for all $1 \le m \le p$ and $y_1, \dots, y_p \in X$ we have

$$F(m, x; y_1, \dots, y_p) = F(m, z; y_1, \dots, y_p) \quad \text{for all } x, z \in X.$$

In the case of the first-wins strategy the second argument is needed, because the choice of the next initial state depends on the last initial state $x$. The selection behavior is determined by selection functions in the following way: given that the initial state of the Markov chains has been $x \in X$, the probability of selecting the state $y_j$ from $\{y_1, \dots, y_p\}$ is given by

$$\sum_{l:\, y_l = y_j} F(l, x; y_1, \dots, y_p).$$

2. Description of the Markov process

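For $l \in \mathbb{N}_1$ and $x \in X$ introduce the set of $l$-step neighbors of $x$ under the kernel $\pi_{\beta(n)}$:

$$N^l(x) := \{y \in X \mid \pi^{(l)}_{\beta(n)}(x,y) > 0\},$$

where $\pi^{(l)}_{\beta(n)}$ denotes the $l$-step transition probability.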


For $x, y \in X$ denote by

$$N(x,y) = N_L(x,y) := \{t \in N^L(x)^p \mid y \in [t]\}$$

the set of $p$-tuples whose components are $L$-step neighbors of $x$ and which contain $y$. By definition of the algorithm we conclude for the transition probabilities of the chain $(X_n)_n$:

$$\begin{aligned} P(X_{n+1} = y \mid X_n = x) &= \sum_{t \in N(x,y)} P\bigl(X_{n+1} = y \mid Y_{(n+1)(L+1)-1} = t\bigr)\, P\bigl(Y_{(n+1)(L+1)-1} = t \mid X_n = x\bigr)\\ &= \sum_{t \in N(x,y)} \sum_{l:\, t_l = y} F(l, x; t_1, \dots, t_p) \prod_{i=1}^{p} P_{\beta(n),L}(x, t_i) =: Q_{\beta(n),L}(x,y), \end{aligned} \qquad (3)$$

where

$$P_{\beta(n),L}(x,y) := \sum_{(z_0, \dots, z_L) \in \{x\} \times X^{L-1} \times \{y\}} \ \prod_{i=0}^{L-1} \pi_{\beta(n)}(z_i, z_{i+1}).$$

Since $Q_{\beta(n),L}$ inherits aperiodicity and irreducibility from $\pi_{\beta(n)}$, $Q_{\beta(n),L}$ has exactly one stationary probability $\mu_{\beta(n)}$, which charges every point (by irreducibility).
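For tiny examples Eq. (3) can be evaluated by brute force: raise $\pi_\beta$ to the $L$th power to obtain $P_{\beta,L}$, enumerate all $p$-tuples $t \in X^p$, and distribute the weight $\prod_i P_{\beta,L}(x, t_i)$ according to the selection function. The sketch below covers best-wins and worst-wins only (an assumption made to keep it short), approximates $\mu_\beta$ by repeatedly squaring $Q_{\beta,L}$ with row renormalization, and is exponential in $p$. All names are hypothetical.

```python
import itertools
import math
from typing import List, Sequence

Matrix = List[List[float]]


def matmul(A: Matrix, B: Matrix) -> Matrix:
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


def sa_kernel(q: Matrix, U: Sequence[float], beta: float) -> Matrix:
    n = len(U)
    P = [[math.exp(-beta * max(U[y] - U[x], 0.0)) * q[x][y] if y != x else 0.0
          for y in range(n)] for x in range(n)]
    for x in range(n):
        P[x][x] = 1.0 - sum(P[x])        # diagonal is still 0.0 at this point
    return P


def weights(strategy: str, t: Sequence[int], U: Sequence[float]) -> List[float]:
    """F(., x; t) for best-wins or worst-wins (neither depends on x)."""
    vals = [U[v] for v in t]
    target = min(vals) if strategy == "best-wins" else max(vals)
    winners = [u == target for u in vals]
    return [w / sum(winners) for w in winners]


def q_kernel(q: Matrix, U: Sequence[float], beta: float, L: int, p: int,
             strategy: str) -> Matrix:
    """Q_{beta,L}(x, y) from Eq. (3) by enumerating all p-tuples of end states."""
    n = len(U)
    P = sa_kernel(q, U, beta)
    PL = [[float(i == j) for j in range(n)] for i in range(n)]
    for _ in range(L):
        PL = matmul(PL, P)               # P_{beta,L} = pi_beta^L
    Q = [[0.0] * n for _ in range(n)]
    for x in range(n):
        for t in itertools.product(range(n), repeat=p):
            w = math.prod(PL[x][ti] for ti in t)
            if w > 0.0:
                for m, F in enumerate(weights(strategy, t, U)):
                    Q[x][t[m]] += w * F
    return Q


def stationary(Q: Matrix, squarings: int = 60) -> List[float]:
    """Approximate mu as a row of Q^(2^squarings); Q is assumed irreducible and
    aperiodic. Rows are renormalized so that rounding errors do not compound."""
    for _ in range(squarings):
        Q = matmul(Q, Q)
        Q = [[v / sum(row) for v in row] for row in Q]
    return Q[0]
```

On a three-state landscape with a global minimum at state 0, the stationary mass of the worst-wins kernel already concentrates on state 0 at moderate $\beta$, which is the behavior analysed in Section 5.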

Instead of analyzing the time-discrete Markov chain $(X_n)_n$ we consider the corresponding time-continuous Markov process $(X_t)_{t \ge 0}$, which is described by the inhomogeneous semigroup $P_{s,t}$, $s \le t$. We let $t \mapsto \beta(t)$, $t \ge 0$, denote a non-negative function and define a generator $\mathcal{L}_{\beta(t),L}$ by

$$\mathcal{L}_{\beta(t),L} := Q_{\beta(t),L} - \mathrm{Id}.$$

The semigroup $P_{s,t}$ of the process $(X_t)_t$ is then given by the solution of the Kolmogorov forward equations

$$\frac{\partial}{\partial t}[P_{s,t} f](x) = \bigl[P_{s,t}[\mathcal{L}_{\beta(t),L} f]\bigr](x) \quad \text{for } s < t \qquad (4)$$

and

$$[P_{s,s} f](x) := f(x)$$

for $f: X \to \mathbb{R}$. In Eq. (4) we have used that any kernel $P_{s,t}$ induces an operator on $L^2(\mu_{\beta(t)})$ by the definition

$$[P_{s,t} f](x) := \sum_{y \in X} P_{s,t}(x,y) f(y) \quad \text{for any } x \in X.$$

Roughly speaking, the process $(X_t)$ waits an exponentially distributed time with mean 1 before the next transition is performed. Given that the transition occurs at time $t$ and the process is in the state $x$, the next state is drawn according to the distribution $Q_{\beta(t),L}(x, \cdot)$.
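The verbal description of $(X_t)$ translates directly into a simulation: hold for an exponential time with mean 1, then jump according to $Q_{\beta(t),L}(x, \cdot)$. The sketch assumes a user-supplied function `kernel(beta)` returning the matrix $Q_{\beta,L}$ as nested lists (for instance a brute-force evaluation of Eq. (3)); `simulate_jump_process` and `kernel` are hypothetical names.

```python
import random
from typing import Callable, List, Sequence, Tuple


def simulate_jump_process(x0: int, t_end: float,
                          beta: Callable[[float], float],
                          kernel: Callable[[float], Sequence[Sequence[float]]],
                          rng: random.Random) -> List[Tuple[float, int]]:
    """Return the jump times and states (t, X_t) of the process on [0, t_end]."""
    t, x = 0.0, x0
    path = [(t, x)]
    while True:
        t += rng.expovariate(1.0)        # exponential holding time with mean 1
        if t > t_end:
            return path
        row = kernel(beta(t))[x]         # distribution Q_{beta(t),L}(x, .)
        x = rng.choices(range(len(row)), weights=row, k=1)[0]
        path.append((t, x))
```

Because the kernel is re-evaluated at each jump time, the cooling schedule enters only through `beta(t)`, exactly as in the inhomogeneous generator $\mathcal{L}_{\beta(t),L}$.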

The process $(X_t)_{t \ge 0}$ will also be called the algorithm. The distribution of the process $(X_t)_t$ is determined by an initial distribution $\nu_0$ on $X$, a cooling schedule $t \mapsto \beta(t)$, an exploration kernel $q$ and a selection strategy $F$. Given an initial distribution $\nu_0$ on $X$, we denote by $\nu_t$ the distribution of $(X_t)_{t \ge 0}$ at time $t \ge 0$ and introduce the probability densities

$$f_t(x) := \frac{\nu_t(x)}{\mu_{\beta(t)}(x)}, \quad x \in X,\ t \ge 0.$$

We say that the algorithm is convergent (to a subset of the set of global minima) if for every initial distribution $\nu_0$ on $X$

$$\lim_{t \to \infty} P_{\nu_0}(X_t \in X_{\min}) = 1.$$

3. Main results

Let

$$Q^*_{\beta(t),L}(x,y) = \frac{Q_{\beta(t),L}(y,x)\, \mu_{\beta(t)}(y)}{\mu_{\beta(t)}(x)} \ \text{ for } x \ne y, \qquad Q^*_{\beta(t),L}(x,x) = 1 - \sum_{y:\, y \ne x} Q^*_{\beta(t),L}(x,y).$$

Moreover, by defining $\mathcal{L}^*_{\beta(t),L} := Q^*_{\beta(t),L} - \mathrm{Id}$, the symmetrized operator is given by

$$\mathcal{L}^s_{\beta(t),L} := \bigl(\mathcal{L}_{\beta(t),L} + \mathcal{L}^*_{\beta(t),L}\bigr)/2.$$

Since $\mathcal{L}^s_{\beta(t),L}$ is self-adjoint with respect to the $L^2(\mu_{\beta(t)})$-product, all its eigenvalues are real. The smallest positive eigenvalue of $-\mathcal{L}^s_{\beta(t),L}$ is denoted by $\lambda(\beta)$ and called the spectral gap. We show that $Q_{\beta(t),L}$ is a transition kernel of type $\mathcal{L}_1$, cf. Definition 5.3 below. This implies that the following two conditions are satisfied: there exist positive constants $K, m, M \in\, ]0, \infty[$ such that

$$\lambda(\beta) \ge K \exp(-\beta m), \qquad (5)$$

$$\Bigl|\frac{d}{d\beta} \log \mu_\beta(x)\Bigr| \le M \quad \text{for all } x \in X, \qquad (6)$$

where $\mu_\beta$ denotes the stationary probability of $Q_{\beta,L}$. Before stating the main result we have to introduce some notation.

For a finite subset $A \subset \mathbb{N}_1$ let $G_{\beta,A} = (X, E_{\beta,A})$ be the oriented graph that is induced by $Q_{\beta,A}$, i.e.

$$(y,z) \in E_{\beta,A} \;:\Longleftrightarrow\; \Bigl(\max_{l \in A} Q_{\beta,l}(y,z) > 0\Bigr) \Longleftrightarrow \Bigl(\max_{l \in A} \pi^{(l)}_\beta(y,z) > 0\Bigr).$$

Observe that the graph $G_{\beta,A}$ does in fact not depend on $\beta$. Therefore we write $G_A = G_{\beta,A}$ for some $\beta$. Often we have $A = \{L\}$.

Let $\gamma$ be a path from $y$ to $z$ of length $l$ in the graph $G_A$. We will consider $\gamma$ as the $(l+1)$-tuple $\gamma = (y = \gamma_0, \dots, \gamma_l = z)$ as well as the set of its edges $\gamma = \{(\gamma_i, \gamma_{i+1}) \mid 0 \le i \le l-1\}$. Denote by $\Gamma^A_{y,z}$ the set of paths $\gamma$ leading from $y$ to $z$ such that $l \in A$, with $l$ denoting the length of $\gamma$.

Introduce the cost of $\gamma \in \Gamma^A_{y,z}$ by

$$s(\gamma) := \sum_{e \in \gamma} \bigl(U(e^+) - U(e^-)\bigr)^+,$$

where $e = (e^-, e^+)$, and $e^-$ resp. $e^+$ denotes the source resp. the destination of the edge $e$. Our result reads as follows.

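Theorem 3.1. Choose

$$\beta(t) = \frac{1}{m} \ln(1 + \kappa t), \quad \text{where } \kappa = 2mK/(3M),\ t \ge 0. \qquad (7)$$

(i) Case first-wins, worst-wins or chance-to-anyone. There exist constants $A > 0$, $B \in\, ]0, M[$ such that

$$P_x(X_t \notin X_{\min}) \le 2A^{1/2}(1 + \kappa t)^{-B/(2m)} + \bigl(\mu_{\beta(0)}(x)^{-1} - 1\bigr)^{1/2} A^{1/2} (1 + \kappa t)^{-(B+2M)/(2m)}. \qquad (8)$$

(ii) Case best-wins. Using $\min^+(A) := \min(A \setminus \{0\})$ for $A \subset \mathbb{R}$, $|A| < \infty$, we set

$$\delta := \min{}^+\{\max(U(x) - U(y), 0) \mid x \in N^1(y),\ y \in X\},$$

$$\Theta := \max_{x \in X \setminus X_{\min}} \min\{s(\gamma_l) \mid l \in \mathbb{N},\ z \in X_{\min},\ \gamma_l \in \Gamma^l_{x,z}\}.$$

Suppose that $p$ satisfies $p\delta > \Theta$. Moreover, let $L$ be chosen such that for all $x \in X$ the following holds: there exist $z \in X_{\min}$ and $\gamma_L \in \Gamma^L_{x,z}$ such that $s(\gamma_L) \le \Theta$. Then there exist constants $A > 0$, $B \in\, ]0, M[$ such that

$$P_x(X_t \notin X_{\min}) \le 2A^{1/2}(1 + \kappa t)^{-B/(2m)} + \bigl(\mu_{\beta(0)}(x)^{-1} - 1\bigr)^{1/2} A^{1/2} (1 + \kappa t)^{-(B+2M)/(2m)}.$$

If the number of parallel chains $p$ or the length $L$ of each Markov chain is not chosen large enough, the algorithm $(X_t)_{t \ge 0}$ may get trapped in local but not global minima, cf. Examples 5.13 and 5.14.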
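The schedule (7) and the constant $\kappa$ are easy to evaluate once $m$, $K$ and $M$ from conditions (5) and (6) are known. The text does not give numerical values for these constants, so the numbers used with this sketch are placeholders for illustration only; the function names are hypothetical.

```python
import math


def kappa(m: float, K: float, M: float) -> float:
    """kappa = 2 m K / (3 M) as in Eq. (7)."""
    return 2.0 * m * K / (3.0 * M)


def beta_schedule(t: float, m: float, kap: float) -> float:
    """Logarithmic cooling schedule beta(t) = ln(1 + kappa t) / m for t >= 0."""
    return math.log1p(kap * t) / m
```

The inverse temperature grows only logarithmically: raising $\beta$ by $1/m$ requires multiplying $1 + \kappa t$ by $e$. The schedule of Fig. 2, $c \log(\kappa t + 1)$ with $c = 0.006$ and $\kappa = 3.0$, has the same form and corresponds to `beta_schedule(t, 1 / 0.006, 3.0)`.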

4. Finite-time simulations

In the following we consider the well-known traveling salesman problem. The state space $X$ is the set of cycles of length 100 in the symmetric group of degree 100, i.e. the set of closed tours through 100 cities. We use the Kro100a.tsp 100-city traveling salesman problem proposed by Krolak et al. The neighborhood structure on $X$ is defined by 2-changes: two non-successive edges of the current tour are chosen and removed from the tour, and then two edges are added such that a different new tour is created. For more details see Aarts and Korst (1991). The results are shown as mean curves: we repeated each simulation $k$ times ($k$ the repetition number), resulting in $k$ curves. Then we computed the arithmetic mean cost at each time step and thus obtained an average curve (Fig. 2).
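A 2-change can be implemented on a tour stored as a list of city indices: removing the edges that leave positions $i$ and $j$ and reconnecting the two resulting paths amounts to reversing the segment between them. This is one common way to realize 2-changes and is offered as an illustrative sketch; the function names and the coordinate representation are assumptions, not details of the experiments reported here.

```python
import math
import random
from typing import List, Sequence, Tuple


def two_change(tour: List[int], i: int, j: int) -> List[int]:
    """Remove edges (tour[i], tour[i+1]) and (tour[j], tour[(j+1) % n]) and
    reconnect by reversing tour[i+1 .. j]; the two edges must be non-successive."""
    n = len(tour)
    if not (0 <= i < j < n) or j - i < 2 or (i == 0 and j == n - 1):
        raise ValueError("edges must be distinct and non-successive")
    return tour[:i + 1] + tour[i + 1:j + 1][::-1] + tour[j + 1:]


def random_two_change(tour: List[int], rng: random.Random) -> List[int]:
    """Apply a 2-change to a uniformly drawn pair of non-successive edges (n >= 4)."""
    n = len(tour)
    while True:
        i, j = sorted(rng.sample(range(n), 2))
        if j - i >= 2 and not (i == 0 and j == n - 1):
            return two_change(tour, i, j)


def tour_length(tour: Sequence[int], coords: Sequence[Tuple[float, float]]) -> float:
    """Euclidean length of the closed tour, used as the cost U of a state."""
    n = len(tour)
    return sum(math.dist(coords[tour[k]], coords[tour[(k + 1) % n]]) for k in range(n))
```

Reversing the segment replaces the edges $(t_i, t_{i+1})$ and $(t_j, t_{j+1})$ by $(t_i, t_j)$ and $(t_{i+1}, t_{j+1})$, so a proposal changes only two edges and its cost difference can be computed in constant time.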


Fig. 2. Parameters: number of processors $p = 2$, length of the independent Markov chains $L = 5$. Logarithmic cooling schedule $\beta(t) = c \log(\kappa t + 1)$ with $c = 0.006$ and $\kappa = 3.0$. The total number of iterations is 40 million and the number of repetitions is 20.

5. Proofs

We use a general convergence result due to Deuschel and Mazza (1994). More precisely, we have:

Theorem 5.1. Assume Eqs. (5) and (6). Choose $\beta(t)$ as in Eq. (7). Let $A > 0$ and $B \in\, ]0, M[$ be such that for some set $O^* \subset X$ we have

$$\mu_{\beta(t)}(O^*) \le A \exp(-\beta(t) B). \qquad (9)$$

Then for each $x \in X$ we have

$$P_x(X_t \in O^*) \le 2A^{1/2}(1 + \kappa t)^{-B/(2m)} + \bigl(\mu_{\beta(0)}(x)^{-1} - 1\bigr)^{1/2} A^{1/2} (1 + \kappa t)^{-(B+2M)/(2m)}. \qquad (10)$$

Proof. See Deuschel and Mazza (1994, Corollary 2.2.8).

Our aim is to show that $Q_{\beta,L}$ is contained in the class $\mathcal{L}_1$ of exponentially vanishing coefficients. This implies that conditions (5) and (6) are fulfilled.

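Definition 5.2 (Class $\mathcal{C}_1$). A differentiable function $\varphi: \mathbb{R}^+ \to \mathbb{R}^+$ belongs to $\mathcal{C}_1$ if $\varphi$ is bounded from above and from below by strictly positive constants and if

$$\sup_{\beta \ge 0} \Bigl|\frac{d}{d\beta} \log \varphi(\beta)\Bigr| \le K$$

for some $K < \infty$.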


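Definition 5.3 (Class $\mathcal{L}_1$). The set $\mathcal{L}_1$ is given by the irreducible transition functions $\pi_\beta$, $\beta \ge 0$, such that

$$\pi_\beta(x,y) = \exp(-\beta J(x,y))\, \varphi_{x,y}(\beta), \quad x \ne y, \qquad (11)$$

where $0 \le J(x,y) \le \infty$ and $\varphi_{x,y} \in \mathcal{C}_1$. In Deuschel and Mazza (1994) it is shown that transition functions $\pi_\beta \in \mathcal{L}_1$ satisfy condition (5) as well as condition (6).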

For a finite subset $A \subset \mathbb{N}_1$ we have already introduced the set of paths $\Gamma^A_{y,z}$ leading from $y \in X$ to $z \in X$. Let

$$\Gamma^{A,*}_{y,z} := \bigl\{\gamma \in \Gamma^A_{y,z} \bigm| s(\gamma) = \min\{s(\gamma') \mid \gamma' \in \Gamma^A_{y,z}\}\bigr\} \qquad (12)$$

be the set of $s$-minimizing paths. Moreover, denote by $G^A\{x\}$ the set of $\{x\}$-graphs that use edges of the graph $G_A$ only; cf. Freidlin and Wentzell (1984, p. 177) for a definition of $\{x\}$-graphs. Roughly speaking, $g$ is an $\{x\}$-graph if $g$ is a directed graph with exactly one outgoing edge $(y, y') \in g$ for each $y \ne x$ and if there is a path leading from $y$ to $x$ for each $y \ne x$. If additionally each edge of $g$ is also an edge of the graph $G_A$, we say that $g \in G^A\{x\}$. The elements of $G^A\{x\}$ will also be called $\{x\}$-$A$-graphs.

Choose $\gamma_{y,z} \in \Gamma^{A,*}_{y,z}$ for $y, z \in X$ with $\Gamma^A_{y,z} \ne \emptyset$. The cost of an $\{x\}$-$A$-graph is given by

$$h_A(g) := \sum_{e \in g} s(\gamma_e). \qquad (13)$$

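5.1. The kernel $Q_{\beta,L}$ is in $\mathcal{L}_1$

For a path $\gamma = (x = \gamma_0, \gamma_1, \dots, \gamma_L = y) \in \Gamma^L_{x,y}$ we introduce

$$J_1 = J_1(\gamma) := \{i \mid 0 \le i < L,\ \gamma_i \ne \gamma_{i+1}\}, \qquad J_2 = J_2(\gamma) := \{i \mid 0 \le i < L,\ \gamma_i = \gamma_{i+1}\}.$$

Moreover, we define

$$c_\beta(\gamma) := \prod_{i \in J_1} q(\gamma_i, \gamma_{i+1}) \prod_{i \in J_2} \pi_\beta(\gamma_i, \gamma_i)$$

and obtain for $P_{\beta,L}(x,y)$

$$P_{\beta,L}(x,y) = \sum_{\gamma \in \Gamma^L_{x,y}} \prod_{i=0}^{L-1} \pi_\beta(\gamma_i, \gamma_{i+1}) = \sum_{\gamma \in \Gamma^L_{x,y}} \exp(-\beta s(\gamma))\, c_\beta(\gamma).$$

For $x, y \in X$ choose a path $\gamma_{x,y} \in \Gamma^{L,*}_{x,y}$. Note that $\beta \mapsto c_\beta(\gamma)$ is in $\mathcal{C}_1$ for any fixed path $\gamma$. Thus

$$\varphi_{(x,y),1}(\beta) := \sum_{\gamma \in \Gamma^L_{x,y}} \exp\bigl(-\beta(s(\gamma) - s(\gamma_{x,y}))\bigr)\, c_\beta(\gamma) \in \mathcal{C}_1$$

and we obtain

$$P_{\beta,L}(x,y) = \sum_{\gamma \in \Gamma^L_{x,y}} \exp(-\beta s(\gamma))\, c_\beta(\gamma) = \varphi_{(x,y),1}(\beta) \exp(-\beta s(\gamma_{x,y})).$$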
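The decomposition $P_{\beta,L}(x,y) = \varphi_{(x,y),1}(\beta) \exp(-\beta s(\gamma_{x,y}))$ with bounded $\varphi_{(x,y),1}$ implies that $-\beta^{-1} \log P_{\beta,L}(x,y)$ approaches the minimal path cost as $\beta \to \infty$. The sketch below checks this numerically on a toy landscape. It assumes $q(x,x) > 0$, so that staying put is an admissible step, and computes the minimal cost over paths of exactly $L$ steps by dynamic programming; all names are hypothetical.

```python
import math
from typing import List, Sequence

INF = float("inf")
Matrix = List[List[float]]


def sa_kernel(q: Matrix, U: Sequence[float], beta: float) -> Matrix:
    n = len(U)
    P = [[math.exp(-beta * max(U[y] - U[x], 0.0)) * q[x][y] if y != x else 0.0
          for y in range(n)] for x in range(n)]
    for x in range(n):
        P[x][x] = 1.0 - sum(P[x])        # diagonal is still 0.0 at this point
    return P


def matpow(P: Matrix, L: int) -> Matrix:
    n = len(P)
    R = [[float(i == j) for j in range(n)] for i in range(n)]
    for _ in range(L):
        R = [[sum(R[i][k] * P[k][j] for k in range(n)) for j in range(n)] for i in range(n)]
    return R


def min_path_costs(q: Matrix, U: Sequence[float], L: int) -> Matrix:
    """s_L[x][y]: minimal s(gamma) over paths of exactly L steps along edges with
    q > 0 (self-loops included); INF if y cannot be reached in L steps."""
    n = len(U)
    s = [[0.0 if x == y else INF for y in range(n)] for x in range(n)]
    for _ in range(L):
        s = [[min((s[x][v] + max(U[y] - U[v], 0.0) for v in range(n) if q[v][y] > 0),
                  default=INF) for y in range(n)] for x in range(n)]
    return s
```

For $\beta = 50$ the estimate differs from the path cost only by $\beta^{-1}\log \varphi_{(x,y),1}(\beta)$, which is small because $\varphi_{(x,y),1}$ is bounded away from $0$ and $\infty$.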


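Using the expression for $P_{\beta,L}$ and defining $\varphi_{(x,t),2}(\beta) := \prod_{i=1}^{p} \varphi_{(x,t_i),1}(\beta)$ we obtain

$$Q_{\beta,L}(x,y) = \sum_{t \in N(x,y)} \varphi_{(x,t),2}(\beta) \exp\Bigl(-\beta \sum_{i=1}^{p} s(\gamma_{x,t_i})\Bigr) \sum_{l:\, t_l = y} F(l, x; t). \qquad (14)$$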

For $x, y \in X$ we introduce

$$K_L(x,y) := \Bigl\{t \in N_L(x,y) \Bigm| \sum_{l:\, t_l = y} F(l, x; t) > 0\Bigr\}, \qquad k_L(x,y) := \min\Bigl\{\sum_{i=1}^{p} s(\gamma_{x,t_i}) \Bigm| t \in K_L(x,y)\Bigr\}.$$

Using that

$$\varphi_{(x,y),3}(\beta) := \sum_{t \in K_L(x,y)} \varphi_{(x,t),2}(\beta) \exp\Bigl(-\beta\Bigl(\sum_{i=1}^{p} s(\gamma_{x,t_i}) - k_L(x,y)\Bigr)\Bigr) \sum_{l:\, t_l = y} F(l, x; t) \in \mathcal{C}_1,$$

we conclude

$$Q_{\beta,L}(x,y) = \sum_{t \in K_L(x,y)} \varphi_{(x,t),2}(\beta) \exp\Bigl(-\beta \sum_{i=1}^{p} s(\gamma_{x,t_i})\Bigr) \sum_{l:\, t_l = y} F(l, x; t) = \varphi_{(x,y),3}(\beta) \exp(-\beta k_L(x,y)). \qquad (15)$$

The important point is that $\varphi_{(x,y),3}(\beta)$ is a continuously differentiable function of $\beta$ which is bounded from below and from above by strictly positive and finite constants, i.e. $\varphi_{(x,y),3}(\cdot) \in \mathcal{C}_1$. Thus we have shown that the transition kernel $Q_{\beta,L}$ is of Freidlin–Wentzell type $\mathcal{L}_1$, and consequently conditions (5) and (6) are fulfilled.

The remaining task for proving convergence is to show that Inequality (9) holds with $O^* = X^c_{\min}$ for appropriate selection strategies.

In the sequel we show that the stationary probability $\mu_\beta$ corresponding to $Q_{\beta,L}$ is concentrated on a certain set $W^{*,L}$ for large $\beta$. The set $W^{*,L}$ is given by those $x \in X$ that minimize the function

$$x \mapsto \min\Bigl\{\sum_{(y,z) \in g} k_L(y,z) \Bigm| g \in G^L\{x\}\Bigr\}.$$

Using the notation of Freidlin and Wentzell (1984, Paragraph 6.3, p. 177), we define for $g \in G^L\{x\}$, $x \in X$,

$$\Pi_\beta(g) := \prod_{(y,z) \in g} Q_{\beta,L}(y,z), \qquad Q^\beta_x := \sum_{g \in G^L\{x\}} \Pi_\beta(g).$$


Applying the matrix-tree theorem of Bott and Mayberry (1954) yields for the stationary probability $\mu_\beta$ of the Markov chain $Q_{\beta,L}$

$$\mu_\beta(x) = \frac{Q^\beta_x}{\sum_{y \in X} Q^\beta_y} \quad \text{for } x \in X.$$
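The matrix-tree formula can be checked by brute force on a small stochastic matrix: enumerate all $\{x\}$-graphs (every $y \ne x$ has exactly one outgoing edge and every path ends in $x$), sum the products of edge weights, and normalize. The sketch is exponential in $|X|$ and only illustrates the formula; the function names are hypothetical.

```python
import itertools
import math
from typing import Dict, Iterator, List, Sequence


def x_graphs(n: int, x: int) -> Iterator[Dict[int, int]]:
    """All {x}-graphs on {0, ..., n-1}, encoded as maps y -> successor of y."""
    others = [y for y in range(n) if y != x]
    for targets in itertools.product(range(n), repeat=len(others)):
        g = dict(zip(others, targets))
        if any(y == g[y] for y in others):
            continue                      # self-loops are not allowed
        if all(_leads_to(g, y, x) for y in others):
            yield g


def _leads_to(g: Dict[int, int], y: int, x: int) -> bool:
    seen = set()
    while y != x:                         # follow the unique outgoing edges
        if y in seen:
            return False                  # cycle that never reaches x
        seen.add(y)
        y = g[y]
    return True


def tree_stationary(Q: Sequence[Sequence[float]]) -> List[float]:
    """mu(x) = Q_x / sum_y Q_y with Q_x = sum over {x}-graphs g of prod_{(y,z) in g} Q(y,z)."""
    n = len(Q)
    weights = [sum(math.prod(Q[y][z] for y, z in g.items()) for g in x_graphs(n, x))
               for x in range(n)]
    total = sum(weights)
    return [w / total for w in weights]
```

For three states each $x$ has exactly three $\{x\}$-graphs, and the resulting vector satisfies $\mu Q = \mu$ up to rounding.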

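Define $K_{\beta,L}(g) := \prod_{(y,z) \in g} \varphi_{(y,z),3}(\beta) \in \mathcal{C}_1$ and use Eq. (15) in order to derive

$$\Pi_\beta(g) = K_{\beta,L}(g) \exp\Bigl(-\beta \sum_{(y,z) \in g} k_L(y,z)\Bigr).$$

Therefore

$$Q^\beta_x = \sum_{g \in G^L\{x\}} K_{\beta,L}(g) \exp\Bigl(-\beta \sum_{(y,z) \in g} k_L(y,z)\Bigr).$$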

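Using the notation

$$\tilde h(g) = \tilde h_L(g) := \sum_{(y,z) \in g} k_L(y,z), \qquad (16)$$

$$W_{x,L} := \min\{\tilde h(g) \mid g \in G^L\{x\}\}, \qquad W_{\min} := \min\{W_{x,L} \mid x \in X\}, \qquad W^{*,L} := \{x \mid W_{x,L} = W_{\min}\}, \qquad (17)$$

we obtain

$$Q^\beta_x = \sum_{g \in G^L\{x\}} K_{\beta,L}(g) \exp(-\beta \tilde h(g)) = \tilde K_{\beta,L}(x) \exp(-\beta W_{x,L}),$$

where

$$\tilde K_{\beta,L}(x) = \sum_{g \in G^L\{x\}} K_{\beta,L}(g) \exp\bigl(-\beta(\tilde h(g) - W_{x,L})\bigr) \in \mathcal{C}_1.$$

Moreover,

$$\mu_\beta(x) = \frac{Q^\beta_x}{\sum_{y \in X} Q^\beta_y} = \frac{\tilde K_{\beta,L}(x)}{\sum_{y \in X} \tilde K_{\beta,L}(y) \exp\bigl(-\beta(W_{y,L} - W_{\min})\bigr)} \exp\bigl(-\beta(W_{x,L} - W_{\min})\bigr). \qquad (18)$$

Finally, since the function $\varphi_x(\cdot)$ defined by

$$\varphi_x(\beta) = \frac{\tilde K_{\beta,L}(x)}{\sum_{y \in X} \tilde K_{\beta,L}(y) \exp\bigl(-\beta(W_{y,L} - W_{\min})\bigr)}$$

is in $\mathcal{C}_1$, we obtain that

$$\lim_{\beta \to \infty} \mu_\beta(x) = 0 \quad \text{for } x \notin W^{*,L}.$$

The remaining task is to show that $W^{*,L} \subset X_{\min}$ for appropriate selection functions.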
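On small examples the quantities $k_L$, $W_{x,L}$ and $W^{*,L}$ can be computed exhaustively, which is a convenient way to test the propositions below. The sketch assumes $q(x,x) > 0$ (so that paths may stay put), takes the edges of $G_L$ to be the pairs reachable in exactly $L$ steps, and handles only the strategies that ignore the previous state (best-wins, worst-wins, and uniform chance-to-anyone). Everything is brute force and all names are hypothetical.

```python
import itertools

INF = float("inf")


def min_path_costs(q, U, L):
    """s[y][z]: minimal cost over paths of exactly L steps along edges with q > 0."""
    n = len(U)
    s = [[0 if y == z else INF for z in range(n)] for y in range(n)]
    for _ in range(L):
        s = [[min((s[y][v] + max(U[z] - U[v], 0) for v in range(n) if q[v][z] > 0),
                  default=INF) for z in range(n)] for y in range(n)]
    return s


def k_L(y, z, s, U, p, strategy):
    """Cheapest p-tuple of L-step end states from y in which z can be selected."""
    reach = [w for w in range(len(U)) if s[y][w] < INF]
    best = INF
    for t in itertools.product(reach, repeat=p):
        vals = [U[w] for w in t]
        if strategy == "chance-to-anyone":
            ok = [True] * p
        else:
            target = min(vals) if strategy == "best-wins" else max(vals)
            ok = [v == target for v in vals]
        if any(w == z and flag for w, flag in zip(t, ok)):
            best = min(best, sum(s[y][w] for w in t))
    return best


def x_graphs(n, x, edges):
    others = [y for y in range(n) if y != x]
    choices = [[z for z in range(n) if (y, z) in edges] for y in others]
    for targets in itertools.product(*choices):
        g = dict(zip(others, targets))
        if all(_leads_to(g, y, x) for y in others):
            yield g


def _leads_to(g, y, x):
    seen = set()
    while y != x:
        if y in seen:
            return False
        seen.add(y)
        y = g[y]
    return True


def W_star(q, U, L, p, strategy):
    """Return (W^{*,L}, [W_{x,L} for x in X]) by exhaustive search over {x}-L-graphs."""
    n = len(U)
    s = min_path_costs(q, U, L)
    edges = {(y, z) for y in range(n) for z in range(n) if y != z and s[y][z] < INF}
    k = {e: k_L(e[0], e[1], s, U, p, strategy) for e in edges}
    W = [min((sum(k[(y, z)] for y, z in g.items()) for g in x_graphs(n, x, edges)),
             default=INF) for x in range(n)]
    return {x for x in range(n) if W[x] == min(W)}, W
```

On the three-state landscape $U = (0, 2, 1)$ with a lazy nearest-neighbor walk, every strategy tested here puts $W^{*,L}$ on the global minimum $\{0\}$.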


5.2. Preparations for the proof of $W^{*,L} \subset X_{\min}$

In this section we will see that $X_{\min} = M^{*,L}$, where $M^{*,L}$ is the set of minimizers of

$$x \mapsto \min\{h_L(g) \mid g \in G^L\{x\}\}$$

and $h_L(g)$ is defined in Eq. (13). The concentration of $\mu_\beta$ on $X_{\min}$ will be shown by establishing the inclusion $W^{*,L} \subset M^{*,L}$ for appropriate selection functions. In the sequel we need the following definitions:

$$M_{x,L} := \min\{h_L(g) \mid g \in G^L\{x\}\}, \qquad M_L := \min\{M_{x,L} \mid x \in X\}, \qquad M^{*,L} := \{x \in X \mid M_{x,L} = M_L\}.$$

Let $\gamma^{-1} := \{(e^+, e^-) \mid e \in \gamma\}$ denote the reverse path. Note that $\gamma^{-1}$ is a path by assumption (1). The following lemma gives a formula for the cost of the reverse path. This fact is used for deriving that $M^{*,L} = X_{\min}$.

Lemma 5.4 (Reverse path formula). Let $y \in X$ and $z \in N^L(y)$. For any path $\gamma \in \Gamma^L_{y,z}$ from $y$ to $z$ we have

$$s(\gamma) = s(\gamma^{-1}) + (U(z) - U(y)). \qquad (19)$$

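Proof. Using $(-a)^+ = a^+ - a$ for $a \in \mathbb{R}$, we conclude

$$\begin{aligned} s(\gamma^{-1}) &= \sum_{e \in \gamma} \bigl(U(e^-) - U(e^+)\bigr)^+ = \sum_{e \in \gamma} \Bigl[\bigl(U(e^+) - U(e^-)\bigr)^+ - \bigl(U(e^+) - U(e^-)\bigr)\Bigr]\\ &= s(\gamma) - \sum_{e \in \gamma} \bigl(U(e^+) - U(e^-)\bigr) = s(\gamma) - (U(z) - U(y)), \end{aligned}$$

where the last sum telescopes along the path from $y$ to $z$.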
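The reverse path formula is easy to check mechanically: the uphill parts of $\gamma$ minus the uphill parts of $\gamma^{-1}$ always add up to the net energy change. A minimal sketch, with paths given as sequences of states and hypothetical function names:

```python
from typing import Sequence


def path_cost(path: Sequence[int], U: Sequence[float]) -> float:
    """s(gamma): sum of the energy increases (U(e+) - U(e-))^+ along the path."""
    return sum(max(U[b] - U[a], 0) for a, b in zip(path, path[1:]))


def check_reverse_path_formula(path: Sequence[int], U: Sequence[float]) -> bool:
    """Eq. (19): s(gamma) = s(gamma^{-1}) + (U(z) - U(y)) for gamma from y to z."""
    y, z = path[0], path[-1]
    return path_cost(path, U) == path_cost(path[::-1], U) + (U[z] - U[y])
```

Integer energies are used in the checks so that the equality can be tested exactly.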

The formula for reverse paths $\gamma \in \Gamma^L_{x,y}$ yields a formula for reverse $\{x\}$-$L$-graphs.

Lemma 5.5 (Reverse graph formula). Let $x, y \in X$ and $g \in G^L\{x\}$. Moreover, let $\rho \subset g$ be the uniquely determined path in $g$ from $y$ to $x$. Then for the $\{y\}$-$L$-graph $g' := (g \setminus \rho) \cup \rho^{-1}$

$$h_L(g') = h_L(g) + (U(y) - U(x)). \qquad (20)$$


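Proof. First we show that

$$s(\gamma^{-1}_{y,z}) = s(\gamma_{z,y}) \quad \text{for } y, z \in X \text{ with } (y,z) \in g \in G^L\{x\}. \qquad (21)$$

Proof of Eq. (21). Since $\gamma^{-1}_{y,z} \in \Gamma^L_{z,y}$, it follows that $s(\gamma^{-1}_{y,z}) \ge s(\gamma_{z,y})$. Applying Eq. (19) to $\gamma_{z,y}$ yields

$$s(\gamma_{z,y}) = \underbrace{s(\gamma^{-1}_{z,y})}_{\ge\, s(\gamma_{y,z})} + (U(y) - U(z)) \ge s(\gamma_{y,z}) + (U(y) - U(z)) = s(\gamma^{-1}_{y,z}),$$

where the last equality is Eq. (19) applied to $\gamma_{y,z}$.

For $g$ and $g'$ as in the assertion we conclude

$$\begin{aligned} h_L(g') &= h_L(g) - \sum_{e \in \rho} \bigl(s(\gamma_e) - s(\gamma^{-1}_e)\bigr)\\ &= h_L(g) + \sum_{e \in \rho} \bigl(U(e^-) - U(e^+)\bigr)\\ &= h_L(g) + (U(y) - U(x)), \end{aligned} \qquad (22)$$

where Eq. (21) has been used in the first line of Eq. (22) and Eq. (19) in the second.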

Let

$$G^{L,*}\{x\} := \{g \in G^L\{x\} \mid h_L(g) = M_{x,L}\}$$

be the set of $\{x\}$-$L$-graphs minimizing the cost $h_L$. Next, we obtain a satisfactory description of the set $M^{*,L}$.

Proposition 5.6. For all $L \ge 1$ we have $M^{*,L} = X_{\min}$.

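Proof. First we show that $M^{*,L} \subset X_{\min}$. Let $g \in G^{L,*}\{x\}$, $y \in X_{\min}$, $x \ne y$, and let $\rho \subset g$ be the path in $g$ leading from $y$ to $x$. By applying the reverse graph formula (20) to $g' := (g \setminus \rho) \cup \rho^{-1}$, we obtain for $x \notin X_{\min}$

$$M_{y,L} \le h_L(g') = h_L(g) + \underbrace{(U(y) - U(x))}_{<\,0} < h_L(g) = M_{x,L}.$$

Hence $M_L \le M_{y,L} < M_{x,L}$ and $x \notin M^{*,L}$. For $x \in X_{\min}$ we obtain

$$M_{y,L} \le h_L(g') = h_L(g) = M_{x,L}.$$

By symmetry we also have $M_{y,L} \ge M_{x,L}$, hence $M_{y,L} = M_{x,L}$ if $x, y \in X_{\min}$. Thus $M_{x,L}$ takes one common value on $X_{\min}$, and every $x \notin X_{\min}$ has a strictly larger value, so $M^{*,L} = X_{\min}$.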

5.3. Convergence for chance-to-anyone

Proposition 5.7. Let $F$ be the chance-to-anyone selection strategy. Then we have $W^{*,L} = X_{\min}$, i.e. Inequality (10) holds with $O^* = X^c_{\min}$.


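Proof. Let $g \in G^L\{x\}$. We show that $\tilde h_L(g) = h_L(g)$. Let $(y,z) \in g$. Every $t \in K_L(y,z)$ contains $z$, so by definition of $k_L(y,z)$ it holds that $k_L(y,z) \ge s(\gamma_{y,z})$. Since $F$ is a chance-to-anyone strategy, every tuple in $N(y,z)$ is selected with positive probability, i.e. $K_L(y,z) = N(y,z)$; in particular the tuple $(z, y, \dots, y)$, whose cost is $s(\gamma_{y,z})$, belongs to $K_L(y,z)$. Therefore $k_L(y,z) = s(\gamma_{y,z})$ and $\tilde h_L(g) = h_L(g)$. Hence $W^{*,L} = M^{*,L} = X_{\min}$, where the last equality holds by Proposition 5.6.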

5.4. Convergence for worst-wins

We need the basic inequality $M_{x,1} \le M_{x,L}$, which is in fact an equality.

Lemma 5.8. Let $x \in X$. Then for $L \ge 1$

$$M_{x,1} = \min\{h_1(g) \mid g \in G^1\{x\}\} \le \min\{h_L(g) \mid g \in G^L\{x\}\} = M_{x,L}. \qquad (23)$$

Indeed, equality holds in Eq. (23).

Proof. Let $g \in G^L\{x\} \subset G^{\{1,L\}}\{x\}$. By definition of $h_{\{1,L\}}$ we have $h_{\{1,L\}}(g) \le h_L(g)$. We show that there exists $\hat g \in G^1\{x\}$ such that $h_1(\hat g) \le h_{\{1,L\}}(g)$. This is done by induction over $|K(g)|$, where

$$K(g) := \{(y,z) \in g \mid \pi_{\beta(n)}(y,z) = 0\} \quad \text{for } g \in G^{\{1,L\}}\{x\}.$$

For $|K(g)| = 0$ the graph $g$ is already an element of $G^1\{x\}$, so we can choose $\hat g := g$. (Note that paths of length 1 have minimal costs, so that $h_1(g) \le h_{\{1,L\}}(g)$.) Let $|K(g)| = n + 1$. By the induction hypothesis, for every $\{x\}$-$\{1,L\}$-graph $g'$ with $|K(g')| \le n$ there exists an $\{x\}$-1-graph $\hat g$ with $h_1(\hat g) \le h_{\{1,L\}}(g')$. Let $(y,z) \in K(g)$ and set $\rho := \gamma_{y,z} \in \Gamma^L_{y,z}$. For any $i \in \{0, \dots, L\}$ there exists for $\rho_i$ exactly one $\rho'_i$ such that $(\rho_i, \rho'_i) \in g$. Define the following $\{x\}$-$\{1,L\}$-graph:

$$g' := \Bigl(g \setminus \bigcup_{i=0}^{L-1} \{(\rho_i, \rho'_i)\}\Bigr) \cup \bigcup_{\substack{i=0\\ \rho_i \ne \rho_{i+1}}}^{L-1} \{(\rho_i, \rho_{i+1})\}.$$

Since $\pi_{\beta(n)}(\rho_i, \rho_{i+1}) > 0$, we can choose the path $\gamma_{\rho_i,\rho_{i+1}} := (\rho_i, \rho_{i+1})$ of length 1 as minimizing path from $\rho_i$ to $\rho_{i+1}$. Using $s(\gamma_{\rho_i,\rho_{i+1}}) = (U(\rho_{i+1}) - U(\rho_i))^+$, we derive for the cost of $g'$

$$\begin{aligned} h_{\{1,L\}}(g') &= h_{\{1,L\}}(g) - \sum_{i=0}^{L-1} s(\gamma_{\rho_i,\rho'_i}) + \underbrace{\sum_{\substack{i=0\\ \rho_i \ne \rho_{i+1}}}^{L-1} s(\gamma_{\rho_i,\rho_{i+1}})}_{=\, s(\gamma_{y,z})}\\ &= h_{\{1,L\}}(g) - s(\gamma_{y,z}) - \underbrace{\sum_{i=1}^{L-1} s(\gamma_{\rho_i,\rho'_i})}_{\ge\, 0} + s(\gamma_{y,z}) \le h_{\{1,L\}}(g). \end{aligned}$$

Note that $K(g')$ has at most $n$ elements. By the induction hypothesis there exists $\hat g \in G^1\{x\}$ with $h_1(\hat g) \le h_{\{1,L\}}(g')$. Hence $h_1(\hat g) \le h_{\{1,L\}}(g') \le h_{\{1,L\}}(g) \le h_L(g)$. This yields $M_{x,1} \le M_{x,L}$.

For a finite subset $A \subset \mathbb{N}_1$ we define

$$\tilde h_A(g) := \sum_{(y,z) \in g} \min_{a \in A} k_a(y,z), \quad g \in G^A\{x\}.$$

If $K_a(y,z) = \emptyset$ we let $k_a(y,z) = \infty$. Note that this definition of $\tilde h_A(g)$ coincides with Eq. (16) for $A$ consisting of one element.

Lemma 5.9. Using the worst-wins strategy, any $g \in G^{\{1,L\}}\{x\}$ ($x \in X$) is contained in $G^L\{x\}$. Moreover, the equality $\tilde h_L(g) = \tilde h_{\{1,L\}}(g)$ holds.

Proof. Obviously $\tilde h_{\{1,L\}}(g) \le \tilde h_L(g)$. Each path $\gamma = (\gamma_0, \gamma_1)$ of length 1 can be replaced by a path of length $L$ with the same cost, e.g. take $\tilde\gamma = (\gamma_0, \dots, \gamma_0, \gamma_1)$ (note that $\pi_\beta(\gamma_0, \gamma_0) > 0$). Hence $k_1(\gamma_0, \gamma_1) = k_L(\gamma_0, \gamma_1)$, which yields the assertion.

For $L = 1$ we have the following identity.

Lemma 5.10. Let $g \in G^1\{x\}$ for $x \in X$. Then $\tilde h_1(g) = h_1(g)$.

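Proof. Let $(y,z) \in g \in G^1\{x\}$. Since $g$ is a 1-graph, we have $\gamma_{y,z} = (y,z)$ and $s(\gamma_{y,z}) = (U(z) - U(y))^+$. Furthermore, $k_1(y,z) = (U(z) - U(y))^+$: every $t \in K_1(y,z)$ contains $z$, so $k_1(y,z) \ge s(\gamma_{y,z})$; if $U(z) \ge U(y)$, the $p$-tuple $(z, y, \dots, y)$ is contained in $K_1(y,z)$, and if $U(z) < U(y)$, the $p$-tuple $(z, \dots, z)$ is contained in $K_1(y,z)$ and has cost $0$. Therefore

$$\tilde h_1(g) = \sum_{(y,z) \in g} k_1(y,z) = \sum_{(y,z) \in g} (U(z) - U(y))^+ = h_1(g).$$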

Combining the above results, we get

Proposition 5.11. Let $F$ be the worst-wins selection strategy. Then we have $W^{*,L} = X_{\min}$, which proves that Inequality (10) holds with $O^* = X^c_{\min}$, yielding Theorem 3.1(i).

Proof. We have the following inequality:

$$\begin{aligned} \min\{\tilde h_L(g) \mid g \in G^L\{x\}\} &= \min\{\tilde h_{\{1,L\}}(g) \mid g \in G^{\{1,L\}}\{x\}\} \qquad (24)\\ &\le \min\{\tilde h_1(g) \mid g \in G^1\{x\}\}\\ &= \min\{h_1(g) \mid g \in G^1\{x\}\} \qquad (25)\\ &\le \min\{h_L(g) \mid g \in G^L\{x\}\}. \qquad (26) \end{aligned}$$

Here we have used Lemma 5.9 in Eq. (24), Lemma 5.10 in Eq. (25) and Lemma 5.8 in Inequality (26). By using $\tilde h_L(g) \ge h_L(g)$ for any $g \in G^L\{x\}$ we conclude

$$\min\{\tilde h_L(g) \mid g \in G^L\{x\}\} = \min\{h_L(g) \mid g \in G^L\{x\}\}.$$

This implies $W_{x,L} = M_{x,L}$ and $W^{*,L} = M^{*,L} = X_{\min}$ by Proposition 5.6.


Fig. 3. Energy landscape (X, U).

5.5. In general no convergence for best-wins

For $L = 1$ the algorithm still converges:

Proposition 5.12. Let $g \in G^1\{x\}$ for $x \in X$. Then $\tilde h_1(g) = p\, h_1(g)$, implying $W^{*,1} = M^{*,1} = X_{\min}$.

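Proof. Let $(y,z) \in g \in G^1\{x\}$. For $U(y) < U(z)$ we have $k_1(y,z) = p\,(U(z) - U(y))^+$, since $z$ can only be the best state of a tuple all of whose components lie at least as high as $z$; for $U(y) \ge U(z)$ we get $k_1(y,z) = p\,(U(z) - U(y))^+ = 0$. Therefore

$$\tilde h_1(g) = \sum_{(y,z) \in g} k_1(y,z) = p \sum_{(y,z) \in g} (U(z) - U(y))^+ = p\, h_1(g).$$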

For $L \ge 2$ the inclusion $W^{*,L} \subset X_{\min}$ is in general false:

Example 5.13. For the state space $X := \{1, \dots, L+2\}$ consider the energy landscape $(X, U)$ shown in Fig. 3. The exploration kernel $q$ is given by the nearest-neighbor random walk with uniform probability, i.e.

$$q(i, i+1) = q(i, i-1) = \tfrac12 \quad \text{for } i \in \{2, \dots, L+1\}, \qquad q(1,2) = q(L+2, L+1) = 1.$$

If a moving particle is in the local minimum $L+2$, then after $L$ transitions it can only be in states that are worse, unless it has returned to $L+2$. Therefore leaving the state $L+2$ is very expensive. We have $W_{L+2,L} = 2p + 4$ and $W_{1,L} = 5p$. Thus for all $p \ge 2$

$$\lim_{\beta \to \infty} \mu_\beta(X_{\min}) = \lim_{\beta \to \infty} \mu_\beta(1) = 0.$$
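Reading the two values as $W_{L+2,L} = 2p + 4$ and $W_{1,L} = 5p$, the threshold on $p$ is a one-line computation, and by Eq. (18) the gap $W_{1,L} - W_{\min} \ge W_{1,L} - W_{L+2,L}$ is the exponential rate at which $\mu_\beta(1)$ vanishes:

$$W_{L+2,L} < W_{1,L} \iff 2p + 4 < 5p \iff p > \tfrac43, \qquad W_{1,L} - W_{\min} \ge 5p - (2p + 4) = 3p - 4 > 0 \quad (p \ge 2).$$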

In the above example we have presented an energy landscape $(X, U)$ for which $W^{*,L} \not\subset X_{\min}$ for any $p \ge 2$.

If we fix the above landscape and increase the simulation length $L$ of the parallel Markov chains, there is an $L^*$ such that $W^{*,L} \subset X_{\min}$ holds for $L \ge L^*$. Therefore one may conjecture that in general $W^{*,L} \subset X_{\min}$ can be established for large $L$. The next example shows that this does not suffice: in general one has to increase the number of parallel Markov chains as well.

Fig. 4. Energy landscape $(X, U)$.

Example 5.14. Let $L \ge 7$ and $p \ge 3$. For $a \ge 1$ let the state space be given by $X := \{1, \dots, 7, x_1, \dots, x_a\}$ and consider the energy landscape shown in Fig. 4 for $N \ge 7$.

The exploration kernel $q$ is again given by the nearest-neighbor random walk with uniform probability. A best $\{x_j\}$-$L$-graph, $j \in \{1, \dots, a\}$, is the following:

$$g = \bigl(X, \{(1,2), (2,4), (3,2), (4,6), (5,4), (6,x_j), (7,6), (x_1,x_j), \dots, (x_{j-1},x_j), (x_{j+1},x_j), \dots, (x_a,x_j)\}\bigr)$$

with cost $\tilde h_L(g) = 3(N-2) + 4p + (a-1)(N-1)$. A best $\{1\}$-$L$-graph is given by

$$g' = \bigl(X, \{(x_1,1), (2,1), (3,2), (4,6), (5,4), (6,x_1), (7,6), (x_2,x_1), \dots, (x_a,x_1)\}\bigr)$$

with cost $\tilde h_L(g') = (a+2)(N-1) + 2(N-2)$. Hence the local minima $x_1, \dots, x_a$ are contained in $W^{*,L}$ for $L \ge 7$ and all $3 \le p \le (2N-1)/4$.
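Taking the two graph costs as $3(N-2) + 4p + (a-1)(N-1)$ and $(a+2)(N-1) + 2(N-2)$, the upper bound on $p$ follows by direct comparison; the terms in $a$ cancel, so the bound does not depend on the number $a$ of local minima:

$$\tilde h_L(g) \le \tilde h_L(g') \iff 3(N-2) + 4p + (a-1)(N-1) \le (a+2)(N-1) + 2(N-2) \iff 4p \le 2N - 1.$$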

For $p$ and $L$ large we can establish $W^{*,L} \subset X_{\min}$.

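Proposition 5.15. Set

$$\delta := \min{}^+\{\max(U(x) - U(y), 0) \mid x \in N^1(y),\ y \in X\},$$

$$\Theta := \max_{x \in X \setminus X_{\min}} \min\{s(\gamma_l) \mid l \in \mathbb{N},\ z \in X_{\min},\ \gamma_l \in \Gamma^l_{x,z}\}.$$

Suppose that $p$ satisfies $p\delta > \Theta$. Moreover, let $L$ be chosen such that for all $x \in X$ the following holds: there exist $z \in X_{\min}$ and $\gamma_L \in \Gamma^L_{x,z}$ such that $s(\gamma_L) \le \Theta$. Then $W^{*,L} \subset X_{\min}$.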

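Proof. Let $x \in X \setminus X_{\min}$ and let $g \in G^L\{x\}$ with $\tilde h_L(g) = W_{x,L}$. By the choice of $L$ there exists $z \in X_{\min}$ such that $s(\gamma_{x,z}) \le \Theta$. Let $\rho = (z = \rho_0, \dots, \rho_{l(\rho)} = x) \subset g$ be the path from $z$ to $x$ and let

$$J := \{i \in \{0, \dots, l(\rho) - 1\} \mid U(\rho_i) < U(\rho_{i+1})\}$$

be the set of indices that correspond to increasing edges. Since $U(x) > U(z)$, the set $J$ is non-empty. Let $j := \min J$ be the smallest index with $U(\rho_j) < U(\rho_{j+1})$. Suppose that $\rho_{j+1} \ne x$. For $\tau := \bigcup_{i=0}^{j} \{(\rho_i, \rho_{i+1})\} \subset g$ define the $\{z\}$-$L$-graph

$$g' := (g \setminus \tau) \cup \tau^{-1} \cup \{(x,z)\}. \qquad (27)$$

Note that $\tilde h_L(\tau) \ge p\delta$ and $\tilde h_L(\tau^{-1}) = 0$. Since the tuple $(z, x, \dots, x)$ shows $k_L(x,z) \le s(\gamma_{x,z})$, the cost of $g'$ can be estimated by

$$\tilde h_L(g') \le \tilde h_L(g) - \tilde h_L(\tau) + \tilde h_L(\tau^{-1}) + s(\gamma_{x,z}) \le W_{x,L} - p\delta + 0 + \Theta < W_{x,L}.$$

If $\rho_{j+1} = x$, the definition of $g'$ in Eq. (27) is replaced by $g' := (g \setminus \tau) \cup \tau^{-1}$, which also yields $\tilde h_L(g') < W_{x,L}$. In both cases $W_{z,L} \le \tilde h_L(g') < W_{x,L}$, so $x \notin W^{*,L}$.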
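The two constants of Proposition 5.15 and the condition on $L$ can be evaluated on small landscapes. The sketch below assumes $q(x,x) > 0$, uses the edges with $q > 0$ as the one-step neighborhood, computes $\Theta$ by a Bellman-Ford relaxation (all edge costs are non-negative), and returns the smallest integer $p$ with $p\delta > \Theta$. The function names are hypothetical.

```python
import math

INF = float("inf")


def delta_theta(q, U):
    """delta: smallest positive one-step energy increase; Theta: largest, over
    non-minima x, of the minimal path cost from x to the set of global minima."""
    n = len(U)
    nbrs = [[x for x in range(n) if x != y and q[y][x] > 0] for y in range(n)]
    ups = [U[x] - U[y] for y in range(n) for x in nbrs[y] if U[x] > U[y]]
    delta = min(ups, default=INF)
    u_min = min(U)
    cost = [0 if U[x] == u_min else INF for x in range(n)]
    for _ in range(n):                       # Bellman-Ford relaxation
        for a in range(n):
            for b in nbrs[a]:
                cost[a] = min(cost[a], max(U[b] - U[a], 0) + cost[b])
    theta = max((cost[x] for x in range(n) if U[x] != u_min), default=0)
    return delta, theta


def min_chains(delta, theta):
    """Smallest integer p with p * delta > Theta."""
    return math.floor(theta / delta) + 1


def length_ok(q, U, L, theta):
    """True if every x reaches X_min in exactly L steps with path cost <= Theta."""
    n = len(U)
    s = [[0 if x == y else INF for y in range(n)] for x in range(n)]
    for _ in range(L):
        s = [[min((s[x][v] + max(U[y] - U[v], 0) for v in range(n) if q[v][y] > 0),
                  default=INF) for y in range(n)] for x in range(n)]
    u_min = min(U)
    return all(min(s[x][z] for z in range(n) if U[z] == u_min) <= theta for x in range(n))
```

For the three-state landscape $U = (0, 2, 1)$ with a lazy nearest-neighbor walk this gives $\delta = \Theta = 1$, so best-wins needs $p \ge 2$, and $L = 2$ satisfies the length condition while $L = 1$ does not.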

5.6. Convergence for first-wins

Proposition 5.16. Using the first-wins strategy we have $W^{*,L} = X_{\min}$.

Proof. Let $(y,z) \in g \in G^L\{x\}$, $x \in X$. The $p$-tuple $t = (z, t_2, \dots, t_p)$ with $t_i \in N^L(y)$, $2 \le i \le p$, is contained in $K_L(y,z)$, because $F(1, y; t) = 1$. Since $t_2, \dots, t_p$ can be chosen such that $s(\gamma_{y,t_i}) = 0$ (for instance $t_i = y$), we have $k_L(y,z) = s(\gamma_{y,z})$. Hence $\tilde h_L(g) = h_L(g)$ and $W^{*,L} = M^{*,L} = X_{\min}$.

References

Aarts, E., Korst, J., 1991. Simulated Annealing and Boltzmann Machines. Wiley-Interscience Series in Discrete Mathematics and Optimization. Wiley-Interscience, New York.

Bott, R., Mayberry, J.P., 1954. Matrices and trees. In: Economic Activity Analysis. Wiley, New York.

Deuschel, J.-D., Mazza, C., 1994. $L^2$ convergence of time non-homogeneous Markov processes: I. Spectral estimates. Ann. Appl. Probab. 4, 1012–1056.

Diekmann, R., Lüling, R., Simon, J., 1993. Problem independent distributed simulated annealing and its applications. Lecture Notes in Economics and Mathematical Systems, vol. 369. Springer, Berlin, pp. 1–16.

Freidlin, M.I., Wentzell, A.D., 1984. Random Perturbations of Dynamical Systems. Springer, Berlin.


- Accurate and Fast Convergence Method For Parameter Estimation of PV GeneratorsDokumen6 halamanAccurate and Fast Convergence Method For Parameter Estimation of PV GeneratorsDomingo BenBelum ada peringkat

- Two-Diode Model with Variable Series ResistanceDokumen10 halamanTwo-Diode Model with Variable Series ResistanceDomingo BenBelum ada peringkat

- Bifacial Photovoltaic Panels With Sun TrackingDokumen12 halamanBifacial Photovoltaic Panels With Sun TrackingDomingo BenBelum ada peringkat

- Analysis and Modelling The ReverseDokumen16 halamanAnalysis and Modelling The ReverseDomingo BenBelum ada peringkat

- A New Methode For Evaluating ToDokumen4 halamanA New Methode For Evaluating ToDomingo BenBelum ada peringkat

- EECS498Dokumen21 halamanEECS498Domingo BenBelum ada peringkat

- Photovoltaic Generator Modelling For Power System Simulation StudiesDokumen7 halamanPhotovoltaic Generator Modelling For Power System Simulation StudiesDomingo BenBelum ada peringkat

- 131 669 3 PBDokumen8 halaman131 669 3 PBMahamudul HasanBelum ada peringkat

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeDari EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifePenilaian: 4 dari 5 bintang4/5 (5784)

- The Little Book of Hygge: Danish Secrets to Happy LivingDari EverandThe Little Book of Hygge: Danish Secrets to Happy LivingPenilaian: 3.5 dari 5 bintang3.5/5 (399)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceDari EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RacePenilaian: 4 dari 5 bintang4/5 (890)

- Shoe Dog: A Memoir by the Creator of NikeDari EverandShoe Dog: A Memoir by the Creator of NikePenilaian: 4.5 dari 5 bintang4.5/5 (537)

- Grit: The Power of Passion and PerseveranceDari EverandGrit: The Power of Passion and PerseverancePenilaian: 4 dari 5 bintang4/5 (587)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureDari EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FuturePenilaian: 4.5 dari 5 bintang4.5/5 (474)

- The Yellow House: A Memoir (2019 National Book Award Winner)Dari EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Penilaian: 4 dari 5 bintang4/5 (98)

- Team of Rivals: The Political Genius of Abraham LincolnDari EverandTeam of Rivals: The Political Genius of Abraham LincolnPenilaian: 4.5 dari 5 bintang4.5/5 (234)

- Never Split the Difference: Negotiating As If Your Life Depended On ItDari EverandNever Split the Difference: Negotiating As If Your Life Depended On ItPenilaian: 4.5 dari 5 bintang4.5/5 (838)

- The Emperor of All Maladies: A Biography of CancerDari EverandThe Emperor of All Maladies: A Biography of CancerPenilaian: 4.5 dari 5 bintang4.5/5 (271)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryDari EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryPenilaian: 3.5 dari 5 bintang3.5/5 (231)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaDari EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaPenilaian: 4.5 dari 5 bintang4.5/5 (265)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersDari EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersPenilaian: 4.5 dari 5 bintang4.5/5 (344)

- On Fire: The (Burning) Case for a Green New DealDari EverandOn Fire: The (Burning) Case for a Green New DealPenilaian: 4 dari 5 bintang4/5 (72)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyDari EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyPenilaian: 3.5 dari 5 bintang3.5/5 (2219)

- Rise of ISIS: A Threat We Can't IgnoreDari EverandRise of ISIS: A Threat We Can't IgnorePenilaian: 3.5 dari 5 bintang3.5/5 (137)

- The Unwinding: An Inner History of the New AmericaDari EverandThe Unwinding: An Inner History of the New AmericaPenilaian: 4 dari 5 bintang4/5 (45)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreDari EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You ArePenilaian: 4 dari 5 bintang4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)Dari EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Penilaian: 4.5 dari 5 bintang4.5/5 (119)

- Her Body and Other Parties: StoriesDari EverandHer Body and Other Parties: StoriesPenilaian: 4 dari 5 bintang4/5 (821)

- S7-1200 SM 1231 8 X Analog Input - SpecDokumen3 halamanS7-1200 SM 1231 8 X Analog Input - Specpryzinha_evBelum ada peringkat

- Fundamentals of Surveying - Experiment No. 2Dokumen6 halamanFundamentals of Surveying - Experiment No. 2Jocel SangalangBelum ada peringkat

- 01 - The Freerider Free Energy Inverter Rev 00DDokumen18 halaman01 - The Freerider Free Energy Inverter Rev 00Dpeterfoss791665Belum ada peringkat

- Savi TancetDokumen3 halamanSavi TancetJasmine DavidBelum ada peringkat

- History of Representation TheoryDokumen7 halamanHistory of Representation TheoryAaron HillmanBelum ada peringkat

- Storage and Flow of Powder: Mass Flow Funnel FlowDokumen9 halamanStorage and Flow of Powder: Mass Flow Funnel FlowDuc HuynhBelum ada peringkat

- SM PDFDokumen607 halamanSM PDFGladwin SimendyBelum ada peringkat

- UP Diliman Course AssignmentsDokumen82 halamanUP Diliman Course Assignmentsgamingonly_accountBelum ada peringkat

- NSEP 2022-23 - (Questions and Answer)Dokumen19 halamanNSEP 2022-23 - (Questions and Answer)Aditya KumarBelum ada peringkat

- Inverse Normal Distribution (TI-nSpire)Dokumen2 halamanInverse Normal Distribution (TI-nSpire)Shane RajapakshaBelum ada peringkat

- Triton X 100Dokumen2 halamanTriton X 100jelaapeBelum ada peringkat

- Interference QuestionsDokumen11 halamanInterference QuestionsKhaled SaadiBelum ada peringkat

- II Assignment MMDokumen3 halamanII Assignment MMshantan02Belum ada peringkat

- Radiation Physics and Chemistry: Traian Zaharescu, Maria Râp Ă, Eduard-Marius Lungulescu, Nicoleta Butoi TDokumen10 halamanRadiation Physics and Chemistry: Traian Zaharescu, Maria Râp Ă, Eduard-Marius Lungulescu, Nicoleta Butoi TMIGUEL ANGEL GARCIA BONBelum ada peringkat

- GSK980TDDokumen406 halamanGSK980TDgiantepepinBelum ada peringkat

- 11 HW ChemistryDokumen6 halaman11 HW ChemistryJ BalanBelum ada peringkat

- Unconfined Compression Test: Experiment No. 3Dokumen6 halamanUnconfined Compression Test: Experiment No. 3Patricia TubangBelum ada peringkat

- Channel Tut v1Dokumen11 halamanChannel Tut v1Umair IsmailBelum ada peringkat

- Engleski Jezik-Seminarski RadDokumen18 halamanEngleski Jezik-Seminarski Radfahreta_hasicBelum ada peringkat

- Linear equations worksheet solutionsDokumen4 halamanLinear equations worksheet solutionsHari Kiran M PBelum ada peringkat

- Astm B 265 - 03Dokumen8 halamanAstm B 265 - 03kaminaljuyuBelum ada peringkat

- Malla Curricular Ingenieria Civil UNTRMDokumen1 halamanMalla Curricular Ingenieria Civil UNTRMhugo maldonado mendoza50% (2)

- J.F.M. Wiggenraad and D.G. Zimcik: NLR-TP-2001-064Dokumen20 halamanJ.F.M. Wiggenraad and D.G. Zimcik: NLR-TP-2001-064rtplemat lematBelum ada peringkat

- Elementarysurvey PDFDokumen167 halamanElementarysurvey PDFStanley OnyejiBelum ada peringkat

- All Rac α Tocopheryl Acetate (Vitamin E Acetate) RM COA - 013Dokumen2 halamanAll Rac α Tocopheryl Acetate (Vitamin E Acetate) RM COA - 013ASHOK KUMAR LENKABelum ada peringkat

- Layers of The EarthDokumen105 halamanLayers of The Earthbradbader100% (11)

- THF Oxidation With Calcium HypochloriteDokumen3 halamanTHF Oxidation With Calcium HypochloriteSmokeBelum ada peringkat

- Chap 3 DieterDokumen25 halamanChap 3 DieterTumelo InnocentBelum ada peringkat

- Part Number 26-84: Illustrated Parts BreakdownDokumen90 halamanPart Number 26-84: Illustrated Parts BreakdownJesus OliverosBelum ada peringkat

- Energy-Momentum Tensor For The Electromagnetic Field in A Dispersive MediumDokumen17 halamanEnergy-Momentum Tensor For The Electromagnetic Field in A Dispersive MediumSamrat RoyBelum ada peringkat