International Journal of Application or Innovation in Engineering & Management (IJAIEM),

Web Site: www.ijaiem.org, Email: editor@ijaiem.org, Volume 3, Issue 7, July 2014 ISSN 2319 - 4847, Impact Factor: 3.111 for year 2014

ABSTRACT

Nowadays, as internet use grows, users wish to store data on cloud computing platforms. Most of the time, users' data consist of small files. HDFS is designed to process large volumes of data, but it cannot efficiently handle a large number of small files. In this paper, we design an improved model for processing small files. In the existing system, each map task processes one block of input at a time. The map task produces intermediate output, which is given to the reducer, and the reducer produces sort-merged output. Multiple map tasks and a single reduce task are used for processing small files. With this approach, if there is a large number of small files, each map task gets very little input, and the performance of HDFS for processing many small files degrades. In the proposed system, small files are processed efficiently by HDFS. The HDFS client requests to store small files in HDFS, and the NameNode grants permission. When small files are submitted by the HDFS client for processing, the NameNode combines the files into a single split, which becomes the input to a map task. The intermediate output produced by the map task is given to multiple reducers as input, and the reducers produce sorted, merged output. As the number of map tasks is reduced, the processing time decreases.

Keywords: Hadoop Distributed File System, Hadoop, MapReduce, Small File Problem

1. INTRODUCTION

Hadoop is an open-source software framework developed for reliable, scalable, distributed computing and storage [10]. It is gaining popularity as a foundation for building big data ecosystems, such as cloud platforms [5]. The Hadoop Distributed File System (HDFS), which is inspired by the Google File System [4], is composed of a NameNode and DataNodes. The NameNode manages the file system namespace and regulates client access. DataNodes provide block storage, serve I/O requests from clients, and perform block operations upon instructions from the NameNode. HDFS is representative of Internet service file systems running on clusters [6] and is widely adopted as the file system for many Internet applications. HDFS is designed for storing large files with streaming data access patterns [7], and it stores small files inefficiently.

The Apache Hadoop framework is composed of the following modules:

Hadoop Common - contains libraries and utilities needed by other Hadoop modules.

Hadoop Distributed File System (HDFS) - a distributed file system that stores data on commodity machines, providing very high aggregate bandwidth across the cluster.

Hadoop YARN - a resource-management platform responsible for managing compute resources in clusters and using them to schedule users' applications.

Hadoop MapReduce - a programming model for large-scale data processing.

All the modules in Hadoop are designed with the fundamental assumption that hardware failures are common and should therefore be handled automatically in software by the framework. Apache Hadoop's MapReduce and HDFS components were originally derived from Google's MapReduce and Google File System (GFS) papers, respectively. Beyond HDFS, YARN, and MapReduce, the Apache Hadoop platform is now commonly considered to include a number of related projects as well, such as Apache Pig, Apache Hive, Apache HBase, and others. For end users, although MapReduce Java code is common, any programming language can be used with "Hadoop Streaming" to implement the "map" and "reduce" parts of the user's program. Apache Pig and Apache Hive, among other related projects, expose higher-level user interfaces such as Pig Latin and a SQL variant, respectively. The Hadoop framework itself is mostly written in the Java programming language, with some native code in C and command-line utilities written as shell scripts [8].

2. RELATED WORK

Hadoop is an open-source Apache project. Yahoo! has developed and contributed to 80% of the core of Hadoop [3]. Researchers from Yahoo! described the detailed design and implementation of HDFS. They realized that their assumption that applications would mainly create large files was flawed, and that new classes of applications for HDFS would need to store a large number of smaller files. As there is only one NameNode in Hadoop and it keeps all the metadata in main memory, a large number of small files has a significant impact on the metadata performance of HDFS, and the NameNode appears to be the bottleneck for handling metadata requests for massive numbers of small files [2]. HDFS is designed to read and write large files, and there is no optimization for small files. A mismatch of access patterns emerges if HDFS is used to read or write a large number of small files directly (Liu et al., 2009). Moreover, HDFS ignores optimization of the native storage resource, which leads to local disk access becoming a bottleneck. In addition, data prefetching is not employed to improve access performance for HDFS [1]. HAR is a general small-file solution that archives small files into larger files [9].

An Innovative Strategy for Improved Processing of Small Files in Hadoop

Priyanka Phakade¹, Dr. Suhas Raut²

¹ N.K. Orchid College of Engineering and Technology, Solapur

² N.K. Orchid College of Engineering and Technology, Solapur

3. SMALL FILE PROBLEM

The Hadoop Distributed File System (HDFS) is a distributed file system designed mainly for batch processing of large volumes of data. The default block size of HDFS is 64 MB. HDFS does not work well with a large number of small files, for two reasons. First, each block holds a single file, so many small files produce many small blocks (smaller than the configured block size), and reading these blocks one by one means that much of the time is spent on disk seeks. Second, the NameNode keeps track of each file and each block (about 150 bytes for each) and stores this metadata in memory, so a large number of files occupies more memory [11].
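
The memory cost described above can be made concrete with a small back-of-the-envelope calculation. The sketch below is only an illustration: the function name and parameters are hypothetical, and it assumes one metadata object per file plus one per block at roughly 150 bytes each, ignoring directories, replication bookkeeping, and JVM overhead.

```python
def estimate_namenode_memory(num_files: int,
                             blocks_per_file: int = 1,
                             bytes_per_object: int = 150) -> int:
    """Rough NameNode memory estimate in bytes.

    Assumption: each file and each block costs about `bytes_per_object`
    bytes of NameNode memory (the ~150-byte figure quoted in the text).
    """
    objects = num_files * (1 + blocks_per_file)
    return objects * bytes_per_object


# Ten million small files, each smaller than one block (one block per file).
small_files = estimate_namenode_memory(10_000_000)
print(small_files)  # 3000000000 bytes, i.e. about 3 GB

# A single large file stored as 32 blocks.
one_large_file = estimate_namenode_memory(1, blocks_per_file=32)
print(one_large_file)  # 4950 bytes
```

Under these assumptions the metadata footprint grows with the number of files and blocks rather than with the total volume of data stored.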

4. PROPOSED SYSTEM

The Hadoop Distributed File System (HDFS) is designed to store and process large data sets. However, storing a large number of small files in HDFS is inefficient. A large number of small files increases the number of mappers, reduces the input available to each map task, and increases the overall processing time. Hadoop works better with a small number of large files than with a large number of small files. Compare a 2 GB file broken into thirty-two 64 MB blocks with 20,000 or so 100 KB files. The single input file uses 32 map tasks, whereas the 20,000 files use one map task each, and the job can be tens or hundreds of times slower than the equivalent job on the single file. The situation is alleviated by modifying InputFormat so that it works well with small files. FileInputFormat creates a split per file. InputFormat will be modified so that many files are combined into a single split, giving each mapper more to process. Because each mapper then gets sufficient input, the job completion time, and hence the overall processing time, is reduced.

Fig. 1 shows the proposed system for processing a large number of small files. As a first step, we will implement middleware between HDFS and the HDFS Client. The HDFS Client will request a connection to the NameNode, and the NameNode will accept the connection. When the HDFS Client wishes to store files in HDFS, it must obtain permission from the NameNode, which will grant or deny it. If the NameNode denies permission, the HDFS Client will not store the files, and the connection between the HDFS Client and the NameNode will be closed. Otherwise, the HDFS Client will store the files in HDFS. Splits will be provided as input to each DataNode. When a map task completes, it will generate an intermediate result, which is given to the reducers. After receiving its input, each reducer will produce sort-merged output, and finally the result will be stored in HDFS. Multiple reducers will be used to take advantage of parallelism.

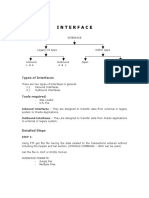

Figure 1: Workflow of proposed system
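
The effect of combining files into splits can be sketched with a small simulation. This is not Hadoop code and not the authors' implementation: the function names are hypothetical, files are represented only by their sizes, and a simple greedy packing with a 64 MB split limit is assumed.

```python
from typing import List

KB = 1024
MB = 1024 * 1024


def one_split_per_file(file_sizes: List[int]) -> List[List[int]]:
    """Baseline behaviour: every file becomes its own split (one map task each)."""
    return [[size] for size in file_sizes]


def combine_into_splits(file_sizes: List[int], max_split_bytes: int) -> List[List[int]]:
    """Greedily pack files, in order, into splits of at most max_split_bytes.

    A file larger than the limit gets a split of its own; this sketch does
    not break large files into blocks.
    """
    splits: List[List[int]] = []
    current: List[int] = []
    current_bytes = 0
    for size in file_sizes:
        # Close the current split when the next file would overflow it.
        if current and current_bytes + size > max_split_bytes:
            splits.append(current)
            current, current_bytes = [], 0
        current.append(size)
        current_bytes += size
    if current:
        splits.append(current)
    return splits


files = [100 * KB] * 20_000
print(len(one_split_per_file(files)))            # 20000 map tasks
print(len(combine_into_splits(files, 64 * MB)))  # 31 map tasks
```

With the figures used in this section, the 20,000 files of 100 KB collapse from 20,000 splits to 31, which is comparable to the 32 map tasks needed for a single 2 GB file.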

5. EXPECTED PERFORMANCE IMPROVEMENT

In the existing HDFS system, the Mapper maps input key/value pairs to a set of intermediate key/value pairs. Maps are the individual tasks that transform input records into intermediate records. The intermediate records do not need to be of the same type as the input records, and a given input key/value pair may map to zero or many output pairs. The Hadoop MapReduce framework spawns one map task for each InputSplit generated by the InputFormat for the job. The Reducer reduces a set of intermediate values that share a key to a smaller set of values. Multiple mappers and a single reducer are used for the Word-Count application. As shown in the result, the Hadoop job is executed on the NameNode. For testing, 15,000 files were processed by the Word-Count application. The HDFS Client stores 5000 small files in the HDFS system. In the existing HDFS system, the Word-Count application assigns 2546 map tasks and one reduce task to process those files. The processing time for the 15,000 test files was observed to be 1697 seconds.
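
The map and reduce roles described above can be illustrated with a minimal in-memory word count. This is a sketch in plain Python rather than the Hadoop API; the function names are hypothetical, and the grouping step stands in for the shuffle that the framework performs between the map and reduce phases.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_words(line: str) -> Iterator[Tuple[str, int]]:
    """Map: one input record (a line) yields zero or more (word, 1) pairs."""
    for word in line.split():
        yield word, 1


def reduce_counts(word: str, counts: Iterable[int]) -> Tuple[str, int]:
    """Reduce: all values sharing a key collapse to a single total."""
    return word, sum(counts)


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    # Group intermediate values by key, as the framework would do in the shuffle.
    grouped: Dict[str, List[int]] = defaultdict(list)
    for line in lines:
        for word, one in map_words(line):
            grouped[word].append(one)
    # A single reducer sees every key, in sorted order.
    return dict(reduce_counts(word, grouped[word]) for word in sorted(grouped))


print(word_count(["small files", "many small files"]))
# {'files': 2, 'many': 1, 'small': 2}
```

An empty line produces no intermediate pairs, which matches the statement that an input pair may map to zero output pairs.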

In our proposed system, performance will be improved by modifying the InputFormat class, which is responsible for splitting the input files. InputFormat will be modified so that multiple files are combined into a single split. Each map task will therefore get more input to process than in the existing system, and as a result the time required to process a large number of small files will be reduced. In addition, multiple reducers will be used to take advantage of parallelism. We expect at least a 50% performance improvement with our proposed system.
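
Using multiple reducers requires that every intermediate key be routed to exactly one reducer. The sketch below shows one common way to do this, hashing the key modulo the number of reducers. It is an illustration only: the function names are hypothetical, and `zlib.crc32` is used here simply as a stable stand-in hash, not as the hash Hadoop itself applies.

```python
import zlib
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def partition(key: str, num_reducers: int) -> int:
    """Choose a reducer for a key; crc32 is an assumed, stable stand-in hash."""
    return zlib.crc32(key.encode("utf-8")) % num_reducers


def run_reducers(pairs: Iterable[Tuple[str, int]],
                 num_reducers: int) -> List[Dict[str, int]]:
    """Route each (key, value) pair to its reducer and sum the values per key."""
    outputs: List[Dict[str, int]] = [defaultdict(int) for _ in range(num_reducers)]
    for key, value in pairs:
        outputs[partition(key, num_reducers)][key] += value
    # Each reducer emits its own keys in sorted order.
    return [dict(sorted(out.items())) for out in outputs]


pairs = [("small", 1), ("files", 1), ("many", 1), ("small", 1), ("files", 1)]
for reducer_id, output in enumerate(run_reducers(pairs, num_reducers=3)):
    print(reducer_id, output)
```

Because a given key always hashes to the same reducer, the reducers can work in parallel on disjoint sets of keys, and the union of their outputs equals what a single reducer would have produced.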

6. CONCLUSION

We have studied the performance behavior of HDFS for small file processing. Considerable time is needed to process a large number of small files. We propose an innovative method for processing a large number of small files. In this method, we will implement middleware between the HDFS Client and the Hadoop Distributed File System, and we will modify the InputFormat and the task management of the MapReduce framework. In the existing system, each map task receives a single block of input at a time. Because there are so many small files to process, each map task receives very little input, so processing a large number of small files takes too much time. In the proposed system, multiple files are combined into a single split, which is given as input to a map task. Giving more input to each map task decreases the number of map tasks, and in this way the processing time required for many small files is reduced.

REFERENCES

[1.] Shafer J, Rixner S, Cox A. The Hadoop distributed filesystem: balancing portability and performance. In: IEEE international symposium on performance analysis of systems & software (ISPASS). IEEE; 2010. p. 122-33.

[2.] Shvachko K, Kuang H, Radia S, Chansler R. The Hadoop distributed file system. In: IEEE 26th symposium on mass storage systems and technologies (MSST). IEEE; 2010. p. 1-10.

[3.] Mackey G, Sehrish S, Wang J. Improving metadata management for small files in HDFS. In: IEEE international conference on cluster computing and workshops, 2009. CLUSTER'09. IEEE; 2009. p. 1-4.

[4.] S. Ghemawat, H. Gobioff, and S. Leung, The Google File System, Proc. of the 19th ACM Symp. on Operating System Principles, pp. 29-43, 2003.

[5.] Feng Wang, Jie Qiu, Jie Yang, Bo Dong, Xinhui Li, and Ying Li, Hadoop high availability through metadata replication, Proc. of the First CIKM Workshop on Cloud Data Management, pp. 37-44, 2009.

[6.] W. Tantisiriroj, S. Patil, and G. Gibson, Data-intensive file systems for internet services: A rose by any other name, Tech. Report CMU-PDL-08-114, Oct. 2008.

[7.] Tom White. Hadoop: The Definitive Guide. O'Reilly Media, Inc. June 2009.

[8.] Apache Hadoop, http://en.wikipedia.org/wiki/Apache_Hadoop

[9.] Hadoop archives, http://hadoop.apache.org/common/docs/current/hadoop_archives.html.

[10.] Hadoop official site, http://hadoop.apache.org/.

[11.] Solving the Small Files Problem in Apache Hadoop: Appending and Merging in HDFS, http://pastiaro.wordpress.com/2013/06/05/solving-the-small-files-problem-in-apache-hadoop-appending-and-merging-in-hdfs/
