Different Simplex Methods

Diunggah oleh

daselknamDeskripsi Asli:

Judul Asli

Hak Cipta

Format Tersedia

Bagikan dokumen Ini

Apakah menurut Anda dokumen ini bermanfaat?

Apakah konten ini tidak pantas?

Laporkan Dokumen IniHak Cipta:

Format Tersedia

Different Simplex Methods

Diunggah oleh

daselknamHak Cipta:

Format Tersedia

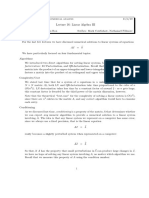

Implementation of the Simplex Algorithm

Kurt Mehlhorn

May 18, 2010

1 Overview

There are many excellent public-domain implementations of the simplex algorithm; we list some of them in Section 2. All of them are variants of the revised simplex algorithm, which we explain in Sections 4 to 6. The simplex algorithm is a numerical algorithm; it computes with real numbers. Almost all implementations of the simplex algorithm use floating point arithmetic. Floating point arithmetic incurs round-off error, and hence none of the implementations mentioned in Section 2 is guaranteed to find optimal solutions. They may also declare a feasible problem infeasible or vice versa. The papers [DFK+03, ACDE07] give examples where CPLEX, a very popular commercial solver, and SoPlex, a very popular public-domain solver, fail to find optimal solutions. However, for most instances the inexact solvers return optimal or near-optimal solutions. These solutions can be taken as a starting point for an exact solver, as we discuss in Section 8. Large linear programs have sparse constraint matrices, i.e., the number of nonzero entries of the constraint matrix is small compared to the total number of entries. For the sake of efficiency, it is important to exploit sparsity.

2 Resources

There are many excellent implementations of the simplex algorithm available. KM has used the public-domain solver SoPlex (http://soplex.zib.de/) and the commercial solver CPLEX.

H. Mittelmann maintains a page (http://plato.asu.edu/ftp/lpfree.html) where he compares the following LP-solvers. I quote from his web-page.

This benchmark was run on a 2.667GHz Intel Core 2 processor under Linux. The MPS-datafiles for all testcases are in one of (see column s) miplib.zib.de/ [1], plato.asu.edu/ftp/lptestset/ [2], www.sztaki.hu/~meszaros/public_ftp/lptestset/ (NETLIB[3], MISC[4], PROBLEMATIC[5], STOCHLP[6], KENNINGTON[7], INFEAS[8])

NOTE: files in [2-8] need to be expanded with emps in same directory!

The following codes were tested:

BPMPD-2.21 www-neos.mcs.anl.gov/neos/solvers/lp:bpmpd/MPS.html (run locally)

CLP-1.11.0 projects.coin-or.org/Clp

PCx-1.1 www-fp.mcs.anl.gov/OTC/Tools/PCx/

QSOPT-1.0 www.isye.gatech.edu/~wcook/qsopt/

SOPLEX-1.4.1 soplex.zib.de/

GLPK-4.36 www.gnu.org/software/glpk/

Times are user times in secs including input.

================================================================

s problem BPMPD CLP PCX QSOPT SOPLEX GLPK

================================================================

2 cont1 15 4011 22 13241 4127

2 cont11 26 106 51341

2 cont4 20 2062 44 9682 3355

================================================================

Problem sizes

problem    rows      columns   nonzeros
===============================================
cont1      160793    40398     399991
cont11     160793    80396     439989
cont4      160793    40398     398399
===============================================

3 Linear Programming in Practice

As you can see from the examples above, LPs can involve a large number of constraints (160 thousand in the examples above) and variables (40 to 80 thousand in the examples above). However, the constraint matrix tends to be sparse, i.e., the average number of nonzero entries per row or column tends to be small (in the examples above, about 3 nonzero entries per row and 10 nonzero entries per column). Dense constraint matrices also arise in practice. However, then the number of constraints and variables is much smaller. Large LPs have up to 10 million nonzero entries in the constraint matrix.
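As a quick check of these figures, the averages can be recomputed from the problem-size table. The sketch below only divides the nonzero count by the number of rows and columns; the names `problems` and `sparsity_stats` are illustrative and do not come from the benchmark page.

```python
# Problem sizes copied from the table above: (rows, columns, nonzeros).
problems = {
    "cont1": (160793, 40398, 399991),
    "cont11": (160793, 80396, 439989),
    "cont4": (160793, 40398, 398399),
}

def sparsity_stats(rows, cols, nonzeros):
    """Return (avg nonzeros per row, avg nonzeros per column, density)."""
    return nonzeros / rows, nonzeros / cols, nonzeros / (rows * cols)

for name, size in problems.items():
    per_row, per_col, density = sparsity_stats(*size)
    print(f"{name}: {per_row:.1f} per row, {per_col:.1f} per column, "
          f"density {density:.1e}")
```

For cont1 this gives roughly 2.5 nonzeros per row and 9.9 per column, in line with the rounded figures quoted in the text.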

The main concerns for a good implementation of the simplex method are:

- exploiting the sparsity of the constraint matrix so as to make iterations fast and keep the space requirement low, and

- numerical stability.

I will address the first issue first and turn to the second issue in Section 7.


4 The Standard Simplex Algorithm

We consider LPs in standard form: minimize $c^T x$ subject to $Ax = b$ and $x \ge 0$. The constraint matrix $A$ has $m$ rows and $n$ columns where $m < n$; $A$ is assumed to have full row rank. Let $B$ be the basic variables and $N$ the non-basic variables. $A_B$ is the $m \times m$ submatrix of $A$ selected by the basis. The standard simplex algorithm maintains:

- the basic solution $x_B = A_B^{-1} b$ with $x_B \ge 0$,

- the dictionary $A_B^{-1} A$,

- the objective value $z = c_B^T x_B = c_B^T A_B^{-1} b$, and

- the vector of reduced costs $c^T - c_B^T A_B^{-1} A$. The entries corresponding to the basic variables are zero.

In each iteration, we do the following:

1. Find a negative reduced cost, say in column $j$. If there is none, we terminate. The current solution is optimal.

2. Determine the basic variable $b_i$ to leave the basis. For this we inspect the $j$-th column of the dictionary. Let $d_B = -A_B^{-1} A_j$, where $A_j$ is the $j$-th column of $A$; $d_B$ is the rate at which $x_B$ changes as $x_j$ increases. If all entries of $d_B$ are nonnegative, the problem is unbounded. Otherwise, among the $b_i \in B$ with $d_{b_i} < 0$, we find the one that minimizes $x_{b_i} / |d_{b_i}|$.

3. We move to the basis $(B \setminus \{b_i\}) \cup \{j\}$. For this purpose, we perform row operations on the tableau. We subtract suitable multiples of the row corresponding to the leaving variable from all other rows so as to generate a unit vector in the $j$-th column. This will also update the cost vector, the objective value, and the basic solution.

The cost for the update is $O(nm)$. For $n > m = 10^6$, this is prohibitive. Even more prohibitive is that the matrix $A_B^{-1} A_N$, where $A_N$ is the part of $A$ corresponding to the non-basic variables, is dense. It has $m$ rows and $n - m$ columns, so the space requirement of the tableau implementation is $O(m(n-m))$.
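The tableau iteration described in this section can be sketched in a few lines of Python. This is a minimal illustration, not a production solver: it assumes that the columns listed in `basis` already form the identity matrix and that $b \ge 0$, it uses exact `Fraction` arithmetic to sidestep round-off, and it has no safeguard against cycling. The function name `tableau_simplex` and the small example LP are made up for this sketch.

```python
from fractions import Fraction

def tableau_simplex(A, b, c, basis):
    """Minimize c^T x subject to Ax = b, x >= 0 with the dense tableau method."""
    m, n = len(A), len(c)
    # Rows 0..m-1: dictionary A_B^{-1} A, with x_B stored in the last column.
    T = [[Fraction(v) for v in A[i]] + [Fraction(b[i])] for i in range(m)]
    # Row m: reduced costs c^T - c_B^T A_B^{-1} A; its last entry holds -z.
    cost = [Fraction(v) for v in c] + [Fraction(0)]
    for i, k in enumerate(basis):
        f = cost[k]
        cost = [cost[q] - f * T[i][q] for q in range(n + 1)]
    T.append(cost)
    basis = list(basis)
    while True:
        # Step 1: pick a column with negative reduced cost.
        j = next((q for q in range(n) if T[m][q] < 0), None)
        if j is None:
            break                                  # current solution is optimal
        # Step 2: ratio test on d_B = -A_B^{-1} A_j.
        d = [-T[i][j] for i in range(m)]
        rows = [i for i in range(m) if d[i] < 0]
        if not rows:
            raise ValueError("LP is unbounded")
        r = min(rows, key=lambda i: T[i][n] / -d[i])
        # Step 3: row operations that create a unit vector in column j.
        p = T[r][j]
        T[r] = [v / p for v in T[r]]
        for i in range(m + 1):
            if i != r and T[i][j] != 0:
                f = T[i][j]
                T[i] = [T[i][q] - f * T[r][q] for q in range(n + 1)]
        basis[r] = j
    x = [Fraction(0)] * n
    for i, k in enumerate(basis):
        x[k] = T[i][n]
    return -T[m][n], x, basis

# Example: minimize -x1 - x2 s.t. x1 + 2*x2 + s1 = 4, 3*x1 + x2 + s2 = 6.
z, x, final_basis = tableau_simplex(
    [[1, 2, 1, 0], [3, 1, 0, 1]], [4, 6], [-1, -1, 0, 0], [2, 3])
print(z, x)
```

Every pivot touches all $(m+1)(n+1)$ tableau entries, which is the $O(nm)$ update cost mentioned above.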

5 The Revised Simplex Method

The revised simplex method reduces the cost of an update to $O(m^2 + n)$ and the space requirement to $O(m^2 + n + \mathrm{nz}(A))$, where $\mathrm{nz}(A)$ is the number of nonzero entries of the constraint matrix.

How does one store a sparse matrix? One could store it as $n + m$ linear lists, one for each row and column. The linear list corresponding to a row contains the nonzero entries of the row (together with the indices of these entries). With this representation, the cost of a matrix-vector product $Ax$ is $O(\mathrm{nz}(A))$. For each row of $A$, we do the following.


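Assume that row $r$ has the nonzero entries $(r, j_1, a_{r,j_1}), \ldots, (r, j_k, a_{r,j_k})$.

$s := 0$, $\ell := 1$
while ($\ell \le k$) do
    $s := s + a_{r,j_\ell} \cdot x_{j_\ell}$, $\ell := \ell + 1$
end while

After the loop, $s$ is the $r$-th entry of $Ax$.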
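The row-list representation and the loop above translate directly into Python. In this sketch each row is a list of `(column index, value)` pairs; the name `sparse_matvec` and the example matrix are assumptions made for illustration.

```python
def sparse_matvec(rows, x):
    """Compute Ax where rows[r] lists the nonzero entries (j, a_rj) of row r."""
    result = []
    for row in rows:
        s = 0
        for j, a in row:          # only the nonzero entries are visited
            s += a * x[j]
        result.append(s)
    return result

# The 3x3 matrix [[2, 0, 0], [0, 0, 3], [1, 0, 4]] stored row by row.
rows = [[(0, 2)], [(2, 3)], [(0, 1), (2, 4)]]
print(sparse_matvec(rows, [1, 2, 3]))
```

The inner loop runs once per stored entry, so the total work is proportional to the number of nonzeros (plus the number of rows).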

We no longer maintain the dictionary $A_B^{-1} A$. We now only maintain the inverse $A_B^{-1}$ of the basis matrix. We will refine this further in later sections. An iteration becomes:

1. Compute $x_B = A_B^{-1} b$. This is a matrix-vector product and takes time $O(m^2)$.

2. Compute the vector $c^T - c_B^T A_B^{-1} A$ of reduced costs by first computing $y^T = c_B^T A_B^{-1}$ and then $y^T A$. So we are computing two matrix-vector products; the costs are $O(m^2)$ and $O(\mathrm{nz}(A))$, respectively.

3. Select the variable $j$ that should enter the basis.

4. Compute the relevant column of the dictionary as $d_B = -A_B^{-1} A_j$; this takes time $O(m^2)$.

5. Determine the variable $b_i$ that should leave the basis; given $x_B$ and $d_B$, this takes time $O(m)$.

6. Update $A_B^{-1}$ by row operations. We are now performing row operations on a matrix of size $m \times (m+1)$ and hence this step takes time $O(m^2)$.

The space requirement is $O(m^2)$ for the inverse of the basis matrix plus $O(\mathrm{nz}(A))$ for the constraint matrix plus $O(n)$ for the vector of reduced costs.
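The six steps can be sketched as follows. This is an illustrative implementation under simplifying assumptions: the initial basis columns form the identity matrix (so the initial inverse is the identity), $b \ge 0$, arithmetic is exact via `Fraction`, the columns of $A$ are stored densely for brevity, and no anti-cycling rule is used. The function name `revised_simplex` is made up for this sketch.

```python
from fractions import Fraction

def revised_simplex(A, b, c, basis):
    """Minimize c^T x subject to Ax = b, x >= 0, maintaining only A_B^{-1}."""
    m, n = len(A), len(c)
    cols = [[Fraction(A[i][j]) for i in range(m)] for j in range(n)]  # columns A_j
    Binv = [[Fraction(int(i == k)) for k in range(m)] for i in range(m)]
    basis = list(basis)
    while True:
        # Step 1: x_B = A_B^{-1} b.
        xB = [sum(Binv[i][k] * b[k] for k in range(m)) for i in range(m)]
        # Step 2: y^T = c_B^T A_B^{-1}, then reduced costs c_j - y^T A_j.
        y = [sum(c[basis[i]] * Binv[i][k] for i in range(m)) for k in range(m)]
        reduced = [c[j] - sum(y[k] * cols[j][k] for k in range(m))
                   for j in range(n)]
        # Step 3: entering variable = first column with negative reduced cost.
        j = next((q for q in range(n) if reduced[q] < 0), None)
        if j is None:
            x = [Fraction(0)] * n
            for i, k in enumerate(basis):
                x[k] = xB[i]
            z = sum(c[basis[i]] * xB[i] for i in range(m))
            return z, x
        # Step 4: d_B = -A_B^{-1} A_j.
        d = [-sum(Binv[i][k] * cols[j][k] for k in range(m)) for i in range(m)]
        # Step 5: ratio test over the entries with d < 0.
        rows = [i for i in range(m) if d[i] < 0]
        if not rows:
            raise ValueError("LP is unbounded")
        r = min(rows, key=lambda i: xB[i] / -d[i])
        # Step 6: row operations that turn A_B^{-1} A_j into the r-th unit vector.
        pivot = -d[r]
        Binv[r] = [v / pivot for v in Binv[r]]
        for i in range(m):
            if i != r and d[i] != 0:
                Binv[i] = [Binv[i][k] + d[i] * Binv[r][k] for k in range(m)]
        basis[r] = j

# Example: minimize -x1 - x2 s.t. x1 + 2*x2 + s1 = 4, 3*x1 + x2 + s2 = 6.
print(revised_simplex([[1, 2, 1, 0], [3, 1, 0, 1]], [4, 6], [-1, -1, 0, 0], [2, 3]))
```

Only the $m \times m$ inverse is modified in step 6; the constraint matrix itself is never changed, which is what allows it to stay in its sparse representation.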

6 Sparse Revised Simplex Method

The inverse of a sparse matrix tends to be dense. Can we avoid storing the inverse of $A_B$? Is there a better way to maintain the inverse of $A_B$?

An LU-decomposition of a matrix $A_B$ is a pair $(L, U)$ of matrices such that $A_B = LU$, $L$ is a lower triangular matrix (all entries above the diagonal are zero), and $U$ is an upper triangular matrix (all entries below the diagonal are zero). The LU-decomposition is unique if we require, in addition, that $L$ has ones on the diagonal. Here are some useful facts about LU-decompositions.

- LU-decompositions can be computed by Gaussian elimination.

- Sparse matrices frequently have sparse LU-decompositions. This might require permuting the rows and columns of $A_B$. Observe that we may permute the rows and columns of $A_B$: permuting columns corresponds to renumbering variables and permuting rows corresponds to renumbering constraints.

  Consider the selection of the first pivot. By interchanging columns and rows, we may move any element of the matrix to the upper left corner. There are two considerations in choosing the element.

  - The element should not be too small (certainly nonzero) for the sake of numerical stability. Recall that one is dividing by the pivot element in Gaussian elimination.

  - We subtract a multiple of the row containing the pivot from any row where the pivot column contains a nonzero element. Thus the number of nonzero elements created by the step is at most the product of the number of nonzero elements in the row and column containing the pivot. This number should be small; this rule is known as the Markowitz criterion.

- Given an LU-decomposition $A_B = LU$ of a matrix $A_B$, it is easy to solve a linear system $A_B x = b$ in time $O(\mathrm{nz}(L) + \mathrm{nz}(U))$. We have $A_B x = (LU)x = L(Ux) = b$. We therefore first solve $Ly = b$ and then $Ux = y$. Linear systems with a lower or upper triangular matrix are easily solved by forward or backward substitution, respectively. For example, in order to solve $Ly = b$, we first compute the first entry of $y$ using the first equation, substitute this value into the second equation and solve for the second variable, and so on. The time for this is proportional to the number of nonzero entries of $L$.
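The two triangular solves from the last item can be sketched as follows. The factors are stored as row lists of `(column index, value)` pairs so that the work is proportional to the number of stored entries; the function names and the small example factors are assumptions made for illustration, and `Fraction` is used to keep the arithmetic exact.

```python
from fractions import Fraction

def forward_substitution(L_rows, b):
    """Solve L y = b for lower triangular L.

    L_rows[i] lists the nonzero entries (j, l_ij) of row i, all with j <= i.
    """
    y = [Fraction(0)] * len(b)
    for i, row in enumerate(L_rows):
        s, diag = Fraction(b[i]), None
        for j, v in row:
            if j == i:
                diag = v
            else:
                s -= v * y[j]      # y[j] is already known because j < i
        y[i] = s / diag
    return y

def backward_substitution(U_rows, y):
    """Solve U x = y for upper triangular U, stored like L_rows (all j >= i)."""
    x = [Fraction(0)] * len(y)
    for i in reversed(range(len(U_rows))):
        s, diag = Fraction(y[i]), None
        for j, v in U_rows[i]:
            if j == i:
                diag = v
            else:
                s -= v * x[j]      # x[j] is already known because j > i
        x[i] = s / diag
    return x

def lu_solve(L_rows, U_rows, b):
    """Solve (LU) x = b by solving L y = b and then U x = y."""
    return backward_substitution(U_rows, forward_substitution(L_rows, b))

# L = [[1,0,0],[2,1,0],[0,3,1]] and U = [[2,1,0],[0,1,4],[0,0,3]].
L_rows = [[(0, 1)], [(0, 2), (1, 1)], [(1, 3), (2, 1)]]
U_rows = [[(0, 2), (1, 1)], [(1, 1), (2, 4)], [(2, 3)]]
print(lu_solve(L_rows, U_rows, [4, 22, 51]))
```

For this right-hand side the solution is $x = (1, 2, 3)$.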

Exercise 1 Show that the inverse of an upper triangular matrix is an upper triangular matrix and can be computed in time proportional to the product of the dimension of the matrix and the number of nonzero entries of the matrix. Also, show that the product of two upper triangular matrices is upper triangular.

The idea is now to use the LU-decomposition of $A_B$ wherever we used $A_B^{-1}$ in the preceding section, i.e., instead of computing $A_B^{-1} b$, we solve $LU x_B = b$ by first computing $y$ with $Ly = b$ and then $x_B$ with $U x_B = y$. Please convince yourself that steps 1) to 5) are readily performed. We still need to discuss what we do in step 6). Step 6) asks us to update the inverse of the basis matrix. We now need to update the LU-decomposition.
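As one instance of "readily performed", consider step 2, which needs $y^T = c_B^T A_B^{-1}$. With $A_B = LU$ this means solving $U^T L^T y = c_B$: first $U^T w = c_B$ by forward substitution ($U^T$ is lower triangular), then $L^T y = w$ by backward substitution. The sketch below uses dense lists for brevity; the function name `solve_yT_LU` and the example data are illustrative assumptions.

```python
from fractions import Fraction

def solve_yT_LU(L, U, cB):
    """Return y with y^T (L U) = cB^T, i.e. U^T L^T y = cB."""
    m = len(cB)
    # U^T is lower triangular: forward substitution for w in U^T w = cB.
    w = [Fraction(0)] * m
    for i in range(m):
        s = cB[i] - sum(U[k][i] * w[k] for k in range(i))
        w[i] = Fraction(s) / U[i][i]
    # L^T is upper triangular: backward substitution for y in L^T y = w.
    y = [Fraction(0)] * m
    for i in reversed(range(m)):
        s = w[i] - sum(L[k][i] * y[k] for k in range(i + 1, m))
        y[i] = s / L[i][i]
    return y

L = [[1, 0, 0], [2, 1, 0], [0, 3, 1]]
U = [[2, 1, 0], [0, 1, 4], [0, 0, 3]]
# Here LU = [[2,1,0],[4,3,4],[0,3,15]] and (1,-1,2) LU = (-2, 4, 26).
print(solve_yT_LU(L, U, [-2, 4, 26]))
```

No transposed copy of the factors is needed; the loops simply read column $i$ of $U$ and of $L$ instead of row $i$.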

The new basis matrix $\bar{A}_B$ is obtained from $A_B$ by replacing column $b_i$ of $A_B$ by the $j$-th column of $A$. Observe that $A_B e_{b_i}$, where $e_{b_i}$ is the $b_i$-th unit vector, yields the $b_i$-th column of $A_B$ and hence $A_B e_{b_i} e_{b_i}^T$ is an $m \times m$ matrix whose $b_i$-th column is equal to $A_B e_{b_i}$ and which has zeros everywhere else. Similarly, $A_j e_{b_i}^T$ is a matrix with $A_j$ in column $b_i$ and zeros everywhere else. Thus

$$\bar{A}_B = A_B - A_B e_{b_i} e_{b_i}^T + A_j e_{b_i}^T = A_B + (A_j - A_B e_{b_i}) e_{b_i}^T.$$

Multiplying by $L^{-1}$ from the left yields

$$L^{-1} \bar{A}_B = U + (L^{-1} A_j - U e_{b_i}) e_{b_i}^T =: R.$$

The right-hand side $R$ is the matrix $U$ with its $b_i$-th column replaced by the vector $L^{-1} A_j$; in general, this is a sparse vector, because $L^{-1}$ is sparse and $A_j$ is sparse.
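The identity can be checked numerically on a small example. The sketch below builds $A_B = LU$ from hand-picked factors, replaces one column, and verifies that $L$ times "$U$ with that column replaced by $L^{-1} A_j$" reproduces the new basis matrix. All names and numbers are illustrative; `p` denotes the position of the replaced column.

```python
from fractions import Fraction

def matmul(X, Y):
    """Dense matrix product of two lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def forward_substitution(L, a):
    """Solve L v = a for a dense lower triangular L."""
    v = []
    for i in range(len(a)):
        s = a[i] - sum(L[i][k] * v[k] for k in range(i))
        v.append(Fraction(s) / L[i][i])
    return v

def replace_column(M, p, col):
    """Return a copy of M whose p-th column is col."""
    return [[col[i] if j == p else M[i][j] for j in range(len(M[0]))]
            for i in range(len(M))]

L = [[1, 0, 0], [2, 1, 0], [0, 3, 1]]
U = [[2, 1, 0], [0, 1, 4], [0, 0, 3]]
A_B = matmul(L, U)
A_j, p = [1, 2, 3], 0                      # entering column and its position

new_A_B = replace_column(A_B, p, A_j)      # the new basis matrix
R = replace_column(U, p, forward_substitution(L, A_j))
assert matmul(L, R) == new_A_B             # L R equals the new basis matrix
print(R)
```

In this example $R$ is upper triangular except for the replaced column, which carries a nonzero entry below the diagonal.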

We next compute an LU-factorization of $R$, say $R = \bar{L} \bar{U}$. This is not a general LU-factorization since $R$ differs from an upper triangular matrix in only one column. We refer the reader to [SS93] and its references for a detailed discussion on how to compute the LU-decomposition of a nearly upper triangular matrix efficiently.


We can now write

$$L^{-1} \bar{A}_B = R = \bar{L} \bar{U}$$

and hence have

$$\bar{A}_B = L \bar{L} \bar{U}.$$

Since the product of two lower triangular matrices is a lower triangular matrix, we now have an LU-decomposition of $\bar{A}_B$.

Actually, it is better to keep the factorization $L \bar{L} \bar{U}$ and to use $\bar{A}_B^{-1} = \bar{U}^{-1} \bar{L}^{-1} L^{-1}$. In this way, the factorization of the basis matrix grows by one factor in every iteration. Accordingly, the costs of steps 1) to 5) grow in each iteration. When the costs of these steps become too large, one refactors $A_B$ from scratch.

7 Numerical Stability

LP-solvers use floating point arithmetic and hence the arithmetic incurs round-off error. In a row operation, one first scales one of the rows (by dividing by the pivot element) and then subtracts a multiple of this row from another row. Divisions by small elements are numerically unstable and hence to be avoided.

An Anecdote: KM taught this course in the year 2000. As part of the course, he organized a competition. He asked the students to compute the optimal solution for an NP-complete cutting-stock problem.

He also took part in the competition. He used a heuristic to produce feasible solutions and used linear programming to compute lower bounds. He found a solution of objective value 213, and the LP proved a lower bound of 212.000001 (KM does not recall the true numbers). Since the solution had to be integral, he concluded that he had found the optimum and stopped his heuristic. However, in class a student produced an integral solution of value 212. When KM looked deeper, he found that the value 212.000001 was larger than 212 only because of round-off errors.

This experience motivated the research reported on in the next section.

8 Exact Solvers

How can one avoid the pitfalls of approximate arithmetic? The answer is easy: use exact arithmetic. Under the assumption that all coefficients of the problem are integral (or, more generally, rational), rational arithmetic suffices. The drawback of this approach is that rational arithmetic is much slower than floating point arithmetic and hence only small problems can be solved.
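The effect of round-off on a bound that is subsequently rounded up to an integer can be reproduced in miniature. The numbers below are not taken from any LP; they are a made-up illustration in which the same quantity, $(1/10 + 2/10) \cdot 10 = 3$, is computed once in floating point and once with Python's exact `Fraction` type.

```python
import math
from fractions import Fraction

# The same quantity, (1/10 + 2/10) * 10 = 3, computed in two arithmetics.
float_bound = (0.1 + 0.2) * 10
exact_bound = (Fraction(1, 10) + Fraction(2, 10)) * 10

# A lower bound on an integral optimum may be rounded up to the next integer.
print(float_bound, math.ceil(float_bound))   # float is slightly above 3, rounds up to 4
print(exact_bound, math.ceil(exact_bound))   # exact arithmetic gives 3
```

A tiny positive round-off error is enough to push the rounded bound one unit too high, while the rational computation is unaffected.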

The paper [DFK+03] pioneered a more clever approach. The authors argued that inexact solvers usually find an optimal or a near-optimal basis. So they took the basis returned by the inexact solver as the starting basis for an exact solver implemented in rational arithmetic. For a collection of medium-size LPs, they found that CPLEX found the optimal basis in all but two cases. In the cases where it did not find the optimum, a small number of pivots sufficed to reach the optimum.

[ACDE07] took this a step further. They used floating point arithmetic even more aggressively. Instead of switching to rational arithmetic, they switched to higher-precision floating point arithmetic for computing the LU-decomposition of the constraint matrix. Then they rounded the floating point numbers computed in this way to rational numbers (with small denominators) and tried to verify that the decomposition is correct for the rational numbers obtained in this way. This approach gave them a tremendous speed-up over [DFK+03].

Converting Reals to Rationals: The standard method for finding a rational number with small denominator close to a real number is to compute the continued fraction expansion of the real number; see the lecture notes of Computational Geometry and Geometric Computing (winter term 09/10). For example,

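$$
\begin{aligned}
0.6705 &= 0 + \cfrac{1}{1/0.6705} = 0 + \cfrac{1}{10000/6705} = 0 + \cfrac{1}{1 + 3295/6705} \\
&= 0 + \cfrac{1}{1 + \cfrac{1}{6705/3295}} = 0 + \cfrac{1}{1 + \cfrac{1}{2 + 115/3295}} \\
&= 0 + \cfrac{1}{1 + \cfrac{1}{2 + \cfrac{1}{3295/115}}} = 0 + \cfrac{1}{1 + \cfrac{1}{2 + \cfrac{1}{28 + 75/115}}} \\
&= 0 + \cfrac{1}{1 + \cfrac{1}{2 + \cfrac{1}{28 + \cfrac{1}{115/75}}}}
\end{aligned}
$$

In this way, we obtain the following rational approximations with small denominator:

$$
0, \qquad 0 + \frac{1}{1} = 1, \qquad 0 + \cfrac{1}{1 + \cfrac{1}{2}} = \frac{2}{3}, \qquad 0 + \cfrac{1}{1 + \cfrac{1}{2 + \cfrac{1}{28}}} = \frac{57}{85}.
$$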
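The expansion above can be automated. The following sketch computes the successive approximations (convergents) with the standard recurrence $p_k = a_k p_{k-1} + p_{k-2}$, $q_k = a_k q_{k-1} + q_{k-2}$; it assumes the input is already given as an exact `Fraction`, and the function name `convergents` is made up for this illustration.

```python
from fractions import Fraction

def convergents(x, max_terms):
    """Return the first max_terms continued-fraction convergents of x."""
    result = []
    p_prev, p = 0, 1          # numerators p_{k-2}, p_{k-1}
    q_prev, q = 1, 0          # denominators q_{k-2}, q_{k-1}
    for _ in range(max_terms):
        a = x.numerator // x.denominator      # integer part of x
        p_prev, p = p, a * p + p_prev
        q_prev, q = q, a * q + q_prev
        result.append(Fraction(p, q))
        remainder = x - a
        if remainder == 0:                    # expansion terminates
            break
        x = 1 / remainder
    return result

print(convergents(Fraction(6705, 10000), 4))
```

For 0.6705 the first four convergents are 0, 1, 2/3 and 57/85, matching the approximations derived by hand.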

References

[ACDE07] D. Applegate, W. Cook, S. Dash, and D. Espinoza. Exact solution to linear programming problems. Operations Research Letters, 35:693–699, 2007.

[DFK+03] M. Dhiflaoui, S. Funke, C. Kwappik, K. Mehlhorn, M. Seel, E. Schömer, R. Schulte, and D. Weber. Certifying and Repairing Solutions to Large LPs, How Good are LP-solvers? In SODA, pages 255–256, 2003.

[SS93] L. Suhl and U. Suhl. A fast LU update for linear programming. Annals of Operations Research, 43:33–47, 1993.


- Who's Afraid of AI?: Fear and Promise in the Age of Thinking MachinesDari EverandWho's Afraid of AI?: Fear and Promise in the Age of Thinking MachinesPenilaian: 4.5 dari 5 bintang4.5/5 (13)

- Dark Data: Why What You Don’t Know MattersDari EverandDark Data: Why What You Don’t Know MattersPenilaian: 4.5 dari 5 bintang4.5/5 (3)

- Artificial Intelligence: The Insights You Need from Harvard Business ReviewDari EverandArtificial Intelligence: The Insights You Need from Harvard Business ReviewPenilaian: 4.5 dari 5 bintang4.5/5 (104)

- The Internet Con: How to Seize the Means of ComputationDari EverandThe Internet Con: How to Seize the Means of ComputationPenilaian: 5 dari 5 bintang5/5 (6)

- Data-ism: The Revolution Transforming Decision Making, Consumer Behavior, and Almost Everything ElseDari EverandData-ism: The Revolution Transforming Decision Making, Consumer Behavior, and Almost Everything ElsePenilaian: 3.5 dari 5 bintang3.5/5 (12)

- Power and Prediction: The Disruptive Economics of Artificial IntelligenceDari EverandPower and Prediction: The Disruptive Economics of Artificial IntelligencePenilaian: 4.5 dari 5 bintang4.5/5 (38)

- Demystifying Prompt Engineering: AI Prompts at Your Fingertips (A Step-By-Step Guide)Dari EverandDemystifying Prompt Engineering: AI Prompts at Your Fingertips (A Step-By-Step Guide)Penilaian: 4 dari 5 bintang4/5 (1)

- The AI Advantage: How to Put the Artificial Intelligence Revolution to WorkDari EverandThe AI Advantage: How to Put the Artificial Intelligence Revolution to WorkPenilaian: 4 dari 5 bintang4/5 (7)