Polynomial Modeling For Timevarying Systems Based On A Particle Swarm Optimization Algorithm

Diunggah oleh

prawinpsgJudul Asli

Hak Cipta

Format Tersedia

Bagikan dokumen Ini

Apakah menurut Anda dokumen ini bermanfaat?

Apakah konten ini tidak pantas?

Laporkan Dokumen IniHak Cipta:

Format Tersedia

Polynomial Modeling For Timevarying Systems Based On A Particle Swarm Optimization Algorithm

Diunggah oleh

prawinpsgHak Cipta:

Format Tersedia

NOTICE: this is the authors version of a work that was accepted for

publication in Information Sciences. Changes resulting from the

publishing process, such as peer review, editing, corrections, structural

formatting, and other quality control mechanisms may not be reflected in

this document. Changes may have been made to this work since it was

submitted for publication. A definitive version was subsequently

published in Information Sciences, Vol.181, no.9 (May 2011).

DOI: 10.1016/j.ins.2011.01.006

Polynomial modeling for time-varying systems based on a particle

swarm optimization algorithm

1

*

Kit Yan Chan,

1

Dillon S. Tharam

and

2

Che Kit Kwong

1

Digital Ecosystem and Business Intelligence Institute, Curtin University of Technology, WA 6102,

Australia

2

Department of Industrial and Systems Engineering, The Hong Kong Polytechnic University, Hung Hom,

Hong Kong

Abstract In this paper, an effective particle swarm optimization (PSO) is proposed for

polynomial models for time varying systems. The basic operations of the proposed PSO

are similar to those of the classical PSO except that elements of particles represent

arithmetic operations and variables of time-varying models. The performance of the

proposed PSO is evaluated by polynomial modeling based on various sets of time-

invariant and time-varying data. Results of polynomial modeling in time-varying systems

show that the proposed PSO outperforms commonly used modeling methods which have

been developed for solving dynamic optimization problems including genetic

programming (GP) and dynamic GP. An analysis of the diversity of individuals of

populations in the proposed PSO and GP reveals why the proposed PSO obtains better

results than those obtained by GP.

Keywords Particle swarm optimization, time-varying systems, polynomial modeling,

genetic programming

*

Kit Yan Chan is the corresponding author. His email address is Kit.Chan@curtin.edu.au

1. Introduction

Genetic programming (GP) [25, 26] is a commonly used evolutionary computation

method which is used to generate polynomial models for various systems such as

chemical plants [38], time series systems [21], nonlinear dynamic systems [56], object

classification systems [1, 65], machine learning systems [27], feature selection systems

[43], object detection systems [37], speech recognition systems [11], control systems [5]

and mechatronic systems [61]. The GP starts by creating a random initial population of

individuals, each of which represents the structure of a polynomial model. Evolution of

individuals takes place by mutation and crossover over generations, and individuals with

high goodness-of-fit are selected as survivors in the next generation. The evolutionary

process continues until the diversity of individuals of a population saturates to a low level

or no progress can be found.

Observations reveal that polynomial models represented by individuals in the GP

are distinct from each other in early generations. As the GP is progressing, polynomial

models represented by individuals converge to a form, which achieves relatively higher

goodness-of-fit in the population. Vaessens et al. [59] and Reeves [55] put this

population-based optimization method into the context of local searches. Maintaining

population diversity in GP is a key to preventing premature convergence and stagnation

in local optima [17, 40]. Using GP, it is difficult to develop optimal polynomial models

for time-varying systems whose structures or coefficients vary over time while the

diversity of individuals in a population is low. Time-varying characteristics can

commonly be found in many industrial systems [6, 22, 41, 44, 57, 66, 36, 34]. To develop

models for time-varying environments, Wagner et al. [60] developed a GP approach in

which a varying window for capturing significant time series is proposed to generate time

series models based on time series data. This approach cannot be applied for generating

models for time-varying systems if the nature of the data is not all in time series formats.

While mechanisms implemented on evolutionary algorithms have been well studied for

solving various dynamic optimization problems [64], those implemented in GP have not

been thoroughly studied for the development of polynomial models in time-varying

environments. It is essential that an effective algorithm be developed for generating

models that deal with time-varying characteristics, given their occurrence in many

industrial systems.

Another more recent population based optimization method, particle swarm

optimization (PSO) [15], inspires the movements of a population of individuals seeking

optimal solutions. The movement of each individual is based on its best position recorded

so far from previous generations and the position of the best individual among all the

individuals [28, 29]. The diversity of the individuals can be maintained by selecting PSO

parameters which provide a balance between global exploration, based on the position of

the best individual in the swarm, and local exploration based on each individuals best

previous position. Each individual can move gradually toward both its best position

recorded to date and the position of the best individual in the population. Kennedy and

Eberhart [29] demonstrated that PSO can solve many difficult optimization problems

with satisfactory results. PSO outperforms evolutionary computation methods for solving

various static optimization problems [13, 31, 53, 62], and various dynamic optimization

problems [2, 9, 8, 52] in which the optima or landscapes of the problems vary over time.

Although PSO can obtain satisfactory results when solving various dynamic optimization

problems, PSO has not currently been used on polynomial modelling for time-varying

systems. The development of PSO for polynomial modelling for systems with time-

varying characteristics is a new research area.

In this paper, a PSO is proposed for the development of polynomial models for

time-varying systems in which the system coefficients vary over time. The basic

operations of the proposed PSO are identical to those of the classical PSO [12] except

that the elements of individuals are represented by arithmetic operations and system

variables of polynomial models. The representation of elements takes the form of

grammatical swarm [47, 48] or grammatical evolution [46]. The performance of the

proposed PSO in the present paper is evaluated by developing models based on several

sets of time-varying data which are generated based on time-varying functions with

different time varying characteristics. In order to provide a comprehensive evaluation, a

comparison is conducted of the results obtained by the proposed PSO with:

(a) classical GP [46] - in which the representation of individuals of population is

identical to the one used in the proposed PSO;

(b) dynamic GP - which is integrated with a recent mechanism [63] for solving

dynamic optimization problems;

(c) dynamic PSO - which is integrated with a recent mechanism [2] for dynamic

optimization problems.

Even if additional computational effort is used in the dynamic PSO to maintain

the diversity of individuals, no significant difference in diversity can be found between

the proposed PSO and the dynamic PSO. Compared with the two GPs, the results indicate

that the proposed PSO outperforms both classical GP and dynamic GP in developing

polynomial models for systems with both time-invariant data and time-varying data. The

results can be explained by the diversity of individuals in the proposed PSO, which can

be maintained in both early and later generations. The individuals of the proposed PSO

continue to explore the solution spaces over the generations. In contrast the individuals of

both the GP methods start to converge and get stuck on a solution after early generations.

This paper is organized as follows. Section 2 presents the operations of the

proposed PSO. The experimental set-up for testing the proposed PSO, and the data sets

used for evaluating the proposed PSO are presented in Sections 3.1 and 3.2 respectively.

The experimental results and the analysis of the experimental results are presented in

Section 3.3 and 3.4 respectively. Finally, conclusions and suggestions for further work

are given in Section 4.

2. Particle swarm optimization

A time-varying system can be formulated as follows:

y = f

t

(x

1

,x

2

,x

m

) (1)

where y is the output response, x

j

, j=1,2,m, is the j-th variable of the time-varying

system, and f

t

is the functional relationship of the time-varying system at time t. Based

on a set of data which represents relations between the output response y and the

variables, x

1

, x

2

, ..., x

m

at time t, the time-varying system

t

f in (1) can be generated in a

polynomial form with constant coefficients at time t. The data set at time t is defined by

( ) ( ) ( ) ( ) { }

D

N

i

D D

i t y i t t

1

, , ,

=

= x D , where the corresponding values of the i-th data at time t is

( ) ( ) ( ) ( ) ( )

m D

m

D D D

R i t x i t x i t x i t e = , ,..., , , , ,

2 1

x and the corresponding value of the response

output of the i-th data at time t is ( ) R i t y

D

e , . Where ( ) t D is available,

t

f can be

generated as the high-order high-dimensional Kolmogorov-Gabor polynomial in

expression (2):

( ) ( ) ( ) ( ) ( )

[

= = = = = = =

+ + + + =

m

i

i m

m

i

m

i

m

i

i i i i i i

m

i

m

i

i i i i

m

i

i i

m

m

x t a x x x t a x x t a x t a t a y

1

... 123

1 1 1 1 1 1

0

...

1 2 3

3 2 1 3 2 1

1 2

2 1 2 1

1

1 1

(2)

where ( ) t a

0

, ( ) t a

1

, ( ) t a

2

, ...., ( ) t a

m

, ( ) t a

11

, ( ) t a

12

, ..., ( ) t a

mm

,... and ( ) t a

m mm...

are the

polynomial coefficients at time t. Equation (2) is a universal format of the polynomial

model if the number of terms in equation (2) is large enough [18]. In this paper, a PSO is

proposed in order to generate the time-varying model at time t based on equation (2),

using an available set of data at time t. Based on [12], the proposed PSO uses a number of

individuals, which constitute a swarm, and each individual represents a time-varying

model. Each individual traverses the search space to trace the polynomial model of the

time-varying system whose system coefficients vary over time.

In the PSO, each individual is represented by the system variables (x

1

, x

2

, , and

x

m

) and the arithmetic operations (+, - and *) of the system model as defined in (2).

m is the number of variables of the system model. A similar mechanism was first

proposed by Kennedy and Eberhart [30] for representing discrete binary variables, and

has been applied to the PSO for solving flowshop scheduling problems [31, 51, 58]. The

i-th individual at generation g is defined as

( ) ,1 ,2 ,

, ,...,

p

g g g g

i i i i N

P p p p = ; where m N

p

> ;

pop

N i ,..., 2 , 1 = ;

pop

N is the number of individuals of the swarm;

p

N

is the number of

elements of the individual; and

pop

N is an odd number;

,

g

i k

p is the k-th element of the i-th

individual at the g-th generation, and

,

g

i k

p is in the range between 0 to 1 i.e. { }

,

0...1

g

i k

p e .

If the value of

p

N

is large, a larger number of terms can be generated in the model, and

the model can better fit the data which is used for model development. However, a model

may contain too many unnecessary and complex terms. A complex, over-parameterized

model with a large number of parametric terms reduces the transparency and ease of

interpretation of the model leading to overfitting problems. To prevent the PSO from

generating models which are too complex, the value of

p

N

has to be selected carefully.

The value of

p

N

can be determined based on the trial and error method, and the value of

p

N

cannot be set too high, otherwise redundant terms can be produced. If the number of

variables of the system model is 4,

p

N

can be initially set as 10. If the modelling error

obtained by the PSO is not satisfactory, the value of

p

N

can be increased until a

satisfactory modelling error is achieved. If the modelling error obtained by the PSO is

satisfactory, the value of

p

N

can be decreased until just before an unsatisfactory

modelling error is achieved.

The elements in odd numbers (i.e.

,1 ,3 ,5

, , ,...

g g g

i i i

p p p ) are used to represent the

system variables, and the elements in even numbers (i.e.

,2 ,4 ,6

, , ,...

g g g

i i i

p p p ) are used to

represent the arithmetic operations. For odd k, if

,

1

0

1

g

i k

p

m

< s

+

, no system variable is

represented by the element

,

g

i k

p . If

,

1

1 1

g

i k

l l

p

m m

+

< s

+ +

with l>0,

,

g

i k

p represents the l-th

system variable,

l

x . System variables represented by the individual are summarized in

Table 1.

Table 1: Representation of system variables

The k-

th

element

,

1

0

1

g

i k

p

m

< s

+

,

1 2

1 1

g

i k

p

m m

< s

+ +

,

2 3

1 1

g

i k

p

m m

< s

+ +

.

,

1

1

g

i k

m

p

m

< s

+

The

system

variable

No system

variable

1

x

2

x .

m

x

*

k is an odd number

In the polynomial model, +, - and * are the only three arithmetic operations

considered. For even k, if

,

1

0

3

g

i k

p < s ,

,

1 2

3 3

g

i k

p < s and

,

2

1

3

g

i k

p < s , the element

,

g

i k

p

represents the arithmetic operations +, - and * respectively. Arithmetic operations

represented by the individual are summarized in Table 2.

Table 2: Representation of arithmetic operations

The k-th

element

,

1

0

3

g

i k

p < s

,

1 2

3 3

g

i k

p < s

,

2

1

3

g

i k

p < s

The arithmetic

operations

+ - *

*

k is an even number

For example, the i-th individual at generation g with 11 elements is used to

represent a polynomial model of the time-varying system at time t, which consists of 4

system variables (i.e. x

1

, x

2

, x

3

and x

4

):

g

i

p

1 ,

g

i

p

2 ,

g

i

p

3 ,

g

i

p

4 ,

g

i

p

5 ,

g

i

p

6 ,

g

i

p

7 ,

g

i

p

8 ,

g

i

p

9 ,

g

i

p

10 ,

g

i

p

11 ,

0.18 0.41 0.94 0.92 0.41 0.89 0.06 0.35 0.81 0.01 0.74

The elements in the individual are within the following ranges:

g

i

p

1 ,

g

i

p

2 ,

g

i

p

3 ,

g

i

p

4 ,

g

i

p

5 ,

g

i

p

6 ,

g

i

p

7 ,

g

i

p

8 ,

g

i

p

9 ,

g

i

p

10 ,

g

i

p

11 ,

0<

0.18

1/5

1/3<

0.41

2/3

4/5<

0.94

5/5

2/3<

0.92

3/3

2/5<

0.41

3/5

0<

0.08

1/3

0<

0.06

1/5

0<

0.35

1/3

4/5<

0.81

5/5

0<

0.01

1/3

3/5<

0.74

4/5

Therefore, the model is represented in the following form:

g

i

p

1 ,

g

i

p

2 ,

g

i

p

3 ,

g

i

p

4 ,

g

i

p

5 ,

g

i

p

6 ,

g

i

p

7 ,

g

i

p

8 ,

g

i

p

9 ,

g

i

p

10 ,

g

i

p

11 ,

0 -

4

x *

2

x + 0 +

4

x +

3

x

which is equivalent to:

( )

3 4 2 4

0 0 x x x x

g

i

+ + + = x

or

( )

3 4 2 4

x x x x

g

i

+ + = x .

The PSO is used only to find the structure of the polynomial and not the

coefficients. The system coefficients a

0

(t), a

1

(t), a

2

(t) and a

3

(t) are determined after the

structure of the time-varying model at time t is established, where the number of

coefficients is 4. The completed time-varying model at time t is represented as follows:

( ) = x

g

i

f a

0

(t) a

1

(t)x

4

x

2

+ a

2

(t)x

4

+ a

3

(t)x

3

In this research, the system coefficients a

0

(t), a

1

(t), a

2

(t) and a

3

(t) are determined

by the orthogonal least squares algorithm (OLSA) [2, 5], which has been demonstrated to

be effective in determining system coefficients in polynomial models [39]. Details of the

orthogonal least squares algorithm can be found in [3, 7].

The polynomial model represented by each individual is evaluated based on the

root mean absolute error (RMAE). This reflects the differences between the predictions

by the model of the time-varying system at time t and the actual values of the data sets at

time t. The RMAE of the i-th individual at the g-th generation RMAE

i

g

can be calculated

based on (3).

( ) ( ) ( )

( )

=

D

N

j

D

D g

i

D

D

g

i

j t y

j t f j t y

N

RMAE

1

,

, , 1

% 100

x

, (3)

where

g

i

f is the polynomial model represented by the i-th individual

g

i

P at the g-th

generation, ( ) ( ) ( )

, , ,

D D

t j y t j x is the j-th data set at time t, and N

D

is the number of

training data sets used for developing the polynomial model of the time-varying system.

The velocity

,

g

i k

v (corresponding to the flight velocity in a search space) and the k-

th element of the i-th individual at the g-th generation

,

g

i k

p are calculated by expressions

(4) and (5) of the PSO [10] respectively:

( ) ( ) ( ) ( ) ( )

1

, 2

1

, , 1

1

, ,

+ + =

g

k i k

g

k i k i

g

k i

g

k i

p gbest rand p pbest rand v K v (4)

1

, , ,

g g g

i k i k i k

p p v

= + (5)

where

,1 ,2 ,

, , ...

p

i i i i N

pbest pbest pbest pbest

(

=

,

1 2

, , ...

p

N

gbest gbest gbest gbest

(

=

,

k = 1,2, , N

p

,

The best previous position so far of an individual is recorded from the previous

generation and is represented as

i

pbest ; the position of the best individual among all the

individuals is represented as gbest; rand() returns a uniform random number in the range

of [0,1]; w is an inertia weight factor;

1

and

2

are acceleration constants [13]; K is a

constriction factor derived from the stability analysis of equation (4) to ensure that the

system converges, but not prematurely. K is a function of

1

and

2

as reflected in the

following equation:

4 2

2

2

= K (6)

where

2 1

+ = and 4 > .

The proposed PSO utilizes pbest

i

and gbest to modify the current search point to

prevent the individuals from moving in the same direction, but to converge gradually

toward pbest

i

and gbest. g is the current generation number, G is the total number of

generations [14].

In (4), the particle velocity is limited by a maximum value

max

v . The parameter

max

v determines the resolution with which regions are to be searched between the present

position and the target position. This enhances the local exploration of a search process.

If

max

v is too high, individuals might fly past good solutions. If

max

v is too small,

individuals may not explore sufficiently beyond local solutions.

max

v was often set as

10%20% of the dynamic range of the element on each dimension. The pseudo code of

the proposed PSO is presented in Figure 1.

Figure 1: Pseudo code of the PSO

3. Polynomial modelling

In this section, the effectiveness of the PSO in modeling time-invariant or time-varying

systems is evaluated based on both the time-invariant data and time-varying data. The

PSO and the other commonly used, but recently developed, algorithms are compared.

3.1 Time-invariant and time-varying data

For time-invariant data, five sets of data, namely static data,

stat

Sph

H ,

stat

Ros

H ,

stat

Ras

H ,

stat

Gri

H and

stat

Ack

H , were generated based on each of the five benchmark functions, Sphere, Griewank,

Rastrigin, Rosenbrock and Ackley (see Table 3) by randomly choosing 100 numbers X

i

in

each of the predefined intervals | |

n

X X

max min

, . The 100 corresponding output responses Y

i

{

g0 // g is the generation number

Initialize a set of individuals

g

N

g g

pop

P P P ,..., ,

2 1

//

|

.

|

\

|

=

g

N i

g

i

g

i

g

i

p

p p p P

, 2 , 1 ,

,..., ,

Evaluate each individual

g

i

P based on (3)

while (g<G) do // G is the total number of generations

{

gg+1

Update the velocity

g

k i

v

,

based on (4)

if

g

k i

v

,

>v

max

g

k i

v

,

= v

max

end

if

g

k i

v

,

<v

max

g

k i

v

,

= v

max

end

Update each element of each particle

g

k i

p

,

based on (5)

Evaluate each particle

g

i

P based on (3)

Update pbest

i

and gbest

}

}

are computed by the benchmark function Y

i

= F(X

i

) whose landscape and optimum are

static with respect to time. The dimension of each benchmark function is n=4. The Sphere

(

Sph

F ) and Rosenbrock (

Ros

F ) functions are unimodal (a single local and global optimum),

and the Griewank (

Gri

F ), Rastrigin (

Ras

F ), and Ackley (

Ack

F ) functions are multimodal

(several local optima).

Table 3: Benchmark functions and initialization areas

Benchmark functions Initialization areas

| |

n

X X

max min

,

Sphere (Sph): ( ) ( )

=

=

n

i

i Sph

x F

1

2

x | |

n

50 , 50

Rosenbrock (Ros): ( ) ( ) ( ) ( )

=

+

+ =

1

1

2

2

2

1

1 100

n

i

i i i Ros

x x x F x | |

n

30 , 30

Rastrigin (Ras): ( ) ( ) ( )

=

+ =

n

i

i i Ras

x x F

1

2

10 2 cos 10 t x | |

n

12 . 5 , 12 . 5

Griewank (Gri): ( ) 1 cos

4000

1

1 1

2

+ |

.

|

\

|

=

[

= =

n

i

i

n

i

i Gri

i

x

x F x

| |

n

600 , 600

Ackley (Ack):

( )

( ) ( ) e x n

x n F

n

i

i

n

i

i Ack

+ +

|

.

|

\

|

=

=

=

20 2 cos 1 exp

1 2 . 0 exp 20

1

1

2

t

x

| |

n

32 , 32

The time-varying data used in this study was generated by a set of time-varying

functions which were extended from the benchmark functions shown in Table 3. X

i

was

generated by randomly chosen numbers in the predefined interval of the benchmark

function. Y

i

was computed in each generation of the PSO run by the time-varying

function Y

i

= F(X

i

, t) whose landscape or optima varies over time t. The mechanisms for

the development of the time-varying functions were based on the dynamic properties of

step changes of optima, changes of locations of optima, and changes of the landscapes of

the benchmark functions [33, 42].

For those based on the mechanism of step changes of optima, the time-varying

data was generated based on each of the five benchmark functions,

Sph

F ,

Ros

F ,

Ras

F ,

Gri

F

and

Ack

F . The optimum position

+

x of each benchmark function is moved by adding or

subtracting random values in all dimensions by a severity parameter s, at every change of

the environment [32]. The choice of whether to add or subtract the severity parameter s

on the optimum

+

x is done randomly with an equal probability. The severity parameter s

is defined by:

( ) ( )

( ) ( )

< A

> A +

=

0 rand if

0 rand if

min max

min max

X X d

X X d

s , (7)

where d A determines the scale of the step change of optima.

For each test run, a different random seed was used. The severity was chosen

relative to the extension (in one dimension) of the initialization area of each benchmark

function. The optima of the benchmark functions were periodically changed in every 100

generations of the runs of the algorithms. For small step changes of optima, 5% = Ad is

selected, and five sets of time-varying data, (namely step move data with % 5 = Ad ,

5

H

Step

Sph

,

5

H

Step

Ros

,

5

H

Step

Ras

,

5

H

Step

Gri

and

5

H

Step

Ack

) were generated based on the five benchmark

functions,

Sph

F ,

Ros

F ,

Ras

F ,

Gri

F and

Ack

F , in Table 3 respectively. For large step changes of

optima, 10% = Ad is selected, and five sets of time varying data, (namely step move

data with % 5 = Ad ,

10

H

Step

Sph

,

10

H

Step

Ros

,

10

H

Step

Ras

,

10

H

Step

Gri

and

10

H

Step

Ack

) were generated

respectively.

For those based on the mechanism of changes of locations of optima [21], the

time-varying data was generated based on the following time-varying function:

( ) ( ) ( ) ( ) ( ) ( ) s x F t w x F t w x F

i i

+ = 0 . 1 (8)

where ( )

|

.

|

\

|

=

100

floor

100

1

t

G

t w , G t s s 0 ; G is the pre-defined number of generations;

( ) x F

i

is any of the five benchmark functions,

Sph

F ,

Ros

F ,

Ras

F ,

Gri

F and

Ack

F , in Table 3, s

is a randomly chosen constant which is 20% of the range of the benchmark function

( ) x F

i

. The optimum in ( ) x F shifts from the original optimum

+

x of ( ) x F

i

to the new

optimum ( ) s +

+

x in every 100 generations. Based on these time-varying functions, five

sets of time varying data, namely shift data,

shift

Sph

H ,

shift

Ros

H ,

shift

Ras

H ,

shift

Gri

H and

shift

Ack

H , were

generated based on the five benchmark functions,

Sph

F ,

Ros

F ,

Ras

F ,

Gri

F and

Ack

F in Table 3

respectively.

For those based on the mechanism of changes of the landscapes of the benchmark

functions [24], the time-varying data was generated based on the following time-varying

function, which is similar to equation (8):

( ) ( ) ( ) ( ) ( ) ( ) x F t w s x F t w x F

j i

+ = 0 . 1 (9)

where ( ) x F

i

is any of the five benchmark functions in Table 3, and ( ) x F

j

is another

benchmark function. The landscape of ( ) x F changes gradually from the landscape of

( ) x F

j

to the landscape of ( ) x F

i

in every 100 generations. s is a randomly chosen constant

which is 20% of the range of the benchmark function ( ) x F

i

. Based on the time-varying

function (10), four sets of time varying data, namely match data,

match

Ros Sph

H ,

match

Ras Sph

H ,

match

Gri Sph

H

and

match

Ack Sph

H , were generated in which

Sph

F is used as ( ) x F

j

, and

Ros

F ,

Ras

F ,

Gri

F or

Ack

F

functions is used as ( ) x F

i

. Another three sets of match data,

match

Ras Ros

H ,

match

Gri Ros

H and

match

Ack Ros

H ,

were generated in which ( )

Ros

F x

is used as ( ) x F

j

, and

Ras

F ,

Gri

F or

Ack

F is used as ( ) x F

i

.

A brief summary of all the 27 data sets is presented in Table 4, and the benchmark

functions, which can be used to generate the data sets, can be downloaded from the

following link (http://www.4shared.com/account/dir/G2J--2eV/sharing.html).

Table 4: Description of the data sets

Data sets Descriptions

Static data

stat

Sph

H ,

stat

Ros

H ,

stat

Ras

H ,

stat

Gri

H ,

stat

Ack

H

The static data was generated by the benchmark

functions,

Sph

F ,

Ros

F ,

Ras

F ,

Gri

F and

Ack

F .

Step move

data with

% 5 = Ad

5

H

Step

Sph

,

5

H

Step

Ros

,

5

H

Step

Ras

,

5

H

Step

Gri

,

5

H

Step

Ack

The step move data with % 5 = Ad were generated

based on the benchmark functions,

Sph

F ,

Ros

F ,

Ras

F ,

Gri

F and

Ack

F , in which the locations of the optima

change from

+

x to s +

+

x in every 100 generations. s

is 5% of the ranges of the benchmark functions.

Step move

data with

% 10 = Ad

10

H

Step

Sph

,

10

H

Step

Ros

,

10

H

Step

Ras

,

10

H

Step

Gri

,

10

H

Step

Ack

The mechanism is the same as that for the above data

sets except that s is 10% of the ranges of the

benchmark functions.

Shift data

shift

Sph

H ,

shift

Ros

H ,

shift

Ras

H ,

shift

Gri

H ,

shift

Ack

H

The shift data was generated based on the benchmark

functions,

Sph

F ,

Ros

F ,

Ras

F ,

Gri

F and

Ack

F , in which the

locations of the optima move from

+

x to s +

+

x

gradually. s is 20% of the ranges of the benchmark

functions.

Match data

based on

Sph

F

match

Ros Sph

H ,

match

Ras Sph

H ,

match

Gri Sph

H ,

match

Ack Sph

H

The match data based on

Sph

F was generated in

which the landscape changes from

Sph

F to

Ros

F ,

Ras

F ,

Gri

F or

Ack

F .

Match data

based on

Ros

F

match

Ras Ros

H ,

match

Gri Ros

H ,

match

Ack Ros

H

The match data based on

Ros

F was generated in

which the landscape changes from

Ros

F to

Ras

F ,

Gri

F or

Ack

F .

3.2 Experiment Set-up

In this paper, because the basic operation of the PSO discussed in Section 2 is similar to

classical PSO, it is called classical PSO, C-PSO in this paper. The following parameters,

which can be found in reference [48], were implemented in the C-PSO: the number of

particles in the swarm was 100; the number of elements in the particle was 30; both the

acceleration constants

1

and

2

were set at 2.05; the maximum velocity

max

v was 0.2;

the pre-defined number of generations was 1000. Based on the results in [48], these

parameters can produce satisfactory results when solving both parameterized and

combinatorial problems. Therefore, these parameters are used in this research. The C-

PSO was compared against the following five approaches for generating models based on

both the time-invariant and time-varying data sets, which have been discussed in Section

3.1.

1. Classical genetic programming (C-GP): A commonly used method for

polynomial modeling, the classical genetic programming (C-GP) [25, 26] was

employed. Here the representation of the individuals of the grammatical

genetic programming [46] is identical to the one of the representations of the

C-PSO. The basic operations of the C-GP are shown in Figure 2 in the

Appendix. The C-GP first starts by creating a random initial population O(g)

of individuals [u

1

(g), u

2

(g), u

POP

(g)], while g=0. The i-th individual u

i

(g) at

the g-th generation represents the structure of the time-varying model (2). For

example, the i-th individual at the g-th generation u

i

(g) represents the structure

of the following time-varying model at time t:

u

i

(g) = x

1

2

x

2

2

+ x

1

x

2

x

4

(10)

After determining the structure of the time-varying model u

i

(g), the

system coefficients are determined. The completed time-varying model u

i

(g)

is represented by:

u

i

(g)= a

0

(t) + a

1

(t)x

1

2

a

2

(t)x

2

2

+ a

3

(t)x

1

x

2

x

4

(11)

where a

0

(t), a

1

(t), a

2

(t) and a

3

(t) are the system coefficients at time t, and are

calculated by OLSA. This is the same as the one used in the C-PSO for

calculating system coefficients. The classical genetic operations, point

mutation and one-point crossover, were used. Standard roulette wheel

selection was used. The following GA parameters were implemented in the C-

GP: The population size is 100. The type of replacement is elitist. Crossover

rate and mutation rate were 0.9 and 0.01 respectively. The pre-defined number

of generations was 1000. The dimension of the individuals was 30.

2. Dynamic particle swarm optimization (D-PSO): D-PSO is identical to the C-

PSO except for integration of the recent mechanisms for maintaining

diversities of the swarms [2] when solving the dynamic optimization problem.

The mechanism splits the whole set of particles into a set of interacting

swarms. These swarms interact locally through an exclusion parameter and

globally through an anti-convergence operator. Each swarm maintains its

diversity by using either charged or quantum particles. Results show that

when this mechanism for maintaining diversity in the PSO is used, the PSO

outperforms the other PSO or evolutionary algorithms, even where they are

integrated with other diversity maintaining mechanisms, for solving dynamic

optimization problems. The performance of D-PSO was optimized by tuning

it with different settings for the number particles in the sub-swarms. 5, 10 and

25 particles in the sub-swarms were used and the best performance among

them was recorded. The detailed description of the mechanisms used to

maintain diversity in the swarms can be found in [2].

3. Dynamic genetic programming (D-GP): D-GP is identical to the C-GP except

for integration of the recent mechanism [63] used for evolutionary algorithms

on solving dynamic optimization problems. The mechanism relocates the

positions of the individuals based on the changes of the landscape of the

dynamic optimization problem and the average sensitivities of their decision

variables to the corresponding change in the landscape. While integrating the

mechanism in the evolutionary algorithm, the evolutionary algorithm

outperforms the other dynamic evolutionary approaches for solving dynamic

optimization problems. The detailed description of the mechanisms for

maintaining diversity can be found in [63].

4. Polynomial-genetic algorithm (P-GA): P-GA is a genetic algorithm proposed by

Potgieter and Engelbrecht [53] which can evolve structurally polynomial

expressions in order to accurately describe a given data set. In P-GA, each

individual is used to represent the structure of the polynomial and this is

evolved based on the designed crossover and mutation operations. The

coefficients of the polynomial are determined by OLSA [3, 7]. The crossover

rate and the mutation rate were set at 0.1 and 0.2 respectively, which are the

same as those used in [54]. The population size was set at 100. The individual

length was set at 22.

5. Polynomial neural network (PNN): PNN is developed based on a genetic

algorithm which is proposed by Oh and Pedrycz [50]. Individuals in the

genetic algorithm are used to represent the parameters of the PNN including

the number of input variables, the order of the polynomial and input variables,

which lead to a structurally and parametrically optimized network. The

coefficients of the polynomial are determined by OLSA [3, 7]. The number of

layers of the PNN was set at 3. The crossover rate and the mutation rate used

in the genetic algorithm were set at 0.65 and 0.1 respectively, which are the

same as those used in [50]. The population size was set at 100. The individual

length was set at 36.

3.3 Experimental results

Thirty runs were performed on the C-PSO, D-PSO, D-GP, C-GP, P-GA and PNN in

generating polynomial models based on each of the 27 data sets shown in Table 4. In

each generation of the runs, the RMAE obtained by the individuals of the six algorithms

was recorded.

Online performance of the algorithms is demonstrated by the convergence plots.

Figures 3a, 3b, 3c, 3d and 3e show the convergence plots for the step move data with

% 5 = Ad (

5

H

Step

Sph

,

5

H

Step

Ros

,

5

H

Step

Ras

,

5

H

Step

Gri

,

5

H

Step

Ack

) respectively. It can be observed from

Figures 3a-3e that the evolutionary algorithms, C-GP, D-GP, P-GA and PNN, converged

more quickly in the early generations than did those of the PSO algorithms, C-PSO and

D-PSO. However, the PSO algorithms, C-PSO and D-PSO, kept progressing after the

early generations. Finally, both the PSO algorithms, C-PSO and D-PSO, reached a

smaller RMAE than that reached by the evolutionary algorithms, C-GP, D-GP, P-GA and

PNN, in the final stage of the search. D-PSO can reach the smallest RMAE compared

with those obtained by the other algorithms. Therefore in general, the PSO algorithms

outperform the evolutionary algorithms in generating the models for these static data sets

in later generations. For the rest of the data (static data, step move data with % 10 = Ad ,

shift data, match data based on

Sph

F , match data based on

Ros

F ), a similar finding can be

observed in that the convergence speed of the evolutionary algorithms was faster than

that of the particle swarm optimization algorithms in the early generations. In the late

generations, the particle swarm optimization algorithms can reach a smaller RMAE than

that reached by the evolutionary algorithms.

The smallest RMEA among all generations of each run of each algorithm was

recorded, and was averaged. This measure is called offline performance. The commonly

used method for testing the significance of the results, the Wilcoxon Rank Sum Test, was

used to compare the results between the two algorithms [19]. The results of the 30 runs

for two algorithms form two independent random samples X and Y. The distributions of X

and Y, F

X

and F

Y

, are compared using the null-hypothesis H

0

: F

X

=F

Y

and the one-sided

alternative H

1

: F

X

<F

Y

. We performed the significance tests at a significance level =

0.01. Only if the probability of the observed difference is less than , is the null-

hypothesis rejected and the alternative hypothesis accepted. The significance comparison

among a set of the six algorithms, C-PSO, D-PSO, D-GP, C-GP, P-GA and PNN, is

displayed using a 66 matrix, where X denotes that the result obtained by algorithm i

and that obtained by algorithm j is statistically significant different. i and j are the

position in the corresponding result table. An entry of _ indicates that the result

obtained by algorithm i and algorithm j is not a statistically significant difference. We

name such a matrix a significance matrix.

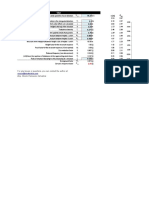

Tables 5, 6, 7, 8, 9 and 10 show the performance and the significance matrices for

the static data, the step move data with % 5 = Ad , the step move data with % 10 = Ad ,

the shift data, the match data based on

Sph

F and the match data based on

Ros

F respectively.

The average RMAE among the 30 runs of each algorithm and the ranks of the algorithms

in regard to the average RMAE are shown in the tables. Table 5 shows that D-PSO is

better than C-PSO in generating time-invariant models based on the static data

stat

Sph

H . C-

PSO is better than D-GP which is better than C-GP, P-GA and PNN. A significant

difference can be found between the results obtained by the PSO algorithms (C-PSO and

D-PSO) and those obtained by the evolutionary algorithms (D-GP, C-GP, P-GA and

PNN). However, there is no significant difference between the results obtained by C-PSO

and D-PSO, even if D-PSO can obtain a smaller RMAE than that obtained by C-PSO. In

regard to the other static data sets (

stat

Ros

H ,

stat

Ras

H ,

stat

Gri

H ,

stat

Ack

H ), both the PSO algorithms, C-

PSO and D-PSO can obtain a smaller average RMAE than that obtained by the

evolutionary algorithms, D-GP, C-GP, P-GA and PNN. Also, significant differences exist

between the results obtained by the PSO algorithms (C-PSO and D-PSO) and those

obtained by the evolutionary algorithms (D-GP, C-GP, P-GA and PNN). Also, similar

results can be found in Tables 6, 7, 8, 9 and 10 where the PSO algorithms are

significantly better than the evolutionary algorithms in generating models based on the

time-varying data.

Therefore, it can be concluded that the PSO algorithms are significantly better

than the evolutionary algorithms.

3.4 Population diversity

An investigation of population diversities of C-PSO, D-PSO, D-GP, C-GP, P-GA and

PNN is presented in this section. Maintaining population diversity in population-based

algorithms like evolutionary algorithms or PSO is a key to preventing premature

convergence and stagnation in local optima [11, 16, 40]. Thus it is essential to study the

population diversities of the six algorithms during the search. Various diversity measures,

which involve calculations of distance between two individuals in genetic programming

for the development of models, have been widely studied [4, 49]. These distance

measures calculate the distances between two individuals which are in a tree based

representation in genetic programming. They indicate the number of different nodes and

different terminals between two individuals. In this paper, we measure the distance

between two individuals by counting the number of different terms of the polynomials

represented by the two individuals in the four algorithms. If the terms in both

polynomials are all identical, the distance between the two polynomials is zero. The

distance between the two polynomials is larger when the number of different terms in the

two polynomials is larger. For example,

1

f and

2

f are two polynomials represented by:

5 3 1

2

4 3 1 2 1 1

x x x x x x x x f + + + + =

and

5 3 1 4 5 1 2 1 2

x x x x x x x x f + + + + =

Both

1

f and

2

f contain the three terms

1

x ,

2

x and

5 3 1

x x x , and the terms

3 1

x x

and

2

4

x in

1

f and the terms

5 1

x x and

4

x in

2

f are different. Therefore, the number of

terms which are different in

1

f and

2

f are 2, and the distance between

1

f and

2

f is

defined to be 2.

The diversity measure of the population at the g-th generation is defined by the

total sum of distances of individuals which is denoted as:

( ) ( ) ( )

= + =

=

p

N

i

p

N

i j

g g g

j s i s d

1 1

, o

where ( ) i s

g

and ( ) j s

g

are the i-th and the j-th individuals in the population at the g-th

generation, and d is the distance measure between the two individuals.

The diversities of the populations throughout the generations were recorded for

the four algorithms. Figure 4 shows the diversity plots which indicate the diversities of

the individuals in the algorithms in generating the models based on the step move data

with % 5 = Ad . Figure 4a, 4b, 4c, 4d and 4e shows the diversities for static data which

are generated based on the benchmark functions

Sph

F ,

Ros

F ,

Ras

F ,

Gri

F and

Ack

F respectively.

The diversities of the populations throughout the generations were recorded for the six

algorithms. The five figures indicate that the diversities along the generations of the D-

PSO are slightly higher than those of the C-PSO which are much higher than those of the

evolutionary algorithms, D-GP, C-GP, P-GA and PNN. For the rest of the data (static

data, step move data with % 10 = Ad , shift data, match data based on

Sph

F , match data

based on

Ros

F ), similar findings indicate that the diversities of the two PSO algorithms are

much larger than those of the evolutionary algorithms.

The findings indicate the reason why the PSO algorithms, D-PSO and C-PSO can

obtain significantly better results than the evolutionary algorithms, D-GP, C-GP, P-GA

and PNN. The diversities of the individuals of the PSO algorithms can be maintained

along the search in both earlier and later generations, while the individuals of the

evolutionary algorithms converged in the earlier generation. Therefore, the PSO

algorithms are more likely to explore the solution space, as the diversity of the

individuals of the algorithms can be maintained. In regard to the effectiveness of the two

PSO algorithms, since the diversities of the populations of C-PSO are only slightly

smaller than those of the D-PSO, D-PSO can obtain only slightly better results than those

obtained by C-PSO.

4 Conclusion and further work

In this paper, a particle swarm optimization algorithm has been proposed for developing

polynomial models in which both time-invariant and time-varying characteristics are

represented. The individuals of the PSO are represented by arithmetic operations and

system variables, which are the components of the polynomial models. A set of dynamic

benchmark functions whose optima or landscapes vary over time was employed to

evaluate the performance of the PSO. The PSO algorithms, C-PSO and D-PSO, were

used to generate models based on both the time-invariant and time-varying data sets. The

evolutionary algorithms, D-GP, C-GP, P-GA and PNN, were also included in the

experiments for comparison. Results show that the PSO algorithms significantly

outperform the evolutionary algorithms in generating models based on both the time-

invariant or time-varying data sets. It is observed that the evolutionary algorithms

converge faster in the earlier generations when compared with the PSO algorithms. In

contrast, the PSO algorithms can obtain better solutions in the later generations of the

runs. The performance obtained in the results can be explained by the diversity measures

and the fact that significant differences of diversities between the PSO algorithms and the

evolutionary algorithms exist.

In future work, we will enhance the effectiveness of the PSO by the hybridization

of the evolutionary algorithm and the PSO algorithm. Here the evolutionary algorithm

will be implemented to localize the potential solutions in the early generations and the

PSO algorithm will be implemented in order to continue to explore the solution space to

avoid pre-mature convergence in late generations. The resulting algorithm will be further

validated by solving real-time traffic flow forecasting problems, which are time varying

in nature.

References

[1] F.J. Berlanga, A.J. Rivera, M.L. del Jesus and F. Herrera, GP-COACH: Genetic

programming-based learning of COmpact and ACcurate fuzzy rule-based

classification systems for high-dimensional problems, Information Sciences, vol. 15,

no. 8, pp. 1183-1200, 2010.

[2] T. Blackwell and J. Branke, Multiswarms, exclusion, and anti-convergence in

dynamic environments, IEEE Transactions on Evolutionary Computation, vol. 10,

no. 4, pp. 459-472, 2006.

[3] S. Billings, M. Korenberg and S. Chen, Identification of nonlinear outputaffine

systems using an orthogonal least-squares algorithm, International Journal of

Systems Science, vol. 19, 1559-1568, 1988.

[4] E.K. Burke, S. Gustafson and G. Kendall, Diversity in genetic programming: an

analysis of measures and correlation with fitness, IEEE Transactions on

Evolutionary Computation, vol. 8, no. 1, pp. 47-62, 2004.

[5] Y.C. Chen, T.H.S. Li and Y.C. Yeh, EP-based kinematic control and adaptive fuzzy

sliding mode dynamic control for wheeled mobile robots, Information Sciences, vol.

179, no. 1, pp. 180-195, 2009.

[6] G. Chen and C.P. Low, Coordinating agents in shop floor environments from a

dynamic systems perspective, IEEE Transactions on Industrial Informatics, vol. 2,

no. 4, pp. 269-280, 2006.

[7] S. Chen, S. Billings and W. Luo, Orthogonal least squares methods and their

application to non-linear system identification, International Journal of Control, vol.

50, pp. 1873-1896, 1988.

[8] Y.P. Chen, W.C. Peng and M.C. Jian, Particle swarm optimization with

recombination and dynamic linkage discovery, IEEE Transactions on Systems, Man

and Cybernetics - Part B, vol. 37, no. 6, pp. 1460-1470, 2007.

[9] Y. Chen, B. Yang, Q. Meng and A. Abraham, Time series forecasting using a system

of ordinary differential equations, Information Sciences, vol. 181, no. 1, pp. 106-

114, 2011.

[10] M. Clerc and J. Kennedy, The particle swarm explosion, stability, and convergence

in a multidimensional complex space, IEEE Transactions on Evolutionary

Computation, vol. 6, no. 1, pp. 58-73, 2002.

[11] P. Day and A.K. Nandi, Robust text-independent speaker verification using genetic

programming, IEEE Transactions on Audio, Speech and Language Processing, vol.

15, no. 1, pp. 285-295, 2007.

[12] R. Eberhart and J. Kennedy, A new optimizer using particle swarm theory, in

Proceedings of the 6th International Symposium on Micro Machine and Human

Science, IEEE Service Center, Nagoya, Oct. 1995, pp.39-43.

[13] R.C. Eberhart and Y. Shi, Comparison between genetic algorithms and particle

swarm optimization, in Evolutionary Programming VII. New York: Springer-

Verlag, vol. 1447, Lecture Notes in Computer Science, 1998, pp. 611-616.

[14] R.C. Eberhart, and Y. Shi, Comparing inertia weights and constriction factors in

particle swarm optimization, in Proceedings of the Congress on Evolutionary

Computing, IEEE Service Center, vol. 1, Jul. 2000, pp.84-88.

[15] R.C. Eberhart and Y. Shi, Particle swarm optimization: developments, applications

and resources, in Proc. Congress on Evolutionary Computation (Hawaii), 2001, pp

816

[16] K.B. Edmund, G. Steven and K. Graham, Diversity in genetic programming: an

analysis of measures and correlation with fitness, IEEE Transactions on

Evolutionary Computation, vol. 8, no. 1, pp. 47-62, 2004.

[17] A. Ekart and S.Z. Nemeth, A metric for genetic programs and fitness sharing, in

Proceedings of the European Conference on Genetic Programming, vol. 1802, pp.

259-270, 2000.

[18] D. Gabor, W. Wides and R. Woodcock, A universal nonlinear filter predictor and

simulator which optimizes itself by a learning process, Proceedings of IEE, vol.

108-B, pp. 422-438, 1961.

[19] S. Garcia, D. Molina, M. Lozano and F. Herrera, A study on the use of non-

parametric tests for analyzing the evolutionary algorithms behaviour: a case study

on the CEC 2005 special session on real parameter optimization, Journal of

Heuristics, vol. 15, pp. 617-644, 2009.

[20] D.E. Goldberg, Genetic algorithms in search, optimization and machine learning,

United States of America: Addison Wesley Longman, Inc., 1989.

[21] H. Iba, Inference of differential equation models by genetic programming,

Information Sciences, vol. 178, pp. 4453-4468, 2008.

[22] A. Izadian, P. Khayyer and P. Famouri, Fault diagnosis of time-varying parameter

systems with application in MEMS LCRs, IEEE Transactions on Industrial

Electronics, vol. 56, no. 4, pp. 973-978, 2009.

[23] S. Janson and M. Middendorf, A hierarchical particle swarm optimizer for noisy and

dynamic environments, Genetic Programming and Evolvable Machines, vol. 7, pp.

329-354, 2006.

[24] Y. Jin and B. Sendhoff, Constructing dynamic optimization test problems using the

multi-objective optimization concept, In G. R. Raidl, editor, Applications of

evolutionary computing, Lecture Notes on Computer Sciences 3005, pp. 525-536.

Springer, 2004.

[25] J. Koza, Genetic Programming: On the Programming of Computers by Means of

Natural Evolution, MIT Press: Cambridge, 1992.

[26] J. Koza, Genetic Programming II: automatic discovery of reusable programs, MIT

Press, 1994.

[27] J.R. Koza, M.J. Streeter and M.A. Keane, Routine high-return human-competitive

automated problem-solving by means of genetic programming, Information

Sciences, vol. 178, no. 23, pp. 4434-4452, 2008.

[28] J. Kennedy and R.C. Eberhart, Particle Swarm Optimization, in IEEE International

Conference on Neural Networks, 1995, pp. 1942-1948.

[29] J. Kennedy and R.C. Eberhart, Swarm Intelligence. Morgan Kaufmann Publishers,

2001.

[30] J. Kennedy and R.C. Eberhart, A discrete binary version of the particle swarm

algorithm, IEEE Proceedings of International Conference on Systems, Man and

Cybernetics, vol. 5, pp. 4104-4108, 1997.

[31] C.J. Liao, C.T. Tseng and P. Luran, A discrete version of particle swarm

optimization for flowshop scheduling problems, Computer and Operations Research,

vol. 34, pp. 3099-3111, 2007.

[32] C. Li and S. Yang, A generalized approach to construct benchmark problems for

dynamic optimization, Proceedings of the 7

th

International Conference on Simulated

Evolution and Learning, pp. 391-400, 2008.

[33] C. Li, S. Yang, T.T. Nguyen, E.L. Yu, X. Yao, Y. Jin, H.G. Beyer and P.N.

Suganthan, Benchmark Generator for CEC 2009 Competition on Dynamic

Optimization, Technical report, University of Leicester and University of

Birmingham, UK, 2009.

[34] D.R. Liu, C.H. Lai and W.J. Lee, A hybrid of sequential rules and collaborative

filtering for product recommendation, Information Sciences, vol. 179, no. 20, pp.

3505-3519, 2009.

[35] G. Li, J.F. Wang, K.H. Lee and K.S. Leung, Instruction-matrix-based genetic

programming, IEEE Transactions on Systems, Man and Cybernetics Part B:

Cybernetics, vol. 38, no. 4, pp. 1036-1049, 2008.

[36] T. Li, Lei Guo and X. Lin, Improved delay-dependent bounded real lemma for

uncertain time-delay systems, Information Sciences, vol. 179, no. 20, pp. 3711-

3729, 2009.

[37] Y. Lin and B. Bhanu, Object detection via feature synthesis using MDL-based

genetic programming, IEEE Transactions on Systems, Man and Cybernetics Part

B: Cybernetics, vol. 35, no. 3, pp. 538-547, 2005.

[38] J. Madar, J. Abonyi and F. Szeifert, Genetic programming for the identification of

nonlinear input output models, Industrial and Engineering Chemistry Research, vol.

44, pp. 3178 3186, 2005.

[39] B. McKay, M.J. Willis and G.W. Barton, Steady-state modeling of chemical

processes using genetic programming, Computers and Chemical Engineering, vol.

21, no. 9, pp. 981-996, 1997.

[40] R.I. McKay, Fitness sharing genetic programming, Proceedings of the Genetic and

Evolutionary Computation Conference, pp. 435-442, 2000.

[41] N. Motoi, M. Ikebe and K. Ohnishi, Real-time gait planning for pushing motion of

humanoid robot, IEEE Transactions on Industrial Informatics, vol. 3, no. 2, pp. 154-

163, 2007.

[42] R.W. Morrison and K.A. De Jong, A test problem generator for non-stationary

environments, Proceedings of the IEEE Congress on Evolutionary Computation, pp.

2047-2053, 1999.

[43] D.P. Muni, N.R. Pal and J. Das, Genetic programming for simultaneous feature

selection and classifier design, IEEE Transactions on Systems, Man and

Cybernetics Part B: Cybernetics, vol. 36, no. 1, pp. 106-117, 2006.

[44] K. Natori, R. Oboe and K. Ohnishi, Stability analysis and practical design procedure

of time delayed control systems with communication disturbance observer, IEEE

Transactions on Industrial Informatics, vol. 4, no. 3, pp. 185-197, 2007.

[45] B. Naudts and L. Kallel, A comparison of predictive measures of problem difficulty

in evolutionary algorithms, IEEE Transactions on Evolutionary Computation, vol. 4,

no. 1, 2000.

[46] M.O. Neill and Conor Ryan, Grammatical Evolution, IEEE Transactions on

Evolutionary Computation, vol. 5, no. 4, pp. 349-358, 2001.

[47] M.O. Neill and A. Brabazon, Grammatical Swarm, Genetic and Evolutionary

Computation Conference, vol. 1, pp. 163-174, 2004.

[48] M.O. Neill and A. Brabazon, Grammatical Swarm: The generation of programs by

social programming, Natural Computing, vol. 5, pp. 443-462, 2006.

[49] X.H. Nguyen, R.I. McKay, D. Essam and H.A. Abbass, Toward an alternative

comparison between different genetic programming systems, Proceedings of

European Conference on Genetic Programming, pp. 67-77, 2004.

[50] S.K. Oh and W. Pedrycz, Multi-layer self-organizing polynomial neural networks

and their development with the use of genetic algorithms, Journal of the Franklin

Institute, vol. 343, pp. 125-136, 2006.

[51] Q.K. Pan, M.F. Tasgetiren and Y.C. Liang, A discrete particle swarm optimization

algorithm for the no-wait flowshop scheduling problem, Computers and Operations

Research, vol. 35, pp. 2907-2839, 2008.

[52] D. Parrott and X. Li, Locating and tracking multiple dynamic optima by a particle

swarm model using speciation, IEEE Transactions on Evolutionary Computation,

vol. 10, no. 4, pp. 440-458, 2006.

[53] K.E. Parsopoulos and M.N. Vrahatis, On the computation of all global minimizers

through particle swarm optimization, IEEE Transactions on Evolutionary

Computation, vol. 8, no. 3, pp. 211-224, 2004.

[54] G. Potgieter and A.P. Engelbrecht, Genetic algorithms for the structural optimization

of learned polynomial expression, Applied Mathematics and Computation, vol.

186, pp. 1441-1466, 2007.

[55] C.R. Reeves, Genetic algorithms and neighbourhood search, Evolutionary

Computing: AISB Workshop, pp. 115-130, 1994.

[56] K. Rodriguez-Vazquez, C.M. Fonseca and P.J. Fleming, Identifying the structure of

nonlinear dynamic systems using multiobjective genetic programming, IEEE

Transactions on Systems, Man and Cybernetics Part A, vol. 34, no. 4, pp. 531-545,

2004.

[57] T. Shibata and T. Murakami, Null space motion control by PID control considering

passivity in redundant manipulator, IEEE Transactions on Industrial Informatics,

vol. 4, no. 4, pp. 261-270, 2008.

[58] C.T. Tseng and C.J. Liao, A discrete particle swarm optimization for lot-streaming

flowshop scheduling problem, European Journal of Operational Research, vol. 191,

pp. 360-373, 2008.

[59] R.J.M. Vaessens, E.H.L. Aarts, and J.K. Lenstra, A local search template, in Proc

Parallel Problem-Solving from Nature 2, 1992, pp. 65-74.

[60] N. Wagner, Z. Michalewicz, M. Khouja and R.R. McGregor, Time series forecasting

for dynamic environments: the DyFor genetic program model, IEEE Transactions

on Evolutionary Computation, vol. 11, no. 4, pp. 433-452, 2007.

[61] J. Wang, Z. Fan, J.P. Terpenny and E.D. Goodman, Knowledge interaction with

genetic programming in mechatronic systems design using bond graphs, IEEE

Transactions on Systems, Man and Cybernetics Part C: Applications and Reviews,

vol. 35, no. 2, pp. 172-182, 2005.

[62] Y. Wang and Y. Yang, Particle swarm optimization with preference order ranking

for multi-objective optimization, Information Sciences, vol. 179, no. 12, pp. 1944-

1959, 2009.

[63] Y.G. Woldesenbet and G.G. Yen, Dynamic evolutionary algorithm with variable

relocation, IEEE Transactions on Evolutionary Computation, vol. 13, no. 3, pp.

500-513, 2009.

[64] S.X. Yang and X. Yao, Population-based incremental learning with associative

memory for dynamic environments, IEEE Transactions on Evolutionary

Computation, vol. 12, no. 5, pp. 542-561, 2008.

[65] A. Zafra and S. Ventura, G3P-MI: a genetic programming algorithm for multiple

instance learning, Information Sciences, vol. 180, no. 23, pp. 4496-4513, 2010.

[66] Y. Zhang, K.M. Jan, K.H. Ju and K.H. Chon, Representation of time-varying

nonlinear systems with time-varying principle dynamic modes, IEEE Transactions

on Biomedical Engineering, vol. 54, no. 11, pp. 1983-1992, 2007.

Appendix

Figure 2: The pseudocode of the genetic programming GP

{

Step 1: g=0

Step 2: Initialize O(g)=[u

1

(g), u

2

(g), u

POP

(g)]

// O(g) is the population of the g-th generation.

// u

i

(g) is the i-th individual of O(g).

//where u

k

(g) = x

i

+ x

i

x

j

+..[x

i

Step 3: Assign system coefficients a(t) in all u

k

(g) by LSM

//where u

i

(g) = a

0

(t) + a

i

(t) x

i

+ a

ij

(t) x

i

x

j

+

// ..+ a

12..Nterm

(t) [x

i

Step 4: Evaluate all u

k

(g) based on (3)

while (Terminational condition not fulfilled) do {

Step 5: Parent Selection O(g+1) =[u

1

(g+1), u

2

(g+1),

u

POP

(g+1)]

// where u

k

(g+1) = x

i

+ x

i

x

j

+[x

i

Step 6: Crossover O(g+1)

Step 7: Mutation O(g+1)

Step 8: Assign parameters a(k) in all u

k

(g+1) by

LSM

//where u

k

(g+1) = a

0

(t) + a

i

(t) x

i

+ a

ij

(t) x

i

x

j

+

// ..+ a

12..Nterm

(t) [x

i

Step 9: Evaluate all u

k

(g+1) based on (3)

Step 10: O(g)= O(g+1)

Step 11: g=g+1

}

0 100 200 300 400 500 600 700 800 900 1000

10

1

10

2

generation number

m

e

a

n

a

b

s

o

l

u

t

e

e

r

r

o

r

Sphere (5%)

C-PSO

D-PSO

D-GP

C-GP

P-GA

PNN

Figure 3a: Convergence plot for step moving data

5

H

Step

Sph

(Sphere function with 5% = Ad )

0 100 200 300 400 500 600 700 800 900 1000

10

2

10

3

10

4

generation number

m

e

a

n

a

b

s

o

l

u

t

e

e

r

r

o

r

Rosenbrock (5%)

C-PSO

D-PSO

D-GP

C-GP

P-GA

PNN

Figure 3b: Convergence plot for step moving data

5

H

Step

Ros

(Rosenbrock function with

5% = Ad )

0 100 200 300 400 500 600 700 800 900 1000

10

1

10

2

generation number

m

e

a

n

a

b

s

o

l

u

t

e

e

r

r

o

r

Rastrigrin (5%)

C-PSO

D-PSO

D-GP

C-GP

P-GA

PNN

Figure 3c: Convergence plot for step moving data

5

H

Step

Ras

(Rastrigrin function with

5% = Ad )

0 100 200 300 400 500 600 700 800 900 1000

10

1

10

2

generation number

m

e

a

n

a

b

s

o

l

u

t

e

e

r

r

o

r

Griewank (5%)

C-PSO

D-PSO

D-GP

C-GP

P-GA

PNN

Figure 3d: Convergence plot for step moving data

5

H

Step

Gri

(Griewank function with

5% = Ad )

0 100 200 300 400 500 600 700 800 900 1000

10

0

10

1

generation number

m

e

a

n

a

b

s

o

l

u

t

e

e

r

r

o

r

Ackley (5%)

C-PSO

D-PSO

D-GP

C-GP

P-GA

PNN

Figure 3e: Convergence plot for step moving data

5

H

Step

Ackley

(Ackley function with 5% = Ad )

0 100 200 300 400 500 600 700 800 900 1000

10

3

10

4

10

5

10

6

generation number

D

i

f

f

e

r

e

n

c

e

i

n

p

o

p

u

l

a

t

i

o

n

Sphere(5%)

C-PSO

D-PSO

D-GP

C-GP

P-GA

PNN

Figure 4a: Diversity plot for step moving data

5

H

Step

Sph

(Sphere function with 5% = Ad )

0 100 200 300 400 500 600 700 800 900 1000

10

3

10

4

10

5

10

6

generation number

D

i

f

f

e

r

e

n

c

e

i

n

p

o

p

u

l

a

t

i

o

n

Rosenbrock(5%)

C-PSO

D-PSO

D-GP

C-GP

P-GA

PNN

Figure 4b: Diversity plot for step moving data

5

H

Step

Ros

(Rosenbrock function with

5% = Ad )

0 100 200 300 400 500 600 700 800 900 1000

10

3

10

4

10

5

10

6

generation number

D

i

f

f

e

r

e

n

c

e

i

n

p

o

p

u

l

a

t

i

o

n

Rastrigrin(5%)

C-PSO

D-PSO

D-GP

C-GP

P-GA

PNN

Figure 4c: Diversity plot for step moving data

5

H

Step

Ras

(Rastrigrin function with 5% = Ad )

0 100 200 300 400 500 600 700 800 900 1000

10

3

10

4

10

5

10

6

generation number

D

i

f

f

e

r

e

n

c

e

i

n

p

o

p

u

l

a

t

i

o

n

Griewank(5%)

C-PSO

D-PSO

D-GP

C-GP

P-GA

PNN

Figure 4d: Diversity plot for step moving data

5

H

Step

Gri

(Griewank function with 5% = Ad )

0 100 200 300 400 500 600 700 800 900 1000

10

3

10

4

10

5

10

6

generation number

D

i

f

f

e

r

e

n

c

e

i

n

p

o

p

u

l

a

t

i

o

n

Ackley(5%)

C-PSO

D-PSO

D-GP

C-GP

P-GA

PNN

Figure 4e: Diversity plot for step moving data

5

H

Step

Ackley

(Ackley function with 5% = Ad )

Table 5: Average RMAE and ranks for the static data (

stat

Sph

H ,

stat

Ros

H ,

stat

Ras

H ,

stat

Gri

H ,

stat

Ack

H )

Algorithm

Static

Sph

F

(

stat

Sph

H )

Static

Ros

F

(

stat

Ros

H )

Static

Ras

F

(

stat

Ras

H )

Static

Gri

F

(

stat

Gri

H )

Static

Ack

F

(

stat

Ack

H )

Mean

rA

oP

(rA)

S-matrix oP

(rA)

S-matrix oP

(rA)

S-matrix oP

(rA)

S-matrix oP

(rA)

S-matrix

1 2 3 4 5 6 1 2 3 4 5 6 1 2 3 4 5 6 1 2 3 4 5 6 1 2 3 4 5 6

1 C-PSO 0.8873

(1)

_ _ X X X X 1.9252

(2)

_ _ X X X X 3.6538

(2)

_ _ X X X X 2.9008

(2)

_ _ X X X X 0.8178

(2)

_ X X X X X 1.8

2 D-PSO 1.3661

(2)

_ _ X X X X 1.0401

(1)

_ _ X X X X 2.2758

(1)

_ _ X X X X 1.8290

(1)

_ _ X X X X 0.7747

(1)

X _ X X X X 1.2

3 D-GP 4.3595

(3)

X X _ X _ _ 4.2012

(3)

X X _ _ _ X 5.6365

(3)

X X _ _ _ X 3.4597

(3)

X X _ _ _ _ 0.9546

(3)

X X _ X X X 3

4 C-GP 5.7800

(6)

X X X _ _ _ 5.2979

(5)

X X _ _ _ _ 6.0036

(4)

X X _ _ _ _ 5.8344

(6)

X X _ _ _ _ 1.0398

(5)

X X X _ _ _ 5.2

5 P-GA 4.7552

(5)

X X _ _ _ _ 5.1909

(4)

X X _ _ _ _ 6.0764

(5)

X X _ _ _ _ 5.1195

(5)

X X _ _ _ _ 1.0069

(4)

X X X _ _ _ 4.6

6 PNN 4.4885

(4)

X X _ _ _ _ 5.8052

(6)

X X X _ _ _ 6.3742

(6)

X X X _ _ _ 4.9114

(4)

X X _ _ _ _ 1.1207

(6)

X X X _ _ _ 5.2

oP Average RMAE obtained by the algorithms; rA rank; X difference between the two algorithms is significant; _ -

difference between the two algorithms is not significant;

Table 6: Average RMAE and ranks for the step move data with % 5 = Ad (

5

H

Step

Sph

,

5

H

Step

Ros

,

5

H

Step

Ras

,

5

H

Step

Gri

,

5

H

Step

Ack

)

Algorithm

Step

Sph

F - % 5 = Ad

(

5

H

Step

Sph

)

Step

Ros

F - % 5 = Ad

(

5

H

Step

Ros

)

Step

Ras

F - % 5 = Ad

(

5

H

Step

Ras

)

Step

Gri

F - % 5 = Ad

(

5

H

Step

Gri

)

Step

Ack

F - % 5 = Ad

(

5

H

Step

Ack

)

Mean

rA

oP

(rA)

S-matrix oP

(rA)

S-matrix oP

(rA)

S-matrix oP

(rA)

S-matrix oP

(rA)

S-matrix

1 2 3 4 5 6 1 2 3 4 5 6 1 2 3 4 5 6 1 2 3 4 5 6 1 2 3 4 5 6

1 C-PSO 2.8118

(2)

_ _ X X X X 2.0365

(2)

_ _ X X X X 4.9994

(2)

_ X X X X X 4.4084

10

1

(2)

_ X X X X X 0.8790

(2)

_ _ X X X X 2

2 D-PSO 1.7906

(1)