Searching External Data in SharePoint 2010 Using Business Connectivity Services

Diunggah oleh

Anonymous RGh8TgHak Cipta

Format Tersedia

Bagikan dokumen Ini

Apakah menurut Anda dokumen ini bermanfaat?

Apakah konten ini tidak pantas?

Laporkan Dokumen IniHak Cipta:

Format Tersedia

Searching External Data in SharePoint 2010 Using Business Connectivity Services

Diunggah oleh

Anonymous RGh8TgHak Cipta:

Format Tersedia

Blog Map

Searching External Data in SharePoint 2010

Connectivity Services

18 Eric White - Independant Consultant 27 Apr 2010 9:28 PM

[Blog Map] This blog is inactive. New blog: EricWhite.com/blog

Business Connectivity Services (BCS) are a set of services and features that provide a way to connect SharePoint solutions to sources of

define external content types that are based on that external data. The search services in SharePoint 2010 are able to index the

external content type and show meaningful results to the searchers of the external data. BCS is based on its predecessor, Business Data

enhancements in several key areas, including support in SharePoint Designer.

This is an MSDN article written by Bob McClellan. It will be published sometime in the near future. After it is published, I will

that article. This article was driven by Juan Balmori Labra, with technical reviews by Nitin Ved, Josh Teitelbaum, Russell

Balmori Labra.

Table of Contents

Overview

Step-by-Step Example of Searching External Data

Customizing Search Results

Using Associations for Master/Detail Relationships

Explanation of the BCS Model XML

Modifying the External Content Type to Support Incremental Crawls

Using the Same External Content Type for an External List

Additional Resources

Overview

This article will examine how to use SharePoint Designer to define an external content type (ECT) and how to set up a search crawl that

external data. You will then see how to reduce crawl times for large amounts of data by using incremental crawls. All of this will be done

Designer, SharePoint Central Administration and some editing of XML.

Before beginning, you should have the following:

Access to SharePoint 2010 Central Administration, including the search service and the business data connectivity service.

Access to SharePoint Designer.

Page 1 of 33 MSDN Blogs

1/25/2013 http://blogs.msdn.com/b/ericwhite/archive/2010/04/27/searching-external-data-in-sharepoi...

A SharePoint 2010 web application with a site collection. For more information, see Create a site collection (Office SharePoint Server)

probably use Sharepoint - 80 for these examples.

Access to Microsoft SQL Server. You can use the same instance of SQL Server that SharePoint uses.

A sample database for Microsoft SQL Server (AdventureWorks will be used in these examples. See

to install.)

These examples will be more meaningful if you have some general understanding of SQL queries and views. Previous experience with XML will also

helpful. Even without that experience, you should be able to successfully complete all the examples.

Step-by-Step Example of Searching External Data

In this section, I will show you how to quickly set up a connection to external data using SharePoint Designer to define an external

you will see how to configure the search service to index that data and show results in a way that is useful to the end user. The

Verify that the web application has connections to the proper services.

Create a profile page site and a search site.

Configure the Business Data Connectivity Service.

Create the External Content Type.

Configure the Search Service and index the external data with a crawl.

Test the search.

As you work through the specific steps that follow, you will be using four main areas:

Business Data Connectivity in SharePoint 2010 Central Administration

Search in SharePoint 2010 Central Administration

SharePoint Designer

The search site that you will be creating

I suggest that once you have started working in one of those areas that you keep that window open. For example, once you have a window open for

Business Data Connectivity, open a new window to configure the search service.

The first step is to verify that the web application has a connection to the necessary services. If any necessary services are not

them.

Procedure: Verifying the Web Application Connections

Start SharePoint 2010 Central Administration. 1.

Click Manage web applications under the Application Management heading. 2.

Select the web application you will be using for these examples (e.g. Sharepoint - 80). 3.

Click Service Connections in the ribbon.

Figure 1. The Web Applications ribbon

4.

You need to have checks by Business Data Connectivity Service and a search service, like Search Service Application

service the web application uses so that you are configuring the correct one. (For example, you may have the

5.

Page 2 of 33 MSDN Blogs

1/25/2013 http://blogs.msdn.com/b/ericwhite/archive/2010/04/27/searching-external-data-in-sharepoi...

are not checked, check them and click OK. If you cannot check them, use the drop down at the top to change from

Figure 2. Dialog for Web Applications connections

The next step is to configure the Business Data Connectivity Service. You will need to set the permissions and set a host site for profile

are used to show the detailed information about an external data record. The search service will link its results to the appropriate profile

set up a profile page, then the search results will contain a useless link. Each profile page will show the data for a particular external

need a different page for each type. You could host these pages on any site, but it is usually better to create a separate site, or even a

collection for those pages so that they are not stored with regular site content. These instructions will show how to create the site within

collection.

Procedure: Creating a site for profile pages

Open the site collection you will be using with Internet Explorer. 1.

On the Site Actions drop-down, click New Site. 2.

Select the Blank Site template. 3.

In the right pane, click the More Options button. 4.

Type "Search Results Details" in the Title box. 5.

Type "ProfilePages" in the URL name box. 6.

In the Navigation section, click the No radio button for Display this site on the top link bar of the parent site? 7.

Click Create. 8.

Page 3 of 33 MSDN Blogs

1/25/2013 http://blogs.msdn.com/b/ericwhite/archive/2010/04/27/searching-external-data-in-sharepoi...

The URL that you see is the one you will use for the next step. For example, if the URL for the profile page site is

then you will specify the location of the profile pages as "http://server1/ProfilePages" as the host URL.

Procedure: Configuring the Business Data Connectivity Service

Start SharePoint 2010 Central Administration. 1.

Click Manage service applications under the Application Management heading. 2.

In the Name column, click on Business Data Connectivity Service.

Figure 3. Manage Service Applications list

3.

Click on the Edit tab. 4.

In the ribbon, click on Configure in the Profile Pages group. 5.

In the Host SharePoint site URL box, enter the full URL for the site you just created for profile pages.

Figure 4. Configuring the ECT profile page host

6.

Click OK. 7.

In the ribbon, click Set Metadata Store Permissions in the Permissions group. 8.

Page 4 of 33 MSDN Blogs

1/25/2013 http://blogs.msdn.com/b/ericwhite/archive/2010/04/27/searching-external-data-in-sharepoi...

If the account you will be using is not shown in the dialog, type the account name in the text box next to the 9.

Click Add. 10.

In the bottom list, check all of the check boxes (Edit, Execute, Selectable In Clients and Set Permissions 11.

Check the box for Propagate permissions to all BDC Models, External Systems and External Content Types in the BDC Metadata Store 12.

Click OK. 13.

You must also create a simple search site for testing.

Procedure: Creating a Basic Search Site

Open the site collection you will be using with Internet Explorer. (Make sure you have navigated back to the original site collection and that you are

not still viewing the site you created for the profile pages.)

1.

On the Site Actions drop-down, click New Site. 2.

Select the Basic Search Center template. 3.

In the right pane, type "BCS Search" for the Title and "BCSSearch" for the URL name. 4.

Click Create. 5.

Next, you will create an external content type (ECT) for the product and description view in the AdventureWorks database. The ECT can be

root of the site collection or any of the sites in that collection, including the one you just created. The ECT will be connected to SQL

system. Then you will define two basic operations to make the ECT usable by the search service. The Read List operation is used to get the

from the ECT. The Read Item operation is used to get a specific record from the ECT. Both operations require that you specify the field

identifies each record.

Procedure: Creating the External Content Type

On the Site Actions drop-down for the BCS Search site that you just created, click Edit in SharePoint Designer

of the four windows open that I mentioned previously. Only the search service has not been opened yet.)

1.

In the Navigation pane, click External Content Types. 2.

In the New group of the ribbon, click External Content Type.

Figure 5. Creating a new External Content Type

3.

Page 5 of 33 MSDN Blogs

1/25/2013 http://blogs.msdn.com/b/ericwhite/archive/2010/04/27/searching-external-data-in-sharepoi...

In the External Content Type Information group, next to Name, click New external content type

Figure 6. Summary view of an External Content Type

4.

Next to External System, click Click here to discover external data sources and... 5.

Click on the Add Connection button. 6.

In the External Data Source Type Selection dialog, choose SQL Server in the drop down. 7.

Click OK. 8.

In the SQL Server Connection dialog, type "localhost" in the Database Server box. 9.

Page 6 of 33 MSDN Blogs

1/25/2013 http://blogs.msdn.com/b/ericwhite/archive/2010/04/27/searching-external-data-in-sharepoi...

Figure 7. SQL Server Connection dialog

Enter "AdventureWorks" for the Database Name box. 10.

Enter "AWProducts" for the Name (optional) box. 11.

Click OK.

Note: This connection will use the search service account to connect to the database. In a production configuration, you may want to use

impersonated identities so that database access is controlled independently from that account.

12.

Click on the expansion symbol next to AWProducts. 13.

Click on the expansion symbol next to Views. 14.

Right-click on vProductAndDescription to open a pop-up menu and click New Read Item Operation 15.

Page 7 of 33 MSDN Blogs

1/25/2013 http://blogs.msdn.com/b/ericwhite/archive/2010/04/27/searching-external-data-in-sharepoi...

Figure 8. Data Source Explorer for an External Content Type

Click the Next > button at the bottom of the Read Item dialog. 16.

On the Input Parameters page, click ProductID in the Data Source Elements pane. 17.

Page 8 of 33 MSDN Blogs

1/25/2013 http://blogs.msdn.com/b/ericwhite/archive/2010/04/27/searching-external-data-in-sharepoi...

Figure 9. Read Item dialog

In the Properties pane, check the box for Map to Identifier. 18.

Click the Next > button. 19.

On the Return Parameter page, click ProductID in the Data Source Elements pane. 20.

In the Properties pane, check the box for Map to Identifier. 21.

Click the Finish button. 22.

Right-click on vProductAndDescription to open a pop-up menu and click New Read List Operation 23.

Click the Next > button at the bottom of the Read List dialog. 24.

Click the Next > button. 25.

On the Return Parameter page, click ProductID in the Data Source Elements pane. 26.

In the Properties pane, check the box for Map to Identifier. 27.

Click the Finish button. 28.

In the ribbon, click on Summary View. 29.

In the Fields group, click on Name in the list. 30.

In the ribbon, click on Set as Title. 31.

Page 9 of 33 MSDN Blogs

1/25/2013 http://blogs.msdn.com/b/ericwhite/archive/2010/04/27/searching-external-data-in-sharepoi...

Figure 10. Completed External Content Type

Right-click on the Products tab and click Save on the pop-up menu. (You can also use the disk icon above the File tab or Ctrl 32.

In the ribbon, click Create Profile Page. 33.

Toward the end of creating the ECT, you set the field that will be used as the title. That is the value that will appear as the link in the

don't specify a title, the search results will show cryptic text as the title that is likely to be confusing to the end user.

Now you need to configure the search service to use this ECT while crawling and indexing. The search service uses Content Sources to identify

the content it needs to search. You will also need to set the account to use for the crawl operation. This account should be the same as the

set permissions in the Business Data Connectivity service and it must have read access to the AdventureWorks database.

Note: In a production configuration, a new account should be created and used only to control permissions for the search service.

Procedure: Configuring the Search Service

Start SharePoint 2010 Central Administration. (If you open this as a new window, then you will now have a separate window for the fourth main 1.

Click Manage service applications under the Manage Applications heading. 2.

In the Name column, click on Search Service Application (or the search service that is connected to your site collection). 3.

Click the link to the right of Default content access account to change the account that will be used for crawling. Set it to the same account that you

used to set permissions in the Business Data Connectivity service.

4.

In the navigation pane, under the Crawling heading, click Content Sources. 5.

Click New Content Source. 6.

Page 10 of 33 MSDN Blogs

1/25/2013 http://blogs.msdn.com/b/ericwhite/archive/2010/04/27/searching-external-data-in-sharepoi...

Figure 11. Content Source configuration in the search service

Type "AWProductSearch" in the Name box. 7.

Click on the Line of Business Data radio button. 8.

Click on the Crawl selected external data source radio button. 9.

Check the box next to AWProducts.

Figure 12. New Content Source dialog

10.

Check the box next to Start full crawl of this content source. 11.

Click OK. 12.

Page 11 of 33 MSDN Blogs

1/25/2013 http://blogs.msdn.com/b/ericwhite/archive/2010/04/27/searching-external-data-in-sharepoi...

The search service is now crawling through the new data to create search indexes for that content. It should be done in a few minutes, but

millions of records, the crawl would take significantly longer. You can click on the Refresh link to update the status. When the status is

crawl is done.

Procedure: Testing the Search

Open the "BCS Search" site you created earlier. 1.

Type "Jersey" in the search box and then Enter. 2.

Click on any of the results titles to see the profile page for that item.

Figure 13. Profile page from the search results

3.

Now you have a basic search working with external data. Next you will see how to control the appearance of the profile page and the results of

Customizing Search Results

There are two simple modifications you can make to the search using SharePoint Designer. First, you can change the fields that appear on the

and their descriptions by modifying the Read Item operation. For example, the descriptions "ProductID" and "ProductModel" are not particularly

friendly. Also, the CultureID field is not particularly useful.

If you have kept SharePoint Designer open, you can skip the first two steps below.

Procedure: Changing the Fields Shown on the Profile Page

In SharePoint Designer, click External Content Types in the navigation pane. 1.

In the list, click on Products to edit that ECT. 2.

In the External Content Type Operations group, double-click on the Read Item operation. 3.

Click Next > twice. 4.

In the Return Parameter page, uncheck the box by CultureID. 5.

Click on ProductID. 6.

In the Properties pane, type "ID" in the Display Name box. 7.

Click on ProductModel. 8.

In the Properties pane, type "Model" in the Display Name box. 9.

Click Finish. 10.

Click on the disk icon to save the ECT. 11.

In the ribbon, click Create Profile Page. 12.

Click Yes on the warning dialog. 13.

Page 12 of 33 MSDN Blogs

1/25/2013 http://blogs.msdn.com/b/ericwhite/archive/2010/04/27/searching-external-data-in-sharepoi...

You can test the results by going to the search site and looking at one of the profile pages. This change was only to the profile page, so there

perform another crawl. Obviously, the changes you can make through the ECT are limited. If you want more advanced customizations, you can edit the

directly and change it as you wish. (For example, you cannot remove the identifier field from the operation, but you could remove it from the profile

manually.) Be aware that if you ever create that profile page from the ECT later, it will overwrite those direct changes.

Figure 14. Modified profile page

The second change you can make is to adjust the Read List operation to exclude some fields from the search indexing. In this step, you

description from the indexing. Before you start that step, try a search for the word "microfiber." You should get some matches on that word in

descriptions. Now you will remove the Description field from the Read List operation and see that it is no longer indexed.

Procedure: Changing the Field Values Indexed for Search

In SharePoint Designer, in the External Content Type Operations group, double-click on the Read List 1.

Click Next > twice. 2.

In the Return Parameter page, uncheck the box by Description. 3.

Click Finish. 4.

Click on the disk icon to save. 5.

The ECT has been changed, but now the search index needs to be updated by running a full crawl. Whenever you modify an ECT, its internal ID is

The content source needs to be recreated, otherwise it will get errors trying to find that old ID.

Procedure: Updating the Search Index for a Modified External Content Type

In the search service configuration in SharePoint Central Administration, click on Content Sources 1.

Click on the drop-down button for the AWProductSearch content source. Click Delete. 2.

Click Yes to delete. 3.

Click New Content Source. 4.

Type "AWProductSearch" in the Name box. 5.

Click on the Line of Business Data radio button. 6.

Click on the Crawl selected external data source radio button. 7.

Check the box next to AWProducts. 8.

Check the box next to Start full crawl of this content source. 9.

Click OK. 10.

When the crawl is complete, go back to the search site and try searching for "microfiber" again. There will now be no matches. You can still

"Jersey" and click on one of the "Long-Sleeve Logo Jersey" items to see that the description with the word "microfiber" can be viewed in the profile

but it has now been excluded from the search results.

Page 13 of 33 MSDN Blogs

1/25/2013 http://blogs.msdn.com/b/ericwhite/archive/2010/04/27/searching-external-data-in-sharepoi...

Using Associations for Master/Detail Relationships

Business Connectivity Services also allows you to define a master/detail (aka parent-child) relationship between two ECTs. The resulting

master ECT will automatically be generated with a list of the detail records for that particular master record. Our interest here is how

handles this type of association.

To try this out, you will create an association from the detail ECT (Product) to the master ECT (ProductModel). Then you will create a new content source in

the search to see how the results are generated for those. Then you will see how to modify the configuration to give a much simpler and more useful set of

results by treating the detail records as attachments to the master record.

Procedure: Creating an Association in an External Content Type

In the Navigation pane in SharePoint Designer, click External Content Types. 1.

On the New section of the ribbon, click External Content Type. 2.

In the External Content Type Information group, next to Name, click New external content type

be replicated to the Display Name text.)

3.

In the External Content Type Information group, next to Display Name, click ProductModel. Type "Model" in the box. (This label will appear on

profile page.)

4.

Next to External System, click Click here to discover external data sources and... 5.

Click on the Add Connection button. 6.

In the External Data Source Type Selection dialog, choose SQL Server in the drop down. 7.

Click OK. 8.

In the SQL Server Connection dialog, enter "localhost" for the Database Server. 9.

Enter "AdventureWorks" for the Database Name box. 10.

Enter "AWModel" for the Name (optional) box. 11.

Click OK. 12.

Click on the expansion symbol next to AWModel. 13.

Click on the expansion symbol next to Tables. 14.

Right-click on ProductModel (you will probably have to scroll down to find this table) to open a pop 15.

Click the Finish button. 16.

Right-click on ProductModel to open a pop-up menu and click New Read List Operation. 17.

Click the Finish button. 18.

In the ribbon, click on Summary View. 19.

In the Fields group, click on Name in the list. 20.

In the ribbon, click on Set as Title. 21.

Save the ECT by clicking on the disk icon. 22.

In the Navigation pane in SharePoint Designer, click External Content Types. 23.

On the New section of the ribbon, click External Content Type. 24.

In the External Content Type Information group, next to Name, click New external content type

replicated to the Display Name text.)

25.

Next to External System, click Click here to discover external data sources and... 26.

Right-click on Product (in Tables within AWModel) to open a pop-up menu and click New Read Item Operation 27.

Click the Finish button. 28.

Right-click on Product to open a pop-up menu and click New Read List Operation. 29.

Click the Finish button. 30.

Right-click on Product to open a pop-up menu and click New Association. 31.

Page 14 of 33 MSDN Blogs

1/25/2013 http://blogs.msdn.com/b/ericwhite/archive/2010/04/27/searching-external-data-in-sharepoi...

Type "Products" in the box for the Association Display Name. (This will change the label for the detail records in the profile page for the master

record.)

32.

Click the Browse button.

Figure 15. Choosing the External Content Type for an Association

33.

Click ProductModel to select it. 34.

Click OK. 35.

Click Next >. 36.

In the Data Source Elements pane on the Input Parameters page, click ProductModelID to select it. 37.

In the Properties pane, check the box for Map to Identifier. 38.

Click Next > twice. 39.

In the Data Source Elements pane on the Return Parameter page, uncheck all of the fields except for

and ProductModelID.

40.

If you used the top check box to uncheck all the fields, you will need to set the identifier again. Click

then check Map to Identifier in the Properties pane.

41.

Click ProductID and change the text for the Display Name to "ID." 42.

Click ProductNumber and change the text for the Display Name to "Product Number." 43.

Click ListPrice and change the text for the Display Name to "List Price." 44.

Page 15 of 33 MSDN Blogs

1/25/2013 http://blogs.msdn.com/b/ericwhite/archive/2010/04/27/searching-external-data-in-sharepoi...

Figure 16. Return Parameter page for association

Click the Finish button. 45.

In the ribbon, click on Summary View. 46.

In the Fields group, click on Name in the list. 47.

In the ribbon, click on Set as Title. 48.

Save the ECT using the disk icon. 49.

In the ribbon, click Create Profile Page. 50.

Click the Model tab to see the first ECT. 51.

In the ribbon, click Create Profile Page. 52.

You probably noticed that I had you create a new named connection for the same AdventureWorks database. The reason is that a content

search will crawl all ECTs defined with the same data source. If you want to be able to completely control when those different ECTs are crawled

they are crawled, you will need to create different data sources so that you can also create different content sources for search.

You may also have noticed that you did not have to set the identifiers for these ECTs. SharePoint Designer automatically detects the

tables, but not for views.

The associated ECTs are ready. Now you need to create a new search content source to index them. It also helps to delete the old content

you can see the results of the new indexing only.

Page 16 of 33 MSDN Blogs

1/25/2013 http://blogs.msdn.com/b/ericwhite/archive/2010/04/27/searching-external-data-in-sharepoi...

Procedure: Crawling and Indexing the Associated ECTs

In the search service configuration in SharePoint Central Administration, click on Content Sources 1.

Click on the drop-down button for the AWProductSearch content source. Click Delete. 2.

Click OK to delete. 3.

Click New Content Source. 4.

Type "AWModelSearch" in the Name box. 5.

Click on the Line of Business Data radio button. 6.

Click on the Crawl selected external data source radio button. 7.

Check the box next to AWModel. 8.

Check the box next to Start full crawl of this content source. 9.

Click OK. 10.

When the crawl is done (click Refresh to update the status), you can search for "Jersey" and you will see results for both the

(Product). The model results do not have sizes (e.g. S, M, L) and the link for them includes the words "ProductModel" instead of

one of those and you will see the master/detail view that is created by the association. Although this search works, it isn't pretty

indexing information in the detail. You can see matches for the detail, but they are not based on the master record. For example, if you

"Dissolver," you will only see the detail in the results. To make these results better, you will first eliminate the detail records from the

the ECT to "attach" the detail record information to the master record. The first change is one you have already done before, but now you

the fields that can be indexed.

Figure 17. Profile Page for a Master/Detail Association

If you have left the tabs open for the ECTs you just defined, skip the first two steps.

Procedure: Changing the Field Values Indexed for Search

In SharePoint Designer, click External Content Types in the navigation pane. 1.

In the list, click on ProductDetail to edit that ECT. 2.

Page 17 of 33 MSDN Blogs

1/25/2013 http://blogs.msdn.com/b/ericwhite/archive/2010/04/27/searching-external-data-in-sharepoi...

In the External Content Type Operations group, double-click on the Read List operation. 3.

Click Next > twice. 4.

In the Return Parameter page, uncheck the boxes for all of the fields in the Data Source Elements

you use the check box to uncheck all fields, you will need to check Map to Identifier for ProductID

5.

Click Finish. 6.

Save the ECT using the disk icon. 7.

Follow the usual steps to run a full crawl after changing the model. Remember to delete the existing content source and then create a new one.

you search for "Dissolver," there should be no results. You will now modify the model so that the associated data is attached to the master during

indexing. This change is not supported by SharePoint Designer, so you will need to modify the model in its XML form. To do so, you will need to export

model to an XML file, edit it and then import the changed file.

Procedure: Exporting the BCS Model to an XML file

In SharePoint Designer, click External Content Types in the navigation pane. 1.

In the list, click AWModel next to ProductDetail to select that ECT. 2.

In the ribbon, click Export BDC Model. 3.

In the Export BDC Model dialog, type "ProductDetail" in the BDC Model Name box. 4.

Click OK. 5.

Change the Save as type to All Files. 6.

Choose a destination folder for the file. 7.

Change the File Name box to "ProductDetail.xml" 8.

Click Save. 9.

You can use any XML editor to change this file. I use SharePoint Designer by finding the file in a file browser and then selecting

SharePoint Designer from the right-click popup menu. You could also drag the file into SharePoint Designer or even edit it with Notepad.

Modify the XML file to add the highlighted line shown below. Be sure you are in the MethodInstances element for the association rather than the

the Read List or Read Item methods.

<MethodInstances>

<Association Name="ProductNavigate Association"

Type="AssociationNavigator"

ReturnParameterName="ProductNavigate Association"

DefaultDisplayName="Products">

<Properties>

<Property Name="AttachmentAccessor" Type="System.String"></Property>

<Property Name="ForeignFieldMappings" ...>

</Properties>

Save the change and then follow the steps below to import the model with the change.

Procedure: Importing an XML file to Update a BCS Model

In the BDC service configuration in SharePoint Central Administration, click on the Edit tab. 1.

In the View section of the ribbon, select External Content Types from the drop-down list. 2.

Click on the drop-down menu for ProductDetail and click Delete. 3.

Click OK. 4.

In the BDC Models section of the ribbon, select Import. 5.

In the BDC Model File box, type your XML file name or click the Browse button to browse for it. 6.

Page 18 of 33 MSDN Blogs

1/25/2013 http://blogs.msdn.com/b/ericwhite/archive/2010/04/27/searching-external-data-in-sharepoi...

Click the Import button. You may see one or two warnings, but there should be no errors. 7.

Click OK. 8.

Note: When you exported the model, you chose a name for it that also happens to be the same as the External Content Type. Although the model and

may seem like the same thing, they are not. If you had to import that same model file again, you would get an error saying that there is a duplicate model,

even if you had deleted the ECT. Whenever you are importing the same model a second time, you must delete the model instead of the ECT to avoid that

error.

Follow the usual steps to recreate the AWModelSearch content source and run a full crawl. Now when you search for" Dissolver," you should get

results. You can also try searching for "Jersey" and see that it only returns the shows the model records in the results.

Explanation of the BCS Model XML

You made a modification to the XML version of the BCS Model in the last section. This section will explain most of the elements of that XML

skip down to the next section about incremental crawls, if you are not that interested in these details. This information is not necessary

examples. It will help if you are looking at an actual model XML file while reading through this.

The top-level element is the Model. It has a Name attribute that will appear as the name of the model when you import it. LobSystems is a child

may contain one or more LobSystem child elements. The LobSystem element has a Name attribute that is used to easily identify the external data

also has a Type attribute for the type of data source. For these examples, the Type is "Database."

The actual connection information for that data source is defined as Property elements in the LobSystemInstance element, which is a child

LobSystemInstances. LobSystemInstances is a child of LobSystem. Property elements are very common in the BCS Model. Many of the elements allow a

Properties element that contains one or more Property elements. A Property element has a Name attribute that identifies the property and a Type attribute

that identifies the .Net type of the value for that property. The value then appears in the body of that Property element. Some of the property names for

LobSystemInstance are shown in the table below.

Table 1. Properties for LobSystemInstance

Name Type Example Value

AuthenticationMode System.String PassThrough

DatabaseAccessProvider System.String SqlServer

RdbConnection Data Source System.String localhost

RdbConnection Initial Catalog System.String AdventureWorks

RdbConnection Integrated

Security

System.String SSPI

RdbConnection Pooling System.String True

Page 19 of 33 MSDN Blogs

1/25/2013 http://blogs.msdn.com/b/ericwhite/archive/2010/04/27/searching-external-data-in-sharepoi...

ShowInSearchUI System.String <anything>

You might have noticed that all the properties that start with "RdbConnection" are regular connection string values for an SQL database. Any

appears in a connection string can defined in this list of properties by prefixing it with "RdbConnection." The last property listed, ShowInSearchUI,

have value. Even though it is defined as a string, just having the property is all that is needed for the model to know that the LobSystemInstance

appear in the user interface.

Another child of LobSystem is Entities. Although it may contain one or more Entity children, you will generally see models with just one entity.

equivalent to a single External Content Type. There are several attributes for an Entity, including the Name and the DefaultDisplayName. The

an Entity element are shown in the table below.

Table 2. Properties for Entity

Name Type Example Value

Title System.String Name

DefaultAction System.String View Profile

The Title property defines the field that will be used as the title in the search results. The DefaultAction property refers to an Action

action will be used as the link from the search results. In these examples, that action is always set to open a URL for a profile

Some child elements for an Entity are AccessControlList, Identifiers, AssociationGroups, Actions and Methods.

The AccessControlList element may contain one or more AccessControlEntry elements that have a Principal attribute with the user name

access. The child elements are Right elements that define the access rights for the principal. Each Right element has a BdcRight attribute

Execute, SetPermissions or SelectableInClients.

The Identifiers element contains an Identifier element with a Name attribute for the field name that is an identifier for the entity and a

that contains the .Net data type of the identifier.

The AssociationGroups element is used to define associations between ECTs. The Actions element contains an Action element, usually to open a

page. These are easily defined by SharePoint Designer, so I won't say much about them here.

The Methods element contains one or more Method elements. Each Method element has a Name attribute that uniquely identifies the method for this

entity. It also has an AccessControlList element that is identical to the one in the Entity element. The properties for a Method element are shown in the table

below.

Table 3. Properties for Model

Name Type Example Value

RdbCommandType System.String System.Data.CommandType ...

Page 20 of 33 MSDN Blogs

1/25/2013 http://blogs.msdn.com/b/ericwhite/archive/2010/04/27/searching-external-data-in-sharepoi...

RdbCommandText System.String SELECT [ProductID],

[ProductModelID] FROM

[Production].[Product]

BackEndObjectType System.String SqlServerTable

BackEndObject System.String Product

Schema System.String Production

The RdbCommandText is very important since it allows you to greatly customize the SQL query used for your data. For example, if you don't

to create a view on a particular database, you could still modify that text to do joins within the command text as long as the return

BackEndObjectType can be SqlServerView if the RdbCommandText refers to a view instead of table.

FilterDescriptors is a child of the Method element. It may contain one or more FilterDescriptor elements. SharePoint Designer supports the

in its user interface, but they are not generally that useful for search methods where you have control over the SQL query. There is

SynchronizationCookie, that will be shown in the next section.

Parameters is a child of the Method element. It may contain one or more Parameter child elements. The Parameter element has a Name attribute

uniquely identifies the parameter and Type attribute that can be In, InOut or Return. There can only be a single parameter for each Method that is a Return

type. The number and types of parameters are determined by the operation that the method implements. Many of these operations can be defined by

SharePoint Designer, so it is easiest to create the operation there and then edit as necessary. Two of the operations that cannot be defined by SharePoint

Designer, ChangedIdEnumerator and DeletedIdEnumerator, will be defined in the next section.

The parameter is actually defined by the child TypeDescriptor element. This element has attributes for the Name, TypeName, and IdentifierName.

elements have simple types and are defined by a single TypeDescriptor element. Others can be very complex with nested TypeDescriptors and

TypeDescriptor elements and additional properties. Some of the properties for the TypeDescriptor element are shown below.

Table 4. Properties for TypeDescriptor

Name Type Example Value

Size System.Int32 25

RequiredInForms System.Boolean true

Decimal Digits System.Int32 4

For the most part, you should be able to copy and edit the parameters that are defined by SharePoint Designer.

The last important child of the Method element is MethodInstances. It contains a MethodInstance element that defines the type of

attribute may be Finder, SpecificFinder, AssociationNavigator, IdEnumerator and others. The ReturnParameterName attribute

Page 21 of 33 MSDN Blogs

1/25/2013 http://blogs.msdn.com/b/ericwhite/archive/2010/04/27/searching-external-data-in-sharepoi...

attribute that defines the return value for the operation. The Default attribute will usually be "true" since you will only

operation. If there were more than one Finder, for example, this would specify which one would be used by default. The Name

MethodInstance and the DefaultDisplayName will be used whenever that operation name is displayed. The MethodInstance element has some

properties as shown in the table below.

Table 5. Properties for TypeDescriptor

Name Type Example Value

RootFinder System.String <anything>

AttachmentAccessor System.String <anything>

LastModifiedTimeStampField System.String ModifiedDate

The RootFinder marks the Finder method that the search crawler will use to enumerate all of the top level rows that should be crawled. There

only one Finder method that is marked as the RootFinder. The AttachmentAccessor property was discussed in the previous section and the

LastModifiedTimeStampField property is discussed in the next section.

If you want to see more documentation of the BCS Model, see the links in the Additional Resources section.

Modifying the External Content Type to Support Incremental Crawls

It's easy enough to run full crawls all the time for the numbers of records in these examples, but it doesn't work so well if you want to index a

records. Once the ECT and search is set up for that kind of volume, it is much better to run incremental crawls to find any changes since the last

then update just those in the search index. SharePoint search supports two approaches for handling incremental crawls on external content types. There

advantages and disadvantages to each, but first you need to understand how they function.

Timestamp-based Incremental Crawls

In this approach, you define a field that contains the last modified timestamp for each record. If that timestamp is newer than the last crawl, then the

indexes for that record are updated. For this example, you will be modifying the ECT based on a view, but first you will need to add the last modified date to

the view in the AdventureWorks database.

Procedure: Modifying the vProductAndDescription View to Add the Modified Date

Start SQL Server Management Studio. 1.

In the Connect to Server dialog, select Database Engine as the Server type. 2.

Type "localhost" in the Server name box. 3.

Click Connect. 4.

Expand the Databases item in the Object Explorer group. 5.

Right-click on the AdventureWorks item and click New Query. 6.

Execute the ALTER VIEW statement as shown below. 7.

ALTER VIEW [Production].[vProductAndDescription]

WITH SCHEMABINDING

AS

Page 22 of 33 MSDN Blogs

1/25/2013 http://blogs.msdn.com/b/ericwhite/archive/2010/04/27/searching-external-data-in-sharepoi...

SELECT

p.[ProductID]

,p.[Name]

,pm.[Name] AS [ProductModel]

,pmx.[CultureID]

,pd.[Description]

,CASE WHEN p.ModifiedDate > pm.ModifiedDate THEN p.ModifiedDate ELSE pm.ModifiedDate END AS

FROM [Production].[Product] p

INNER JOIN [Production].[ProductModel] pm

ON p.[ProductModelID] = pm.[ProductModelID]

INNER JOIN [Production].[ProductModelProductDescriptionCulture] pmx

ON pm.[ProductModelID] = pmx.[ProductModelID]

INNER JOIN [Production].[ProductDescription] pd

ON pmx.[ProductDescriptionID] = pd.[ProductDescriptionID];

This adds one new field to the view. For this example, the ModifiedDate is the most recent modified date from Product or ProductModel. If

to include ProductDescription in that expression, you would just need to add another case to determine which of the dates is most recent.

Procedure: Adding the ModifiedDate to the ECT Operations

In SharePoint Designer, click External Content Types in the navigation pane. 1.

In the list, click on Products to edit that ECT. 2.

In the External Content Type Operations group, double-click on the Read Item operation. 3.

Click Next > twice. 4.

In the Return Parameter page, check the ModifiedDate field in the Data Source Elements pane. 5.

Click Finish. 6.

In the External Content Type Operations group, double-click on the Read List operation. 7.

Click Next > twice. 8.

In the Return Parameter page, check the ModifiedDate field in the Data Source Elements pane. 9.

In the Properties pane, check the box next to Timestamp Field.

Figure 18. Setting the Timestamp Field

10.

Page 23 of 33 MSDN Blogs

1/25/2013 http://blogs.msdn.com/b/ericwhite/archive/2010/04/27/searching-external-data-in-sharepoi...

Click Finish. 11.

Save the ECT by clicking on the disk icon. 12.

By checking the box for the Timestamp Field, you have set that field to be used by the search service to determine if the record has changed since the last

crawl. Internally, the XML will now have a LastModifiedTimeStampField property that specifies the ModifiedDate field. If you exported the model for

Products and searched for the property, you would find it in the MethodInstances properties for the Read List method.

You can now follow the usual steps to create the AWProductSearch content source and run a full crawl. As before, you may want to delete the

AWModelSearch to avoid seeing results from both sources. When the full crawl is done, start an incremental crawl. When the incremental crawl has

completed, you can now see if it worked.

Procedure: Examining the Results of the Incremental Crawl

In the search service configuration in SharePoint Designer, click on Crawl Log under Crawling in the left pane. 1.

Click Crawl History at the top. 2.

You should see that the full crawl had something like 296 successes and the incremental crawl had only 2 successes. Since no records had

incremental crawl did not have to re-index the records.

If you want to test the modified date, make a change to one or more of the records that includes updating the ModifiedDate field to the current date/time

and then run another incremental crawl. The successes will be higher than 2 (3 if you change just one record), but not nearly as high as 296. The query below

shows an example of that sort of change.

UPDATE Production.Product

SET Name='Classical Vest, M',ModifiedDate=GETDATE()

WHERE ProductID=865

Changelog-based Incremental Crawls

In this approach, SharePoint search stores a token or cookie that we designate to track changes since the last crawl. In this case, the cookie will be a single

timestamp from the last time that a crawl was started. If there have been any changes since that time, then only the modifications are updated in the index.

This process requires two new operations to be defined for the ECT, one for changed records and one for deleted records. These must be created manually

because SharePoint Designer does not have a way to create them. (If you look at the ECT in SharePoint Designer, they will be shown in the External Content

Type Operations list, but you will not be able to edit them.) As before, you will need to export the BCS model in order to edit the XML directly.

Procedure: Exporting the BCS Model to an XML file

In SharePoint Designer, click External Content Types in the navigation pane. 1.

In the list, click AWProducts next to Products to select that ECT. 2.

In the ribbon, click Export BDC Model. 3.

In the Export BDC Model dialog, type "Products" in the BDC Model Name box. 4.

Click OK. 5.

Change the Save as type to All Files. 6.

Choose a destination folder for the file. 7.

Change the File Name box to "Products.xml" 8.

Click Save. 9.

In order to implement these two operations, you will need two SQL queries. One will return the records changed after a particular timestamp and the other

will return the records deleted after a particular timestamp. For the product table, I will use the DiscontinuedDate to indicate that a record has been

Page 24 of 33 MSDN Blogs

1/25/2013 http://blogs.msdn.com/b/ericwhite/archive/2010/04/27/searching-external-data-in-sharepoi...

effectively deleted and should no longer appear in search results. If the DiscontinueDate field is null, it is still active. The DiscontinuedDate from the Product

table will need to be added to the view just like the ModifiedDate was added previously, though there is no need to look at the ProductModel record, so it's

a simple added field (p.DiscontinuedDate).

Here are the new methods that you will need to add to the BCS model for the Products ECT within the Methods element. They both use a

SynchronizationCookie filter type to track the last run date and time. The SQL query must return the appropriate set of changed or deleted record IDs and

also update the last run date and time. The key SQL is highlighted in the XML for these two operations below.

<Method IsStatic="false" Name="GetChangedProductIds">

<Properties>

<Property Name="RdbCommandType" Type="System.Data.CommandType, System.Data, Version=2.0.0.0, Culture=neutral,

PublicKeyToken=b77a5c561934e089">Text</Property>

<Property Name="RdbCommandText" Type="System.String">

if @LastRunDate != CAST('1900-1-1' as datetime)

BEGIN

SELECT [ProductID] FROM [Production].[vProductAndDescription] WHERE [ModifiedDate] >

END

SET @LastRunDate=CURRENT_TIMESTAMP

</Property>

<Property Name="BackEndObjectType" Type="System.String">SqlServerView</Property>

<Property Name="BackEndObject" Type="System.String">vProductAndDescription</Property>

<Property Name="Schema" Type="System.String">Production</Property>

</Properties>

<AccessControlList>

<AccessControlEntry Principal="contoso\administrator">

<Right BdcRight="Edit" />

<Right BdcRight="Execute" />

<Right BdcRight="SetPermissions" />

<Right BdcRight="SelectableInClients" />

</AccessControlEntry>

</AccessControlList>

<FilterDescriptors>

<FilterDescriptor Name="LastRunDate" Type="InputOutput">

<Properties>

<Property Name="SynchronizationCookie" Type="System.String">ChangedItemCookie</Property>

</Properties>

</FilterDescriptor>

<FilterDescriptor Name="FilterDescriptor" Type="Timestamp" />

</FilterDescriptors>

<Parameters>

<Parameter Name="@LastRunDate" Direction="InOut">

<TypeDescriptor Name="LastRunDateTypeDescriptor" TypeName="System.DateTime" AssociatedFilter="LastRunDate">

<Interpretation>

<NormalizeDateTime LobDateTimeMode="Local" />

</Interpretation>

</TypeDescriptor>

</Parameter>

<Parameter Direction="Return" Name="ChangedProductIds">

<TypeDescriptor TypeName="System.Data.IDataReader, System.Data, Version=2.0.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089"

IsCollection="true" Name="ChangedProductIds">

<TypeDescriptors>

<TypeDescriptor TypeName="System.Data.IDataRecord, System.Data, Version=2.0.0.0, Culture=neutral,

PublicKeyToken=b77a5c561934e089" Name="ChangedProductIdElement">

<TypeDescriptors>

<TypeDescriptor TypeName="System.Int32" ReadOnly="true" IdentifierName="ProductID" Name="ProductID">

Page 25 of 33 MSDN Blogs

1/25/2013 http://blogs.msdn.com/b/ericwhite/archive/2010/04/27/searching-external-data-in-sharepoi...

<Properties>

<Property Name="RequiredInForms" Type="System.Boolean">true</Property>

</Properties>

</TypeDescriptor>

</TypeDescriptors>

</TypeDescriptor>

</TypeDescriptors>

</TypeDescriptor>

</Parameter>

</Parameters>

<MethodInstances>

<MethodInstance Name="GetChangedPrimaryKeysInstance" Type="ChangedIdEnumerator" ReturnParameterName="ChangedProductIds"

</MethodInstances>

</Method>

<Method IsStatic="false" Name="GetDeletedProductIds">

<Properties>

<Property Name="RdbCommandType" Type="System.Data.CommandType, System.Data, Version=2.0.0.0, Culture=neutral,

PublicKeyToken=b77a5c561934e089">Text</Property>

<Property Name="RdbCommandText" Type="System.String">

if @LastRunDate != CAST('1900-1-1' as datetime)

BEGIN

SELECT [ProductID] FROM [Production].[vProductAndDescription] WHERE [DiscontinuedDate] >

END

SET @LastRunDate=CURRENT_TIMESTAMP

</Property>

<Property Name="BackEndObjectType" Type="System.String">SqlServerView</Property>

<Property Name="BackEndObject" Type="System.String">vProductAndDescription</Property>

<Property Name="Schema" Type="System.String">Production</Property>

</Properties>

<AccessControlList>

<AccessControlEntry Principal="contoso\administrator">

<Right BdcRight="Edit" />

<Right BdcRight="Execute" />

<Right BdcRight="SetPermissions" />

<Right BdcRight="SelectableInClients" />

</AccessControlEntry>

</AccessControlList>

<FilterDescriptors>

<FilterDescriptor Name="LastRunDate" Type="InputOutput">

<Properties>

<Property Name="SynchronizationCookie" Type="System.String">DeletedItemCookie</Property>

</Properties>

</FilterDescriptor>

<FilterDescriptor Name="FilterDescriptor" Type="Timestamp" />

</FilterDescriptors>

<Parameters>

<Parameter Name="@LastRunDate" Direction="InOut">

<TypeDescriptor Name="LastRunDateTypeDescriptor" TypeName="System.DateTime" AssociatedFilter="LastRunDate">

<Interpretation>

<NormalizeDateTime LobDateTimeMode="Local" />

</Interpretation>

</TypeDescriptor>

</Parameter>

<Parameter Direction="Return" Name="DeletedProductIds">

<TypeDescriptor TypeName="System.Data.IDataReader, System.Data, Version=2.0.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089"

IsCollection="true" Name="DeletedProductIds">

<TypeDescriptors>

<TypeDescriptor TypeName="System.Data.IDataRecord, System.Data, Version=2.0.0.0, Culture=neutral,

Page 26 of 33 MSDN Blogs

1/25/2013 http://blogs.msdn.com/b/ericwhite/archive/2010/04/27/searching-external-data-in-sharepoi...

PublicKeyToken=b77a5c561934e089" Name="DeletedProductIdElement">

<TypeDescriptors>

<TypeDescriptor TypeName="System.Int32" ReadOnly="true" IdentifierName="ProductID" Name="ProductID">

<Properties>

<Property Name="RequiredInForms" Type="System.Boolean">true</Property>

</Properties>

</TypeDescriptor>

</TypeDescriptors>

</TypeDescriptor>

</TypeDescriptors>

</TypeDescriptor>

</Parameter>

</Parameters>

<MethodInstances>

<MethodInstance Name="GetDeletedPrimaryKeysInstance" Type="DeletedIdEnumerator" ReturnParameterName="DeletedProductIds"

</MethodInstances>

</Method>

Although these methods appear very long, they are actually pretty simple and similar. The filter is defined to pass in the last run date,

timestamp containing both date and time. The return parameter is defined as a set of records containing just a ProductID. The

define these methods as the changed record and deleted record operations.

The SQL code is also similar. Both check to see if the "LastRunDate" timestamp is valid. If so, they return all the modifications since

the timestamp is updated to the current date and time so that the cookie will be stored with the new value. The changed record method

ModificationDate to the last timestamp and the deleted record method compares the DiscontinuedDate.

There is one other change that should be made to correctly implement this approach. The SQL for the Read List operation should be updated

no deleted records will be included in a full crawl, as shown below.

<Property Name="RdbCommandText" Type="System.String">

SELECT [ProductID],[Name],[ProductModel],[CultureID],[Description]

FROM [Production].[vProductAndDescription]

WHERE [DiscontinuedDate] IS NULL

</Property>

Make these changes to the Products model, and you might also want to remove the LastModifiedTimeStampField property to verify that the new

are doing the job. Import the modified model using the BDC service configuration in SharePoint Central Administration.

Procedure: Importing an XML file to Update a BCS Model

In the BDC service configuration in SharePoint Central Administration, click on the Edit tab. 1.

In the View section of the ribbon, select External Content Types from the drop-down list. 2.

Click on the drop-down menu for Products and click Delete. 3.

Click OK. 4.

In the BDC Models section of the ribbon, select Import. 5.

In the BDC Model File box, type your XML file name or click the Browse button to browse for it. 6.

Click the Import button. You may see one or two warnings, but there should be no errors. 7.

Click OK. 8.

Now you can try it out by recreating the content source for search and then making changes to the records, starting the incremental crawl

see the results.

Page 27 of 33 MSDN Blogs

1/25/2013 http://blogs.msdn.com/b/ericwhite/archive/2010/04/27/searching-external-data-in-sharepoi...

If the database really needs to delete records, rather than just mark them as "deleted," you will need to use another approach to keep track of deletions.

The easiest is to create a new table for deleted IDs and add a trigger to the original table that adds records to the new table whenever they are deleted. The

table of deleted records could store just IDs and a timestamp. If your incremental crawls are run on a schedule, then you could also schedule a SQL job

delete older records from the table.

Comparing the Two Approaches

The timestamp-based approach is much simpler to implement, but the changelog-based approach is much more efficient. The timestamp

requires that the search service to store the timestamps for every record and then compare them all every time it does an incremental crawl. The

-based approach only requires the search service to store a single timestamp. In addition, when the search service requests the changes, it only

the records that have changed. If you have a very large number of records, then the changelog-based approach will save a lot of time and storage.

have very few records, then the incremental crawls aren't really necessary. If you aren't sure which approach to use, you can try the

approach first. Then, if it seems to be too slow or be taking too much disk storage, try switching to the changelog

have any control over the database, you may be forced to use the approach that works with the existing schema.

What Ever Happened to IDEnumerator?

If you have used SharePoint search with external data since the Business Data Catalog was introduced in SharePoint 2007, you would have used

IDEnumerator instead of the Read List operation. The IDEnumerator method still works for searches, but it is not supported directly in SharePoint Designer.

One of the uses of IDEnumerator was also to allow for very large external lists that otherwise would be throttled by the site settings. The search crawlers are

not constrained by the throttle settings, so there is no need to use "segmenting" techniques. If you already have an ECT with IDEnumerator defined, you can

use it for search as well. For new ECT definitions, it is quicker to use the Read List operation in SharePoint Designer.

Using the Same External Content Type for an External List

The use of ECTs for external lists is not really within the scope of this paper, but you might be tempted to use the same ECTs for both.

many of the customizations for search could also affect the appearance of the external list. Also, you could easily have to deal with

could interfere with the efficiency of the search crawls. In general, it is probably better to use different ECTs for these purposes

simple and less than a thousand records.

Additional Resources

For more information, see the following resources:

Enterprise Search administration

Business Data Connectivity services administration

Business Connectivity Services Schema Reference

Stereotyped Operations Supported By BDC

The Notion of Associations and the External Item Picker

Tooling Associations in SharePoint Designer 2010

Configure the Secure Store Service (SharePoint Server 2010)

Page 28 of 33 MSDN Blogs

1/25/2013 http://blogs.msdn.com/b/ericwhite/archive/2010/04/27/searching-external-data-in-sharepoi...

DL

3 May 2010 4:58 PM

Thorough technical blog posts like this are vital! We appreciate the great efforts that have gone into these

Moe

1 Jun 2010 8:37 AM

Thank you for this article Eric.

I was searching for this topic for some time now. I am trying to figure out which approach to use for providing external data (from an SQL

records) to our users: providing search through a content source reading from an ECT or exposing the ECT through a Business Data

know we could use both at the same time (i.e. search and the filtering through a web part), but we need to choose the most

I guess after reading this article, I will be going with the search option using the incremental crawls.

Thank you.

Sara

11 Jun 2010 8:31 AM

This is incredibly helpful. When I'm configuring the Business Data Connectivity Service, I get an error after

Access is denied by Business Data Connectivity, with a long and unhelpful correlation ID. Any ideas as to why this is happening?

Eric White - Independant Consultant

11 Jun 2010 2:15 PM

Hi Sara,

I'm not sure why. Have you reviewed Rob Silver's permissions post?

blogs.msdn.com/.../permissions-in-business-connectivity-services.aspx

Also, if you use SPD to modify the ECT, you need to re-setup permissions:

blogs.msdn.com/.../access-denied-by-business-data-connectivity.aspx

-Eric

Kirk Liemohn

30 Jul 2010 6:38 AM

I have a few questions regarding incremental crawls and throttling:

1) Is it true that deleted items cannot be handled with timestamp-based incremental crawls? I didn't see

Regardless, the better approach seems to be changelog-based if feasible

2) In your large XML snippet for changelog-based crawls you use @LastRunDate for the RdbCommandText in the GetChangedProductIds method, but

for the RdbCommandTExt in the GetDeletedProductIds method. Am I missing something? It's mentioned as Parameter in the latter method,

3) Is there a way to run queries in batches for throttling purposes? Say you are doing a full crawl and you have a million records.

records 1000 at a time? How do you prevent a huge full crawl query from causing memory and resource

Page 29 of 33 MSDN Blogs

1/25/2013 http://blogs.msdn.com/b/ericwhite/archive/2010/04/27/searching-external-data-in-sharepoi...

BobMcClellan

30 Jul 2010 10:34 AM

Kirk,

1. The search service handles deletions for you when using the timestamp-based incremental crawl. It

their current timestamp. If it does not find one of the old records in the new list, then it assumes that record has been deleted. That means that if your database does

not actually delete the records, you will need to manually modify the SQL for the Read List operation so that it will exclude the deleted records. (This can

with the same kind of change as I described for the changelog-based approach.)

2. You are not missing something, the article is. The correct SQL for the GetDeletedProductIds method should be as follows:

If @LastRunDate != CAST('1900-1-1' as datetime)

BEGIN

SELECT [ProductID] FROM [Production].[vProductAndDescription]

WHERE [DiscontinuedDate] > @LastRunDate

END

SET @LastRunDate=CURRENT_TIMESTAMP

That was totally my fault. (Eric, perhaps you can replace that SQL and make it bold, just like the GetChangedProductIds

3. The way to prevent huge crawls is to use the changelog-based incremental crawl so that only the changes are being processed. However, you

all the records the first time. If you want that huge index, it is going to take some time, but there is no need to throttle. SQL

groups as the search service is processing them. It shouldn't be a problem, but you will have a big stored

Search Results, you can remove fields from the Read List operation so that they won't be indexed and that will reduce the overall size of the stored index.

-Bob

BobMcClellan

30 Jul 2010 10:38 AM

Hmm, in my previous comment, the post has changed my "& gt ;" (remove spaces) into a >.

Kirk Liemohn

30 Jul 2010 1:51 PM

Bob, thanks for the quick response - and thanks to you and Eric for an excellent posting!!!

What you have said makes sense. For the throttling part it sounds like I should go to search administration (or BCS admin?) to slow down the crawling and SQL

server will adjust accordingly. I have had a problem with the MOSS 2007 BDC where a crawl basically took control of the CPU for two days. Granted, that was

against an underpowered test environment, but I still want to be a good citizen for the rest of the SharePoint users and users for the external

Eric White - Independant Consultant

30 Jul 2010 3:20 PM

I've updated the post to contain the correct code. Thanks for the update, Bob.

Page 30 of 33 MSDN Blogs

1/25/2013 http://blogs.msdn.com/b/ericwhite/archive/2010/04/27/searching-external-data-in-sharepoi...

Kirk Liemohn

5 Aug 2010 10:09 PM

A few more comments now that I have gone through this completely.

1. I believe there is a typo for the second time you are importing a BDC model. It specifies to delete the

"Products" ECT.

2. For those that like to have it spelled out in black and white, here is the alter view statement needed to successfully run the

ALTER VIEW [Production].[vProductAndDescription]

WITH SCHEMABINDING

AS

SELECT

p.[ProductID]

,p.[Name]

,p.[DiscontinuedDate]

,pm.[Name] AS [ProductModel]

,pmx.[CultureID]

,pd.[Description]

,CASE WHEN p.ModifiedDate > pm.ModifiedDate THEN p.ModifiedDate ELSE pm.ModifiedDate END AS ModifiedDate

FROM [Production].[Product] p

INNER JOIN [Production].[ProductModel] pm

ON p.[ProductModelID] = pm.[ProductModelID]

INNER JOIN [Production].[ProductModelProductDescriptionCulture] pmx

ON pm.[ProductModelID] = pmx.[ProductModelID]

INNER JOIN [Production].[ProductDescription] pd

ON pmx.[ProductDescriptionID] = pd.[ProductDescriptionID];

3. To test out a modification and deletion for the changelog-based incremental crawl, here is the SQL that you can

UPDATE Production.Product

SET Name='Classical Vest, M',ModifiedDate=GETDATE()

WHERE ProductID=865

UPDATE Production.Product

SET DiscontinuedDate=GETDATE()

WHERE ProductID=980

Kirk Liemohn

Page 31 of 33 MSDN Blogs

1/25/2013 http://blogs.msdn.com/b/ericwhite/archive/2010/04/27/searching-external-data-in-sharepoi...

6 Aug 2010 6:42 AM

One other note. Last night I was having problems seeing the deletes show up in the incremental crawls.

don't see it (maybe it is in moderation limbo). Regardless, I was wrong. You can see deletes just fine. I was looking at the "Successes" column in

expecting to see the count go up there for every deleted item. It does not (makes sense if you think about it). You need to look at the

Chalk that up to working too late at night (and having my browser too small to see that column).

Kirk Liemohn

18 Aug 2010 2:52 PM

For your changelog-based incremental crawls you have T-SQL that sets @LastRunDate after performing the

missed that occurred during the change query? Would a better approach be to store @LastRunDate in a temporary variable, SET

@LastRunDate=CURRENT_TIMESTAMP, then run the query? Of course you'd then have to have an ELSE statement to

1900-1-1.

Kirk Liemohn

19 Aug 2010 9:00 PM

For what it's worth, I tried the following for my changelog-based incremental crawls to hopefully remove any

DECLARE @NewRunDate datetime

if @LastRunDate != CAST('1900-1-1' as datetime)

BEGIN

SET @NewRunDate=CURRENT_TIMESTAMP

SELECT [ProductID] FROM [Production].[vProductAndDescription] WHERE [ModifiedDate] > @LastRunDate

SET @LastRunDate=@NewRunDate

END

else

SET @LastRunDate=CURRENT_TIMESTAMP

macngif

8 Dec 2010 6:32 PM

Great post.

Successfully set up AdventureWorks example & got it going fine.

Then moved onto to my own ECTs & BDCs again all fine however when I implement a crawl using

AttachementAccessor property) my crawls error with the following

Error while crawling LOB contents. ( Invalid object name 'dbo.myECTtable'. )

Error is occurring when the crawl attempts to execute the select query for the association - i.e. the select statement (RdbCommandText) within the association

method.

The major difference between my case and the AdventureWorks case is that my parent and child ECTs (in the association relationship) exist in separate databases

(hence separate external systems).

Page 32 of 33 MSDN Blogs

1/25/2013 http://blogs.msdn.com/b/ericwhite/archive/2010/04/27/searching-external-data-in-sharepoi...

1 2

In both cases BDC is using a simple SQL query to grab the data.

Tried various different configurations in an attempt to resolve however no luck as yet.

I am starting to wonder if it is even possible to configure an association that spans across external systems

nir oren

24 Jan 2011 3:54 PM

This is a great post - 10X for that

One thinkg, what about LOB permissions. Let's say the LOB system managed it's own permissions how can we keep it in the search

For example let's say that teh products in you example are not available for everyone and the permissions managed in SQL tables, how can we

Page 33 of 33 MSDN Blogs

1/25/2013 http://blogs.msdn.com/b/ericwhite/archive/2010/04/27/searching-external-data-in-sharepoi...

Anda mungkin juga menyukai

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)Dari EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Penilaian: 4.5 dari 5 bintang4.5/5 (121)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryDari EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryPenilaian: 3.5 dari 5 bintang3.5/5 (231)

- Grit: The Power of Passion and PerseveranceDari EverandGrit: The Power of Passion and PerseverancePenilaian: 4 dari 5 bintang4/5 (588)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaDari EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaPenilaian: 4.5 dari 5 bintang4.5/5 (266)

- Never Split the Difference: Negotiating As If Your Life Depended On ItDari EverandNever Split the Difference: Negotiating As If Your Life Depended On ItPenilaian: 4.5 dari 5 bintang4.5/5 (838)

- The Emperor of All Maladies: A Biography of CancerDari EverandThe Emperor of All Maladies: A Biography of CancerPenilaian: 4.5 dari 5 bintang4.5/5 (271)

- The Little Book of Hygge: Danish Secrets to Happy LivingDari EverandThe Little Book of Hygge: Danish Secrets to Happy LivingPenilaian: 3.5 dari 5 bintang3.5/5 (400)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeDari EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifePenilaian: 4 dari 5 bintang4/5 (5794)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyDari EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyPenilaian: 3.5 dari 5 bintang3.5/5 (2259)

- Shoe Dog: A Memoir by the Creator of NikeDari EverandShoe Dog: A Memoir by the Creator of NikePenilaian: 4.5 dari 5 bintang4.5/5 (537)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreDari EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You ArePenilaian: 4 dari 5 bintang4/5 (1090)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersDari EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersPenilaian: 4.5 dari 5 bintang4.5/5 (345)

- Team of Rivals: The Political Genius of Abraham LincolnDari EverandTeam of Rivals: The Political Genius of Abraham LincolnPenilaian: 4.5 dari 5 bintang4.5/5 (234)

- Her Body and Other Parties: StoriesDari EverandHer Body and Other Parties: StoriesPenilaian: 4 dari 5 bintang4/5 (821)

- Rise of ISIS: A Threat We Can't IgnoreDari EverandRise of ISIS: A Threat We Can't IgnorePenilaian: 3.5 dari 5 bintang3.5/5 (137)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceDari EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RacePenilaian: 4 dari 5 bintang4/5 (895)

- The Unwinding: An Inner History of the New AmericaDari EverandThe Unwinding: An Inner History of the New AmericaPenilaian: 4 dari 5 bintang4/5 (45)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureDari EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FuturePenilaian: 4.5 dari 5 bintang4.5/5 (474)

- On Fire: The (Burning) Case for a Green New DealDari EverandOn Fire: The (Burning) Case for a Green New DealPenilaian: 4 dari 5 bintang4/5 (74)

- The Yellow House: A Memoir (2019 National Book Award Winner)Dari EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Penilaian: 4 dari 5 bintang4/5 (98)

- Paritala Ravi BookDokumen208 halamanParitala Ravi Booksrungk100% (4)

- EeNadu Sunday MagazineDokumen56 halamanEeNadu Sunday MagazineAnonymous RGh8Tg100% (6)

- Wife Has A CowDokumen3 halamanWife Has A Cowfantasticat100% (1)

- Unit 6Dokumen11 halamanUnit 6Marta100% (1)

- Comparison of Plant and Animal DevelopmentDokumen3 halamanComparison of Plant and Animal DevelopmentAnonymous RGh8Tg75% (8)

- Só˝B-Δäˇ-Gã‹ Kyëõ!: A-Ö˛$-Δ> - Ö˛Ü (¥Îgò˝-Mê¸$T-Àmê¸$ Mû¸Ö (ßê˛Ö Ayê˛$Z-Mê¸RtDokumen16 halamanSó˝B-Δäˇ-Gã‹ Kyëõ!: A-Ö˛$-Δ> - Ö˛Ü (¥Îgò˝-Mê¸$T-Àmê¸$ Mû¸Ö (ßê˛Ö Ayê˛$Z-Mê¸RtAnonymous RGh8TgBelum ada peringkat

- ¢Ëææ-Néà ´·Çüë Æ‘´'Èπ◊ Ü∆£Æ…Jh Ï©-Æœj Åúø'-Í∫Ç-Öà† ¶®Ω'X Ñ®Ω'ͬ ≤Ƒí∫'-Ûª'†O Föà ¢√U§Ƒ®ΩçDokumen1 halaman¢Ëææ-Néà ´·Çüë Æ‘´'Èπ◊ Ü∆£Æ…Jh Ï©-Æœj Åúø'-Í∫Ç-Öà† ¶®Ω'X Ñ®Ω'ͬ ≤Ƒí∫'-Ûª'†O Föà ¢√U§Ƒ®ΩçAnonymous RGh8TgBelum ada peringkat

- WS 2012 Evaluation GuideDokumen176 halamanWS 2012 Evaluation GuideBnaren NarenBelum ada peringkat

- Anandrao HouseDokumen35 halamanAnandrao HouseAnonymous RGh8TgBelum ada peringkat

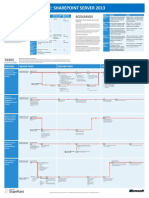

- Sharepoint 2013 BR PosterDokumen1 halamanSharepoint 2013 BR PosterAnonymous RGh8Tg100% (1)

- How To Diagram Arguments in Logic Premise and Conclusion IndicatorsDokumen18 halamanHow To Diagram Arguments in Logic Premise and Conclusion IndicatorsPrincipal HanguBelum ada peringkat

- Duane Broe Capstone ProjectDokumen58 halamanDuane Broe Capstone ProjectSelvi WulandariBelum ada peringkat

- Azure 3Dokumen12 halamanAzure 3felixhahn721Belum ada peringkat

- Multi Format LFDokumen17 halamanMulti Format LFIssac NewtonBelum ada peringkat

- Airtel Broadband Plan: Get 60GB Data at Rs 999 With Unlimited Free Calls. Book NowDokumen12 halamanAirtel Broadband Plan: Get 60GB Data at Rs 999 With Unlimited Free Calls. Book NowNelson RajaBelum ada peringkat

- Young Learners My Family Level 3Dokumen2 halamanYoung Learners My Family Level 3Farhan Muhammad IrhamBelum ada peringkat

- R&W Lesson PlanDokumen4 halamanR&W Lesson Planمحمد فاريزBelum ada peringkat

- Ekphrasis and Its Multifaceted Nature: Ways of Its Usage in Literature and CinematographyDokumen12 halamanEkphrasis and Its Multifaceted Nature: Ways of Its Usage in Literature and CinematographyFernando GarciaBelum ada peringkat

- SubnettingDokumen6 halamanSubnettingMadan Chaudhary100% (1)

- Bab 2Dokumen31 halamanBab 2Grace Padios MadriagaBelum ada peringkat

- ITE7 Chp1Dokumen66 halamanITE7 Chp1schools MashaelBelum ada peringkat

- Customs of The TagalogsDokumen7 halamanCustoms of The TagalogsJanna GuiwanonBelum ada peringkat

- B2 Course: Unit 63 - Passive Vs Active VoiceDokumen4 halamanB2 Course: Unit 63 - Passive Vs Active VoiceEugeniaBelum ada peringkat

- Meeting3-Block 1-Part 2-Introduction To PythonDokumen50 halamanMeeting3-Block 1-Part 2-Introduction To PythonHusam NaserBelum ada peringkat

- Assessment 3 - Assignment Ii (Group Genz)Dokumen23 halamanAssessment 3 - Assignment Ii (Group Genz)Nurman LismanBelum ada peringkat

- Sun Grid Engine UpdateDokumen55 halamanSun Grid Engine UpdateYasser AbdellaBelum ada peringkat

- ISO20022 MDRPart1 PaymentsClearingAndSettlement 2020 2021 v1 ForSEGReviewDokumen147 halamanISO20022 MDRPart1 PaymentsClearingAndSettlement 2020 2021 v1 ForSEGReviewArul SelvanBelum ada peringkat

- Chapter 2 - Sequences: SequenceDokumen16 halamanChapter 2 - Sequences: SequenceSahik KhnBelum ada peringkat

- Lesson Plan My FamilyDokumen4 halamanLesson Plan My FamilyKiara NavarroBelum ada peringkat

- Anagraw N Umyag2014Dokumen149 halamanAnagraw N Umyag2014Abrid IreglenBelum ada peringkat

- The YAP Prolog SystemDokumen30 halamanThe YAP Prolog SystemTom WestBelum ada peringkat

- The Kite Runner (Pashtun and Hazara Conflict)Dokumen7 halamanThe Kite Runner (Pashtun and Hazara Conflict)balliinjr100% (1)

- SNB KshudrarogaDokumen12 halamanSNB KshudrarogaImran Patel50% (2)

- MMS Final Year Thesis Collection - Aero Map For Formula Student (Paul Hendy - 2019)Dokumen42 halamanMMS Final Year Thesis Collection - Aero Map For Formula Student (Paul Hendy - 2019)isabellaBelum ada peringkat

- Datos mv440Dokumen39 halamanDatos mv440David Santos LatorreBelum ada peringkat

- HARMAN Employee Referral ProgramDokumen2 halamanHARMAN Employee Referral ProgramKrishna SrivastavaBelum ada peringkat

- Critical ReasoningDokumen10 halamanCritical ReasoningVaishnavi SomaniBelum ada peringkat

- Basic Organic Nomenclature0Dokumen1 halamanBasic Organic Nomenclature0RaghuSrinivasanBelum ada peringkat