Brocade VDX VCS Data Center Layer 2 Fabric Design Guide For Network OS

Diunggah oleh

david0young_2Judul Asli

Hak Cipta

Format Tersedia

Bagikan dokumen Ini

Apakah menurut Anda dokumen ini bermanfaat?

Apakah konten ini tidak pantas?

Laporkan Dokumen IniHak Cipta:

Format Tersedia

Brocade VDX VCS Data Center Layer 2 Fabric Design Guide For Network OS

Diunggah oleh

david0young_2Hak Cipta:

Format Tersedia

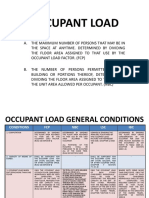

DATA CENTER

Brocade VDX/VCS Data Center Layer 2 Fabric Design Guide for Brocade Network OS v2.1.1

DATA CENTER

DESIGN GUIDE

CONTENTS

Introduction

Building a Multi-Node VCS Fabric

Design Considerations

Topology

Clos Fabrics

Mesh Fabrics

VCS-to-VCS Connectivity

Switch Platform Considerations

Oversubscription Ratios

Scalability

Implementation

VCS Nuts and Bolts (Within the Fabric)

Deciding Which Ports to Use for ISLs

ISL Trunking

Brocade ISL Trunk

Brocade Long Distance ISL

ECMP Load Balancing

Configurable Load Balancing

Operational Considerations

Brocade VDX Layer 2 Features (External to Fabric)

Active-Standby Connectivity

Active-Active Connectivity

vLAG Enhancements

vLAG Configuration Guidelines with VMware ESX Server and Brocade VDX

vLAG Minimum Links

LACP SID and Selection Logic

LACP SID Assignment

LACP Remote Partner Validation

Operational Consideration

show lacp sys-id:

Edge Loop Detection (ELD)

Connecting the Fabric to Uplinks

Upstream Switches with MCT

Upstream Switches Without MCT

Connecting the Servers to Fabric

Rack Mount Servers

Blade Servers

Manual Port Profiles

Dynamic Port Profile with VM Aware Network Automation

Data Center Network and vCenter

Network OS Virtual Asset Discovery Process

VM-Aware Network Automation MAC Address Scaling


Authentication

Port Profile Management

Usage Restriction and Limits

Third-Party Software

User Experience

Building a 2-Switch ToR VCS Fabric

Design Considerations

Topology

Licensing

Implementation

Building a 2-switch Aggregation Layer Using VCS

Design Considerations

Topology

Licensing

Implementation

Building the Fabric

24-Switch VCS Reference Architecture

Appendix A: VCS Use Cases

VCS Fabric Technology in the Access Layer

VCS Fabric Technology in the Collapsed Access/Aggregation Layer

VCS Fabric Technology in a Virtualized Environment

VCS Fabric technology in Converged Network Environments

Brocade VDX 6710 Deployment Scenarios

Glossary

Related Documents

About Brocade


INTRODUCTION

This document provides high-level design considerations for deploying Brocade VCS Fabric technology with Brocade VDX series switches. It explains the steps and configurations needed to deploy the following:

A 6-switch VCS Fabric topology providing physical or virtual server connectivity with iSCSI/NAS storage

A 2-switch Top of Rack (ToR) topology

A 2-switch VCS topology at the aggregation layer, aggregating 1 GbE switches

A 24-switch VCS topology

The target audience for this document includes sales engineers, field sales, partners, and resellers who want to deploy VCS Fabric technology in a data center. The reader is assumed to be already familiar with VCS Fabric technology, terms, and nomenclature. Explaining VCS nomenclature is beyond the scope of this document; readers new to it should consult the publicly available documents on Brocade VCS Fabric technology.

BUILDING A MULTI-NODE VCS FABRIC

Design Considerations

Topology

In all illustrations, the Brocade MLX is shown as the aggregation switch, which is the current Brocade recommendation. However, Brocade VCS is fully standards-compliant and can be deployed with any standards-compliant third-party switch.

Multi-node fabric design is a function of four variables: the number of devices connected to the fabric, the type of server/storage connectivity (1 GbE/10 GbE), oversubscription, and target latency. Tradeoffs are always needed to find the right balance among these four variables. Knowing the application communication pattern helps you tailor the network architecture to maximize network performance.

VCS technology is topology agnostic, so when designing a fabric with it, decide early in the process what the performance goal of the fabric is. If the goal is a low-latency fabric with the greatest path availability, and scalability is not a priority, then a full mesh is the most appropriate topology. If the goal is a balance among latency, scalability, and availability, then a Clos topology is more appropriate. Many combinations are possible between these two, including partial mesh, ring, star, and other hybrid networks, which can be designed to handle the tradeoffs between availability and latency. However, Brocade recommends either a full-mesh or a Clos topology for VCS Fabrics to provide resilient, scalable, and highly available Layer 2 fabrics.

Multi-node fabrics have several use case models; Appendix A describes the models for which multi-node fabrics are targeted. In releases up to and including Network OS v2.0.1, direct connectivity between two separate VCS fabrics is not supported. In those releases, an L2/L3 switch must be placed as a hub, with the VCS fabrics as spokes, to prevent loops in the network.

Clos Fabrics

Figure 1 shows a two-tier Clos Fabric. Generally, the top row of switches acts as core switches and provides connectivity to the edge switches. A Clos Fabric is a scalable architecture with a consistent hop count (3 maximum) for port-to-port connectivity. A Clos topology is easy to scale by adding switches in either the core or the edge. In addition, because VCS technology introduces routing at Layer 2, traffic load is distributed equally among all equal-cost multipaths (ECMP). In a resilient core-edge topology, there are two or more paths between any two edge switches.
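To make the hop-count and ECMP statements concrete, here is a minimal Python sketch that uses a breadth-first search to count the switches traversed and the number of equal-cost shortest paths between two edge switches. It assumes a hypothetical fabric in which each edge switch has one logical ISL (a single link or a trunk) to every core switch, and it counts hops as switches traversed; the helper names are illustrative and not part of Network OS.

```python
from collections import deque


def shortest_paths(adj, src, dst):
    """Return (switches traversed on a shortest path, number of equal-cost shortest paths)."""
    dist = {src: 0}
    count = {src: 1}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        for nbr in adj[node]:
            if nbr not in dist:  # first time reached: record its distance
                dist[nbr] = dist[node] + 1
                count[nbr] = count[node]
                queue.append(nbr)
            elif dist[nbr] == dist[node] + 1:  # another shortest path into nbr
                count[nbr] += count[node]
    # dist counts ISLs crossed; here a hop count means switches traversed (ISLs + 1)
    return dist[dst] + 1, count[dst]


def clos(num_core, num_edge):
    """Two-tier Clos: every edge switch has one logical ISL to every core switch."""
    cores = [f"core{i}" for i in range(num_core)]
    edges = [f"edge{i}" for i in range(num_edge)]
    adj = {c: list(edges) for c in cores}
    adj.update({e: list(cores) for e in edges})
    return adj


print(shortest_paths(clos(num_core=2, num_edge=4), "edge0", "edge3"))  # (3, 2)
print(shortest_paths(clos(num_core=4, num_edge=4), "edge0", "edge3"))  # (3, 4)
```

Adding core switches raises the number of equal-cost paths without changing the hop count, which is one way to see why a Clos scales by adding switches at either tier.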


Figure 1: 2-Tier Clos Fabric

Mesh Fabrics

Figure 2 shows a full-mesh fabric built with six switches. In a full-mesh topology, every switch is connected directly to every other switch. A full mesh is a resilient architecture with a consistently low hop count (2 hops) between any two ports. It is the best choice when a minimum number of hops is needed and no future increase in fabric scale is anticipated, because changing the size of a mesh can disrupt the entire fabric. For a mesh to be effective, traffic patterns should be evenly distributed with low overall bandwidth consumption.
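The cost of growing a full mesh can be sketched with simple arithmetic: n switches need n(n-1)/2 switch-to-switch connections, and each switch spends one ISL bundle on each of its n-1 peers. The Python below is illustrative only; `links_per_neighbor` is a hypothetical parameter for the number of physical links in each bundle.

```python
def full_mesh_connections(n):
    """Switch-to-switch connections needed for a full mesh of n switches."""
    return n * (n - 1) // 2


def fabric_ports_per_switch(n, links_per_neighbor=1):
    """Ports each switch dedicates to ISLs: one bundle to each of its n - 1 peers."""
    return (n - 1) * links_per_neighbor


for n in (6, 7):
    print(n, full_mesh_connections(n), fabric_ports_per_switch(n))
# 6 15 5
# 7 21 6
```

Going from six to seven switches adds six connections and consumes a new ISL port on every existing switch, which is why resizing a mesh touches the whole fabric. With four switches and `links_per_neighbor=10`, `fabric_ports_per_switch` returns 30, matching the mesh example in the oversubscription discussion.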

Figure 2: Full-Mesh Fabric


VCS-to-VCS Connectivity

VCS-to-VCS connectivity is supported starting with Network OS v2.1.0. Because of the lack of a loop detection mechanism, it is supported only for a limited set of topologies, and it is highly recommended that VCS-to-VCS connectivity be restricted to the following topologies:

ELD, which is available in Network OS v2.1.1, can be used as a loop detection mechanism between VCS fabrics. Prior to Network OS v2.1.1, the topology could not contain any loops. Also prior to Network OS v2.1.1, any local loop, even within a single cluster, caused broadcast storms and brought down the network, and a local loop in one cluster impacted the other cluster.

Two VCS clusters can be directly connected to each other: one at the access layer and the other at the aggregation layer.

Figure 3: VCS-to-VCS Cluster

One VCS cluster at the aggregation layer can be directly connected to up to 16 VCS clusters at the access layer; however, the access clusters must not be connected to each other.

All links connecting two clusters must be part of a single vLAG, and all multicast control and data traffic should be limited to 10 Gbps within the vLAG. This limitation exists because multicast traffic is not distributed: it is always sent out on a primary link.


Figure 4: VCS-to-VCS Cluster
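The topology rules above can be checked before cabling. The following Python sketch is a hypothetical pre-deployment check, not a Brocade tool: it takes each cluster's role and a list of physical links tagged with a vLAG ID (all names are placeholders) and flags links that do not join an aggregation cluster to an access cluster, cluster pairs whose links are split across several vLAGs, and aggregation clusters with more than 16 access clusters attached. It does not model the 10 Gbps multicast limit or ELD.

```python
MAX_ACCESS_PER_AGG = 16


def validate_vcs_to_vcs(roles, links):
    """roles: cluster -> "aggregation" or "access".
    links: (cluster_a, cluster_b, vlag_id) tuples, one per physical link."""
    problems = []
    vlags_per_pair = {}
    for a, b, vlag in links:
        if {roles[a], roles[b]} != {"aggregation", "access"}:
            problems.append(f"{a}-{b}: link must join an aggregation and an access cluster")
        vlags_per_pair.setdefault(frozenset((a, b)), set()).add(vlag)
    for pair, vlags in vlags_per_pair.items():
        if len(vlags) > 1:
            problems.append(f"{sorted(pair)}: links span {len(vlags)} vLAGs, expected 1")
    for agg in (c for c, r in roles.items() if r == "aggregation"):
        access = {c for pair in vlags_per_pair if agg in pair
                  for c in pair if roles[c] == "access"}
        if len(access) > MAX_ACCESS_PER_AGG:
            problems.append(f"{agg}: {len(access)} access clusters attached (max 16)")
    return problems


roles = {"agg": "aggregation", "acc1": "access", "acc2": "access"}
links = [("agg", "acc1", 10), ("agg", "acc1", 10), ("agg", "acc2", 20), ("acc1", "acc2", 30)]
for problem in validate_vcs_to_vcs(roles, links):
    print(problem)
# acc1-acc2: link must join an aggregation and an access cluster
```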

Switch Platform Considerations

Brocade VCS Fabrics can be designed with the Brocade VDX 6720-24 (16/24 port) and VDX 6720-60 (40/50/60 port) switches, the VDX 6710 (48 × 1 GbE copper + 6 × 10 GbE SFP+), the VDX 6730-32 (24 × 10 GbE SFP+, 8 × 8 Gbps FC ports), and the VDX 6730-76 (60 × 10 GbE SFP+, 16 × 8 Gbps FC ports). The Brocade VDX 6720-24 switch has a single-ASIC architecture delivering a constant port-to-port latency of ~600 ns. The Brocade VDX 6720-60 switch is multi-ASIC based, with latencies ranging from 600 ns to 1.8 µs. When designing a Clos topology, using the higher-port-count switches in the core enables greater scalability. The Brocade VDX 6710 provides cost-effective VCS fabric connectivity to 1 GbE servers, while the Brocade VDX 6730 connects the VCS fabric to the Fibre Channel (FC) Storage Area Network (SAN).
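A rough switch-latency budget for an edge-core-edge path follows from the per-switch figures above. This Python sketch simply sums those figures; it is an approximation that ignores cable propagation, serialization, and queuing delay, and its latency table contains only the two platforms quoted in this section.

```python
# Port-to-port latency range per switch, in nanoseconds, as quoted in this section
# (the VDX 6720-24 figure is approximate: ~600 ns).
LATENCY_NS = {
    "VDX 6720-24": (600, 600),
    "VDX 6720-60": (600, 1800),
}


def path_latency_ns(switches):
    """Sum best-case and worst-case switch latency along a path of switches."""
    best = sum(LATENCY_NS[s][0] for s in switches)
    worst = sum(LATENCY_NS[s][1] for s in switches)
    return best, worst


# Edge -> core -> edge, with VDX 6720-24 edges and a VDX 6720-60 core.
print(path_latency_ns(["VDX 6720-24", "VDX 6720-60", "VDX 6720-24"]))  # (1800, 3000)
```

Under these figures, a multi-ASIC core switch adds up to 1.2 µs to the worst case compared with an all-6720-24 path (1800 ns), in exchange for the greater scalability it provides in the core.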

In addition, it is important to consider the airflow direction of the switches. The Brocade VDX is available in both port-side exhaust and port-side intake configurations; choose the airflow that matches your hot-aisle/cold-aisle layout.

Note that the Brocade VDX 6710 does not require a Ports on Demand (POD) or Fibre Channel over Ethernet (FCoE) license, and its VCS fabric connections (ISLs) must use the 10 GbE ports. The 10 GbE ports may also be used to connect to servers, but these server-facing ports do not support Data Center Bridging (DCB). Lastly, the Brocade VDX 6710 is supported only in VCS fabric mode.

Oversubscription Ratios

Brocade VDX switches do not have dedicated uplinks. Any of the available 10 GbE ports can be used as uplinks to provide the desired oversubscription ratio. In a mesh network, the oversubscription ratio depends directly on the number of uplinks and downlinks. For example, a 120-port, non-blocking mesh can be designed with four 60-port Brocade VDX switches. Each switch has 30 downstream ports and 30 upstream ports (10 connected to each of the other three switches), so the oversubscription ratio is 1:1, with 30 upstream ports serving 30 downstream ports.

In a two-tier Clos topology, there are two levels of oversubscription if the fabric uplinks are connected to the core layer. For North-South traffic, oversubscription is the product of the oversubscription of the core switches and that of the edge switches. For example, if a 60-port core switch has 20 uplinks and 40 downlinks, its oversubscription ratio is 2:1 (40:20). If the edge switches have the same oversubscription, the fabric oversubscription for North-South traffic is 4:1. For East-West traffic, or if the uplinks are also connected at the edge, the oversubscription depends only on that of the edge switches: 2:1 in this example.
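The ratios in this section can be checked with a few lines of Python. This sketch expresses each ratio as downlinks per uplink using exact fractions; the port counts are the ones from the examples above, and the edge switch is assumed to use the same 40/20 split as the core.

```python
from fractions import Fraction


def oversubscription(downlinks, uplinks):
    """Oversubscription as downlink ports per uplink port (2 means 2:1)."""
    return Fraction(downlinks, uplinks)


mesh = oversubscription(30, 30)   # four 60-port switches, 30 down / 30 up each
core = oversubscription(40, 20)   # 60-port core switch: 40 downlinks, 20 uplinks
edge = oversubscription(40, 20)   # edge switch with the same ratio
north_south = core * edge         # traffic crosses both tiers, so the ratios multiply
east_west = edge                  # traffic turns at the core; only the edge ratio applies

print(f"mesh {mesh}:1, north-south {north_south}:1, east-west {east_west}:1")
# mesh 1:1, north-south 4:1, east-west 2:1
```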

Scalability

When designing the fabric, it is important to consider the current scalability limits listed in the release notes for the software running on the switches. These limits are expected to increase in future software releases without requiring hardware upgrades; refer to the release notes for the current values.

The Brocade VDX series of switches allows for the creation of arbitrary network topologies. Because of this flexibility, it is not possible to cover all architectural scenarios. Therefore, the scope of this document is to provide a baseline of architectural models to give the reader a sense of what can be built.

Implementation

With the topology, switch platforms, oversubscription, and other variables decided, you can plan how to build the network. As mentioned earlier, this document provides a baseline of architectural models rather than covering every possible topology. It describes one such model: a core-edge network using four 24-port edge switches and two 24-port core switches, with a 4:1 oversubscription ratio. It also discusses how to connect various types of servers and storage to this fabric and how to connect the fabric to upstream devices. Figure 5 shows the basic design of this topology, and Table 1 lists the equipment required for this design.

Figure 5: Topology for Reference Architecture


| Hardware | Quantity | Comments |
| --- | --- | --- |
| BR-VDX 6720-24 (-F or -R) | | Network OS v2.1.0 |
| 10G-SFPP-TWX-0508 | 24 (6x4) | For ISLs |
| 10G-SFPP-TWX-0308 | Depends on number of servers connected | |
| 10G-SFPP-SR | 32 (4x8) | For the Brocade VDX side, assuming that the Brocade MLX already has connectivity and fiber cables |
| BR-VDX6720-24VCS-01 | | |
| BR-VDX6720-24FCOE-01 | | |
| iSCSI Initiators | As needed | |
| iSCSI Targets | As needed | |
| FCoE Initiators | As needed | |
| FCoE Targets | As needed | |

Table 1: Equipment required for a 6-Switch VCS Fabric Solution

The topology used in this example is only a sample. Brocade VCS fabrics can be built from any mix and number of switches within the scalability limits of the software version in use.


VCS NUTS AND BOLTS (WITHIN THE FABRIC)

Deciding Which Ports to Use for ISLs

Any port on a Brocade VDX switch can be used to form an Inter-Switch Link (ISL); no special configuration is required to create an ISL. Brocade VDX switches support two port types: edge ports and fabric ports. An edge port is used for any connectivity other than VDX-to-VDX, whether the port connects to a network switch outside the Brocade VDX fabric (when running in VCS mode) or to an end device. A fabric port is used to form an ISL to another Brocade VDX switch and can participate in a Brocade VDX ISL Trunk Group.

ISL Trunking

When deciding which ports to use for ISL Trunking, it is important to understand the concept of port groups on Brocade VDX switches, as shown in Figures 6 through 10. ISL Trunks can be formed only between ports in the same port group. In addition, the cables connecting the ports that form a trunk should all be the same length.

Figure 6: Port Groups on the Brocade VDX 6720-60

Figure 7: Port Groups on the Brocade VDX 6720-24


Figure 8: Port Groups on the Brocade VDX 6710-54

Figure 9: Port Groups on the Brocade VDX 6730-32

Figure 10: Port Groups on the Brocade VDX 6730-76
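The two trunking rules (same port group, equal cable length), together with the 8-link trunk limit, can be applied mechanically when planning ISLs. This Python sketch groups the links toward one neighbor switch into candidate trunks. The real port-group layout comes from Figures 6 through 10 and is not reproduced here, so the caller supplies a `port_group_of` function; the mapping used in the example (six consecutive ports per group) is purely hypothetical.

```python
from itertools import groupby

MAX_TRUNK_MEMBERS = 8  # Brocade ISL Trunks use up to 8 links


def plan_trunks(links, port_group_of):
    """links: (local_port, cable_length_m) pairs toward ONE neighbor switch.
    Returns lists of ports that share a port group and a cable length."""
    def key(link):
        port, length = link
        return port_group_of(port), length

    trunks = []
    for _, members in groupby(sorted(links, key=key), key=key):
        ports = [port for port, _ in members]
        for i in range(0, len(ports), MAX_TRUNK_MEMBERS):  # split oversized groups
            trunks.append(ports[i:i + MAX_TRUNK_MEMBERS])
    return trunks


def hypothetical_group(port):
    return (port - 1) // 6  # NOT a real VDX layout: six consecutive ports per group


links = [(1, 5), (2, 5), (3, 5), (7, 5), (8, 3)]
print(plan_trunks(links, hypothetical_group))  # [[1, 2, 3], [8], [7]]
```

Port 8 ends up alone because its 3 m cable differs from the 5 m cable on port 7, even though both ports sit in the same (hypothetical) port group.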

When building a fabric, it is very important to think in terms of ISL bandwidth. Brocade ISL Trunks can be formed using up to 8 links, providing up to 80 Gbps of bandwidth. However, ISL throughput is limited to 80 Mpps (million packets per second), which results in lower bandwidth for small packets.
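The effect of the packet-rate ceiling can be estimated with a short calculation. The Python sketch below takes the smaller of the trunk's line rate and the rate implied by 80 Mpps at a given frame size. Two assumptions are labeled in the code: the 80 Mpps limit is treated as applying to the whole trunk, and each frame is charged 20 extra bytes of Ethernet preamble, start-of-frame delimiter, and inter-frame gap on the wire.

```python
LINK_GBPS = 10
PPS_LIMIT = 80e6          # assumption: the 80 Mpps ceiling applies to the whole trunk
WIRE_OVERHEAD_BYTES = 20  # preamble + start-of-frame delimiter + inter-frame gap


def trunk_throughput_gbps(frame_bytes, links=8):
    """Usable wire bandwidth of a trunk for a stream of equal-size frames."""
    line_rate = links * LINK_GBPS
    packet_bound = PPS_LIMIT * (frame_bytes + WIRE_OVERHEAD_BYTES) * 8 / 1e9
    return min(line_rate, packet_bound)


for size in (64, 128, 1518):
    print(size, round(trunk_throughput_gbps(size), 2))
# 64 53.76
# 128 80
# 1518 80
```

Under these assumptions, frames shorter than about 105 bytes (125 bytes on the wire) cannot fill an 8-link trunk.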


Once you have decided which ports will form ISLs, enable VCS mode on each switch that will become part of the fabric and assign its VCS ID and RBridge ID. The default VCS ID is 1, and the default RBridge ID is 1. Keep in mind that changing these parameters is disruptive and requires a switch reboot. During the reboot, if there is no predefined fabric configuration, the default fabric configuration is used when the switch comes up. Once the switches are in VCS mode, connect the ISLs, and the VCS fabric will form. Lastly, also refer to the section on Upgrade/Downgrade Considerations: VCS Fabric Functionality.
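Because both IDs default to 1 and changing them later requires a reboot, it is worth checking the plan before any switch is converted. This Python sketch is a hypothetical pre-flight check, not a Network OS feature: it assumes that every member of one fabric shares a VCS ID and that RBridge IDs must be unique within the fabric, and it reports violations in a planned assignment where `None` means a value left at its default.

```python
DEFAULT_VCS_ID = 1
DEFAULT_RBRIDGE_ID = 1


def check_fabric_plan(plan):
    """plan: switch name -> (vcs_id, rbridge_id); None means 'left at the default'."""
    resolved = {
        name: (DEFAULT_VCS_ID if vcs is None else vcs,
               DEFAULT_RBRIDGE_ID if rb is None else rb)
        for name, (vcs, rb) in plan.items()
    }
    problems = []
    vcs_ids = {vcs for vcs, _ in resolved.values()}
    if len(vcs_ids) > 1:
        problems.append(f"switches disagree on VCS ID: {sorted(vcs_ids)}")
    owner = {}
    for name, (_, rb) in sorted(resolved.items()):
        if rb in owner:
            problems.append(f"RBridge ID {rb} assigned to both {owner[rb]} and {name}")
        else:
            owner[rb] = name
    return problems


plan = {"core1": (1, 3), "edge1": (None, None), "edge2": (1, None)}
print(check_fabric_plan(plan))
# ['RBridge ID 1 assigned to both edge1 and edge2']
```

Leaving two switches at their defaults is the most likely way to hit a duplicate RBridge ID, which this check catches before the disruptive reboot.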

Brocade ISL Trunk

A Brocade Trunk is a hardware-based Link Aggregation Group (LAG). Brocade Trunks are formed dynamically between two adjacent switches. Trunk formation is not driven by the Link Aggregation Control Protocol (LACP); instead, it is controlled by the same FC trunking protocol that controls trunk formation on FC switches running Brocade Fabric OS (FOS). When links are connected between two adjacent Brocade VDX 6720s, Brocade Trunks are enabled automatically, without any additional configuration. Brocade Trunking operates at Layer 1 and is a vastly superior technology compared to the software-based hashing used in standard LAG, which operates at Layer 2. Brocade Trunking distributes traffic evenly across the member links on a frame-by-frame basis, without the need for hashing algorithms, and it can coexist with standard IEEE 802.3ad LAGs.
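The difference between per-flow hashing and frame-by-frame distribution shows up clearly when a few large flows share a trunk. The Python sketch below is a simplified model, not Brocade's implementation: the hash-based LAG pins each flow to one member chosen by a CRC32 of the flow name (an arbitrary stand-in for a real LAG hash), while the frame-by-frame model sends successive frames to successive members. It does not model how the hardware keeps frames in order.

```python
import zlib
from collections import Counter

MEMBERS = 4


def hash_based(frames):
    """Standard-LAG style: every frame of a flow lands on the same member link."""
    return Counter(zlib.crc32(flow.encode()) % MEMBERS for flow in frames)


def frame_by_frame(frames):
    """Trunk style (simplified): successive frames go to successive member links."""
    return Counter(i % MEMBERS for i, _ in enumerate(frames))


frames = ["flowA", "flowB"] * 1000  # two large flows, 1000 frames each, interleaved
print("hash-based:", sorted(hash_based(frames).values()))  # at most 2 links carry traffic
print("frame-by-frame:", sorted(frame_by_frame(frames).values()))  # [500, 500, 500, 500]
```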

Brocade Long Distance ISL

Normally, an ISL port with Priority Flow Control (PFC) is supported up to a distance of 200 m on eAnvil-based platforms. The long-distance-isl command extends that support to 2 km, 5 km, or 10 km.

For a 10 km ISL link, no other ISL links are allowed on the same ASIC. For 5 km and 2 km ISL links, another short-distance ISL link can be configured on the same ASIC. A maximum of three PFC-enabled priorities can be supported on a long-distance ISL port.

To configure a long-distance ISL port, use the long-distance-isl command in interface configuration mode. Refer to the Network OS Command Reference and the Network OS Administrator's Guide, v2.1.1, for more information on long-distance (LD) ISLs.
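The long-distance rules above can be encoded as a planning check. This Python sketch is hypothetical and reflects one reading of the text: distances are expressed in meters, a 10 km ISL must be alone on its ASIC, a 2 km or 5 km ISL may share its ASIC with at most one other (short-distance) ISL, and at most three PFC-enabled priorities are allowed. Confirm the exact rules in the Network OS Administrator's Guide before relying on them.

```python
LONG_DISTANCE_M = (2_000, 5_000, 10_000)  # distances named in this section
MAX_LD_PFC_PRIORITIES = 3


def check_long_distance_isl(distance_m, pfc_priorities, other_isls_on_asic):
    """other_isls_on_asic: number of other (short-distance) ISLs sharing this ASIC."""
    problems = []
    if distance_m not in LONG_DISTANCE_M:
        problems.append(f"{distance_m} m is not a long-distance ISL setting")
    if pfc_priorities > MAX_LD_PFC_PRIORITIES:
        problems.append(f"{pfc_priorities} PFC priorities (max {MAX_LD_PFC_PRIORITIES})")
    if distance_m == 10_000 and other_isls_on_asic > 0:
        problems.append("a 10 km ISL must be the only ISL on its ASIC")
    elif distance_m in (2_000, 5_000) and other_isls_on_asic > 1:
        problems.append("a 2 km or 5 km ISL allows only one other ISL on its ASIC")
    return problems


print(check_long_distance_isl(10_000, pfc_priorities=3, other_isls_on_asic=1))
# ['a 10 km ISL must be the only ISL on its ASIC']
```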

ECMP Load Balancing

Configurable Load Balancing

Load balancing distributes traffic across static and dynamic LAGs and vLAGs. Occasionally, some traffic patterns fail to distribute, so all traffic follows a single ECMP path. This underutilizes the other ECMP paths and can result in lost data traffic, even though other ECMP paths are available to offload the traffic. Network OS v2.1.0 introduces a command to configure ECMP load balancing parameters, giving users more flexibility. This command lets users select the parameters used to build the hashing scheme. Refer to the Network OS Administrator's Guide, v2.1.1, for more information on hashing schemes and usage guidelines.

Operational Considerations

The ECMP hash value is used to add more randomness to ECMP path selection.

The default ECMP hash value is a random number set at boot time.

The default ECMP load-balancing hashing scheme is based on source and destination IP address, MAC address, VLAN ID (VID), and TCP/UDP port.

When there is a large number of traffic streams, load balancing can be achieved without any additional ECMP-related configuration.
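To see why the choice of hash fields matters, consider many clients sending to one storage target. The Python sketch below is illustrative only: the field sets mirror the descriptions of two `fabric ecmp load-balance` options (the second matches the default fields listed above), but the hash function (CRC32) and the way a hash value is mixed in (XOR) are assumptions, not how the ASIC computes ECMP paths. The addresses are placeholders.

```python
import zlib

# Fields hashed by two of the load-balance options; the hash below is a stand-in.
SCHEMES = {
    "dst-mac-vid": ("dst_mac", "vid"),
    "src-dst-ip-mac-vid-port": ("src_ip", "dst_ip", "src_mac", "dst_mac",
                                "vid", "sport", "dport"),
}


def pick_path(flow, scheme, num_paths, hash_swap=0):
    """Choose an ECMP path index by hashing the scheme's fields (hypothetical hash)."""
    key = "|".join(str(flow[field]) for field in SCHEMES[scheme])
    return (zlib.crc32(key.encode()) ^ hash_swap) % num_paths


# 64 hypothetical iSCSI clients talking to one target behind the same MAC and VLAN.
flows = [
    {"src_ip": f"10.0.0.{i}", "dst_ip": "10.0.1.1",
     "src_mac": f"02:00:00:00:00:{i:02x}", "dst_mac": "02:00:00:00:01:01",
     "vid": 10, "sport": 40000 + i, "dport": 3260}
    for i in range(1, 65)
]
for scheme in SCHEMES:
    used = {pick_path(flow, scheme, num_paths=4, hash_swap=34456) for flow in flows}
    print(scheme, "uses", len(used), "of 4 paths")
```

With `dst-mac-vid`, every flow produces the same key and therefore the same path, reproducing the single-path problem described above; adding IP addresses and TCP/UDP ports gives the hash distinct inputs to spread across the paths.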


```
VDX6720_1(config-rbridge-id-2)# fabric ecmp load-balance ?
Possible completions:
  dst-mac-vid               Destination MAC address and VID based load balancing
  src-dst-ip                Source and Destination IP address based load balancing
  src-dst-ip-mac-vid        Source and Destination IP and MAC address and VID based
                            load balancing
  src-dst-ip-mac-vid-port   Source and Destination IP, MAC address, VID and TCP/UDP
                            port based load balancing
  src-dst-ip-port           Source and Destination IP and TCP/UDP port based load balancing
  src-dst-mac-vid           Source and Destination MAC address and VID based load balancing
  src-mac-vid               Source MAC address and VID based load balancing

VDX6720_2(config)# rbridge-id 2
VDX6720_2(config-rbridge-id-2)# fabric ecmp load-balance dst-mac-vid
VDX6720_2(config-rbridge-id-2)#
VDX6720_2(config-rbridge-id-2)# fabric ecmp load-balance-hash-swap 34456

VDX6720_2# show fabric ecmp load-balance
Fabric Ecmp Load Balance Information
-----------------------------------
Rbridge-Id : 2
```

BROCADE VDX LAYER 2 FEATURES (EXTERNAL TO FABRIC)

Active-Standby Connectivity

Active/Standby connectivity provides link-layer redundancy in a network. The most common Layer 2 protocol for providing Active/Standby connectivity is the Spanning Tree Protocol (STP). STP was historically acceptable in traditional data center networks, but as server density in data centers increases, the available link capacity must be used more fully, which STP prevents by blocking redundant links.

Active-Active Connectivity

MCT and vLAG

Multi-Chassis Trunking (MCT) is an industry-accepted solution for eliminating spanning tree in Layer 2 topologies. MCT is a special case of a Link Aggregation Group (LAG), as covered in IEEE 802.3ad, in which the LAG ends terminate on two separate chassis that are directly connected to each other. Virtual LAG (vLAG), a Brocade innovation, further extends the LAG concept by allowing a LAG to be formed across up to four physical switches that need not be directly connected to each other, as long as they participate in the same VCS fabric.

vLAG Enhancements

In Brocade Network OS v2.1, the vLAG feature is enhanced to remove several usage restrictions imposed in the

previous Network OS releases. These are highlights of vLAG enhancement in Network OS v2.1:

The ability to specify a minimum number of links that must be active on a vLAG before it can form

aggregation is now supported in VCS mode. This was supported earlier only in standalone mode. The

existing minimum-links Command Line Interface (CLI) under the port channel is now available in VCS

mode.

The ability to validate a remote partner for dynamic vLAG is also added.

The maximum number of RBridges participating in a vLAG is now increased to 4.

The maximum number of ports participating in a vLAG is 32, with 16 from a single RBridge.
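The vLAG limits above can be checked before configuration with a small helper. This is a hypothetical planning sketch in Python, not a Network OS feature; member ports are written as `rbridge/slot/port` strings purely for illustration.

```python
from collections import Counter

# Limits quoted in the vLAG enhancements list above.
MAX_RBRIDGES = 4
MAX_PORTS = 32
MAX_PORTS_PER_RBRIDGE = 16


def vlag_violations(members):
    """Check a planned vLAG given as (rbridge_id, port_name) pairs.

    Returns a list of human-readable problems; an empty list means the plan
    fits within the documented limits.
    """
    problems = []
    ports_per_rbridge = Counter(rbridge for rbridge, _ in members)
    if len(ports_per_rbridge) > MAX_RBRIDGES:
        problems.append(
            f"{len(ports_per_rbridge)} RBridges exceeds the limit of {MAX_RBRIDGES}"
        )
    if len(members) > MAX_PORTS:
        problems.append(f"{len(members)} ports exceeds the limit of {MAX_PORTS}")
    for rbridge, count in sorted(ports_per_rbridge.items()):
        if count > MAX_PORTS_PER_RBRIDGE:
            problems.append(
                f"RBridge {rbridge} has {count} ports; the limit is {MAX_PORTS_PER_RBRIDGE}"
            )
    return problems


# Four RBridges with eight ports each: 32 ports in total, within every limit.
plan = [(rb, f"{rb}/0/{p}") for rb in (1, 2, 3, 4) for p in range(1, 9)]
print(vlag_violations(plan))
```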


vLAG Configuration Guidelines with VMware ESX Server and Brocade VDX

VMware recommends configuring an EtherChannel on the switch when the administrator selects IP-based hashing as the Network Interface Card (NIC) teaming policy. All other NIC teaming options should use a regular switch port configuration.

vLAG Minimum Links

The Minimum Links feature allows the user to specify the minimum number of links that must be active on the vLAG

before the links can be aggregated. Until the minimum number of links is reached, the port channel shows protocol

down. The default minimum number of links to form an aggregator is 1. The minimum link configuration allows the

user to create vLAGs with a strict lower limit on active participating port members.

As with all other port-channel configuration commands executed on a Brocade VCS operating in Fabric Cluster mode, the user must configure the new minimum link value on each RBridge participating in the vLAG. The port channel goes operationally down if the number of active links in the vLAG falls below the configured minimum, and it comes operationally up again when the number of active links reaches that minimum. The events that change the active link count are: an active link going up or down, a link being added to or deleted from the vLAG, or a participating RBridge going down or coming back up.

Please note that only ports with the same speed are aggregated.
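The minimum-links behavior described above reduces to a count compared against a threshold, recomputed on every triggering event. The Python sketch below models that; the class and method names are hypothetical, and treating a single configured `speed_gbps` as the aggregation speed is an assumption used to represent the same-speed rule.

```python
from dataclasses import dataclass, field


@dataclass
class VLagState:
    """Tracks whether a vLAG port channel is operationally up (illustrative)."""

    min_links: int = 1   # default minimum, as stated above
    speed_gbps: int = 10  # hypothetical: links at other speeds are not counted
    # (rbridge_id, port) -> (speed_gbps, link_is_up)
    links: dict = field(default_factory=dict)
    down_rbridges: set = field(default_factory=set)

    # Each method below is one of the events that changes the active link count.
    def add_link(self, rbridge, port, speed_gbps, up=True):
        self.links[(rbridge, port)] = (speed_gbps, up)

    def delete_link(self, rbridge, port):
        self.links.pop((rbridge, port), None)

    def set_link(self, rbridge, port, up):
        speed, _ = self.links[(rbridge, port)]
        self.links[(rbridge, port)] = (speed, up)

    def rbridge_down(self, rbridge):
        self.down_rbridges.add(rbridge)

    def rbridge_up(self, rbridge):
        self.down_rbridges.discard(rbridge)

    def active_links(self):
        return sum(
            1
            for (rbridge, _), (speed, up) in self.links.items()
            if up and speed == self.speed_gbps and rbridge not in self.down_rbridges
        )

    def is_up(self):
        # Down below the minimum; up again once the count reaches it.
        return self.active_links() >= self.min_links


v = VLagState(min_links=3)
v.add_link(1, "1/0/1", 10)
v.add_link(1, "1/0/2", 10)
v.add_link(2, "2/0/1", 10)
v.rbridge_down(2)
print(v.active_links(), v.is_up())  # 2 False
```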

LACP SID and Selection Logic

As part of establishing dynamic LACP LAGs (or port channels), LACP PDU frames are exchanged between the switch

and the end devices. The exchange includes a unique identifier for both the switch and the device that is used to

determine which port channel a link should be associated with. In Network OS v2.1, additional support is added for

selecting a unique and consistent Local SID (LACP System ID), which is shared between all RBridges that are

connected to the same vLAG. This SID is unique for each switch in the VCS.

For vLAG split and rejoin events, in which a member RBridge leaves and later rejoins the cluster, the SID reselection logic is enhanced with a knob (ignore-split) that controls split recovery.

Split Recovery: In Network OS v2.1.0, with no-ignore-split configured, the SID is derived from the actual MAC address of one of the participating RBridges (the SID Master). When a vLAG is formed, the SID of the first RBridge configured into the vLAG is used. If the RBridge that owns the vLAG's SID leaves the cluster, a new SID is selected from the MAC address of one of the other RBridges, and the vLAG reconverges.

No Split Recovery: In this mode (ignore-split, the default), a VSID (Virtual SID), which is a unique identifier derived from the VCS ID as in Network OS v2.0, is used as the LACP SID for the vLAG. Upon a split, all RBridges continue to advertise the same VSID as their LACP SID, so no reconvergence is needed when nodes leave or (re)join the vLAG in VCS mode.
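The two SID selection modes can be summarized in a short Python sketch. It assumes that the replacement SID Master after a departure is the next RBridge in configuration order (the text only says "one of the other RBridges") and treats the VSID as an input, because its exact derivation from the VCS ID is not described here. The RBridge 1 MAC and the VSID in the usage lines come from the `show lacp sys-id` output in the Operational Consideration section; the RBridge 2 MAC is made up.

```python
def select_sid(ignore_split, configured_order, rbridge_macs, in_cluster, vsid):
    """Return (lacp_sid, sid_master) for one vLAG (illustrative model).

    ignore_split      True for "No Split Recovery", False for no-ignore-split
    configured_order  RBridge IDs in the order they were configured into the vLAG
    rbridge_macs      RBridge ID -> that RBridge's MAC address
    in_cluster        RBridge IDs currently in the cluster
    vsid              the VCS-derived virtual SID, taken as given here
    """
    if ignore_split:
        # Every RBridge advertises the same VSID; there is no SID master.
        return vsid, None
    # Assumption: the first still-present RBridge in configuration order owns the SID.
    for rbridge in configured_order:
        if rbridge in in_cluster:
            return rbridge_macs[rbridge], rbridge
    return None, None


macs = {1: "00-05-33-8d-0e-25", 2: "00-05-33-00-00-02"}  # RBridge 2 MAC is made up
vsid = "01-e0-52-00-00-13"
print(select_sid(False, [1, 2], macs, {1, 2}, vsid))  # ('00-05-33-8d-0e-25', 1)
print(select_sid(False, [1, 2], macs, {2}, vsid))     # ('00-05-33-00-00-02', 2)
print(select_sid(True, [1, 2], macs, {2}, vsid))      # ('01-e0-52-00-00-13', None)
```

The sketch makes the trade-off visible: with no-ignore-split, the SID (and therefore the LACP negotiation with the partner) changes when the SID Master leaves, while with ignore-split the advertised SID never changes.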

LACP SID Assignment

In Network OS v2.1.0, the SID is no longer a virtual MAC address derived from the VCS ID; instead, it is the actual MAC address of one of the participating RBridges. This allows the maximum number of RBridges that can participate in a vLAG to scale from 2 to 4.

LACP Remote Partner Validation

A vLAG's member links on different RBridges may sometimes be connected to different standalone switches. In previous Network OS releases, the remote partner information for a dynamic vLAG was not validated across RBridges; the user was responsible for ensuring that all links in the dynamic vLAG were connected to the same remote device and key. In Network OS v2.1.0, remote partner validation is added: the first partner information received is sent to all member RBridges. If a member link on another RBridge is connected to a different partner, aggregation fails and the vLAG is not formed with that link.
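The validation rule can be sketched as follows: the first partner information received becomes the reference, and any member link that reports a different partner system ID or key is refused. This Python model is illustrative only; the class name and the (system ID, key) tuple used for partner information are assumptions, and the system IDs in the usage lines are made up.

```python
class PartnerValidator:
    """Sketch of dynamic-vLAG remote partner validation (illustrative)."""

    def __init__(self):
        self.reference = None  # (partner_system_id, partner_key) received first
        self.accepted = set()  # member links allowed to aggregate

    def on_partner_info(self, rbridge, port, system_id, key):
        partner = (system_id, key)
        if self.reference is None:
            # First partner info received; in the fabric this is shared with
            # every member RBridge.
            self.reference = partner
        if partner == self.reference:
            self.accepted.add((rbridge, port))
            return True
        # Different remote device or key: this link does not aggregate.
        self.accepted.discard((rbridge, port))
        return False


v = PartnerValidator()
v.on_partner_info(1, "1/0/1", "0x8000,00-11-22-33-44-55", 10)
print(v.on_partner_info(3, "3/0/1", "0x8000,00-aa-bb-cc-dd-ee", 10))  # False
```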


Operational Consideration

To ensure that the RBridges that join the fabric (RB3 in Figure 11) pick up the partner state, the remote SID state for

vLAGs is included in the local database exchange. The following show command provides information on the SID

Master.

show lacp sys-id:

Port-channel Po 10 - System ID: 0x8000,00-05-33-8d-0e-25 - SID Master: rbridge-id 1 (ignore-split Disabled)

Port-channel Po 20 - System ID: 0x8000,01-e0-52-00-00-13 - SID Master: N/A (ignore-split Enabled Default)

Figure 11: SID Update on LACP Join

If both RBridges are connected to the same remote device, the remote SID should match.

Figure 12: LACP SID Assignment


If the RBridges are configured for the same vLAG but are connected to different remote devices, the remote SID values do not match. Because the local SID state is forced to synchronize between the RBridges, the side whose local SID is forced to change ends up with disabled links.

Edge Loop Detection (ELD)

Edge Loop Detection (ELD) is a Brocade Layer 2 loop detection mechanism. It uses PDUs to detect loops in the

network. This protocol is mainly intended for VCS-to-VCS loop prevention, but it can also be used in networks that connect a VCS fabric to standalone switches.

The ELD feature is implemented to block redundant links between two VCS fabrics: when a device detects a loop by receiving PDUs that it originated, it disables all redundant links in that network. This prevents packet storms caused by loops that result from misconfiguration.

The primary purpose of ELD is to block a Layer 2 loop caused by misconfiguration. ELD should be used as a tool to

detect any loops in the network, rather than using it to replace Layer 2 protocols such as xSTP, Metro Ring Protocol

(MRP), and so on.

The basic ELD functionality is as follows: ELD is enabled on a specific port and VLAN combination. Each ELD-enabled

interface on an RBridge sends an ELD PDU. This PDU contains information about the VCS ID and RBridge ID of the

node it is sending from, the VLAN associated with this interface on which ELD is enabled, the ELD port priority

parameter, and so on. ELD can be configured on Access mode ports and Trunk mode ports, and PDUs follow port

configuration for tagging. The port priority parameter decides which port will be shut down in case ELD detects a

loop. These PDUs are transmitted from every ELD-enabled interface at the configured hello interval rate. When

these PDUs are received back at the originating VCS, ELD detects a loop and shuts down redundant interfaces based on the port priority of the redundant links. If the port priority values are equal, the decision is based on the port number.
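The shutdown decision can be expressed as a comparison on (port priority, port number). The guide does not say which direction wins, so the Python sketch below assumes that the numerically larger priority value marks the less preferred port and that the larger port number loses a tie; check the Network OS Administrator's Guide before relying on either assumption. The interface names are hypothetical.

```python
from typing import NamedTuple


class EldPort(NamedTuple):
    name: str         # hypothetical interface name
    priority: int     # configured ELD port priority
    port_number: int


def port_to_shut_down(looped_ports):
    """Pick the redundant port ELD would disable when a loop is detected.

    Assumption: a numerically larger priority value means lower preference,
    and on a tie the larger port number is disabled.
    """
    if not looped_ports:
        raise ValueError("no ports in the loop")
    return max(looped_ports, key=lambda p: (p.priority, p.port_number))


ports = [EldPort("te 1/0/5", 128, 5), EldPort("te 1/0/9", 128, 9)]
print(port_to_shut_down(ports).name)  # te 1/0/9
```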

ELD uses the system MAC address of the primary RBridge of the VCS, with the multicast (bit 8) and locally administered (bit 7) bits set, as the destination MAC address. For example, if the base MAC address of the primary RBridge of the VCS is 00e0.5200.1800, the destination MAC address is 03e0.5200.1800.
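The destination MAC derivation is a bitwise OR on the first octet. Reading "bit 8" and "bit 7" as the two least significant bits of that octet (the I/G multicast bit, 0x01, and the U/L local bit, 0x02) reproduces the 00e0.5200.1800 to 03e0.5200.1800 example, so the Python sketch below assumes that reading.

```python
import string


def eld_destination_mac(base_mac: str) -> str:
    """Derive the ELD destination MAC from the primary RBridge's base MAC.

    Sets the multicast (0x01) and locally administered (0x02) bits of the
    first octet and returns the dotted xxxx.xxxx.xxxx notation.
    """
    digits = "".join(ch for ch in base_mac if ch not in ".:-").lower()
    if len(digits) != 12 or any(ch not in string.hexdigits for ch in digits):
        raise ValueError(f"not a 48-bit MAC address: {base_mac!r}")
    first_octet = int(digits[:2], 16) | 0x03
    out = f"{first_octet:02x}{digits[2:]}"
    return ".".join(out[i:i + 4] for i in range(0, 12, 4))


print(eld_destination_mac("00e0.5200.1800"))  # 03e0.5200.1800
```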

Please refer to the Network OS Administrator's Guide, v2.1.1 for more information on ELD.

Connecting the Fabric to Uplinks

Upstream Switches with MCT

To connect the VCS fabric to upstream switches running MCT, set up a normal LACP-based LAG on the MLX side (or an equivalent core router); the vLAG then forms automatically on the VDX side.

Upstream Switches Without MCT

When the upstream devices are not running MCT or cannot support MCT, there are two ways that fabric uplinks can

be connected.

Option 1: Form an EtherChannel between the Brocade VDX and upstream devices, as shown in Figure 13. In this

case, standard IEEE 802.3ad-based port trunks are formed between the fabric and upstream network devices. This

provides link-level redundancy, but no node-level redundancy. If a core Brocade VDX fails, all of the flows through the

MLX/RX (Brocade BigIron RX Series) that are connected to that device have no path to reach the fabric.

Option 2: Run STP on upstream devices with Active/Standby connections, as shown in Figure 14. Since VCS tunnels

all the STP Bridge Protocol Data Units (BPDUs) through, this topology is ideal to provide both node level and link level

redundancy. An important caveat to keep in mind here is that if there are more than two upstream switches

connected to the fabric, Rapid STP (RSTP) falls back to STP behavior, since RSTP requires point-to-point connectivity, which is not provided in the fabric.


Figure 13: Connecting Fabric Uplinks to Upstream Devices, Option 1

Figure 14: Connecting Fabric Uplinks to Upstream Devices, Option 2


Connecting the Servers to Fabric

Rack Mount Servers

This section describes how rack mount servers, either physical or virtual, can be connected to the Brocade

VCS Fabric. When connecting to the fabric in DCB mode, it is important that flow control is enabled on the Converged Network Adapters (CNAs).

Blade Servers

When connecting blade servers to a VCS Fabric, there are two connectivity options: embedded switches and pass-through modules. Please consult Brocade support to validate the supported solution. Not all qualified solutions had been published as of the release of this document.

Manual Port Profiles

Automatic Migration of Port Profiles (AMPP) is a Brocade innovation that allows seamless movement of virtual

machines within the Ethernet fabric. In today's networks, network parameters such as security or VLAN settings

need to be physically provisioned before a machine is moved from one physical server to another within the same

Layer 2 domain. Using the distributed intelligence feature, a VCS fabric is aware of the port profiles associated with

the MAC address of a Virtual Machine (VM) and automatically applies those policies to the new access port that the

VM has moved to. Since AMPP is MAC address based, it is hypervisor-agnostic and works with all third-party

hypervisors.
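Because AMPP keys policy on the VM's MAC address, moving a VM amounts to learning its MAC on a different access port and applying the associated profile there. The Python toy model below shows only that mapping; it is not Brocade's implementation, and the profile and port names are hypothetical (the MAC is taken from the `show vnetwork vms` output later in this guide).

```python
class AmppSketch:
    """Toy model of MAC-based port-profile mobility (not Brocade's implementation)."""

    def __init__(self):
        self.profile_of_mac = {}  # VM MAC -> port-profile name
        self.port_of_mac = {}     # VM MAC -> access port where it was last learned

    def associate(self, mac, profile):
        self.profile_of_mac[mac.lower()] = profile

    def mac_learned(self, mac, port):
        # Learning the MAC on a new port moves its policy along with it.
        self.port_of_mac[mac.lower()] = port

    def profiles_on(self, port):
        """Profiles currently applied to a port, derived from the MACs seen there."""
        return {
            self.profile_of_mac[mac]
            for mac, where in self.port_of_mac.items()
            if where == port and mac in self.profile_of_mac
        }


fabric = AmppSketch()
fabric.associate("00:50:56:81:2e:d5", "web-vlan10")
fabric.mac_learned("00:50:56:81:2e:d5", "1/0/1")
fabric.mac_learned("00:50:56:81:2e:d5", "3/0/4")  # VM migrated to another RBridge
print(fabric.profiles_on("1/0/1"), fabric.profiles_on("3/0/4"))
```

Since nothing in the model depends on the hypervisor, only on the MAC address, it also illustrates why the text calls AMPP hypervisor-agnostic.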

In Network OS v2.1.0, AMPP is supported over vLAG.

Dynamic Port Profile with VM Aware Network Automation

Server virtualization is used extensively in current data center environments, with VMware the dominant player in data center virtualization. The server hosts (VMware ESX hosts) are connected directly to the physical switches through switch ports (or edge ports in the case of VCS). Many of these hosts implement an internal switch, called a vSwitch, to provide connectivity to the VMs, or a distributed switch (dvSwitch) that spans multiple hosts. A new layer, the Virtual Access Layer (VAL), virtualizes connectivity between the physical switch and the virtual machines via the vSwitch or dvSwitch. VAL is not visible to the physical switch;

thus, these VMs and other virtual assets remain hidden to the network administrator. The Brocade VM-aware

network automation provides the ability to discover these virtual assets. With VM-aware network automation, a

Brocade VDX 67XX switch can dynamically discover virtual assets and offer unprecedented visibility of dynamically

created virtual assets.

VM-aware network automation also allows network administrators to view these virtual assets using the Network OS

CLI. VM-aware network automation is supported on all the Brocade VDX platforms and can be configured in both VCS

and standalone mode. This feature is currently supported on VMware vCenter 4.0 and 4.1.

Data Center Network and vCenter

In current data center environments, vCenter is primarily used to manage VMware ESX hosts. VMs are instantiated using the vCenter user interface. In addition to creating these VMs, the server administrator also associates them with VSs (Virtual Switches), PGs (Port Groups), and DVPGs (Distributed Virtual Port Groups). VMs and their network properties are primarily configured and managed on vCenter/vSphere. Many VM properties, such as MAC addresses, are automatically configured by vCenter, while some properties, such as VLAN and bandwidth, are assigned by vCenter through VAL.

Network OS Virtual Asset Discovery Process

The Brocade switch that is connected to hosts/VMs needs to be aware of network policies in order to allow or

disallow traffic. In Network OS v2.1.0, the discovery process starts upon boot-up, when the switch is preconfigured

with the relevant vCenters that exist in its environment. The discovery process entails making appropriate queries to

the vCenter. The queries are in the form of Simple Object Access Protocol (SOAP) requests/responses sent to the

vCenter.
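For illustration, the first SOAP request a vSphere Web Services client typically sends is `RetrieveServiceContent` against the `ServiceInstance` managed object, POSTed to the vCenter's SDK endpoint (commonly `https://<vcenter>/sdk`). The Python sketch below only builds that envelope with the standard library; which queries Network OS actually issues, and in what order, is not documented in this guide.

```python
import xml.etree.ElementTree as ET

SOAP_ENV = "http://schemas.xmlsoap.org/soap/envelope/"
VIM25 = "urn:vim25"


def retrieve_service_content_request() -> bytes:
    """Build the SOAP envelope for vSphere's RetrieveServiceContent call."""
    ET.register_namespace("soapenv", SOAP_ENV)
    ET.register_namespace("vim25", VIM25)
    envelope = ET.Element(f"{{{SOAP_ENV}}}Envelope")
    body = ET.SubElement(envelope, f"{{{SOAP_ENV}}}Body")
    call = ET.SubElement(body, f"{{{VIM25}}}RetrieveServiceContent")
    # _this names the managed object the method is invoked on.
    this = ET.SubElement(call, f"{{{VIM25}}}_this", {"type": "ServiceInstance"})
    this.text = "ServiceInstance"
    return ET.tostring(envelope, encoding="utf-8", xml_declaration=True)


print(retrieve_service_content_request().decode("utf-8"))
```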


Figure 15: Virtual Asset Discovery Process

VM-Aware Network Automation MAC Address Scaling

In Network OS v2.1.1, VM-aware network automation is enhanced to detect up to 8000 VM MAC addresses and to support VM mobility at this scale within a VCS fabric.

Authentication

In Network OS v2.1.1, before any discovery transactions are initiated, the switch first authenticates with the vCenter. To authenticate with a specific vCenter, the following vCenter properties are configured on the switch: URL, login, and password. A new CLI is added to support this configuration.

Port Profile Management

After discovery, the switch/Network OS enters the port profile creation phase, where it creates port profiles on the

switch based on discovered DVPGs and port groups. The operation creates port profiles in the running-config of the

switch. Additionally, Network OS also automatically creates the interface/VLANs that are configured in the port

profiles, which also end up in the running-config.

The AMPP mechanism built into Brocade switches may provide a faster way to correlate the MAC address of a VM to

the port it is associated with. Network OS continues to allow this mechanism to learn the MAC address and associate

the port profile with the port. The vCenter automation process simply enhances this mechanism by providing

automatic creation of port profiles or preassociating the MAC addresses before the VM is powered up.

Usage Restriction and Limits

Network OS creates port profiles automatically based on discovered DVPGs or port groups. The port profiles created in this way contain the prefix auto- in their names. The user is urged not to modify the policies within these port profiles; if the user modifies them, the discovery mechanism may at some point overwrite those changes. The user is also urged never to create port profiles whose names begin with auto- via the CLI or from a file replay.

The maximum number of VLANs that can be created on the switch is 3583, and port profiles are limited to 256 per switch. A vCenter configuration that exceeds either limit causes Network OS to generate an error.
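The naming and limit rules above can be captured in a small validation sketch. Forming each profile name as `auto-` plus the port group name is an assumption for illustration (the text only says the names contain the prefix), as are the function names; the limits are the ones stated above. User-created profiles also count toward the 256-profile limit, which this sketch ignores.

```python
AUTO_PREFIX = "auto-"
MAX_VLANS = 3583
MAX_PORT_PROFILES = 256


def plan_auto_profiles(port_groups, existing_vlans=frozenset()):
    """Map discovered port groups (name -> VLAN ID) to auto- port profiles.

    Raises ValueError when the result would exceed the per-switch limits.
    """
    profiles = {AUTO_PREFIX + name: vlan for name, vlan in port_groups.items()}
    if len(profiles) > MAX_PORT_PROFILES:
        raise ValueError(f"{len(profiles)} port profiles exceeds {MAX_PORT_PROFILES}")
    # VLANs referenced by the profiles are created on the switch as well.
    vlans = set(existing_vlans) | set(profiles.values())
    if len(vlans) > MAX_VLANS:
        raise ValueError(f"{len(vlans)} VLANs exceeds {MAX_VLANS}")
    return profiles


def is_reserved_profile_name(name):
    """User-created profiles should never use the auto- prefix."""
    return name.startswith(AUTO_PREFIX)


print(plan_auto_profiles({"DVPG-Web": 10, "PG-DB": 20}))
```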

Third-Party Software

To support the integration of vCenter and Network OS, the following third-party software is added in Network OS

v2.1.0:

Open Source PHP Module

Net-cdp-0.09

Libnet 1.1.4

User Experience

sw0# show vnetwork hosts

Host                            VMNic Name     Associated MAC     (d)vSwitch    Switch-Iface
==============================  =============  =================  ============  ============
esx4-248803.englab.brocade.com  vmnic2         5c:f3:fc:0c:d9:f4  dvSwitch-1    0/1
esx4-248802.englab.brocade.com  vmnic2         5c:f3:fc:0c:d9:f6  dvSwitch-2    0/2

sw0# show vnetwork vms

VirtualMachine   Associated MAC     IP Addr      Host
===============  =================  ===========  =================================
RH5-078001       00:50:56:81:2e:d5  192.168.5.2  esx4-248803.englab.brocade.com
RH5-078002       00:50:56:81:08:3c               esx4-248803.englab.brocade.com

Please refer to the Network OS Administrator's Guide, v2.1.1 for more information on VM-Aware Network Automation.


BUILDING A 2-SWITCH TOR VCS FABRIC

Traditionally, at the access layer in the data center, servers have been configured with standby links to Top of Rack

(ToR) switches running STP or other link level protocols to provide resiliency. As server virtualization increases the

density of servers in a rack, the demand for active/active server connectivity, link-level redundancy, and multi-chassis EtherChannel for node-level redundancy is increasing. A 2-switch ToR VCS Fabric satisfies each of these

conditions with minimal configuration and setup.

Design Considerations

Topology

The variables that affect a 2-switch ToR design are oversubscription, the number of ports required for server/storage

connections, and bandwidth (1/10 GbE). Latency is not a consideration here, as only a single switch is traversed

(under normal operating conditions), as opposed to multiple switches.

Oversubscription in a 2-switch topology is a simple ratio of downlink to uplink bandwidth. In a 2×60-port switch ToR with 4 ISL links, 112 usable ports remain. Of these 112 ports, if 32 are used for uplinks and 80 for downlinks, oversubscription is 80:32, or 2.5:1. However, if the servers are dual-homed in an active/active topology, those 80 downlink ports connect only 40 servers.
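A quick check of the port arithmetic, as a Python sketch. It assumes that each of the 4 ISLs consumes one port on each switch (2 × 60 − 2 × 4 = 112) and that all ports run at 10 GbE; the 80 downlinks at 2.5:1 come from the Implementation description for this design, which implies 32 uplinks.

```python
from fractions import Fraction


def usable_ports(ports_per_switch: int, switches: int, isl_links: int) -> int:
    """Ports left for uplinks and downlinks; each ISL uses one port on each end."""
    return ports_per_switch * switches - 2 * isl_links


def oversubscription(downlink_ports: int, uplink_ports: int) -> Fraction:
    """Downlink-to-uplink bandwidth ratio, assuming all ports run at the same speed."""
    return Fraction(downlink_ports, uplink_ports)


usable = usable_ports(60, 2, 4)
ratio = oversubscription(80, 32)
# usable ports, ratio, uplinks left after 80 downlinks, dual-homed servers
print(usable, float(ratio), usable - 80, 80 // 2)  # 112 2.5 32 40
```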

Licensing

VCS will operate in a 2-switch topology without the need to purchase additional software licenses. However, if FCoE

support is needed, a separate FCoE license must be purchased.

For VCS configurations that exceed two switches, VCS licenses are required to form a VCS fabric. In addition, if FCoE is required, an FCoE license, in addition to a VCS license, is required.

Implementation

Figure 16 shows a sample topology using a 2×60-port Brocade VDX 6720 configuration. This topology provides 2.5:1 oversubscription and 80 server ports to provide active/active connectivity for a rack of 40 servers and/or storage elements. Table 2 shows the Bill of Materials (BOM).

Hardware | Quantity | Comments
BR-VDX 6720-60 (-F or R) | | Network OS v2.1.0
10G-SFPP-TWX-0108 | | For ISLs
10G-SFPP-SR | 10 | For uplinks on the VDX side
BR-VDX6720-24FCOE-01 | N/A |
iSCSI Initiators | As needed |
iSCSI Targets | As needed |
FCoE Initiators | As needed |
FCoE Targets | As needed |

Table 2: Equipment Required for a 2-Switch ToR Solution


Figure 16: 2-Switch ToR VCS


BUILDING A 2-SWITCH AGGREGATION LAYER USING VCS

At the aggregation layer in the data center, ToR switches have traditionally had standby links to aggregation switches running STP, or other link-level protocols, to provide resiliency. As server virtualization increases the density of servers in a rack, the bandwidth available from the access switches must increase to reduce oversubscription. This in turn drives the demand for active/active uplink connectivity from ToR switches. This section discusses the best practices for setting up a two-node VCS Fabric for aggregation, which expands the Layer 2 domain without the need for spanning tree.

Design Considerations

Topology

The variables that affect a 2-switch design are oversubscription and latency. Oversubscription is directly dependent

on the number of uplinks, downlinks, and ISLs. Depending upon the application, latency can be a deciding factor in

the topology design.

Oversubscription in a 2-switch topology is a simple ratio of downlink to uplink bandwidth. In a 2×60-port switch fabric with 4 ISL links, 112 usable ports remain. Any of these 112 ports can be used as either uplinks or downlinks to give the desired oversubscription ratio.

Licensing

VCS operates in a 2-switch topology without the need to purchase additional software licenses. However, if FCoE

support is needed, a separate FCoE license must be purchased.

For VCS configurations that exceed 2 switches, VCS licenses are required to form a VCS fabric. In addition, if FCoE is

required, an FCoE license in addition to a VCS license is required.

Implementation

Figure 17 shows a sample topology using a 2×60-port Brocade VDX 6720 configuration. This topology provides 2.5:1 oversubscription and 80 downlink ports to provide active/active connectivity to 20 Brocade FCX 648 switches with 4×10G uplinks each. Each of these Brocade FCX switches has 48×1G downlink ports, providing 960 (48×20) 1G server ports. Table 3 shows the BOM.
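The aggregation numbers compound across two tiers. The Python sketch below recomputes them from the figures in the paragraph above; the 32 VDX uplinks follow from 80 downlinks at 2.5:1, and the end-to-end ratio assumes worst-case traffic in which every server sends northbound at line rate, so the FCX ratio (48 × 1G over 4 × 10G) multiplies the VDX ratio. These derived values are illustrations, not figures stated in the design.

```python
from fractions import Fraction


def aggregation_plan(fcx_count, uplinks_per_fcx, server_ports_per_fcx,
                     vdx_oversubscription, fcx_uplink_gbps=10, server_gbps=1):
    """Port counts and oversubscription for a ToR-plus-VCS-aggregation design."""
    vdx_downlinks = fcx_count * uplinks_per_fcx
    server_ports = fcx_count * server_ports_per_fcx
    vdx_uplinks = Fraction(vdx_downlinks) / vdx_oversubscription
    fcx_oversubscription = Fraction(server_ports_per_fcx * server_gbps,
                                    uplinks_per_fcx * fcx_uplink_gbps)
    return {
        "vdx_downlinks": vdx_downlinks,
        "server_ports": server_ports,
        "vdx_uplinks": vdx_uplinks,
        "fcx_oversubscription": fcx_oversubscription,
        # Worst case: both tiers congest at once, so the ratios multiply.
        "end_to_end": fcx_oversubscription * vdx_oversubscription,
    }


plan = aggregation_plan(20, 4, 48, Fraction(5, 2))
print(plan["vdx_downlinks"], plan["server_ports"], plan["vdx_uplinks"], plan["end_to_end"])
# 80 960 32 3
```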

Hardware | Quantity | Comments
BR-VDX 6720-60 (-F or R) | | Network OS v2.0.1
10G-SFPP-TWX-0108 | | For ISLs
10G-SFPP-TWX-0108 | 80 | For Brocade FCX uplinks
10G-SFPP-SR | 10 | For uplinks on the VDX side
FCX-648 E or I | 20 | For 1 GbE server connectivity

Table 3: Equipment List for a 2-Switch Aggregation Solution


Figure 17: 2-Switch VCS Fabric in Aggregation

Building the Fabric

Please refer to the VCS Nuts and Bolts (Within the Fabric) section. The same best practices apply to a 2-switch aggregation solution.


24-SWITCH VCS REFERENCE ARCHITECTURE

Figure 18: 24-Switch Brocade VCS Reference Architecture with 10 GbE Server Access


APPENDIX A: VCS USE CASES

Brocade VCS fabric technology can be used in multiple places in the network. Traditionally, data centers are built

using three-tier architectures with access layers providing server connectivity, aggregation layers aggregating the

access layer devices, and the data center core layer acting as an interface between the campus core and the data

center. This appendix describes the value that VCS fabric technology delivers in various tiers of the data center.

VCS Fabric Technology in the Access Layer

Figure 19 shows a typical deployment of VCS Fabric technology in the access layer. The most common deployment

model in this layer is the 2-switch ToR, as discussed previously. In the access layer, VCS fabric technology can be

inserted in existing architectures, as it fully interoperates with existing LAN protocols, services, and architecture. In

addition, VCS Fabric technology delivers additional value by allowing active-active server connectivity to the network

without additional management overhead.

At the access layer, VCS Fabric technology allows 1 GbE and 10 GbE server connectivity and flexibility of

oversubscription ratios, and it is completely auto-forming, with zero configuration. Servers see the VCS ToR as a

single switch and can fully utilize the provisioned network capacity, thereby doubling the bandwidth of network

access.

Figure 19: VCS Fabric Technology in the Access Layer


VCS Fabric Technology in the Collapsed Access/Aggregation Layer

Traditionally, Layer 2 (L2) networks have been broadcast-heavy, which forced the data center designers to build

smaller L2 domains to limit both broadcast domains and failure domains. However, in order to seamlessly move

virtual machines in the data center, it is absolutely essential that the VMs are moved within the same Layer 2

domain. In traditional architectures, therefore, VM mobility is severely limited to these small L2 domains.

Brocade has taken a leadership position in the market by introducing Transparent Interconnection of Lots of Links

(TRILL)-based VCS Fabric technology, which eliminates all these issues in the data center. Figure 20 shows how a

scaled-out self-aggregating data center edge layer can be built using VCS Fabric technology. This architecture allows

customers to build resilient and efficient networks by eliminating STP, as well as drastically reducing network

management overhead by allowing the network operator to manage the whole network as a single logical switch.

Figure 20: VCS Fabric Technology in the Access/Aggregation Layer


VCS Fabric Technology in a Virtualized Environment

Today, when a VM moves within a data center, the server administrator needs to open a service request with the

network admin to provision the machine policy on the new network node where the machine is moved. This policy

may include, but is not limited to, VLANs, Quality of Service (QoS), and security for the machine. VCS Fabric

technology eliminates this provisioning step and allows the server admin to seamlessly move VMs within a data

center by automatically distributing and binding policies in the network at a per-VM level, using the Automatic

Migration of Port Profiles (AMPP) feature. AMPP enforces VM-level policies in a consistent fashion across the fabric

and is completely hypervisor-agnostic. Figure 21 shows the behavior of AMPP in a 10-node VCS fabric.

Figure 21: VCS Fabric Technology in a Virtualized Environment


VCS Fabric technology in Converged Network Environments

VCS Fabric technology has been designed and built from the ground up to support shared storage access to thousands of

applications or workloads. VCS Fabric technology allows for lossless Ethernet using DCB and TRILL, which allows

VCS Fabric technology to provide multi-hop, multi-path, highly reliable and resilient FCoE and Internet Small

Computer Systems Interface (iSCSI) storage connectivity. Figure 22 shows a sample configuration with iSCSI and

FCoE storage connected to the fabric.

Figure 22: VCS Fabric Technology in a Converged Network


Brocade VDX 6710 Deployment Scenarios

In this deployment scenario, the Brocade VDX 6710s (VCS fabric-enabled) extend benefits natively to 1 GbE servers.

Figure 23: Brocade VDX 6710 Deployment Scenario


GLOSSARY

BPDU

Bridge Protocol Data Unit

ELD

Edge Loop Detection protocol. Used on the edge ports of a VCS fabric to detect and remove loops.

MAC

Media Access Control. In Ethernet, it refers to the 48-bit hardware address.

PDU

Protocol Data Unit

RBridge

Routing Bridge. A switch that runs the TRILL (Transparent Interconnection of Lots of Links) protocol.

RSTP

Rapid Spanning Tree Protocol. An IEEE standard for building a loop-free LAN (Local Area Network), which allows ports to rapidly transition to the forwarding state.

VCS

Virtual Cluster Switching. Brocade VCS Fabric technology is a method of grouping a fabric of switches together to form a single virtual switch that can provide a transparent bridging function.

vLAG

Virtual Link Aggregation Group. You can create a LAG using multiple switches in a VCS fabric. vLAG provides better high availability and faster protection switching than a normal LAG.

VLAN

Virtual LAN. Subdividing a LAN into logical VLANs allows separation of traffic from different sources within the LAN.

xSTP

An abbreviation used in this document to indicate all types of Spanning Tree Protocol, for instance, STP, RSTP, MSTP (Multiple STP), PVST+ (Per VLAN Spanning Tree Plus), and RPVST+ (Rapid PVST+).

RELATED DOCUMENTS

For more information about Brocade VCS Fabric technology, please see the Brocade VCS Fabric Technical

Architecture:

http://www.brocade.com/downloads/documents/technical_briefs/vcs-technical-architecture-tb.pdf

For the Brocade Network Operating System Admin Guide and Network OS Command Reference:

http://www.brocade.com/downloads/documents/product_manuals/B_VDX/NOS_AdminGuide_v211.pdf

http://www.brocade.com/downloads/documents/product_manuals/B_VDX/NOS_CommandRef_v211.pdf

The Network OS Release notes can be found at http://my.brocade.com

For more information about the Brocade VDX Series of switches, please see the product Data sheets:

Brocade VDX 6710 Data Center Switch:

http://www.brocade.com/products/all/switches/product-details/vdx-6710-dc-switches/index.page

Brocade VDX 6720 Data Center Switch:

http://www.brocade.com/products/all/switches/product-details/vdx-6720-dc-switches/index.page

Brocade VDX 6730 Data Center Switch:

http://www.brocade.com/products/all/switches/product-details/vdx-6730-dc-switches/index.page


ABOUT BROCADE

As information becomes increasingly mobile and distributed across the enterprise, today's organizations are transitioning to highly virtualized infrastructure, which often increases overall IT complexity. To simplify this process, organizations must have reliable, flexible network solutions that utilize IT resources whenever and wherever needed, enabling the full advantages of virtualization and cloud computing.

As a global provider of comprehensive networking solutions, Brocade has more than 15 years of experience in

delivering Ethernet, storage, and converged networking technologies that are used in the world's most mission-critical environments. Based on the Brocade One strategy, this unique approach reduces complexity and disruption

by removing network layers, simplifying management, and protecting existing technology investments. As a result,

organizations can utilize cloud-optimized networks to achieve their goals of non-stop operations in highly virtualized

infrastructures where information and applications are available anywhere.

For more information, visit www.brocade.com.

© 2012 Brocade Communications Systems, Inc. All Rights Reserved. 04/12 GA-DG-434-00

Brocade, Brocade Assurance, the B-wing symbol, DCX, Fabric OS, MLX, SAN Health, VCS, and VDX are registered trademarks, and AnyIO,

Brocade One, CloudPlex, Effortless Networking, ICX, NET Health, OpenScript, and The Effortless Network are trademarks of Brocade

Communications Systems, Inc., in the United States and/or in other countries. Other brands, products, or service names mentioned may

be trademarks of their respective owners.

Notice: This document is for informational purposes only and does not set forth any warranty, expressed or implied, concerning any equipment, equipment feature, or service offered or to be offered by Brocade. Brocade reserves the right to make changes to this document at any time, without notice, and assumes no responsibility for its use. This informational document describes features that may not be currently available. Contact a Brocade sales office for information on feature and product availability. Export of technical data contained in this document may require an export license from the United States government.

