Neural Network

ENSEMBLE BASED CANCER DETECTION USING FAST ADAPTIVE NEURAL NETWORK CLASSIFIER

P. Ganesh Ram (1), E. Manova (2)

(1, 2) Pre-final year students, Department of ECE

Hindusthan Institute of Technology, Coimbatore-32

Email: mano.ebinezar@gmail.com

Mobile: 8122289050

ABSTRACT:

An artificial neural network ensemble is a learning paradigm in which several artificial neural networks are jointly used to solve a problem. In this paper, an automatic pathological diagnosis procedure named Neural Ensemble-based Detection (NED) is proposed, which utilizes an artificial neural network ensemble to identify lung cancer cells. The neural algorithm we used is FANNC, a fast adaptive neural classifier that performs one-pass incremental learning with fast speed and high accuracy. The fundamental operation of the artificial neural network is local two-dimensional convolution rather than full connection with weighted multiplication. The weighting coefficients of the convolution kernels are formed by the artificial neural network through back-propagation training. The artificial convolution neural network acts as a final classifier to determine whether the suspected image block contains a lung nodule.

INTRODUCTION:

Lung cancer is one of the most common and deadly diseases in the world. Detection of lung cancer in its early stage is the key to its cure. In general, measures for early-stage lung cancer diagnosis mainly include those utilizing X-ray chest films, CT, MRI, isotope, bronchoscopy, etc., among which a very important measure is the so-called pathological diagnosis that analyzes the specimens of needle biopsies obtained from the bodies of the subjects to be diagnosed. At present, the specimens of needle biopsies are usually analyzed by experienced pathologists. Since senior pathologists are rare, reliable pathological diagnosis is not always available. During the last decades, along with the rapid development of image processing and pattern recognition techniques, computer-aided lung cancer diagnosis has attracted more and more attention.

CANCER DIAGNOSIS SYSTEM:

NEURAL

X-ray chest films are valuable in

lung cancer diagnosis. However, there are

ENSEMBLE

BASED

cases where subsequent examination should be

performed to increase the reliability of

CANCER DETECTIONS:

In this paper, based on the

diagnoses. For example, if a patient has

recognition power of artificial neural network,

suffered pneumonia before, some tumors may

an automatic pathological diagnosis procedure

appear in abnormal lung areas so that they are

named Neural Ensemble based Detection

difficult to be distinguished from cicatrices.

(NED) is proposed. NED utilizes an artificial

Hence in this paper, NED is used to

neural network ensemble to identify cancer

accomplish this task through needle biopsy. In

cells in the images of the specimens of needle

needle biopsy, it involves removing some cells

biopsies obtained from the bodies of the

either surgically or in a less invasive

subjects to be diagnosed. The ensemble used is

procedure from a suspicious area within the

built

ensemble

body and examining them under a microscope

architecture and a novel prediction combining

to determine a diagnosis. A lung nodule is

method, which achieves not only a high rate of

relatively round lesion, or area of abnormal

overall identification but also a low rate of

tissue located within the lung. Lung nodules

false negative identification, i.e. a low rate of

are most often detected on a chest x-ray and do

judging cancer cells to be normal ones. In

not typically cause pain or other symptoms.

practical implementation, neural ensemble

Since in order to differentiate it from other

based lung cancer detection involves various

diseases like tuberculosis, pneumonia, etc, .we

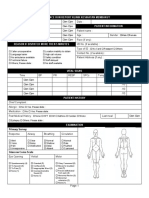

sessions:

used

on

specific

two-level

Cancer

diagnosis system

Image

compression

using PCA concepts

First level ensemble

Second level ensemble

needle

biopsy.

The

hardware

implementation of initial stage of cancer

detection in needle biopsy mainly includes a

light microscope, a digital camera, and an

image capturer. The digital camera is mounted

on the light microscope whose power of

amplification is much greater as 400 times

which picks up the video signals of the

haematoxylin-eosine (HE) stained specimens

of needle biopsies obtained from the bodies of

The hope is that this new basis will filter out

the subjects to be diagnosed. Those signals are

the noise and reveal hidden structure.

captured by the image capturer and then

transformed to 24 bit RGB color images that

are used in cancer cell identification. The

image captured is further compressed using

PCA concepts below to convert the image into

the form of data which can be further

processed to check whether the given specimen

has the cancer cells or not.

CODE FOR PCA IN MATLAB

SIMULATION:

clc all

data=imread(C:\Documents

settings\admin\My

and

Documents\My

Pictures\bj)

IMAGE COMPRESSION USING

function [signals,PC,V] = pca1(data)

PCA METHOD:

% PCA1: Perform PCA using covariance.

Principal component analysis

% data - MxN matrix of input data

(PCA) is a vector space transform often used

% signals - MxN matrix of projected data

to reduce multidimensional data sets to lower

% PC - each column is a PC

dimensions for analysis. PCA is the simplest

% V - Mx1 matrix of variances

and most useful of the true eigenvector-based

[M,N] = size(data);

multivariate analyses, because its operation is

% subtract off the mean for each dimension

to reveal the internal structure of data in an

mn = mean(data,2);

unbiased way. PCA will combine all collinear

data = data - repmat(mn,1,N);

data into a small number of independent

% calculate the covariance matrix

(orthogonal) axes, which can then safely be

covariance = 1 / (N-1) * data * data;

used for further analyses.PCA supplies the user

% find the eigenvectors and eigenvalues

with

[PC, V] = eig(covariance);

2D

picture

which

results

in

dimensionally-reduced image of the data is the

% extract diagonal of matrix as vector

ordination diagram of the 1st two principal

V = diag(V);

axes of the data. The goal of principal

% sort the variances in decreasing order

component analysis is to compute the most

[junk, rindices] = sort(-1*V);

meaningful basis to re-express a noisy data set.

V = V(rindices);

PC = PC(:,rindices);

% project the original data set

signals = PC' * data;

function [signals,PC,V] = pca2(data)
% PCA2: Perform PCA using SVD.
% data - MxN matrix of input data
% (M dimensions, N trials)
% signals - MxN matrix of projected data
% PC - each column is a PC
% V - Mx1 matrix of variances
[M,N] = size(data);
% subtract off the mean for each dimension
mn = mean(data,2);
data = data - repmat(mn,1,N);
% construct the matrix Y (note the transpose)
Y = data' / sqrt(N-1);
% SVD does it all
[u,S,PC] = svd(Y);
% calculate the variances
S = diag(S);
V = S .* S;
% project the original data
signals = PC' * data;

FIRST LEVEL ENSEMBLE:

The lung cancer cell identification relies on a single neural algorithm, FANNC. It is a fast adaptive neural network classifier that performs one-pass incremental learning with fast speed and high accuracy. Among all cancer cells, about 32% are adenocarcinoma, about 38% are squamous cell carcinoma, about 22% are small cell carcinoma, and about 8% are large cell carcinoma. These distributions approximate the real-world proportions of those lung cancer types. In this first kind of ensemble, in each experiment we train five FANNC networks with the above cancerous cell types and then combine their predictions through full voting. Full voting makes a very strong claim: a prediction is judged as the final output only when all the individual networks hold that prediction. Full voting can only be used in tasks where only two output classes exist, one of which is more important than the other. To use full voting, we modify the training data so that the number of output classes is reduced from five to two. This is done by merging the cancer cell classes (adenocarcinoma, squamous cell carcinoma, small cell carcinoma, and large cell carcinoma) into one big class, i.e. cancer cell. As a result, 75% of the examples in the training sets belong to the class cancer cell and the remaining 25% belong to the class normal cell.

FANNC ARCHITECTURE:

The FANNC network is composed of four layers of units. The activation function of the hidden units is the sigmoid function, and Gaussian weights connect the input units with the second-layer units. FANNC uses the second-layer units to classify inputs internally and the third-layer units to classify outputs internally, and sets up associations between those two layers to implement supervised learning. Except for the connections between the first- and second-layer units, all connections are bidirectional. The function of the feedback connections is just to transmit feedback signals to implement competition and resonance.

[Figure: ARCHITECTURE OF FANNC]

LEARNING AND TRAINING COURSE:

The initial network is composed of only input and output layers, whose unit numbers are respectively set to the number of components of the input and output vectors. In particular, the number of hidden units is zero. This is different from some other neural algorithms that configure hidden units before the start of the learning course. As instances are fed, FANNC adaptively appends hidden units so that the knowledge coded in the instances can be learned. The unit-appending process terminates after all the instances are fed. Thus, the topology of FANNC is always adaptively changing during the learning course.

When the first instance is fed, FANNC appends two hidden units to the network, one in the second layer and one in the third layer. Those two units are connected with each other; the feed-forward and feedback connections are all set to 1. The third-layer unit is connected with all the output units; the feed-forward connections are respectively set to the output components of the current instance and the feedback connections are all set to 1. The second-layer unit is connected with all the input units through Gaussian weights. The responsive centers are respectively set to the input components of the current instance, and the responsive characteristic widths are set to a default value.

FANNC introduces the notion of the attracting basin. An attracting basin is a region created by a training vector. If a test vector falls into the region, it will be captured by the training vector. In FANNC each second-layer unit defines an attracting basin by the responsive centers and responsive characteristic widths of the Gaussian weights connecting with it. Since the dynamical property of a Gaussian weight is entirely determined by its responsive center and responsive characteristic width, learned knowledge can be encoded in a weight only through modifying those two parameters.
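The handling of the first instance described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the authors' implementation: the class name `FanncNet`, the method `append_units`, the field names, and the value of `DEFAULT_WIDTH` are all assumptions, since the paper does not state the default width.

```python
from dataclasses import dataclass, field

# Hypothetical default responsive characteristic width (not given in the paper).
DEFAULT_WIDTH = 0.25


@dataclass
class FanncNet:
    """Bookkeeping for a FANNC-like network that starts with no hidden units."""
    n_inputs: int
    n_outputs: int
    centers: list = field(default_factory=list)      # one list per second-layer unit
    widths: list = field(default_factory=list)       # one list per second-layer unit
    links: list = field(default_factory=list)        # (second, third, forward, feedback)
    out_weights: list = field(default_factory=list)  # one list per third-layer unit

    def append_units(self, inputs, targets):
        """Append one second-layer and one third-layer unit for an instance."""
        if len(inputs) != self.n_inputs or len(targets) != self.n_outputs:
            raise ValueError("instance does not match the network dimensions")
        # Responsive centers are set to the input components of the instance.
        self.centers.append(list(inputs))
        # Responsive characteristic widths are set to a default value.
        self.widths.append([DEFAULT_WIDTH] * self.n_inputs)
        # Feed-forward weights to the output units are the output components.
        self.out_weights.append(list(targets))
        # The two new units are linked; forward and feedback weights are 1.
        second = len(self.centers) - 1
        third = len(self.out_weights) - 1
        self.links.append((second, third, 1.0, 1.0))
        return second, third


net = FanncNet(n_inputs=3, n_outputs=2)
net.append_units([0.1, 0.5, 0.9], [1.0, 0.0])
```

After the call, the network holds exactly one attracting basin, centred on the first instance.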

During the training process, if the input pattern is located in an attracting basin, which is determined by the responsive centers $\theta_{ij}$ and the responsive characteristic widths $\alpha_{ij}$, the basin is slightly moved so that its center is closer to the input pattern. Otherwise, the nearest attracting basin is found and modulated according to the relationship between the input pattern and the original basin, so that the basin can cover the input pattern.

METHOD FOR CONSTRUCTION OF FANNC NETWORK FOR CLASSIFICATION:

STEP 1: FANNC constructs its first attracting basin while the first instance is being fed. It then adds or moves basins according to the later instances. Assume that the instances input to the input units are

$$A_k = (a_1^k, a_2^k, \ldots, a_n^k) \quad (k = 1, 2, \ldots, m)$$

where k is the index of the instance and n is the number of input units. The value input to the second-layer unit j from the first-layer unit i is:

$$bIn_{ij} = e^{-\left(\frac{a_i^k - \theta_{ij}}{\alpha_{ij}}\right)^2}$$

where $\theta_{ij}$ and $\alpha_{ij}$ are the responsive center and the responsive characteristic width of the Gaussian weight connecting unit i with unit j.

STEP 2: The relationship between $bIn_{ij}$ and the other parameters satisfies: when $a_i^k = \theta_{ij}$, $bIn_{ij} = 1$; as $|a_i^k - \theta_{ij}|$ grows, $bIn_{ij}$ decreases.

STEP 3: In this step, specific functions are allocated to the second and third layers. The second-layer unit j computes its activation value according to:

$$b_j = f\left(\sum_{i=1}^{n} bIn_{ij} - \theta_j\right)$$

where $\theta_j$ is the bias of unit j and f is the sigmoid function:

$$f(u) = \frac{1}{1 + e^{-u}}$$

A leakage competition is carried out among all the second-layer units. The outputs of the winners are transferred to the related third-layer units. The activation value of the third-layer unit h is computed according to:

$$c_h = f\left(\sum_{j} b_j v_{jh} - \theta_h\right)$$

where $b_j$ is the activation value of the second-layer unit j, $v_{jh}$ is the weight connecting unit j to unit h, $\theta_h$ is the bias of unit h, and f is again the sigmoid function.

STEP 4: The activation value of the output unit l is computed according to:

$$d_l = \sum_{h} c_h w_{hl}$$

where $d_l$ is the activation value of the output unit l, $c_h$ is the activation value of the third-layer winner h, and $w_{hl}$ is the feed-forward weight connecting unit h to unit l.

ERROR CORRECTIONS:

The error between the real network output and the expected output is computed. Here we use the average squared error as the measure:

$$Err = \frac{1}{q} \sum_{l=1}^{q} \left(d_l - d_l^k\right)^2$$

where q is the number of output units, $d_l$ is the real output of the output unit l, and $d_l^k$ is its expected output.

If the error Err is in the allowable range, the current instance is covered by an existing attracting basin. In addition, the instance should be regarded as a more typical pattern of the center of the basin. If the error Err is beyond the allowable range, the current instance is not covered by any existing attracting basin.

The error between the expected network output and the characteristic third-layer unit output, which is represented by the feed-forward connections between the third-layer and output units, is computed according to:

$$Errc_h = \frac{1}{q} \sum_{l=1}^{q} \left(w_{hl} - d_l^k\right)^2$$

where $Errc_h$ is the characteristic error of the third-layer unit h, q is the number of output units, $w_{hl}$ is the feed-forward weight connecting unit h to output unit l, and $d_l^k$ is the expected output of unit l. The unit u whose characteristic error is the minimum among all the third-layer units is selected. It satisfies:

$$Errc_u = \min_{1 \le h \le p} Errc_h$$

where p is the number of third-layer units.

If the error $Errc_u$ is in the allowable range, the internal output classification represented by unit u is applicable to the current instance, and it is the internal input classification represented by the second-layer units that should be adjusted. Thus, the unit t whose activation value is the maximum among those connecting with unit u is selected. It satisfies:

$$b_t = \max_{j} b_j$$

where $b_j$ is the activation value of a second-layer unit j connected with unit u.
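To make the computations in these steps concrete, here is a minimal Python sketch. It assumes that the Gaussian input has the form exp(-((a - center) / width)^2), that f is the standard logistic sigmoid, and that both errors are mean squared errors over the q output units. All function names are illustrative and do not come from the paper or from any library.

```python
import math


def sigmoid(u):
    """Logistic sigmoid f(u) = 1 / (1 + e^-u)."""
    return 1.0 / (1.0 + math.exp(-u))


def gaussian_input(a_i, center, width):
    """Value passed from input unit i to second-layer unit j (bIn_ij)."""
    return math.exp(-((a_i - center) / width) ** 2)


def second_layer_activation(inputs, centers, widths, bias):
    """Activation b_j: sigmoid of the summed Gaussian inputs minus the bias."""
    total = sum(gaussian_input(a, c, w)
                for a, c, w in zip(inputs, centers, widths))
    return sigmoid(total - bias)


def mean_squared_error(actual, expected):
    """Average squared error over the q output units (Err)."""
    q = len(expected)
    return sum((d - t) ** 2 for d, t in zip(actual, expected)) / q


def best_third_layer_unit(out_weights, expected):
    """Index u and error of the third-layer unit with minimal Errc_h.

    out_weights[h] holds the feed-forward weights w_hl of unit h.
    """
    errors = [mean_squared_error(w, expected) for w in out_weights]
    u = min(range(len(errors)), key=errors.__getitem__)
    return u, errors[u]
```

A Gaussian input equals 1 when the input component sits exactly on the responsive center and shrinks as it moves away, which is what lets a second-layer unit act as an attracting basin.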

The errors are rectified using error-correction methods, which play a very important role in the training of networks.

SECOND LEVEL ENSEMBLE:

The cells that are judged to be cancer cells by the first-level ensemble are passed to the second-level ensemble, which is responsible for reporting the type of the cells. Here we also employ Bagging to generate five FANNC networks, each of which has five output units, and then use plurality voting to combine the individual predictions. If more than one individual prediction ranks first according to the number of votes, the identification is labeled as wrong. Moreover, if the disputed cell is a cancer cell, then both the number of falsely identified images and the number of false negatively identified images increase by one; if the disputed cell is a normal cell, then both the number of falsely identified images and the number of false positively identified images increase by one. "Ave." records the average value over those five experiments, i.e. the results of 5-fold cross-validation. Through this ensemble we classify the given cancer cell into one of the cancerous cell types mentioned above. The overall classification of the ensemble is given in the flowchart below:

FEATURES OF CELL IMAGES
-> FIRST LEVEL ENSEMBLE, i.e., FULL VOTING
-> CANCER CELL?
   no: RESULT: NORMAL CELL -> END
   yes: SECOND LEVEL ENSEMBLE, i.e., PLURALITY VOTING -> CANCER CELL CLASSIFICATION -> END
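The two voting rules can be illustrated with a short Python sketch. It rests on one assumption that the text only implies: in full voting, "normal" is the class that requires unanimity, so a cell is passed on as "cancer" as soon as any network says so, which matches the stated aim of a low false-negative rate. The function names and class labels are hypothetical.

```python
from collections import Counter


def full_vote(predictions, important="cancer", other="normal"):
    """First level: output `other` only if every network predicts it."""
    if not predictions:
        raise ValueError("at least one prediction is required")
    return other if all(p == other for p in predictions) else important


def plurality_vote(predictions):
    """Second level: the most voted class, or None when the top rank is tied."""
    if not predictions:
        raise ValueError("at least one prediction is required")
    ranked = Counter(predictions).most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return None  # disputed cell: the text labels this identification as wrong
    return ranked[0][0]


def two_level_detect(first_level_preds, second_level_preds):
    """Run full voting first and plurality voting only for suspected cancer."""
    if full_vote(first_level_preds) == "normal":
        return "normal"
    return plurality_vote(second_level_preds)
```

For example, `two_level_detect(["normal"] * 4 + ["cancer"], ["adenocarcinoma"] * 3 + ["normal"] * 2)` passes the cell to the second level because one first-level network disagrees, and the second level then reports `"adenocarcinoma"`.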

ALGORITHM FOR TWO LEVEL ENSEMBLE BASED DETECTION:

TO CREATE NETWORK IN MATLAB:

(The output of the PCA is given as the input to the first FANNC network.)

Start the code with the arrayed data as inputs.

The second and third layers are created using the LOGSIG function.

The fourth layer uses HARDLIMS, which is helpful because its output indicates either a cancer cell or a normal cell.

(The above steps are carried out using the NN Toolbox in MATLAB, where the number of inputs to the network is given by the output of the PCA calculations.)

TRAINING NETWORK:

Training is the term widely used in the neural network field for determining an optimized set of weights based on the statistics of the given examples. Here we use supervised training (learning).

In supervised training, both the inputs and the outputs are provided. The network processes the inputs and compares its resulting outputs against the desired outputs. Errors are then propagated back through the system, causing the system to adjust the weights which control the network. This process occurs over and over as the weights are continually tweaked. The set of data which enables the training is called the "training set." During the training of a network the same set of data is processed many times as the connection weights are refined. There are many laws (algorithms) used to implement the adaptive feedback required to adjust the weights during training. The most common technique is backward-error propagation, more commonly known as back-propagation. The following steps explain how to adjust the weights so that the network is trained effectively.

1. Present a training sample to the neural network.
2. Compare the network's output to the desired output from that sample. Calculate the error in each output neuron.
3. For each neuron, calculate what the output should have been, and a scaling factor, i.e. how much lower or higher the output must be adjusted to match the desired output. This is the local error.
4. Adjust the weights of each neuron to lower the local error.
5. Assign "blame" for the local error to neurons at the previous level, giving greater responsibility to neurons connected by stronger weights.
6. Repeat the steps above on the neurons at the previous level to minimise the error coefficients.
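The six steps above can be traced in a small, self-contained Python sketch. It is not the authors' MATLAB toolbox setup: it assumes a tiny 2-2-1 network with sigmoid units, a squared-error measure, and a toy logical-AND training set, and every name in it (`forward`, `train`, `total_error`, the `net` dictionary layout, the learning rate) is chosen here for illustration only.

```python
import math
import random


def sigmoid(u):
    return 1.0 / (1.0 + math.exp(-u))


def forward(net, x):
    """Forward pass: returns the hidden activations and the output."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(ws, x)) + b)
              for ws, b in zip(net["w_h"], net["b_h"])]
    out = sigmoid(sum(w * h for w, h in zip(net["w_o"], hidden)) + net["b_o"])
    return hidden, out


def total_error(net, samples):
    """Mean squared error of the network over all samples."""
    return sum((t - forward(net, x)[1]) ** 2 for x, t in samples) / len(samples)


def train(net, samples, rate=0.5, epochs=2000):
    for _ in range(epochs):
        for x, t in samples:
            hidden, out = forward(net, x)             # steps 1-2: present, compare
            delta_o = (t - out) * out * (1.0 - out)   # step 3: local error at output
            # Step 5: blame hidden units in proportion to their outgoing weight
            # (computed with the weights as they were before this update).
            delta_h = [delta_o * w * h * (1.0 - h)
                       for w, h in zip(net["w_o"], hidden)]
            # Step 4: adjust the output weights to lower the local error.
            net["w_o"] = [w + rate * delta_o * h
                          for w, h in zip(net["w_o"], hidden)]
            net["b_o"] += rate * delta_o
            # Step 6: repeat the adjustment one level back.
            for j, d in enumerate(delta_h):
                net["w_h"][j] = [w + rate * d * xi
                                 for w, xi in zip(net["w_h"][j], x)]
                net["b_h"][j] += rate * d
    return net


random.seed(0)
net = {
    "w_h": [[random.uniform(-1, 1) for _ in range(2)] for _ in range(2)],
    "b_h": [0.0, 0.0],
    "w_o": [random.uniform(-1, 1) for _ in range(2)],
    "b_o": 0.0,
}
samples = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]  # logical AND
error_before = total_error(net, samples)
train(net, samples)
error_after = total_error(net, samples)
```

Comparing `error_before` with `error_after` shows the effect of the repeated weight adjustments on the training set.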

The actual algorithm describing the operation of back-propagation is:

Initialize the weights in the network
Do
    For each example e in the training set
        O = neural-net-output(network, e) ; forward pass
        T = teacher output for e
        Calculate error (T - O) at the output units
        Compute delta_wi for all weights from hidden layer to output layer ; backward pass
        Compute delta_wi for all weights from input layer to hidden layer ; backward pass continued
        Update the weights in the network
Until all examples classified correctly or stopping criterion satisfied
Return the network

(The error-correction formulas mentioned above are used to compute the error coefficients, which are then reduced by the back-propagation technique.)

AT OUTPUT LAYER:

For first-level ensemble based detection, the hardlims function is used so that the output is either cancer cell or normal cell.

For second-level ensemble based detection, logsig is used so that the output is one of the five cell classes mentioned above. These are the steps to be followed for cancer detection. When the needle biopsy images are given as input, the output indicates whether or not the subject is a cancer patient.

CONCLUSION:

Artificial neural networks have already been widely exploited in computer-aided lung cancer diagnosis. The artificial neural network ensemble is a recently developed technology that can significantly improve the performance of a system where a single artificial neural network is used. Since it is very easy to use, it has the potential to benefit not only experts in artificial neural network research but also engineers developing real-world applications.

