UCS, Fiber Channel and SAN Connectivity, PT 1

5/24/13

28th March 2012

Well, the several days' wait for the next blog entry turned into several months. Such is the way with project schedules. This blog entry introduces SAN storage in support of a VM-centric data center. The following entry will concentrate on Cisco UCS-specific SAN FC support features.

This blog's entries will be broken down into a series as follows:

Introduction to SAN Storage Architecture

SAN (FC) as supported by Cisco UCS

Special Backup Cases

Troubleshooting UCS Fiber Channel will be covered in the next blog entry.

Introduction to SAN Storage Architecture

Choosing between NAS (file-based: SMB/Samba/CIFS) and raw-device (SAN) storage is an immediate decision. For a data center hosting multi-tenant virtual machines, raw-device storage is a necessity in order to support the volume and speeds required for timely backups.

Fiber Channel: A SAN Protocol

Well, FC is a lower-level SAN protocol suite, with a little help from the higher-layer SCSI protocol that it carries. Several features of FC make it well suited to the needs of a high-density VM environment with regular device backups:

1. Lossless transmission, by way of buffer credits

2. Device access partitioning efficiency:

a. Fabric-based zoning: limits host access to particular devices

b. VSANs: allow an FC switch to be extended to other virtual fabrics and prevent FC switch port wastage

3. Backup speed of raw devices exceeds that of NAS backups of the files associated with an entire device

4. Supports multipath (load balancing) to storage devices, possibly necessitating 3rd party software
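To make the buffer credit idea a little more concrete, here is a minimal Python sketch of credit-based flow control on a single link. It is a toy model, not an implementation of the FC standard: the class name `CreditedLink`, the method names and the credit count of 2 are all made up for illustration. The point is only that a sender with no credits left waits instead of transmitting, so the receiver never has to drop a frame.

```python
from collections import deque


class CreditedLink:
    """Toy model of buffer-to-buffer credit flow control on one link."""

    def __init__(self, bb_credit: int):
        self.credits = bb_credit      # credits the receiver granted at login
        self.rx_buffers = deque()     # frames sitting in the receiver's buffers

    def send(self, frame: str) -> bool:
        """Transmit only when a credit is available; otherwise hold the frame."""
        if self.credits == 0:
            return False              # sender waits; nothing is dropped
        self.credits -= 1
        self.rx_buffers.append(frame)
        return True

    def receiver_drain(self) -> str:
        """Receiver frees one buffer and hands a credit back to the sender."""
        frame = self.rx_buffers.popleft()
        self.credits += 1
        return frame


def demo_credits() -> list:
    """Send three frames over a two-credit link, drain one, then retry."""
    link = CreditedLink(bb_credit=2)
    results = [link.send("f1"), link.send("f2"), link.send("f3")]
    link.receiver_drain()
    results.append(link.send("f3"))
    return results
```

In `demo_credits()`, the third frame is held back until the receiver drains a buffer, after which the retry goes through.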

SAN Hardware Architecture

There's plenty of documentation on SAN components and architecture, and this blog series won't be redundant in that regard. This series concentrates on how Cisco UCS integrates FC, vHBAs, FCoE, network adapters and MDS towards SAN FC, NPV and NPIV.

The NPV/NPIV Fiber Channel operation of the UCS system in End Host Mode bears similarities to how UCS supports Ethernet VLAN connectivity in End Host Mode. Remember that the UCS Fabric Interconnects (FIs) in End Host Mode behave like a host with many ports: there is no MAC learning on the uplink-facing side, and the FI does MAC learning on the host-facing side only. In a similar fashion, the FI supports FC NPV in End Host Mode, appearing as a device with many HBAs connecting to a few uplinks, and participating in no Domain ID, zoning or FC switch functions. The NPV FI is an (inexact) analogy to a host with many HBAs.

cloudfulness.blogspot.ca/2012/03/ucs-fiber-channel-and-san-connectivity.html

NPIV operation allows the HBAs connected to the FI to log in to an FC switch upstream of the FI NPV. The FI NPV proxies the fabric login (FLOGI) for all the connected HBAs via the FI uplink ports to an FC NPIV switch. A notable difference between the way an End Host Mode FI operates in Ethernet vs Fiber Channel is that, for Ethernet, local intra-VLAN switching is supported for local hosts. Local switching is not supported for Fiber Channel.
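The Ethernet vs Fiber Channel difference described above can be written down as a tiny forwarding rule. This is only a sketch of the behavior as stated in the text, assuming both hosts are in the same VLAN; the function name `next_hop` and the host names are hypothetical.

```python
def next_hop(protocol: str, dst: str, local_hosts: set) -> str:
    """Return where an End Host Mode FI sends traffic, per the text above."""
    if protocol == "ethernet" and dst in local_hosts:
        return "local"    # intra-VLAN traffic between local hosts stays on the FI
    return "uplink"       # everything else, including all FC traffic, goes upstream


# Two servers attached to the same FI (hypothetical names).
hosts = {"server-a", "server-b"}
ethernet_result = next_hop("ethernet", "server-b", hosts)
fc_result = next_hop("fc", "server-b", hosts)
```

Here `ethernet_result` is `"local"` while `fc_result` is `"uplink"`: in this model, FC traffic between two local servers still has to travel to the upstream switch and back.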

Basic Implementation caveats

1. Expense: SAN Fiber Channel (FC) is currently an extensively adopted standard, but it requires an upfront cost:

a. SAN requires a fabric, including switch gear

b. FC-connected hosts require Host Bus Adapters (HBAs) for FC connectivity

2. Backups:

a. Applications such as the Cisco CUCM apps are highly integrated with their Linux OS. This creates exceptions with respect to how backups can be configured and executed in a SAN environment. This will be explained more in the Backup Architecture section.

b. SAN doesn't support file-based backups. This issue has things in common with issue 2 above. Again, more on this in the Backup Architecture section.

SAN (FC) as supported by Cisco UCS

The typical and most flexible implementation of Cisco UCS is in End Host Mode, where UCS appears as a collection of hosts attached to the upstream switch. The other UCS mode, Switching mode, will not be included in this discussion of UCS support for SANs. Therefore all following references are to UCS in End Host Mode.

Implementation:

Network SAN basics:

N-Port Virtualization (NPV): is supported on the Cisco UCS Fabric Interconnect. NPV support allows the UCS to support multiple FLOGIs on behalf of the attached servers' vHBAs. This is very synergistic with the general End Host Mode (non-switch) operation, in that an NPV device does not behave as a SAN switch, but as a login proxy.

N-Port ID Virtualization (NPIV): is the means for a fabric switch to associate multiple logins with a single N-Port, such as via a fabric interconnect NPV. The upstream FC switch (a Cisco MDS switch, for example) would be responsible for accepting the proxy logins from the UCS Fabric Interconnects.

The fabric interconnect NPV and MDS NPIV operations are integral to supporting a large volume of virtual hosts, such as the guests on UCS blade ESXi hosts.
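The division of labor between the NPV device and the NPIV switch can be sketched in a few lines of Python. This is a rough model under stated assumptions, not Cisco's implementation: the class names, the uplink name `fc1/1`, the WWPNs and the way FCIDs are numbered are all invented for illustration. What it tries to show is that the FI holds no domain ID and simply relays logins, while the upstream switch records many logins against a single port.

```python
class NpivSwitch:
    """Upstream switch (an MDS, for example) accepting many logins on one F-Port."""

    def __init__(self, domain_id: int):
        self.domain_id = domain_id
        self.logins = {}              # F-Port name -> {WWPN: FCID}

    def login(self, port: str, wwpn: str) -> str:
        """Assign an FCID to a WWPN logging in through the given F-Port."""
        port_logins = self.logins.setdefault(port, {})
        if wwpn not in port_logins:
            # Illustrative FCID layout only: domain byte, zero area, running counter.
            port_logins[wwpn] = f"{self.domain_id:02x}00{len(port_logins) + 1:02x}"
        return port_logins[wwpn]


class NpvFabricInterconnect:
    """FI in NPV mode: no domain ID of its own, just a login proxy."""

    def __init__(self, upstream: NpivSwitch, uplink: str):
        self.upstream = upstream
        self.uplink = uplink

    def proxy_login(self, vhba_wwpn: str) -> str:
        """Relay a server vHBA's fabric login over the FI uplink."""
        return self.upstream.login(self.uplink, vhba_wwpn)


mds = NpivSwitch(domain_id=0x0A)
fi = NpvFabricInterconnect(mds, uplink="fc1/1")
fcid_1 = fi.proxy_login("20:00:00:25:b5:00:00:01")
fcid_2 = fi.proxy_login("20:00:00:25:b5:00:00:02")
```

After the two proxy logins, `mds.logins["fc1/1"]` holds two WWPNs, each with its own FCID, even though both arrived over a single uplink.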

Card level SAN basics:

Each network adapter (the UCS M81KR "Palo", for example) supports a programmable quantity of HBAs. Up to 128 NICs or HBAs may be configured and assigned to VMs as necessary. HBAs assigned to a server are connected to the FI as (typical) N-Ports, but the FI, operating as an NPV device, provides an F-Proxy port back to the server's HBA, with the relevant F-Port existing upstream on the MDS switch. In this fashion, the operation of the VM's HBA(s), FI and MDS is very much like the standard operation of a physical server's FC attachment to an NPIV switch via an NPV device.

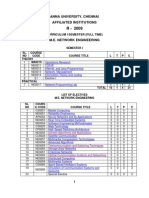

UCS SAN Connectivity Summary

Refer to the diagram.

[http://2.bp.blogspot.com/-r2rQSMVHC1s/T3MJW_ZxgKI/AAAAAAAAACg/I5cy3pv1gE0/s1600/storage_v2.jpg]

As illustrated in my earlier blog, automatic pinning will place the servers mounted on specific blades onto specific fabric uplinks to an FI, unless manual pinning has been employed to point a server to a particular fabric uplink. Generally, with 2 IOMs, server traffic may be directed up either of 2 fabric uplink paths, with one path going to each FI. In this example, HBA uplinks have purposely been split, so that path redundancy exists for the pair of HBAs for each server.
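The redundancy goal above is easy to express as a check over a pinning table. The sketch below assumes a made-up data layout (server name mapped to each vHBA's fabric, "A" or "B"); it does not read anything from UCS itself, and the server and vHBA names are hypothetical.

```python
def has_redundant_paths(pinning: dict) -> bool:
    """True when every server's vHBAs are pinned across at least two fabrics."""
    return all(len(set(vhbas.values())) >= 2 for vhbas in pinning.values())


# Hypothetical pinning tables: server -> {vHBA: fabric}.
split_pinning = {
    "server1": {"vhba0": "A", "vhba1": "B"},
    "server2": {"vhba0": "A", "vhba1": "B"},
}
single_fabric_pinning = {
    "server1": {"vhba0": "A", "vhba1": "A"},
}
```

`has_redundant_paths(split_pinning)` holds, while `has_redundant_paths(single_fabric_pinning)` does not, because both of that server's vHBAs would be lost together with fabric A.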

The server's dual HBAs are each statically pinned so that they each have a unique path via separate fabrics. The pinning is accomplished through the UCS Manager CLI. The IOMs each support a full quad uplink. Multiple paths from each server are supported all the way through to the data store. However, the way that each data store's SCSI ID is seen at each HBA may convince the O/S that each HBA is connected to a different data store, even though they are connected to the same one. The answer for this lies in the vendor's multi-pathing client software for the real or virtual hosts. In the case of EMC, PowerPath client software will enable multi-HBA-equipped hosts to utilize multiple paths to a single data store and allow this illustrated model to provide benefits.
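As a rough picture of what multi-pathing software has to do, the sketch below groups the paths seen through each HBA by a unique LUN identifier, so that one data store is presented once with several paths, and then spreads I/O across those paths. This is not how PowerPath or any other product works internally; the function names, the `lun-1` identifier and the round-robin policy are assumptions chosen for illustration.

```python
from collections import defaultdict


def group_paths(paths: list) -> dict:
    """Group (hba, lun_id) pairs by LUN so one device maps to all of its paths."""
    devices = defaultdict(list)
    for hba, lun_id in paths:
        devices[lun_id].append(hba)
    return dict(devices)


def pick_path(hbas: list, io_number: int) -> str:
    """Round-robin across the available HBAs for successive I/Os."""
    return hbas[io_number % len(hbas)]


# The same data store discovered once through each vHBA (hypothetical names).
seen = [("vhba-a", "lun-1"), ("vhba-b", "lun-1")]
devices = group_paths(seen)
```

Without the grouping step the host would treat the two entries in `seen` as two separate data stores; with it, `devices` contains a single LUN reachable over both vHBAs.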

Special Backup Cases

Many SAN backup solutions require some level of client software to support the interoperation of FC, the underlying server technology (virtual machines) and the applications running on the virtual machines. Let's take a look at a particular instance:

Integration of UCS, VMWARE, Cisco CUCM Applications and Client Backups

Update (6/28/2012): Practically Speaking

If the ESXi image is to be stored on a local disk, there doesn't seem to be much reason to configure more than two vHBAs per UCS blade server. Bear in mind that the VMs are ignorant that HBAs or Fiber Channel are involved at all. The VMs believe that they are employing SAS storage. The virtual environment adds an FC header and a WWNN to the storage packets. The CNA adds one WWPN per vHBA (one per fabric). In this instance, two vHBAs would provide all the redundancy that is needed for Fiber Channel access via either fabric.

Boot From SAN

However, in the case of boot from SAN, we have to take the operational considerations into account.

This topic gets pretty good treatment from: http://virtualeverything.wordpress.com/2011/05/31/simplifying-sanmanagement-for-vmware-boot-from-san-utilizing-cisco-ucs-and-palo.

In a nutshell, two vHBAs per adapter would be members of a storage group including all LUNs in the cluster. This would support vMotion and DRS. The same would apply to all blade adapters in the cluster.

Best practice (or requirement) for VMware boot from SAN includes that each blade should have its own LUN containing the boot image. This operation would involve two additional vHBAs, which would be exclusive members of a storage group that would include the blade's boot LUN as the only LUN member. Therefore, taking into consideration the normal DRS/HA operations plus boot from SAN, a total of four vHBAs could be effectively employed.
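The four-vHBA arithmetic can be laid out as a small planning sketch. Everything here is hypothetical naming (blade names, vHBA suffixes, LUN labels, the dictionary layout); it only mirrors the grouping described above: two data vHBAs per blade in a shared group that holds all cluster LUNs, plus two boot vHBAs per blade in a private group that holds only that blade's boot LUN.

```python
def plan_storage_groups(blades: list, cluster_luns: list) -> dict:
    """Build the shared cluster group plus one private boot group per blade."""
    groups = {"cluster": {"vhbas": [], "luns": list(cluster_luns)}}
    for blade in blades:
        # Two data vHBAs per blade, one per fabric, share all cluster LUNs.
        groups["cluster"]["vhbas"] += [f"{blade}-data-a", f"{blade}-data-b"]
        # Two boot vHBAs per blade see only that blade's own boot LUN.
        groups[f"{blade}-boot"] = {
            "vhbas": [f"{blade}-boot-a", f"{blade}-boot-b"],
            "luns": [f"{blade}-boot-lun"],
        }
    return groups


def vhbas_per_blade(groups: dict, blade: str) -> int:
    """Count every vHBA, across all groups, that belongs to the given blade."""
    return sum(
        1
        for group in groups.values()
        for vhba in group["vhbas"]
        if vhba.startswith(f"{blade}-")
    )


plan = plan_storage_groups(["blade1", "blade2"], ["lun-10", "lun-11"])
```

With this plan, `vhbas_per_blade(plan, "blade1")` comes out at four, and each boot group contains exactly one LUN.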

Posted 28th March 2012 by mj1pate
