31 Oct 2002

Texture Mapping

Why texture map?

How to do it

How to do it right

Spilling the beans

A couple tricks

Difficulties with texture mapping

Projective mapping

Shadow mapping

Environment mapping

Most slides courtesy of

Leonard McMillan and Jovan Popovic

Lecture 15 Slide 1 6.837 Fall 2002

Administrative

Office hours

Durand & Teller by appointment

Ngan Thursday 4-7 in W20-575

Yu Friday 2-5 in NE43-256

Deadline for proposal: Friday Nov 1

Meeting with faculty & staff about proposal

Next week

Web page for appointment

The Quest for Visual Realism

For more info on the computer artwork of Jeremy Birn

see http://www.3drender.com/jbirn/productions.html

Photo-textures

The concept is very simple!

Texture Coordinates

Specify a texture coordinate at each vertex: (s, t) or (u, v)

Canonical coordinates, where u and v are between 0 and 1

Simple modifications to triangle rasterizer

Figure: texture image with corners labeled (0,1), (0,0) and (1,0)

    void EdgeRec::init() {
        …                                   // Note: here we use w = 1/z
        wstart = 1./z1; wend = 1./z2; dw = wend - wstart; wcurr = wstart;
        sstart = s1; send = s2; ds = send - sstart; scurr = sstart;   // step runs from start to end
        tstart = t1; tend = t2; dt = tend - tstart; tcurr = tstart;
    }

    void EdgeRec::update() {
        ycurr += 1; xcurr += dx; wcurr += dw;
        scurr += ds; tcurr += dt;
    }

    static void RenderScanLine( … ) {
        …
        for (e1 = AEL->ToFront(); e1 != NULL; e1 = AEL->Next()) {
            e2 = AEL->NextPolyEdge(e1->poly);
            x1 = [e1->xcurr]; x2 = [e2->xcurr]; dx = x2 - x1;
            w1 = e1->wcurr; w2 = e2->wcurr; dw = (w2 - w1)/dx;
            s1 = e1->scurr; s2 = e2->scurr; ds = (s2 - s1)/dx;
            t1 = e1->tcurr; t2 = e2->tcurr; dt = (t2 - t1)/dx;
            w = w1; s = s1; t = t1;         // start the span at the left edge's values
            for (int x = x1; x < x2; x++) {
                if (w < wbuffer[x]) {
                    wbuffer[x] = w;
                    raster.setPixel(x, texturemap[s,t]);
                }
                w += dw;                    // step to the next pixel
                s += ds; t += dt;
            }
        }
        raster->write(y);
    }

The Result

Let's try that out ...

Texture mapping applet (image)

Wait a minute... that doesn't look right. What's going on here?

Let's try again with a simpler texture...

Texture mapping applet (simple texture)

Notice how the texture seems to bend and warp along the diagonal triangle edges. Let's take a closer look at what is going on.

Looking at One Edge

First, let's consider one edge from a given triangle. This

edge and its projection onto our viewport lie in a single

common plane. For the moment, let's look only at that

plane, which is illustrated below:

Visualizing the Problem

Notice that uniform steps on the image plane

do not correspond to uniform steps along the edge.

Let's assume that the viewport is located 1 unit away from the center of

projection.

Linear Interpolation in Screen Space

Compare linear interpolation in screen space

Linear Interpolation in 3-Space

to interpolation in 3-space

How to Make Them Mesh

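Still need to scan convert in screen space... so we need a mapping from t values to s values.

We know that all points on the 3-space edge project onto our screen-space line. With the viewport 1 unit away from the center of projection, a point (x, z) projects to x/z. Thus we can set up the following equality between the screen-space interpolant (parameter t) and the projection of the 3-space interpolant (parameter s):

$$\frac{x_1}{z_1} + t\left(\frac{x_2}{z_2} - \frac{x_1}{z_1}\right) = \frac{x_1 + s\,(x_2 - x_1)}{z_1 + s\,(z_2 - z_1)}$$

and solve for s in terms of t giving:

$$s = \frac{t\,z_1}{z_2 + t\,(z_1 - z_2)}$$

Unfortunately, at this point in the pipeline (after projection) we no longer have z1 lingering around (Why?). However, we do have w1 = 1/z1 and w2 = 1/z2, so we can write the same result as:

$$s = \frac{t\,w_2}{w_1 + t\,(w_2 - w_1)}$$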

Interpolating Parameters

We can now use this expression for s to interpolate arbitrary parameters, such as texture indices (u, v), over our 3-space triangle. This is accomplished by substituting our solution for s given t into the parameter interpolation:

$$u = u_1 + s\,(u_2 - u_1) = \frac{u_1 w_1 + t\,(u_2 w_2 - u_1 w_1)}{w_1 + t\,(w_2 - w_1)}$$

Therefore, if we premultiply all parameters that we wish to interpolate in 3-space by their corresponding w value and add a new plane equation to interpolate the w values themselves, we can interpolate the numerators and denominator in screen-space.

We then need to perform a divide at each step to map the screen-space interpolants to their corresponding 3-space values.

Once more, this is a simple modification to our existing triangle rasterizer.
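As a rough illustration of the scheme just described, the sketch below interpolates one parameter along a single projected edge: the premultiplied value u*w and w itself are stepped linearly in screen space, and a divide recovers the 3-space value. The function names `perspectiveCorrect` and `screenSpaceLinear` are made up for this example and are not part of the lecture's rasterizer.

```cpp
#include <cassert>
#include <cmath>

// Interpolate a vertex parameter (for example a texture coordinate) along a
// projected edge. t is the screen-space fraction in [0, 1]; z1 and z2 are the
// nonzero depths of the two endpoints.
double perspectiveCorrect(double u1, double z1, double u2, double z2, double t) {
    assert(z1 != 0.0 && z2 != 0.0);
    const double w1 = 1.0 / z1;
    const double w2 = 1.0 / z2;
    const double uw = u1 * w1 + t * (u2 * w2 - u1 * w1);  // numerator, linear in t
    const double w  = w1 + t * (w2 - w1);                 // denominator, linear in t
    return uw / w;                                         // the per-step divide
}

// Naive screen-space interpolation, shown only for comparison.
double screenSpaceLinear(double u1, double u2, double t) {
    return u1 + t * (u2 - u1);
}
```

For an edge running from depth 1 to depth 3, the screen-space midpoint (t = 0.5) corresponds to u = 0.25 with the divide, while the naive version returns 0.5. That gap is the kind of error that shows up as bending in the texture.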

Modified Rasterizer

    void EdgeRec::init() {
        …
        wstart = 1./z1; wend = 1./z2; dw = wend - wstart; wcurr = wstart;
        swstart = s1*wstart; swend = s2*wend; dsw = swend - swstart; swcurr = swstart;   // s premultiplied by w
        twstart = t1*wstart; twend = t2*wend; dtw = twend - twstart; twcurr = twstart;   // t premultiplied by w
    }

    void EdgeRec::update() {
        ycurr += 1; xcurr += dx; wcurr += dw;
        swcurr += dsw; twcurr += dtw;
    }

    static void RenderScanLine( … ) {
        …
        for (e1 = AEL->ToFront(); e1 != NULL; e1 = AEL->Next()) {
            e2 = AEL->NextPolyEdge(e1->poly);
            x1 = [e1->xcurr]; x2 = [e2->xcurr]; dx = x2 - x1;
            w1 = e1->wcurr; w2 = e2->wcurr; dw = (w2 - w1)/dx;
            sw1 = e1->swcurr; sw2 = e2->swcurr; dsw = (sw2 - sw1)/dx;
            tw1 = e1->twcurr; tw2 = e2->twcurr; dtw = (tw2 - tw1)/dx;
            w = w1; sw = sw1; tw = tw1;     // start the span at the left edge's values
            for (int x = x1; x < x2; x++) {
                float denom = 1.0f / w;     // one divide per pixel
                correct_s = sw*denom; correct_t = tw*denom;
                if (w < wbuffer[x]) {
                    wbuffer[x] = w;
                    raster.setPixel(x, texturemap[correct_s, correct_t]);
                }
                w += dw;                    // step to the next pixel
                sw += dsw; tw += dtw;
            }
        }
        raster->write(y);
    }

Demonstration

For obvious reasons this method of interpolation is called perspective-correct interpolation. The fact is, the name could be shortened to simply correct interpolation. You should be aware that not all 3-D graphics APIs implement perspective-correct interpolation.

Applet with correct interpolation

You can reduce the perceived artifacts of non-perspective-correct interpolation by subdividing the texture-mapped triangles into smaller triangles (why does this work?). But fundamentally, the screen-space interpolation of projected parameters is inherently flawed.

Applet with subdivided triangles

Remind you of something?

When we did Gouraud shading, didn't we take the illumination values that we found at each vertex and interpolate them using screen-space interpolation?

Didn't I just say that screen-space interpolation is wrong (I believe "inherently flawed" were my exact words)?

Does that mean that Gouraud shading is wrong?

Gouraud is a big simplification

Gouraud shading is wrong. However, you usually will not notice because the transition in colors is very smooth (and we don't know what the right color should be anyway; all we care about is a pretty picture).

There are some cases where the errors in Gouraud shading become obvious:

When switching between different level-of-detail representations

At "T" joints

Applet showing errors in Gouraud shading

Texture Tiling

Often it is useful to repeat or tile a texture over the surface of a

polygon.

    …
    if (w < wbuffer[x]) {
        wbuffer[x] = w;
        raster.setPixel(x, texturemap[tile(correct_s, max), tile(correct_t, max)]);
    }
    …

    int tile(int val, int size) {
        if (val >= size) {
            do { val -= size; } while (val >= size);
        } else {
            while (val < 0) { val += size; }
        }
        return val;
    }

Tiling applet

Can also use symmetries…
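One way to read "symmetries" is mirrored tiling, where every other copy of the texture is flipped so that neighbouring tiles meet without a seam. The sketch below is an assumption about what is meant, not code from the lecture. `tileRepeat` is a modulo-based equivalent of the `tile` routine above, and `tileMirror` is a hypothetical reflecting variant.

```cpp
#include <cassert>

// Wrap an integer texel index into [0, size) by repetition.
int tileRepeat(int val, int size) {
    assert(size > 0);
    const int m = val % size;          // may be negative when val is negative
    return m < 0 ? m + size : m;
}

// Wrap by reflection: indices run 0..size-1, then size-1..0, and so on.
int tileMirror(int val, int size) {
    assert(size > 0);
    const int m = tileRepeat(val, 2 * size);   // position within one forward+backward period
    return m < size ? m : 2 * size - 1 - m;
}
```

With a 4-texel texture, indices 3, 4, 5 map to 3, 0, 1 under `tileRepeat` and to 3, 3, 2 under `tileMirror`.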

Texture Transparency

There was also a little code snippet to handle texture transparency.

    …
    if (w < wbuffer[x]) {
        Color4 oldColor = raster.getPixel(x);
        Color4 textureColor = texturemap[tile(correct_s, max), tile(correct_t, max)];
        float t = textureColor.transparency();
        Color4 newColor = t*textureColor + (1-t)*oldColor;   // blend texel over the existing pixel
        raster.setPixel(x, newColor);
        wbuffer[x] = w;   // NOT SO SIMPLE... BEYOND THE SCOPE OF THIS LECTURE
    }
    …

Applet showing

texture

transparency

Summary of Label Textures

Increases the apparent complexity of simple geometry

Must specify texture coordinates for each vertex

Projective correction (can't linearly interpolate in screen space)

Specify variations in shading within a primitive

Two aspects of shading: illumination and surface reflectance

Label textures can handle both kinds of shading effects but it gets tedious

Acquiring label textures is surprisingly tough

Difficulties with Label Textures

Tedious to specify texture coordinates for every triangle

Textures are attached to the geometry

Easier to model variations in reflectance than illumination

Can't use just any image as a label texture

The "texture" can't have projective distortions

Reminder: linear interpolation in image space is not equivalent to linear interpolation in 3-space (this is why we need "perspective-correct" texturing). The converse is also true.

Makes it hard to use pictures as textures

Projective Textures

Treat the texture as a light source (like a slide projector)

No need to specify texture coordinates explicitly

A good model for shading variations due to illumination

A fair model for reflectance (can use pictures)

Projective Textures

Texture projected onto the interior of a cube

[Segal et al. 1992]

The Mapping Process

During the illumination process:

For each vertex of triangle (in world or lighting space)

Compute ray from the projective texture's origin to point

Compute homogeneous texture coordinate, [ti, tj, t] (use projective matrix)

During scan conversion (in projected screen space):

Interpolate all three texture coordinates in 3-space (premultiply by w of vertex)

Do normalization at each rendered pixel: i = ti/t, j = tj/t

Access projected texture
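The two per-vertex and per-pixel steps above can be sketched as follows. The 3x4 row-major matrix layout and the names `Mat3x4`, `projectVertex` and `normalizeTexCoord` are assumptions made for this illustration. The lecture only says that a projective matrix is used, and the interpolation between vertices is omitted here.

```cpp
#include <array>
#include <cassert>

using Vec3   = std::array<double, 3>;
using Vec4   = std::array<double, 4>;
using Mat3x4 = std::array<Vec4, 3>;   // rows produce ti, tj and t (assumed layout)

// Per vertex: homogeneous texture coordinate [ti, tj, t] = M * [x, y, z, 1].
Vec3 projectVertex(const Mat3x4& M, const Vec3& p) {
    Vec3 out{};
    for (int r = 0; r < 3; ++r) {
        out[r] = M[r][0] * p[0] + M[r][1] * p[1] + M[r][2] * p[2] + M[r][3];
    }
    return out;
}

// Per pixel: normalize the interpolated homogeneous coordinate, i = ti/t, j = tj/t.
std::array<double, 2> normalizeTexCoord(const Vec3& h) {
    assert(h[2] != 0.0);
    return { h[0] / h[2], h[1] / h[2] };
}
```

For a projector sitting at the origin and looking down +z with its image plane at z = 1, the matrix is simply the first three rows of the identity, and the point (2, 4, 2) lands at texture position (1, 2).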

Projective Texture Examples

Modeling from photograph

Using input photos as textures

[Debevec et al. 1996]

Adding Texture Mapping to Illumination

Texture mapping can be used to alter some or all of the constants in the

illumination equation. We can simply use the texture as the final color for the pixel,

or we can just use it as diffuse color, or we can use the texture to alter the normal,

or... the possibilities are endless!

Phong's Illumination Model

Figure panels: Constant Diffuse Color; Diffuse Texture Color; Texture used as Label; Texture used as Diffuse Color

Texture-Mapping Tricks

Textures and Shading

Combining Textures

Bump Mapping

Solid Textures

Shadow Maps

Textures can also be used to generate shadows.

Projective Textures with Depth

Will be discussed in a later lecture

Environment Maps

If, instead of using the ray from the surface point to the projected texture's center, we use the direction of the reflected ray to index a texture map, we can simulate reflections. This approach is not completely accurate: it assumes that all reflected rays begin from the same point.
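A minimal sketch of the lookup direction, assuming unit-length vectors: the reflected ray is R = I - 2(N·I)N for incident direction I and surface normal N. The latitude-longitude parameterization in `latLongCoords` is only one possible chart, chosen here for illustration, and all function names are hypothetical.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<double, 3>;

double dot(const Vec3& a, const Vec3& b) {
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

// Reflect incident direction I about unit normal N: R = I - 2 (N.I) N.
Vec3 reflect(const Vec3& I, const Vec3& N) {
    const double d = dot(N, I);
    return { I[0] - 2.0 * d * N[0], I[1] - 2.0 * d * N[1], I[2] - 2.0 * d * N[2] };
}

// Map a unit direction to (u, v) in [0, 1]^2 using a latitude-longitude chart
// (an assumed parameterization; -z maps to the center of the image).
std::array<double, 2> latLongCoords(const Vec3& R) {
    assert(std::fabs(dot(R, R) - 1.0) < 1e-9);            // expects a unit vector
    const double pi = std::acos(-1.0);
    const double y = std::max(-1.0, std::min(1.0, R[1])); // guard acos against round-off
    const double u = 0.5 + std::atan2(R[0], -R[2]) / (2.0 * pi);
    const double v = std::acos(y) / pi;
    return { u, v };
}
```

Because only the direction of R is used, two surface points with the same reflected direction fetch the same texel, which is the inaccuracy noted above.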

What's the Best Chart?

Reflection Mapping

Questions?

Image computed using the Dali ray tracer by Henrik Wann Jensen

Environment map by Paul Debevec

Texture Mapping Modes

Label textures

Projective textures

Environment maps

Shadow maps

…

The Best of All Worlds

All these texture mapping modes are great!

The problem is, no one of them does everything well.

Suppose we allowed several textures to be applied to each primitive

during rasterization.

Multipass vs. Multitexture

Multipass (the old way) - Render the image in multiple passes, and "add"

the results.

Multitexture - Make multiple texture accesses within the rasterizing loop

and "blend" results.

Blending approaches:

Texture modulation

Alpha Attenuation

Additive textures

Weird modes

Texture Mapping in Quake

Quake uses light maps in addition to texture maps. Texture maps are used

to add detail to surfaces, and light maps are used to store pre-computed

illumination. The two are multiplied together at run-time, and cached for

efficiency.

              Texture Maps    Light Maps
Data          RGB             Intensity
Instanced     Yes             No
Resolution    High            Low

Light map image by Nick Chirkov
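The multiply step can be sketched as a per-texel modulation of an RGB texture sample by a single light-map intensity. This is only an illustration of texture modulation with 8-bit values; it is not Quake's actual code, and the `RGB` struct and `modulate` function are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>

struct RGB { std::uint8_t r, g, b; };

// Texture modulation: scale each channel of a texel by a light-map intensity
// in [0, 255], where 255 means fully lit. Rounds to the nearest integer.
RGB modulate(RGB texel, int light) {
    assert(light >= 0 && light <= 255);
    auto scale = [light](std::uint8_t c) {
        return static_cast<std::uint8_t>((c * light + 127) / 255);
    };
    return { scale(texel.r), scale(texel.g), scale(texel.b) };
}
```

Since the light map stores one low-resolution intensity while the texture stores high-resolution RGB, the product keeps the texture's detail and takes its overall brightness from the precomputed lighting.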

Bump Mapping

Textures can be used to alter the surface normal of an object. This does not change the actual

shape of the surface -- we are only shading it as if it were a different shape! This technique is

called bump mapping. The texture map is treated as a single-valued height function. The

value of the function is not actually used, just its partial derivatives. The partial derivatives tell

how to alter the true surface normal at each point on the surface to make the object appear

as if it were deformed by the height function.

Since the actual shape of the object does not change, the silhouette edge of the object will

not change. Bump Mapping also assumes that the Illumination model is applied at every pixel

(as in Phong Shading or ray tracing).

Swirly Bump Map

Sphere w/Diffuse Texture & Bump Map

Sphere w/Diffuse Texture

Bump mapping

If Pu and Pv are orthogonal and N is normalized:

$\vec{P} = [x(u,v),\ y(u,v),\ z(u,v)]^T$   Initial point

$\vec{N} = \vec{P}_u \times \vec{P}_v$   Normal

$\vec{P}' = \vec{P} + B(u,v)\,\vec{N}$   Simulated elevated point after bump

$\vec{N}' \approx \vec{N} + \underbrace{B_u \vec{P}_u + B_v \vec{P}_v}_{\vec{D}}$

Variation of normal in u direction: $B_u = \dfrac{B(s-\Delta,\,t) - B(s+\Delta,\,t)}{2\Delta}$

Variation of normal in v direction: $B_v = \dfrac{B(s,\,t-\Delta) - B(s,\,t+\Delta)}{2\Delta}$

Compute bump map partials by numerical differentiation

Figure: surface point P with tangents Pu and Pv, normal N, offset D and perturbed normal N′

Bump mapping

General case

$\vec{P} = [x(u,v),\ y(u,v),\ z(u,v)]^T$   Initial point

$\vec{N} = \vec{P}_u \times \vec{P}_v$   Normal

$\vec{P}' = \vec{P} + \dfrac{B(u,v)\,\vec{N}}{|\vec{N}|}$   Simulated elevated point after bump

$\vec{N}' \approx \vec{N} + \underbrace{\dfrac{B_u\,(\vec{N} \times \vec{P}_v) - B_v\,(\vec{N} \times \vec{P}_u)}{|\vec{N}|}}_{\vec{D}}$

Variation of normal in u direction: $B_u = \dfrac{B(s-\Delta,\,t) - B(s+\Delta,\,t)}{2\Delta}$

Variation of normal in v direction: $B_v = \dfrac{B(s,\,t-\Delta) - B(s,\,t+\Delta)}{2\Delta}$

Compute bump map partials by numerical differentiation

Figure: normal N, tangents Pu and Pv, the vectors −N × Pu and N × Pv, offset D and perturbed normal N′

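Bump mapping derivation

$\vec{P}' = \vec{P} + \dfrac{B(u,v)\,\vec{N}}{|\vec{N}|}$

Assume B is very small...

$\vec{P}'_u = \vec{P}_u + \dfrac{B_u \vec{N}}{|\vec{N}|} + \underbrace{\dfrac{B\,\vec{N}_u}{|\vec{N}|}}_{\approx 0}$

$\vec{P}'_v = \vec{P}_v + \dfrac{B_v \vec{N}}{|\vec{N}|} + \underbrace{\dfrac{B\,\vec{N}_v}{|\vec{N}|}}_{\approx 0}$

$\vec{N}' = \vec{P}'_u \times \vec{P}'_v$

$\vec{N}' \approx \vec{P}_u \times \vec{P}_v + \dfrac{B_u\,(\vec{N} \times \vec{P}_v)}{|\vec{N}|} + \dfrac{B_v\,(\vec{P}_u \times \vec{N})}{|\vec{N}|} + \dfrac{B_u B_v\,(\vec{N} \times \vec{N})}{|\vec{N}|^2}$

But $\vec{P}_u \times \vec{P}_v = \vec{N}$, $\vec{P}_u \times \vec{N} = -\vec{N} \times \vec{P}_u$ and $\vec{N} \times \vec{N} = 0$, so

$\vec{N}' \approx \vec{N} + \dfrac{B_u\,(\vec{N} \times \vec{P}_v)}{|\vec{N}|} - \dfrac{B_v\,(\vec{N} \times \vec{P}_u)}{|\vec{N}|}$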
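The final expression of the derivation translates almost directly into code. The sketch below takes Bu and Bv as the partial derivatives of the height function B, as in the derivation, and leaves their computation to the caller. The names `cross` and `perturbedNormal` are chosen for this example only.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<double, 3>;

Vec3 cross(const Vec3& a, const Vec3& b) {
    return { a[1] * b[2] - a[2] * b[1],
             a[2] * b[0] - a[0] * b[2],
             a[0] * b[1] - a[1] * b[0] };
}

// N' ~= N + (Bu (N x Pv) - Bv (N x Pu)) / |N|, with N = Pu x Pv.
// The result is not normalized; normalize it before shading.
Vec3 perturbedNormal(const Vec3& Pu, const Vec3& Pv, double Bu, double Bv) {
    const Vec3 N = cross(Pu, Pv);
    const double len = std::sqrt(N[0] * N[0] + N[1] * N[1] + N[2] * N[2]);
    assert(len > 0.0);                 // Pu and Pv must not be parallel
    const Vec3 NxPv = cross(N, Pv);
    const Vec3 NxPu = cross(N, Pu);
    Vec3 out{};
    for (int i = 0; i < 3; ++i) {
        out[i] = N[i] + (Bu * NxPv[i] - Bv * NxPu[i]) / len;
    }
    return out;
}
```

On a flat patch with Pu = (1, 0, 0) and Pv = (0, 1, 0) this gives (-Bu, -Bv, 1), the familiar unnormalized normal of the height field z = B(u, v).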

More Bump Map Examples

Bump Map

Cylinder w/Diffuse Texture Map Cylinder w/Texture Map & Bump Map

One More Bump Map Example

Notice that the shadow boundaries remain unchanged.

Displacement Mapping

We use the texture map to actually move the surface point. This is called

displacement mapping. How is this fundamentally different than bump

mapping?

The geometry must be displaced before visibility is determined. Is this

easily done in the graphics pipeline? In a ray-tracer?

Displacement Mapping Example

It is possible to use displacement maps when ray tracing.

Image from Geometry Caching for Ray-Tracing Displacement Maps by Matt Pharr and Pat Hanrahan.

Another Displacement Mapping Example

Image from Ken Musgrave

Three Dimensional or Solid Textures

The textures that we have discussed to this point are two-dimensional functions mapped onto two-dimensional surfaces. Another approach is to consider a texture as a function defined over a three-dimensional volume. Textures of this type are called solid textures.

Solid textures are very effective at representing some types of materials such as marble and wood. Generally, solid textures are defined by procedural functions rather than tabularized or sampled functions as used in 2-D (Any guesses why?)

The approach that we will explore is based on An Image Synthesizer, by Ken Perlin, SIGGRAPH '85.

The vase to the right is from this paper.

Noise and Turbulence

When we say we want to create an "interesting" texture, we usually don't

care exactly what it looks like -- we're only concerned with the overall

appearance. We want to add random variations to our texture, but in a

controlled way. Noise and turbulence are very useful tools for doing just

that.

A noise function is a continuous function that varies throughout space at

a uniform frequency. To create a simple noise function, consider a 3D

lattice, with a random value assigned to each triple of integer

coordinates:
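A minimal sketch of such a lattice noise function follows. Two choices are assumptions, since the slide's illustration is not available here: the lattice values come from a small integer hash (`latticeValue`, with arbitrary constants) instead of a stored table, and values between lattice points are filled in by trilinear interpolation.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Hypothetical hash: a repeatable pseudo-random value in [0, 1] for each
// triple of integer coordinates. The constants are arbitrary.
double latticeValue(int ix, int iy, int iz) {
    std::uint32_t h = (static_cast<std::uint32_t>(ix) * 73856093u)
                    ^ (static_cast<std::uint32_t>(iy) * 19349663u)
                    ^ (static_cast<std::uint32_t>(iz) * 83492791u);
    h ^= h >> 13;
    h *= 0x5bd1e995u;
    h ^= h >> 15;
    return static_cast<double>(h) / 4294967295.0;
}

double mix(double a, double b, double t) { return a + t * (b - a); }

// Continuous noise: trilinear interpolation of the eight surrounding lattice values.
double latticeNoise(double x, double y, double z) {
    assert(std::isfinite(x) && std::isfinite(y) && std::isfinite(z));
    const int ix = static_cast<int>(std::floor(x));
    const int iy = static_cast<int>(std::floor(y));
    const int iz = static_cast<int>(std::floor(z));
    const double fx = x - ix, fy = y - iy, fz = z - iz;   // position inside the cell
    const double x00 = mix(latticeValue(ix, iy,     iz),     latticeValue(ix + 1, iy,     iz),     fx);
    const double x10 = mix(latticeValue(ix, iy + 1, iz),     latticeValue(ix + 1, iy + 1, iz),     fx);
    const double x01 = mix(latticeValue(ix, iy,     iz + 1), latticeValue(ix + 1, iy,     iz + 1), fx);
    const double x11 = mix(latticeValue(ix, iy + 1, iz + 1), latticeValue(ix + 1, iy + 1, iz + 1), fx);
    return mix(mix(x00, x10, fy), mix(x01, x11, fy), fz);
}
```

Because the lattice spacing is fixed at one unit, the result varies at a single characteristic frequency. Scaling the input coordinates changes that frequency.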

Turbulence

Noise is a good start, but it looks pretty ugly all by itself. We can use noise

to make a more interesting function called turbulence. A simple turbulence

function can be computed by summing many different frequencies of noise

functions:

Figure panels: One Frequency; Two Frequencies; Three Frequencies; Four Frequencies

Now we're getting somewhere. But even turbulence is rarely used all

by itself. We can use turbulence to build even more fancy 3D

textures...
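A sketch of the summation, under the assumption that each successive term doubles the frequency and halves the amplitude. The slide's exact formula is not reproduced in this text, so that weighting is a common convention and not necessarily the lecture's. The noise function is passed in as a parameter so the sketch stays independent of any particular noise implementation.

```cpp
#include <cassert>
#include <functional>

using NoiseFn = std::function<double(double, double, double)>;

// Sum `octaves` copies of the noise function, each at twice the frequency
// and half the amplitude of the previous one (assumed weighting).
double turbulence(const NoiseFn& noise, double x, double y, double z, int octaves) {
    assert(octaves >= 1);
    double sum = 0.0;
    double freq = 1.0;
    for (int i = 0; i < octaves; ++i) {
        sum += noise(x * freq, y * freq, z * freq) / freq;
        freq *= 2.0;
    }
    return sum;
}
```

With one octave this is just the noise function itself. Each extra octave adds finer detail at lower contrast, matching the progression from one to four frequencies shown in the figure.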

Marble Example

We can use turbulence to generate beautiful 3D marble textures, such as the marble vase created by Ken Perlin. The idea is simple. We fill space with black and white stripes, using a sine wave function. Then we use turbulence at each point to distort those planes.

By varying the frequency of the sine function, you get a few thick veins, or many thin veins. Varying the amplitude of the turbulence function controls how distorted the veins will be.

Marble = sin(f * (x + A*Turb(x,y,z)))
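The formula can be written as a small function that takes the turbulence value at the point as an input. `marbleGrey`, which remaps the sine's range [-1, 1] to a grey level in [0, 1], is a hypothetical helper added for illustration; the lecture does not say how the value is turned into a color.

```cpp
#include <cassert>
#include <cmath>

// Marble = sin(f * (x + A * Turb(x, y, z))).
// x: position across the stripes, turb: turbulence value at the point,
// f: stripe frequency, A: turbulence amplitude.
double marble(double x, double turb, double f, double A) {
    return std::sin(f * (x + A * turb));
}

// Hypothetical remapping of the stripe value from [-1, 1] to a grey level in [0, 1].
double marbleGrey(double m) {
    assert(m >= -1.0 && m <= 1.0);
    return 0.5 * (m + 1.0);
}
```

With A = 0 the stripes are perfectly regular. Raising A lets the turbulence shift each point's phase further, which is what bends the stripes into veins.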

Lecture 15 Slide 49 6.837 Fall 2002

Anda mungkin juga menyukai

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeDari EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifePenilaian: 4 dari 5 bintang4/5 (5794)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreDari EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You ArePenilaian: 4 dari 5 bintang4/5 (1090)

- Never Split the Difference: Negotiating As If Your Life Depended On ItDari EverandNever Split the Difference: Negotiating As If Your Life Depended On ItPenilaian: 4.5 dari 5 bintang4.5/5 (838)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceDari EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RacePenilaian: 4 dari 5 bintang4/5 (894)

- Grit: The Power of Passion and PerseveranceDari EverandGrit: The Power of Passion and PerseverancePenilaian: 4 dari 5 bintang4/5 (587)

- Shoe Dog: A Memoir by the Creator of NikeDari EverandShoe Dog: A Memoir by the Creator of NikePenilaian: 4.5 dari 5 bintang4.5/5 (537)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureDari EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FuturePenilaian: 4.5 dari 5 bintang4.5/5 (474)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersDari EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersPenilaian: 4.5 dari 5 bintang4.5/5 (344)

- Her Body and Other Parties: StoriesDari EverandHer Body and Other Parties: StoriesPenilaian: 4 dari 5 bintang4/5 (821)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)Dari EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Penilaian: 4.5 dari 5 bintang4.5/5 (119)

- The Emperor of All Maladies: A Biography of CancerDari EverandThe Emperor of All Maladies: A Biography of CancerPenilaian: 4.5 dari 5 bintang4.5/5 (271)

- The Little Book of Hygge: Danish Secrets to Happy LivingDari EverandThe Little Book of Hygge: Danish Secrets to Happy LivingPenilaian: 3.5 dari 5 bintang3.5/5 (399)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyDari EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyPenilaian: 3.5 dari 5 bintang3.5/5 (2219)

- The Yellow House: A Memoir (2019 National Book Award Winner)Dari EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Penilaian: 4 dari 5 bintang4/5 (98)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaDari EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaPenilaian: 4.5 dari 5 bintang4.5/5 (265)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryDari EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryPenilaian: 3.5 dari 5 bintang3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnDari EverandTeam of Rivals: The Political Genius of Abraham LincolnPenilaian: 4.5 dari 5 bintang4.5/5 (234)

- On Fire: The (Burning) Case for a Green New DealDari EverandOn Fire: The (Burning) Case for a Green New DealPenilaian: 4 dari 5 bintang4/5 (73)

- The Unwinding: An Inner History of the New AmericaDari EverandThe Unwinding: An Inner History of the New AmericaPenilaian: 4 dari 5 bintang4/5 (45)

- Radical Candor: Fully Revised and Updated Edition: How To Get What You Want by Saying What You Mean - Kim ScottDokumen5 halamanRadical Candor: Fully Revised and Updated Edition: How To Get What You Want by Saying What You Mean - Kim Scottzafytuwa17% (12)

- Rise of ISIS: A Threat We Can't IgnoreDari EverandRise of ISIS: A Threat We Can't IgnorePenilaian: 3.5 dari 5 bintang3.5/5 (137)

- Decision MatrixDokumen12 halamanDecision Matrixrdos14Belum ada peringkat

- Inner WordDokumen7 halamanInner WordMico SavicBelum ada peringkat

- Transport LayerDokumen12 halamanTransport LayerAbhinav TripathiBelum ada peringkat

- Presention On HDLC (High Level Data Link Control)Dokumen14 halamanPresention On HDLC (High Level Data Link Control)Abhinav TripathiBelum ada peringkat

- Recruitment Process in Infosis TechnologiesDokumen9 halamanRecruitment Process in Infosis TechnologiesAbhinav TripathiBelum ada peringkat

- TCP IP CompleteDokumen167 halamanTCP IP Completeapi-3777069100% (2)

- Synopsis BY ABHINAV TRIPATHIDokumen10 halamanSynopsis BY ABHINAV TRIPATHIAbhinav TripathiBelum ada peringkat

- Transport Layer Protocols: By:-Abhinav Tripathi Cse 6 SEMDokumen12 halamanTransport Layer Protocols: By:-Abhinav Tripathi Cse 6 SEMAbhinav Tripathi100% (1)

- COS1512 202 - 2015 - 1 - BDokumen33 halamanCOS1512 202 - 2015 - 1 - BLina Slabbert-van Der Walt100% (1)

- Grillage Method Applied to the Planning of Ship Docking 150-157 - JAROE - 2016-017 - JangHyunLee - - 최종Dokumen8 halamanGrillage Method Applied to the Planning of Ship Docking 150-157 - JAROE - 2016-017 - JangHyunLee - - 최종tyuBelum ada peringkat

- Mri 7 TeslaDokumen12 halamanMri 7 TeslaJEAN FELLIPE BARROSBelum ada peringkat

- Time Series Data Analysis For Forecasting - A Literature ReviewDokumen5 halamanTime Series Data Analysis For Forecasting - A Literature ReviewIJMERBelum ada peringkat

- BUDDlab Volume2, BUDDcamp 2011: The City of Euphemia, Brescia / ItalyDokumen34 halamanBUDDlab Volume2, BUDDcamp 2011: The City of Euphemia, Brescia / ItalyThe Bartlett Development Planning Unit - UCLBelum ada peringkat

- Recording and reporting in hospitals and nursing collegesDokumen48 halamanRecording and reporting in hospitals and nursing collegesRaja100% (2)

- Mitchell 1986Dokumen34 halamanMitchell 1986Sara Veronica Florentin CuencaBelum ada peringkat

- Procedural Text Unit Plan OverviewDokumen3 halamanProcedural Text Unit Plan Overviewapi-361274406Belum ada peringkat

- Appointment Letter JobDokumen30 halamanAppointment Letter JobsalmanBelum ada peringkat

- Mind MapDokumen1 halamanMind Mapjebzkiah productionBelum ada peringkat

- MySQL Cursor With ExampleDokumen7 halamanMySQL Cursor With ExampleNizar AchmadBelum ada peringkat

- 7 JitDokumen36 halaman7 JitFatima AsadBelum ada peringkat

- Instruction Manual For National Security Threat Map UsersDokumen16 halamanInstruction Manual For National Security Threat Map UsersJan KastorBelum ada peringkat

- Grammar Booster: Lesson 1Dokumen1 halamanGrammar Booster: Lesson 1Diana Carolina Figueroa MendezBelum ada peringkat

- Sample Statement of Purpose.42120706Dokumen8 halamanSample Statement of Purpose.42120706Ata Ullah Mukhlis0% (2)

- Laxmi Thakur (17BIT0384) Anamika Guha (18BIT0483) : Submitted byDokumen6 halamanLaxmi Thakur (17BIT0384) Anamika Guha (18BIT0483) : Submitted byLaxmi ThakurBelum ada peringkat

- The History of American School Libraries: Presented By: Jacob Noodwang, Mary Othic and Noelle NightingaleDokumen21 halamanThe History of American School Libraries: Presented By: Jacob Noodwang, Mary Othic and Noelle Nightingaleapi-166902455Belum ada peringkat

- Perceptron Example (Practice Que)Dokumen26 halamanPerceptron Example (Practice Que)uijnBelum ada peringkat

- حقيبة تعليمية لمادة التحليلات الهندسية والعدديةDokumen28 halamanحقيبة تعليمية لمادة التحليلات الهندسية والعدديةAnjam RasulBelum ada peringkat

- NMIMS MBA Midterm Decision Analysis and Modeling ExamDokumen2 halamanNMIMS MBA Midterm Decision Analysis and Modeling ExamSachi SurbhiBelum ada peringkat

- Co2 d30 Laser MarkerDokumen8 halamanCo2 d30 Laser MarkerIksan MustofaBelum ada peringkat

- The Stolen Bacillus - HG WellsDokumen6 halamanThe Stolen Bacillus - HG Wells1mad.cheshire.cat1Belum ada peringkat

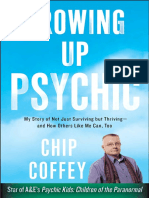

- Growing Up Psychic by Chip Coffey - ExcerptDokumen48 halamanGrowing Up Psychic by Chip Coffey - ExcerptCrown Publishing Group100% (1)

- Pedestrian Safety in Road TrafficDokumen9 halamanPedestrian Safety in Road TrafficMaxamed YusufBelum ada peringkat

- A Comparative Marketing Study of LG ElectronicsDokumen131 halamanA Comparative Marketing Study of LG ElectronicsAshish JhaBelum ada peringkat

- Saline Water Intrusion in Coastal Aquifers: A Case Study From BangladeshDokumen6 halamanSaline Water Intrusion in Coastal Aquifers: A Case Study From BangladeshIOSRJEN : hard copy, certificates, Call for Papers 2013, publishing of journalBelum ada peringkat

- Google Fusion Tables: A Case StudyDokumen4 halamanGoogle Fusion Tables: A Case StudySeanBelum ada peringkat