
International Journal of Organisational Management & Entrepreneurship Development, 6(1), pp. 119-130

DETERMINING SUFFICIENCY OF SAMPLE SIZE IN

MANAGEMENT SURVEY RESEARCH ACTIVITIES

By

HASHIM, Yusuf Alhaji

Email: yusufalhajihashim@gmail.com

Phone: 234803-660-351-5

Department of Business Administration, College of Business and

Management Studies (CBMS), Kaduna Polytechnic, Kaduna-Nigeria.


Abstract

This paper describes the procedure for determining a sufficient sample size for both continuous and categorical data. Tables for determining a sufficient sample size are given, adapted from Krejcie and Morgan (1970) and Bartlett, et al., (2001). The main objective of this study is to explore the procedures for determining a sufficient sample size for management survey research activities. The study recommends that researchers should endeavour to use a sufficient sample size in conducting survey studies to minimize errors in inference back to the population.

Introduction


Management involves the effective and efficient utilization of both human and material resources in achieving organizational goals and objectives. In pursuing these goals and objectives, management encounters problems such as resource constraints and a dynamic operating environment. Such problems are found in all functional areas: finance, human resources, marketing, production, and research and development.

In order to solve managerial problems, managers engage in research. The purpose of research is to collect data that will help management solve problems that have been identified and defined. Data can be collected in a variety of ways, and one of the most common is the survey. Surveys can in turn be conducted in several ways; the most common are the telephone survey, the mailed questionnaire survey, the personal interview, surveying records, and direct observation (Bluman, 2004).

Management research reports require reliable forms of evidence from which to draw robust conclusions. It is usually not cost-effective or practicable to collect and examine all the data that might be available. Instead, it is often necessary to draw a sample of information from the whole population so that the required detailed examination can take place. Samples can be drawn for several reasons: for example, to draw inferences across the entire population, or to draw illustrative examples of certain types of behavior. Bluman (2004) argues that in conducting research, managers use samples to collect data and information about a particular variable from a large population. Using samples saves time and money and, in some cases, enables the researcher to get more detailed information about a particular subject. A simple survey of published manuscripts reveals numerous errors and questionable approaches to sample size selection, and serves as proof that improvement is needed. Many researchers could benefit from a real-life primer on the tools needed to properly conduct research, including, but not limited to, sample size selection.

Landman (1998) points out that when a test sample does not truly represent the population from which it is drawn, the test sample is considered a biased sample. It then becomes impossible to make reliable predictions about the population. Landman's (1998) argument raises the question: how large a sample is required to infer research findings back to the population? This question is at the heart of the problem addressed by this study.

The main aim of the study is to explore the processes of determining a sufficient sample size for management survey research activities. The specific objectives of the study are to:

i) describe the sufficient sample size required given a finite population; and

ii) describe the maximum sampling error for samples of varying sizes.

Sample Design

Bluman (2004) argues that samples cannot be selected in a haphazard way because the information obtained might be biased. To obtain samples that are unbiased (i.e., that give each subject of the population an equal chance of being selected), managers use four basic methods of sampling: random, systematic, stratified, and cluster sampling.

Sample design covers the method of selection, the sample structure and plans for analyzing and

interpreting the results. Sample designs can vary from simple to complex and depend on the type

of information required and the way the sample is selected. The design will impact upon the size

of the sample and the way in which analysis is carried out. In simple terms, the tighter the required precision and the more complex the design, the larger the sample size.


The design may make use of the characteristics of the population, but it does not have to be

proportionally representative (VFM Studies). It may be necessary to draw a larger sample than

would be expected from some parts of the population; for example, to select more from a

minority grouping to ensure that we get sufficient data for analysis on such groups.
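The idea of drawing more than a proportional share from a minority group can be sketched as a disproportionate stratified sample. In the minimal Python example below, the frame of 1,000 units, the two strata and the allocation of 70 and 30 cases are all hypothetical. A real study would also weight the strata when estimating population-wide figures, because units in the oversampled group had a higher chance of selection.

```python
import random

def disproportionate_stratified_sample(frame, stratum_of, sizes, seed=None):
    """Draw sizes[label] units at random, without replacement, from each stratum.

    frame: list of sampling units
    stratum_of: function mapping a unit to its stratum label
    sizes: dict of stratum label -> number of units to draw (hypothetical allocation)
    """
    rng = random.Random(seed)
    strata = {}
    for unit in frame:
        strata.setdefault(stratum_of(unit), []).append(unit)
    sample = []
    for label, wanted in sizes.items():
        members = strata.get(label, [])
        if wanted > len(members):
            raise ValueError(f"stratum {label!r} has only {len(members)} units")
        sample.extend(rng.sample(members, wanted))
    return sample

# Hypothetical frame: 900 units in a majority group, 100 in a minority group.
frame = [("majority", i) for i in range(900)] + [("minority", i) for i in range(100)]

# Proportional allocation of 100 cases would yield only 10 minority cases;
# here the minority group is deliberately oversampled.
sample = disproportionate_stratified_sample(
    frame, stratum_of=lambda unit: unit[0], sizes={"majority": 70, "minority": 30}, seed=1
)
print(sum(1 for unit in sample if unit[0] == "minority"))  # 30
```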

Many designs are built around random selection. This permits justifiable inference from the

sample to the population, at quantified levels of precision. Given due regard to other aspects of

design, random selection guards against bias in a way that selecting by judgment or convenience

cannot. Sampling can provide a valid, defensible methodology but it is important to match the

type of sample needed to the type of analysis required (VFM Studies). The first step in good

sample design is to ensure that the specification of the target population is as clear and complete

as possible to ensure that all elements within the population are represented. The target

population is sampled using a sampling frame. Often the units in the population can be identified

by existing information; for example, pay-rolls, company lists, government registers and so on. A

sampling frame could also be geographical; for example postcodes.
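As an illustration of random selection from a sampling frame, the following sketch draws a simple random sample and a systematic sample (every k-th unit after a random start) from a hypothetical payroll list of 1,000 employee IDs. The ID format and the function names are invented for this example.

```python
import random

def simple_random_sample(frame, n, seed=None):
    """Each unit has the same chance of selection; no unit is chosen twice."""
    return random.Random(seed).sample(frame, n)

def systematic_sample(frame, n, seed=None):
    """Take every k-th unit after a random start, where k = len(frame) // n."""
    k = len(frame) // n
    if k < 1:
        raise ValueError("sample size exceeds the size of the frame")
    start = random.Random(seed).randrange(k)  # random start within the first interval
    return [frame[start + i * k] for i in range(n)]

# Hypothetical sampling frame: a payroll list of 1,000 employee IDs.
payroll = [f"EMP{i:04d}" for i in range(1000)]
print(simple_random_sample(payroll, 5, seed=42))
print(systematic_sample(payroll, 5, seed=42))
```

Because the random start is chosen within the first interval of length k, the last selected index never runs past the end of the frame.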

Determining Sample Size

A common goal of survey research is to collect data representative of a population. The

researcher uses information gathered from the survey to generalize findings from a drawn sample

back to a population, within the limits of random error. However, when critiquing business

education research, Wunsch (1986:31) stated that two of the most consistent flaws included (1)

disregard for sampling error when determining sample size, and (2) disregard for response and

nonresponse bias. Within a quantitative survey design, determining sample size and dealing with nonresponse bias are essential. One of the real advantages of quantitative methods is their ability to use smaller groups of people to make inferences about larger groups that would be prohibitively expensive to study (Holton & Burnett, 1997:71).

Standard textbook authors and researchers offer tested methods that allow studies to take full

advantage of statistical measurements, which in turn give researchers the upper hand in

determining the correct sample size. Sample size is one of the four inter-related features of a

study design that can influence the detection of significant differences, relationships or

interactions (Peers, 1996). Generally, these survey designs try to minimize both alpha error

(finding a difference that does not actually exist in the population) and beta error (failing to find

a difference that actually exists in the population) (Peers, 1996).

Foundations for Sample Size Determination

Primary Variables of Measurement

The researcher must make decisions as to which variables will be incorporated into formula

calculations. For example, if the researcher plans to use a seven-point scale to measure a

continuous variable, e.g., job satisfaction, and also plans to determine if the respondents differ by

certain categorical variables, e.g., gender, educational level, and others, which variable(s) should

be used as the basis for sample size? This is important because the use of gender as the primary

variable will result in a substantially larger sample size than if one used the seven-point scale as

the primary variable of measure. Cochran (1977:81) addressed this issue by stating that "one method of determining sample size is to specify margins of error for the items that are regarded as most vital to the survey. An estimation of the sample size needed is first made separately for each of these important items." When these calculations are completed, researchers will have a range of ns, usually ranging from smaller ns for scaled, continuous variables to larger ns for dichotomous or categorical variables.

The researcher should make sampling decisions based on these data. If the ns for the variables

of interest are relatively close, the researcher can simply use the largest n as the sample size and

be confident that the sample size will provide the desired results. More commonly, there is a

sufficient variation among the ns so that we are reluctant to choose the largest, either from

budgetary considerations or because this will give an over-all standard of precision substantially

higher than originally contemplated. In this event, the desired standard of precision may be

relaxed for certain of the items, in order to permit the use of a smaller value of n. The researcher

may also decide to use this information in deciding whether to keep all of the variables identified in the study. "In some cases, the ns are so discordant that certain of them must be dropped from the inquiry; . . ." (Cochran, 1977:81).
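Cochran's item-by-item approach can be sketched as follows, using the continuous and categorical formulas given later in this paper. The two items, a seven-point job-satisfaction scale and gender, follow the example above. The alpha level (.05, t = 1.96), the margins of error (.03 of the scale and .05), the standard deviation estimate of 7/6 scale points and p = .50 are assumptions drawn from the conventions discussed in the following sections, and rounding up is also an assumption.

```python
import math

T = 1.96  # t-value for alpha = .05 with large samples (assumed)

def n0_continuous(t, s, d):
    """Cochran: n0 = t^2 * s^2 / d^2 for estimating a mean."""
    return (t * s / d) ** 2

def n0_categorical(t, p, d):
    """Cochran: n0 = t^2 * p * q / d^2 for estimating a proportion (q = 1 - p)."""
    return t ** 2 * p * (1 - p) / d ** 2

items = {
    # seven-point scale: s estimated as 7/6, d = 7 points * .03
    "job satisfaction (7-point scale)": n0_continuous(T, 7 / 6, 7 * 0.03),
    # dichotomous item: p = .50, d = .05
    "gender (dichotomous)": n0_categorical(T, 0.5, 0.05),
}
for item, n0 in items.items():
    print(f"{item}: n0 = {math.ceil(n0)}")

# If the ns are relatively close, the largest can simply be used as the sample size.
largest_item = max(items, key=items.get)
print("largest requirement:", largest_item, math.ceil(items[largest_item]))
```

The categorical item needs roughly three times as many returns as the scaled item, which is why the choice of primary variable matters.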

Error Estimation

Cochran's (1977) formula uses two key factors: (1) the risk the researcher is willing to accept in the study, commonly called the margin of error, i.e., the error the researcher is willing to accept; and (2) the alpha level, the level of acceptable risk that the true margin of error exceeds the acceptable margin of error, i.e., the probability that differences revealed by statistical analyses do not really exist, also known as Type I error. Another type of error is Type II error, also known as beta error. Type II error occurs when statistical procedures result in a judgment of no significant differences when such differences do indeed exist.

Alpha Level


The alpha level used in determining sample size in most educational research studies is either .05 or .01 (Ary, Jacobs, & Razavieh, 1996). In Cochran's formula, the alpha level is incorporated by using the t-value for the alpha level selected (e.g., the t-value for an alpha level of .05 is 1.96 for sample sizes above 120). Researchers should ensure they use the correct t-value when their research involves smaller populations; e.g., the t-value for an alpha of .05 and a population of 60 is 2.00. In general, an alpha level of .05 is acceptable for most research. An alpha level of .10 or lower may be used if the researcher is more interested in identifying marginal relationships, differences or other statistical phenomena as a precursor to further studies. An alpha level of .01 may be used in those cases where decisions based on the research are critical and errors may cause substantial financial or personal harm, e.g., major programmatic changes (Bartlett, Kotrlik, and Higgins, 2001).
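For large samples, the critical values quoted above can be recovered from the standard normal distribution with Python's standard library, as in the sketch below. This is the large-sample approximation only: it gives 1.645 for alpha = .10 (often rounded to 1.65), 1.960 for .05 and 2.576 for .01. Small-sample t-values such as the 2.00 mentioned above would instead need a Student t quantile function with the appropriate degrees of freedom, for example `scipy.stats.t.ppf`, which is not used here.

```python
from statistics import NormalDist

def two_tailed_critical_value(alpha):
    """Standard normal value leaving alpha / 2 in each tail (large-sample approximation)."""
    return NormalDist().inv_cdf(1 - alpha / 2)

for alpha in (0.10, 0.05, 0.01):
    print(f"alpha = {alpha:.2f}: critical value = {two_tailed_critical_value(alpha):.3f}")
```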

Acceptable Margin of Error

The general rule relative to acceptable margins of error in educational and social research is as

follows: For categorical data, 5% margin of error is acceptable, and, for continuous data, 3%

margin of error is acceptable (Krejcie & Morgan, 1970). For example, a 3% margin of error

would result in the researcher being confident that the true mean of a seven-point scale is within

.21 (.03 times seven points on the scale) of the mean calculated from the research sample.

For a dichotomous variable, a 5% margin of error would result in the researcher being confident

that the proportion of respondents who were male was within 5% of the proportion calculated

from the research sample. Researchers may increase these values when a higher margin of error

is acceptable or may decrease these values when a higher degree of precision is needed (Bartlett,

et al., 2001).

Variance Estimation


A critical component of sample size formulas is the estimation of variance in the primary

variables of interest in the study. The researcher does not have direct control over variance and

must incorporate variance estimates into research design. Cochran (1977) listed four ways of

estimating population variances for sample size determinations: (1) take the sample in two steps,

and use the results of the first step to determine how many additional responses are needed to

attain an appropriate sample size based on the variance observed in the first step data; (2) use

pilot study results; (3) use data from previous studies of the same or a similar population; or (4)

estimate or guess the structure of the population assisted by some logical mathematical results.

In many educational and social research studies, it is not feasible to use any of the first three

ways and the researcher must estimate variance using the fourth method (Bartlett, et al., 2001). A

researcher typically needs to estimate the variance of scaled and categorical variables. To

estimate the variance of a scaled variable, one must determine the inclusive range of the scale,

and then divide by the number of standard deviations that would include all possible values in

the range, and then square this number.
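The three steps for a scaled variable can be sketched as a small function. The text does not fix how many standard deviations are taken to span the scale; the sketch assumes six (three either side of the mean), a common convention, and exposes it as a parameter.

```python
def estimate_scale_variance(scale_points, n_std_devs=6.0):
    """Estimate the population variance of a scaled item from its inclusive range.

    scale_points: inclusive range of the scale (7 for a seven-point scale)
    n_std_devs: number of standard deviations assumed to span all possible
                values (6 = three either side of the mean; an assumption)
    """
    s = scale_points / n_std_devs  # estimated standard deviation
    return s ** 2                  # estimated variance

s_squared = estimate_scale_variance(7)
print(f"s = {7 / 6:.3f}, s^2 = {s_squared:.3f}")  # s = 1.167, s^2 = 1.361
```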

When estimating the variance of a dichotomous (proportional) variable such as gender, Krejcie

and Morgan (1970) recommended that researchers should use .50 as an estimate of the

population proportion. This proportion will result in the maximization of variance, which will

also produce the maximum sample size. This proportion can be used to estimate variance in the population. For example, multiplying .50 by (1 - .50) gives a population variance estimate of .25 for a dichotomous variable.
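Why .50 is the conservative choice can be checked directly: the variance of a dichotomous variable is p(1 - p), and the short sketch below evaluates it over a grid of proportions to show that it peaks at p = .50, where it equals .25.

```python
def proportion_variance(p):
    """Variance of a dichotomous (0/1) variable with population proportion p."""
    return p * (1 - p)

candidates = [i / 10 for i in range(1, 10)]  # .1, .2, ..., .9
for p in candidates:
    print(f"p = {p:.1f}: p*q = {proportion_variance(p):.2f}")

best = max(candidates, key=proportion_variance)
print("maximum at p =", best)  # maximum at p = 0.5
```

Any other assumed proportion gives a smaller variance and therefore a smaller required sample, which is why .50 guards against undersampling when the true proportion is unknown.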


Basic Sample Size Determination

Continuous Data

Before proceeding with sample size calculations, assuming continuous data, the researcher

should determine if a categorical variable will play a primary role in data analysis. If so, the

categorical sample size formulas should be used. If this is not the case, the sample size formulas

for continuous data described in this section are appropriate. Cochran's (1977) sample size formula for continuous data is:

$$n_0 = \frac{t^2 \, s^2}{d^2}$$

Where t = value for the selected alpha level in each tail (the alpha level of .05 indicates the level of risk the researcher is willing to take that the true margin of error may exceed the acceptable margin of error).

Where s = estimate of the standard deviation in the population.

Where d = acceptable margin of error for the mean being estimated (number of points on the primary scale * acceptable margin of error).

Cochran's (1977) correction formula should be used to calculate the final sample size:

$$n_1 = \frac{n_0}{1 + n_0 / P}$$

Where P = population size.

Where $n_0$ = required return sample size according to Cochran's formula.

Where $n_1$ = required return sample size because the sample exceeds the required % of the population.


These procedures result in the minimum returned sample size.
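A minimal sketch of the two continuous-data steps, assuming a seven-point scale, alpha = .05 (t = 1.96), s = 7/6 and a 3% margin of error (d = .21). It always applies the correction, as described above, and rounds up with `math.ceil`, which is an assumption about rounding. With these inputs the results for populations of 100, 500 and 1,000 (55, 96 and 106) agree with the continuous-data, alpha = .05 column of Table 3.

```python
import math

def cochran_continuous_n0(t, s, d):
    """Cochran (1977): n0 = t^2 * s^2 / d^2."""
    return (t ** 2) * (s ** 2) / (d ** 2)

def finite_population_correction(n0, population):
    """Cochran's correction: n1 = n0 / (1 + n0 / P)."""
    return n0 / (1 + n0 / population)

# Assumed inputs: seven-point scale, alpha = .05, 3% margin of error.
t = 1.96
s = 7 / 6       # inclusive range of 7 divided by 6 standard deviations
d = 7 * 0.03    # 0.21 scale points
n0 = cochran_continuous_n0(t, s, d)
print(f"n0 = {n0:.2f}")  # n0 = 118.57

for population in (100, 500, 1000):
    n1 = math.ceil(finite_population_correction(n0, population))
    print(f"P = {population}: minimum returned sample = {n1}")
```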

However, since many educational and social research studies use data collection methods such as surveys and other voluntary participation methods, response rates are typically well below 100%. Salkind (1997:107) recommended oversampling when he stated that "if you are mailing out surveys or questionnaires, . . . . count on increasing your sample size by 40%-50% to account for lost mail and uncooperative subjects."

However, many researchers criticize the use of over-sampling to ensure that this minimum

sample size is achieved and suggestions on how to secure the minimal sample size are scarce.

Fink (1995) argues that "oversampling can add costs to the survey and is often necessary."

If the researcher decides to use oversampling, Bartlett, et al., (2001) suggest that four methods

may be used to determine the anticipated response rate: (1) take the sample in two steps, and use

the results of the first step to estimate how many additional responses may be expected from the

second step; (2) use pilot study results; (3) use response rates from previous studies of the same

or a similar population; or (4) estimate the response rate.

Estimating response rates is not an exact science. A researcher may be able to consult other

researchers or review the research literature in similar fields to determine the response rates that

have been achieved with similar and, if necessary, dissimilar populations (Bartlett, et al., 2001).
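Once an anticipated response rate has been estimated, the number of questionnaires to distribute can be worked out. The sketch below shows two approaches under stated assumptions: dividing the minimum returned sample by the expected response rate (one common way of applying an estimated rate), and Salkind's flat 40%-50% increase. The 106 required returns and the 65% response rate are hypothetical figures.

```python
import math

def inflate_by_response_rate(min_returned, expected_response_rate):
    """Questionnaires to send so that expected returns reach min_returned."""
    if not 0 < expected_response_rate <= 1:
        raise ValueError("response rate must be in (0, 1]")
    return math.ceil(min_returned / expected_response_rate)

def inflate_by_percentage(min_returned, increase):
    """Flat oversampling, e.g. increase=0.40 or 0.50 as in Salkind's advice."""
    return math.ceil(min_returned * (1 + increase))

min_returned = 106  # hypothetical minimum returned sample size
print(inflate_by_response_rate(min_returned, 0.65))  # 164
print(inflate_by_percentage(min_returned, 0.40))     # 149
print(inflate_by_percentage(min_returned, 0.50))     # 159
```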

Categorical Data


The sample size formulas and procedures used for categorical data are very similar to those for continuous data, but some variations do exist. Cochran's (1977) sample size formula for categorical data is:

$$n_0 = \frac{t^2 \, p \, q}{d^2}$$

Where t = value for the selected alpha level in each tail (the alpha level indicates the level of risk the researcher is willing to take that the true margin of error may exceed the acceptable margin of error).

Where (p)(q) = estimate of variance (maximum possible proportion * (1 - maximum possible proportion) produces the maximum possible sample size).

Where d = acceptable margin of error for the proportion being estimated (the error the researcher is willing to accept).

Cochran's (1977) correction formula should be used to calculate the final sample size. These calculations are as follows:

$$n_1 = \frac{n_0}{1 + n_0 / P}$$

Where P = population size.

Where $n_0$ = required return sample size according to Cochran's formula.

Where $n_1$ = required return sample size because the sample exceeds the required % of the population.

(These procedures result in a minimum returned sample size).
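The categorical version can be sketched the same way, assuming alpha = .05 (t = 1.96), p = .50 and a 5% margin of error, and rounding up with `math.ceil` (a rounding assumption). Under these inputs the corrected sizes for populations of 100, 1,000 and 10,000 (80, 278 and 370) match the corresponding entries in Table 2.

```python
import math

def cochran_categorical_n0(t, p, d):
    """Cochran (1977): n0 = t^2 * p * q / d^2, with q = 1 - p."""
    return (t ** 2) * p * (1 - p) / (d ** 2)

def finite_population_correction(n0, population):
    """Cochran's correction: n1 = n0 / (1 + n0 / P)."""
    return n0 / (1 + n0 / population)

n0 = cochran_categorical_n0(t=1.96, p=0.5, d=0.05)
print(f"n0 = {n0:.2f}")  # n0 = 384.16

for population in (100, 1000, 10000):
    print(population, math.ceil(finite_population_correction(n0, population)))
```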

Methodology


The paper adopts a descriptive research design in explaining the processes and requirements of determining a sufficient sample size for management survey research activities. The choice of this method is informed by the fact that we intend primarily to describe the sufficient sample sizes required for management survey research activities. The emphasis is on describing rather than judging or interpreting. Klopper (1990) argues that researchers use a descriptive research design with the aim of recording, in the form of a written report, that which has been perceived. The paper relies purely on secondary data collected from e-books, online journal articles and textbooks.

Determining Sufficiency of Sample Size

When we sample, we are drawing a subgroup of cases from some population of possible cases. The sample will deviate from the true nature of the population by a certain amount due to chance variations in drawing a few cases from many possible cases. This is called sampling error (Isaac & Michael, 1995). The issue of determining sample size arises from the investigator's wish to ensure that the sample statistic (usually the sample mean) is as close to, or within a specified distance from, the true value (mean) of the entire population under review (Hill, 1998).

A crude method of checking the sufficiency of data is described as split-half analysis of consistency

(Martin & Bateson, 1986). Here the data is divided randomly into two halves which are then analysed

separately. If both sets of data clearly generate the same conclusions, then sufficient data is claimed to

have been collected. If the two conclusions differ, then more data is required. True split half analysis

involves calculating the correlation between the two data sets. If the correlation coefficient is sufficiently

high then the data can be said to be reliable (Hill, 1998). Martin and Bateson (1986) advocate a

correlation coefficient of greater than 0.7.


Alreck and Settle (1995) dispute the logic that sample size is necessarily dependent on population size. They offer the following analogy: suppose you were warming a bowl of soup and wished to know if it was hot enough to serve. You would probably taste a spoonful: a sample size of one spoonful. Now suppose you increased the population of soup, heating a large urn of soup for a large crowd. The population of soup has increased, but you still require a sample of only one spoonful to determine whether the soup is hot enough to serve.

A formula for determining sample size can be derived provided the investigator is prepared to specify

how much error is acceptable and how much confidence is required (Roscoe, 1975; and Alreck & Settle,

1995 in Hill, 1998).

A significance level of 5% has been established as a generally acceptable level of confidence in most behavioral sciences (Hill, 1998). Roscoe (1975) seems to use 10% as a rule-of-thumb acceptable level, while Weisberg and Bowen (1977) cite 3% and 4% as the acceptable level in survey research for forecasting election results. Further, Weisberg and Bowen (1977:41) provide a table of maximum sampling error related to sample size for simple randomly selected samples. The table indicates that if you are prepared to accept an error level of 5% in a survey, then you require a sample size of 400 observations. If 10% is acceptable, then a sample of 100 is sufficient, provided the sampling procedure is simple random.

Table 1: Maximum sampling error for samples of varying sizes

Sample size | Maximum sampling error (%)
--- | ---
2,000 | 2.2
1,500 | 2.6
1,000 | 3.2
750 | 3.6
700 | 3.8
600 | 4.1
500 | 4.5
400 | 5.0
300 | 5.8
200 | 7.2
100 | 10.3

Source: Weisberg and Bowen (1977:41) in Hill (1998).
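For comparison, the usual approximation for the maximum sampling error of a proportion under simple random sampling, E = z * sqrt(p(1 - p)/n) with p = .50 and 95% confidence, can be computed as below. This formula is an assumption, since the source of Table 1 does not state its method. Its values are close to, but slightly below, those in Table 1 (4.9 against 5.0 at n = 400, and 9.8 against 10.3 at n = 100), so the published table evidently rests on somewhat different assumptions.

```python
import math
from statistics import NormalDist

def max_sampling_error(n, alpha=0.05, p=0.5):
    """Approximate maximum sampling error, in percentage points, for a
    proportion estimated from a simple random sample of size n."""
    z = NormalDist().inv_cdf(1 - alpha / 2)
    return 100 * z * math.sqrt(p * (1 - p) / n)

for n in (2000, 1000, 400, 100):
    print(f"n = {n}: about {max_sampling_error(n):.1f}%")
```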

Krejcie and Morgan (1970) have produced a table for determining required sample size given a

finite population. The table is applicable to any population of a defined (finite) size.


Table 2: Required sample size, given a finite population

N | n | N | n | N | n | N | n | N | n
--- | --- | --- | --- | --- | --- | --- | --- | --- | ---
10 | 10 | 100 | 80 | 280 | 162 | 800 | 260 | 2800 | 338
15 | 14 | 110 | 86 | 290 | 165 | 850 | 265 | 3000 | 341
20 | 19 | 120 | 92 | 300 | 169 | 900 | 269 | 3500 | 346
25 | 24 | 130 | 97 | 320 | 175 | 950 | 274 | 4000 | 351
30 | 28 | 140 | 103 | 340 | 181 | 1000 | 278 | 4500 | 354
35 | 32 | 150 | 108 | 360 | 186 | 1100 | 285 | 5000 | 357
40 | 36 | 160 | 113 | 380 | 191 | 1200 | 291 | 6000 | 361
45 | 40 | 170 | 118 | 400 | 196 | 1300 | 297 | 7000 | 364
50 | 44 | 180 | 123 | 420 | 201 | 1400 | 302 | 8000 | 367
55 | 48 | 190 | 127 | 440 | 205 | 1500 | 306 | 9000 | 368
60 | 52 | 200 | 132 | 460 | 210 | 1600 | 310 | 10000 | 370
65 | 56 | 210 | 136 | 480 | 214 | 1700 | 313 | 15000 | 375
70 | 59 | 220 | 140 | 500 | 217 | 1800 | 317 | 20000 | 377
75 | 63 | 230 | 144 | 550 | 226 | 1900 | 320 | 30000 | 379
80 | 66 | 240 | 148 | 600 | 234 | 2000 | 322 | 40000 | 380
85 | 70 | 250 | 152 | 650 | 242 | 2200 | 327 | 50000 | 381
90 | 73 | 260 | 155 | 700 | 248 | 2400 | 331 | 75000 | 382
95 | 76 | 270 | 159 | 750 | 254 | 2600 | 335 | 100000 | 384

Source: Krejcie and Morgan (1970:608) in Hill (1998).

Where N = population size, and n = required sample size.

Krejcie and Morgan (1970) state that, under their calculation, as the population increases the sample size increases at a diminishing rate and eventually remains constant at slightly more than 380 cases.
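Krejcie and Morgan's table is generally attributed to the formula s = X²NP(1 - P) / (d²(N - 1) + X²P(1 - P)), with X² = 3.841 (chi-square for one degree of freedom at the .05 level), P = .50 and d = .05. The sketch below evaluates it and rounds to the nearest whole number, which is an assumption about rounding. It reproduces the entries checked here (for example, 80 for N = 100 and 278 for N = 1,000), although at N = 100,000 it gives 383 where the table prints 384. As N grows, s approaches 3.841 * .25 / .0025, about 384, which is the plateau "slightly more than 380" described above.

```python
def krejcie_morgan(population, chi_square=3.841, p=0.5, d=0.05):
    """Krejcie and Morgan (1970) sample size for a finite population:
    s = X^2 * N * P * (1 - P) / (d^2 * (N - 1) + X^2 * P * (1 - P))."""
    numerator = chi_square * population * p * (1 - p)
    denominator = d ** 2 * (population - 1) + chi_square * p * (1 - p)
    return round(numerator / denominator)

for population in (10, 100, 500, 1000, 10000):
    print(population, krejcie_morgan(population))
```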


According to Gay and Diehl (1992), the number of respondents acceptable for a study generally depends on the type of research involved: descriptive, correlational or experimental. For descriptive research the sample should be 10% of the population, but if the population is small then 20% may be required. In correlational research at least 30 subjects are required to establish a relationship. Hill (1998) suggests 30 subjects per group as the minimum for experimental research. Isaac and Michael (1995) set out conditions under which research with large samples is essential and conditions under which small samples are justifiable. According to Isaac and Michael (1995), large samples are essential:

a) When a large number of uncontrolled variables are interacting unpredictably and it

is desirable to minimize their separate effects; to mix the effects randomly and

hence cancel out imbalances.

b) When the total sample is to be sub-divided into several sub-samples to be

compared with one another.

c) When the parent population consists of a wide range of variables and

characteristics, and there is a risk therefore of missing or misrepresenting those

differences.

d) When differences in the results are expected to be small.

Isaac and Michael (1995) provide conditions where research with small sample sizes is

justifiable as:

a) In cases of small sample economy, that is, when it is not economically feasible to collect a large sample.

b) For computer monitoring. This may take two forms: (i) where the input of huge amounts of data may itself introduce a source of error, namely keypunch mistakes; and (ii) where, as an additional check on the reliability of the computer program, a small sample is selected from the main data and analysed by hand, so that the results from the small sample can be compared with those from the large sample.

c) In cases of exploratory research and pilot studies. Sample sizes of 10 to 30 are sufficient in these cases.

d) When the research involves an in-depth case study, that is, when the study requires methods such as interviews and an enormous amount of qualitative data is forthcoming from each individual respondent.

Bartlett, et al., (2001) developed another table for determining the minimum returned sample size for a given population size for continuous and categorical data.

Table 3: Minimum returned sample size for a given population size, for continuous and categorical data

Continuous data: margin of error = .03. Categorical data: margin of error = .05, p = .50.

Population size | Continuous, alpha = .10 (t = 1.65) | Continuous, alpha = .05 (t = 1.96) | Continuous, alpha = .01 (t = 2.58) | Categorical, alpha = .10 (t = 1.65) | Categorical, alpha = .05 (t = 1.96) | Categorical, alpha = .01 (t = 2.58)
--- | --- | --- | --- | --- | --- | ---
100 | 46 | 55 | 68 | 74 | 80 | 87
200 | 59 | 75 | 102 | 116 | 132 | 154
300 | 65 | 85 | 123 | 143 | 169 | 207
400 | 69 | 92 | 137 | 162 | 196 | 250
500 | 72 | 96 | 147 | 176 | 218 | 286
600 | 73 | 100 | 155 | 187 | 235 | 316
700 | 75 | 102 | 161 | 196 | 249 | 341
800 | 76 | 104 | 166 | 203 | 260 | 363
900 | 76 | 105 | 170 | 209 | 270 | 382
1,000 | 77 | 106 | 173 | 213 | 278 | 399
1,500 | 79 | 110 | 183 | 230 | 306 | 461
2,000 | 83 | 112 | 189 | 239 | 322 | 499
4,000 | 83 | 119 | 198 | 254 | 351 | 570
6,000 | 83 | 119 | 209 | 259 | 362 | 598
8,000 | 83 | 119 | 209 | 262 | 367 | 613
10,000 | 83 | 119 | 209 | 264 | 370 | 623

Source: Bartlett, Kotrlik, and Higgins (2001).

Accordingly, researchers may use this table if the margins of error shown are appropriate for their study; however, the appropriate sample size must be calculated if these error rates are not appropriate (Bartlett, et al., 2001).


Conclusions

Although it is not unusual for researchers to have different opinions as to how sample size should be calculated, the procedures used in the process should always be reported, allowing readers to make their own judgments as to whether they accept the researcher's assumptions and procedures. In general, a researcher should use standard factors in determining the sample size. Using an adequate sample along with high-quality data collection efforts will result in more reliable, valid, and generalizable results; it could also save time and other resources (Bartlett, et al., 2001).

From the foregoing, it appears that determining sample size is not a cut-and-dried procedure. The nature of the methodology used is also a major consideration in selecting sample size (Hill, 1998). Because of the problems of obtaining enough respondents and of generalizing findings, Gay and Diehl (1992) opined that there is a great deal to be gained from the replication of findings. Hill (1998) suggests that replication of findings (i) increases the subject pool and (ii) creates greater validity for generalizability.

Recommendation

Having described the procedures involved in determining a sufficient sample size for management survey research activities, the following recommendations are proffered:


i) Researchers should endeavour to use a sufficient sample size in conducting survey research to minimize errors in inference back to the population;

ii) Sample design should be built around the method of sample selection; and

iii) When estimating the variance of a dichotomous (proportional) variable such as gender, researchers should use .50 as an estimate of the population proportion (Krejcie and Morgan, 1970).

References

Alreck, P.L. and Settle, R.B. (1995). The Survey Research Handbook (2nd ed.). In Hill, R. (1998). What Sample Size is Enough in Internet Survey Research? Interpersonal Computing and Technology: An Electronic Journal for the 21st Century. Available at: http://www.emoderators.com/ipct-j/1998/n3-4/hill.hmtl

Bartlett, J.E., Kotrlik, J.W., and Higgins, C.C. (2001). Organizational Research: Determining Appropriate Sample Size in Survey Research. Information Technology, Learning, and Performance Journal, 19(1), pp. 43-50 (Spring).


Bluman, A.G. (2004). Elementary Statistics: a step by step approach. (5th.). New York:

McGraw-Hill.

Cochran, W.G. (1977). Sampling Techniques (3rd ed.). In Bartlett, J.E., Kotrlik, J.W., and Higgins, C.C. (2001). Organizational Research: Determining Appropriate Sample Size in Survey Research. Information Technology, Learning, and Performance Journal, 19(1), pp. 43-50 (Spring).

Fink, A. (1995). The Survey Handbook. In Bartlett, J.E., Kotrlik, J.W., and Higgins, C.C. (2001). Organizational Research: Determining Appropriate Sample Size in Survey Research. Information Technology, Learning, and Performance Journal, 19(1), pp. 43-50 (Spring).

Gay, L.R. and Diehl, P.L. (1992). Research Methods for Business and Management. In Hill, R. (1998). What Sample Size is Enough in Internet Survey Research? Interpersonal Computing and Technology: An Electronic Journal for the 21st Century. Available at: http://www.emoderators.com/ipct-j/1998/n3-4/hill.hmtl

Hill, R. (1998). What Sample Size is Enough in Internet Survey Research? Interpersonal Computing and Technology: An Electronic Journal for the 21st Century. Available at: http://www.emoderators.com/ipct-j/1998/n3-4/hill.hmtl

Isaac, S. and Micheal, W.B. (1995). Handbook in Research and Evaluation. In Hill, R. (1998). What Sample Size is Enough in Internet Survey Research? Interpersonal Computing and Technology: An Electronic Journal for the 21st Century. Available at: http://www.emoderators.com/ipct-j/1998/n3-4/hill.hmtl

Krejcie, R.V. and Morgan, D.W. (1970). Determining Sample Size for Research Activities. In Hill, R. (1998). What Sample Size is Enough in Internet Survey Research? Interpersonal Computing and Technology: An Electronic Journal for the 21st Century. Available at: http://www.emoderators.com/ipct-j/1998/n3-4/hill.hmtl

Martin, P. and Bateson, P. (1986). Measuring Behaviour: An Introductory Guide. In Hill, R. (1998). What Sample Size is Enough in Internet Survey Research? Interpersonal Computing and Technology: An Electronic Journal for the 21st Century. Available at: http://www.emoderators.com/ipct-j/1998/n3-4/hill.hmtl

Peer, I. (1996). Statistical Analysis for Education and Psychology Researchers. In Bartlett, J.E., Kotrlik, J.W., and Higgins, C.C. (2001). Organizational Research: Determining Appropriate Sample Size in Survey Research. Information Technology, Learning, and Performance Journal, 19(1), pp. 43-50 (Spring).

Roscoe, J.T. (1975). Fundamental Research Statistics for the Behavioural Sciences (2nd ed.). In Hill, R. (1998). What Sample Size is Enough in Internet Survey Research? Interpersonal Computing and Technology: An Electronic Journal for the 21st Century. Available at: http://www.emoderators.com/ipct-j/1998/n3-4/hill.hmtl

Salkind, N.J. (1997). Exploring Research (3rd ed.). In Bartlett, J.E., Kotrlik, J.W., and Higgins, C.C. (2001). Organizational Research: Determining Appropriate Sample Size in Survey Research. Information Technology, Learning, and Performance Journal, 19(1), pp. 43-50 (Spring).

VFM Studies. (n.d.). A Practical Guide to Sampling. Statistical and Technical Team: National Audit Office.

Weisberg, H.F. and Bowen, B.D. (1977). An Introduction to Survey Research and Data Analysis. In Hill, R. (1998). What Sample Size is Enough in Internet Survey Research? Interpersonal Computing and Technology: An Electronic Journal for the 21st Century. Available at: http://www.emoderators.com/ipct-j/1998/n3-4/hill.hmtl

22

Anda mungkin juga menyukai

- Education Research in Belize for Belize by Belizeans: Volume 1Dari EverandEducation Research in Belize for Belize by Belizeans: Volume 1Belum ada peringkat

- BYOD 2013 Literature ReviewDokumen33 halamanBYOD 2013 Literature ReviewLaurens Derks100% (2)

- Lesson 4 Sample Size DeterminationDokumen16 halamanLesson 4 Sample Size Determinationana mejicoBelum ada peringkat

- Ipad Policy PDFDokumen5 halamanIpad Policy PDFARTroom2012Belum ada peringkat

- Management Ofc: Examples From PracticeDokumen10 halamanManagement Ofc: Examples From PracticeKaterina HadzijevskaBelum ada peringkat

- EDU612 Practicum Educational Management & Planning Syllabus 2021Dokumen7 halamanEDU612 Practicum Educational Management & Planning Syllabus 2021Rufina B VerdeBelum ada peringkat

- Cronbach AlphaDokumen5 halamanCronbach AlphaDrRam Singh KambojBelum ada peringkat

- Pps-Probability Proptional SamplingDokumen13 halamanPps-Probability Proptional SamplingdranshulitrivediBelum ada peringkat

- Models of Teacher EducationDokumen18 halamanModels of Teacher EducationBill Bellin0% (2)

- Task AnalysisDokumen6 halamanTask AnalysisfaiziaaaBelum ada peringkat

- Understanding and Evaluating Survey ResearchDokumen4 halamanUnderstanding and Evaluating Survey Researchsina giahkarBelum ada peringkat

- Meghanlee 2 8 ReflectionDokumen2 halamanMeghanlee 2 8 Reflectionapi-253373409Belum ada peringkat

- Operations Management PDFDokumen2 halamanOperations Management PDFWaleed MalikBelum ada peringkat

- Sampling TheoryDokumen44 halamanSampling TheoryMa. Mae ManzoBelum ada peringkat

- GFK K.pusczak-Sample Size in Customer Surveys - PaperDokumen27 halamanGFK K.pusczak-Sample Size in Customer Surveys - PaperVAlentino AUrishBelum ada peringkat

- 20 Years of Performance Measurement in Sustainable Supply Chain Management 2015Dokumen21 halaman20 Years of Performance Measurement in Sustainable Supply Chain Management 2015Dr. Vikas kumar ChoubeyBelum ada peringkat

- Broader Management SkillsDokumen41 halamanBroader Management SkillsMohd ObaidullahBelum ada peringkat

- Business CH 6Dokumen6 halamanBusiness CH 6ladla100% (2)

- 2011 The Influence of Workload On Performance of Teachers in Public Primary Schools in Kombewa Division, Icisumu West District, KenyaDokumen88 halaman2011 The Influence of Workload On Performance of Teachers in Public Primary Schools in Kombewa Division, Icisumu West District, KenyaJeromeDelCastilloBelum ada peringkat

- Audit Action Plan SampleDokumen1 halamanAudit Action Plan SampleDave Noel AytinBelum ada peringkat

- Interactive Board Training For Teachers of The Intellectual DisabledDokumen7 halamanInteractive Board Training For Teachers of The Intellectual DisabledrachaelmcgahaBelum ada peringkat

- Cur 528 Signature Assignment PaperDokumen10 halamanCur 528 Signature Assignment Paperapi-372005043Belum ada peringkat

- Departmental Action Plan AnnotatedDokumen8 halamanDepartmental Action Plan Annotatedapi-412208005Belum ada peringkat

- Types of Probability SamplingDokumen5 halamanTypes of Probability SamplingSylvia NabwireBelum ada peringkat

- Rme101q Exam Prep 2017Dokumen12 halamanRme101q Exam Prep 2017Nkululeko Kunene0% (1)

- Collaborative Inquiry in Teacher Professional DevelopmentDokumen15 halamanCollaborative Inquiry in Teacher Professional DevelopmentDian EkawatiBelum ada peringkat

- Think Pair Share Its Effects On The Academic Performnace of ESL StudentsDokumen8 halamanThink Pair Share Its Effects On The Academic Performnace of ESL StudentsArburim IseniBelum ada peringkat

- Action Research PosterDokumen1 halamanAction Research Posterapi-384464451Belum ada peringkat

- Paired Data, Correlation & RegressionDokumen6 halamanPaired Data, Correlation & Regressionvelkus2013Belum ada peringkat

- ReadabilityDokumen78 halamanReadabilityminibeanBelum ada peringkat

- Sustaining Student Numbers in The Competitive MarketplaceDokumen12 halamanSustaining Student Numbers in The Competitive Marketplaceeverlord123Belum ada peringkat

- Working Capital Management and Profitability Evidence From Manufacturing Sector in Malaysia 2167 0234 1000255 PDFDokumen9 halamanWorking Capital Management and Profitability Evidence From Manufacturing Sector in Malaysia 2167 0234 1000255 PDFPavithra RamanathanBelum ada peringkat

- Sustainability Assessment Questionnaire (SAQ)Dokumen12 halamanSustainability Assessment Questionnaire (SAQ)Indiara Beltrame BrancherBelum ada peringkat

- Individual Develoment Plan - Part IV of RPMS (Highly Proficient)Dokumen1 halamanIndividual Develoment Plan - Part IV of RPMS (Highly Proficient)Lorenz Kurt Santos100% (1)

- APJ5Feb17 4247 1Dokumen21 halamanAPJ5Feb17 4247 1Allan Angelo GarciaBelum ada peringkat

- Cur528 Assessing Student Learning Rachel FreelonDokumen6 halamanCur528 Assessing Student Learning Rachel Freelonapi-291677201Belum ada peringkat

- Cur-528 The Evaluation ProcessDokumen7 halamanCur-528 The Evaluation Processapi-3318994320% (1)

- Writing The Third Chapter "Research Methodology": By: Dr. Seyed Ali FallahchayDokumen28 halamanWriting The Third Chapter "Research Methodology": By: Dr. Seyed Ali FallahchayMr. CopernicusBelum ada peringkat

- Memo PersuasionDokumen2 halamanMemo PersuasionSunil KumarBelum ada peringkat

- Defense Powerpoint Pr2Dokumen19 halamanDefense Powerpoint Pr2John Joel PradoBelum ada peringkat

- Management of Change - What Does A Good' System Look Like?Dokumen3 halamanManagement of Change - What Does A Good' System Look Like?svkrishnaBelum ada peringkat

- 1010 - Analytical Data - Interpretation and TreatmentDokumen16 halaman1010 - Analytical Data - Interpretation and TreatmentAnonymous ulcdZToBelum ada peringkat

- Improving Human PerformanceDokumen6 halamanImproving Human PerformanceRajuBelum ada peringkat

- Methods HardDokumen16 halamanMethods HardChristine Khay Gabriel Bulawit100% (1)

- Guidelines For Writing HRM Case StudiesDokumen3 halamanGuidelines For Writing HRM Case StudiesMurtaza ShabbirBelum ada peringkat

- How To Make SPSS Produce All Tables in APA Format AutomaticallyDokumen32 halamanHow To Make SPSS Produce All Tables in APA Format AutomaticallyjazzloveyBelum ada peringkat

- Sample Size PDFDokumen10 halamanSample Size PDFReza HakimBelum ada peringkat

- Normal Distribution Questions ExDokumen3 halamanNormal Distribution Questions ExGimson D Parambil100% (1)

- Assessment Guidelines Under The New Normal Powerpoint PResentationDokumen50 halamanAssessment Guidelines Under The New Normal Powerpoint PResentationJOHNELL PANGANIBANBelum ada peringkat

- Fire and Arson Investigation Investigación de Incendios ETM-JUne-2020Dokumen15 halamanFire and Arson Investigation Investigación de Incendios ETM-JUne-2020Elio PimentelBelum ada peringkat

- Time ManagementDokumen4 halamanTime ManagementZainab DashtiBelum ada peringkat

- Day (2002) School Reform and Transition in Teacher Professionalism and IdentityDokumen16 halamanDay (2002) School Reform and Transition in Teacher Professionalism and Identitykano73100% (1)

- The Key To Classroom ManagementDokumen11 halamanThe Key To Classroom Managementfwfi0% (1)

- Applying Total Quality Management in AcademicsDokumen1 halamanApplying Total Quality Management in AcademicsSreeBelum ada peringkat

- Quantitative ResearchDokumen13 halamanQuantitative Researchmayankchomal261Belum ada peringkat

- Test BiasDokumen23 halamanTest BiasKeren YeeBelum ada peringkat

- Kaizen Final Ass.Dokumen7 halamanKaizen Final Ass.Shruti PandyaBelum ada peringkat

- Strategic Management Practices and Students' Academic Performance in Public Secondary Schools: A Case of Kathiani Sub-County, Machakos CountyDokumen11 halamanStrategic Management Practices and Students' Academic Performance in Public Secondary Schools: A Case of Kathiani Sub-County, Machakos CountyAnonymous CwJeBCAXpBelum ada peringkat

- Definition of ResearchDokumen2 halamanDefinition of ResearchAyana Gacusana0% (1)

- Preliminaries 3Dokumen12 halamanPreliminaries 3Vanito SwabeBelum ada peringkat

- VentilationDokumen7 halamanVentilationDodowi CspogBelum ada peringkat

- QPS Sample GuidelinesDokumen23 halamanQPS Sample GuidelinesSafiqulatif AbdillahBelum ada peringkat

- I JomedDokumen23 halamanI JomedSafiqulatif AbdillahBelum ada peringkat

- 10 Facts On Patient SafetyDokumen3 halaman10 Facts On Patient SafetySafiqulatif AbdillahBelum ada peringkat

- 4612 Early Warning Score (June 2013)Dokumen8 halaman4612 Early Warning Score (June 2013)Safiqulatif AbdillahBelum ada peringkat

- Guide To Surgical Site MarkingDokumen24 halamanGuide To Surgical Site MarkingSafiqulatif AbdillahBelum ada peringkat

- Policy Critical ResultDokumen6 halamanPolicy Critical ResultSafiqulatif AbdillahBelum ada peringkat

- Critical Result ReportingDokumen9 halamanCritical Result ReportingSafiqulatif AbdillahBelum ada peringkat

- SkriningDokumen6 halamanSkriningSafiqulatif AbdillahBelum ada peringkat

- Preventing Patient ReboundsDokumen11 halamanPreventing Patient ReboundsSafiqulatif AbdillahBelum ada peringkat

- PPI Di HD Versi CDCDokumen63 halamanPPI Di HD Versi CDCSafiqulatif AbdillahBelum ada peringkat

- TOI Breaking Bad NewsDokumen15 halamanTOI Breaking Bad NewsSafiqulatif AbdillahBelum ada peringkat

- Reducing Hospital ReadmissionDokumen6 halamanReducing Hospital ReadmissionSafiqulatif AbdillahBelum ada peringkat

- Governance Board Manual QualityDokumen22 halamanGovernance Board Manual QualitySafiqulatif AbdillahBelum ada peringkat

- Board Committees and TOR QPS CommitteeDokumen44 halamanBoard Committees and TOR QPS CommitteeSafiqulatif AbdillahBelum ada peringkat

- Preventing Patient ReboundsDokumen11 halamanPreventing Patient ReboundsSafiqulatif AbdillahBelum ada peringkat

- Cdc-Guideline For Disinfection and Sterilization in Health-Care Facilities-2008Dokumen158 halamanCdc-Guideline For Disinfection and Sterilization in Health-Care Facilities-2008fuentenatura100% (1)

- Practice Guidelines For Chronic Pain Management .13Dokumen24 halamanPractice Guidelines For Chronic Pain Management .13Safiqulatif AbdillahBelum ada peringkat

- International Hospital Inpatient Quality MeasuresDokumen1 halamanInternational Hospital Inpatient Quality MeasuresSafiqulatif AbdillahBelum ada peringkat

- 2013-2014 Clinical Pathway ApplicationDokumen7 halaman2013-2014 Clinical Pathway ApplicationSafiqulatif AbdillahBelum ada peringkat

- NationalguidelinesDokumen26 halamanNationalguidelines18saBelum ada peringkat

- 6th Central Pay Commission Salary CalculatorDokumen15 halaman6th Central Pay Commission Salary Calculatorrakhonde100% (436)

- 6th Central Pay Commission Salary CalculatorDokumen15 halaman6th Central Pay Commission Salary Calculatorrakhonde100% (436)

- Obat YanmedDokumen2 halamanObat YanmedSafiqulatif AbdillahBelum ada peringkat

- Systematic SamplingDokumen18 halamanSystematic SamplingPius MbindaBelum ada peringkat

- Chi - Square Test: PG Students: DR Amit Gujarathi DR Naresh GillDokumen32 halamanChi - Square Test: PG Students: DR Amit Gujarathi DR Naresh GillNaresh GillBelum ada peringkat

- Tutorial ANCOVA - BIDokumen2 halamanTutorial ANCOVA - BIAlice ArputhamBelum ada peringkat

- Populasi-Sampel - Sampling: I Ketut Swarjana Itekes Bali 2020Dokumen12 halamanPopulasi-Sampel - Sampling: I Ketut Swarjana Itekes Bali 2020Dayu DewiBelum ada peringkat

- Discriminant Correspondence AnDokumen10 halamanDiscriminant Correspondence AnalbgomezBelum ada peringkat

- Standad DevDokumen8 halamanStandad DevMuhammad Amir AkhterBelum ada peringkat

- Assignemnt 2Dokumen4 halamanAssignemnt 2utkarshrajput64Belum ada peringkat

- Factors IBBLDokumen24 halamanFactors IBBLsumaiya sumaBelum ada peringkat

- Prof. Dr. Moustapha Ibrahim Salem Mansourms@alexu - Edu.eg 01005857099Dokumen34 halamanProf. Dr. Moustapha Ibrahim Salem Mansourms@alexu - Edu.eg 01005857099Ahmed ElsayedBelum ada peringkat

- Class 3 Navie BayesDokumen21 halamanClass 3 Navie Bayesshrey patelBelum ada peringkat

- Ujian Nisbah Bagi Dua PopulasiDokumen5 halamanUjian Nisbah Bagi Dua PopulasiNadzri HasanBelum ada peringkat

- Exercise On Predictive Analytics in HRDokumen109 halamanExercise On Predictive Analytics in HRPragya Singh BaghelBelum ada peringkat

- Unit2 MathsDokumen5 halamanUnit2 MathsBhavya BabuBelum ada peringkat

- Robust Estimators: Winsorized MeansDokumen5 halamanRobust Estimators: Winsorized MeansKoh Siew KiemBelum ada peringkat

- ANOVA PresentationDokumen29 halamanANOVA PresentationAdrian Nathaniel CastilloBelum ada peringkat

- T Test and Testing of HypothesisDokumen3 halamanT Test and Testing of HypothesisSaad KhanBelum ada peringkat

- Muhammad HasibDokumen17 halamanMuhammad Hasibahmaddjody.jrBelum ada peringkat

- 3 ForecastingDokumen53 halaman3 ForecastingMarsius SihombingBelum ada peringkat

- Chapter 16 Statistical Quality Control: Quantitative Analysis For Management, 11e (Render)Dokumen20 halamanChapter 16 Statistical Quality Control: Quantitative Analysis For Management, 11e (Render)Jay BrockBelum ada peringkat

- ENME392-Sample FinalDokumen8 halamanENME392-Sample FinalSam AdamsBelum ada peringkat

- Elem Stat Handout1Dokumen4 halamanElem Stat Handout1Leah Oljol RualesBelum ada peringkat

- Business Statistics - Hypothesis Testing, Chi Square and AnnovaDokumen7 halamanBusiness Statistics - Hypothesis Testing, Chi Square and AnnovaRavindra BabuBelum ada peringkat

- UNIT - IV - 3 - Research Design - ExperimentDokumen32 halamanUNIT - IV - 3 - Research Design - Experimentaashika shresthaBelum ada peringkat

- Business Stat AssignmentDokumen2 halamanBusiness Stat Assignmentmedhane negaBelum ada peringkat

- Correlation and Regression CorrectedDokumen2 halamanCorrelation and Regression Correctedyathsih24885Belum ada peringkat

- The Influence of Work Team, Trust in Superiors and Achievement Motivation On Organizational Commitment of UIN Sultan Syarif Kasim Riau LecturersDokumen14 halamanThe Influence of Work Team, Trust in Superiors and Achievement Motivation On Organizational Commitment of UIN Sultan Syarif Kasim Riau LecturersajmrdBelum ada peringkat

- Econometrics AssignmentDokumen4 halamanEconometrics AssignmentShalom FikerBelum ada peringkat

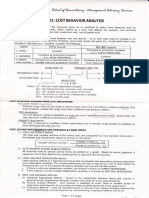

- 01 - Cost Behavior AnalysisDokumen4 halaman01 - Cost Behavior AnalysisVince De GuzmanBelum ada peringkat

- Chi-Square Test: Advance StatisticsDokumen26 halamanChi-Square Test: Advance StatisticsRobert Carl GarciaBelum ada peringkat

- The Scientific Method: H P D IDokumen9 halamanThe Scientific Method: H P D I-Belum ada peringkat