Assignment 1 - Image Decryption by Single Layer Neural Network

Uploaded by yankev


Neural Network Programming Assignment 1:

Image Decryption by a Single-Layer Neural Network

Professor CCC developed a method of image encryption. The method uses two image keys, 𝐾1 and 𝐾2, to encrypt an input image 𝐼. It derives an encrypted image 𝐸 by mixing the two image keys with the input image. The mixing model is

𝐸 = 𝑤1 𝐾1 + 𝑤2 𝐾2 + 𝑤3 𝐼.

Note that 𝐾1, 𝐾2, 𝐼, and 𝐸 all have the same dimensions. As an example, Figure 1 and Figure 2 show the two image keys, 𝐾1 and 𝐾2, respectively, used in the encryption. Figure 3 is an example image (𝐼) to be encrypted. The encrypted image 𝐸 obtained from the encryption is shown in Figure 4.

Figure 1. Image Key 1 (𝐾1 ).

Figure 2. Image Key 2 (𝐾2 ).

Figure 3. The input image to be encrypted (𝐼).

Figure 4. The encrypted image (𝐸).
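The mixing model above can be illustrated with a minimal Python sketch. The function name `mix`, the 2x2 toy "images", and the weight values are assumptions made up for illustration; they are not the keys or parameters used in the assignment.

```python
def mix(k1, k2, img, w):
    """Apply E = w1*K1 + w2*K2 + w3*I pixel by pixel.

    k1, k2, img are equally sized 2-D lists of pixel values;
    w is a (w1, w2, w3) tuple of mixing parameters.
    """
    w1, w2, w3 = w
    return [
        [w1 * a + w2 * b + w3 * c for a, b, c in zip(row1, row2, row3)]
        for row1, row2, row3 in zip(k1, k2, img)
    ]


# Toy 2x2 images; values and weights are hypothetical.
K1 = [[10.0, 20.0], [30.0, 40.0]]
K2 = [[5.0, 5.0], [5.0, 5.0]]
I = [[100.0, 0.0], [50.0, 200.0]]
w = (0.5, 0.25, 0.25)

E = mix(K1, K2, I, w)
print(E)
```

Because every pixel is mixed independently with the same three weights, each pixel position yields one linear equation in 𝑤1, 𝑤2, and 𝑤3.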

To recover the image 𝐼 from 𝐸, you need the two image keys and the mixing parameters 𝑤1, 𝑤2, and 𝑤3. Once these are available, you can derive the original input image by

$$I = \frac{E - w_1 K_1 - w_2 K_2}{w_3}. \tag{1}$$
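As a hedged illustration of Equation (1), the sketch below inverts the mixing model pixel by pixel. The function name `decrypt` and all numeric values are hypothetical; the guard against 𝑤3 = 0 is an added assumption, since the equation is undefined in that case.

```python
def decrypt(enc, k1, k2, w):
    """Recover I = (E - w1*K1 - w2*K2) / w3 for equally sized 2-D lists."""
    w1, w2, w3 = w
    if w3 == 0:
        raise ValueError("w3 must be non-zero to invert the mixing model")
    return [
        [(e - w1 * a - w2 * b) / w3 for e, a, b in zip(row_e, row1, row2)]
        for row_e, row1, row2 in zip(enc, k1, k2)
    ]


# Toy data: this E was produced from the I shown in the comment below
# with the hypothetical weights (0.5, 0.25, 0.25).
K1 = [[10.0, 20.0], [30.0, 40.0]]
K2 = [[5.0, 5.0], [5.0, 5.0]]
E = [[31.25, 11.25], [28.75, 71.25]]
w = (0.5, 0.25, 0.25)

recovered = decrypt(E, K1, K2, w)  # expected to match [[100, 0], [50, 200]]
print(recovered)
```

With learned rather than exact weights, the recovered values will generally be non-integer and may fall slightly outside the valid pixel range, so rounding and clipping before display is a reasonable extra step.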

You are now required to derive the mixing parameters with a single-layer neural network. The network architecture is as follows:

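[Network diagram: the inputs 𝐾1(𝑥, 𝑦), 𝐾2(𝑥, 𝑦), and 𝐼(𝑥, 𝑦) are weighted by 𝑤1, 𝑤2, and 𝑤3, respectively, and fed to a single neuron with a linear (purelin) transfer function whose output is 𝐸(𝑥, 𝑦).]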

By collecting every corresponding pixel $k$ of the four images, $K_1$, $K_2$, and $I$ (inputs) and $E$ (target output), we obtain a training set $\{([K_1(k), K_2(k), I(k)],\ E(k))\}$ for $1 \le k \le W \times H$. Using this training set, we can learn the mixing parameters $\mathbf{w} = [w_1, w_2, w_3]^T$ with the training algorithm of the single-layer neural network, which is based on the gradient descent method. That is,

$$\mathbf{w}(k+1) = \mathbf{w}(k) + \alpha\,(t(k) - a(k))\,\mathbf{x}(k),$$

where $\mathbf{w}(k) = [w_1(k), w_2(k), w_3(k)]^T$, $\mathbf{x}(k) = [K_1(k), K_2(k), I(k)]^T$, $a(k) = \mathbf{w}(k)^T \mathbf{x}(k)$, $t(k) = E(k)$, and $\alpha$ is the learning rate. The training algorithm is listed as follows.

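Algorithm: Adaptive Least Mean Square Error Method by Gradient Descent

Input: training samples $\{([K_1(i), K_2(i), I(i)],\ E(i))\}_{i=1}^{W \times H}$, where $W$ and $H$ are the width and height of the images, respectively,

MaxIterLimit: the maximum number of epochs allowed for training the network,

$\epsilon$: the vigilance level for checking the convergence of the weight vector,

$\alpha$: the learning rate (a small constant such as 0.00001 is suggested)

Output: $\mathbf{w} = [w_1, w_2, w_3]^T$

Steps:

1. Set $Epoch = 1$.

2. Randomize $\mathbf{w}^{Epoch}(1) = [w_1^{Epoch}(1), w_2^{Epoch}(1), w_3^{Epoch}(1)]^T$.

3. While ($Epoch = 1$ || $Epoch <$ MaxIterLimit && $\|\mathbf{w}^{Epoch} - \mathbf{w}^{Epoch-1}\| > \epsilon$) do

(1) For $k = 1$ to $W \times H$

i. $a(k) = \mathbf{w}^{Epoch}(k)^T \mathbf{x}(k)$, where $\mathbf{x}(k) = [K_1(k), K_2(k), I(k)]^T$

ii. $e(k) = E(k) - a(k)$

iii. $\mathbf{w}^{Epoch}(k+1) = \mathbf{w}^{Epoch}(k) + \alpha \cdot e(k) \cdot \mathbf{x}(k)$

(2) End For

(3) Carry the final weights into the next epoch: $\mathbf{w}^{Epoch+1}(1) = \mathbf{w}^{Epoch}(W \times H + 1)$

(4) $Epoch = Epoch + 1$

4. End While

In the loop condition, $\mathbf{w}^{Epoch}$ without a sample index denotes the weight vector at the end of an epoch, so the test compares the final weights of the two most recently completed epochs.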
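The algorithm above can be sketched in plain Python as follows. This is a minimal sketch under stated assumptions, not a reference solution: the function name `train_lms`, the synthetic training data, the "true" weights (0.3, 0.5, 0.2), and the default values of `max_epochs` and `eps` are all hypothetical. The convergence test is applied to the weights at the end of two consecutive epochs.

```python
import math
import random


def train_lms(samples, alpha=1e-5, max_epochs=100, eps=1e-6, seed=0):
    """Learn w = [w1, w2, w3] with the per-sample LMS update.

    samples: list of (x, t) pairs with x = [K1(k), K2(k), I(k)] and t = E(k).
    Returns the learned weights and the number of epochs actually run.
    """
    rng = random.Random(seed)
    w = [rng.uniform(0.0, 1.0) for _ in range(3)]  # random initial weights
    epochs_run = 0
    for epoch in range(1, max_epochs + 1):
        w_prev = list(w)  # weights at the end of the previous epoch
        for x, t in samples:
            a = sum(wi * xi for wi, xi in zip(w, x))  # linear (purelin) output
            e = t - a                                 # error for this pixel
            w = [wi + alpha * e * xi for wi, xi in zip(w, x)]
        epochs_run = epoch
        change = math.sqrt(sum((p - q) ** 2 for p, q in zip(w, w_prev)))
        if change <= eps:  # weights stopped moving: treat as converged
            break
    return w, epochs_run


# Synthetic, noise-free training set standing in for the real pixel data.
data_rng = random.Random(42)
true_w = [0.3, 0.5, 0.2]  # hypothetical mixing parameters
samples = []
for _ in range(1000):
    x = [float(data_rng.randint(0, 255)) for _ in range(3)]
    t = sum(wi * xi for wi, xi in zip(true_w, x))
    samples.append((x, t))

w, epochs = train_lms(samples)
print(w, epochs)
```

The small learning rate matters because pixel values range up to 255: each update scales the error by the squared input magnitude, so a much larger 𝛼 can make the weights diverge. On real images, where the three inputs may be correlated, convergence can take noticeably more epochs than on this synthetic data.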

After deriving the mixing parameters 𝐰 with the algorithm, you have to decrypt another encrypted image 𝐸’, given in Figure 5, using 𝐾1, 𝐾2, and the derived 𝐰 according to Equation (1). Are you wondering what the original image looks like? If so, design the neural network for the decryption right away. You will find that retrieving a clean image from such a cluttered one with a simple neural network is a rewarding and interesting accomplishment.

Figure 5. Another encrypted image (𝐸′) to be decrypted.

Things you need to submit to the course website include

(1) Source code with clear comments on the statements;

(2) A 3- to 5-page report with

A. how you prepared the training samples,

B. all parameters, such as MaxIterLimit, 𝛼, and 𝜖, that you used for the training algorithm,

C. the derived weight vector 𝐰,

D. the printed image 𝐼’ decrypted from 𝐸’,

E. the problems you encountered, and

F. what you have learned from this work.

Note: The link for downloading all required images is given on the course website. The downloaded file is a compressed archive containing five grayscale images (K1, K2, E, I, and E’) in PNG format and five text files, each storing the pixel data of one image. You can use either the PNG or the TXT files. MATLAB is recommended for this assignment because of its strong support for handling various kinds of image data.
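If you work from the text files in a language other than MATLAB, preparing the training samples might look like the sketch below. The layout of the text files is not specified here, so the code assumes numeric pixel values separated by whitespace or commas in row-major order; the function names `read_pixels` and `build_samples` and the file name `K1.txt` are hypothetical.

```python
import os
import tempfile


def read_pixels(path):
    """Read every numeric token in a text file as a flat list of floats.

    Assumes values are separated by whitespace and/or commas.
    """
    with open(path) as f:
        return [float(tok) for tok in f.read().replace(",", " ").split()]


def build_samples(k1, k2, img, enc):
    """Pair up corresponding pixels as ([K1(k), K2(k), I(k)], E(k))."""
    if not (len(k1) == len(k2) == len(img) == len(enc)):
        raise ValueError("all four images must contain the same number of pixels")
    return [([a, b, c], t) for a, b, c, t in zip(k1, k2, img, enc)]


# Demonstration with a tiny temporary file in the assumed format.
with tempfile.TemporaryDirectory() as tmp_dir:
    demo_path = os.path.join(tmp_dir, "K1.txt")
    with open(demo_path, "w") as f:
        f.write("10 20\n30 40\n")
    demo_pixels = read_pixels(demo_path)

demo_samples = build_samples([10.0, 20.0], [5.0, 5.0], [100.0, 0.0], [31.25, 11.25])
print(demo_pixels, demo_samples)
```

Flattening each image to a one-dimensional list is sufficient for training because the mixing model treats every pixel independently; the width and height are only needed again when reshaping the decrypted pixels into an image.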

Anda mungkin juga menyukai

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)Dari EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Penilaian: 4.5 dari 5 bintang4.5/5 (119)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaDari EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaPenilaian: 4.5 dari 5 bintang4.5/5 (265)

- The Little Book of Hygge: Danish Secrets to Happy LivingDari EverandThe Little Book of Hygge: Danish Secrets to Happy LivingPenilaian: 3.5 dari 5 bintang3.5/5 (399)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryDari EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryPenilaian: 3.5 dari 5 bintang3.5/5 (231)

- Grit: The Power of Passion and PerseveranceDari EverandGrit: The Power of Passion and PerseverancePenilaian: 4 dari 5 bintang4/5 (587)

- Never Split the Difference: Negotiating As If Your Life Depended On ItDari EverandNever Split the Difference: Negotiating As If Your Life Depended On ItPenilaian: 4.5 dari 5 bintang4.5/5 (838)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeDari EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifePenilaian: 4 dari 5 bintang4/5 (5794)

- Rise of ISIS: A Threat We Can't IgnoreDari EverandRise of ISIS: A Threat We Can't IgnorePenilaian: 3.5 dari 5 bintang3.5/5 (137)

- Team of Rivals: The Political Genius of Abraham LincolnDari EverandTeam of Rivals: The Political Genius of Abraham LincolnPenilaian: 4.5 dari 5 bintang4.5/5 (234)

- Shoe Dog: A Memoir by the Creator of NikeDari EverandShoe Dog: A Memoir by the Creator of NikePenilaian: 4.5 dari 5 bintang4.5/5 (537)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyDari EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyPenilaian: 3.5 dari 5 bintang3.5/5 (2219)

- The Emperor of All Maladies: A Biography of CancerDari EverandThe Emperor of All Maladies: A Biography of CancerPenilaian: 4.5 dari 5 bintang4.5/5 (271)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreDari EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You ArePenilaian: 4 dari 5 bintang4/5 (1090)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersDari EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersPenilaian: 4.5 dari 5 bintang4.5/5 (344)

- Her Body and Other Parties: StoriesDari EverandHer Body and Other Parties: StoriesPenilaian: 4 dari 5 bintang4/5 (821)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceDari EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RacePenilaian: 4 dari 5 bintang4/5 (894)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureDari EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FuturePenilaian: 4.5 dari 5 bintang4.5/5 (474)

- The Unwinding: An Inner History of the New AmericaDari EverandThe Unwinding: An Inner History of the New AmericaPenilaian: 4 dari 5 bintang4/5 (45)

- The Yellow House: A Memoir (2019 National Book Award Winner)Dari EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Penilaian: 4 dari 5 bintang4/5 (98)

- On Fire: The (Burning) Case for a Green New DealDari EverandOn Fire: The (Burning) Case for a Green New DealPenilaian: 4 dari 5 bintang4/5 (73)

- Objective CDokumen403 halamanObjective Chilmisonererdogan100% (1)

- Soccer Eye Q Developing Game AwarenessDokumen27 halamanSoccer Eye Q Developing Game AwarenessyankevBelum ada peringkat

- Sample Affidavit of HeirshipDokumen2 halamanSample Affidavit of HeirshipLeo Cag96% (24)

- Texas Unemployment Benefits Guide: How to Check SSN and File for BenefitsDokumen31 halamanTexas Unemployment Benefits Guide: How to Check SSN and File for Benefitsmarcel100% (1)

- Grow 6 Inches Taller in 90 DaysDokumen90 halamanGrow 6 Inches Taller in 90 Daysyankev100% (6)

- PAN Application Acknowledgment Receipt For Form 49A (Physical Application)Dokumen1 halamanPAN Application Acknowledgment Receipt For Form 49A (Physical Application)tanisq10Belum ada peringkat

- Ch3 - Digital Evidence & FraudsDokumen43 halamanCh3 - Digital Evidence & FraudsSarthak GuptaBelum ada peringkat

- Application Form For End User Certificate UnderDokumen4 halamanApplication Form For End User Certificate Underakashaggarwal88Belum ada peringkat

- AuditScripts Critical Security Control Executive Assessment Tool V6.1aDokumen4 halamanAuditScripts Critical Security Control Executive Assessment Tool V6.1aBruno RaimundoBelum ada peringkat

- Perception Thresholds For Augmented Reality Navigation Schemes in Large DistancesDokumen2 halamanPerception Thresholds For Augmented Reality Navigation Schemes in Large DistancesyankevBelum ada peringkat

- Vision-Based Location Positioning Using Augmented Reality For Indoor NavigationDokumen9 halamanVision-Based Location Positioning Using Augmented Reality For Indoor NavigationyankevBelum ada peringkat

- QT IntroductionDokumen101 halamanQT IntroductionyankevBelum ada peringkat

- Implementing Virtual 3D Model and Augmented Reality Navigation For Library in UniversityDokumen3 halamanImplementing Virtual 3D Model and Augmented Reality Navigation For Library in UniversityyankevBelum ada peringkat

- MTA 98-375 Sample QuestionsDokumen20 halamanMTA 98-375 Sample Questionsyankev0% (1)

- airsim Documentation: Getting StartedDokumen86 halamanairsim Documentation: Getting StartedyankevBelum ada peringkat

- A Survey of Augmented Reality Navigation: Gaurav Bhorkar Gaurav - Bhorkar@aalto - FiDokumen6 halamanA Survey of Augmented Reality Navigation: Gaurav Bhorkar Gaurav - Bhorkar@aalto - FiyankevBelum ada peringkat

- Collaborative Augmented Reality For Outdoor Navigation and Information BrowsingDokumen11 halamanCollaborative Augmented Reality For Outdoor Navigation and Information BrowsingyankevBelum ada peringkat

- INSY5336 Ch.4 FunctionsDokumen27 halamanINSY5336 Ch.4 FunctionsyankevBelum ada peringkat

- Motivational Quotes PosterDokumen1 halamanMotivational Quotes PosteryankevBelum ada peringkat

- M01 - Introduction To Software EngineeringDokumen24 halamanM01 - Introduction To Software EngineeringyankevBelum ada peringkat

- Programming Languages Ch01 - IntroductionDokumen36 halamanProgramming Languages Ch01 - IntroductionyankevBelum ada peringkat

- Iowa State University International Graduate InstructionsDokumen4 halamanIowa State University International Graduate InstructionsyankevBelum ada peringkat

- Artificial Intelligence IntroductionDokumen9 halamanArtificial Intelligence IntroductionyankevBelum ada peringkat

- QUIZ 5 SolutionDokumen2 halamanQUIZ 5 SolutionyankevBelum ada peringkat

- Operating Systems Concepts 9th Edition Chapter9Dokumen77 halamanOperating Systems Concepts 9th Edition Chapter9yankevBelum ada peringkat

- MTA SSG SoftDev Individual Without Crop PDFDokumen65 halamanMTA SSG SoftDev Individual Without Crop PDFMr. Ahmed ElmasryBelum ada peringkat

- Operating Systems Concepts 9th Edition Chapter8Dokumen64 halamanOperating Systems Concepts 9th Edition Chapter8yankevBelum ada peringkat

- Operating Systems Concepts 5th Edition Chapter7Dokumen44 halamanOperating Systems Concepts 5th Edition Chapter7yankev0% (1)

- QT IntroductionDokumen101 halamanQT IntroductionyankevBelum ada peringkat

- Operating Systems Concepts 9th Edition - Ch05Dokumen66 halamanOperating Systems Concepts 9th Edition - Ch05yankevBelum ada peringkat

- Sport Foot Magazine 2015-10-14 N°42Dokumen92 halamanSport Foot Magazine 2015-10-14 N°42yankevBelum ada peringkat

- Operating Systems Concepts 9th - Ch02Dokumen54 halamanOperating Systems Concepts 9th - Ch02yankev100% (1)

- Sport Foot Magazine 2015-11-11 N°46Dokumen91 halamanSport Foot Magazine 2015-11-11 N°46yankevBelum ada peringkat

- Communication Systems 5th Edition - Simon Haykin Ch4Dokumen43 halamanCommunication Systems 5th Edition - Simon Haykin Ch4yankev100% (2)

- Click To Get Netflix Cookies #10Dokumen23 halamanClick To Get Netflix Cookies #10kmkmkmBelum ada peringkat

- Critical Analysis of A WebsiteDokumen41 halamanCritical Analysis of A WebsiteAlamin SheikhBelum ada peringkat

- Deh 41304CDokumen43 halamanDeh 41304CahmedhassankhanBelum ada peringkat

- Internet BankingDokumen2 halamanInternet Bankingapi-323535487Belum ada peringkat

- RFID BENEFITSDokumen12 halamanRFID BENEFITSrz2491Belum ada peringkat

- Gerald Castillo, 28jun 1220 San JoseDokumen2 halamanGerald Castillo, 28jun 1220 San JoseGustavo Fuentes QBelum ada peringkat

- Current LogDokumen30 halamanCurrent LogGabriel Gamer922Belum ada peringkat

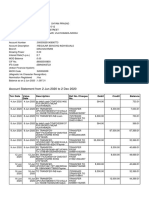

- Account Statement From 2 Jun 2020 To 2 Dec 2020: TXN Date Value Date Description Ref No./Cheque No. Debit Credit BalanceDokumen11 halamanAccount Statement From 2 Jun 2020 To 2 Dec 2020: TXN Date Value Date Description Ref No./Cheque No. Debit Credit Balanceanitha anuBelum ada peringkat

- Portable Programmer: FeaturesDokumen1 halamanPortable Programmer: FeaturesC VelBelum ada peringkat

- Seal Sign 2.4Dokumen130 halamanSeal Sign 2.4pepito123Belum ada peringkat

- Iso 27001:2005 Iso 27001:2005Dokumen1 halamanIso 27001:2005 Iso 27001:2005Talha SherwaniBelum ada peringkat

- SADlab 3 - 2005103Dokumen2 halamanSADlab 3 - 2005103Rahul ShahBelum ada peringkat

- Proposed Mississippi Sports Betting RulesDokumen21 halamanProposed Mississippi Sports Betting RulesUploadedbyHaroldGaterBelum ada peringkat

- Guide to Effective CommunicationDokumen39 halamanGuide to Effective CommunicationArunendu MajiBelum ada peringkat

- MSC Project ProposalDokumen4 halamanMSC Project ProposalUsman ChaudharyBelum ada peringkat

- Oversight The National Payement System PDFDokumen33 halamanOversight The National Payement System PDFdaniell tadesseBelum ada peringkat

- Public Key Cryptography:: UNIT-3Dokumen34 halamanPublic Key Cryptography:: UNIT-3Shin chan HindiBelum ada peringkat

- Gfi Event Manager ManualDokumen162 halamanGfi Event Manager Manualcristi0408Belum ada peringkat

- Vivares vs. STC, G.R. No. 202666, September 29, 2014Dokumen11 halamanVivares vs. STC, G.R. No. 202666, September 29, 2014Lou Ann AncaoBelum ada peringkat

- Revised BCA SyllabusDokumen30 halamanRevised BCA SyllabusHarishBelum ada peringkat

- Applied Cryptography 1Dokumen5 halamanApplied Cryptography 1Sehrish AbbasBelum ada peringkat

- Reliance Industries Limited: Hazira Manufacturing DivisionDokumen20 halamanReliance Industries Limited: Hazira Manufacturing DivisionTracy WhiteBelum ada peringkat

- QNAP Turbo NAS User Manual V3.7 ENG PDFDokumen703 halamanQNAP Turbo NAS User Manual V3.7 ENG PDFgibonulBelum ada peringkat

- SCCM Administrative ChecklistDokumen3 halamanSCCM Administrative ChecklistMandar JadhavBelum ada peringkat