Principal Component Analysis: Points of Significance

THIS MONTH

POINTS OF SIGNIFICANCE

Principal component analysis

PCA helps you interpret your data, but it will not always find the important patterns.

© 2017 Nature America, Inc., part of Springer Nature. All rights reserved.

Principal component analysis (PCA) simplifies the complexity in high-dimensional data while retaining trends and patterns. It does this by transforming the data into fewer dimensions, which act as summaries of features. High-dimensional data are very common in biology and arise when multiple features, such as expression of many genes, are measured for each sample. This type of data presents several challenges that PCA mitigates: computational expense and an increased error rate due to multiple test correction when testing each feature for association with an outcome. PCA is an unsupervised learning method and is similar to clustering (ref. 1): it finds patterns without reference to prior knowledge about whether the samples come from different treatment groups or have phenotypic differences.

Figure 2 | PCA reduction of nine expression profiles from six to two dimensions. (a) Expression profiles for nine genes (A–I) across six samples (a–f), coded by color on the basis of shape similarity, and the expression variance of each sample. (b) PC1–PC6 of the profiles in a. PC1 and PC2 reflect clearly visible trends, and the remaining PCs capture only small fluctuations. (c) Transformed profiles, expressed as PC scores and σ² of each component score. (d) The profiles reconstructed using PC1–PC3. (e) The 2D coordinates of each profile based on the scores of the first two PCs.
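The reduction summarized in the Figure 2 caption can be sketched in a few lines of Python with NumPy. This is a minimal illustration and not the authors' code: the three patterns, the noise level, the random seed and the helper names `pca` and `reconstruct` are all assumptions made up for the example, so the numbers it prints will differ from those reported in the column.

```python
import numpy as np


def pca(data):
    """PCA of a (profiles x variables) matrix via SVD of the column-centered data.

    Returns the column means, the loadings (one PC per row), the PC scores
    and the fraction of the total variance captured by each PC.
    """
    mean = data.mean(axis=0)
    centered = data - mean
    # Rows of vt are orthonormal directions ordered by decreasing variance.
    u, s, vt = np.linalg.svd(centered, full_matrices=False)
    scores = u * s                       # coordinates of each profile on the PC axes
    explained = s**2 / np.sum(s**2)      # fraction of variance per PC
    return mean, vt, scores, explained


def reconstruct(mean, loadings, scores, k):
    """Approximate the original profiles using only the first k PCs."""
    return mean + scores[:, :k] @ loadings[:k]


# Hypothetical stand-in for the simulated data: nine profiles over six samples,
# built as three noisy copies of each of three made-up patterns.
rng = np.random.default_rng(0)
patterns = np.array([
    [1.0, 0.6, 0.2, -0.2, -0.6, -1.0],   # decreasing
    [-1.0, 0.0, 1.0, 1.0, 0.0, -1.0],    # peak in the middle
    [-1.0, -0.6, -0.2, 0.2, 0.6, 1.0],   # increasing
])
data = np.repeat(patterns, 3, axis=0) + rng.normal(scale=0.05, size=(9, 6))

mean, loadings, scores, explained = pca(data)

# r.m.s. distance of the first profile from its 1D, 2D and 3D reconstructions.
rms = [
    float(np.sqrt(np.mean((data[0] - reconstruct(mean, loadings, scores, k)[0]) ** 2)))
    for k in (1, 2, 3)
]

print("fraction of variance per PC:", np.round(explained, 3))
print("2D coordinates of each profile:")
print(np.round(scores[:, :2], 2))
print("r.m.s. error of profile 1 using 1, 2, 3 PCs:", np.round(rms, 3))
```

Because three patterns span at most a plane once the data are centered, nearly all of the variance in this toy data set falls on the first two PCs, and the reconstruction error can only shrink as PCs are added.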

PCA reduces data by geometrically projecting them onto lower

dimensions called principal components (PCs), with the goal of + y/√2 (Fig. 1c). These coefficients are stored in a ‘PCA loading

finding the best summary of the data using a limited number of matrix’, which can be interpreted as a rotation matrix that rotates

PCs. The first PC is chosen to minimize the total distance between data such that the projection with greatest variance goes along the

the data and their projection onto the PC (Fig. 1a). By minimizing first axis. At first glance, PC1 closely resembles the linear regression

this distance, we also maximize the variance of the projected points, line3 of y versus x or x versus y (Fig. 1c). However, PCA differs from

σ 2 (Fig. 1b). The second (and subsequent) PCs are selected similarly, linear regression in that PCA minimizes the perpendicular distance

with the additional requirement that they be uncorrelated with all between a data point and the principal component, whereas linear

previous PCs. For example, projection onto PC1 is uncorrelated with regression minimizes the distance between the response variable

projection onto PC2, and we can think of the PCs as geometrically and its predicted value.

orthogonal. This requirement of no correlation means that the maxi- To illustrate PCA on biological data, we simulated expression

mum number of PCs possible is either the number of samples or the profiles for nine genes that fall into one of three patterns across six

number of features, whichever is smaller. The PC selection process samples (Fig. 2a). We find that the variance is fairly similar across

has the effect of maximizing the correlation (r2) (ref. 2) between samples (Fig. 2a), which tells us that no single sample captures the

data and their projection and is equivalent to carrying out multiple patterns in the data appreciably more than another. In other words,

linear regression3,4 on the projected data against each variable of the we need all six sample dimensions to express the data fully.

original data. For example, the projection onto PC2 has maximum Let’s now use PCA to see whether a smaller number of combina-

r2 when used in multiple regression with PC1. tions of samples can capture the patterns. We start by finding the

The PCs are defined as a linear combination of the data’s original six PCs (PC1–PC6), which become our new axes (Fig. 2b). We next

variables, and in our two-dimensional (2D) example, PC1 = x/√2 transform the profiles so that they are expressed as linear combina-

tions of PCs—each profile is now a set of coordinates on the PC

a b c axes—and calculate the variance (Fig. 2c). As expected, PC1 has the

v y u

σ2 v, PC2

y x~y

u, PC1 largest variance, with 52.6% captured by PC1 and 47.0% captured by

1

x 1.0 y~x

PC2. A useful interpretation of PCA is that r2 of the regression is the

y 1.0 percent variance (of all the data) explained by the PCs. As additional

x

u 1.78

x

PCs are added to the prediction, the difference in r2 corresponds

−1

to the variance explained by that PC. However, all the PCs are not

v 0.22

−2 −1 0 1 2 typically used because the majority of variance, and hence patterns

in the data, will be limited to the first few PCs. In our example, we

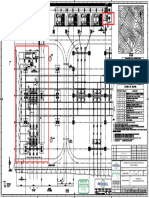

Figure 1 | PCA geometrically projects data onto a lower-dimensional space. can ignore PC3−PC6, which contribute little (0.4%) to explaining

(a) Projection is illustrated with 2D points projected onto 1D lines along the variance, and express the data in two dimensions instead of six.

a path perpendicular to the line (illustrated for the solid circle). (b) The

Figure 2d verifies visually that we can faithfully reproduce the

projections of points in a onto each line. σ2 for projected points can vary

(e.g., high for u and low for v). (c) PC1 maximizes the σ2 of the projection profiles using only PC1 and PC2. For example, the root mean

and is the line u from a. The second (v, PC2) is perpendicular to PC1. Note square (r.m.s.) distances of the original profile A from its 1D,

that PC1 is not the same as linear regression of y vs. x (y~x, dark brown) or x 2D and 3D reconstructions are 0.29, 0.03 and 0.01, respectively.

vs. y (x~y, light brown). Dashed lines indicates distances being minimized. Approximations using two or three PCs are useful, because we

NATURE METHODS | VOL.14 NO.7 | JULY 2017 | 641

THIS MONTH

a b c d a Nonlinear patterns b Nonorthogonal patterns c Obscured clusters

C4 150

C1

C3 150

C

C5 3

C2 100

B5 100

B3 2

B4

B1 50

B2 50

A5 1

A1

A2 B

PC2

PC2

0 0

−y

A3 0 D

A4

R R

E4 −50 −50

−1

E1

E5

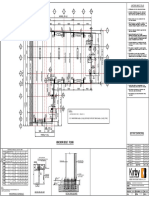

E Figure 4 | The assumptions of PCA place limitations on its use.

E2 A

D4 −2 −100 −100 (a–c) Limitations of PCA are that it may miss nonlinear data patterns

E3

D5

D2

(a); structure that is not orthogonal to previous PCs may not be well

D3

D1 −2 0 2 −200 0 200 −200 0 200 characterized (b); and PC1 (blue) may not split two obvious clusters (c). PC2

PC1 PC1 x

1 0

is shown in orange.

© 2017 Nature America, Inc., part of Springer Nature. All rights reserved.

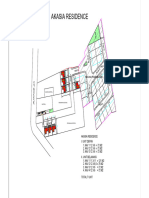

Figure 3 | PCA can help identify clusters in the data. (a) Complete linkage variables use different scales, such as expression and phenotype data,

hierarchical clustering of previously described expression profiles1 with the

it may be appropriate to standardize them such that each variable

expression of all 26 genes (listed vertically) represented with lines across 15

samples (horizontally). (b) When shown as coefficients of the first two PCs,

has unit variance. However, if the variables are already on the same

profiles group in a similar manner to the hierarchical clustering—groups scale, standardization is not normally appropriate, as it may actually

D and E are still difficult to separate. (c) PCA is not scale invariant. Shown distort the data. For instance, after standardization, gene expression

are the first two PC components of profiles whose first and second variable that varies dramatically owing to biological function may look simi-

(subject) were scaled by 300 and 200, respectively. A grouping very different lar to gene expression that varies only owing to noise.

from that in b is obtained. (d) The plot of the two scaled variables in each PCA is a good data summary when the interesting patterns

profile, ignoring the remaining 13 variables. The grouping of points is very

increase the variance of projections onto orthogonal components.

similar to that in c, because PCA puts more weight on variables with larger

absolute magnitude. But PCA also has limitations that must be considered when inter-

preting the output: the underlying structure of the data must be lin-

can summarize the data as a scatter plot. In our case, this plot ear (Fig. 4a), patterns that are highly correlated may be unresolved

easily identifies that the profiles fall into three patterns (Fig. 2e). because all PCs are uncorrelated (Fig. 4b), and the goal is to maxi-

Moreover, the projected data in such plots often appear less noisy, mize variance and not necessarily to find clusters (Fig. 4c).

which enhances pattern recognition and data summary. Conclusions made with PCA must take these limitations into

Such PCA plots are often used to find potential clusters. To account. As with all statistical methods, PCA can be misused. The

relate PCA to clustering, we return to the 26 expression profiles scaling of variables can cause different PCA results, and it is very

across 15 subjects from a previous column1, which we grouped important that the scaling is not adjusted to match prior knowledge

using hierarchical clustering (Fig. 3a). It turns out that we can of the data. If different scalings are tried, they should be described.

recover these clusters using only two PCs (Fig. 3b), reducing the PCA is a tool for identifying the main axes of variance within a data

dimensionality from 15 (the number of subjects) to 2. set and allows for easy data exploration to understand the key vari-

Scale matters with PCA. We illustrate this by showing PC1 and ables in the data and spot outliers. Properly applied, it is one of the

PC2 coefficients of each profile after artificially scaling up the most powerful tools in the data analysis tool kit.

expression in the first two subjects in every profile by factors of

COMPETING FINANCIAL INTERESTS

300 and 200 so that they are dominant (Fig. 3c). This scenario

The authors declare no competing financial interests.

might arise if expression in the first two subjects was measured

using a different technique, resulting in dramatically different Jake Lever, Martin Krzywinski & Naomi Altman

variance. In fact, when a small set of variables has a much larger 1. Altman, N. & Krzywinski, M. Nat. Methods 14, 545–546 (2017).

magnitude than others, the components in the PCA analysis are 2. Altman, N. & Krzywinski, M. Nat. Methods 12, 899−900 (2015).

3. Altman, N. & Krzywinski, M. Nat. Methods 12, 999–1000 (2015).

heavily weighted along those variables, while other variables are 4. Krzywinski, M. & Altman, N. Nat. Methods 12, 1103–1104 (2015).

ignored. As a consequence, the PCA simply recovers the values of

these high-magnitude variables (Fig. 3d). Jake Lever is a PhD candidate at Canada’s Michael Smith Genome Sciences Centre.

Martin Krzywinski is a staff scientist at Canada’s Michael Smith Genome Sciences

If the variance is dramatically different across variables (e.g., Centre. Naomi Altman is a Professor of Statistics at The Pennsylvania State

expression across patients in the scaled data in Fig. 3c), or if the University.
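As a supplementary sketch of the scale dependence discussed for Fig. 3c, the Python snippet below (using NumPy) scales the first two variables of a random data set by 300 and 200 and measures how much of PC1 and PC2 lies along those two variables. Everything here is an assumption made for illustration: the data are plain random noise with 26 rows and 15 columns, not the expression profiles from the column, and the helper names `loadings`, `standardize` and `weight_on_first_two` are hypothetical.

```python
import numpy as np


def loadings(data):
    """Rows are the PCs (unit vectors) of the column-centered data, by decreasing variance."""
    centered = data - data.mean(axis=0)
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return vt


def standardize(data):
    """Center each variable and rescale it to unit variance."""
    return (data - data.mean(axis=0)) / data.std(axis=0, ddof=1)


def weight_on_first_two(vt):
    """Share (0 to 1) of PC1 and PC2 that lies along the first two variables."""
    return float(np.sum(vt[:2, :2] ** 2) / 2.0)


# Hypothetical data: 26 profiles measured on 15 variables, all on the same scale.
rng = np.random.default_rng(1)
raw = rng.normal(size=(26, 15))

# Scale up the first two variables, mimicking a different measurement technique.
scaled = raw.copy()
scaled[:, 0] *= 300
scaled[:, 1] *= 200

w_raw = weight_on_first_two(loadings(raw))
w_scaled = weight_on_first_two(loadings(scaled))
w_std = weight_on_first_two(loadings(standardize(scaled)))

print("weight of PC1 and PC2 on the first two variables")
print("  original data:           ", round(w_raw, 3))
print("  after scaling:           ", round(w_scaled, 3))
print("  scaled, then standardized:", round(w_std, 3))
```

With the scaled data, PC1 and PC2 lie almost entirely along the two inflated variables, which is the behavior described for Fig. 3c and 3d. Standardizing removes the effect here only because the toy data are pure noise; as the text cautions, whether standardization is appropriate depends on whether the variables were already on a common scale.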

NATURE METHODS | VOL.14 NO.7 | JULY 2017 | 641–642
