From Biological To Artificial Neuron Model

Ugur HALICI

ARTIFICIAL NEURAL NETWORKS

CHAPTER 1

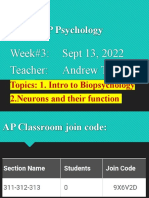

CHAPTER I From Biological to Artificial Neuron Model

Martin Gardner, in his book 'The Annotated Snark', has the following note on the last illustration sketched by Holiday for Lewis Carroll's nonsense poem 'The Hunting of the Snark': "64. Holiday's illustration for this scene, showing the Bellman ringing a knell for the passing of the Baker, is quite a remarkable puzzle picture. Thousands of readers must have glanced at this picture without noticing (though they may have shivered with subliminal perception) the huge, almost transparent head of the Baker, abject terror on his features, as a gigantic beak (or is it a claw?) seizes his wrist and drags him into the ultimate darkness."

You may also not have noticed the face at first glance; with a little more care, however, you will see it easily. As a further note on Martin Gardner's note, we can ask: "Is there any conventional computer at present with the capability of perceiving both the trees and the Baker's transparent head in this picture at the same time?" Most probably, the answer is no. Although such visual perception is an easy task for a human being, we face difficulties when sequential computers are to be programmed to perform visual operations.

In a conventional computer there is usually a single processor implementing a sequence of arithmetic and logical operations, nowadays at speeds approaching a billion operations per second. However, devices of this type have the ability neither to adapt their structure nor to learn in the way that a human being does.

There is a large number of tasks for which it has proved virtually impossible to devise an algorithm or a sequence of arithmetic and/or logical operations. For example, in spite of many attempts, a machine has not yet been produced which will automatically read handwritten characters, or recognize words spoken by any speaker, let alone translate from one language to another, or drive a car, or walk and run as an animal or human being does [Hecht-Nielsen 88].

The difference seems to stem neither from the processing speed of computers nor from their processing ability. Today's processors are about $10^6$ times faster than the basic processing elements of the brain, called neurons. When their abilities are compared, the neurons are much simpler. The difference is mainly due to structure and mode of operation. While in a conventional computer the instructions are executed sequentially in a complicated and fast processor, the brain is a massively parallel interconnection of relatively simple and slow processing elements.

EE543 LECTURE NOTES . METU EEE . ANKARA

1.1. Biological Neuron

It is claimed that the human central nervous system comprises about $1.3 \times 10^{10}$ neurons and that about $1 \times 10^{10}$ of them are located in the brain. At any time, some of these neurons are firing, and the power dissipation due to this electrical activity is estimated to be on the order of 10 watts. Monitoring the activity in the brain has shown that, even when asleep, $5 \times 10^{7}$ nerve impulses per second are being relayed back and forth between the brain and other parts of the body. This rate increases significantly when awake [Fischer 1987].

A neuron has a roughly spherical cell body called the soma (Figure 1.1). The signals generated in the soma are transmitted to other neurons through an extension of the cell body called the axon or nerve fibre. Another kind of extension around the cell body, resembling a bushy tree, is the dendrites, which are responsible for receiving the incoming signals generated by other neurons [Noakes 92].


Figure 1.1. Typical Neuron

An axon (Figure 1.2), whose length in the human body varies from a fraction of a millimeter to a meter, extends from the cell body at the point called the axon hillock. At the other end, the axon separates into several branches, at the very end of which it enlarges and forms terminal buttons. Terminal buttons are located in special structures called synapses, which are the junctions transmitting signals from one neuron to another (Figure 1.3). A neuron typically drives $10^3$ to $10^4$ synaptic junctions.

Figure 1.2. Axon

The synaptic vesicles, each holding several thousand molecules of chemical transmitters, are located in the terminal buttons. When a nerve impulse arrives at the synapse, some of these chemical transmitters are discharged into the synaptic cleft, which is the narrow gap between the terminal button of the neuron transmitting the signal and the membrane of the neuron receiving it. In general, synapses occur between an axon branch of one neuron and the dendrite of another. Although it is not very common, synapses may also occur between two axons or two dendrites of different cells, or between an axon and a cell body.

Figure 1.3. The synapse

Neurons are covered with a semi-permeable membrane only about 5 nanometers thick. The membrane is able to selectively absorb and reject ions in the intracellular fluid. It basically acts as an ion pump to maintain a different ion concentration between the intracellular fluid and the extracellular fluid. While sodium ions are continually removed from the intracellular fluid to the extracellular fluid, potassium ions are absorbed from the extracellular fluid in order to maintain an equilibrium condition. Due to the difference in the ion concentrations inside and outside, the cell membrane becomes polarized. In equilibrium the interior of the cell is observed to be 70 millivolts negative with respect to the outside of the cell. This potential is called the resting potential.

A neuron receives inputs from a large number of neurons via its synaptic connections. Nerve signals arriving at the presynaptic cell membrane cause chemical transmitters to be released into the synaptic cleft. These chemical transmitters diffuse across the gap and bind to the postsynaptic membrane at the receptor sites. The membrane of the postsynaptic cell gathers the chemical transmitters. This causes either a decrease or an increase in the soma potential, called the graded potential, depending on the type of chemicals released into the synaptic cleft. Synapses that encourage depolarization are called excitatory, and those that discourage it are called inhibitory synapses. If the decrease in polarization is adequate to exceed a threshold, the postsynaptic neuron fires.

The arrival of impulses at excitatory synapses adds to the depolarization of the soma, while the inhibitory effect tends to cancel out the depolarizing effect of excitatory impulses. In general, although the depolarization due to a single synapse is not enough to fire the neuron, if some other areas of the membrane are depolarized at the same time by the arrival of nerve impulses through other synapses, the total may be adequate to exceed the threshold and fire the neuron.

At the axon hillock, the excitatory effects interrupt the regular ion transportation through the cell membrane, so that the ionic concentrations immediately begin to equalize as ions diffuse through the membrane. If the depolarization is large enough, the membrane potential eventually collapses, and for a short period of time the internal potential becomes positive. This brief reversal in the potential is called the action potential. It results in an electric current flowing from the region at action potential to an adjacent region of the axon at resting potential. This current causes the potential of the next resting region to change, so the effect propagates in this manner along the axon membrane.

Figure 1.4. The action potential on axon


Once an action potential has passed a given point, that point cannot be re-excited for a while; this interval is called the refractory period. Because the depolarized parts of the neuron are in a state of recovery and cannot immediately become active again, the pulse of electrical activity always propagates in the forward direction only. The previously triggered region of the axon then rapidly recovers to the polarized resting state due to the action of the sodium-potassium pumps. The refractory period is about 1 millisecond, and this limits nerve pulse transmission so that a neuron can typically fire and generate nerve pulses at a rate of up to 1000 pulses per second. The number of impulses and the speed at which they arrive at the synaptic junctions of a particular neuron determine whether the total excitatory depolarization is sufficient to cause the neuron to fire and so to send a nerve impulse down its axon. The depolarization effect can propagate along the soma membrane, but it may dissipate before reaching the axon hillock. However, once the nerve impulse reaches the axon hillock, it will propagate until it reaches the synapses, where the depolarization will cause the release of chemical transmitters into the synaptic cleft.

Axons are generally enclosed by a myelin sheath made of many layers of Schwann cells, which promote the growth of the axon. The speed of propagation down the axon depends on the thickness of the myelin sheath, which insulates the axon from the extracellular fluid and prevents the transmission of ions across the membrane. The myelin sheath is interrupted at regular intervals by narrow gaps called nodes of Ranvier, where the extracellular fluid makes contact with the membrane and the transfer of ions occurs. Since the axons themselves are poor conductors, the action potential is transmitted as depolarizations occur at the nodes of Ranvier. This happens in a sequential manner, so that the depolarization of a node triggers the depolarization of the next one. The nerve impulse effectively jumps from one node to the next along the axon, each node acting rather like a regeneration amplifier to compensate for losses. Once an action potential is created at the axon hillock, it is transmitted through the axon to other neurons.

It is tempting to conclude that signal transmission in the nervous system has a digital nature, in which a neuron is assumed to be either fully active or inactive. However, this conclusion is not quite correct, because the intensity of a neuron signal is coded in the frequency of pulses. A better conclusion would be to interpret biological neural systems as using a form of pulse frequency modulation to transmit information. The nerve pulses passing along the axon of a particular neuron are of approximately constant amplitude, but the number of generated pulses and their time spacing are controlled by the statistics associated with the arrival of sufficient excitatory inputs at the neuron's many synaptic junctions [Müller and Reinhardt 90].

The representation of biophysical neuron output behavior is shown schematically in Figure 1.5 [Kandel 85, Sejnowski 81]. At time $t = 0$ a neuron is excited; at time $T$, typically of the order of 50 milliseconds, the neuron fires a train of impulses along its axon. Each of these impulses is of practically identical amplitude. Some time later, say around $t = T + \tau$, the neuron may fire another train of impulses as a result of the same excitation, though the second train will usually contain a smaller number of impulses. Even when the neuron is not excited, it may send out impulses at random, though much less frequently than when it is excited.

Figure 1.5. Representation of biophysical neuron output signal after excitation at time t=0

A considerable amount of research has been performed aiming to explain the electrochemical structure and operation of a neuron; however, several questions still remain to be answered in the future.

1.2. Artificial Neuron Model

As mentioned in the previous section, the transmission of a signal from one neuron to another through synapses is a complex chemical process in which specific transmitter substances are released from the sending side of the junction. The effect is to raise or lower the electrical potential inside the body of the receiving cell. If this graded potential reaches a threshold, the neuron fires. It is this characteristic that the artificial neuron model proposed by McCulloch and Pitts [McCulloch and Pitts 1943] attempts to reproduce. The neuron model shown in Figure 1.6 is the one widely used in artificial neural networks, with some minor modifications.


Figure 1.6. Artificial Neuron

The artificial neuron given in this figure has N inputs, denoted as $u_1, u_2, \ldots, u_N$. Each line connecting these inputs to the neuron is assigned a weight; the weights are denoted as $w_1, w_2, \ldots, w_N$ respectively. Weights in the artificial model correspond to the synaptic connections in biological neurons. The threshold in the artificial neuron is usually represented by $\theta$, and the activation corresponding to the graded potential is given by the formula:

$$a = \left( \sum_{j=1}^{N} w_j u_j \right) + \theta \qquad (1.2.1)$$

The inputs and the weights are real values. A negative value for a weight indicates an inhibitory connection, while a positive value indicates an excitatory one. Although in biological neurons $\theta$ has a negative value, it may be assigned a positive value in artificial neuron models. If $\theta$ is positive, it is usually referred to as the bias. For mathematical convenience we will use the (+) sign in the activation formula. Sometimes, the threshold is combined for simplicity into the summation part by assuming an imaginary input $u_0 = +1$ and a connection weight $w_0 = \theta$. Hence the activation formula becomes:

$$a = \sum_{j=0}^{N} w_j u_j \qquad (1.2.2)$$
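The two activation formulas can be checked against each other numerically. The following is a minimal sketch in plain Python; the function names and the example values of the weights, inputs and threshold are hypothetical and chosen only for illustration.

```python
def activation(weights, inputs, theta):
    """Equation (1.2.1): weighted sum of the inputs plus the threshold term."""
    if len(weights) != len(inputs):
        raise ValueError("weights and inputs must have the same length")
    return sum(w * u for w, u in zip(weights, inputs)) + theta


def activation_augmented(weights, inputs, theta):
    """Equation (1.2.2): treat theta as weight w0 on a constant input u0 = +1."""
    w_aug = [theta] + list(weights)   # w0 = theta
    u_aug = [1.0] + list(inputs)      # u0 = +1
    return sum(w * u for w, u in zip(w_aug, u_aug))


# Hypothetical example values (not taken from the notes).
w = [0.5, -1.0, 2.0]   # the negative weight models an inhibitory connection
u = [1.0, 2.0, 0.5]
theta = 0.25

a1 = activation(w, u, theta)
a2 = activation_augmented(w, u, theta)
print(a1, a2)  # both forms give the same activation
```

Folding the threshold into the weight vector means a learning rule only has to handle one kind of parameter, which is presumably why the augmented form is convenient.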

The output value of the neuron is a function of its activation, in analogy to the firing frequency of biological neurons:

$$x = f(a) \qquad (1.2.3)$$

Furthermore, the vector notation

$$a = \mathbf{w}^{T}\mathbf{u} + \theta \qquad (1.2.4)$$

is useful for expressing the activation of a neuron. Here, the jth element of the input vector $\mathbf{u}$ is $u_j$ and the jth element of the weight vector $\mathbf{w}$ is $w_j$. Both of these vectors are of size N. Notice that $\mathbf{w}^{T}\mathbf{u}$ is the inner product of the vectors $\mathbf{w}$ and $\mathbf{u}$, resulting in a scalar value. The inner product is an operation defined on equal-sized vectors. When these vectors have unit length, the inner product is a measure of their similarity.

Originally, the neuron output function $f(a)$ in the McCulloch-Pitts model was proposed as a threshold function; however, linear, ramp and sigmoid functions (Figure 1.7) are also widely used output functions:

Linear:

$$f(a) = a \qquad (1.2.5)$$

Threshold:

$$f(a) = \begin{cases} 0 & a \le 0 \\ 1 & 0 < a \end{cases} \qquad (1.2.6)$$

Ramp:

$$f(a) = \begin{cases} 0 & a \le 0 \\ a/\kappa & 0 < a \le \kappa \\ 1 & \kappa < a \end{cases} \qquad (1.2.7)$$

where $\kappa > 0$ is the activation at which the ramp saturates.


Figure 1.7. Some neuron output functions (panels: threshold, ramp, linear, sigmoid)

Sigmoid:

$$f(a) = \frac{1}{1 + e^{-a}} \qquad (1.2.8)$$
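The four output functions are short enough to write out directly. The sketch below is illustrative only: the function names are chosen here, and the ramp's saturation point, called `kappa`, is an assumed parameter name with an assumed default of 1. The sigmoid is evaluated in a numerically stable form so that large negative activations do not overflow.

```python
import math


def linear(a):
    """Linear output: f(a) = a."""
    return a


def threshold(a):
    """Threshold output: 0 for a <= 0, 1 for a > 0."""
    return 1.0 if a > 0 else 0.0


def ramp(a, kappa=1.0):
    """Ramp output: 0 below zero, a/kappa up to kappa, then saturates at 1.

    kappa is an assumed name for the saturation point.
    """
    if a <= 0:
        return 0.0
    if a <= kappa:
        return a / kappa
    return 1.0


def sigmoid(a):
    """Sigmoid output 1 / (1 + exp(-a)), computed without overflow."""
    if a >= 0:
        return 1.0 / (1.0 + math.exp(-a))
    e = math.exp(a)  # safe: a < 0, so exp(a) lies in (0, 1)
    return e / (1.0 + e)


for a in (-2.0, 0.0, 0.5, 2.0):
    print(a, linear(a), threshold(a), ramp(a), sigmoid(a))
```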

Despite its simple structure, the McCulloch-Pitts neuron is a powerful computational device. McCulloch and Pitts proved that a synchronous assembly of such neurons is capable, in principle, of performing any computation that an ordinary digital computer can, though not necessarily so rapidly or conveniently [Hertz et al 91].

Example 1.1: When the threshold function is used as the neuron output function and binary input values 0 and 1 are assumed, the basic Boolean functions AND and OR of two variables, and NOT of one variable, can be implemented by choosing appropriate weights and threshold values, as shown in Figure 1.8. The first two neurons in the figure receive two binary inputs $u_1$, $u_2$ and produce the output $x(u_1, u_2)$ for the Boolean functions AND and OR respectively. The last neuron implements the NOT function.


Figure 1.8. Implementation of Boolean functions by artificial neuron (columns: Boolean function, logic gate, artificial neuron; rows: AND, $x = u_1 \wedge u_2$, weights 1 and 1, threshold $-1.5$; OR, $x = u_1 \vee u_2$, weights 1 and 1, threshold $-0.5$; NOT, $x = \bar{u}_1$, threshold $0.5$)
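The gates of Example 1.1 can be verified by enumerating their truth tables. The sketch below assumes weights of 1 and thresholds of -1.5 (AND) and -0.5 (OR), which is how the values in Figure 1.8 read. For NOT, a weight of -1 with threshold 0.5 is an assumption made here; a positive weight could not invert the input under this threshold function. The helper name `threshold_neuron` is hypothetical.

```python
def threshold_neuron(weights, theta, inputs):
    """Single neuron with threshold output: 1 if sum(w*u) + theta > 0, else 0."""
    a = sum(w * u for w, u in zip(weights, inputs)) + theta
    return 1 if a > 0 else 0


def AND(u1, u2):
    return threshold_neuron([1, 1], -1.5, [u1, u2])


def OR(u1, u2):
    return threshold_neuron([1, 1], -0.5, [u1, u2])


def NOT(u1):
    # Assumed weight -1: the activation is 0.5 for u1 = 0 and -0.5 for u1 = 1.
    return threshold_neuron([-1], 0.5, [u1])


for u1 in (0, 1):
    for u2 in (0, 1):
        print(u1, u2, "AND:", AND(u1, u2), "OR:", OR(u1, u2))
print("NOT:", NOT(0), NOT(1))
```

In each case the threshold places the decision boundary halfway between the activations that must map to 0 and those that must map to 1.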

1.3. Network of Neurons

While a single artificial neuron is not able to implement some Boolean functions, the problem is overcome by connecting the outputs of some neurons as inputs to others, thus constituting a neural network. Suppose that we have connected many of the artificial neurons introduced in Section 1.2 to form a network. In such a case there are several neurons in the system, so we assign indices to the neurons to distinguish between them. Then, to express the activation of the ith neuron, the formulas are modified as follows:

$$a_i = \left( \sum_{j=1}^{N} w_{ji} x_j \right) + \theta_i \qquad (1.3.1)$$

where $x_j$ may be either the output of a neuron, determined as

$$x_j = f_j(a_j) \qquad (1.3.2)$$

or an external input, determined as

$$x_j = u_j \qquad (1.3.3)$$


In some applications the threshold value $\theta_i$ is determined by the external inputs. In view of equation (1.3.1), it may sometimes be convenient to think of all the inputs as connected to the network only through the thresholds of some special neurons called input neurons. These simply convey the input value connected to their threshold, $\theta_j = u_j$, to their output $x_j$ through a linear output transfer function $f_j(a) = a$.

For a neural network we can define a state vector $\mathbf{x}$ in which the ith component is the output of the ith neuron, that is $x_i$. Furthermore, we define a weight matrix $\mathbf{W}$, in which the component $w_{ji}$ is the weight of the connection from neuron j to neuron i. Therefore we can represent the system as:

$$\mathbf{x} = \mathbf{f}(\mathbf{W}^{T}\mathbf{x} + \boldsymbol{\theta}) \qquad (1.3.4)$$

Here $\boldsymbol{\theta}$ is the vector whose ith component is $\theta_i$, and $\mathbf{f}$ denotes the vector function such that the function $f_i$ is applied to the ith component of the vector.

Example 1.2: A simple example often given to illustrate the behavior of a neural network is the one used to implement the XOR (exclusive OR) function. Notice that it is not possible to obtain the exclusive-or or the equivalence function by using a single neuron. However, this function can be obtained when the outputs of some neurons are connected as inputs to other neurons. Such a function can be obtained in several ways, only two of which are shown in Figure 1.9.

Figure 1.9. Two different implementations of the exclusive-or function by using artificial neurons (inputs $u_1$, $u_2$; the first network uses neurons $x_1$ to $x_5$, the second $x_1$ to $x_4$)


The second neural network of Figure 1.9 can be represented as:

where $f_1$ and $f_2$ are linear identity functions and $f_3$ and $f_4$ are threshold functions. In the case of binary input, $u_j \in \{0, 1\}$, or bipolar input, that is $u_j \in \{-1, 1\}$, all of the $f_i$ may be chosen as threshold functions. The diagonal entries of the weight matrix are zero, since the neurons do not have self-feedback in this example. The weight matrix is upper triangular, since the network is feedforward.
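As an illustration of the state equation (1.3.4), the sketch below builds a four-neuron XOR network and iterates the update synchronously. The weights and thresholds are hypothetical values chosen here so that the network computes XOR; they are not taken from Figure 1.9. Neurons 1 and 2 are input neurons with identity output and threshold $\theta_j = u_j$; neurons 3 and 4 use the threshold function.

```python
def threshold(a):
    return 1.0 if a > 0 else 0.0


def identity(a):
    return a


# W[j][i] is the weight from neuron j to neuron i (0-based indices in code).
# Hypothetical weights: neuron 3 computes AND, neuron 4 combines u1 + u2 - 2*AND.
W = [
    [0.0, 0.0, 1.0, 1.0],
    [0.0, 0.0, 1.0, 1.0],
    [0.0, 0.0, 0.0, -2.0],
    [0.0, 0.0, 0.0, 0.0],
]
F = [identity, identity, threshold, threshold]


def step(x, theta):
    """One synchronous update x <- f(W^T x + theta), as in equation (1.3.4)."""
    n = len(x)
    return [F[i](sum(W[j][i] * x[j] for j in range(n)) + theta[i]) for i in range(n)]


def xor_network(u1, u2, sweeps=3):
    """Apply the inputs through the input-neuron thresholds and let the state settle."""
    theta = [float(u1), float(u2), -1.5, -0.5]
    x = [0.0, 0.0, 0.0, 0.0]
    for _ in range(sweeps):  # three sweeps suffice: the longest path has three neurons
        x = step(x, theta)
    return int(x[3])


for u1 in (0, 1):
    for u2 in (0, 1):
        print(u1, u2, xor_network(u1, u2))
```

Because this `W` is upper triangular with a zero diagonal, the state stops changing after as many sweeps as there are neurons on the longest path, which is why a fixed number of sweeps is enough here.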

1.4. Network Architectures

Neural computing is an alternative to programmed computing, based on a mathematical model inspired by biological models. Such a computing system is made up of a number of artificial neurons and a huge number of interconnections between them. According to the structure of the connections, we identify different classes of network architectures (Figure 1.10).

Figure 1.10. a) layered feedforward neural network (input layer $u_1, \ldots, u_N$; hidden layer; output layer $x_1, \ldots, x_M$) b) nonlayered recurrent neural network (input neurons, hidden neurons, output neurons)


In feedforward neural networks, the neurons are organized in layers. The neurons in a layer get their input from the previous layer and feed their output to the next layer. In this kind of network, connections to neurons in the same or previous layers are not permitted. The last layer of neurons is called the output layer, and the layers between the input and output layers are called the hidden layers. The input layer is made up of special input neurons, which only transmit the applied external input to their outputs. If a network has only the layer of input nodes and a single layer of neurons constituting the output layer, it is called a single-layer network. If there are one or more hidden layers, such networks are called multilayer networks.

Structures in which connections to neurons of the same layer or of previous layers are allowed are called recurrent networks. For a feedforward network there always exists an assignment of indices to neurons resulting in a triangular weight matrix. Furthermore, if the diagonal entries are zero, this indicates that there is no self-feedback on the neurons. In recurrent networks, however, due to feedback, it is not possible to obtain a triangular weight matrix with any assignment of the indices.
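The statement about triangular weight matrices can be tested mechanically: an index assignment that makes the weight matrix strictly upper triangular exists exactly when the connection graph has no cycles. The sketch below uses a standard topological sort (Kahn's algorithm), which is a technique substituted here for illustration and not something the notes prescribe. The function name and the example matrices are hypothetical, and `W[j][i]` is read as the weight from neuron j to neuron i.

```python
from collections import deque


def feedforward_order(W):
    """Return a neuron ordering that makes W strictly upper triangular, or None.

    None means the network has feedback (a cycle or a self-connection).
    """
    n = len(W)
    # Number of incoming connections of each neuron.
    indegree = [sum(1 for j in range(n) if W[j][i] != 0) for i in range(n)]
    ready = deque(i for i in range(n) if indegree[i] == 0)
    order = []
    while ready:
        j = ready.popleft()
        order.append(j)
        for i in range(n):
            if W[j][i] != 0:
                indegree[i] -= 1
                if indegree[i] == 0:
                    ready.append(i)
    return order if len(order) == n else None


# Hypothetical feedforward network whose indices were assigned "backwards".
W_ff = [
    [0, 0, 0],
    [1, 0, 0],
    [1, 1, 0],
]
# Hypothetical recurrent network: neurons 0 and 1 feed each other.
W_rec = [
    [0, 1],
    [1, 0],
]

order = feedforward_order(W_ff)
# Re-index the neurons according to the ordering that was found.
W_sorted = [[W_ff[a][b] for b in order] for a in order]
print(order, W_sorted)
print(feedforward_order(W_rec))
```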

EE543 LECTURE NOTES . METU EEE . ANKARA

14

Anda mungkin juga menyukai

- Excitable Cells: Monographs in Modern Biology for Upper School and University CoursesDari EverandExcitable Cells: Monographs in Modern Biology for Upper School and University CoursesBelum ada peringkat

- Ch01p Biolocigal Artificial Neuron 2016-10-05Dokumen44 halamanCh01p Biolocigal Artificial Neuron 2016-10-05talkanat1Belum ada peringkat

- Theory: History of EEGDokumen7 halamanTheory: History of EEGdipakBelum ada peringkat

- Brains Bodies Behaviour (Psychology)Dokumen18 halamanBrains Bodies Behaviour (Psychology)Cassia LmtBelum ada peringkat

- Gen BioDokumen86 halamanGen BioPaul MagbagoBelum ada peringkat

- Module 3.1 Biology, Brain N Nervous SystemDokumen30 halamanModule 3.1 Biology, Brain N Nervous Systemamnarosli05Belum ada peringkat

- Biofact Sheet Nerves SynapsesDokumen4 halamanBiofact Sheet Nerves SynapsesJonMortBelum ada peringkat

- Nervous SystemDokumen20 halamanNervous SystemIsheba Warren100% (3)

- From Biological To Artificial Neuron ModelDokumen46 halamanFrom Biological To Artificial Neuron ModelskBelum ada peringkat

- Unit 1 BiopsychologyDokumen51 halamanUnit 1 BiopsychologyWriternal CommunityBelum ada peringkat

- NeurophysicsDokumen8 halamanNeurophysicsMakavana SahilBelum ada peringkat

- NervousDokumen13 halamanNervousJoan GalarceBelum ada peringkat

- BIO 361 Neural Integration LabDokumen5 halamanBIO 361 Neural Integration LabnwandspBelum ada peringkat

- Neurons. The Building Blocks of Nervous SystemDokumen3 halamanNeurons. The Building Blocks of Nervous SystemGinelQuenna De VillaBelum ada peringkat

- Unit-9 NERVOUS SYSTEMDokumen37 halamanUnit-9 NERVOUS SYSTEMAmrita RanaBelum ada peringkat

- NeurophysiologyDokumen31 halamanNeurophysiologyChezkah Nicole MirandaBelum ada peringkat

- DONE BIO MOD Nervous System PDFDokumen9 halamanDONE BIO MOD Nervous System PDFCristie SumbillaBelum ada peringkat

- Basic and Applied Studies of Spontaneous Expression Using The Facial Action Coding System (FACS)Dokumen21 halamanBasic and Applied Studies of Spontaneous Expression Using The Facial Action Coding System (FACS)nachocatalanBelum ada peringkat

- AP Psychology Module 9Dokumen29 halamanAP Psychology Module 9Klein ZhangBelum ada peringkat

- CH 4 - CoordinationDokumen23 halamanCH 4 - CoordinationnawarakanBelum ada peringkat

- Architecture of Neural NWDokumen79 halamanArchitecture of Neural NWapi-3798769Belum ada peringkat

- Group 9 Neuron Structure & FunctionDokumen14 halamanGroup 9 Neuron Structure & FunctionMosesBelum ada peringkat

- 10 Neuro PDFDokumen16 halaman10 Neuro PDFKamoKamoBelum ada peringkat

- Neurons and The Action PotentialDokumen3 halamanNeurons and The Action PotentialnesumaBelum ada peringkat

- Biological Bases Study GuideDokumen13 halamanBiological Bases Study Guideapi-421695293Belum ada peringkat

- Physiology of Nerve ImpulseDokumen7 halamanPhysiology of Nerve ImpulseBryan tsepang Nare100% (1)

- Lecture 03Dokumen25 halamanLecture 03hafsahaziz762Belum ada peringkat

- End Sem Report+ Final1 DocumentsDokumen27 halamanEnd Sem Report+ Final1 Documentsসাতটি তারার তিমিরBelum ada peringkat

- UNIT-1 Neural Networks-1 What Is Artificial Neural Network?Dokumen59 halamanUNIT-1 Neural Networks-1 What Is Artificial Neural Network?Ram Prasad BBelum ada peringkat

- 10 NeuroDokumen16 halaman10 NeuroAriol ZereBelum ada peringkat

- 03 - The Third-Person View of The MindDokumen22 halaman03 - The Third-Person View of The MindLuis PuenteBelum ada peringkat

- Biological Basis of BehaviorDokumen44 halamanBiological Basis of BehaviorAarohi PatilBelum ada peringkat

- End - Sem - For MergeDokumen42 halamanEnd - Sem - For Mergeমেঘে ঢাকা তারাBelum ada peringkat

- Intro Animal Physiology Ch-2.1Dokumen97 halamanIntro Animal Physiology Ch-2.1Jojo MendozaBelum ada peringkat

- Nerve Muscle PhysiologyDokumen79 halamanNerve Muscle Physiologyroychanchal562100% (2)

- Notes - Nervous SystemDokumen8 halamanNotes - Nervous Systemdarkadain100% (1)

- Neuron:: Fundamentals of Psychology Fundamentals of PsychologyDokumen3 halamanNeuron:: Fundamentals of Psychology Fundamentals of PsychologyChaudhary Muhammad Talha NaseerBelum ada peringkat

- What Is This Unit About?Dokumen6 halamanWhat Is This Unit About?Toby JackBelum ada peringkat

- 23 Nervous System: Encounter The PhenomenonDokumen5 halaman23 Nervous System: Encounter The PhenomenonJasmine AlsheikhBelum ada peringkat

- The Processing & Information at Higher Levels: Tutik ErmawatiDokumen19 halamanThe Processing & Information at Higher Levels: Tutik ErmawatiApsopela SandiveraBelum ada peringkat

- Lab Exercise 3Dokumen3 halamanLab Exercise 3amarBelum ada peringkat

- BIO 201 Chapter 12, Part 1 LectureDokumen35 halamanBIO 201 Chapter 12, Part 1 LectureDrPearcyBelum ada peringkat

- Anatomy and Physiology of The NerveDokumen18 halamanAnatomy and Physiology of The NervejudssalangsangBelum ada peringkat

- Lecture 2Dokumen5 halamanLecture 2Mahreen NoorBelum ada peringkat

- Anatomy and Physiology of NeuronsDokumen4 halamanAnatomy and Physiology of NeuronsVan Jasper Pimentel BautistaBelum ada peringkat

- Drugs and The BrainDokumen7 halamanDrugs and The Brainebola123Belum ada peringkat

- Impulses and Conduct Them Along The Membrane. ... The Origin of The Membrane Voltage Is TheDokumen3 halamanImpulses and Conduct Them Along The Membrane. ... The Origin of The Membrane Voltage Is TheHAZELANGELINE SANTOSBelum ada peringkat

- Functions of Nerve CellsDokumen3 halamanFunctions of Nerve CellsSucheta Ghosh ChowdhuriBelum ada peringkat

- Neuronal CommunicationDokumen9 halamanNeuronal CommunicationNatalia RamirezBelum ada peringkat

- NeuronDokumen23 halamanNeuronUnggul YudhaBelum ada peringkat

- Week 10 BioDokumen7 halamanWeek 10 BioTaze UtoroBelum ada peringkat

- Physiology Unit 4 - 231102 - 212048Dokumen23 halamanPhysiology Unit 4 - 231102 - 212048SanchezBelum ada peringkat

- Nervous System Review Answer KeyDokumen18 halamanNervous System Review Answer Keydanieljohnarboleda67% (3)

- Neurons and Neuroglia - PPTX 2nd LecDokumen23 halamanNeurons and Neuroglia - PPTX 2nd Leczaraali2405Belum ada peringkat

- Nervous CommunicationDokumen13 halamanNervous CommunicationWang PengfeiBelum ada peringkat

- Nervous System PowerpointDokumen39 halamanNervous System PowerpointManveer SidhuBelum ada peringkat

- Analzying Sensory Somatic Responces - System Reinforcemnt Worksheet1Dokumen2 halamanAnalzying Sensory Somatic Responces - System Reinforcemnt Worksheet1Creative DestinyBelum ada peringkat

- Analzying Sensory Somatic Responces - System Reinforcemnt Worksheet1Dokumen2 halamanAnalzying Sensory Somatic Responces - System Reinforcemnt Worksheet1Phoebe Sudweste QuitanegBelum ada peringkat

- The Nervous System and Sense OrgansDokumen75 halamanThe Nervous System and Sense OrgansMik MiczBelum ada peringkat

- 11 - Histology Lecture - Structure of Nervous TissueDokumen50 halaman11 - Histology Lecture - Structure of Nervous TissueAMIRA HELAYEL100% (1)

- 4 2-PharmacologyDokumen33 halaman4 2-PharmacologyAmiel GarciaBelum ada peringkat

- GCSE Psychology Topic A How Do We See Our World?Dokumen28 halamanGCSE Psychology Topic A How Do We See Our World?Ringo ChuBelum ada peringkat

- Chapter 7 - NERVOUS SYSTEM PDFDokumen58 halamanChapter 7 - NERVOUS SYSTEM PDFMary LimlinganBelum ada peringkat

- Neurons and Its FunctionDokumen4 halamanNeurons and Its FunctionKristol MyersBelum ada peringkat

- Neuronal CommunicationDokumen9 halamanNeuronal CommunicationNatalia RamirezBelum ada peringkat

- 9.00 Exam 1 Notes: KOSSLYN CHAPTER 2 - The Biology of Mind and Behavior: The Brain in ActionDokumen12 halaman9.00 Exam 1 Notes: KOSSLYN CHAPTER 2 - The Biology of Mind and Behavior: The Brain in ActiondreamanujBelum ada peringkat

- Psychology and Life 20th Edition Gerrig Test BankDokumen50 halamanPsychology and Life 20th Edition Gerrig Test BankquwirBelum ada peringkat

- NervousDokumen30 halamanNervousRenjith Moorikkaran MBelum ada peringkat

- Brain Mnemonic SDokumen36 halamanBrain Mnemonic SLouie OkayBelum ada peringkat
