
This article was downloaded on: 25 June 2011

Access details: Free Access

Publisher Taylor & Francis

Informa Ltd Registered in England and Wales Registered Number: 1072954 Registered office: Mortimer House, 37-41 Mortimer Street, London W1T 3JH, UK

Sequential Analysis

Publication details, including instructions for authors and subscription information:

http://www.informaworld.com/smpp/title~content=t713597296

Bayesian Detection of Changes of a Poisson Process Monitored at Discrete Time Points Where the Arrival Rates are Unknown

Marlo Brown, Department of Mathematics, Niagara University, New York, USA

To cite this Article: Brown, Marlo (2008) 'Bayesian Detection of Changes of a Poisson Process Monitored at Discrete Time Points Where the Arrival Rates are Unknown', Sequential Analysis, 27: 1, 68-77

To link to this Article: DOI: 10.1080/07474940701801994

URL: http://dx.doi.org/10.1080/07474940701801994

Full terms and conditions of use: http://www.informaworld.com/terms-and-conditions-of-access.pdf

This article may be used for research, teaching and private study purposes. Any substantial or

systematic reproduction, re-distribution, re-selling, loan or sub-licensing, systematic supply or

distribution in any form to anyone is expressly forbidden.

The publisher does not give any warranty express or implied or make any representation that the contents

will be complete or accurate or up to date. The accuracy of any instructions, formulae and drug doses

should be independently verified with primary sources. The publisher shall not be liable for any loss,

actions, claims, proceedings, demand or costs or damages whatsoever or howsoever caused arising directly

or indirectly in connection with or arising out of the use of this material.

Sequential Analysis, 27: 68-77, 2008

Copyright Taylor & Francis Group, LLC

ISSN: 0747-4946 print/1532-4176 online

DOI: 10.1080/07474940701801994


Abstract: We look at a Poisson process where the arrival rate changes at some unknown

integer. At each integer, we count the number of arrivals that happened in that time interval.

We assume that the arrival rates before and after the change are unknown. For a loss

function consisting of the cost of late detection and a penalty for early stopping, we develop,

using dynamic programming, the one- and two-step look-ahead Bayesian stopping rules. We

provide some numerical results to illustrate the effectiveness of the detection procedures.

Keywords: Bayesian stopping rules; Change-point detection; Dynamic programming;

Poisson processes; Risk; Unknown arrival rates.

Subject Classifications: 62L15; 60G40.

1. INTRODUCTION

Detection of changes in the distribution of random variables has become very

important in many aspects of life today. When there is an increase in the arrival

rates of patients coming to a hospital, it is important to detect this change as soon

as possible. This could be due to environmental factors or other issues. This is also

important in industry, where quality control depends upon being able to detect

changes in the process mean as soon as possible.

There are many published papers dealing with the detection of changes in the distribution of data. Shewhart's (1926) control charts for means and standard deviations, Page's (1954) continuous inspection schemes, and Page's (1962) cumulative sum schemes are all process control procedures designed to detect changes in the distributions of sequences of observed data. Chernoff and Zacks (1964), Hinkley and Hinkley (1970), Kander and Zacks (1966), and Smith (1975) investigated Bayesian detection procedures for fixed sample sizes. See Zacks (1983) for a survey of papers on change-point problems. Shiryaev (1978) studied optimal Bayesian procedures for detecting changes when the process is stopped as soon as a change is detected. Zacks and Barzily (1981) extended the work of Shiryaev to the case of Bernoulli sequences. Optimal stopping rules were described based on dynamic programming procedures. In general, finding optimal stopping rules is quite difficult. Zacks and Barzily gave explicit formulas for finding one- and two-step-ahead suboptimal procedures.

Received July 5, 2005, Revised January 24, 2007, February 13, 2007, August 9, 2007, Accepted November 6, 2007

Recommended by N. Mukhopadhyay

Address correspondence to Marlo Brown, Department of Mathematics, Niagara University, NY 14109, USA; Fax: 716-286-8215; E-mail: mbrown@niagara.edu

Several studies were published recently on detecting changes in the intensity of

a homogeneous ordinary Poisson process. Among these studies, we mention Peskir

and Shiryaev (2002), Herberts and Jensen (2004), and Brown and Zacks (2006a).

These papers dealt with a Poisson process that is monitored continuously.

Brown and Zacks (2006b) also studied a Poisson process that is monitored only at discrete time points. In that paper, we look at the sequence of random variables $X_i$, where $X_i$ is the number of arrivals that occur in the time interval $(i-1, i]$. Thus, we see only the number of arrivals that happen in each time interval, not exactly where the arrivals occurred within that time interval. In all of the above papers, it was assumed that the arrival rates both before and after the change are known. In the present paper, we assume that the arrival rates before and after the change are unknown and that the change is positive. We assume that the change point $\tau$ is an unknown integer. We use a Bayesian approach. We put Shiryaev's (1978) geometric prior distribution on the change point $\tau$ and a uniform prior distribution over the region $0 < \lambda_1 < \lambda_2 < M$ on the two arrival rates $\lambda_1$ and $\lambda_2$.

In Section 2, we discuss the posterior process of calculating the probability

that a change has already occurred by time n. In Section 3, we discuss the optimal

stopping rule based on dynamic programming procedures. We assume there is a cost

associated with stopping early and a cost associated with late detection. We develop

optimal stopping rules that will minimize the expected cost. In Section 4, we give

an explicit formulation of the two-step-ahead stopping rule. We conclude with a

numerical example showing how our method works.

2. THE BAYESIAN FRAMEWORK

We have a Poisson process, but we are only able to observe this process at fixed discrete time points. Let $X_i$ be the number of arrivals in the interval $(i-1, i]$. We denote by $\mathcal{F}_n$ the $\sigma$-field generated by $\{X_1, \ldots, X_n\}$. At the end of some unknown interval indexed by an integer $\tau$, the rate of arrivals increases from $\lambda_1$ to $\lambda_2$. We assume the arrival rates before and after the change, namely $\lambda_1$ and $\lambda_2$, are unknown, as is the change point $\tau$. Our goal is to find a detection procedure that will detect the change point $\tau$ as soon as possible. The event $\{\tau = 0\}$ represents the case where the change happens prior to the first observed interval. In this case all the observations are taken from a process with arrival rate $\lambda_2$. The event $\{\tau = r\}$ means that the change happens at the end of the $r$th interval. Define $T_r = \sum_{i=1}^{r} X_i$ as the total number of arrivals up to time $r$ and $T_{n-r} = \sum_{i=r+1}^{n} X_i$ as the total number of arrivals after time $r$, so that the post-change total equals $T_n - T_r$. By convention $T_0 \equiv 0$, and the post-change total is $0$ when $r = n$. Thus, when $\tau = r$, $T_r$ is Poisson$(r\lambda_1)$ and $T_{n-r}$ is Poisson$((n-r)\lambda_2)$. If $(\lambda_1, \lambda_2)$ are both known, the likelihood of $\tau$ is given by

$$ L(\tau \mid \mathcal{F}_n) = \sum_{r=0}^{n-1} I(\tau = r)\, \lambda_1^{T_r} \lambda_2^{T_{n-r}} e^{-\lambda_1 r - \lambda_2 (n-r)} + I(\tau \ge n)\, e^{-\lambda_1 n} \lambda_1^{T_n}. \tag{2.1} $$

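When $(\lambda_1, \lambda_2)$ are unknown, the profile likelihood function of $\tau$ is the expected value of (2.1) with respect to the prior distribution of $(\lambda_1, \lambda_2)$. We assume a priori that $(\lambda_1, \lambda_2)$ is uniformly distributed over the region $0 < \lambda_1 < \lambda_2 < M$. That is, we know that when the change happens the arrival rate increases, and we also know that the arrival rates are bounded by $M$. Thus the profile likelihood function of $\tau$, given $\mathcal{F}_n$, omitting the constant prior density (which cancels in the posterior), is

$$ \begin{aligned} L^*(\tau \mid \mathcal{F}_n) ={}& I(\tau = 0) \int_0^M \!\! \int_0^{\lambda_2} e^{-\lambda_2 n} \lambda_2^{T_n} \, d\lambda_1 \, d\lambda_2 \\ &+ \sum_{r=1}^{n-1} I(\tau = r) \int_0^M \!\! \int_0^{\lambda_2} \lambda_1^{T_r} \lambda_2^{T_{n-r}} e^{-\lambda_1 r - \lambda_2 (n-r)} \, d\lambda_1 \, d\lambda_2 \\ &+ I(\tau \ge n) \int_0^M \!\! \int_0^{\lambda_2} e^{-\lambda_1 n} \lambda_1^{T_n} \, d\lambda_1 \, d\lambda_2. \end{aligned} \tag{2.2} $$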

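For $r = 0$, let

$$ L_0 = \int_0^M \!\! \int_0^{\lambda_2} e^{-\lambda_2 n} \lambda_2^{T_n} \, d\lambda_1 \, d\lambda_2 = \frac{(T_n + 1)!}{n^{T_n + 2}} \, P(T_n + 1; Mn), \tag{2.3} $$

where $P(x; \lambda)$ is the survival function of the Poisson distribution with parameter $\lambda$, that is, $P(x; \lambda) = \Pr\{N > x\}$ for $N \sim \text{Poisson}(\lambda)$.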

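For $1 \le r \le n - 1$, let

$$ \begin{aligned} L_r &= \int_0^M \lambda_2^{T_{n-r}} e^{-\lambda_2 (n-r)} \int_0^{\lambda_2} \lambda_1^{T_r} e^{-\lambda_1 r} \, d\lambda_1 \, d\lambda_2 \\ &= \frac{T_r!}{r^{T_r + 1}} \int_0^M \lambda_2^{T_{n-r}} e^{-\lambda_2 (n-r)} P(T_r; r\lambda_2) \, d\lambda_2 \\ &= T_r! \sum_{j=0}^{\infty} \frac{r^j \, (T_n + j + 1)!}{(T_r + j + 1)! \; n^{T_n + j + 2}} \, P(T_n + j + 1; Mn). \end{aligned} \tag{2.4} $$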

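Finally, let

$$ L_n = \int_0^M \!\! \int_{\lambda_1}^{M} e^{-\lambda_1 n} \lambda_1^{T_n} \, d\lambda_2 \, d\lambda_1 = \frac{T_n!}{n^{T_n + 1}} \left[ M \, P(T_n; Mn) - \frac{T_n + 1}{n} \, P(T_n + 1; Mn) \right]. \tag{2.5} $$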
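As a sanity check on the closed forms (2.3) and (2.5), the following sketch compares them with a midpoint-rule evaluation of the defining integrals. It is illustrative only: the helper names (`poisson_sf`, `L0_closed`, `Ln_closed`, `midpoint`) and the test values $n = 3$, $T_n = 4$, $M = 3$ are assumptions chosen for the example and do not come from the paper.

```python
import math

def poisson_sf(x, lam):
    """Survival function P(N > x) for N ~ Poisson(lam), x a nonnegative integer."""
    cdf = sum(math.exp(-lam) * lam**k / math.factorial(k) for k in range(x + 1))
    return 1.0 - cdf

def L0_closed(n, Tn, M):
    """Closed form (2.3): change before the first observed interval."""
    return math.factorial(Tn + 1) / n**(Tn + 2) * poisson_sf(Tn + 1, M * n)

def Ln_closed(n, Tn, M):
    """Closed form (2.5): no change by time n."""
    bracket = M * poisson_sf(Tn, M * n) - (Tn + 1) / n * poisson_sf(Tn + 1, M * n)
    return math.factorial(Tn) / n**(Tn + 1) * bracket

def midpoint(f, a, b, steps=20000):
    """Midpoint-rule approximation of the integral of f over [a, b]."""
    h = (b - a) / steps
    return h * sum(f(a + (i + 0.5) * h) for i in range(steps))

# Hypothetical test values (not from the paper).
n, Tn, M = 3, 4, 3.0

# The inner integrals are elementary:
#   int_0^{l2} d(l1) = l2   and   int_{l1}^{M} d(l2) = M - l1.
L0_num = midpoint(lambda l2: l2 ** (Tn + 1) * math.exp(-l2 * n), 0.0, M)
Ln_num = midpoint(lambda l1: (M - l1) * l1 ** Tn * math.exp(-l1 * n), 0.0, M)

print(L0_closed(n, Tn, M), L0_num)
print(Ln_closed(n, Tn, M), Ln_num)
```

The two numbers on each printed line should agree to several decimal places, which is a quick way to catch a misplaced exponent or factorial when transcribing the formulas.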

We put Shiryaev's geometric prior distribution $h$ on the change point $\tau$,

$$ h(\tau = i) = \begin{cases} \pi & \text{for } i = 0, \\ (1 - \pi)\, p\, (1 - p)^{i-1} & \text{for } i \ge 1. \end{cases} \tag{2.6} $$
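A small check of the prior may help when reading the later formulas: under (2.6) the prior mass of "no change before time $n$" should be $(1-\pi)(1-p)^{n-1}$. The sketch below verifies this numerically. The names `prior`, `pi0` and `p` are illustrative assumptions (with `pi0` standing for the prior mass at $\tau = 0$ and `p` for the geometric parameter), and the values $0.01$ are the ones quoted in the numerical example.

```python
def prior(i, pi0, p):
    """Prior mass h(tau = i) as in (2.6)."""
    return pi0 if i == 0 else (1 - pi0) * p * (1 - p) ** (i - 1)

pi0, p, n = 0.01, 0.01, 10

head = sum(prior(i, pi0, p) for i in range(n))   # P(tau < n)
tail = (1 - pi0) * (1 - p) ** (n - 1)            # claimed P(tau >= n)

# The two pieces should add up to one.
print(abs(head + tail - 1.0) < 1e-12)
```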


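Thus, weighting each term of (2.2) by its prior mass from (2.6), with $h(\tau \ge n) = (1-\pi)(1-p)^{n-1}$, we obtain that the posterior probability that there has been a change by time $n$ is

$$ \pi_n = \frac{\pi L_0 + (1 - \pi)\, p \sum_{r=1}^{n-1} (1 - p)^{r-1} L_r}{\pi L_0 + (1 - \pi)\, p \sum_{r=1}^{n-1} (1 - p)^{r-1} L_r + (1 - \pi)(1 - p)^{n-1} L_n}. \tag{2.7} $$

Let $\bar\pi_n = 1 - \pi_n$ be the probability that there has not been a change by time $n$.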
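To make the posterior computation concrete, here is a minimal sketch that evaluates the probability of no change from a list of interval counts. It is written under stated assumptions rather than as the author's implementation: the function names are hypothetical, the weights are the prior masses from (2.6), $L_0$ and $L_n$ use the closed forms (2.3) and (2.5), and $L_r$ is evaluated from the single-integral form in (2.4) by a midpoint rule instead of the infinite series. It is not claimed to reproduce the values in Table 1.

```python
import math

def poisson_sf(x, lam):
    """P(N > x) for N ~ Poisson(lam), x a nonnegative integer."""
    cdf = sum(math.exp(-lam) * lam**k / math.factorial(k) for k in range(x + 1))
    return 1.0 - cdf

def midpoint(f, a, b, steps=4000):
    """Midpoint-rule approximation of the integral of f over [a, b]."""
    h = (b - a) / steps
    return h * sum(f(a + (i + 0.5) * h) for i in range(steps))

def profile_likelihoods(x, M):
    """Return [L_0, ..., L_n] for counts x = [X_1, ..., X_n]."""
    n = len(x)
    T = [0]
    for xi in x:
        T.append(T[-1] + xi)               # T[r] = X_1 + ... + X_r
    Tn = T[n]
    # (2.3): change before the first interval.
    L = [math.factorial(Tn + 1) / n**(Tn + 2) * poisson_sf(Tn + 1, M * n)]
    for r in range(1, n):
        # Middle expression of (2.4): inner integral in closed form,
        # outer integral over lambda_2 by quadrature.
        coef = math.factorial(T[r]) / r**(T[r] + 1)
        after = Tn - T[r]                  # arrivals after time r
        L.append(coef * midpoint(
            lambda l2: l2**after * math.exp(-l2 * (n - r)) * poisson_sf(T[r], r * l2),
            0.0, M))
    # (2.5): no change by time n.
    bracket = M * poisson_sf(Tn, M * n) - (Tn + 1) / n * poisson_sf(Tn + 1, M * n)
    L.append(math.factorial(Tn) / n**(Tn + 1) * bracket)
    return L

def posterior_no_change(x, M, pi0, p):
    """Posterior probability that tau >= n given the counts x."""
    n = len(x)
    L = profile_likelihoods(x, M)
    weights = [pi0] + [(1 - pi0) * p * (1 - p)**(r - 1) for r in range(1, n)]
    changed = sum(w * l for w, l in zip(weights, L[:n]))
    unchanged = (1 - pi0) * (1 - p)**(n - 1) * L[n]
    return unchanged / (changed + unchanged)

# Hypothetical data: a steady stream versus a burst in the last two intervals.
steady = posterior_no_change([1, 1, 1, 1, 1, 1], 3.0, 0.01, 0.01)
burst = posterior_no_change([1, 1, 1, 1, 6, 7], 3.0, 0.01, 0.01)
print(steady, burst)
```

In this usage example the burst in the last two intervals should pull the no-change probability well below the value obtained for the steady counts, which is the qualitative behavior the detection rule relies on.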

3. OPTIMAL STOPPING RULE

In this section, we find an optimal stopping rule. Suppose the cost of stopping early is $c_1$ loss units and the cost per time unit of stopping late is $c_2$ loss units. We want to find a stopping rule that will minimize the expected cost. We assume without loss of generality that $c_1 = 1$ and that $c_2 = c$ is the relative cost of stopping late. Let $R_n$ be the risk (or minimal expected loss) at time $n$. Therefore,

$$ R_n(\mathcal{F}_n) = \min\big( \bar\pi_n, \; c\,\pi_n + E(R_{n+1} \mid \mathcal{F}_n) \big). \tag{3.1} $$

The stopping rule, then, would be to stop sampling when the risk of stopping is smaller than the risk of continuing. According to (3.1), this rule is equivalent to saying we stop sampling at the first $n$ such that

$$ \bar\pi_n < c\,\pi_n + E(R_{n+1} \mid \mathcal{F}_n). \tag{3.2} $$

In order to calculate $R_n$, we use the principle of dynamic programming. Suppose we must stop after $n^*$ observations if we haven't stopped before. Let $R_n^{(j)}$ be the risk at time $n$ when at most $j$ more observations are allowed. When $j = 0$, $n^* = n$. Therefore at time $n$ we must stop and our risk is

$$ R_n^{(0)}(\mathcal{F}_n) = \bar\pi_n. \tag{3.3} $$

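In general, for $j \ge 1$, we have a choice: we can stop and say that a change has occurred, or we can continue and take another observation. If we decide to continue, our risk is $\tilde R_n^{(j)} = c\,\pi_n + E\{R_{n+1}^{(j-1)} \mid \mathcal{F}_n\}$. Therefore, our risk at time $n$ is $R_n^{(j)} = \min(\bar\pi_n, \tilde R_n^{(j)})$. Writing $[x]^- = \min(x, 0)$, we can further express this as

$$ R_n^{(j)} = \bar\pi_n + \big[ \tilde R_n^{(j)} - \bar\pi_n \big]^- = \bar\pi_n + \big[ c - \bar\pi_n (c + 1) + E\{ R_{n+1}^{(j-1)} \mid \mathcal{F}_n \} \big]^-. \tag{3.4} $$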

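Theorem 3.1. The sequence $\{(\bar\pi_n, \mathcal{F}_n), \; n \ge 1\}$ is a supermartingale.

Proof. Taking the conditional expectation of $\pi_{n+1}$ given the $\sigma$-field generated by the first $n$ observations, we obtain

$$ \begin{aligned} E(\pi_{n+1} \mid \mathcal{F}_n) &= E\big( E( I(\tau < n + 1) \mid \mathcal{F}_{n+1} ) \mid \mathcal{F}_n \big) \\ &= E\big( E( I(\tau < n) \mid \mathcal{F}_{n+1} ) \mid \mathcal{F}_n \big) + E\big( E( I(\tau = n) \mid \mathcal{F}_{n+1} ) \mid \mathcal{F}_n \big) \\ &= \pi_n + p\,\bar\pi_n. \end{aligned} \tag{3.5} $$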


Therefore,

$$ E\{ \bar\pi_{n+1} \mid \mathcal{F}_n \} = (1 - p)\, \bar\pi_n < \bar\pi_n \quad \text{a.s.} \tag{3.6} $$

Accordingly, the sequence $\{\bar\pi_n, \; n \ge 0\}$ is a supermartingale.

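Corollary 3.1.

$$ \lim_{n \to \infty} \bar\pi_n = 0 \quad \text{a.s.} \tag{3.7} $$

Proof. Taking expectations in equation (3.6),

$$ E\{ \bar\pi_{n+1} \} = (1 - p)\, E\{ \bar\pi_n \} = (1 - p)^n (1 - \pi). \tag{3.8} $$

Thus, $\limsup_{n \to \infty} E(\bar\pi_n) = 0$. Since $\bar\pi_n \ge 0$ with probability 1 for all $n$, and a nonnegative supermartingale converges almost surely, we obtain (3.7).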

Thus, the one-step look-ahead procedure states that we stop sampling and declare that a change has happened at the first $n$ such that

$$ \bar\pi_n < q^* = \frac{c}{c + p}. \tag{3.9} $$
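The threshold can be traced back to (3.2) in a few lines. The derivation below is a sketch in the notation assumed in this text, with $p$ denoting the geometric parameter of the prior and $q^*$ the one-step boundary: when only one more observation is allowed, $R_{n+1}^{(0)} = \bar\pi_{n+1}$, and (3.6) gives its conditional expectation.

```latex
\begin{align*}
\bar\pi_n &< c\,\pi_n + E\{\bar\pi_{n+1} \mid \mathcal{F}_n\}
           = c\,(1 - \bar\pi_n) + (1 - p)\,\bar\pi_n \\
\iff\quad \bar\pi_n\,(c + p) &< c \\
\iff\quad \bar\pi_n &< \frac{c}{c + p} = q^{*}.
\end{align*}
```

For instance, with $c = 0.06$ and $p = 0.01$ this gives $q^* = 0.06 / 0.07 \approx 0.8571$, the value used in the numerical example.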

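Define $M_n^{(j)} = E\{ R_{n+1}^{(j)} - \bar\pi_{n+1} \mid \mathcal{F}_n \}$ for $j \ge 1$, and $M_n^{(0)} \equiv 0$. Thus, by (3.4) and (3.6), the functions $M_n^{(j)}$ satisfy the recursive relationship

$$ M_n^{(j)} = E\Big\{ \big[ c - \bar\pi_{n+1} (c + p) + M_{n+1}^{(j-1)} \big]^- \,\Big|\, \mathcal{F}_n \Big\}. \tag{3.10} $$

Hence, the risk can be written in terms of these functions, namely,

$$ R_n^{(j)} = \bar\pi_n + \big[ c - \bar\pi_n (c + p) + M_n^{(j-1)} \big]^-. \tag{3.11} $$

We then make the decision to stop when $c - \bar\pi_n (c + p) + M_n^{(j-1)} > 0$, which is equivalent to stopping when $\bar\pi_n < q^* + \frac{M_n^{(j-1)}}{c + p}$. We can define the $j$th-step-ahead boundary to be $l_n^{(j)} = \max\big\{ 0, \; q^* + \frac{M_n^{(j-1)}}{c + p} \big\}$.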

Lemma 3.1. $\lim_{j \to \infty} l_n^{(j)} = l_n$ exists. That is, there exists an optimal stopping boundary.

Proof. By induction one can show that the $M_n^{(j)}$ are decreasing in $j$. Thus, the $l_n^{(j)}$ are decreasing functions of $j$. Also, $l_n^{(j)} \ge 0$ for all $j$. By the monotone convergence theorem, the limit exists.

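Lemma 3.2. $\lim_{n \to \infty} l_n^{(j)} = q^*$.

Proof. Let

$$ I = \big\{ i : c - \bar\pi_{n+1}(\mathbf{X}_n, i)(c + p) > 0 \big\}. \tag{3.12} $$

Therefore, if $i \in I$, then $\bar\pi_{n+1} < q^*$. Since by Corollary 3.1, $\bar\pi_n \to 0$,

$$ \lim_{n \to \infty} P\big\{ i : \bar\pi_{n+1}(\mathbf{X}_n, i) < q^* \big\} = 1. \tag{3.13} $$

Therefore, $\lim_{n \to \infty} M_n^{(1)} = 0$. Thus, $\lim_{n \to \infty} l_n^{(2)} = q^*$. By induction, we can show this for all $j$.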


4. EXPLICIT FORMULATION OF THE TWO-STEP-AHEAD BOUNDARY

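In this section, we evaluate the two-step-ahead boundary. Substituting $j = 2$ into equation (3.11), we obtain

$$ R_n^{(2)} = \bar\pi_n + \big[ c - \bar\pi_n (c + p) + M_n^{(1)} \big]^-. \tag{4.1} $$

To evaluate the two-step-ahead risk, we find an expression for $M_n^{(1)}$. First, we express $\bar\pi_{n+1}$ recursively as a function of $\bar\pi_n$:

$$ \bar\pi_{n+1} = \bar\pi_n (1 - p)\, V_n\, \frac{D_n(\mathcal{F}_n)}{D_{n+1}(\mathcal{F}_{n+1})}, \tag{4.2} $$

where $D_n(\mathcal{F}_n)$ denotes the denominator of (2.7) and, according to (2.5),

$$ V_n = \frac{L_{n+1}^{(n+1)}}{L_n^{(n)}}, \tag{4.3} $$

and $L_r^{(n)}$ is defined as $L_r$ when the likelihood is being calculated with respect to $\mathcal{F}_n$, the $\sigma$-field generated by the first $n$ observations. According to (3.10),

$$ M_n^{(1)} = E\Big\{ \big[ c - \bar\pi_{n+1} (c + p) \big]^- \,\Big|\, \mathcal{F}_n \Big\}. \tag{4.4} $$

We only need to take the expectation over those $X_{n+1}$ for which $\bar\pi_{n+1} > q^*$.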

Lemma 4.1. If $X_{n+1} \ge M$, then $\bar\pi_{n+1} < \bar\pi_n$.

Proof. Let $X_{n+1} = m \ge M$. Let $W_r = L_r^{(n+1)} / L_r^{(n)}$. For $r = 0$,

$$ W_0 = \frac{(T_n + m + 1)! \; n^{T_n + 2} \; P(T_n + m + 1; M(n+1))}{(T_n + 1)! \; (n+1)^{T_n + m + 2} \; P(T_n + 1; Mn)}. \tag{4.5} $$

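If we divide equation (4.5) by $V_n$ given in (4.3), we obtain $W_0 / V_n = E / G$, where $E$ and $G$ are defined as

$$ \begin{aligned} E &= P(T_n + m + 1; M(n+1)) \left[ \frac{Mn}{T_n + 1}\, P(T_n; Mn) - P(T_n + 1; Mn) \right], \\ G &= P(T_n + 1; Mn) \left[ \frac{M(n+1)}{T_n + m + 1}\, P(T_n + m; M(n+1)) - P(T_n + m + 1; M(n+1)) \right]. \end{aligned} \tag{4.6} $$

We show that $W_0 > V_n$. Since the second terms of $E$ and $G$ coincide, this is equivalent to showing that the first term in $E$ is greater than the first term in $G$, which is Lemma A.1 with $k = T_n + 1$ (see the Appendix for details). Therefore, $W_0 / V_n > 1$. Now, let $0 < r < n$:

$$ W_r = \frac{\displaystyle \sum_{j} r^j \, \frac{(T_n + j + m + 1)! \; P(T_n + m + j + 1; M(n+1))}{(T_r + j + 1)! \; (n+1)^{T_n + j + m + 2}}}{\displaystyle \sum_{j} r^j \, \frac{(T_n + j + 1)! \; P(T_n + j + 1; Mn)}{(T_r + j + 1)! \; n^{T_n + j + 2}}}. \tag{4.7} $$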


To show that $W_r > V_n$, it suffices to show that each term in the numerator of (4.7) is greater than $V_n$ times the corresponding term in the denominator, for each $j$, $r$, and $n$. This is equivalent to showing $E_j > G_j$, where $E_j$ and $G_j$ are given by

$$ \begin{aligned} E_j ={}& \frac{M \, T_n! \, (T_n + j + m + 1)!}{(n+1)^{j+1}} \, P(T_n + j + m + 1; M(n+1)) \, P(T_n; Mn) \\ &- \frac{(T_n + 1)! \, (T_n + j + m + 1)!}{n \, (n+1)^{j+1}} \, P(T_n + j + m + 1; M(n+1)) \, P(T_n + 1; Mn); \end{aligned} \tag{4.8} $$

$$ \begin{aligned} G_j ={}& \frac{M \, (T_n + j + 1)! \, (T_n + m)!}{n^{j+1}} \, P(T_n + j + 1; Mn) \, P(T_n + m; M(n+1)) \\ &- \frac{(T_n + j + 1)! \, (T_n + m + 1)!}{(n+1) \, n^{j+1}} \, P(T_n + j + 1; Mn) \, P(T_n + m + 1; M(n+1)). \end{aligned} \tag{4.9} $$

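The inequality $E_j > G_j$ can be shown by induction on $j$, making use of Lemma A.1 in the appendix. Therefore, $W_r > V_n$ for all $r < n$. Thus,

$$ \frac{\bar\pi_{n+1}}{\bar\pi_n} = V_n (1 - p)\, \frac{\pi L_0^{(n)} + (1 - \pi)\, p \sum_{r=1}^{n-1} (1 - p)^{r-1} L_r^{(n)} + (1 - \pi)(1 - p)^{n-1} L_n^{(n)}}{\pi L_0^{(n+1)} + (1 - \pi)\, p \sum_{r=1}^{n} (1 - p)^{r-1} L_r^{(n+1)} + (1 - \pi)(1 - p)^{n} L_{n+1}^{(n+1)}}. \tag{4.10} $$

From (4.8) and (4.9), we see that $L_r^{(n+1)} = W_r L_r^{(n)} > V_n L_r^{(n)}$ for $r < n$. Bounding the terms with $r < n$ in the denominator of (4.10) from below in this way, and using $V_n L_n^{(n)} = L_{n+1}^{(n+1)}$, we obtain

$$ \frac{\bar\pi_{n+1}}{\bar\pi_n} < \frac{(1 - p)\, V_n D_n(\mathcal{F}_n)}{V_n D_n(\mathcal{F}_n) + (1 - \pi)\, p\, (1 - p)^{n-1} \big( L_n^{(n+1)} - L_{n+1}^{(n+1)} \big)} < 1. \tag{4.11} $$

The last inequality holds because $L_n^{(n+1)} \ge L_{n+1}^{(n+1)}$ when $m \ge M$: the function $\lambda^m e^{-\lambda}$ is increasing on $(0, m)$ and $\lambda_1 < \lambda_2 < M \le m$. Therefore, if the $(n+1)$th observation is at least $M$, the posterior probability that a change has not occurred will decrease.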

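We use Lemma 4.1 to evaluate the two-step-ahead stopping rule. According to equation (4.1), the two-step-ahead risk function is $R_n^{(2)} = \bar\pi_n + \big[ c - \bar\pi_n (c + p) + M_n^{(1)} \big]^-$, where $M_n^{(1)}$ is defined as $M_n^{(1)} = E\{ [ c - \bar\pi_{n+1} (c + p) ]^- \mid \mathcal{F}_n \}$. We only need to take the expectation over those $X_{n+1}$ for which $\bar\pi_{n+1} > q^*$. Note that if $\bar\pi_n < q^*$, then $\bar\pi_{n+1}(\mathbf{X}_n, k) < q^*$ for $k \ge M$. Thus for $\bar\pi_n < q^*$,

$$ \begin{aligned} M_n^{(1)} &= \sum_{i < M} \frac{D_{n+1}(\mathbf{X}_n, i)}{D_n(\mathbf{X}_n)} \left[ c - (c + p)\, \bar\pi_n (1 - p)\, \frac{V_n(i)\, D_n(\mathbf{X}_n)}{D_{n+1}(\mathbf{X}_n, i)} \right]^- \\ &= \sum_{i < M} \left[ c\, \frac{D_{n+1}(\mathbf{X}_n, i)}{D_n(\mathbf{X}_n)} - (c + p)\, \bar\pi_n (1 - p)\, V_n(i) \right]^-. \end{aligned} \tag{4.12} $$

Thus the two-step-ahead boundary is

$$ B_{n,2} = \left[ q^* + \sum_{i < M} \left[ q^*\, \frac{D_{n+1}(\mathbf{X}_n, i)}{D_n(\mathbf{X}_n)} - \bar\pi_n (1 - p)\, V_n(i) \right]^- \right]^+. \tag{4.13} $$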

Notice B

n.2

= l

(2)

n

when

t

n

- q

. Also when

t

n

> q

, both the one- and two-step

look ahead procedure will tell us to continue sampling. Thus the two-step-ahead

D

o

w

n

l

o

a

d

e

d

A

t

:

0

9

:

4

7

2

5

J

u

n

e

2

0

1

1

Bayesian Detection of Changes of a Poission Process 75

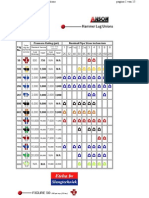

Table 1. Simulated values to show detection using the

2-step-ahead boundary

n T

n

t

n

B

n.2

1 0 0.9770 0.7788

2 1 0.9828 0.7907

3 1 0.9877 0.7786

4 4 0.9055 0.8236

5 4 0.9520 0.8100

6 6 0.9186 0.8246

7 6 0.9484 0.8144

8 7 0.9450 0.8164

9 8 0.9420 0.8181

10 9 0.9392 0.8196

11 14 0.8018 0.8552

12 15 0.8231 0.8526

13 17 0.7960 0.8571

14 19 0.7704 0.8571

15 23 0.6370 0.8571

16 24 0.6744 0.8571

17 28 0.5437 0.8571

18 29 0.5859 0.8571

19 30 0.6217 0.8571

20 31 0.6518 0.8571

procedure is equivalent to the procedure which tells us to stop when

t

n

- B

n.2

.

In Table 1, we do an example. We look at simulated values for z

1

= 1, when n 10

and z

2

= 2 when n > 10. We use a prior distribution of = 0.01, = 0.01. We

assume we know that the upper bound on the arrival rate M = 3, and the cost c of

stopping late is c = 0.06. In this example, using formula 3.9, we calculate the one-

step-ahead boundary q

= 0.8571. We show at each n, the total number of arrivals

up to time n, the posterior probability that a change has not taken place

t

n

and

the two-step-ahead boundary B

n.2

. One can observe that the B

n.2

approaches q

for

large n. This illustrates Lemma 3.2.

From looking at Table 1 one observes that we would stop at time 11. Since the

change took place at time 10, this shows that the detection rule is quick. We also

see the convergence between the one- and two-step-ahead stopping rules.
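The stopping decision in the example can be replayed directly from the tabulated values. The sketch below copies the rows of Table 1 and applies the two rules as stated: stop at the first $n$ with the no-change probability below $q^* = c/(c+p)$ (one-step) or below $B_{n,2}$ (two-step). The names `TABLE_1` and `first_stop` are illustrative assumptions; the numbers are those reported in the table and the example.

```python
# Rows of Table 1: (n, T_n, posterior probability of no change, B_{n,2}).
TABLE_1 = [
    (1, 0, 0.9770, 0.7788), (2, 1, 0.9828, 0.7907), (3, 1, 0.9877, 0.7786),
    (4, 4, 0.9055, 0.8236), (5, 4, 0.9520, 0.8100), (6, 6, 0.9186, 0.8246),
    (7, 6, 0.9484, 0.8144), (8, 7, 0.9450, 0.8164), (9, 8, 0.9420, 0.8181),
    (10, 9, 0.9392, 0.8196), (11, 14, 0.8018, 0.8552), (12, 15, 0.8231, 0.8526),
    (13, 17, 0.7960, 0.8571), (14, 19, 0.7704, 0.8571), (15, 23, 0.6370, 0.8571),
    (16, 24, 0.6744, 0.8571), (17, 28, 0.5437, 0.8571), (18, 29, 0.5859, 0.8571),
    (19, 30, 0.6217, 0.8571), (20, 31, 0.6518, 0.8571),
]

c, p = 0.06, 0.01          # cost of late stopping and geometric prior parameter
q_star = c / (c + p)       # one-step-ahead boundary, formula (3.9)

def first_stop(rows, boundary):
    """Return the first n whose no-change probability is below the boundary."""
    for n, _, bar_pi, b2 in rows:
        if bar_pi < boundary(b2):
            return n
    return None

one_step = first_stop(TABLE_1, lambda b2: q_star)   # fixed boundary q*
two_step = first_stop(TABLE_1, lambda b2: b2)       # tabulated boundary B_{n,2}
print(round(q_star, 4), one_step, two_step)         # 0.8571 11 11
```

On these data the two rules agree, which is consistent with the remark that the two boundaries converge.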

APPENDIX A

Lemma A.1. For all positive integers $n$, $k$, and $m$ with $m \ge M > 0$,

$$ \frac{k + m}{n + 1}\, P(k + m; M(n+1))\, P(k - 1; Mn) > \frac{k}{n}\, P(k + m - 1; M(n+1))\, P(k; Mn). \tag{A.1} $$

Proof. Taking differences and utilizing the relationship between the cumulative distribution function of the Poisson distribution and the gamma distribution, we obtain

$$ \begin{aligned} &\frac{k + m}{n + 1}\, P(k + m; M(n+1))\, P(k - 1; Mn) - \frac{k}{n}\, P(k + m - 1; M(n+1))\, P(k; Mn) \\ &\quad = \frac{k + m}{n + 1} \int_0^1 \frac{M^{k+m+1} (n+1)^{k+m+1}}{(k + m)!}\, x^{k+m} e^{-M(n+1)x} \int_0^1 \frac{M^k n^k}{(k - 1)!}\, y^{k-1} e^{-Mny} \, dy \, dx \\ &\qquad - \frac{k}{n} \int_0^1 \frac{M^{k+m} (n+1)^{k+m}}{(k + m - 1)!}\, x^{k+m-1} e^{-M(n+1)x} \int_0^1 \frac{M^{k+1} n^{k+1}}{k!}\, y^{k} e^{-Mny} \, dy \, dx. \end{aligned} \tag{A.2} $$

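Simplifying, we obtain that equation (A.2) is equal to

$$ M^{2k+m+1} \int_0^1 \frac{(n+1)^{k+m}}{(k + m - 1)!}\, x^{k+m-1} e^{-M(n+1)x} \int_0^1 \frac{n^k}{(k - 1)!}\, y^{k-1} e^{-Mny} (x - y) \, dy \, dx. \tag{A.3} $$

We divide the integral into a part where $y < x$ and a second part where $y > x$. Thus, equation (A.3) is equivalent to $M^{2k+m+1}$ times

$$ \begin{aligned} &\int_0^1 \frac{(n+1)^{k+m}}{(k + m - 1)!}\, x^{k+m-1} e^{-M(n+1)x} \int_0^x \frac{n^k}{(k - 1)!}\, y^{k-1} e^{-Mny} (x - y) \, dy \, dx \\ &\quad + \int_0^1 \frac{(n+1)^{k+m}}{(k + m - 1)!}\, x^{k+m-1} e^{-M(n+1)x} \int_x^1 \frac{n^k}{(k - 1)!}\, y^{k-1} e^{-Mny} (x - y) \, dy \, dx. \end{aligned} \tag{A.4} $$

Note that, by interchanging the roles of $x$ and $y$, the second integral in (A.4) can be rewritten as

$$ \begin{aligned} &\int_0^1 \frac{(n+1)^{k+m}}{(k + m - 1)!}\, x^{k+m-1} e^{-M(n+1)x} \int_x^1 \frac{n^k}{(k - 1)!}\, y^{k-1} e^{-Mny} (x - y) \, dy \, dx \\ &\quad = \int_0^1 \!\! \int_0^x \frac{(n+1)^{k+m}}{(k + m - 1)!}\, y^{k+m-1} e^{-M(n+1)y}\, \frac{n^k}{(k - 1)!}\, x^{k-1} e^{-Mnx} (y - x) \, dy \, dx. \end{aligned} \tag{A.5} $$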

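Thus, the difference in equation (A.2) is

$$ \begin{aligned} &\frac{k + m}{n + 1}\, P(k + m; M(n+1))\, P(k - 1; Mn) - \frac{k}{n}\, P(k + m - 1; M(n+1))\, P(k; Mn) \\ &\quad = M^{2k+m+1} \int_0^1 \!\! \int_0^x \frac{(n+1)^{k+m} n^k}{(k + m - 1)! \, (k - 1)!}\, x^{k-1} y^{k-1} e^{-Mn(x + y)} (x - y) \big( x^m e^{-Mx} - y^m e^{-My} \big) \, dy \, dx. \end{aligned} \tag{A.6} $$

The function $g(x) = x^m e^{-Mx}$ is increasing over $(0, 1)$ when $m \ge M$. Hence,

$$ \frac{(n+1)^{k+m} n^k}{(k + m - 1)! \, (k - 1)!}\, x^{k-1} y^{k-1} e^{-Mn(x + y)} (x - y) \big( x^m e^{-Mx} - y^m e^{-My} \big) > 0 \tag{A.7} $$

for all $0 < y < x < 1$. Therefore,

$$ \frac{k + m}{n + 1}\, P(k + m; M(n+1))\, P(k - 1; Mn) > \frac{k}{n}\, P(k + m - 1; M(n+1))\, P(k; Mn) \tag{A.8} $$

for all $n$, $k$, $m$, and $M$ such that $m \ge M$.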
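The inequality in Lemma A.1 is easy to spot-check numerically. The sketch below evaluates the left side minus the right side of (A.1) on a small grid. The grid, the choice $M = 3$, and the function names are assumptions made for illustration; a numerical check of this kind complements the proof but does not replace it.

```python
import math

def poisson_sf(x, lam):
    """P(N > x) for N ~ Poisson(lam); equals 1 when x < 0."""
    if x < 0:
        return 1.0
    return 1.0 - sum(math.exp(-lam) * lam**j / math.factorial(j) for j in range(x + 1))

def lemma_gap(n, k, m, M):
    """Left side minus right side of (A.1)."""
    lhs = (k + m) / (n + 1) * poisson_sf(k + m, M * (n + 1)) * poisson_sf(k - 1, M * n)
    rhs = k / n * poisson_sf(k + m - 1, M * (n + 1)) * poisson_sf(k, M * n)
    return lhs - rhs

M = 3.0
# Hypothetical grid: n, k in 1..5 and m in 3..7, so that m >= M throughout.
gaps = [lemma_gap(n, k, m, M)
        for n in range(1, 6) for k in range(1, 6) for m in range(3, 8)]
print(min(gaps) > 0)
```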


ACKNOWLEDGMENTS

I would like to thank Dr. Shelemyahu Zacks for his helpful advice in preparing this

article. I would also like to thank the Editor, Associate Editor, and referees for their

helpful suggestions.

REFERENCES

Brown, M. and Zacks, S. (2006a). Note on Optimal Stopping for Possible Change in the Intensity of an Ordinary Poisson Process, Probability and Statistics Letters 76: 1417-1425.

Brown, M. and Zacks, S. (2006b). On the Bayesian Detection of a Change in the Arrival Rate of a Poisson Process Monitored at Discrete Epochs, International Journal for Statistics and Systems 1: 1-13.

Chernoff, H. and Zacks, S. (1964). Estimating the Current Mean of a Normal Distribution Which is Subjected to Changes in Time, Annals of Mathematical Statistics 35: 999-1018.

Herberts, T. and Jensen, U. (2004). Optimal Detection of a Change Point in a Poisson Process for Different Observation Schemes, Scandinavian Journal of Statistics 31: 347-366.

Hinkley, D. and Hinkley, E. (1970). Inference about the Change-Point in a Sequence of Binomial Variables, Biometrika 57: 477-488.

Kander, Z. and Zacks, S. (1966). Test Procedures for Possible Changes in Parameters of Statistical Distributions Occurring at Unknown Time Points, Annals of Mathematical Statistics 37: 1196-1210.

Page, E. S. (1954). Continuous Inspection Schemes, Biometrika 41: 100-114.

Page, E. S. (1962). Cumulative Sum Schemes Using Gauging, Technometrics 4: 97-109.

Peskir, G. and Shiryaev, A. N. (2002). Solving the Poisson Disorder Problem, in Advances in Finance and Stochastics: Essays in Honor of Dieter Sonderman, K. Sandmann and P. J. Schonbucher, eds., pp. 295-312, New York: Springer.

Shewhart, W. A. (1926). Quality Control Charts, Bell System Technical Journal 5: 593-603.

Shiryaev, A. N. (1978). Statistical Sequential Analysis: Optimal Stopping Rules, in Translations of American Mathematical Society 38, Providence: American Mathematical Society, 1-49.

Smith, A. F. M. (1975). A Bayesian Approach to Inference about a Change-Point in the Sequence of Random Variables, Biometrika 62: 407-416.

Zacks, S. (1983). Survey of Classical and Bayesian Approaches to the Change-Point Problem: Fixed Sample and Sequential Procedures for Testing and Estimation, in Recent Advances in Statistics, M. H. Rizvi, J. Rastogi, and D. Siegmund, eds., pp. 245-269, New York: Academic Press.

Zacks, S. and Barzily, Z. (1981). Bayes Procedures for Detecting a Shift in the Probability of Success in a Series of Bernoulli Trials, Journal of Statistical Planning and Inference 5: 107-119.
