Confidence Estimation of the State Predictor Method

Jan Petzold, Faruk Bagci, Wolfgang Trumler, and Theo Ungerer

Institute of Computer Science

University of Augsburg

Eichleitnerstr. 30, 86159 Augsburg, Germany

{petzold, bagci, trumler, ungerer}@informatik.uni-augsburg.de

Abstract. Pervasive or ubiquitous systems use context information to adapt appliance behavior to human needs. Even more convenience is reached if the appliance foresees the user's desires. By means of context prediction, systems get ready for future human activities and can act proactively.

Predictions, however, are never 100% correct. When prediction results are unreliable, it is sometimes better to make no prediction than a wrong one. In this paper we propose three confidence estimation methods and apply them to our State Predictor Method. The confidence of a prediction is computed dynamically, and a prediction is supplied only if the confidence exceeds a given barrier. Our evaluations are based on the Augsburg Indoor Location Tracking Benchmarks and show that the prediction accuracy with confidence estimation may rise by a factor of up to 1.95 over the prediction method without confidence estimation. With confidence estimation a prediction accuracy of up to 90% is reached.

1 Introduction

Pervasive or ubiquitous systems aim at more convenience in daily activities by relieving humans of monotonous routines. Here context prediction plays a decisive role. Because prediction results are sometimes unreliable, it is better to make no prediction than a wrong one. Humans may be frustrated by too many wrong predictions and will not believe in further predictions even when the prediction accuracy improves over time. Therefore confidence estimation for context prediction methods is necessary. This paper proposes confidence estimation for the State Predictor Method [7,8,9,10], which is used for next location prediction. Three confidence estimation methods were developed for the State Predictor Method.

The proposed confidence estimation techniques can also be transferred to other prediction methods such as Markov, Neural Network, or Bayesian Predictors.

In the next section the State Predictor Method is introduced, followed by section 3, which defines three methods of confidence estimation. Section 4 gives the evaluation results, section 5 outlines the related work, and the paper ends with a conclusion.

P. Markopoulos et al. (Eds.): EUSAI 2004, LNCS 3295, pp. 375–386, 2004.

© Springer-Verlag Berlin Heidelberg 2004

2 The State Predictor Method

The State Predictor Method for next context prediction is motivated by the branch prediction techniques of current high-performance microprocessors. We developed various types of predictors: 1-state vs. 2-state, 1-level vs. 2-level, and global vs. local [7]. We describe two examples.

First, the 1-level 2-state predictor, or 2-state predictor for short, is based on the 2-bit branch predictor. Analogously to the branch predictor, the 2-state predictor holds two states for every possible next context (a weak and a strong state).

Figure 1 shows the state diagram of a 2-state predictor for a person who is currently in context X with the three possible next contexts A, B, and C. If the person is in context X for the first time and changes, e.g., to context C, the predictor sets C0 as the initial state. The next time the person is in context X, context C will be predicted as the next context. If the prediction proves correct, the predictor switches into the strong state C1, predicting context C as the next context again. As long as the prediction is correct, the predictor stays in the strong state C1. If the person changes from context X to a context other than C (e.g. A), the predictor switches into the weak state C0, still predicting context C. If the predictor is in a weak state (e.g. C0) and misses again, the predictor is set to the weak state of the new context, which will be predicted next time. The 2-state predictor can be dynamically extended by new following contexts at run-time.

[Figure 1: state diagram with the weak states A0, B0, C0 and the strong states A1, B1, C1; the transitions are labeled with the next context A, B, or C.]

Fig. 1. 2-state predictor with three contexts
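The transitions described above can be sketched in a few lines of Python. This is a minimal illustration under our own assumptions, not the authors' implementation: the class name `TwoStatePredictor` and its methods are hypothetical, and one instance stands for a single current context X.

```python
class TwoStatePredictor:
    """Illustrative 2-state predictor for one current context (hypothetical API)."""

    def __init__(self):
        self.context = None   # predicted next context; None before the first visit
        self.strong = False   # False = weak state (e.g. C0), True = strong state (e.g. C1)

    def predict(self):
        return self.context

    def update(self, actual):
        if self.context is None:
            # First visit: start in the weak state of the observed context.
            self.context, self.strong = actual, False
        elif actual == self.context:
            # Correct prediction: move to (or stay in) the strong state.
            self.strong = True
        elif self.strong:
            # Miss in the strong state: fall back to the weak state, same context.
            self.strong = False
        else:
            # Miss in the weak state: switch to the weak state of the new context.
            self.context, self.strong = actual, False


predictor = TwoStatePredictor()
trace = []
for next_context in ["C", "C", "A", "A", "B"]:
    trace.append((predictor.predict(), predictor.strong))  # state before the update
    predictor.update(next_context)
```

In this run the predictor passes through C0, C1, C0, and A0 and ends in B0, so a single deviation from a strong state does not change the predicted context, while two consecutive misses do.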

The second example is the global 2-level 2-state predictor, which consists of a shift register and a pattern history table (see figure 2). A shift register of length n stores the pattern of the last n contexts that occurred. Using this pattern, the shift register selects a 2-state predictor in the pattern history table, which holds a 2-state predictor for every pattern. These 2-state predictors are used for the prediction. The length of the shift register is called the order of the predictor.


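[Figure 2: a shift register holding the pattern p1 ... pr selects an entry of the pattern history table, which has the columns "pattern" and "2-state"; the example entry for the pattern p1 ... pr holds the state C1.]

Fig. 2. Global 2-level 2-state predictor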
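As a rough sketch of the two levels, the shift register can be modeled as a bounded queue and the pattern history table as a dictionary keyed by the pattern. The class name `Global2Level2State`, the method names, and the list representation `[context, is_strong]` of a 2-state predictor are assumptions made for this illustration only.

```python
from collections import deque


class Global2Level2State:
    """Illustrative global 2-level 2-state predictor (hypothetical API)."""

    def __init__(self, order):
        self.history = deque(maxlen=order)  # shift register: the last `order` contexts
        self.table = {}                     # pattern -> [predicted context, is_strong]

    def predict(self):
        # No prediction until the shift register is full and the pattern is known.
        if len(self.history) < self.history.maxlen:
            return None
        entry = self.table.get(tuple(self.history))
        return entry[0] if entry else None

    def observe(self, context):
        if len(self.history) == self.history.maxlen:
            key = tuple(self.history)
            entry = self.table.get(key)
            if entry is None:
                self.table[key] = [context, False]   # first occurrence: weak state
            elif entry[0] == context:
                entry[1] = True                      # correct: strong state
            elif entry[1]:
                entry[1] = False                     # miss in strong state: weaken
            else:
                self.table[key] = [context, False]   # miss in weak state: replace
        self.history.append(context)                 # shift the new context in


g = Global2Level2State(order=2)
for room in ["A", "B", "C", "A", "B"]:
    g.observe(room)
prediction = g.predict()  # the pattern (A, B) was followed by C once before
```

The dictionary grows only with patterns that actually occur, which matches the observation in the evaluation that some patterns of high order may never appear.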

The 2-level predictors can be extended using Prediction by Partial Matching (PPM) [10] or Simple Prediction by Partial Matching (SPPM). With PPM, a maximum order m is applied in the first level instead of a fixed order. Starting with this maximum order m, a pattern is searched for according to the last m contexts. If no pattern of length m is found, the pattern of length m−1 is looked for, i.e. the last m−1 contexts. This process can continue until order 1 is reached. With SPPM, if the predictor with the highest order cannot deliver a result, the predictor with order 1 is asked to make the prediction.
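The difference between the two fallback strategies can be illustrated as follows. The sketch assumes, purely for illustration, that the pattern history tables are given as a dictionary `tables` mapping each order to a dictionary from pattern to predicted context; the function names and the example contents are hypothetical.

```python
def lookup(tables, history, order):
    """Return the prediction stored for the last `order` contexts, or None."""
    if len(history) < order:
        return None
    return tables.get(order, {}).get(tuple(history[-order:]))


def ppm_predict(tables, history, max_order):
    # PPM: try order m, then m-1, ..., down to order 1.
    for order in range(max_order, 0, -1):
        prediction = lookup(tables, history, order)
        if prediction is not None:
            return prediction, order
    return None, 0


def sppm_predict(tables, history, max_order):
    # SPPM: try only the highest order, then fall back directly to order 1.
    for order in (max_order, 1):
        prediction = lookup(tables, history, order)
        if prediction is not None:
            return prediction, order
    return None, 0


# Hypothetical table contents, for illustration only.
tables = {
    3: {("A", "B", "C"): "D"},
    2: {("B", "A"): "C"},
    1: {("A",): "B"},
}
history = ["C", "B", "A"]
ppm_result = ppm_predict(tables, history, max_order=3)
sppm_result = sppm_predict(tables, history, max_order=3)
```

Here the pattern of order 3 is unknown, so PPM answers from the order-2 table while SPPM skips the intermediate orders and answers from the order-1 table.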

3 Confidence Estimation Methods

3.1 Strong State

The Strong State confidence estimation method is an extension of the 2-state predictor. It can be applied to the 2-state predictor itself and to the 2-level predictor with a 2-state predictor in the second level.

[Figure 3: the state diagram of figure 1 with the strong states A1, B1, and C1 drawn with a double frame.]

Fig. 3. Strong State - Prediction only in the strong states

The idea of the Strong State method is simply that the predictor supplies the prediction result only if it is in a strong state. The difference between the weak state (no prediction provided because of low confidence) and the strong state (the context transition has occurred at least two consecutive times) acts as a barrier for a good prediction. Figure 3 shows the 2-state predictor for the three contexts A, B, and C. The prediction result of the appropriate context is supplied only in the strong states, which are double framed in the figure.

As a generalization, this method can be extended to the n-state predictor, which uses n (n > 2) states instead of the two states of the 2-state predictor. A barrier k with 1 ≤ k ≤ n must then be chosen to separate the weak and the strong states. For the 2-state predictor (n = 2) it is k = 1. Let 1 ≤ s ≤ n be the value of the current state of the predictor; then we can define:

s ≥ k : supply prediction result (1)

s < k : detain prediction result (2)

No additional memory costs arise from the Strong State method.
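Rules (1) and (2) amount to a single comparison. The sketch below assumes that the states are numbered as in the figure labels, i.e. the weak state C0 has the value 0 and the strong state C1 has the value 1, so that the barrier k = 1 separates them; the function name `supply_prediction` and the 4-state example are hypothetical.

```python
def supply_prediction(predicted_context, state, barrier=1):
    """Return the prediction only if the state has reached the barrier."""
    if state >= barrier:
        return predicted_context   # strong state: supply the result
    return None                    # weak state: detain the result


# 2-state predictor: C0 is state 0 (weak), C1 is state 1 (strong).
weak_result = supply_prediction("C", state=0)
strong_result = supply_prediction("C", state=1)

# Hypothetical 4-state predictor with states 0..3 and barrier 2.
four_state_results = [supply_prediction("C", s, barrier=2) for s in range(4)]
```

Since the decision reads only the state that the predictor stores anyway, the sketch needs no extra fields, in line with the remark that the method causes no additional memory costs.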

3.2 Threshold

The Threshold confidence estimation method is independent of the prediction algorithm used. This method compares the accuracy of the previous predictions with a given threshold. If the prediction accuracy is greater than or equal to the threshold, the prediction is assumed to be confident and the prediction result is supplied. For the 2-level predictors we consider every 2-state predictor by itself. That means that for each pattern we investigate separately the prediction accuracy of the corresponding 2-state predictor. The prediction accuracy of a 2-state predictor is calculated from the numbers of correct and incorrect predictions of this predictor; more precisely, the prediction accuracy is the ratio of the number of correct predictions to the number of all predictions. Let c be the number of correct predictions, i the number of incorrect predictions, and θ the threshold; then the method can be described as follows:

c / (c + i) ≥ θ : supply prediction result (3)

c / (c + i) < θ : detain prediction result (4)

The threshold θ is a value between 0 (only mispredictions) and 1 (100% correct predictions in the past) and should be chosen well above 0.5 (50% correct predictions).

For the global 2-level predictors the pattern history table must be extended by two values, the number of correct predictions c and the number of incorrect predictions i (see figure 4).

[Figure 4: a shift register holding the pattern p1 ... pr selects an entry of the pattern history table, which has the columns "pattern", "2-state", "c", and "i"; the example entry for the pattern p1 ... pr holds the state C1 and the counts x and y.]

Fig. 4. Global 2-level 2-state predictor with threshold

The global 2-level 2-state predictor starts with an empty pattern history table. After the first occurrence of a pattern the predictor cannot deliver a prediction result. If the context c follows this pattern, the 2-state predictor is initialized with the weak state of the context c. Furthermore, the number of correct predictions and the number of incorrect predictions are both initialized to 0. After the second occurrence of this pattern a first prediction is made, but with unknown reliability: the system cannot estimate the confidence, since there are no prediction results yet, and the formula for estimating the confidence cannot be evaluated because its denominator is 0. If the prediction proves correct, the number of correct predictions is increased to 1; otherwise the number of incorrect predictions is incremented. Furthermore, the 2-state predictor is adapted accordingly. After the next occurrence of the pattern the formula can be evaluated and its result compared with the threshold.
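The bookkeeping for one pattern history table entry might look as follows. This is a sketch under stated assumptions: the class name `ThresholdConfidence` is hypothetical, and returning `None` while no prediction has been checked is our way of representing the "unknown reliability" of the first prediction.

```python
class ThresholdConfidence:
    """Illustrative per-entry accuracy counters for the Threshold method."""

    def __init__(self, threshold):
        self.threshold = threshold
        self.correct = 0     # c: number of correct predictions so far
        self.incorrect = 0   # i: number of incorrect predictions so far

    def is_confident(self):
        total = self.correct + self.incorrect
        if total == 0:
            return None      # denominator is 0: confidence cannot be estimated yet
        return self.correct / total >= self.threshold

    def record(self, was_correct):
        if was_correct:
            self.correct += 1
        else:
            self.incorrect += 1


entry = ThresholdConfidence(threshold=0.7)
observed = [entry.is_confident()]
for outcome in [True, False, True, True]:
    entry.record(outcome)
    observed.append(entry.is_confident())
```

After the outcomes correct, incorrect, correct, correct the accuracy is 1/1, 1/2, 2/3, and 3/4, so with a threshold of 0.7 the entry is confident after the first and the fourth checked prediction only.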

A disadvantage of the Threshold method is that it can get stuck above the threshold. If the previous predictions were all correct, the prediction accuracy is much greater than the threshold. If a behavior change to another context now occurs and incorrect predictions follow, it takes a long time until the prediction accuracy falls below the threshold. Thus a predictor that has become unconfident is still considered confident. A remedy is the method with a confidence counter.
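The effect can be made concrete with a small calculation. The helper below is hypothetical and the input numbers are made up for illustration: starting from a history of only correct predictions, it counts how many consecutive mispredictions are needed before c / (c + i) drops below the threshold.

```python
def mispredictions_until_unconfident(correct, threshold):
    """Count consecutive misses needed until correct / (correct + misses) < threshold."""
    if correct <= 0 or not 0 < threshold <= 1:
        raise ValueError("need correct > 0 and 0 < threshold <= 1")
    misses = 0
    while correct / (correct + misses) >= threshold:
        misses += 1
    return misses


after_20_correct = mispredictions_until_unconfident(correct=20, threshold=0.7)
after_50_correct = mispredictions_until_unconfident(correct=50, threshold=0.7)
```

With a threshold of 0.7, a history of 20 correct predictions tolerates 8 misses (20/28 ≈ 0.71) and only the 9th miss (20/29 ≈ 0.69) detains the result; after 50 correct predictions it takes 22 misses. The delay grows with the length of the correct history.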

3.3 Confidence Counter

The Confidence Counter method is also independent of the prediction algorithm used. This method estimates the prediction accuracy with a saturation counter. The counter consists of n + 1 states (see figure 5).

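[Figure 5: state graph of the confidence counter with the states n, n−1, ..., k, ..., 1, 0 in a chain; the transitions are labeled c (correct prediction) and i (incorrect prediction).]

Fig. 5. Confidence Counter - state graph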

The initial state can be chosen freely. Let s be the current state of the confidence counter. If a prediction result proves correct (c), the counter is incremented, i.e. the state graph changes from state s into state s + 1; if s = n, the counter keeps the state s. If the prediction is incorrect (i), the counter switches into the state s − 1; if s = 0, the counter keeps the state s. Furthermore there is a state k with 0 ≤ k ≤ n + 1, which acts as a barrier value: if s is greater than or equal to k, the predictor is assumed to be confident; otherwise the predictor is unconfident and the prediction result is not supplied. The method can be described as follows:

s ≥ k : supply prediction result (5)

s < k : detain prediction result (6)


The prediction accuracy is considered separately for every 2-state predictor. The pattern history table must be extended by one column, which stores the current state of the confidence counter cc (see figure 6). The memory costs lie between those of the Strong State and the Threshold methods.

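[Figure 6: a shift register holding the pattern p1 ... pr selects an entry of the pattern history table, which has the columns "pattern", "2-state", and "cc"; the example entry for the pattern p1 ... pr holds the state C1 and the counter value k.]

Fig. 6. Global 2-level 2-state predictor with confidence counter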

In the following, the initial phase is explained for the example of the global 2-level 2-state predictor. Initially the pattern history table is empty. After the first occurrence of a pattern the algorithm cannot deliver a prediction result. If the context c follows, the 2-state predictor of this pattern is initialized. Furthermore, the confidence counter of this pattern is set to an initial value between 0 and n, for example the value k. After the second occurrence of the pattern the confidence of the 2-state predictor can be estimated from the initial state of the confidence counter. If k was chosen as the initial state, the prediction result is supplied, since k classifies the predictor as confident. Otherwise, if the current state of the confidence counter is less than k, the prediction result is detained.
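A saturating counter with a barrier can be sketched as follows. The class name `ConfidenceCounter` and its interface are assumptions for illustration; the example values n = 3, k = 2, and initial state 2 correspond to the two-bit counter with barrier and initial state 10 used later in the evaluation.

```python
class ConfidenceCounter:
    """Illustrative saturating counter with n + 1 states (0..n) and barrier k."""

    def __init__(self, n, barrier, initial):
        if not 0 <= barrier <= n + 1:
            raise ValueError("barrier must satisfy 0 <= k <= n + 1")
        if not 0 <= initial <= n:
            raise ValueError("initial state must lie between 0 and n")
        self.n = n
        self.barrier = barrier
        self.state = initial

    def is_confident(self):
        return self.state >= self.barrier          # rules (5) and (6)

    def record(self, was_correct):
        if was_correct:
            self.state = min(self.n, self.state + 1)   # saturate at n
        else:
            self.state = max(0, self.state - 1)        # saturate at 0


counter = ConfidenceCounter(n=3, barrier=2, initial=2)
states = [(counter.state, counter.is_confident())]
for outcome in [True, True, True, False, False]:
    counter.record(outcome)
    states.append((counter.state, counter.is_confident()))
```

However many correct predictions precede them, two consecutive mispredictions from the top state are enough here to detain the result, which is how the counter avoids the slow reaction of the Threshold method.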

4 Evaluation

For our application, the Smart Doorplates [12], we choose next location prediction instead of general context prediction. The Smart Doorplates direct visitors to the current location of an office owner based on a location tracking system and predict the next location of the office owner if absent.

The evaluation was performed with the Augsburg Indoor Location Tracking Benchmarks [6]. These benchmarks consist of movement sequences of four test persons (two of whom are selected in the following) in a university office building, recorded separately during the summer term and the fall term 2003. In the evaluation we do not consider the corridor, because a person can leave a room only to the corridor. Furthermore, we investigate only the global 2-level 2-state predictors with order 1 to 5, the PPM predictor, and the SPPM predictor. All considered predictors were evaluated for every test person with the corresponding summer benchmarks followed by the fall benchmarks. The abbreviations in the figures and tables stand for: G - global, 2L - 2-level, 2S - 2-state. The order or the maximum order, respectively, is given in parentheses.

The training phase of the State Predictor Method depends on the order of the predictor. As some patterns might never occur for predictors with high order, the training phase of the corresponding 2-state predictors in the second level never starts. Therefore we consider the training for every pattern separately. The training phase of the 2-state predictor for a pattern includes only the first occurrence of the pattern. After the second occurrence of the pattern the training phase of the 2-state predictor ends.

Next we introduce some definitions that are needed for the explanations:

demand: the number of predictions requested by the user. In our evaluation this number corresponds to the number of rooms a test person entered during the measurements.

supply: the number of prediction results delivered by the system. This corresponds to the number of confident predictions, which can be calculated from the requested predictions minus the predictions of the training phase of every 2-state predictor and the unconfident predictions.

quality: the ratio of the number of correct predictions to the supply.

quality = (number of correct predictions) / supply

quantity: the ratio of supply to demand.

quantity = supply / demand

gain: the factor by which the quality with confidence estimation improves over the quality without confidence estimation.

gain = (quality with confidence estimation) / (quality without confidence estimation)
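The three ratios can be written down directly. The function names are hypothetical, and the counts in the first example (100 requests, 40 supplied, 30 correct) are made up for illustration; the second example uses the two accuracies reported in the conclusion of this paper (44.5% without and 86.6% with confidence estimation).

```python
import math


def quality(correct_predictions, supply):
    """Share of the supplied predictions that were correct."""
    return correct_predictions / supply


def quantity(supply, demand):
    """Share of the requested predictions that were supplied."""
    return supply / demand


def gain(quality_with, quality_without):
    """Improvement factor of the quality due to confidence estimation."""
    return quality_with / quality_without


# Made-up counts: 100 requests, 40 supplied results, 30 of them correct.
example_quality = quality(correct_predictions=30, supply=40)
example_quantity = quantity(supply=40, demand=100)

# Accuracies quoted in the conclusion: 44.5% without, 86.6% with estimation.
reported_gain = round(gain(0.866, 0.445), 2)
```

The last line reproduces the reported best gain of 1.95 from the two quoted accuracies.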

4.1 Strong State

Figures 7 and 8 and tables 1 and 2 show the results of the measurements with the Strong State method. The figures show the quality and the quantity of the prediction method with and without confidence estimation. The graphs with confidence estimation are denoted by SS in brackets. Quality, quantity, and gain were measured for the two test persons.

Table 1. Gain - Person A

Predictor        Gain
G-2L-2S(1)       1.46
G-2L-2S(2)       1.48
G-2L-2S(3)       1.47
G-2L-2S(4)       1.50
G-2L-2S(5)       1.39
G-2L-2S-PPM(5)   1.64
G-2L-2S-SPPM(5)  1.53

Table 2. Gain - Person D

Predictor        Gain
G-2L-2S(1)       1.74
G-2L-2S(2)       1.53
G-2L-2S(3)       1.39
G-2L-2S(4)       1.42
G-2L-2S(5)       1.28
G-2L-2S-PPM(5)   1.40
G-2L-2S-SPPM(5)  1.62

The gain is always greater than 1, which means that the quality improves with confidence estimation for all predictors. The greatest gain (1.74) is reached by the global 2-level 2-state predictor with order 1 for test person D (see figure 8 and table 2). The reason is that the predictor very often changes between various weak states.

[Figures 7 and 8: bar charts "Strong state - test person A" and "Strong state - test person D" showing quality and quantity (0% to 100%) for G-2L-2S(1) to G-2L-2S(5), G-2L-2S-PPM(5), and G-2L-2S-SPPM(5), each without and with the Strong State method [SS].]

Fig. 7. Quality and Quantity - Person A

Fig. 8. Quality and Quantity - Person D

If we consider the quantity, we can see that the value falls for all measurements. In the case of the global 2-level 2-state predictor with order 5 the quantity even falls below 10%. That means the algorithm delivers a prediction result for fewer than one in ten requests.

4.2 Threshold

Figures 9 to 14 show the measurement results of the Threshold method. For

the two test persons the quality, the quantity, and the gain were measured. The

threshold varies between 0.2 and 0.8.

[Figures 9 and 10: line charts "Threshold - test person A - quality" and "Threshold - test person D - quality"; horizontal axis: threshold (0.0 and 0.2 to 0.8); vertical axis: quality (0% to 100%); one curve each for G-2L-2S(1) to G-2L-2S(5), G-2L-2S-PPM(5), and G-2L-2S-SPPM(5).]

Fig. 9. Quality - Person A

Fig. 10. Quality - Person D

Normally the quality can be increased by increasing the threshold. The figures for test person A show that the quality decreases for a threshold greater than or equal to 0.7. The reason is that the 2-state predictors reach a prediction accuracy between 60 and 70 percent. The best quality with the Threshold method was reached by the predictor with order 1 for all test persons.

Here, too, the quantity decreases as the threshold increases. The best quantity with a high threshold was reached by the global 2-level 2-state predictor with Prediction by Partial Matching. The gain shows the same behavior as the quality: if the threshold increases, the gain increases too. The measurements of test person A show that the gain decreases if the threshold is greater than or equal to 0.7. The best gain of 1.9 was reached by the predictor G-2L-2S(1) with a threshold value of 0.9 for test person D (see figure 14).

[Figures 11 and 12: line charts "Threshold - test person A - quantity" and "Threshold - test person D - quantity"; horizontal axis: threshold (0.0 and 0.2 to 0.8); vertical axis: quantity (0% to 100%); one curve each for G-2L-2S(1) to G-2L-2S(5), G-2L-2S-PPM(5), and G-2L-2S-SPPM(5).]

Fig. 11. Quantity - Person A

Fig. 12. Quantity - Person D

[Figures 13 and 14: line charts "Threshold - test person A - gain" and "Threshold - test person D - gain"; horizontal axis: threshold (0.2 to 0.8); vertical axis: gain (1.00 to 2.00); one curve each for the same seven predictors.]

Fig. 13. Gain - Person A

Fig. 14. Gain - Person D

4.3 Confidence Counter

Figures 15 to 20 show the results of the measurements with the Confidence Counter method. For the evaluation a confidence counter with n = 3 was chosen, which can be described by two bits as follows:

[State graph: the four states 11, 10, 01, and 00 in a chain; the transitions are labeled c (correct prediction) and i (incorrect prediction).]

We set the state 10 as the initial state. We performed the measurements with k ∈ {00, 01, 10, 11}, where k = 00 means that the predictor works like the corresponding predictor without confidence estimation. Again the quality, the quantity, and the gain were measured.

As expected, the quality increases in all cases if k increases. Accordingly, the quantity decreases with a higher k. The gain behaves like the quality. The best quality of 90% (see figure 15) and the best gain of 1.95 (see figure 20) were reached by the predictor G-2L-2S(1) with k = 11. For all test persons the best quantity was reached by the predictors with Prediction by Partial Matching, followed by the predictors with Simple Prediction by Partial Matching. The predictors with order 5 reached the lowest quantity in all cases.

[Figures 15 and 16: line charts "Confidence counter - test person A - quality" and "Confidence counter - test person D - quality"; horizontal axis: barrier k (00, 01, 10, 11); vertical axis: quality (0% to 100%); one curve each for G-2L-2S(1) to G-2L-2S(5), G-2L-2S-PPM(5), and G-2L-2S-SPPM(5).]

Fig. 15. Quality - Person A

Fig. 16. Quality - Person D

[Figures 17 and 18: line charts "Confidence counter - test person A - quantity" and "Confidence counter - test person D - quantity"; horizontal axis: barrier k (00, 01, 10, 11); vertical axis: quantity (0% to 100%); one curve each for the same seven predictors.]

Fig. 17. Quantity - Person A

Fig. 18. Quantity - Person D

[Figures 19 and 20: line charts "Confidence counter - test person A - gain" and "Confidence counter - test person D - gain"; horizontal axis: barrier k (01, 10, 11); vertical axis: gain (1.00 to 2.00); one curve each for the same seven predictors.]

Fig. 19. Gain - Person A

Fig. 20. Gain - Person D

5 Related Work

A number of context prediction methods are used in ubiquitous computing [1,4,5,14,15]. None of them considers the confidence of the predictions.

Because the State Predictor Method was motivated by branch prediction methods of current high-performance microprocessors, we are influenced by the confidence estimation methods in this research area. Smith [11] proposed a confidence estimator based on the saturation counter method. This approach corresponds to the Confidence Counter method. In addition to the given techniques, Grunwald et al. [2] investigated a method that counts the correct predictions of every branch and compares this number with a threshold. If this number is greater than the threshold, the branch has a high confidence, otherwise a low confidence. This method corresponds to the Threshold confidence estimation method.

Jacobsen et al. [3] use a table of shift registers, the so-called n-bit correct/incorrect registers, as a confidence estimator in addition to the base branch predictor. For every correct prediction a 0 and for every incorrect prediction a 1 is entered into the register. A so-called reduction function classifies the confidence as high or low. An example of such a function is the number of 1s compared to a threshold: if the number of 1s is greater than the threshold, the confidence is classified as low, otherwise as high. Furthermore, Jacobsen et al. propose a two-level method which works with two of the introduced tables.
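The ones-counting reduction function described above can be illustrated with a short sketch. It covers a single register only, not the table or the two-level variant, and it is not taken from [3]: the class name `CorrectIncorrectRegister` and the choice to start with an all-zero register are assumptions.

```python
from collections import deque


class CorrectIncorrectRegister:
    """Illustrative n-bit correct/incorrect register with a ones-count reduction."""

    def __init__(self, bits, threshold):
        # Assumption: the register starts with all zeros (no recorded misses).
        self.register = deque([0] * bits, maxlen=bits)
        self.threshold = threshold

    def record(self, was_correct):
        # Shift in 0 for a correct and 1 for an incorrect prediction.
        self.register.append(0 if was_correct else 1)

    def is_high_confidence(self):
        # Low confidence if the number of 1s is greater than the threshold.
        return sum(self.register) <= self.threshold


reg = CorrectIncorrectRegister(bits=4, threshold=1)
confidence = [reg.is_high_confidence()]
for outcome in [False, False, True, True, True]:
    reg.record(outcome)
    confidence.append(reg.is_high_confidence())
```

Unlike the accuracy ratio of the Threshold method, the register forgets outcomes older than n predictions, so the two misses in this run stop affecting the result once enough correct predictions have been shifted in.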

Tyson et al. [13] investigated the distribution of the incorrect predictions. They observed that a small number of branches account for the main part of the incorrect predictions. They proposed a confidence estimator which assigns a high confidence to a fixed number of branches and a low confidence to the others.

The methods of Jacobsen et al. and Tyson et al. may influence further confidence estimation methods in context prediction.

6 Conclusion

This paper introduced confidence estimation into the State Predictor Method. The motivation was that in many cases it is better to make no prediction than a wrong one, in order to avoid frustration caused by too many mispredictions. The confidence estimation methods may automatically suppress predictions in a first training state of the predictor, where mispredictions may occur often. We proposed three methods for confidence estimation.

For all three methods the prediction accuracy increases with confidence estimation. The best gain of 1.95 was reached with the Confidence Counter method. In this case the prediction accuracy without confidence estimation was 44.5%, and the prediction accuracy with confidence estimation reached 86.6%. However, the quantity decreases with the estimation, which means that the system delivers prediction results less often. The best result was again reached with the Confidence Counter method. Here a prediction accuracy of about 90% is reached, but in this case the quantity is about 33%.

We plan to combine confidence estimation with other prediction methods, in particular Markov, Neural Network, and Bayesian Predictors.


References

1. D. Ashbrook and T. Starner. Using GPS to learn significant locations and predict movement across multiple users. Personal and Ubiquitous Computing, 7(5):275–286, 2003.

2. D. Grunwald, A. Klauser, S. Manne, and A. Pleszkun. Confidence Estimation for Speculation Control. In Proceedings of the 25th Annual International Symposium on Computer Architecture, pages 122–131, Barcelona, Spain, June-July 1998.

3. E. Jacobsen, E. Rotenberg, and J. E. Smith. Assigning Confidence to Conditional Branch Predictions. In Proceedings of the 29th Annual International Symposium on Microarchitecture, pages 142–152, Paris, France, December 1996.

4. M. C. Mozer. The Neural Network House: An Environment that Adapts to its Inhabitants. In AAAI Spring Symposium on Intelligent Environments, pages 110–114, Menlo Park, CA, USA, 1998.

5. D. J. Patterson, L. Liao, D. Fox, and H. Kautz. Inferring High-Level Behavior from Low-Level Sensors. In 5th International Conference on Ubiquitous Computing, pages 73–89, Seattle, WA, USA, 2003.

6. J. Petzold. Augsburg Indoor Location Tracking Benchmarks. Technical Report 2004-9, Institute of Computer Science, University of Augsburg, Germany, February 2004. http://www.informatik.uni-augsburg.de/skripts/techreports/.

7. J. Petzold, F. Bagci, W. Trumler, and T. Ungerer. Context Prediction Based on Branch Prediction Methods. Technical Report 2003-14, Institute of Computer Science, University of Augsburg, Germany, July 2003. http://www.informatik.uni-augsburg.de/skripts/techreports/.

8. J. Petzold, F. Bagci, W. Trumler, and T. Ungerer. Global and Local Context Prediction. In Artificial Intelligence in Mobile Systems 2003 (AIMS 2003), Seattle, WA, USA, October 2003.

9. J. Petzold, F. Bagci, W. Trumler, and T. Ungerer. The State Predictor Method for Context Prediction. In Adjunct Proceedings Fifth International Conference on Ubiquitous Computing, Seattle, WA, USA, October 2003.

10. J. Petzold, F. Bagci, W. Trumler, T. Ungerer, and L. Vintan. Global State Context Prediction Techniques Applied to a Smart Office Building. In The Communication Networks and Distributed Systems Modeling and Simulation Conference, San Diego, CA, USA, January 2004.

11. J. E. Smith. A Study of Branch Prediction Strategies. In Proceedings of the 8th Annual Symposium on Computer Architecture, pages 135–147, Minneapolis, MN, USA, May 1981.

12. W. Trumler, F. Bagci, J. Petzold, and T. Ungerer. Smart Doorplate. In First International Conference on Appliance Design (1AD), Bristol, GB, May 2003. Reprinted in Pers Ubiquit Comput (2003) 7: 221-226.

13. G. Tyson, K. Lick, and M. Farrens. Limited Dual Path Execution. Technical Report CSE-TR 346-97, University of Michigan, 1997.

14. K. van Laerhoven, K. A. Aidoo, and S. Lowette. Real-time Analysis of Data from Many Sensors with Neural Networks. In Proceedings of the International Symposium on Wearable Computers (ISWC 2001), Zurich, Switzerland, October 2001.

15. K. van Laerhoven and O. Cakmakci. What Shall We Teach Our Pants? In Proceedings of the 4th International Symposium on Wearable Computers (ISWC 2000), pages 77–83, Atlanta, GA, USA, 2000.


- 02 Computational Methods For Numerical Analysis With R - 2Dokumen30 halaman02 Computational Methods For Numerical Analysis With R - 2MBCBelum ada peringkat

- Levels of Reliability MethodsDokumen27 halamanLevels of Reliability MethodsManuel CampidelliBelum ada peringkat

- Distributed State Estimation of Multi-Region Power System Based On Consensus TheoryDokumen16 halamanDistributed State Estimation of Multi-Region Power System Based On Consensus Theorysami_aldalahmehBelum ada peringkat

- Meter Placement Impact On Distribution System State EstimationDokumen4 halamanMeter Placement Impact On Distribution System State EstimationMowheadAdelBelum ada peringkat

- WillemsPisonRousseeuwVanaelst RobustHotellingTest Metrika 2002Dokumen14 halamanWillemsPisonRousseeuwVanaelst RobustHotellingTest Metrika 2002Nicolas Enrique Rodriguez JuradoBelum ada peringkat

- ML (AutoRecovered)Dokumen4 halamanML (AutoRecovered)IQRA IMRANBelum ada peringkat

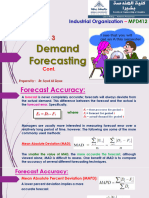

- MPD412 - Ind Org - Lecture-03-Forecasting - Part BDokumen26 halamanMPD412 - Ind Org - Lecture-03-Forecasting - Part BMohamed OmarBelum ada peringkat

- CS6700 Reinforcement Learning PA1 Jan May 2024Dokumen4 halamanCS6700 Reinforcement Learning PA1 Jan May 2024Rahul me20b145Belum ada peringkat

- Estimation of Brain Dynamic Interactions With Time-Varying Vector Autoregressive Modeling Using fMRIDokumen5 halamanEstimation of Brain Dynamic Interactions With Time-Varying Vector Autoregressive Modeling Using fMRIInternational Journal of Application or Innovation in Engineering & ManagementBelum ada peringkat

- MVFOSMDokumen5 halamanMVFOSMNabil MuhammadBelum ada peringkat

- STLF Using Predictive Modular NNDokumen6 halamanSTLF Using Predictive Modular NNgsaibabaBelum ada peringkat

- Observability of Power Systems Based On Fast Pseudorank Calculation of Sparse Sensitivity MatricesDokumen6 halamanObservability of Power Systems Based On Fast Pseudorank Calculation of Sparse Sensitivity Matricesjaved shaikh chaandBelum ada peringkat

- Dynamic Markov Compression: A Two-State Model For Binary SequencesDokumen2 halamanDynamic Markov Compression: A Two-State Model For Binary Sequencesgparya009Belum ada peringkat

- Design of A Novel Chaotic Synchronization System: Yung-Nien Wang and He-Sheng WangDokumen6 halamanDesign of A Novel Chaotic Synchronization System: Yung-Nien Wang and He-Sheng Wang王和盛Belum ada peringkat

- Probabilistic Satey Analysis Under Random and Fuzzy Uncertain ParametersDokumen54 halamanProbabilistic Satey Analysis Under Random and Fuzzy Uncertain ParametersSEULI SAMBelum ada peringkat

- Modeling of Signal-to-Noise Ratio and Error Patterns in Vehicle-to-Infrastructure CommunicationsDokumen5 halamanModeling of Signal-to-Noise Ratio and Error Patterns in Vehicle-to-Infrastructure Communicationsanon_275541658Belum ada peringkat

- KIDIST and FEYISADokumen7 halamanKIDIST and FEYISAchalaBelum ada peringkat

- BAB 7 Multiple Regression and Other Extensions of The SimpleDokumen17 halamanBAB 7 Multiple Regression and Other Extensions of The SimpleClaudia AndrianiBelum ada peringkat

- Branch PredictionDokumen5 halamanBranch PredictionsuryaBelum ada peringkat

- Regression 2006-03-01Dokumen53 halamanRegression 2006-03-01Rafi DawarBelum ada peringkat

- 10 Inference For Regression Part2Dokumen12 halaman10 Inference For Regression Part2Rama DulceBelum ada peringkat

- Efficient Power System State EstimationDokumen5 halamanEfficient Power System State Estimationc_u_r_s_e_dBelum ada peringkat

- MA 585: Time Series Analysis and Forecasting: February 12, 2017Dokumen15 halamanMA 585: Time Series Analysis and Forecasting: February 12, 2017Patrick NamBelum ada peringkat

- A Markov State-Space Model of Lupus Nephritis Disease DynamicsDokumen11 halamanA Markov State-Space Model of Lupus Nephritis Disease DynamicsAntonio EleuteriBelum ada peringkat

- Kalman ObserverDokumen5 halamanKalman Observerkhadars_5Belum ada peringkat

- Using Cumulative Count of Conforming CCC-Chart To Study The Expansion of The CementDokumen10 halamanUsing Cumulative Count of Conforming CCC-Chart To Study The Expansion of The CementIOSRJEN : hard copy, certificates, Call for Papers 2013, publishing of journalBelum ada peringkat

- Metode Inversi Pada Pengolahan MTDokumen16 halamanMetode Inversi Pada Pengolahan MTMuhammad Arif BudimanBelum ada peringkat

- An Introduction To Nonlinear Model Predictive ControlDokumen23 halamanAn Introduction To Nonlinear Model Predictive ControlSohibul HajahBelum ada peringkat

- CV For Log Normally Distributed Parameters Values - Proost2019Dokumen3 halamanCV For Log Normally Distributed Parameters Values - Proost2019Nilkanth ChapoleBelum ada peringkat

- Distributed State Estimator - Advances and DemonstrationDokumen11 halamanDistributed State Estimator - Advances and DemonstrationThiagarajan RamachandranBelum ada peringkat

- 14 IFAC Symposium On System Identification, Newcastle, Australia, 2006Dokumen19 halaman14 IFAC Symposium On System Identification, Newcastle, Australia, 2006Wasyhun AsefaBelum ada peringkat

- Simple Linear Regression Analysis - ReliaWikiDokumen29 halamanSimple Linear Regression Analysis - ReliaWikiMohmmad RezaBelum ada peringkat

- Paper 22 - Adaptive Outlier-Tolerant Exponential Smoothing Prediction Algorithms With Applications To Predict The Temperature in SpacecraftDokumen4 halamanPaper 22 - Adaptive Outlier-Tolerant Exponential Smoothing Prediction Algorithms With Applications To Predict The Temperature in SpacecraftEditor IJACSABelum ada peringkat

- Quantifying Transmission Reliability Margin: J. Zhang, I. Dobson, F.L. AlvaradoDokumen6 halamanQuantifying Transmission Reliability Margin: J. Zhang, I. Dobson, F.L. AlvaradouoaliyuBelum ada peringkat

- Project 3: Technical University of DenmarkDokumen10 halamanProject 3: Technical University of DenmarkRiyaz AlamBelum ada peringkat

- Malvic EngDokumen7 halamanMalvic Engmisterno2Belum ada peringkat

- Imran Meah Student ID-664509927 Implementation of Multi-Sensor Fusion Using Kalman FilterDokumen9 halamanImran Meah Student ID-664509927 Implementation of Multi-Sensor Fusion Using Kalman FiltermodphysnoobBelum ada peringkat

- 3.1 Characterization of The Region of AttractionDokumen7 halaman3.1 Characterization of The Region of AttractiontetrixBelum ada peringkat

- Bootstrap Prediction IntervalDokumen12 halamanBootstrap Prediction IntervalJoseph Guy Evans HilaireBelum ada peringkat

- Bar Shalom, Daum Et Al 2009 - The Probabilistic Data Association FilterDokumen19 halamanBar Shalom, Daum Et Al 2009 - The Probabilistic Data Association FilterRudialiBelum ada peringkat

- Factorization of Boundary Value Problems Using the Invariant Embedding MethodDari EverandFactorization of Boundary Value Problems Using the Invariant Embedding MethodBelum ada peringkat

- The Processor: The Hardware/Software Interface 5Dokumen149 halamanThe Processor: The Hardware/Software Interface 5ayylmao kekBelum ada peringkat

- Instruction PipelineDokumen27 halamanInstruction PipelineEswin AngelBelum ada peringkat

- Computer Architecture Prof. Madhu Mutyam Department of Computer Science and Engineering Indian Institute of Technology, MadrasDokumen15 halamanComputer Architecture Prof. Madhu Mutyam Department of Computer Science and Engineering Indian Institute of Technology, MadrasElisée Ndjabu100% (1)

- Dpco Unit 3,4,5 QBDokumen18 halamanDpco Unit 3,4,5 QBAISWARYA MBelum ada peringkat

- PH 4 QuizDokumen119 halamanPH 4 Quizstudent1985100% (1)

- PipelineDokumen142 halamanPipelinesanjay hansdakBelum ada peringkat

- Week 4 - PipeliningDokumen44 halamanWeek 4 - Pipeliningdress dressBelum ada peringkat

- Beyond RISC - The Post-RISC Architecture Submitted To: IEEE Micro 3/96Dokumen20 halamanBeyond RISC - The Post-RISC Architecture Submitted To: IEEE Micro 3/96Andreas DelisBelum ada peringkat

- Chapter 1 EditDokumen463 halamanChapter 1 Edittthanhhoa17112003Belum ada peringkat

- COA UNIT - III Processor and Control UnitDokumen127 halamanCOA UNIT - III Processor and Control UnitDevika csbsBelum ada peringkat

- Spectre and Meltdown Attacks Detection Using MachiDokumen53 halamanSpectre and Meltdown Attacks Detection Using Machimuneeb rahmanBelum ada peringkat

- CPU With Systems BusDokumen35 halamanCPU With Systems BusTanafe33% (3)

- Reducing Pipeline Branch PenaltiesDokumen4 halamanReducing Pipeline Branch PenaltiesSwagato MondalBelum ada peringkat

- Comparison of Pentium Processor With 80386 and 80486Dokumen23 halamanComparison of Pentium Processor With 80386 and 80486Tech_MX60% (5)

- Comp Arch Proj Report 2Dokumen11 halamanComp Arch Proj Report 2Nitu VlsiBelum ada peringkat

- Deep-Submicron Microprocessor Design IssuesDokumen12 halamanDeep-Submicron Microprocessor Design IssuesgoltangoBelum ada peringkat

- 2162 Term Project: The Tomasulo Algorithm ImplementationDokumen5 halaman2162 Term Project: The Tomasulo Algorithm ImplementationThomas BoyerBelum ada peringkat

- Nehalem ArchitectureDokumen7 halamanNehalem ArchitectureSuchi SmitaBelum ada peringkat

- Computer Architecture Project 2: Understanding Gem5 Branch Predictor StructureDokumen5 halamanComputer Architecture Project 2: Understanding Gem5 Branch Predictor StructureAsif MalikBelum ada peringkat

- Pentium 4Dokumen108 halamanPentium 4Pavithra MadhuwanthiBelum ada peringkat

- 18 740 Fall15 Lecture05 Branch Prediction AfterlectureDokumen93 halaman18 740 Fall15 Lecture05 Branch Prediction Afterlecturejialong jiBelum ada peringkat

- Computer Architecture: BranchingDokumen37 halamanComputer Architecture: Branchinginfamous0218Belum ada peringkat

- Slides Chapter 6 PipeliningDokumen60 halamanSlides Chapter 6 PipeliningWin WarBelum ada peringkat

- Cse-Vii-Advanced Computer Architectures (10cs74) - SolutionDokumen111 halamanCse-Vii-Advanced Computer Architectures (10cs74) - SolutionShinu100% (1)

- The Processor: CPU Performance FactorsDokumen66 halamanThe Processor: CPU Performance FactorsvlkumashankardeekshithBelum ada peringkat

- BranchScope A New Side-Channel AttackDokumen15 halamanBranchScope A New Side-Channel AttackPrakash ChandraBelum ada peringkat