Rayleigh Quotients and Inverse Iteration: Restriction To Real Symmetric Matrices

Uploaded by Serap Inceçayır


LECTURE 27

Rayleigh Quotients and Inverse Iteration

Restriction to real symmetric matrices

Most algorithmic ideas in numerical linear algebra apply to general matrices, often with simplifications for symmetric matrices.

For eigenvalue problems the differences are substantial.

We simplify matters by assuming (for now) that $A$ is real and symmetric.

We assume $\|\cdot\| = \|\cdot\|_2$ until further notice.

$A$ is real and symmetric, so it has:

- real eigenvalues
- a complete set of orthogonal eigenvectors

Notation:

- real eigenvalues: $\lambda_1, \lambda_2, \ldots, \lambda_m$
- orthonormal eigenvectors: $q_1, q_2, \ldots, q_m$

Most of what we describe now pertains to Phase 2 of the eigenvalue calculation.

Think of A as real, symmetric, and tridiagonal.


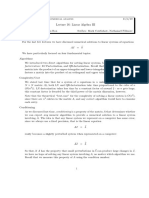

Rayleigh quotient

The Rayleigh quotient of a vector $x \in \mathbb{R}^m$ is

$$r(x) = \frac{x^T A x}{x^T x}$$

Note

If $x$ is an eigenvector, then $r(x) = \lambda$, the corresponding eigenvalue.

One way to motivate this formula:

Given $x$, what scalar $\alpha$ minimizes $\|Ax - \alpha x\|$?

i.e., what scalar $\alpha$ acts most like an eigenvalue?

This is an $m \times 1$ least-squares problem:

$$x\alpha \approx Ax$$

Here, $x$ is the matrix, $\alpha$ is the unknown, and $Ax$ is the right-hand side ($m$ equations for 1 unknown).

Use the normal equations, $(x^T x)\,\alpha = x^T (Ax)$, to derive $\alpha = r(x)$.


Thus r(x) is a natural eigenvalue estimate.
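The least-squares motivation can be checked numerically. The following is a minimal sketch, assuming NumPy; the test matrix, the vector, and the helper name `rayleigh_quotient` are illustrative choices and not part of the lecture. It solves the $m \times 1$ problem $x\alpha \approx Ax$ with `np.linalg.lstsq` and compares the result with $r(x)$.

```python
import numpy as np

def rayleigh_quotient(A, x):
    """Return r(x) = x^T A x / x^T x."""
    return (x @ A @ x) / (x @ x)

# Illustrative symmetric matrix and vector (assumptions, not from the lecture).
A = np.array([[2.0, 1.0, 0.0],
              [1.0, 3.0, 1.0],
              [0.0, 1.0, 4.0]])
x = np.array([1.0, -2.0, 0.5])

# Least-squares problem: the m-by-1 "matrix" is x, the right-hand side is A x.
solution, *_ = np.linalg.lstsq(x.reshape(-1, 1), A @ x, rcond=None)
alpha = solution[0]

# Normal equations: (x^T x) alpha = x^T (A x).
alpha_normal = (x @ (A @ x)) / (x @ x)

print(alpha, alpha_normal, rayleigh_quotient(A, x))
```

All three printed values should agree up to rounding, since the least-squares minimizer is exactly the Rayleigh quotient.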

Expand $x$ as a linear combination of $q_1, q_2, \ldots, q_m$:

$$x = \sum_{j=1}^{m} a_j q_j$$

Then

$$r(x) = \frac{\sum_{j=1}^{m} a_j^2 \lambda_j}{\sum_{j=1}^{m} a_j^2}$$

i.e., $r(x)$ is a weighted mean of the eigenvalues of $A$.

If $|a_j / a_J| < \epsilon$ for all $j \neq J$ (i.e., $x$ is close to $q_J$) then

$$r(x) - r(q_J) = O(\epsilon^2)$$

Use this fact:

$$r(q_J) = \lambda_J$$
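The quadratic accuracy of the Rayleigh quotient can be observed directly. The sketch below assumes NumPy and uses a small symmetric matrix chosen only for illustration; `np.linalg.eigh` supplies reference eigenpairs. Perturbing $q_J$ by $\epsilon$ in the other eigendirections should change $r(x)$ by roughly $\epsilon^2$, so shrinking $\epsilon$ by a factor of 10 should shrink the error by a factor of about 100.

```python
import numpy as np

# Illustrative symmetric matrix (an assumption for this sketch).
A = np.array([[2.0, 1.0, 1.0],
              [1.0, 3.0, 1.0],
              [1.0, 1.0, 4.0]])

lams, Q = np.linalg.eigh(A)      # columns of Q are orthonormal eigenvectors
J = 2                            # perturb around the eigenvector q_J
d = Q[:, 0] + Q[:, 1]            # direction orthogonal to q_J

def r(x):
    """Rayleigh quotient of x with respect to A."""
    return (x @ A @ x) / (x @ x)

errors = []
for eps in [1e-1, 1e-2, 1e-3]:
    x = Q[:, J] + eps * d        # coefficients satisfy |a_j / a_J| = eps for j != J
    errors.append(abs(r(x) - lams[J]))

# Successive error ratios; each should be close to 100 (that is, 10^2).
ratios = [errors[i] / errors[i + 1] for i in range(2)]
print(errors, ratios)
```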


Power iteration

Let $v^{(0)}$ be unit-norm: $\|v^{(0)}\| = 1$.

Power iteration produces a sequence $v^{(i)} \to q_1$ (up to sign).

ALGORITHM 27.1: POWER ITERATION

Initial $v^{(0)}$ with $\|v^{(0)}\| = 1$

for $k = 1, 2, \ldots$ do

$w = A v^{(k-1)}$ % apply $A$

$v^{(k)} = w / \|w\|$ % normalize

$\lambda^{(k)} = (v^{(k)})^T A v^{(k)}$ % compute Rayleigh quotient

end for

Note

Good termination criteria are vital in practice.
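A minimal sketch of Algorithm 27.1 in Python, assuming NumPy. The stopping test on the relative residual $\|Av - \lambda v\| \le \text{tol}\,|\lambda|$ is one possible termination criterion chosen for this illustration, not one prescribed by the lecture; the function name, `tol`, and `max_iter` are likewise assumptions.

```python
import numpy as np

def power_iteration(A, v0, tol=1e-10, max_iter=1000):
    """Power iteration; returns (eigenvalue estimate, eigenvector estimate, steps)."""
    v = v0 / np.linalg.norm(v0)
    lam = v @ A @ v
    k = 0
    for k in range(1, max_iter + 1):
        w = A @ v                      # apply A
        v = w / np.linalg.norm(w)      # normalize
        lam = v @ A @ v                # compute Rayleigh quotient
        # Hypothetical termination criterion: small relative residual.
        if np.linalg.norm(A @ v - lam * v) <= tol * abs(lam):
            break
    return lam, v, k

# Illustrative 2-by-2 example with eigenvalues 3 and 1.
A = np.array([[2.0, 1.0],
              [1.0, 2.0]])
lam, v, steps = power_iteration(A, np.array([1.0, 0.0]))
print(lam, v, steps)
```

The cap `max_iter` guards against the slow-convergence case where the two largest eigenvalues are close in magnitude.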

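To analyze, write

$$v^{(0)} = a_1 q_1 + a_2 q_2 + \cdots + a_m q_m$$

Since $v^{(k)}$ is some multiple of $A^k v^{(0)}$, there exist constants $c_k$ such that

$$
\begin{aligned}
v^{(k)} &= c_k A^k v^{(0)} \\
&= c_k A^k (a_1 q_1 + a_2 q_2 + \cdots + a_m q_m) \\
&= c_k (a_1 \lambda_1^k q_1 + a_2 \lambda_2^k q_2 + \cdots + a_m \lambda_m^k q_m) \\
&= c_k \lambda_1^k \left( a_1 q_1 + a_2 \left(\frac{\lambda_2}{\lambda_1}\right)^{k} q_2 + \cdots + a_m \left(\frac{\lambda_m}{\lambda_1}\right)^{k} q_m \right)
\end{aligned}
$$

We used

$$A^k q_j = \lambda_j^k q_j.$$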

Theorem

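Let $|\lambda_1| > |\lambda_2| \ge \cdots \ge |\lambda_m| \ge 0$ and $q_1^T v^{(0)} \neq 0$.

Then the iterates of Algorithm 27.1 satisfy

$$\|v^{(k)} - (\pm q_1)\| = O\left(\left|\frac{\lambda_2}{\lambda_1}\right|^{k}\right)$$

and

$$|\lambda^{(k)} - \lambda_1| = O\left(\left|\frac{\lambda_2}{\lambda_1}\right|^{2k}\right)$$

as $k \to \infty$.

If $\lambda_1 > 0$, the $\pm$ signs are all $+$ or all $-$.

If $\lambda_1 < 0$, the signs alternate.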
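The two rates in the theorem can be observed on a small case. The sketch below assumes NumPy and uses a diagonal matrix with eigenvalues 4, 2, 1, chosen only so that the eigenvectors are known exactly. The eigenvector error should shrink by about $|\lambda_2/\lambda_1| = 0.5$ per step and the eigenvalue error by about $0.25$.

```python
import numpy as np

# Illustrative diagonal matrix: eigenvalues 4, 2, 1 with eigenvectors e_1, e_2, e_3.
A = np.diag([4.0, 2.0, 1.0])
q1 = np.array([1.0, 0.0, 0.0])
v = np.ones(3) / np.sqrt(3.0)          # satisfies q_1^T v^(0) != 0

vec_err, val_err = [], []
for k in range(1, 13):
    w = A @ v
    v = w / np.linalg.norm(w)
    lam = v @ A @ v
    vec_err.append(np.linalg.norm(v - q1))   # lambda_1 > 0, so the sign stays +
    val_err.append(abs(lam - 4.0))

vec_ratio = vec_err[-1] / vec_err[-2]   # approaches |lambda_2 / lambda_1|
val_ratio = val_err[-1] / val_err[-2]   # approaches |lambda_2 / lambda_1|^2
print(vec_ratio, val_ratio)
```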


Here are 3 shortcomings:

1. It only finds the eigenvector corresponding to the largest eigenvalue (in magnitude).

2. Convergence is linear, with the error reduced by a constant factor $\approx |\lambda_2 / \lambda_1|$ at each iteration.

3. If $|\lambda_2| \approx |\lambda_1|$, convergence can be very slow!

Note

Use deflation to find the next eigenvector:

$$A - \lambda_1 q_1 q_1^T = \sum_{i=2}^{m} \lambda_i q_i q_i^T$$
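The deflation identity can be sketched as follows, assuming NumPy. The matrix, the fixed step count, and the helper `power_iteration` are illustrative assumptions. After finding $(\lambda_1, q_1)$, power iteration on $A - \lambda_1 q_1 q_1^T$ should converge to the eigenpair with the second-largest eigenvalue in magnitude.

```python
import numpy as np

# Illustrative symmetric matrix (an assumption for this sketch).
A = np.array([[2.0, 1.0, 1.0],
              [1.0, 3.0, 1.0],
              [1.0, 1.0, 4.0]])

def power_iteration(A, v, num_steps=500):
    """Plain power iteration with a fixed number of steps (no stopping test)."""
    for _ in range(num_steps):
        w = A @ v
        v = w / np.linalg.norm(w)
    return v @ A @ v, v

v0 = np.array([1.0, 0.0, 0.0])
lam1, q1 = power_iteration(A, v0)

# Deflate: A - lam1 q1 q1^T has eigenvalues 0, lambda_2, ..., lambda_m.
A_deflated = A - lam1 * np.outer(q1, q1)
lam2, q2 = power_iteration(A_deflated, v0)

print(lam1, lam2, q1 @ q2)
```

In floating point the deflated matrix is only approximately free of the $q_1$ component, so errors in $q_1$ carry over to later eigenpairs.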

Inverse iteration

Goal: amplify differences between eigenvalues and accelerate convergence.

Observation: for any $\mu$ that is not an eigenvalue of $A$:

- the eigenvectors of $(A - \mu I)^{-1}$ are the same as the eigenvectors of $A$
- the corresponding eigenvalues are $\{(\lambda_j - \mu)^{-1}\}_{j=1}^{m}$.

Suppose $\mu$ is close to an eigenvalue $\lambda_J$ of $A$.

Then $(\lambda_J - \mu)^{-1}$ may be much larger (in magnitude) than $(\lambda_j - \mu)^{-1}$ for all $j \neq J$.

Power iteration on $(A - \mu I)^{-1}$ should converge rapidly to $q_J$.

This idea is called inverse iteration.

ALGORITHM 27.2: INVERSE ITERATION

Initial $v^{(0)}$ with $\|v^{(0)}\| = 1$

Set $\mu$ to some value near $\lambda_J$

for $k = 1, 2, \ldots$ do

Solve $(A - \mu I) w = v^{(k-1)}$ % apply $(A - \mu I)^{-1}$

$v^{(k)} = w / \|w\|$ % normalize

$\lambda^{(k)} = (v^{(k)})^T A v^{(k)}$ % compute Rayleigh quotient

end for
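A minimal sketch of Algorithm 27.2, assuming NumPy. The function name, the fixed step count, the test matrix, and the shift $\mu = 2.5$ are illustrative choices. Each step calls `np.linalg.solve` rather than forming the inverse explicitly.

```python
import numpy as np

def inverse_iteration(A, mu, v0, num_steps=20):
    """Inverse iteration with a fixed shift mu; returns (lambda estimate, v)."""
    m = A.shape[0]
    shifted = A - mu * np.eye(m)
    v = v0 / np.linalg.norm(v0)
    lam = v @ A @ v
    for _ in range(num_steps):
        w = np.linalg.solve(shifted, v)   # apply (A - mu I)^{-1}
        v = w / np.linalg.norm(w)         # normalize
        lam = v @ A @ v                   # compute Rayleigh quotient
    return lam, v

# Illustrative symmetric matrix; mu = 2.5 is closest to its middle eigenvalue.
A = np.array([[2.0, 1.0, 1.0],
              [1.0, 3.0, 1.0],
              [1.0, 1.0, 4.0]])
lam, v = inverse_iteration(A, mu=2.5, v0=np.ones(3))
print(lam, np.linalg.norm(A @ v - lam * v))
```

The shift selects which eigenpair is found: the iteration converges to the eigenvalue nearest $\mu$, not to the largest one.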

What if $\mu$ is chosen as an eigenvalue of $A$? Then

- $A - \mu I$ is singular
- $(A - \mu I) w = v^{(k-1)}$ is highly ill-conditioned

It turns out these are not problems:

It can be shown that if the system is solved by a backward stable algorithm to produce a solution $\tilde{w}$, then $\tilde{w}/\|\tilde{w}\|$ will be close to $w/\|w\|$ even though $\tilde{w}$ and $w$ may not be.
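This behaviour can be illustrated with a shift that is an eigenvalue up to about $10^{-12}$. The sketch assumes NumPy; the matrix and the offset are illustrative, and `np.linalg.eigh` is used only to obtain a reference eigenpair. The system is extremely ill-conditioned, yet the normalized solution should point very accurately along $q_J$.

```python
import numpy as np

# Illustrative symmetric matrix (an assumption for this sketch).
A = np.array([[2.0, 1.0, 1.0],
              [1.0, 3.0, 1.0],
              [1.0, 1.0, 4.0]])

lams, Q = np.linalg.eigh(A)
q_J = Q[:, -1]                       # reference eigenvector for the largest eigenvalue
mu = lams[-1] + 1e-12                # shift that is an eigenvalue up to about 1e-12

M = A - mu * np.eye(3)
cond = np.linalg.cond(M)             # enormous: the system is highly ill-conditioned

w = np.linalg.solve(M, np.ones(3) / np.sqrt(3.0))
v = w / np.linalg.norm(w)

# Distance to +/- q_J; the direction is accurate even though w itself is huge.
direction_error = min(np.linalg.norm(v - q_J), np.linalg.norm(v + q_J))
print(cond, np.linalg.norm(w), direction_error)
```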


Properties:

- convergence is still linear
- we can control the rate of the linear convergence by improving the quality of $\mu$.

If $\mu$ is much closer to one eigenvalue of $A$ than to the others, the largest eigenvalue of $(A - \mu I)^{-1}$ will be much larger than the rest.

Theorem

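Suppose $\lambda_J$ is the closest eigenvalue to $\mu$ and $\lambda_K$ is the second closest, i.e.,

$$|\mu - \lambda_J| < |\mu - \lambda_K| \le |\mu - \lambda_j| \quad \text{for all } j \neq J.$$

Suppose that $q_J^T v^{(0)} \neq 0$.

Then the iterates of Algorithm 27.2 satisfy

$$\|v^{(k)} - (\pm q_J)\| = O\left(\left|\frac{\mu - \lambda_J}{\mu - \lambda_K}\right|^{k}\right)$$

and

$$|\lambda^{(k)} - \lambda_J| = O\left(\left|\frac{\mu - \lambda_J}{\mu - \lambda_K}\right|^{2k}\right)$$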


Inverse iteration is used in practice to calculate eigenvectors of a matrix when eigenvalues are already known.

Rayleigh quotient iteration

We have seen:

- e-value estimate from e-vector estimate (Rayleigh quotient)
- e-vector estimate from e-value estimate (inverse iteration)

We now combine these. The idea is to continually improve the eigenvalue estimates to increase the rate of convergence of inverse iteration at every step.

This algorithm is called Rayleigh quotient iteration.

RAYLEIGH QUOTIENT ITERATION

Initial $v^{(0)}$ with $\|v^{(0)}\| = 1$

$\lambda^{(0)} = (v^{(0)})^T A v^{(0)}$

for $k = 1, 2, \ldots$ do

Solve $(A - \lambda^{(k-1)} I) w = v^{(k-1)}$ % apply $(A - \lambda^{(k-1)} I)^{-1}$

$v^{(k)} = w / \|w\|$ % normalize

$\lambda^{(k)} = (v^{(k)})^T A v^{(k)}$ % update Rayleigh quotient

end for

Each iteration triples the number of digits of accuracy (cubic convergence).
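A minimal sketch of Rayleigh quotient iteration, assuming NumPy. The residual-based stopping test, the handling of an exactly singular shifted matrix, the function name, and the tridiagonal test matrix are assumptions made for this illustration.

```python
import numpy as np

def rayleigh_quotient_iteration(A, v0, tol=1e-12, max_iter=50):
    """Return (lam, v, history), where history lists lambda^(0), lambda^(1), ..."""
    m = A.shape[0]
    v = v0 / np.linalg.norm(v0)
    lam = v @ A @ v                                   # lambda^(0)
    history = [lam]
    for _ in range(max_iter):
        if np.linalg.norm(A @ v - lam * v) <= tol:    # residual-based stop (our choice)
            break
        try:
            w = np.linalg.solve(A - lam * np.eye(m), v)
        except np.linalg.LinAlgError:
            break                                     # shift is numerically an eigenvalue
        v = w / np.linalg.norm(w)                     # normalize
        lam = v @ A @ v                               # update Rayleigh quotient
        history.append(lam)
    return lam, v, history

# Illustrative tridiagonal test matrix with 2 on the diagonal and -1 beside it.
m = 6
A = 2.0 * np.eye(m) - np.eye(m, k=1) - np.eye(m, k=-1)
lam, v, history = rayleigh_quotient_iteration(A, np.arange(1.0, m + 1.0))
print(history)
```

Which eigenpair is found depends on the starting vector, so the printed history shows convergence to some eigenvalue of $A$, not necessarily the largest.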


Theorem

Rayleigh quotient iteration converges to an e-value/e-vector pair for (almost all) starting vectors $v^{(0)}$.

When it converges, the convergence is cubic,

i.e., if $\lambda_J$ is an eigenvalue of $A$ and $v^{(0)}$ is sufficiently close to the eigenvector $q_J$, then as $k \to \infty$

$$\|v^{(k+1)} - (\pm q_J)\| = O\left(\|v^{(k)} - (\pm q_J)\|^3\right)$$

and

$$|\lambda^{(k+1)} - \lambda_J| = O\left(|\lambda^{(k)} - \lambda_J|^3\right).$$

Note

The $\pm$ signs are not necessarily the same on both sides!

Example 27.1

$$A = \begin{bmatrix} 2 & 1 & 1 \\ 1 & 3 & 1 \\ 1 & 1 & 4 \end{bmatrix}$$

Let

$$v^{(0)} = \frac{1}{\sqrt{3}} \begin{bmatrix} 1 \\ 1 \\ 1 \end{bmatrix}$$

Applying Rayleigh quotient iteration to $A$, we obtain the following eigenvalue estimates:

$$\lambda^{(0)} = 5, \qquad \lambda^{(1)} = 5.2131\ldots, \qquad \lambda^{(2)} = 5.214319743184\ldots$$

The actual eigenvalue is $\lambda = 5.214319743377$.

Only 3 iterations have produced 10 digits of accuracy!

Three more iterations should increase this to 270 digits of accuracy, if we had a computer capable of storing this!
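The estimates of Example 27.1 can be reproduced with a few lines of NumPy. This is a sketch under the assumption of double precision arithmetic, so the error stalls near $10^{-15}$ instead of continuing to cube; `np.linalg.eigvalsh` is used only to obtain a reference value.

```python
import numpy as np

A = np.array([[2.0, 1.0, 1.0],
              [1.0, 3.0, 1.0],
              [1.0, 1.0, 4.0]])
v = np.ones(3) / np.sqrt(3.0)

estimates = [v @ A @ v]                       # lambda^(0)
for k in range(1, 4):
    w = np.linalg.solve(A - estimates[-1] * np.eye(3), v)
    v = w / np.linalg.norm(w)
    estimates.append(v @ A @ v)               # lambda^(k)

lam_true = np.linalg.eigvalsh(A)[-1]          # largest eigenvalue, for comparison
for k, lam in enumerate(estimates):
    print(f"lambda^({k}) = {lam:.12f}   error = {abs(lam - lam_true):.1e}")
```

The first step can be checked by hand: solving $(A - 5I)w = (1, 1, 1)^T$ gives $w = (3, 4, 6)^T$, whose Rayleigh quotient is $318/61 = 5.2131\ldots$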

Operation counts

We conclude by tabulating the amount of work required at each step of the three algorithms we have described.

Suppose $A$ is dense.

Each step of power iteration requires a matrix-vector multiplication: $O(m^2)$ flops.

Each step of inverse iteration requires the solution of a linear system: $O(m^3)$ flops.

Note: we actually have the same coefficient matrix and different right-hand sides.

If we store the LU or QR factorization, each step only involves triangular solves (forward and backward substitution), and hence costs only $O(m^2)$ flops (after decomposing $A - \mu I$).
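The factor-once idea can be sketched with a QR factorization, assuming NumPy. The hand-written `back_substitute` helper, the function names, and the test values are illustrative assumptions. The factorization costs $O(m^3)$ once, and each iteration then needs only a product with $Q^T$ and one triangular solve, both $O(m^2)$.

```python
import numpy as np

def back_substitute(R, b):
    """Solve R x = b for upper-triangular R in O(m^2) flops."""
    m = R.shape[0]
    x = np.zeros(m)
    for i in range(m - 1, -1, -1):
        x[i] = (b[i] - R[i, i + 1:] @ x[i + 1:]) / R[i, i]
    return x

def inverse_iteration_qr(A, mu, v0, num_steps=20):
    """Inverse iteration that reuses one QR factorization of A - mu I."""
    m = A.shape[0]
    Q, R = np.linalg.qr(A - mu * np.eye(m))    # O(m^3), done once
    v = v0 / np.linalg.norm(v0)
    for _ in range(num_steps):
        w = back_substitute(R, Q.T @ v)        # O(m^2) per step
        v = w / np.linalg.norm(w)
    return v @ A @ v, v

# Illustrative symmetric matrix and shift (assumptions for this sketch).
A = np.array([[2.0, 1.0, 1.0],
              [1.0, 3.0, 1.0],
              [1.0, 1.0, 4.0]])
lam, v = inverse_iteration_qr(A, mu=2.5, v0=np.ones(3))
print(lam)
```

The Python loop in `back_substitute` is written for clarity; a library triangular solver would normally replace it.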

For Rayleigh quotient iteration, the matrix to be inverted at each step changes, so it is hard to beat $O(m^3)$ flops per step.

The saving grace of course is that very few steps may be sufficient.

These figures improve dramatically if $A$ is tridiagonal:

All three algorithms only take $O(m)$ flops per step in this case.

Similarly, if $A$ is non-symmetric and we have to deal with Hessenberg matrices, the flop count increases to $O(m^2)$ for each algorithm.
