Robot SLAM: Build Maps and Track Location

Uploaded by niranjan_187

Simultaneous localization and mapping for Robot mapping

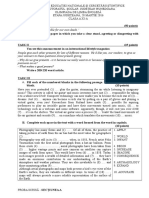

Simultaneous localization and mapping (SLAM) is a technique used by robots and autonomous vehicles to build a map of an unknown environment (without a priori knowledge), or to update a map of a known environment (with a priori knowledge from a given map), while at the same time keeping track of their current location.

Operational definition

Maps are used to determine a location within an environment and to depict an environment for planning and navigation. They support the assessment of the actual location by recording information obtained from a form of perception and comparing it to a current set of perceptions. The benefit of a map in aiding the assessment of a location increases as the precision and quality of the current perceptions decrease. Maps generally represent the state at the time the map was drawn; this is not necessarily consistent with the state of the environment at the time the map is used.

The complexity of the technical processes of locating and mapping under conditions of errors and noise does not allow for a coherent solution of both tasks. SLAM is a concept that binds these processes in a loop and therefore supports the continuity of both aspects in separated processes; iterative feedback from one process to the other enhances the results of both consecutive steps.

Mapping is the problem of integrating the information gathered by a set of sensors into a consistent model and depicting that information in a given representation. It can be described by the first characteristic question, "What does the world look like?" Central aspects of mapping are the representation of the environment and the interpretation of sensor data.

In contrast, localization is the problem of estimating the place (and pose) of the robot relative to a map; in other words, the robot has to answer the second characteristic question, "Where am I?" Typically, solutions comprise tracking, where the initial place of the robot is known, and global localization, in which no or only some a priori knowledge of the environmental characteristics of the starting position is given.
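The difference between tracking and global localization can be illustrated with a small histogram (discrete Bayes) filter. This is only a sketch under stated assumptions, not a method taken from the text: the one-dimensional cyclic corridor `world`, the sensor probabilities `p_hit` and `p_miss`, and the exact one-cell motion are all hypothetical choices made for illustration.

```python
def normalize(belief):
    """Scale a list of non-negative weights so that they sum to 1."""
    total = sum(belief)
    return [b / total for b in belief]


def sense(belief, world, observation, p_hit=0.8, p_miss=0.2):
    """Measurement update: weight each cell by how well it explains the observation."""
    weighted = [b * (p_hit if cell == observation else p_miss)
                for b, cell in zip(belief, world)]
    return normalize(weighted)


def move(belief, step):
    """Motion update on a cyclic corridor, assuming an exact shift of `step` cells."""
    n = len(belief)
    return [belief[(i - step) % n] for i in range(n)]


def localize(belief, world, observations):
    """Alternate measurement and motion updates (one cell forward per observation)."""
    for obs in observations:
        belief = sense(belief, world, obs)
        belief = move(belief, 1)
    return belief


# Hypothetical known map: what the sensor would report in each cell.
world = ["door", "wall", "door", "wall", "wall"]
observations = ["door", "wall", "wall"]

# Global localization: no prior knowledge, so every cell is equally likely.
global_belief = localize([1.0 / len(world)] * len(world), world, observations)

# Tracking: the starting cell (index 2) is known with certainty.
tracking_belief = localize([0.0, 0.0, 1.0, 0.0, 0.0], world, observations)

print(global_belief.index(max(global_belief)))      # most likely cell, global case
print(tracking_belief.index(max(tracking_belief)))  # most likely cell, tracking case
```

Both runs end with the same most likely cell, but the tracking belief stays concentrated on a single cell, while the global belief remains spread over several cells because the uniform prior has to be narrowed down by the observations alone.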

SLAM is therefore defined as the problem of building a model leading to a new map, or repetitively improving an existing map, while at the same time localizing the robot within that map. In practice, the answers to the two characteristic questions cannot be delivered independently of each other. Before a robot can contribute to answering the question of what the environment looks like, given a set of observations, it needs to know, for example, its own kinematics, the quality of its autonomous information acquisition, and the sources from which additional supporting observations have been made.
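As an illustration of what knowing "the robot's own kinematics" can mean, the following minimal sketch propagates a planar pose with a unicycle motion model. The model, the function name `step_pose`, and the command values are assumptions chosen for illustration; the text does not prescribe a particular kinematic model.

```python
import math


def step_pose(pose, v, omega, dt):
    """Advance (x, y, theta) by one time step of a unicycle model.

    v is the forward speed in m/s, omega the turn rate in rad/s.
    The position uses the heading at the start of the step (Euler integration).
    """
    x, y, theta = pose
    x += v * math.cos(theta) * dt
    y += v * math.sin(theta) * dt
    # Wrap the heading into [-pi, pi) so it stays comparable across steps.
    theta = (theta + omega * dt + math.pi) % (2 * math.pi) - math.pi
    return (x, y, theta)


# Hypothetical commands (v, omega, dt): drive 1 m, turn 90 degrees left, drive 1 m.
commands = [(1.0, 0.0, 1.0), (0.0, math.pi / 2, 1.0), (1.0, 0.0, 1.0)]

pose = (0.0, 0.0, 0.0)
for v, omega, dt in commands:
    pose = step_pose(pose, v, omega, dt)

print(pose)  # approximately (1.0, 1.0, pi/2)
```

Dead reckoning of this kind accumulates error on a real robot, which is one reason the pose estimate has to be corrected against observations of the map.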

It is a complex task to estimate the robot's current location without a map or without a directional reference.[1] Here, the location may be just the position of the robot or may also include its orientation.

Mapping

SLAM in the mobile robotics community generally refers to the process of creating geometrically consistent maps of the environment. Topological maps are a method of environment representation that captures the connectivity (i.e., topology) of the environment rather than creating a geometrically accurate map. As a result, algorithms that create topological maps are not referred to as SLAM.

SLAM is tailored to the available resources; it is aimed not at perfection but at operational compliance. The published approaches are employed in unmanned aerial vehicles, autonomous underwater vehicles, planetary rovers, newly emerging domestic robots and even inside the human body.
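To make the distinction between topological and geometric maps concrete, a topological map can be sketched as a plain adjacency structure that records only which places are connected. The place names and the `route` helper below are hypothetical and serve only as an illustration; note that the structure holds no coordinates or distances at all.

```python
from collections import deque

# Hypothetical topological map: each place lists the places directly reachable from it.
topological_map = {
    "hall": ["kitchen", "office"],
    "kitchen": ["hall"],
    "office": ["hall", "lab"],
    "lab": ["office"],
}


def route(graph, start, goal):
    """Breadth-first search for a route with the fewest hops; None if unreachable."""
    queue = deque([[start]])
    visited = {start}
    while queue:
        path = queue.popleft()
        if path[-1] == goal:
            return path
        for neighbour in graph[path[-1]]:
            if neighbour not in visited:
                visited.add(neighbour)
                queue.append(path + [neighbour])
    return None


print(route(topological_map, "kitchen", "lab"))
# ['kitchen', 'hall', 'office', 'lab']
```

Such a map is enough to plan a sequence of places to visit, but it cannot answer metric questions such as how far apart two rooms are, which is what a geometrically consistent map provides.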

It is generally considered that "solving" the SLAM problem has been one of the notable achievements of robotics research in the past decades. The related problems of data association and computational complexity are among those yet to be fully resolved.

A significant recent advance in the feature-based SLAM literature involved re-examining the probabilistic foundation of SLAM and posing the problem in terms of multi-object Bayesian filtering with random finite sets. Algorithms were developed based on Probability Hypothesis Density (PHD) filtering techniques that provide superior performance to leading feature-based SLAM algorithms in challenging measurement scenarios with high false alarm rates and high missed detection rates, without the need for data association.

Sensing

SLAM will always use several different types of sensors to acquire data with statistically independent errors. Statistical independence is the mandatory requirement to cope with metric bias and with noise in measurements. Such sensors may be one-dimensional (single beam) or 2D (sweeping) laser rangefinders, 3D flash LIDAR, 2D or 3D sonar sensors, and one or more 2D cameras. Recent approaches apply quasi-optical wireless ranging for multilateration (RTLS) or multi-angulation in conjunction with SLAM as a tribute to erratic wireless measurements. A special kind of SLAM for human pedestrians uses a shoe-mounted inertial measurement unit as the main sensor and relies on the fact that pedestrians are able to avoid walls. This approach, called FootSLAM, can be used to automatically build floor plans of buildings that can then be used by an indoor positioning system.

Locating

The results from sensing feed the algorithms for locating. According to propositions of geometry, any sensing must include at least one lateration and (n+1) determining equations for an n-dimensional problem. In addition, there must be some a priori knowledge about orienting the results versus absolute or relative systems of coordinates with rotation and mirroring.

Modeling

Contribution to mapping may work in 2D modeling and respective representation, or in 3D modeling and 2D projective representation. As a part of the model, the kinematics of the robot is included to improve estimates of sensing under conditions of inherent and ambient noise. The dynamic model balances the contributions from various sensors and various partial error models, and finally results in a sharp virtual depiction as a map with the location and heading of the robot as some cloud of probability. Mapping is the final depiction of such a model; the map is either that depiction or the abstract term for the model.
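One simple way to picture how a model can balance contributions from several sensors is inverse-variance weighting of measurements whose errors are statistically independent. The sketch below is an assumption made for illustration, not the method described in the text: it presumes unbiased measurements with known variances, and the `laser` and `sonar` readings are invented values.

```python
def fuse(estimates):
    """Fuse (value, variance) pairs with independent errors by inverse-variance weighting.

    Returns the fused value and its variance. Each measurement counts in
    proportion to 1 / variance, so more precise sensors dominate the result.
    """
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    value = sum(w * val for w, (val, _) in zip(weights, estimates)) / total
    return value, 1.0 / total


# Hypothetical range readings to the same wall: (metres, variance in m^2).
laser = (2.00, 0.01)
sonar = (2.30, 0.09)

value, variance = fuse([laser, sonar])
print(value, variance)  # about 2.03 m with variance about 0.009 m^2
```

The fused variance is smaller than that of either sensor alone, which holds only when the errors really are independent; correlated errors would make this estimate overconfident.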
