Gaussian PDF

Bayes's Decision Criterion

$$ P(A \mid B) = \frac{P(B \mid A)\,P(A)}{P(B)} $$

Each term in Bayes's theorem has a conventional name: P(A) is the prior probability or marginal probability of A. It is "prior" in the sense that it does not take into account any information about B. P(A|B) is the conditional probability of A, given B. It is also called the posterior probability because it is derived from or depends upon the specified value of B. P(B|A) is the conditional probability of B given A. P(B) is the prior or marginal probability of B, and acts as a normalizing constant.

$$ \text{posterior} = \frac{\text{likelihood} \times \text{prior}}{\text{normalizing constant}} $$

In words: the posterior probability is proportional to the prior probability times the likelihood. In addition, the ratio P(B|A)/P(B) is sometimes called the standardised likelihood, so the theorem may also be paraphrased as

$$ \text{posterior} = \text{standardised likelihood} \times \text{prior} $$
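As a quick numerical check of the theorem, the sketch below computes a posterior for a binary event A. The function name `posterior` and the probabilities used are illustrative assumptions, not values from the text; P(B) is obtained from the law of total probability.

```python
def posterior(prior_a: float, p_b_given_a: float, p_b_given_not_a: float) -> float:
    """Return P(A|B) for a binary event A using Bayes's theorem."""
    # Normalizing constant P(B), by total probability over A and not-A.
    p_b = p_b_given_a * prior_a + p_b_given_not_a * (1.0 - prior_a)
    # posterior = likelihood * prior / normalizing constant
    return p_b_given_a * prior_a / p_b


# Illustrative numbers only: P(A) = 0.3, P(B|A) = 0.8, P(B|not A) = 0.1.
p_a_given_b = posterior(0.3, 0.8, 0.1)
print(round(p_a_given_b, 4))  # 0.24 / 0.31, i.e. 0.7742
```

Observing B raises the probability of A from the prior 0.3 because the standardised likelihood P(B|A)/P(B) is greater than 1 for these numbers.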

1.0 Decision costs

There are two ways to lose information:

1. Cost $C_0$: information lost when a transmitted digital 1 is received as digital 0 (error).
2. Cost $C_1$: information lost when a transmitted digital 0 is received as digital 1 (error).

There is no cost when no information is lost, i.e., when correct decisions are made.

2.0 Expected conditional decision costs

The expected conditional cost, $C(0 \mid v)$, incurred when a detected voltage $v$ is interpreted as digital 0 is given by

$$ C(0 \mid v) = C_0\,P(1 \mid v) \tag{1} $$

where $C_0$ is the cost if the decision is in error when $v$ is interpreted as digital 0, and $P(1 \mid v)$ is the a posteriori probability that the decision is in error.

Similarly, by symmetry,

$$ C(1 \mid v) = C_1\,P(0 \mid v) \tag{2} $$

i.e., the expected conditional cost incurred when $v$ is interpreted as digital 1.

3.0 Optimum decision rule

Rational rule: interpret each detected voltage $v$ as either a 0 or a 1 so as to minimize the expected conditional cost, i.e.,

$$ C(1 \mid v) \underset{0}{\overset{1}{\lessgtr}} C(0 \mid v) \tag{3} $$

Interpretation of (3): if the upper inequality holds, decide binary 1; if the lower inequality holds, decide binary 0. Substituting (1) and (2) into (3) gives the following equation.

$$ \frac{P(0 \mid v)}{P(1 \mid v)} \underset{0}{\overset{1}{\lessgtr}} \frac{C_0}{C_1} \tag{4} $$

If the costs of both types of error are the same, then (4) represents a maximum a posteriori (MAP) probability decision criterion (one form of Bayes's decision criterion). Bayes's theorem:

$$ P(v, 0) = p(v)\,P(0 \mid v) = P(0)\,p(v \mid 0) \quad \text{and} \quad P(v, 1) = p(v)\,P(1 \mid v) = P(1)\,p(v \mid 1) $$

The following can be deduced:

$$ P(0 \mid v) = \frac{p(v \mid 0)\,P(0)}{p(v)} \tag{5} $$

and

$$ P(1 \mid v) = \frac{p(v \mid 1)\,P(1)}{p(v)} \tag{6} $$

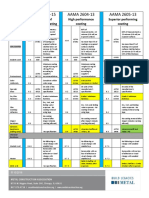

The figure illustrates the conditional probability density functions for the case of zero-mean Gaussian noise.
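Equations (5) and (6) can be evaluated numerically once the conditional pdfs are specified. The following is a minimal sketch under stated assumptions: binary 0 and 1 are sent as 0 volts and `A` volts, the noise is zero-mean Gaussian with rms value `sigma`, and the function names and numbers are illustrative rather than taken from the text.

```python
import math


def gaussian_pdf(v: float, mean: float, sigma: float) -> float:
    """Gaussian probability density with the given mean and rms value sigma."""
    return math.exp(-((v - mean) ** 2) / (2.0 * sigma ** 2)) / math.sqrt(
        2.0 * math.pi * sigma ** 2
    )


def posteriors(v: float, A: float, sigma: float, P0: float = 0.5, P1: float = 0.5):
    """Return (P(0|v), P(1|v)) from equations (5) and (6)."""
    p_v_given_0 = gaussian_pdf(v, 0.0, sigma)  # symbol 0 assumed sent as 0 volts
    p_v_given_1 = gaussian_pdf(v, A, sigma)    # symbol 1 assumed sent as A volts
    # p(v) by total probability; it normalizes the two posteriors.
    p_v = P0 * p_v_given_0 + P1 * p_v_given_1
    return P0 * p_v_given_0 / p_v, P1 * p_v_given_1 / p_v


# Illustrative values: A = 1 V, sigma = 0.3 V, detected voltage 0.4 V.
post0, post1 = posteriors(0.4, A=1.0, sigma=0.3)
print(post0 > post1)  # True: 0.4 V is closer to the level for symbol 0
```

With equal priors, the larger posterior belongs to the symbol whose voltage level is closer to the detected voltage.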

The following can be deduced from (5) and (6):

$$ \frac{P(0 \mid v)}{P(1 \mid v)} = \frac{p(v \mid 0)\,P(0)}{p(v \mid 1)\,P(1)} \tag{7} $$

Substituting (7) into (4) gives

$$ \frac{p(v \mid 0)\,P(0)}{p(v \mid 1)\,P(1)} \underset{0}{\overset{1}{\lessgtr}} \frac{C_0}{C_1} \tag{8} $$

or

$$ \frac{p(v \mid 0)}{p(v \mid 1)} \underset{0}{\overset{1}{\lessgtr}} \frac{C_0\,P(1)}{C_1\,P(0)} \tag{9} $$

The ratio $p(v \mid 0) / p(v \mid 1)$ is called the likelihood ratio, a function of $v$, and $C_0 P(1) / (C_1 P(0))$ is called the likelihood threshold $L_{th}$. If $C_0 = C_1$ and $P(0) = P(1)$ (or, more generally, $C_0 P(1) = C_1 P(0)$), then $L_{th} = 1$ and (9) is called the maximum likelihood decision criterion.

Relationship between the maximum likelihood, MAP and Bayes's decision criteria:

| Receiver | A priori probabilities known | Decision costs known | Assumptions | Decision criterion |
|---|---|---|---|---|
| Bayes | Yes | Yes | None | $\frac{p(v \mid 0)}{p(v \mid 1)} \underset{0}{\overset{1}{\lessgtr}} \frac{C_0 P(1)}{C_1 P(0)}$ |
| MAP | Yes | No | $C_0 = C_1$ | $\frac{p(v \mid 0)}{p(v \mid 1)} \underset{0}{\overset{1}{\lessgtr}} \frac{P(1)}{P(0)}$ |
| Max. likelihood | No | No | $C_0 P(1) = C_1 P(0)$ | $\frac{p(v \mid 0)}{p(v \mid 1)} \underset{0}{\overset{1}{\lessgtr}} 1$ |
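The three criteria differ only in the likelihood threshold, which the sketch below makes explicit. It assumes zero-mean Gaussian noise of rms value `sigma` and signalling levels of 0 volts and `A` volts; these parameters, the function names and the test voltages are illustrative assumptions. The comparison in (9) is done on logarithms, which leaves the decision unchanged and avoids dividing two very small pdf values.

```python
import math


def log_likelihood_ratio(v: float, A: float, sigma: float) -> float:
    """ln[p(v|0) / p(v|1)] for Gaussian pdfs centred on 0 and A volts."""
    # The common factor 1/sqrt(2*pi*sigma^2) cancels in the ratio.
    return (-(v ** 2) + (v - A) ** 2) / (2.0 * sigma ** 2)


def bayes_decide(v, A, sigma, P0=0.5, P1=0.5, C0=1.0, C1=1.0) -> int:
    """Apply criterion (9): decide 1 when the likelihood ratio is below L_th."""
    L_th = (C0 * P1) / (C1 * P0)
    return 1 if log_likelihood_ratio(v, A, sigma) < math.log(L_th) else 0


v, A, sigma = 0.6, 1.0, 0.3
# Maximum likelihood: defaults give L_th = 1.
print(bayes_decide(v, A, sigma))
# MAP: equal costs, unequal priors, so L_th = P(1)/P(0).
print(bayes_decide(v, A, sigma, P0=0.9, P1=0.1))
# Bayes: costs chosen here so that C0*P(1) = C1*P(0), giving L_th = 1 again.
print(bayes_decide(v, A, sigma, P0=0.9, P1=0.1, C0=9.0, C1=1.0))
```

For these assumed numbers the same detected voltage is read as 1 by the maximum likelihood rule, as 0 once the strong prior for symbol 0 is included, and as 1 again when missing a 1 is made nine times as costly.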

4.0 Optimum decision threshold voltage

Bayes's decision criterion sets the optimum reference (threshold voltage), $v_{th}$, in the receiver decision circuit. $v_{th}$ minimizes the expected conditional cost of each decision and satisfies:

$$ \frac{p(v_{th} \mid 0)}{p(v_{th} \mid 1)} = \frac{C_0\,P(1)}{C_1\,P(0)} = L_{th} \tag{10} $$

If $L_{th} = 1$ (for statistically independent, equiprobable symbols with equal error costs), then the voltage threshold occurs at the intersection of the two conditional pdfs.

Assume the voltage levels for binary 0 and 1 are 0 volts and $A$ volts, respectively. If binary 1 and 0 are equiprobable and the error costs are equal, the decision threshold is located exactly halfway between the voltage levels representing 1 and 0. If 0 is transmitted more often than 1, the threshold moves towards the voltage level representing 1.

Once the decision threshold has been established, the total probability of error can be calculated. If binary 0 (0 volts) is sent, the probability that it will be received as a 1 is the probability that the noise will exceed $+A/2$ volts (assuming $P_0 = P_1$), i.e.,

$$ P_{e0} = \int_{A/2}^{\infty} \frac{\exp(-v^2 / 2\sigma^2)}{\sqrt{2\pi\sigma^2}}\,dv \tag{11} $$

If a 1 is sent ($A$ volts), the probability that it will be received as a 0 is the probability that the noise voltage will lie between $-\infty$ and $-A/2$, i.e.,

$$ P_{e1} = \int_{-\infty}^{-A/2} \frac{\exp(-v^2 / 2\sigma^2)}{\sqrt{2\pi\sigma^2}}\,dv \tag{11} $$

These types of error are mutually exclusive, since sending a 0 precludes sending a 1. Symmetry of the Gaussian function results in $P_{e0} = P_{e1}$, and the total probability of error is $P_0 P_{e0} + P_1 P_{e1}$. This can be reduced to

$$ P_e = P_{e1}\,(P_0 + P_1) = P_{e1} $$

i.e.,

$$ P_e = \int_{-\infty}^{-A/2} \frac{\exp(-v^2 / 2\sigma^2)}{\sqrt{2\pi\sigma^2}}\,dv \tag{12} $$

This equation can be written in terms of two integrals

$$ P_e = \int_{-\infty}^{0} \frac{\exp(-v^2 / 2\sigma^2)}{\sqrt{2\pi\sigma^2}}\,dv - \int_{-A/2}^{0} \frac{\exp(-v^2 / 2\sigma^2)}{\sqrt{2\pi\sigma^2}}\,dv \tag{13} $$

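Using the fact that the Gaussian distribution is symmetrical about its mean value, the first integral in (13) equals $1/2$ and the second equals the integral from $0$ to $A/2$, so equation (13) reduces to

$$ P_e = \frac{1}{2} - \int_{0}^{A/2} \frac{\exp(-v^2 / 2\sigma^2)}{\sqrt{2\pi\sigma^2}}\,dv $$

Substituting $y = v / \sqrt{2\sigma^2}$, the equation reduces to

$$ P_e = \frac{1}{2} - \frac{1}{\sqrt{\pi}} \int_{0}^{A / (2\sqrt{2}\,\sigma)} \exp(-y^2)\,dy $$

i.e.,

$$ P_e = \frac{1}{2} \left\{ 1 - \operatorname{erf}\!\left[ \frac{A}{2\sqrt{2}\,\sigma} \right] \right\} \tag{14} $$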

The error probability therefore depends solely on the ratio of the peak pulse voltage $A$ to the rms noise voltage $\sigma$.
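Equation (14) can be checked numerically, and the same sketch can show how the threshold and error probability change when the symbols are not equiprobable. For Gaussian pdfs centred on 0 and $A$ volts, condition (10) can be solved to give $v_{th} = A/2 - (\sigma^2 / A) \ln L_{th}$; this closed form is a derivation added here, not a formula from the text. The function names and numerical values are illustrative assumptions.

```python
import math


def threshold_voltage(A, sigma, P0=0.5, P1=0.5, C0=1.0, C1=1.0) -> float:
    """Solve (10) for v_th, assuming Gaussian pdfs centred on 0 and A volts."""
    L_th = (C0 * P1) / (C1 * P0)
    return A / 2.0 - (sigma ** 2 / A) * math.log(L_th)


def upper_tail(x: float) -> float:
    """Probability that a zero-mean, unit-variance Gaussian exceeds x."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))


def error_probability(A, sigma, P0=0.5, P1=0.5, v_th=None) -> float:
    """Total error probability P0*Pe0 + P1*Pe1 for a given threshold."""
    if v_th is None:
        v_th = A / 2.0  # midpoint threshold, as assumed for (11) to (14)
    Pe0 = upper_tail(v_th / sigma)        # 0 sent, noise lifts v above v_th
    Pe1 = upper_tail((A - v_th) / sigma)  # 1 sent, noise drops v below v_th
    return P0 * Pe0 + P1 * Pe1


def error_probability_eq14(A, sigma) -> float:
    """Equation (14): equiprobable symbols, threshold at A/2."""
    return 0.5 * (1.0 - math.erf(A / (2.0 * math.sqrt(2.0) * sigma)))


A, sigma = 1.0, 0.25
print(error_probability(A, sigma), error_probability_eq14(A, sigma))

# Unequal priors: compare the midpoint threshold with the shifted threshold.
v_opt = threshold_voltage(A, sigma, P0=0.9, P1=0.1)
print(v_opt, error_probability(A, sigma, 0.9, 0.1),
      error_probability(A, sigma, 0.9, 0.1, v_th=v_opt))
```

With equal priors the two functions agree, and doubling both `A` and `sigma` leaves the result unchanged, in line with the dependence on the ratio of $A$ to $\sigma$ alone. With symbol 0 more likely, the computed threshold lies above $A/2$ and gives a lower total error probability than the midpoint threshold.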
