Syscall Proxying - Simulating Remote Execution

Maximiliano Caceres <maximiliano.caceres@corest.com>

Copyright 2002 CORE SECURITY TECHNOLOGIES

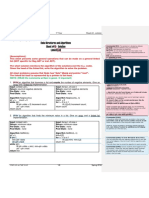

Table of Contents

- Abstract
- General concepts
  - The UNIX way
  - The Windows way
- Syscall Proxying
- A first glimpse into an implementation
- Optimizing for size
  - Fat client, thin server
  - A sample implementation for Linux
  - What about Windows?
- The real world: applications
  - The Privilege Escalation phase
  - Redefining the word "shellcode"
- Conclusions
- Acknowledgements

Abstract

A critical stage in a typical penetration test is the "Privilege Escalation" phase. An auditor faces this stage when access to an intermediate host or application in the target system is gained by means of a previous successful attack. Access to this intermediate target allows for staging more effective attacks against the system by taking advantage of existing webs of trust and a more privileged position in the target system's network. This "attacker profile" switch is referred to as pivoting throughout this document. Pivoting on a compromised host can often be an onerous task, sometimes involving porting tools or exploits to a different platform and deploying them. This includes installing required libraries and packages and sometimes even a C compiler in the target system!

Syscall Proxying is a technique aimed at simplifying the privilege escalation phase. By providing a direct interface into the target's operating system, it allows attack code and tools to be automagically in control of remote resources. Syscall Proxying transparently "proxies" a process's system calls to a remote server, effectively simulating remote execution. Basic knowledge of exploit coding techniques as well as assembler programming is required.

General concepts

A typical software process interacts, at some point in its execution, with certain resources: a file on disk, the screen, a networking card, a printer, etc. Processes can access these resources through system calls (syscalls for short). These syscalls are operating system services, usually identified with the lowest layer of communication between a user mode process and the OS kernel. The strace tool available in Linux systems [1] "...intercepts and records the system calls which are called by a process...". From man strace:

"Students, hackers and the overly-curious will find that a great deal can be learned about a system and its system calls by tracing even ordinary programs. And programmers will find that since system calls and signals are events that happen at the user/kernel interface, a close examination of this boundary is very useful for bug isolation, sanity checking and attempting to capture race conditions."

For example, this is an excerpt from running strace uname -a in a Linux system (some lines were removed for clarity):

    [max@m21 max]$ strace uname -a
    execve("/bin/uname", ["uname", "-a"], [/* 24 vars */]) = 0
    (...)
    uname({sys="Linux", node="m21.corelabs.core-sdi.com", ...}) = 0
    fstat64(1, {st_mode=S_IFCHR|0620, st_rdev=makedev(136, 2), ...}) = 0
    mmap2(NULL, 4096, PROT_READ|PROT_WRITE, MAP_PRIVATE|MAP_ANONYMOUS, -1, 0) = 0x4018e000
    write(1, "Linux m21.corelabs.core-sdi.com "..., 85) = 85
    munmap(0x4018e000, 4096) = 0
    _exit(0) = ?
    Linux m21.corelabs.core-sdi.com 2.4.7-10 #1 Thu Sep 6 17:27:27 EDT 2001 i686 unknown
    [max@m21 max]$

The strace process forks and calls the execve syscall to execute the uname program.

[1] Similar tools are ktrace in BSD systems and truss in Solaris. There's a strace-like tool for Windows NT, available at http://razor.bindview.com/tools/desc/strace_readme.html.

The uname program calls the syscall of the same name (see man 2 uname) to obtain system information. The information is returned inside a struct provided by the caller.

The write syscall is called to print the returned information to file descriptor 1, stdout.

_exit signals the kernel that the process finished with status 0.

Different operating systems implement syscall services differently, sometimes depending on the processor's architecture. Looking at a reasonable set of "mainstream" operating systems and architectures (i386 Linux, i386 *BSD, i386/SPARC Solaris and i386 Windows NT/2000), two very distinct groups arise: UNIX and Windows.
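The uname / write sequence seen in the trace can be reproduced from a high-level language, since the standard library wrappers map almost directly onto the syscalls. The following is only a sketch in Python (not the language used in this paper's examples): `describe_system` is a hypothetical helper, and a pipe stands in for stdout so that the result can be inspected. It assumes a UNIX-like system where `os.uname` is available.

```python
import os


def describe_system() -> bytes:
    """Build a uname-style line from the fields returned by the uname syscall."""
    info = os.uname()  # thin wrapper over uname(2)
    fields = (info.sysname, info.nodename, info.release, info.version, info.machine)
    return " ".join(fields).encode() + b"\n"


# A pipe stands in for file descriptor 1 so the written bytes can be read back.
read_end, write_end = os.pipe()
line = describe_system()
written = os.write(write_end, line)     # write(2): returns the number of bytes written
os.close(write_end)
echoed = os.read(read_end, len(line))   # read(2) on the other end of the pipe
os.close(read_end)
```

Running this script under strace should show the same uname and write calls as the excerpt above, surrounded by the interpreter's own startup activity.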

The UNIX way

UNIX systems use a generic and homogeneous mechanism for calling system services, usually in the form of a "trap", a "software interrupt" or a "far call". Syscalls are classified by number, and arguments are passed through the stack, registers or a mix of both. The number of system services is usually kept to a minimum (about 270 syscalls can be seen in OpenBSD 2.9), as more complex functionality is provided by higher-level user functions in the libc library. Usually there's a direct mapping between syscalls and the related libc functions.
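The idea of a single entry point that selects a service by number can be modeled in a few lines. This is a user-space simulation written in Python, not a real trap: `trap` and `SYSCALL_TABLE` are hypothetical names, and the table simply maps the i386 Linux syscall numbers for a handful of calls onto the corresponding Python wrappers.

```python
import errno
import os

# Syscall numbers as used by i386 Linux for a few well-known calls.
NR_READ, NR_WRITE, NR_OPEN, NR_CLOSE, NR_GETPID = 3, 4, 5, 6, 20

# Simulated dispatch table: number -> service routine.
SYSCALL_TABLE = {
    NR_READ: os.read,
    NR_WRITE: os.write,
    NR_OPEN: os.open,
    NR_CLOSE: os.close,
    NR_GETPID: os.getpid,
}


def trap(number, *args):
    """Single generic entry point: look up the service by number and call it."""
    handler = SYSCALL_TABLE.get(number)
    if handler is None:
        raise OSError(errno.ENOSYS, "unknown syscall number")
    return handler(*args)
```

With this in place, `trap(NR_GETPID)` returns the same value as `os.getpid()`, and every other service is reached through the same call with a different number. That uniformity is what the rest of the paper takes advantage of.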

The Windows way

The native API is little mentioned in the Windows NT documentation, so there is no well-established convention for referring to it. "native API" and "native system services" are both appropriate names, the word "native" serving to distinguish the API from the Win32 API, which is the interface to the operating system used by most Windows applications. It is commonly assumed that the native API is not documented in its entirety by Microsoft because they wish to maintain the flexibility to modify the interface in new versions of the operating system without being bound to ensure backwards compatibility. To document the complete interface would be tantamount to making an open-ended commitment to support what may come to be perceived as obsolete functionality. Indeed, perhaps to demonstrate that this interface should not be used by production applications, Windows 2000 has removed some of the native API routines that were present in Windows NT 4.0 and has changed some data structures in ways that are incompatible with the expectations of Windows NT 4.0 programs. (...) The routines that comprise the native API can usually be recognized by their names, which come in two forms: NtXxx and ZwXxx. For user mode programs, the two forms of the name are equivalent and are just two different symbols for the same entry point in ntdll.dll.

Gary Nebbett, Windows NT/2000 Native API Reference

Due to this "lack of definition" for its system services, the high number of functions in this category (more than 1000), and the fact that the bulk of functionality for some of them is part of large user mode dynamic libraries, we'll use "Windows syscalls" to refer to any function in any dynamic library available to a user mode process. For simplicity's sake, this definition includes higher level functions than those defined in ntdll.dll, sometimes very far above the user / kernel limit.

Syscall Proxying

From the point of view of a process, the resources it has access to, and the kind of access it has to them, define the "context" in which it is executed. For example, a process that reads data from a file might do so using the open, read and close syscalls (see Figure 1).

Figure 1. A process reading data from a file

Syscall proxying inserts two additional layers between the process and the underlying operating system. These layers are the syscall stub or syscall client layer and the syscall server layer.

The syscall client layer acts as the nexus between the running process and the underlying system services. This layer is responsible for marshaling each syscall's arguments and generating a proper request that the syscall server can understand. It is also responsible for sending this request to the syscall server and returning the results to the calling process.

The syscall server layer receives requests from the syscall client to execute specific syscalls using the underlying operating system services. This layer unmarshals the syscall arguments from the request in a way that the underlying OS can understand and calls the specific service. After the syscall finishes, its results are marshaled and sent back to the client.

For example, the same reader process from Figure 1 with these two additional layers would look like Figure 2.

Figure 2. A process reading data from a file with the new layers

Now, if these two layers, the syscall client layer and the syscall server layer, are separated by a network link, the process would be reading from a file on a remote system, and it will never know the difference (see Figure 3).

Figure 3. Syscall Proxying in action

Separating the syscall client from the syscall server effectively changes the "context" in which the process is executing. In fact, the process "seems" to be executing "remotely" at the host where the syscall server is running (and we'll see later that it executes with the privileges of the remote process running the syscall server). It is important to note that the original file reader program was not modified to accomplish remote execution (besides changing the calls to the operating system's syscalls into calls to the syscall client), nor did its inner logic change (for example, if the program counted occurrences of the word coconut in the file, it will still count the same way when working with the remote server). This technique is referred to as Syscall Proxying throughout this document.
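The two layers can be sketched in a few lines of Python to make the point about the unchanged reader concrete. Everything here is illustrative: `SyscallClient`, `SyscallServer` and `count_coconuts` are hypothetical names, the "wire format" is just the textual representation of a tuple, and the transport is a direct function call standing in for the network link.

```python
import ast
import os
import tempfile

ALLOWED = {"open", "read", "close"}  # the only calls this toy server will proxy


class SyscallServer:
    """Server layer: unmarshal the request, run the call locally, marshal the result."""

    def handle(self, request: bytes) -> bytes:
        name, args = ast.literal_eval(request.decode("utf-8"))
        if name not in ALLOWED:
            raise ValueError(f"unsupported call: {name}")
        result = getattr(os, name)(*args)
        return repr(result).encode("utf-8")


class SyscallClient:
    """Client layer: same open/read/close surface as the os module, but proxied."""

    def __init__(self, transport):
        self._transport = transport  # callable: request bytes -> response bytes

    def _call(self, name, *args):
        request = repr((name, args)).encode("utf-8")
        return ast.literal_eval(self._transport(request).decode("utf-8"))

    def open(self, path, flags):
        return self._call("open", path, flags)

    def read(self, fd, count):
        return self._call("read", fd, count)

    def close(self, fd):
        return self._call("close", fd)


def count_coconuts(system, path):
    """The reader's inner logic; it only sees open/read/close on `system`."""
    fd = system.open(path, os.O_RDONLY)
    try:
        data = b""
        while True:
            chunk = system.read(fd, 4096)
            if not chunk:
                break
            data += chunk
    finally:
        system.close(fd)
    return data.count(b"coconut")


with tempfile.NamedTemporaryFile(delete=False) as handle:
    handle.write(b"coconut palm, coconut milk, cocoa")
    sample_path = handle.name

local_count = count_coconuts(os, sample_path)
proxied_count = count_coconuts(SyscallClient(SyscallServer().handle), sample_path)
os.unlink(sample_path)
```

The reader function is called twice with the same code: once against the local `os` module and once against the client layer. Replacing the direct call to `handle` with a socket would move the server to another host without touching `count_coconuts`.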

A first glimpse into an implementation

When looking at Figure 3 three letters instantly come to my mind: RPC!

Syscall Proxying can be trivially implemented using an RPC model. Each call to an operating system syscall is replaced by the corresponding client stub. Accordingly, a server that "exports" through the RPC mechanism all these syscalls in the remote system is needed (see Figure 4).

Figure 4. The RPC model

There are several RPC implementations that can be used, among them:

- Open Network Computing (ONC) RPC (also known as SunRPC)
- Distributed Computing Environment (DCE) RPC
- RPC specification from the International Organization for Standardization (ISO)
- Custom implementation

Check Comparing Remote Procedure Calls [http://hissa.nist.gov/rbac/5277/titlerpc.html] for a comparison of the first three implementations.

In the RPC model explained above a lot of effort, symmetrically duplicated between the client and the server, is devoted both to converting back and forth from a common data representation format and to communicating through different calling conventions. These conversions make communication possible between a client and a server implemented on different platforms. Also, the RPC model attempts to attain generality, making it possible to perform ANY procedure call across a network. If we can move all this logic to the client layer (making the client tightly coupled with the specific server's platform) we can drastically reduce the server's size, taking advantage of a generic mechanism for calling any syscall (this mechanism is "generic" when talking about a single platform).

Optimizing for size

Let's say that we have a hunch that there should be some added value in optimizing the syscall server's size in memory. Also, for simplicity's sake, we'll move into the nice and warm UNIX / i386 world for a couple of lines. If we look carefully at the syscalls we are "proxying" around, they all have a lot in common:

- Homogeneous way of passing arguments. Syscall arguments are passed through the stack, the registers or a mix of both. Also, these arguments fall into one of these categories:
  - integers
  - pointers to integers
  - pointers to buffers
  - pointers to structs
- Simple calling mechanism. A register is set with the number of the syscall we'd like to invoke and a generic trap / software interrupt / far call is executed.

Fat client, thin server

In order to accomplish the goal of reducing the syscall server's size, we'll make client code do all the work of marshaling each call's arguments directly into the format needed by the server's platform. In fact, the client request will be an image of the server's stack, just before handling the request. In this way, clients will be completely dependent on the server's platform, but the syscall server will just implement communication and a generic way of passing the already prepared arguments to the specific syscall (see Figure 5).

Figure 5. Fat client, thin server

Packing arguments in the client is trivial for integer parameters, but not so for pointers. There's no relationship at all between the client and the server processes' memory spaces. Addresses from the client probably don't make sense in the server and vice versa.

To address this issue the server, on accepting a new connection from the client, sends back its ESP (the stack pointer). The client then creates extra buffer space in the request, and "relocates" each pointer argument to point somewhere inside the request. Knowing that the server reads requests straight into its stack, the client calculates the correct value for each pointer, as it will be in the server process's stack (this is why we got the server's ESP in the first place). See Figure 6 for a sample of how open() arguments are marshaled.

Figure 6. Marshaling arguments for the open() syscall
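The pointer relocation described above can be sketched on the client side as follows. This is an illustration under stated assumptions, not the layout from Figure 6: we assume a little-endian 32-bit server, a request that starts with six register values (EAX, EBX, ECX, EDX, ESI, EDI) followed by buffer space, and a server that places the request so that it ends exactly at the ESP value it reported. `marshal_open` and `HEADER` are hypothetical names.

```python
import struct

NR_OPEN = 5                     # i386 Linux syscall number for open()
HEADER = struct.Struct("<6I")   # eax, ebx, ecx, edx, esi, edi (assumed request header)


def marshal_open(path: bytes, flags: int, mode: int, server_esp: int) -> bytes:
    """Build a request for open(path, flags, mode) as an image of the server's stack."""
    buf = path + b"\0"                      # C string, NUL-terminated
    buf += b"\0" * (-len(buf) % 4)          # keep the request 4-byte aligned
    total = HEADER.size + len(buf)
    base = server_esp - total               # assumed address of the request's first byte
    path_ptr = base + HEADER.size           # relocated pointer: valid only in the server
    return HEADER.pack(NR_OPEN, path_ptr, flags, mode, 0, 0) + buf


# Example values: the ESP is made up, and 0 is O_RDONLY on Linux.
SERVER_ESP = 0xBFFFF000
request = marshal_open(b"coconuts", 0, 0, SERVER_ESP)
```

For this example the request is 36 bytes long (24 bytes of registers plus the padded string), so the relocated pointer in EBX is `SERVER_ESP - 12`. The integer arguments travel unchanged; only the pointer needed knowledge of the server's address space.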

A sample implementation for Linux

To invoke a given syscall in an i386 Linux system a program has to:

1. Load the EAX register with the desired syscall number.
2. Load the syscall's arguments in the EBX, ECX, EDX, ESI, EDI registers [2].
3. Call software interrupt 0x80 (int $0x80).
4. Check the returned value in the EAX register.

Take a look at Example 1 to see how the open() syscall in a Linux system looks when examined through gdb.

Example 1. Debugging open() in i386 Linux

    [max@m21 max]$ gdb ex
    GNU gdb Red Hat Linux 7.x (5.0rh-15) (MI_OUT)
    Copyright 2001 Free Software Foundation, Inc.
    GDB is free software, covered by the GNU General Public License, and you are
    welcome to change it and/or distribute copies of it under certain conditions.
    Type "show copying" to see the conditions.
    There is absolutely no warranty for GDB. Type "show warranty" for details.
    This GDB was configured as "i386-redhat-linux"...
    (gdb) break open
    Breakpoint 1 at 0x8050f60
    (gdb) r
    Starting program: /usr/home/max/ex
    Breakpoint 1, 0x08050f60 in __libc_open ()
    (gdb) l
    1       #include <sys/types.h>
    2       #include <fcntl.h>
    3
    4       int main()
    5       {
    6           int fd;
    7
    8           fd = open("coconuts", O_RDONLY);
    9
    10          if(fd<0)
    (gdb) x/20i $eip
    0x8050f60 <__libc_open>:        push   %ebx
    0x8050f61 <__libc_open+1>:      mov    0x10(%esp,1),%edx
    0x8050f65 <__libc_open+5>:      mov    0xc(%esp,1),%ecx
    0x8050f69 <__libc_open+9>:      mov    0x8(%esp,1),%ebx
    0x8050f6d <__libc_open+13>:     mov    $0x5,%eax
    0x8050f72 <__libc_open+18>:     int    $0x80
    0x8050f74 <__libc_open+20>:     pop    %ebx
    0x8050f75 <__libc_open+21>:     cmp    $0xfffff001,%eax
    0x8050f7a <__libc_open+26>:     jae    0x8056f50 <__syscall_error>
    0x8050f80 <__libc_open+32>:     ret

[2] With some exceptions like the socket functions, where some arguments are passed through the stack.

- The breakpoint stops execution inside libc's wrapper for the open() syscall.
- Arguments passed through the stack to __libc_open are copied into the respective registers.
- EAX is loaded with 5, the syscall number for open().
- The software interrupt for system services is triggered.

So, given that a generic and simple mechanism for calling any syscall is in place, and using the architecture and argument marshaling techniques explained in the section called Fat client, thin server, we can code a simple server that looks like Example 2.

Example 2. Pseudocode for a simple Linux server

    channel = set_up_communication()
    channel.send(ESP)
    while channel.has_data() do
        request = channel.read()
        copy request in stack
        pop registers
        int 0x80
        push eax
        channel.send(stack)

- Sets up the communication channel between the client and the server. To keep things simple, we'll assume this is a socket.
- Copies the complete request to the server's stack. Remember what the request looks like (see Figure 6).
- The request block is sent back to the client. This is done to return any OUT [3] pointer arguments back to the client application. This might be redundant in some cases, but since our intention is to keep the server simple, it won't handle these cases differently.

An excerpt from a simple implementation of a syscall server implementing this behavior can be seen in Example 3. This excerpt covers only the main read request / process / write response loop.

[3] Arguments used to return information from the syscall, as in read().

Example 3. A simple syscall server snippet for i386 Linux

            push    %ebx                    # fd
    read_request:
            mov     %ebp,%esp
            push    %esp
            xor     %eax,%eax
            movl    $4,%edx                 # count
    read_request2:
            mov     $3,%al                  # __NR_read
            mov     %esp,%ecx               # buff
            int     $0x80                   # no error checking!
            add     %eax,%ecx               # new buff
            sub     %eax,%edx               # byte to read
            jnz     read_request2           # do while not zero
            pop     %edx                    # get number of bytes in packet
            sub     %edx,%esp               # save space for incoming data
    read_request3:
            movl    $3,%eax                 # __NR_read
            mov     %esp,%ecx               # buff
            int     $0x80                   # no error checking
            add     %eax,%ecx               # new buff
            sub     %eax,%edx               # byte to read
            jnz     read_request3           # do while not zero
    do_request:
            pop     %eax
            pop     %ebx
            pop     %ecx
            pop     %edx
            pop     %esi
            pop     %edi
            int     $0x80
            push    %edi
            push    %esi
            push    %edx
            push    %ecx
            push    %ebx
            push    %eax
    do_send_answer:
            mov     $4,%eax                 # __NR_write
            mov     (%ebp),%ebx             # fd
            mov     %esp,%ecx               # buff
            mov     %ebp,%edx
            sub     %esp,%edx               # count
            int     $0x80                   # no error checking!
            jmp     read_request
            pop     %eax
            pop     %ebp

- Read request straight into ESP.
- Pop registers from the top of the request (the request is in the stack).
- Invoke the syscall. EAX already holds the syscall number (it was part of the request).
- Send back the request buffer as response, since buffers might contain data (for example if calling read()).

In this way, we are able to code a simple yet very powerful syscall server in a few bytes. The complete sample implementation of the server described above, with absolutely no effort made to optimize the code, is about a hundred bytes long.
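One iteration of the server loop, and the reason the whole request block is sent back, can be followed in a user-space simulation. This is a Python model, not the shellcode: a bytearray plays the role of the stack region holding the request, `base` is the assumed address at which the request starts in the server, and only read() is modeled as a stand-in for the generic int $0x80. The names `serve_one` and `REGS` are hypothetical.

```python
import errno
import os
import struct

REGS = struct.Struct("<6I")   # eax, ebx, ecx, edx, esi, edi (assumed request header)
NR_READ = 3                   # i386 Linux syscall number for read()


def serve_one(request: bytes, base: int) -> bytes:
    """Simulate one pass of the loop for a request placed at address `base`."""
    stack = bytearray(request)                                  # "copy request in stack"
    eax, ebx, ecx, edx, esi, edi = REGS.unpack_from(stack, 0)   # "pop registers"
    offset = ecx - base                    # turn the relocated pointer into an index
    if eax != NR_READ:
        eax = -errno.ENOSYS & 0xFFFFFFFF   # this model only knows read()
    elif offset < REGS.size or offset + edx > len(stack):
        eax = -errno.EFAULT & 0xFFFFFFFF   # pointer falls outside the request buffer
    else:
        data = os.read(ebx, edx)           # stands in for "int 0x80"
        stack[offset:offset + len(data)] = data
        eax = len(data)
    REGS.pack_into(stack, 0, eax, ebx, ecx, edx, esi, edi)      # "push eax"
    return bytes(stack)                                         # "channel.send(stack)"


# Client side: ask for read(fd, buf, 16) with 16 bytes of buffer space after the header.
BASE = 0xBFFF0000
read_fd, write_fd = os.pipe()
os.write(write_fd, b"coconut")
request = REGS.pack(NR_READ, read_fd, BASE + REGS.size, 16, 0, 0) + bytes(16)
response = serve_one(request, BASE)
os.close(read_fd)
os.close(write_fd)

returned = REGS.unpack_from(response, 0)[0]
payload = response[REGS.size:REGS.size + returned]
```

The response has the same size as the request. The return value comes back in the EAX slot, and the bytes that read() produced come back in the buffer area the client reserved, which is how OUT pointer arguments reach the client application.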

What about Windows?

Yeah! What about it? Ah, yes! Windows is a whole different thing. As explained in the section called The Windows way:

"...we'll use "Windows syscalls" to refer to any function in any dynamic library available to a user mode process."

Even though the boundaries for syscalls or the native API are not clearly defined, there is a simple and common mechanism for loading any DLL and calling any function in it. In the same spirit as the Linux server, the Windows one will also be based on the techniques explained in the section called Fat client, thin server. There's one subtle difference: in the UNIX world we used an integer to identify which syscall to invoke; in the Windows world there's no such thing (at least not with our definition of Windows syscall). So, our server will provide functionality for calling any function in its process address space.

What does this mean? Initially, that the server can only call functions that are part of whatever DLLs are already loaded by the process. But wait, two special functions are already part of the server's address space: LoadLibrary and GetProcAddress.

"The LoadLibrary function maps the specified executable module into the address space of the calling process. (...) The GetProcAddress function retrieves the address of an exported function or variable from the specified dynamic-link library (DLL)."

Windows Platform SDK: DLLs, Processes, and Threads

Using these two functions along with the capability of calling any function in the server's address space, we can fulfill the initial goal of "calling any function on any DLL" (see Example 4).

Example 4. Pseudocode for a simple Windows server

    channel = set_up_communication()
    channel.send(ESP)
    channel.send(address of LoadLibrary)
    channel.send(address of GetProcAddress)
    while channel.has_data() do
        request = channel.read()
        copy request in stack
        pop ebx
        call [ebx]
        push eax
        channel.send(stack)

- The addresses of LoadLibrary and GetProcAddress in the server's address space are passed back to the syscall client upon initialization. The client can then use the generic "call any address" mechanism implemented by the server's main loop to call these functions and load new DLLs in memory.
- The client passes the exact address of the function it wants to call inside the request. The stack is already prepared for the call (as in Figure 6).

In this way, an equivalent server can be coded on the Windows platform. Argument marshaling on the client side is not so trivial, since we are providing a method for calling ANY function. A generic method for "packing" any kind of arguments (integers, pointers, structures, pointers to pointers to structures with pointers, etc.) is necessary.
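The "load a library, resolve a name, call the address" pattern can be tried locally with Python's ctypes module. The sketch below is an analogy rather than the Windows server: it assumes a POSIX system, where `ctypes.CDLL` and attribute lookup go through dlopen and dlsym and play the roles that LoadLibrary and GetProcAddress play on Windows. The helper names `load_library`, `get_proc_address` and `call_any` are hypothetical.

```python
import ctypes


def load_library(name=None):
    """dlopen()-based stand-in for LoadLibrary; None selects the running process image."""
    return ctypes.CDLL(name)


def get_proc_address(library, symbol):
    """dlsym()-based stand-in for GetProcAddress; AttributeError if the symbol is missing."""
    return getattr(library, symbol)


def call_any(library_name, symbol, *args):
    """Generic 'call any function in any library' built from the two primitives."""
    function = get_proc_address(load_library(library_name), symbol)
    return function(*args)


# Two libc functions that are already mapped into the interpreter's address space.
absolute = call_any(None, "abs", -5)
length = call_any(None, "strlen", b"coconut")
```

Here ctypes converts the Python arguments for us. In the thin server that packing work falls entirely on the client, which is why a generic argument marshaling method is needed there.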

The real world: applications

Syscall Proxying could be used for any task related to remote execution, or to the distribution of tasks among different systems (all goals in common with RPC). The fact is that, for generic remote execution uses, Syscall Proxying (implemented as a non-RPC server) lags behind RPC's efficiency and interoperability. But there's one use in which Syscall Proxying's "Fat client, thin server" architecture wins: exploiting code injection vulnerabilities.

Code execution vulnerabilities are those that allow an attacker to execute arbitrary code in the target system. Typical incarnations of code injection vulnerabilities are buffer overflows and user-supplied format strings. Attacks for these vulnerabilities usually come in two parts [4]:

- Injection Vector (deployment). The portion of the attack directed at exploiting the specific vulnerability and obtaining control of the instruction pointer (EIP / PC registers).
- Payload (deployed). What to execute once we are in control. Not related to the bug at all.

A common piece of code used as attack payload is the "shellcode":

"Shell Code: So now that we know that we can modify the return address and the flow of execution, what program do we want to execute? In most cases we'll simply want the program to spawn a shell. From the shell we can then issue other commands as we wish. But what if there is no such code in the program we are trying to exploit? How can we place arbitrary instruction into its address space? The answer is to place the code with are trying to execute in the buffer we are overflowing, and overwrite the return address so it points back into the buffer."

Aleph One, Smashing The Stack For Fun And Profit

Shell code allows the attacker to have interactive control of the target system after a successful attack.

The Privilege Escalation phase

The "Privilege Escalation" phase is a critical stage in a typical penetration test. An auditor faces this stage when access to a target host has been obtained, usually by successfully executing an attack. Once a single host's security has been compromised, either the auditor's goals have been accomplished or they have not. Access to this intermediate target allows for staging more effective attacks against the system by taking advantage of existing webs of trust between hosts and a more privileged position in the target system's network. When the auditor uses this "vantage point" inside the target's network, he has effectively changed his "attacker profile" from an external attacker to an attacker with access to an internal system. To successfully "pivot", the auditor needs to be able to use his tools (for info gathering, attack, etc.) at the compromised host. This usually involves porting the tools or exploits to a different platform and deploying them on the host, sometimes including the installation of required libraries and packages and even a C compiler. These tasks can be accomplished using a remote shell on the compromised system, although they are far from following a formal process, and sometimes require a lot of effort on the auditor's side.

[4] Taken from Greg Hoglund's Advanced Buffer Overflow Techniques, Black Hat Briefings USA 2000.

Redefining the word "shellcode"

Let's suppose that all our penetration testing tools are developed with Syscall Proxying in mind. They all use a syscall stub for communicating with the operating system. Now, if instead of supplying code that spawns a shell as the attack payload we use a "thin syscall server", we can see the following advantages:

- Transparent pivoting. Whenever an attack is executed successfully, a syscall server is deployed in the remote host. All of the available tools can now be used in the auditor's host but "proxying" syscalls on the newly compromised one. These tools seem to be executing on the remote system.
- "Local" privilege escalation. Attacks that obtain remote access with constraints, such as vulnerabilities in services inside chroot jails or in services that lower the user privileges, can now be further explored. Once a syscall server is in place, local exploits / tools can be used to leverage access to higher privileges in the host.
- No shell? Who cares! In certain scenarios, even though a certain system is vulnerable, it is not possible to execute a shell (picture a chrooted service with no root privileges and no local vulnerabilities). In this situation, deploying a syscall server still allows the auditor to "proxy" attacks against other systems accessible from the originally compromised system.

Conclusions

Syscall Proxying is a powerful technique when staging attacks against code injection vulnerabilities (buffer overflows, user-supplied format strings, etc.) to successfully turn the compromised host into a new attack vantage point. It can also come in handy when "shellcode" customization is needed for a certain attack (calling setuid(0), deactivating signals, etc.). Syscall Proxying can be viewed as part of a framework for developing new penetration testing tools. Developing attacks that actively use the Syscall Proxying mechanism effectively raises their value.

Acknowledgements

The term "Syscall Proxying" along with a first implementation (an RPC client-server model) was originally brought up by Oliver Friedrichs and Tim Newsham. Later on, Gerardo Richarte and Luciano Notarfrancesco from CORE ST refined the concept and created the first shellcode implementation for Linux. The CORE IMPACT team worked on a generic syscall abstraction creating the ProxyCall client interface, along with several different server implementations as shellcode for Windows, Intel *BSD and SPARC Solaris. The ProxyCall interface constitutes the basis for IMPACT's multi-platform module framework, and they are the basic building blocks for IMPACT's agents. "Pivoting" was coined by Ivan Arce in mid 2001.

18

Anda mungkin juga menyukai

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeDari EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifePenilaian: 4 dari 5 bintang4/5 (5794)

- The Yellow House: A Memoir (2019 National Book Award Winner)Dari EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Penilaian: 4 dari 5 bintang4/5 (98)

- Invoice Template Doc TopDokumen2 halamanInvoice Template Doc TopReda EightyNevezBelum ada peringkat

- Modeling SystemDokumen56 halamanModeling SystemReda EightyNevezBelum ada peringkat

- My Life Is BrilliantDokumen2 halamanMy Life Is BrilliantReda EightyNevezBelum ada peringkat

- Blog Skins 36521Dokumen5 halamanBlog Skins 36521Reda EightyNevezBelum ada peringkat

- LicenseDokumen2 halamanLicenseCatalin BiclineruBelum ada peringkat

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryDari EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryPenilaian: 3.5 dari 5 bintang3.5/5 (231)
