Kmeans and Adaptive K Means

Sachin M. Jambhulkar et al. / International Journal of Engineering Science and Technology (IJEST)

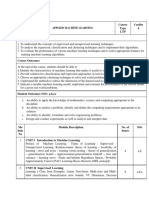

Comparison Of K-means and Adaptive K-means With VHDL Implementation

#1 Sachin M. Jambhulkar, #2 Nishant M. Borkar, #3 Swati Sorte

Electronics Engineering, RTM Nagpur University. Address: sach_jambhulkar@yahoo.co.in

Abstract

Image segmentation based on the K-means algorithm is presented in a GUI (Graphical User Interface). The proposed method uses both the K-means algorithm and adaptive K-means clustering to obtain high performance and efficiency. The centroids are initialized in groups using the K-means algorithm. In addition, the method addresses the selection of the number of clusters, using data from the image according to the frame size and the absolute value between the means, and additional steps are added to the convergence test. The iteration time for image segmentation is determined using adaptive K-means clustering. The K-means and adaptive K-means methods are then compared to find which gives the better image segmentation. The methods will be implemented in VHDL.

Keywords: segmentation, K-means, adaptive K-means, VHDL.

ISSN : 0975-5462

NCICT Special Issue Feb 2011

I. INTRODUCTION

Segmentation: Image segmentation can be placed within the general framework of image engineering, which consists of three layers: (1) image processing (low layer), (2) image analysis (middle layer) and (3) image understanding (high layer). Image segmentation is one of the most critical tasks of image analysis. It is used either to distinguish objects from their background or to partition an image into related regions. The human eye plainly responds quickly and accurately to what is happening in a scene, and colour helps many objects stand out. Segmentation is also considered one of the most popular problems in computer vision.

Several techniques address the image segmentation problem: threshold approaches, contour-based approaches, region-based approaches, cluster-based approaches and other optimization approaches. Clustering approaches fall into two general groups, partitional and hierarchical clustering algorithms. Partitional algorithms such as K-means and adaptive clustering are widely used in applications such as data mining, compression, image segmentation and machine learning. The advantage of clustering algorithms is that the classification is simple and easy to implement.

K-means: The K in K-means stands for the number of clusters. A cluster is a collection of data objects that are similar to one another within the same cluster and dissimilar to the objects in other clusters. Cluster analysis has been widely used in numerous applications, including pattern recognition, data analysis, image processing and market research. The K-means algorithm takes the input parameter K and partitions a set of N objects into K clusters so that the resulting intra-cluster similarity is high but the inter-cluster similarity is low. Cluster similarity is measured with respect to the mean value of the objects in a cluster. The basic steps of K-means clustering are simple. At the beginning we determine the number of clusters and assume a centroid, or center, for each of them. Any random objects can be taken as the initial centroids.

Adaptive K-means: Each region is characterised by a slowly varying intensity function. Standard K-means clustering does not account for this variation, and adaptive K-means clustering is used to decrease the number of iterations. The segmented image consists of very few levels (typically 2 to 4). The recognition system assumes that the segmented image retains a crude representation of the image. The technique we develop can be regarded as a generalization of the K-means clustering algorithm in two respects: it is adaptive and it includes spatial constraints. Initially the intensity functions are constant in each region and equal to the K-means cluster centers. As the algorithm progresses, the intensities are updated by averaging over a sliding window whose size progressively decreases. The performance of our technique is clearly superior to K-means for the class of images we are considering. Adaptive K-means clustering also makes it easier to associate regions with the detected boundaries.

II. RELATED WORKS

A) K-means

K-means consists of four main steps: (1) initialization, (2) classification, (3) computation and (4) the convergence condition. It first randomly selects K of the objects, each of which initially represents a cluster mean or center. Each remaining object is assigned to the cluster to which it is most similar, based on the distance between the object and the cluster mean. The algorithm then computes the new mean for each cluster and repeats the three steps below until convergence:

(1) Determine the centroid coordinates.
(2) Determine the distance of each object to the centroids.
(3) Group the objects based on minimum distance.

K-means is thus a partitioning algorithm based on the mean value of the objects in each cluster. Its input is the number of clusters K and a database containing N objects; its output is a set of K clusters that minimizes the squared-error criterion. Every data item in a cluster shares a common trait.

The algorithm is iterative in nature. Let $x_1, \dots, x_N$ be the data points (vectors or observations). Each observation is assigned to one and only one cluster, and $C(i)$ denotes the cluster number of the $i$th observation. K-means minimizes the within-cluster point scatter

$$W(C) = \frac{1}{2}\sum_{k=1}^{K}\sum_{C(i)=k}\sum_{C(j)=k}\lVert x_i - x_j\rVert^2 = \sum_{k=1}^{K} N_k \sum_{C(i)=k}\lVert x_i - m_k\rVert^2$$

where $m_k$ is the mean vector of the $k$th cluster and $N_k$ is the number of observations in the $k$th cluster. The cluster means are computed as

$$m_k = \frac{\sum_{i:\,C(i)=k} x_i}{N_k}, \quad k = 1, \dots, K.$$

Each data point belongs to its nearest center, so the membership is given by the minimum distance:

$$C(i) = \arg\min_{1 \le k \le K} \lVert x_i - m_k\rVert^2, \quad i = 1, \dots, N.$$

Starting from an initial assignment C, the distance from each data point $x_i$ to every center is computed and the point is assigned to the nearest center. Each center is then recomputed from all the data points $x_i$ that belong to it.
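The two update rules above (nearest-center membership and mean recomputation) can be sketched in a few lines of Python. This is a minimal illustration and not the authors' implementation: the function names, the fixed random seed, the `max_iter` cap and the choice to keep the old mean when a cluster becomes empty are all assumptions made for the example.

```python
import random


def sq_dist(a, b):
    """Squared Euclidean distance ||a - b||^2 between two points (tuples)."""
    return sum((p - q) ** 2 for p, q in zip(a, b))


def assign(points, means):
    """Membership rule: C(i) = argmin_k ||x_i - m_k||^2."""
    return [min(range(len(means)), key=lambda k: sq_dist(x, means[k]))
            for x in points]


def update_means(points, labels, means):
    """Mean rule: m_k = (sum of x_i with C(i) = k) / N_k."""
    new_means = []
    for k, old in enumerate(means):
        members = [x for x, c in zip(points, labels) if c == k]
        if not members:
            # Assumption of this sketch: an empty cluster keeps its old mean.
            new_means.append(old)
            continue
        new_means.append(tuple(sum(x[d] for x in members) / len(members)
                               for d in range(len(old))))
    return new_means


def kmeans(points, k, max_iter=100, seed=0):
    """Pick K random objects as initial means, then iterate to convergence."""
    rng = random.Random(seed)
    means = [tuple(p) for p in rng.sample(points, k)]
    labels = assign(points, means)
    for _ in range(max_iter):
        means = update_means(points, labels, means)
        new_labels = assign(points, means)
        if new_labels == labels:  # no membership changed: converged
            break
        labels = new_labels
    return labels, means


def within_scatter(points, labels, means):
    """W(C) = sum_k N_k * sum_{C(i)=k} ||x_i - m_k||^2."""
    total = 0.0
    for k, m in enumerate(means):
        members = [x for x, c in zip(points, labels) if c == k]
        total += len(members) * sum(sq_dist(x, m) for x in members)
    return total
```

For grey-level pixels each point is a one-element tuple such as `(128.0,)`; calling `kmeans(points, 2)` then returns the label of every pixel together with the two cluster means.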


B) Adaptive K-means

The grey-scale image is modelled as a collection of regions of uniform or slowly varying intensity, which typically takes values between 0 and 255. A segmentation of the image into regions is denoted by $X$, where $X_s = i$ means that the pixel at $s$ belongs to region $i$. The number of different region types, or classes, is $K$. By Bayes' theorem, the a posteriori density of the segmentation given the observed image $Y$ is

$$p(X \mid Y) \propto p(Y \mid X)\, p(X)$$

where $p(X)$ is the a priori density of the region process and $p(Y \mid X)$ is the conditional density of the observed image given the regions.

We model the region process by a Markov random field. That is, if $N_s$ is a neighbourhood of the pixel at $s$, then

$$p(X_s \mid X_q,\ \text{all } q \ne s) = p(X_s \mid X_q,\ q \in N_s).$$

We consider images defined on the Cartesian grid and a neighbourhood consisting of the 8 nearest pixels. The density of $X$ then has the Gibbs form

$$p(X) = \frac{1}{Z}\exp\Bigl\{-\sum_{C} V_C(X)\Bigr\}$$

where $Z$ is a normalizing constant and the summation is over all cliques $C$. The clique potential $V_C$ depends only on the pixels that belong to clique $C$. The two-point clique potentials are defined as

$$V_C(X) = \begin{cases} -\beta, & \text{if } X_s = X_q \text{ and } s, q \in C \\ +\beta, & \text{if } X_s \ne X_q \text{ and } s, q \in C \end{cases}$$

The parameter $\beta$ is positive, so that two neighbouring pixels are more likely to belong to the same class than to different classes. Increasing the value of $\beta$ increases the size of the regions and smooths their boundaries.

III. INITIALIZATION METHOD

A) K-means

The method starts by reading the data as a 2D matrix and then calculates the mean of each frame for a chosen frame size: F1 = 300×300, F2 = 150×150, F3 = 100×100, F4 = 50×50, F5 = 30×30, F6 = 10×10 or F7 = 7×7. The number of distinct means is the number of clusters, and their values are the centroid values, as indicated in the steps below:

(1) Read the data set as a matrix.
(2) Calculate the mean of each frame, depending on the frame size, and put the means in an array.
(3) Sort the means array in ascending order.
(4) Compare the current element with the next element in the means array. If they are equal, keep the current element and remove the next; otherwise keep both.
(5) Repeat step 4 until the end of the means array. The remaining elements give the number of clusters and their values.

Fig: K-means Clustering. Flowchart: START; initialization of centroids; find the cluster centers; compute the distances of points to the centroids; group points based on minimum distance; calculate the mean for each new center; test |Ci − Ci+1| > mean (Yes: new center = Ci; No: new center = (Ci + Ci+1)/2); at the end of the clusters, test whether any center moved (Yes: repeat; No: END).
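The initialization steps (1) to (5) can be sketched in Python as follows. This is only an illustration under stated assumptions: the image is a list of rows of grey levels, frames are taken as non-overlapping tiles (partial tiles at the borders are averaged as they are), and the names `frame_means`, `initial_centroids` and `merge_close` are hypothetical. The last function is one possible reading of the |Ci − Ci+1| test in the flowchart, with the comparison value passed in as `threshold` because the paper does not define it precisely.

```python
def frame_means(image, frame):
    """Step 2: mean grey level of each frame x frame tile of the image."""
    rows, cols = len(image), len(image[0])
    means = []
    for r0 in range(0, rows, frame):
        for c0 in range(0, cols, frame):
            block = [image[r][c]
                     for r in range(r0, min(r0 + frame, rows))
                     for c in range(c0, min(c0 + frame, cols))]
            means.append(sum(block) / len(block))
    return means


def initial_centroids(image, frame):
    """Steps 3 to 5: sort the means and drop equal neighbours."""
    centroids = []
    for m in sorted(frame_means(image, frame)):
        if not centroids or m != centroids[-1]:
            centroids.append(m)
    return centroids  # len(centroids) is the number of clusters


def merge_close(centers, threshold):
    """Assumed reading of the flowchart test on sorted centers:
    keep Ci if |Ci - Ci+1| > threshold, otherwise replace the pair
    by its average (Ci + Ci+1) / 2."""
    merged, i = [], 0
    while i < len(centers):
        if i + 1 < len(centers) and abs(centers[i] - centers[i + 1]) <= threshold:
            merged.append((centers[i] + centers[i + 1]) / 2)
            i += 2
        else:
            merged.append(centers[i])
            i += 1
    return merged
```

A smaller frame size produces more frame means and therefore, in general, more distinct centroids, which is how the frame size controls the number of clusters in this scheme.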

B) Adaptive K-means

We now estimate the distribution of regions X and the intensity functions $\mu_s$. The functions $\mu_s$ are defined on the same grid as the original grey-scale image Y and the region distribution X. When the number of pixels of type i inside the window centered at s is too small, the estimate of $\mu_s$ is not very reliable. A reasonable choice for the minimum number of pixels necessary for estimating $\mu_s$ is Tmin = W, the window width. The maximization is done at every point in the image and the cycle is repeated until convergence. This is the iterated conditional modes approach used in adaptive K-means clustering.

Now we consider the overall adaptive clustering algorithm. We obtain an initial estimate of X by the K-means algorithm; the algorithm then alternates between estimating X and estimating the intensity functions $\mu_s$. We define an iteration to consist of one update of X and one update of the functions $\mu_s$. The window size for the intensity function estimation is kept constant until this procedure converges, usually in fewer than ten iterations. Our stopping criterion is that the last iteration converges in one cycle. The whole procedure is then repeated with a new window size. The adaptation is achieved by varying the window size W. Initially the window for estimating the intensity functions is the whole image, so the intensity function of each region is constant. As the segmentation becomes better, smaller window sizes give more reliable and accurate estimates. Thus the algorithm, starting from global estimates, slowly adapts to the local characteristics of each region.
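The local intensity estimate with its reliability check can be illustrated with a short Python sketch. It assumes the estimate is the plain average of the grey levels of the pixels labelled with the given class inside the window, consistent with the sliding-window averaging described in the introduction. The function name `local_mean`, the clipping of the window at the image border and the use of `None` to mark an unreliable estimate are assumptions of the example.

```python
def local_mean(image, labels, s, cls, width):
    """Estimate the intensity of class `cls` at pixel s = (row, col).

    Averages the grey levels of the pixels labelled `cls` inside a
    width x width window centered at s. Returns None when fewer than
    T_min = width such pixels are available (estimate not reliable).
    """
    r, c = s
    half = width // 2
    rows, cols = len(image), len(image[0])
    values = [image[i][j]
              # Assumption: the window is clipped at the image border.
              for i in range(max(0, r - half), min(rows, r + half + 1))
              for j in range(max(0, c - half), min(cols, c + half + 1))
              if labels[i][j] == cls]
    t_min = width  # T_min = W, the window width
    if len(values) < t_min:
        return None
    return sum(values) / len(values)
```

With the window equal to the whole image, every pixel of a class receives the same value, which is the constant per-region intensity the algorithm starts from.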

The algorithm stops when the minimum window size is reached. Typically we keep reducing the window size by a factor of two until a minimum window size of W = 7 pixels. If the window size is kept constant and equal to the whole image, the result is the same as in the non-adaptive approach. The non-adaptive clustering algorithm is therefore only an intermediate result of the adaptive clustering algorithm. It results in a segmentation similar to that of K-means, only with smoother edges. The strength of the approach lies in the variation of the window size, which allows the intensity functions to adjust to the local characteristics of the image. Averaging over the window acts as a smoothness constraint on the local intensity functions. Also, there is no need to split the regions into sets of connected pixels.

The noise variance is assumed to be known or estimated independently of the algorithm. Thus, given an image to be segmented, the most important parameter to choose is the noise variance, which controls the amount of detail detected by the algorithm. In the following section we discuss the choice of K more extensively. The amount of computation for the estimation of X depends on the image dimensions, the number of different windows used, the number of classes and, of course, the image content. The amount of computation for each local intensity calculation does not depend on the image size.
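The window schedule and the alternation between the two estimates can be outlined as below. This is a structural sketch only: `estimate_mu` and `update_x` are hypothetical callbacks standing in for the intensity estimation and the segmentation update, `n_max` caps the iterations per window size, and clamping the last halving step to the minimum width of 7 is an assumption of the example.

```python
def window_schedule(full_size, w_min=7):
    """Window widths from the whole image down to w_min, halving each time."""
    if full_size < w_min:
        raise ValueError("image is smaller than the minimum window")
    sizes = [full_size]
    while sizes[-1] > w_min:
        # Halve the window; clamping at w_min is an assumption of this sketch.
        sizes.append(max(sizes[-1] // 2, w_min))
    return sizes


def adaptive_loop(x0, estimate_mu, update_x, sizes, n_max=10):
    """Alternate between intensity estimation and segmentation update.

    x0          -- initial segmentation (for example from K-means)
    estimate_mu -- hypothetical callback: (x, window) -> intensity functions
    update_x    -- hypothetical callback: (mu, x) -> new segmentation
    """
    x = x0
    for w in sizes:                  # outer loop: shrink the window
        for _ in range(n_max):       # inner loop: fixed window size
            mu = estimate_mu(x, w)
            x_new = update_x(mu, x)
            converged = x_new == x
            x = x_new
            if converged:            # segmentation stopped changing
                break
    return x
```

For a 256-pixel-wide image, `window_schedule(256)` gives the widths 256, 128, 64, 32, 16, 8 and finally 7.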


Fig: Adaptive K-means Clustering. Flowchart: get the segmentation X by K-means; set the window equal to the whole image and n = 1; given X, get new estimates of $\mu_s$; given $\mu_s$, get a new estimate of X and set n = n + 1; repeat while X has not converged and n < nmax; then, if W > Wmin, reduce the window size W, reset n = 1 and continue; if W = Wmin, STOP.

IV. INTRODUCTION OF VHDL

VHSIC (Very High Speed Integrated Circuit) Hardware Description Language (VHDL) has become a sort of industry standard for high-level hardware design. Hardware, of course, offers much greater speed than a software implementation, but one must consider the increase in development time inherent in creating a hardware design. Most software designers are familiar with C, but in order to develop a hardware system, one must either learn a hardware description language such as VHDL or Verilog, or use a software-to-hardware conversion scheme, such as Streams-C [3], which converts C code to VHDL, or MATCH [4], which converts MATLAB code to VHDL. While the goals of such conversion schemes are admirable, they are currently in development and surely not suited to high-speed applications such as video processing.

Application Specific Integrated Circuits (ASICs) represent a technology in which engineers create a fixed hardware design using a variety of tools. Once a design has been programmed onto an ASIC, it cannot be changed. Since these chips represent true, custom hardware, highly optimized, parallel algorithms are possible. However, except in high-volume commercial applications, ASICs are often considered too costly for many designs. In addition, if an error exists in the hardware design and is not discovered before product shipment, it cannot be corrected without a very costly product recall.

V. IMPLEMENTATION IN VHDL

In image processing, several algorithms belong to a category called windowing operators. Windowing operators use a window, or neighbourhood of pixels, to calculate their output.

Figure: Simulation of VHDL. Flowchart: input (I/P); read the input image; calculate the x and y sums; process the image with the input window matrix (X); get the final sum; write the sum to a file; output (O/P).
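The VHDL design itself is not reproduced in the paper, so the following is only a software model of a windowing operator, written in Python for illustration. It assumes the simplest possible operator, the sum of the pixels in a square neighbourhood, and computes outputs only where the window fits entirely inside the image. The name `window_sum` and the default 3×3 window are assumptions of the example.

```python
def window_sum(image, size=3):
    """Sum of the pixels in each size x size neighbourhood.

    Output pixel (r, c) corresponds to the window centered at
    (r + size // 2, c + size // 2) in the input image, so the result
    is smaller than the input by size - 1 in each dimension.
    """
    half = size // 2
    rows, cols = len(image), len(image[0])
    out = []
    for r in range(half, rows - half):
        out_row = []
        for c in range(half, cols - half):
            total = sum(image[i][j]
                        for i in range(r - half, r + half + 1)
                        for j in range(c - half, c + half + 1))
            out_row.append(total)
        out.append(out_row)
    return out
```

A model like this can serve as a reference when checking the output file written by a hardware simulation, since each output value depends only on a small, fixed neighbourhood of input pixels.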

VI. CONCLUSIONS


The number of iterations dominates the computational cost of most methods in reaching convergence. The adaptive K-means algorithm is therefore proposed to improve the standard K-means algorithm through modifications and additional steps in the convergence condition. It can be concluded that the proposed scheme succeeds in estimating the number of clusters and in decreasing the number of iterations of K-means clustering, thereby increasing the speed of execution. It can also be concluded that with a large absolute value between the means, the number of clusters is small. Adaptive K-means clustering is suited to the segmentation of objects with smooth surfaces. The intensity of each region is modelled as a slowly varying function plus white Gaussian noise. The adaptive K-means clustering technique we developed can be regarded as a generalization of the K-means clustering algorithm and applies to pictures of industrial objects, buildings, aerial photographs, faces and optical characters. The segmented images are useful for image display.

REFERENCES

[1] Rafael C. Gonzalez, Richard E. Woods, Digital Image Processing, Second Edition, Prentice-Hall Inc., 2002.
[2] M.G.H. Omran, A. Salman and A.P. Engelbrecht, Dynamic clustering using particle swarm optimization with application in image segmentation, Pattern Analysis and Applications, 2005.
[3] B. Jeon, Y. Yung and K. Hong, Image segmentation by unsupervised sparse clustering, Pattern Recognition Letters 27, ScienceDirect, 2006.
[4] A.K. Jain, M.N. Murty, P.J. Flynn, Data Clustering: A Review, ACM Computing Surveys 31, 1996.
[5] J. Bhaskar, VHDL Primer, Second Edition, BS Publication, 2005.
[6] Shi Zhong and Joydeep Ghosh, A Unified Framework for Model-based Clustering, Journal of Machine Learning Research 4 (USA), 1001-1037, 2003.
[7] J.B. MacQueen, Some methods for classification and analysis of multivariate observations, In: Le Cam, L.M., Neyman, J. (Eds.), University of California, 1967.
