Stats CS

Uploaded by Lavanya Shastri


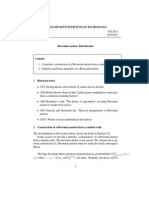

Probability of events:

Law of Total Probability:

If $B_1, B_2, \dots, B_n$ are mutually exclusive and collectively exhaustive, then:

$P(A) = \sum_{i=1}^{n} P(A \mid B_i)\,P(B_i)$

Cumulative Distribution Function: $F(x) = P(X \le x)$

Multiplication rule: $P(A \cap B) = P(A \mid B)\,P(B) = P(B \mid A)\,P(A)$

Quotient rule:

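Bayes rule: $P(A \mid B) = \dfrac{P(B \mid A)\,P(A)}{P(B)}$

Conditional probability: $P(A \mid B) = \dfrac{P(A \cap B)}{P(B)}$, for $P(B) > 0$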

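Addition rule: $P(A \cup B) = P(A) + P(B) - P(A \cap B)$

If $P(A \cap B) = 0$, we say A and B are mutually exclusive; then $P(A \cup B) = P(A) + P(B)$.

Independence: A and B are independent if $P(A \cap B) = P(A)\,P(B)$. X, Y are independent if $P(X = x, Y = y) = P(X = x)\,P(Y = y)$ for every x and y.

Probability Table: $\sum_{x} P(X = x) = 1$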
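The law of total probability and Bayes rule can be checked numerically. A minimal sketch, assuming the events $B_i$ are given as parallel lists of $P(A \mid B_i)$ and $P(B_i)$; the function names and the screening numbers are hypothetical, chosen only for illustration. `Fraction` keeps the arithmetic exact.

```python
import math
from fractions import Fraction


def total_probability(p_a_given_b, p_b):
    """P(A) = sum_i P(A | B_i) * P(B_i) for mutually exclusive, exhaustive B_i."""
    # Collectively exhaustive B_i: their probabilities must sum to 1.
    if not math.isclose(sum(p_b), 1):
        raise ValueError("P(B_i) must sum to 1")
    return sum(pa * pb for pa, pb in zip(p_a_given_b, p_b))


def bayes(p_a_given_b, p_b, i):
    """P(B_i | A) = P(A | B_i) * P(B_i) / P(A), with P(A) from total probability."""
    return p_a_given_b[i] * p_b[i] / total_probability(p_a_given_b, p_b)


# Hypothetical screening example: B_0 = defective (10%), B_1 = fine (90%);
# A = "test is positive", with P(A | B_0) = 0.9 and P(A | B_1) = 0.05.
p_b = [Fraction(1, 10), Fraction(9, 10)]
p_a_given_b = [Fraction(9, 10), Fraction(1, 20)]
print(total_probability(p_a_given_b, p_b))  # 27/200
print(bayes(p_a_given_b, p_b, 0))           # 2/3
```

Even with a 90% detection rate, only two thirds of positive tests come from defective items, because defective items are rare.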

Probability Tree:

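Expected Value: $E[X] = \sum_x x\,P(X = x)$

Variance: $\mathrm{Var}(X) = E\big[(X - E[X])^2\big] = \sum_x (x - E[X])^2\,P(X = x)$ (always non-negative)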
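A minimal sketch of the expected value and variance of a discrete random variable, assuming the probability table is stored as a dict from value to probability (the function names are illustrative, not from the sheet):

```python
from fractions import Fraction


def expected_value(dist):
    """E[X] = sum_x x * P(X = x); `dist` maps each value x to P(X = x)."""
    return sum(x * p for x, p in dist.items())


def variance(dist):
    """Var(X) = sum_x (x - E[X])^2 * P(X = x); a sum of non-negative terms."""
    mu = expected_value(dist)
    return sum((x - mu) ** 2 * p for x, p in dist.items())


# A fair six-sided die: each face has probability 1/6.
die = {x: Fraction(1, 6) for x in range(1, 7)}
print(expected_value(die))  # 7/2
print(variance(die))        # 35/12
```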

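Joint Distribution: $P(X = x, Y = y)$ for every pair $(x, y)$

Marginal Distribution: $P(X = x) = \sum_y P(X = x, Y = y)$

Covariance: $\mathrm{Cov}(X, Y) = E\big[(X - E[X])(Y - E[Y])\big] = E[XY] - E[X]\,E[Y]$

Correlation Coefficient: $\mathrm{Corr}(X, Y) = \dfrac{\mathrm{Cov}(X, Y)}{\sqrt{\mathrm{Var}(X)\,\mathrm{Var}(Y)}}$, with $-1 \le \mathrm{Corr}(X, Y) \le 1$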
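The joint, marginal, covariance, and correlation definitions above fit together as follows. This is a sketch assuming the joint probability table is a dict keyed by `(x, y)` pairs; the helper names and the two small tables are hypothetical.

```python
import math
from collections import defaultdict
from fractions import Fraction


def marginals(joint):
    """Marginal distributions: P(X = x) = sum_y P(X = x, Y = y), and likewise for Y."""
    px, py = defaultdict(int), defaultdict(int)
    for (x, y), p in joint.items():
        px[x] += p
        py[y] += p
    return dict(px), dict(py)


def _mean(dist):
    return sum(v * p for v, p in dist.items())


def covariance(joint):
    """Cov(X, Y) = E[(X - E[X]) (Y - E[Y])] over the joint probability table."""
    px, py = marginals(joint)
    ex, ey = _mean(px), _mean(py)
    return sum((x - ex) * (y - ey) * p for (x, y), p in joint.items())


def correlation(joint):
    """Corr(X, Y) = Cov(X, Y) / sqrt(Var(X) Var(Y)); always between -1 and 1."""
    px, py = marginals(joint)
    ex, ey = _mean(px), _mean(py)
    var_x = sum((x - ex) ** 2 * p for x, p in px.items())
    var_y = sum((y - ey) ** 2 * p for y, p in py.items())
    return covariance(joint) / math.sqrt(var_x * var_y)


half, quarter = Fraction(1, 2), Fraction(1, 4)
# Y always equals X: a perfect positive linear relationship.
same = {(0, 0): half, (1, 1): half}
# Every (x, y) pair equally likely: X and Y are independent.
indep = {(0, 0): quarter, (0, 1): quarter, (1, 0): quarter, (1, 1): quarter}
print(covariance(same), correlation(same))  # 1/4 1.0
print(covariance(indep))                    # 0
```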

If

then

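If X, Y are independent: $\mathrm{Var}(X + Y) = \mathrm{Var}(X) + \mathrm{Var}(Y)$

If X, Y are not necessarily independent: $\mathrm{Var}(X + Y) = \mathrm{Var}(X) + \mathrm{Var}(Y) + 2\,\mathrm{Cov}(X, Y)$

Continuous Random variables: Density function $f(x)$. The area under $f(x)$ between a and b is $P(a \le X \le b) = \int_a^b f(x)\,dx$. The total area under $f(x)$ is 1.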

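$\mathrm{Corr} < 0$: negative linear relationship; -1 is perfectly negative. $\mathrm{Corr} = 0$: no linear relationship. $\mathrm{Corr} > 0$: positive linear relationship; 1 is perfectly positive. Correlation is a measure of linear association; it does not imply causality!

Uniform Distribution: $X \sim U[a, b]$. X can take any value between a and b, with density $f(x) = \dfrac{1}{b - a}$ for every $x \in [a, b]$.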

Normal Distribution:

Binomial Distribution: X is the number of successes in n independent trials; probability for success is p (for failure 1-p)
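For the binomial distribution, the probability of exactly k successes is $\binom{n}{k} p^k (1 - p)^{n-k}$, with mean $np$ and variance $np(1 - p)$. A minimal sketch (function names are illustrative):

```python
from fractions import Fraction
from math import comb


def binomial_pmf(k, n, p):
    """P(X = k) = C(n, k) p^k (1 - p)^(n - k): k successes in n independent trials."""
    return comb(n, k) * p ** k * (1 - p) ** (n - k)


def binomial_mean_var(n, p):
    """E[X] = n p and Var(X) = n p (1 - p)."""
    return n * p, n * p * (1 - p)


half = Fraction(1, 2)
print(binomial_pmf(2, 4, half))                         # 3/8
print(sum(binomial_pmf(k, 4, half) for k in range(5)))  # 1
mean, var = binomial_mean_var(4, half)
print(mean, var)                                        # 2 1
```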

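If $X \sim N(\mu, \sigma^2)$, then $Z = \dfrac{X - \mu}{\sigma} \sim N(0, 1)$; the probabilities for Z are given in a table.

Steps for solution:
1. Look for $P(X \le x)$.
2. Transform to $P\left(Z \le \dfrac{x - \mu}{\sigma}\right)$.
3. Look for the answer in the table.

If X and Y are independent and normally distributed, then $aX + bY$ is also normal.

A Simple Random Sample: a sample of size n from a population of N objects, where
- every object has an equal probability of being selected
- objects are selected independently

Then $X_1, \dots, X_n$ follow the same probability distribution and are independent. Two ways to think about it:
- Sample with replacement
- Sample only a small fraction of the whole population

Sample Mean Distribution: population size N, mean $\mu$, standard deviation $\sigma$; sample size n. The sample mean $\bar{X} = \frac{1}{n}\sum_{i=1}^{n} X_i$ has $E[\bar{X}] = \mu$ and $\mathrm{SD}(\bar{X}) = \dfrac{\sigma}{\sqrt{n}}$. For large n (n > 30), $\bar{X} \approx N\left(\mu, \dfrac{\sigma^2}{n}\right)$.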
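The three solution steps can be sketched with Python's `statistics.NormalDist`, whose `cdf` plays the role of the normal table. This is an illustration, not the sheet's method; the function names and numbers are hypothetical.

```python
import math
from statistics import NormalDist

STANDARD_NORMAL = NormalDist(mu=0, sigma=1)


def prob_at_most(x, mu, sigma):
    """P(X <= x) for X ~ N(mu, sigma^2)."""
    z = (x - mu) / sigma              # step 2: transform to Z = (x - mu) / sigma
    return STANDARD_NORMAL.cdf(z)     # step 3: the CDF replaces the table lookup


def sample_mean_prob_at_most(xbar, mu, sigma, n):
    """P(Xbar <= xbar), using Xbar ~ N(mu, sigma^2 / n)."""
    # SD of the sample mean is sigma / sqrt(n); only approximate for non-normal data (n > 30).
    return prob_at_most(xbar, mu, sigma / math.sqrt(n))


print(prob_at_most(100, 100, 15))                          # 0.5
print(round(sample_mean_prob_at_most(52, 50, 12, 36), 4))  # 0.8413
```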

If

and

then

Sample Proportion Distribution:
- $p$: the proportion of the population that has the characteristic of interest
- $\hat{p}$: the proportion of the sample that has the characteristic of interest

For large n ($np \ge 5$ and $n(1 - p) \ge 5$): $\hat{p} \approx N\left(p, \dfrac{p(1 - p)}{n}\right)$

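Confidence interval for the population mean when $\sigma$ is known: need to make sure that the $X_i$ are Normal or $n > 30$.

z-values: $z_{\alpha/2}$ (look for $1 - \alpha/2$ in the normal table)

The margin of error: $ME = z_{\alpha/2}\,\dfrac{\sigma}{\sqrt{n}}$

Confidence interval: $\bar{x} \pm ME$; we are $(1 - \alpha) \cdot 100\%$ confident that $\bar{x} - ME \le \mu \le \bar{x} + ME$.

For a given ME, $\alpha$, and $\sigma$, we need a sample of size: $n = \left(\dfrac{z_{\alpha/2}\,\sigma}{ME}\right)^2$ (rounded up)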
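A minimal sketch of the known-$\sigma$ interval and the sample-size formula, assuming `NormalDist().inv_cdf` stands in for the normal-table lookup of $z_{\alpha/2}$; the numbers are hypothetical.

```python
import math
from statistics import NormalDist


def z_critical(conf):
    """z_{alpha/2}: the value with 1 - alpha/2 of the standard normal area to its left."""
    alpha = 1 - conf
    return NormalDist().inv_cdf(1 - alpha / 2)


def z_interval(xbar, sigma, n, conf=0.95):
    """xbar ± z_{alpha/2} * sigma / sqrt(n), for known sigma."""
    me = z_critical(conf) * sigma / math.sqrt(n)
    return xbar - me, xbar + me


def required_sample_size(me, sigma, conf=0.95):
    """Smallest n with z_{alpha/2} * sigma / sqrt(n) <= me."""
    return math.ceil((z_critical(conf) * sigma / me) ** 2)


lo, hi = z_interval(50, 10, 100)
print(round(lo, 2), round(hi, 2))   # 48.04 51.96
print(required_sample_size(2, 10))  # 97
```

The sample size is rounded up because rounding down would give a margin of error slightly larger than requested.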

Confidence interval for the population proportion: $\hat{p} \pm z_{\alpha/2}\sqrt{\dfrac{\hat{p}(1 - \hat{p})}{n}}$
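The proportion interval follows the same pattern with the estimated standard error $\sqrt{\hat{p}(1 - \hat{p})/n}$. A sketch with a hypothetical sample of 40 successes out of 100:

```python
import math
from statistics import NormalDist


def proportion_interval(successes, n, conf=0.95):
    """p_hat ± z_{alpha/2} * sqrt(p_hat (1 - p_hat) / n)."""
    p_hat = successes / n
    z = NormalDist().inv_cdf(1 - (1 - conf) / 2)
    me = z * math.sqrt(p_hat * (1 - p_hat) / n)
    return p_hat - me, p_hat + me


lo, hi = proportion_interval(40, 100)  # hypothetical: 40 of 100 sampled items have the trait
print(round(lo, 3), round(hi, 3))     # 0.304 0.496
```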

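Confidence interval for the population mean when $\sigma$ is unknown: need to make sure that the $X_i$ are Normal or $n > 30$.

If the population is normally distributed, then $T = \dfrac{\bar{X} - \mu}{s/\sqrt{n}}$ has a t distribution with n-1 degrees of freedom (values in table).

The margin of error: $ME = t_{\alpha/2,\,n-1}\,\dfrac{s}{\sqrt{n}}$

Confidence interval: $\bar{x} \pm ME$; we are $(1 - \alpha) \cdot 100\%$ confident that $\bar{x} - ME \le \mu \le \bar{x} + ME$.

For a given ME, $\alpha$, and $s$, we need a sample of size: $n = \left(\dfrac{t_{\alpha/2,\,n-1}\,s}{ME}\right)^2$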
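Python's standard library has no t distribution, so this sketch takes the critical value $t_{\alpha/2,\,n-1}$ as an input read from a t table, matching the sheet's "values in table". The summary statistics are hypothetical; 2.064 is the tabulated $t_{0.025,\,24}$.

```python
import math
from statistics import mean, stdev


def t_interval(xbar, s, n, t_crit):
    """xbar ± t_{alpha/2, n-1} * s / sqrt(n).

    t_crit must be read from a t table for n - 1 degrees of freedom.
    """
    me = t_crit * s / math.sqrt(n)
    return xbar - me, xbar + me


def t_interval_from_data(data, t_crit):
    """Same interval computed from raw data; stdev() uses the n - 1 denominator."""
    return t_interval(mean(data), stdev(data), len(data), t_crit)


# Summary statistics: xbar = 20, s = 5, n = 25; t_{0.025, 24} = 2.064 from a t table.
lo, hi = t_interval(20, 5, 25, 2.064)
print(round(lo, 3), round(hi, 3))  # 17.936 22.064
```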

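Hypothesis testing (mean):
1. Formulate the hypotheses $H_0$ and $H_1$.
2. Calculate $\bar{x}$ (and $s$); decide on $\alpha$.
3. Assume $H_0: \mu = \mu_0$ is true and compute $t = \dfrac{\bar{x} - \mu_0}{s/\sqrt{n}}$.
4. Decide on the hypothesis by
(a) Rejection area: reject $H_0$ if $t > t_{\alpha,\,n-1}$ (for $H_1: \mu > \mu_0$), or $t < -t_{\alpha,\,n-1}$ (for $H_1: \mu < \mu_0$), or $|t| > t_{\alpha/2,\,n-1}$ (for $H_1: \mu \ne \mu_0$).
(b) p-value: p-value $= P(T \ge t)$ (for $H_1: \mu > \mu_0$), p-value $= P(T \le t)$ (for $H_1: \mu < \mu_0$), or p-value $= 2P(T \ge |t|)$ (for $H_1: \mu \ne \mu_0$); reject $H_0$ if p-value $< \alpha$.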
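The rejection-area rule (a) can be sketched as below. The critical value is again an input from a t table, since the standard library has no t distribution; the sample numbers are hypothetical, while 1.711 and 2.064 are the tabulated $t_{0.05,\,24}$ and $t_{0.025,\,24}$.

```python
import math


def t_statistic(xbar, mu0, s, n):
    """t = (xbar - mu0) / (s / sqrt(n)), computed assuming H0: mu = mu0."""
    return (xbar - mu0) / (s / math.sqrt(n))


def reject_h0(t, t_crit, alternative):
    """Rejection-area rule.

    t_crit is t_{alpha, n-1} for the one-sided alternatives and
    t_{alpha/2, n-1} for the two-sided one, both read from a t table.
    """
    if alternative == "greater":    # H1: mu > mu0
        return t > t_crit
    if alternative == "less":       # H1: mu < mu0
        return t < -t_crit
    if alternative == "two-sided":  # H1: mu != mu0
        return abs(t) > t_crit
    raise ValueError(f"unknown alternative: {alternative!r}")


# Hypothetical sample: xbar = 52, s = 5, n = 25, testing mu0 = 50 at alpha = 0.05.
t = t_statistic(52, 50, 5, 25)
print(t)                                 # 2.0
print(reject_h0(t, 1.711, "greater"))    # True  (t_{0.05, 24} = 1.711)
print(reject_h0(t, 2.064, "two-sided"))  # False (t_{0.025, 24} = 2.064)
```

The same data can reject $H_0$ one-sided but not two-sided, because the two-sided test splits $\alpha$ between both tails.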

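Hypothesis testing (proportion):
1. Formulate the hypotheses $H_0$ and $H_1$.
2. Calculate $\hat{p}$; decide on $\alpha$.
3. Assume $H_0: p = p_0$ is true and compute $z = \dfrac{\hat{p} - p_0}{\sqrt{p_0(1 - p_0)/n}}$.
4. Decide on the hypothesis by
(a) Rejection area: reject $H_0$ if $z > z_{\alpha}$ (for $H_1: p > p_0$), or $z < -z_{\alpha}$ (for $H_1: p < p_0$), or $|z| > z_{\alpha/2}$ (for $H_1: p \ne p_0$).
(b) p-value: p-value $= P(Z \ge z)$, $P(Z \le z)$, or $2P(Z \ge |z|)$ for the three alternatives respectively; reject $H_0$ if p-value $< \alpha$.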
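For proportions the test statistic is a z-value, so the p-value route (b) works directly with `NormalDist().cdf`. A sketch with hypothetical data (60 successes in 100 trials, $H_0: p = 0.5$):

```python
import math
from statistics import NormalDist


def proportion_z_test(successes, n, p0, alternative="two-sided"):
    """Return (z, p-value) for H0: p = p0 using the normal approximation."""
    p_hat = successes / n
    z = (p_hat - p0) / math.sqrt(p0 * (1 - p0) / n)
    phi = NormalDist().cdf
    if alternative == "greater":      # H1: p > p0
        p_value = 1 - phi(z)
    elif alternative == "less":       # H1: p < p0
        p_value = phi(z)
    elif alternative == "two-sided":  # H1: p != p0
        p_value = 2 * (1 - phi(abs(z)))
    else:
        raise ValueError(f"unknown alternative: {alternative!r}")
    return z, p_value


z, p_value = proportion_z_test(60, 100, 0.5)
print(round(z, 2), round(p_value, 4))  # 2.0 0.0455
print(p_value < 0.05)                  # True
```

Here p-value $< \alpha = 0.05$, so $H_0$ is rejected.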

Linear regression:
Assumptions:
1. Y is linearly related to X.
2. The error term $\varepsilon$ is normally distributed with mean 0. The variance of the error term, $\sigma^2$, does not depend on the x-values.
3. The error terms are all uncorrelated.

Estimation of model: there is a real linear relation $Y = \beta_0 + \beta_1 X + \varepsilon$. The regression provides estimators $b_0, b_1$ for the coefficients $\beta_0, \beta_1$, and $s_e$ for the standard error $\sigma$.

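Least squares method: the relation is represented as a line: $\hat{y} = b_0 + b_1 x$, or $y_i = b_0 + b_1 x_i + e_i$. Choose $b_0, b_1$ such that:

$\sum_{i=1}^{n} (y_i - \hat{y}_i)^2 = \sum_{i=1}^{n} e_i^2$ is minimized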
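For simple regression (one x) the minimization has the closed-form solution $b_1 = \sum (x_i - \bar{x})(y_i - \bar{y}) / \sum (x_i - \bar{x})^2$ and $b_0 = \bar{y} - b_1 \bar{x}$. A minimal sketch (the function name is illustrative):

```python
def least_squares(xs, ys):
    """Return (b0, b1) minimizing sum (y_i - b0 - b1 x_i)^2."""
    n = len(xs)
    x_bar, y_bar = sum(xs) / n, sum(ys) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    b1 = sxy / sxx            # slope
    b0 = y_bar - b1 * x_bar   # the fitted line passes through (x_bar, y_bar)
    return b0, b1


print(least_squares([1, 2, 3, 4], [3, 5, 7, 9]))  # (1.0, 2.0)
```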

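The coefficient of determination: $R^2 = \dfrac{SSR}{SST} = 1 - \dfrac{SSE}{SST}$, where $SST = \sum_i (y_i - \bar{y})^2$, $SSE = \sum_i (y_i - \hat{y}_i)^2$, and $SSR = \sum_i (\hat{y}_i - \bar{y})^2$. $R^2$ is the percentage of explained variation. In simple regression, R is the correlation coefficient between y and x.

Adjusted R-squared: for multiple regression we adjust R-squared by the number of independent variables k: $R^2_{adj} = 1 - (1 - R^2)\,\dfrac{n - 1}{n - k - 1}$

Confidence interval for coefficients (k independent variables): $b_j \pm t_{\alpha/2,\,n-k-1}\,s_{b_j}$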
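The $R^2$ and adjusted $R^2$ formulas above can be sketched as follows, assuming the fitted values $\hat{y}_i$ are already available (function names and numbers are illustrative):

```python
def r_squared(ys, y_hats):
    """R^2 = 1 - SSE / SST: the share of the variation in y explained by the model."""
    y_bar = sum(ys) / len(ys)
    sse = sum((y - yh) ** 2 for y, yh in zip(ys, y_hats))
    sst = sum((y - y_bar) ** 2 for y in ys)
    return 1 - sse / sst


def adjusted_r_squared(r2, n, k):
    """Penalize R^2 for the number of independent variables k (n observations)."""
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)


print(r_squared([1, 2, 3], [1, 2, 2]))           # 0.5
print(round(adjusted_r_squared(0.8, 25, 4), 2))  # 0.76
```

Adding a variable always keeps $R^2$ the same or raises it, while adjusted $R^2$ can fall if the variable adds little explanatory power.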

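Prediction of y given $x_1, \dots, x_k$: $\hat{y} = b_0 + b_1 x_1 + \dots + b_k x_k$

Test statistic for $H_0: \beta_j = 0$ (k independent variables): $t = \dfrac{b_j}{s_{b_j}}$, with n - k - 1 degrees of freedom

Standard error of single Y-values given X (simple regression): $s_{forecast} = s_e\sqrt{1 + \dfrac{1}{n} + \dfrac{(x_0 - \bar{x})^2}{\sum_{i}(x_i - \bar{x})^2}}$

Prediction interval for single Y-values given X (k independent variables): $\hat{y} \pm t_{\alpha/2,\,n-k-1}\,s_{forecast}$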
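For simple regression (k = 1), the point prediction, the confidence interval for the mean Y, and the prediction interval for a single Y can be computed together. A sketch under that assumption; the data are hypothetical and `t_crit` is the tabulated $t_{0.025,\,3} = 3.182$. With several independent variables the standard errors need the full regression output instead.

```python
import math


def regression_intervals(xs, ys, x0, t_crit):
    """Simple regression (k = 1): point prediction, CI for the mean Y, PI for a single Y.

    t_crit is t_{alpha/2, n-2} from a t table (n - k - 1 = n - 2 degrees of freedom).
    """
    n = len(xs)
    x_bar, y_bar = sum(xs) / n, sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    b1 = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / sxx
    b0 = y_bar - b1 * x_bar
    # Standard error of the regression: s_e = sqrt(SSE / (n - 2)).
    sse = sum((y - (b0 + b1 * x)) ** 2 for x, y in zip(xs, ys))
    s_e = math.sqrt(sse / (n - 2))
    y_hat = b0 + b1 * x0
    lever = 1 / n + (x0 - x_bar) ** 2 / sxx
    se_mean = s_e * math.sqrt(lever)          # standard error of the mean Y given x0
    se_forecast = s_e * math.sqrt(1 + lever)  # standard error of a single Y given x0
    ci = (y_hat - t_crit * se_mean, y_hat + t_crit * se_mean)
    pi = (y_hat - t_crit * se_forecast, y_hat + t_crit * se_forecast)
    return y_hat, ci, pi


y_hat, ci, pi = regression_intervals([1, 2, 3, 4, 5], [2, 4, 5, 4, 5], 3, 3.182)
print(round(y_hat, 2))                # 4.0
print(pi[1] - pi[0] > ci[1] - ci[0])  # True
```

The prediction interval is always wider: it adds the scatter of an individual observation (the extra 1 under the square root) to the uncertainty in the fitted line.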

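Testing the linear relationship in multiple regression: $H_0: \beta_1 = \beta_2 = \dots = \beta_k = 0$ versus $H_1: \beta_j \ne 0$ for at least one j. Check the p-value of the F-test given in the regression output and compare it to the desired $\alpha$; reject $H_0$ if p-value $< \alpha$.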

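Standard error of the mean Y given X (simple regression): $s_{\hat{y}} = s_e\sqrt{\dfrac{1}{n} + \dfrac{(x_0 - \bar{x})^2}{\sum_{i}(x_i - \bar{x})^2}}$

Confidence interval for the mean Y given X (k independent variables): $\hat{y} \pm t_{\alpha/2,\,n-k-1}\,s_{\hat{y}}$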

Testing the linear relationship in simple regression: $H_0: \beta_1 = 0$ versus $H_1: \beta_1 \ne 0$.

Check the p-value for $b_1$ and compare it to the desired $\alpha$; reject $H_0$ if p-value $< \alpha$.

Non-linear relationships:
1. Logarithmic transformation of the dependent variable, $\ln(y) = \beta_0 + \beta_1 x + \varepsilon$: use when y grows or depreciates at a constant percentage rate.
2. Transformation of the independent variables: replace x by $x^2$, $\ln(x)$, or any other transformation that makes sense. Try this when the relation is not linear and transformation 1 does not make sense.
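A sketch of transformation 1: regress $\ln(y)$ on x by least squares. In that model each unit increase in x multiplies y by $e^{b_1}$, so the growth rate per unit of x is $e^{b_1} - 1$. The series below is hypothetical, constructed to grow exactly 10% per period.

```python
import math


def fit_log_model(xs, ys):
    """Fit ln(y) = b0 + b1 x by least squares; returns (b0, b1)."""
    log_ys = [math.log(y) for y in ys]  # requires every y > 0
    n = len(xs)
    x_bar, l_bar = sum(xs) / n, sum(log_ys) / n
    sxy = sum((x - x_bar) * (ly - l_bar) for x, ly in zip(xs, log_ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    b1 = sxy / sxx
    return l_bar - b1 * x_bar, b1


# Hypothetical series growing exactly 10% per period: y_t = 100 * 1.1^t.
ts = list(range(6))
ys = [100 * 1.1 ** t for t in ts]
b0, b1 = fit_log_model(ts, ys)
print(round(math.exp(b1) - 1, 4))  # 0.1
print(round(math.exp(b0), 2))      # 100.0
```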

Categorical variables: if a qualitative variable can take only one of two values, introduce an independent (dummy) variable that is 0 for one value and 1 for the other. For k categories we need k-1 dummy variables (the base category is when all of them are 0).

Trends: if the dependent variable increases or decreases over time, use a time counter or de-trend the data first.

Seasonal behavior: if there are seasonal patterns, use dummies or seasonally adjusted data.

Time lags: if an independent variable influences the dependent variable over a number of time periods, include its past values in the model.

Multicollinearity: a high correlation between two or more independent variables. Effects:
- The standard errors of the $b$'s are inflated.
- The magnitude (or even the signs) of the $b$'s may be different from what we expect.
- Adding or removing variables produces large changes in the coefficient estimates or their signs.
- The p-value of the F-test is small, but none of the t-values is significant.
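The k-1 dummy rule can be illustrated with quarterly seasonal dummies. A minimal sketch; `dummy_encode` is a hypothetical helper, not a library function.

```python
def dummy_encode(values, categories, base):
    """Encode a categorical variable as k - 1 indicator (0/1) columns.

    `base` is the base category: its rows have all indicators equal to 0.
    """
    columns = [c for c in categories if c != base]
    rows = [[1 if v == c else 0 for c in columns] for v in values]
    return columns, rows


# Quarterly seasonal dummies: 4 categories -> 3 variables, with Q1 as the base.
quarters = ["Q1", "Q2", "Q3", "Q4"]
columns, rows = dummy_encode(quarters, quarters, base="Q1")
print(columns)  # ['Q2', 'Q3', 'Q4']
print(rows)     # [[0, 0, 0], [1, 0, 0], [0, 1, 0], [0, 0, 1]]
```

Using all k indicators together with an intercept would make them perfectly collinear (they always sum to 1), which is why one category is left out as the base.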

Decision Theory: How to build a decision tree:
1. List all decisions and uncertainties.
2. Arrange them in a tree using decision and chance nodes, in the order in which they occur in time.
3. Label the tree with probabilities (for chance nodes) and payoffs (at least at the end of each branch).
4. Solve the tree by folding back from the end of the tree toward the start: take the maximum of payoffs (or the minimum of costs) at decision nodes and the expected value at chance nodes to get the EMV (expected monetary value).
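Step 4 (folding back) is a recursion over the tree. A sketch assuming payoffs (so decision nodes take the maximum; a cost tree would use the minimum); the `Chance` and `Decision` classes and the product-launch numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple, Union


@dataclass
class Chance:
    branches: List[Tuple[float, "Node"]]  # (probability, subtree) pairs


@dataclass
class Decision:
    options: Dict[str, "Node"]            # option name -> subtree


Node = Union[float, int, Chance, Decision]


def emv(node):
    """Fold back: expected value at chance nodes, maximum at decision nodes."""
    if isinstance(node, Chance):
        return sum(p * emv(child) for p, child in node.branches)
    if isinstance(node, Decision):
        return max(emv(child) for child in node.options.values())
    return node                           # leaf: payoff at the end of a branch


def best_option(decision):
    """Name of the option with the highest EMV at a decision node."""
    return max(decision.options, key=lambda name: emv(decision.options[name]))


# Hypothetical tree: launch a product (60% success: +100, 40% failure: -50) or not (0).
tree = Decision({"launch": Chance([(0.6, 100), (0.4, -50)]), "don't launch": 0})
print(emv(tree), best_option(tree))  # 40.0 launch
```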

Regressions examples: *Obtain 90% CI for expected change in TASTE if the concentration of LACTIC ACID increased by .01:

*What is the expected increase in peak load for 3 degree increase in high temp:
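Both questions scale a slope: if x changes by $\Delta$, the expected change in y is $\Delta \cdot b$, with confidence interval $\Delta (b \pm t_{\alpha/2,\,n-k-1}\, s_b)$. A sketch; the coefficient, its standard error, and the critical value below are hypothetical, not the values from the original regression output.

```python
def change_interval(b, s_b, t_crit, delta):
    """CI for the expected change in y when x changes by delta: delta * (b ± t * s_b)."""
    lo, hi = delta * (b - t_crit * s_b), delta * (b + t_crit * s_b)
    return min(lo, hi), max(lo, hi)  # a negative delta flips the endpoints


# Hypothetical regression output: b = 20, s_b = 5, critical value t = 1.7 for a 90% CI.
lo, hi = change_interval(20, 5, 1.7, 0.01)
print(round(lo, 3), round(hi, 3))  # 0.115 0.285
```

For the peak-load question, the point estimate is 3 times the coefficient on high temperature, and `change_interval(b, s_b, t_crit, 3)` gives the matching interval.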

Prediction: use the output to estimate a 90% lower bound for the predicted BAC of an individual who had 4 beers 30 minutes ago. First calculate $\widehat{BAC}$. To obtain a lower bound on the blood alcohol level, we need to estimate $s_{forecast}$ (see the formula for the standard error of single Y-values given X): $s_{forecast} = 0.01844$. So a lower bound $= \widehat{BAC} - t_{0.10,\,n-k-1}\,s_{forecast}$.

Find 90% confidence interval for the expected weight of a car full of ore:
