Real-Time Operating Systems and Scheduling: 2add. Timing Anomalies in Processors


Lothar Thiele

by the author


Swiss Federal Institute of Technology

Computer Engineering and Networks Laboratory


Timing Peculiarities in Modern Computer Architectures

The following example is taken from an exercise in Systemprogrammierung (systems programming).

It was not constructed to challenge the timing predictability of modern computer architectures; the strange behavior was found by chance.

A straightforward GCD algorithm (German: ggT, hence the label ggt) was executed on an UltraSparc (Sun) architecture and its timing was measured.

Goal of this lecture: determine the cause(s) of the strange timing behavior.


Program

Only the relevant assembler program and the related C program are shown; the calling main function just jumps to the label ggt 1,000,000 times.

Assembler program:

        .text
        .global ggt
        .align 32
    ggt:                        ! %o0 := x, %o1 := y
        cmp   %o0, %o1
        blu,a ggt               ! if (%o0 < %o1) {goto ggt;}
        sub   %o1, %o0, %o1     ! %o1 = %o1 - %o0 (delay slot)
        bgu,a ggt               ! if (%o0 > %o1) {goto ggt;}
        sub   %o0, %o1, %o0     ! %o0 = %o0 - %o1 (delay slot)
        retl
        nop

Related C program:

    int ggt_c (int x, int y) {
        while (x != y) {
            if (x < y) { y -= x; }
            else { x -= y; }
        }
        return (x);
    }

In the experiment, nop statements are inserted before the ggt label; they are NOT executed.
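The measurement setup described above (a main function that calls the routine 1,000,000 times) could be sketched in C roughly as follows. This is only an illustration under assumptions: it times the C version ggt_c rather than the assembler routine, the helper name time_ggt is hypothetical, and it uses the standard clock() function, which may differ from how the original measurements were taken. It will not reproduce the nop alignment effect by itself.

```c
#include <assert.h>
#include <time.h>

/* GCD by repeated subtraction, as in the C version of the routine. */
int ggt_c(int x, int y) {
    assert(x > 0 && y > 0);   /* the loop only terminates for positive inputs */
    while (x != y) {
        if (x < y) { y -= x; }
        else       { x -= y; }
    }
    return x;
}

/* Hypothetical helper: call ggt_c `calls` times and return the CPU time
   in seconds; the last result is stored in *result. */
double time_ggt(int x, int y, long calls, int *result) {
    /* volatile inputs and output keep the compiler from hoisting or
       dropping the calls */
    volatile int vx = x, vy = y, sink = 0;
    clock_t start = clock();
    for (long i = 0; i < calls; i++) {
        sink = ggt_c(vx, vy);
    }
    clock_t stop = clock();
    *result = sink;
    return (double)(stop - start) / CLOCKS_PER_SEC;
}
```

A call such as time_ggt(17, 17*97, 1000000, &r) corresponds to one measurement run; the absolute times depend on the machine and compiler.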


Observation

Depending on the number of nop statements before the ggt label, the execution time of ggt(17, 17*97) varies by a factor of almost 2. The execution time of ggt(17*97, 17) varies by a factor of about 4.

This behavior is periodic in the number of nop statements, i.e. it repeats after 8 nop statements.

Measurements for ggt(17,17*97):

| nop | time [s] |
|-----|----------|
| 0   | 0.36     |
| 1   | 0.35     |
| 2   | 0.36     |
| 3   | 0.35     |
| 4   | 0.37     |
| 5   | 0.35     |
| 6   | 0.65     |
| 7   | 0.64     |

Measurements for ggt(17*97,17): 2.78 s and 2.79 s with 1 or 3 nop statements, 0.62 s and 0.64 s with 0 or 2 nop statements, and between 0.62 s and 0.64 s with 4 to 7 nop statements.


Simple Calculations

The CPU is an UltraSparc with a 360 MHz clock rate. In the formulas below, 96 is the number of loop iterations per call, 3 or 4 is the number of instructions executed per iteration, and 1,000,000 is the number of calls; in the cycle counts, the 1,000,000 calls cancel against the MHz of the clock rate.

Problem 1 (ggt(17,17*97)):

Fast execution: 96*3*1,000,000 / 0.35 = 823 MIPS and 0.35 * 360 / 96 = 1.31 cycles per iteration.

Slow execution: 96*3*1,000,000 / 0.65 = 443 MIPS and 0.65 * 360 / 96 = 2.44 cycles per iteration.

Therefore, the difference is about 1 cycle per iteration.

Problem 2 (ggt(17*97,17)):

Fast execution: 96*4*1,000,000 / 0.63 = 609 MIPS and 0.63 * 360 / 96 = 2.36 cycles per iteration.

Slow execution: 96*4*1,000,000 / 2.78 = 138 MIPS and 2.78 * 360 / 96 = 10.43 cycles per iteration.

Therefore, the difference is about 8 cycles per iteration.
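The calculations above can be reproduced with a few lines of C. This is a minimal sketch; the function names mips and cycles_per_iteration are chosen here for illustration, while the constants are the ones given in the slides.

```c
#include <assert.h>

/* Values taken from the slides. */
#define CLOCK_MHZ   360.0      /* UltraSparc clock rate */
#define ITERATIONS  96.0       /* loop iterations per call of ggt */
#define CALLS       1000000.0  /* calls of ggt from main */

/* Millions of instructions per second for a measured run time. */
double mips(double instr_per_iter, double seconds) {
    assert(seconds > 0.0);
    return ITERATIONS * instr_per_iter * CALLS / seconds / 1e6;
}

/* Average number of clock cycles spent per loop iteration. */
double cycles_per_iteration(double seconds) {
    return seconds * CLOCK_MHZ * 1e6 / (ITERATIONS * CALLS);
}
```

For example, mips(3, 0.35) gives about 823, and cycles_per_iteration(2.78) - cycles_per_iteration(0.63) gives about 8, matching the figures for Problem 1 and Problem 2.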


Explanations

Problem 1 (ggt(17,17*97)):

The first three instructions (cmp, blu, sub) are executed 96 times before ggt returns. The timing behavior depends on the location of the program in the address space.

The reason is most probably the implementation of the 4-word instruction buffer between the instruction cache and the pipeline: the instruction buffer cannot be filled from different cache lines in one cycle.

In the slow execution, the instruction buffer has to be filled twice per iteration. This takes at least two cycles (regardless of any parallelism in the pipeline).


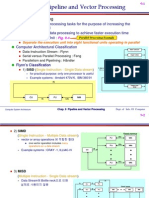

Block Diagram of UltraSparc

Annotation: the instruction buffer hides the latency to the cache.


User Manual (page 361)


Address Alignment

0 nop:

    Cache line:         cmp blu sub
    Instruction buffer: cmp blu sub

5 nop:

    Cache line:         nop nop nop nop nop cmp blu sub
    Instruction buffer: cmp blu sub

6 nop:

    Cache lines:        nop nop nop nop nop nop cmp blu | sub
    Instruction buffer: cmp blu, then sub

With 6 nop statements, 2 fetches are necessary, as sub is missing after the first fetch.
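The alignment argument can be checked with a small calculation. The sketch below assumes 4-byte SPARC instructions and a 32-byte cache line (an assumption consistent with the .align 32 directive and the period of 8 nop statements); the function name lines_touched is hypothetical.

```c
#include <assert.h>

#define INSTR_BYTES 4   /* SPARC instructions are 4 bytes long */
#define LINE_BYTES  32  /* assumption: 32-byte line, matching .align 32 */

/* Number of cache lines touched by the loop body (cmp, blu, sub)
   when `nops` nop statements precede the ggt label. */
int lines_touched(int nops) {
    assert(nops >= 0);
    int first = nops * INSTR_BYTES;        /* byte offset of cmp */
    int last  = first + 2 * INSTR_BYTES;   /* byte offset of sub */
    return last / LINE_BYTES - first / LINE_BYTES + 1;
}
```

Under these assumptions the function returns 1 for 0 to 5 nop statements and 2 for 6 or 7, which agrees with the slow measurements of ggt(17,17*97) at 6 and 7 nop statements.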


Explanations

Problem 2 (ggt(17*97,17)):

The loop (cmp, blu, sub, bgu, sub) is executed 96 times. The first sub instruction is not executed, since blu carries the ,a suffix, which means that the instruction in the delay slot is not executed if the branch is not taken. Therefore, four instructions are executed per iteration, although the loop has 5 instructions in total.
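The instruction counts used for both problems (3 and 4 executed instructions per iteration) can be verified by modelling the control flow of the assembler routine in C. This is a sketch of the semantics only, not a timing model; the type ggt_trace and the function trace_ggt are names introduced here for illustration.

```c
#include <assert.h>

/* Executed-instruction count for one call of the assembler routine. */
typedef struct {
    long iterations;    /* taken branches back to the label ggt */
    long instructions;  /* executed instructions, incl. retl and its nop */
    unsigned result;    /* value left in %o0 */
} ggt_trace;

ggt_trace trace_ggt(unsigned o0, unsigned o1) {
    assert(o0 > 0 && o1 > 0);
    ggt_trace t = {0, 0, 0};
    for (;;) {
        t.instructions += 2;        /* cmp and blu,a always execute */
        if (o0 < o1) {              /* blu,a taken: delay slot runs */
            o1 -= o0;
            t.instructions += 1;
            t.iterations += 1;
            continue;
        }
        t.instructions += 1;        /* first sub annulled; bgu,a executes */
        if (o0 > o1) {              /* bgu,a taken: delay slot runs */
            o0 -= o1;
            t.instructions += 1;
            t.iterations += 1;
            continue;
        }
        t.instructions += 2;        /* second sub annulled; retl and nop */
        t.result = o0;
        return t;
    }
}
```

For ggt(17,17*97) this model counts 96 iterations of 3 instructions, and for ggt(17*97,17) 96 iterations of 4 instructions, each followed by a final pass of 5 instructions (cmp, blu, bgu, retl, nop).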

The main cause of this behavior is most probably the branch prediction scheme used in the architecture.

In particular, the next block of 4 instructions to be fetched into the instruction buffer is predicted. This scheme is based on a two-bit predictor and is also used to control the pipeline and to prevent stalls.

But there is a problem due to an optimization of the stored state information (predictions for blocks of instructions and for single instructions):


User Manual (page 342)

We have exactly this situation if 1 or 3 nop statements are inserted.


Conclusions

Innocent changes (just moving code in the address space) can easily change the timing by a factor of 4.

In our example, the timing oddities are caused by two different architectural features of modern superscalar processors:

- branch prediction
- instruction buffer

It is hard to predict the timing of modern processors; this is bad in all situations where timing matters (embedded systems, hard real-time systems).

What is a proper approach to predictable system design?
