Advanced Processor Superscalarclass

Uploaded by Kanaga Varatharajan

73 pages

The document discusses different types of processor architectures including CISC, RISC, superscalar, VLIW, and superpipelined processors. It provides details on their characteristics such as clock rates, cycles per instruction, instruction pipelines, and functional units. The key differences between CISC and RISC processors are described, such as CISC having microprogrammed control units, lower clock rates, and higher CPI compared to RISC. Superscalar processors allow multiple instructions to be issued per cycle to reduce the effective CPI.

Copyright: Attribution Non-Commercial (BY-NC)

Advanced Computer Architecture

Processors and Memory Hierarchy

4.1 Advanced Processor Technology

Design Space of Processors

Processors can be mapped to a space that has

clock rate and cycles per instruction (CPI) as

coordinates. Each processor type occupies a region

of this space.

Newer technologies are enabling higher clock rates.

Manufacturers are also trying to lower the number

of cycles per instruction.

Thus the future processor space is moving toward

the lower right of the processor design space.
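
The two coordinates can be tied to performance through the usual relation between instruction count, CPI, and clock rate. The following is a minimal sketch, not taken from the slides; the function names and the sample design points are assumptions chosen only to show the arithmetic.

```python
def cpu_time_seconds(instruction_count, cpi, clock_hz):
    """Execution time = instructions * cycles per instruction / clock rate."""
    return instruction_count * cpi / clock_hz


def mips_rate(cpi, clock_hz):
    """Millions of instructions per second for a given CPI and clock rate."""
    return clock_hz / (cpi * 1e6)


# Hypothetical design points (illustrative numbers, not measured data).
slow_high_cpi = cpu_time_seconds(1_000_000, cpi=4.0, clock_hz=50e6)
fast_low_cpi = cpu_time_seconds(1_000_000, cpi=1.0, clock_hz=100e6)
```

Raising the clock rate and lowering the CPI both shorten the run time of the same program, which is why designs drift toward that corner of the space.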

CISC and RISC Processors

Complex Instruction Set Computing (CISC)

processors like the Intel 80486, the Motorola

68040, the VAX/8600, and the IBM S/390 typically

use microprogrammed control units, have lower

clock rates, and higher CPI figures than

Reduced Instruction Set Computing (RISC)

processors like the Intel i860, SPARC, MIPS R3000,

and IBM RS/6000, which have hard-wired control

units, higher clock rates, and lower CPI figures.

Superscalar Processors

This subclass of RISC processors allows multiple instructions to be issued simultaneously during each cycle.

The effective CPI of a superscalar processor should

be less than that of a generic scalar RISC

processor.

Clock rates of scalar RISC and superscalar RISC

machines are similar.

VLIW Machines

Very Long Instruction Word machines typically have many more functional units than superscalars (and thus need longer instructions, 256 to 1024 bits, to provide control for them).

These machines mostly use microprogrammed

control units with relatively slow clock rates

because of the need to use ROM to hold the

microcode.

Superpipelined Processors

These processors typically use a multiphase clock

(actually several clocks that are out of phase with

each other, each phase perhaps controlling the

issue of another instruction) running at a relatively

high rate.

The CPI in these machines tends to be relatively

high (unless multiple instruction issue is used).

Processors in vector supercomputers are mostly

superpipelined and use multiple functional units for

concurrent scalar and vector operations.

Instruction Pipelines

The execution of a typical instruction includes four phases:

fetch

decode

execute

write-back

These four phases are frequently performed in a

pipeline, or assembly line manner, as illustrated

on the next slide (figure 4.2).

Pipeline Definitions

Instruction pipeline cycle: the time required for each phase to complete its operation (assuming equal delay in all phases).

Instruction issue latency: the time (in cycles) required between the issuing of two adjacent instructions.

Instruction issue rate: the number of instructions issued per cycle (the degree of a superscalar).

Simple operation latency: the delay (after the previous instruction) associated with the completion of a simple operation (e.g. integer add), as compared with that of a complex operation (e.g. divide).

Resource conflicts: when two or more instructions demand use of the same functional unit(s) at the same time.

Pipelined Processors

A base scalar processor:

issues one instruction per cycle

has a one-cycle latency for a simple operation

has a one-cycle latency between instruction issues

can be fully utilized if instructions can enter the pipeline at a rate of one per cycle

For a variety of reasons, instructions might not be able to be pipelined as aggressively as in a base scalar processor. In these cases, we say the pipeline is underpipelined.

CPI rating is 1 for an ideal pipeline. Underpipelined

systems will have higher CPI ratings, lower clock rates, or

both.
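
The effect of issue latency on CPI can be sketched with a simple cycle count. This is an idealized model under stated assumptions: equal-delay stages, no stalls, and hypothetical function names. An issue latency of 2 stands in for an underpipelined design.

```python
def pipeline_cycles(num_instructions, num_stages=4, issue_latency=1):
    """Cycles to finish all instructions on a single pipeline.

    The first instruction needs num_stages cycles; each later one
    completes issue_latency cycles after its predecessor.
    """
    if num_instructions <= 0:
        return 0
    return num_stages + (num_instructions - 1) * issue_latency


def effective_cpi(num_instructions, num_stages=4, issue_latency=1):
    """Average cycles per instruction over the whole run."""
    cycles = pipeline_cycles(num_instructions, num_stages, issue_latency)
    return cycles / num_instructions
```

For long instruction streams the base scalar case approaches a CPI of 1, while an issue latency of 2 approaches a CPI of 2.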

Processors and Coprocessors

The central processing unit (CPU) is essentially a scalar processor which may have many functional units (but usually at least one ALU, the arithmetic and logic unit).

Some systems may include one or more coprocessors which perform floating point or other specialized operations, INCLUDING I/O, regardless of what the textbook says.

Coprocessors cannot be used without the appropriate CPU.

Other terms for coprocessors include attached processors

or slave processors.

Coprocessors can be more powerful than the host CPU.

Instruction Set Architectures

CISC

Many different instructions

Many different operand data types

Many different operand addressing formats

Relatively small number of general purpose registers

Many instructions directly match high-level language constructions

RISC

Many fewer instructions than CISC (freeing chip space for more

functional units!)

Fixed instruction format (e.g. 32 bits) and simple operand

addressing

Relatively large number of registers

Small CPI (close to 1) and high clock rates

Architectural Distinctions

CISC

Unified cache for instructions and data (in most cases)

Microprogrammed control units and ROM in earlier processors (hard-wired control units now in some CISC systems)

RISC

Separate instruction and data caches

Hard-wired control units

CISC Scalar Processors

Early systems had only integer fixed point facilities.

Modern machines have both fixed and floating

point facilities, sometimes as parallel functional

units.

Many CISC scalar machines are underpipelined.

Representative systems:

VAX 8600

Motorola MC68040

Intel Pentium

RISC Scalar Processors

Designed to issue one instruction per cycle

RISC and CISC scalar processors should have the same performance if clock rate and program lengths are equal.

RISC moves less frequent operations into software, thus

dedicating hardware resources to the most frequently used

operations.

Representative systems:

Sun SPARC

Intel i860

Motorola M88100

AMD 29000

SPARC stands for Scalable Processor ARChitecture.

Scalability comes from the use of a number of register windows (explained on the next slide).

The floating point unit (FPU) is implemented on a separate chip.

Source: Kai Hwang

General characteristics

Large number of

instructions

More options in the

addressing modes

Lower clock rate

High CPI

Widely used in personal

computer (PC) industry

SPARC runs each procedure with a set of thirty-two 32-bit registers.

Eight of these registers are global registers shared by all procedures.

The remaining twenty-four registers are window registers associated with only one procedure.

The concept of using overlapped registers is the most important feature introduced.

Each register window is divided into three sections: Ins, Locals, and Outs.

Locals are addressable only by their own procedure, while Ins and Outs are shared among procedures.

SPARCs and Register Windows

The SPARC architecture makes clever use of the logical

procedure concept.

Each procedure usually has some input parameters, some

local variables, and some arguments it uses to call still

other procedures.

The SPARC registers are arranged so that the registers

addressed as Outs in one procedure become available as

Ins in a called procedure, thus obviating the need to copy

data between registers.

This is similar to the concept of a stack frame in a higher-

level language.
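
The overlap between a caller's Outs and a callee's Ins can be sketched as a toy model. This is an illustration under assumptions, not an ISA-accurate description: the class name, the direction in which the window pointer moves, and the default of eight windows are all hypothetical, and window overflow is not modelled.

```python
class RegisterWindows:
    """Toy model of overlapped register windows (not ISA-accurate)."""

    GLOBALS, INS, LOCALS, OUTS = 8, 8, 8, 8

    def __init__(self, num_windows=8):
        self.num_windows = num_windows
        self.globals = [0] * self.GLOBALS
        # Each window owns 16 physical registers (its ins and locals);
        # its outs are physically the ins of the next window.
        self.file = [0] * (num_windows * (self.INS + self.LOCALS))
        self.cwp = 0  # current window pointer

    def _physical(self, section, index):
        stride = self.INS + self.LOCALS
        base = self.cwp * stride
        if section == "in":
            offset = base + index
        elif section == "local":
            offset = base + self.INS + index
        elif section == "out":
            offset = base + stride + index  # start of the next window's ins
        else:
            raise ValueError(section)
        return offset % len(self.file)      # the file is treated as circular

    def read(self, section, index):
        return self.file[self._physical(section, index)]

    def write(self, section, index, value):
        self.file[self._physical(section, index)] = value

    def call(self):
        self.cwp = (self.cwp + 1) % self.num_windows

    def ret(self):
        self.cwp = (self.cwp - 1) % self.num_windows


rw = RegisterWindows()
rw.write("out", 0, 42)   # caller places an argument in its Outs
rw.call()
arg = rw.read("in", 0)   # callee finds it in its Ins; nothing was copied
```

The design point is that a call only moves the window pointer; the argument never moves between registers.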

64 bit RISC processor on a single chip

It executes 82 instructions, all of them in single clock

cycle

There are nine functional units connected by multiple data

paths

There are two floating point units namely multiplier unit

and adder unit, both of which can execute concurrently

CISC vs. RISC

CISC Advantages

Smaller program size (fewer instructions)

Simpler control unit design

Simpler compiler design

RISC Advantages

Has potential to be faster

Many more registers

RISC Problems

More complicated register decoding system

Hardwired control is less flexible than microcode

Superscalar, Vector Processors

Scalar processor: executes one instruction per

cycle, with only one instruction pipeline.

Superscalar processor: multiple instruction

pipelines, with multiple instructions issued per

cycle, and multiple results generated per cycle.

Vector processors issue single instructions that operate on multiple data items (arrays). This is conducive to pipelining, with one result produced per cycle.

Superscalar Constraints

It should be obvious that two instructions may not

be issued at the same time (e.g. in a superscalar

processor) if they are not independent.

This restriction ties the instruction-level parallelism

directly to the code being executed.

The instruction-issue degree in a superscalar

processor is usually limited to 2 to 5 in practice.
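
The independence requirement can be sketched as a register-level check between two instructions. This is a simplified illustration: it considers only data dependences through registers (read-after-write, write-after-read, write-after-write), ignores resource conflicts, and uses a hypothetical `Instr` record and register names.

```python
from typing import FrozenSet, NamedTuple


class Instr(NamedTuple):
    dest: str                 # register written
    sources: FrozenSet[str]   # registers read


def independent(first: Instr, second: Instr) -> bool:
    """True if `second` may issue in the same cycle as `first`."""
    raw = first.dest in second.sources   # second reads what first writes
    war = second.dest in first.sources   # second overwrites a source of first
    waw = first.dest == second.dest      # both write the same register
    return not (raw or war or waw)


add = Instr("r1", frozenset({"r2", "r3"}))   # r1 = r2 + r3
mul = Instr("r4", frozenset({"r1", "r5"}))   # r4 = r1 * r5 (needs r1)
sub = Instr("r6", frozenset({"r7", "r8"}))   # r6 = r7 - r8
```

Here `add` and `sub` could issue together, while `mul` must wait for the result of `add`.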

Superscalar Pipelines

One or more of the pipelines in a superscalar

processor may stall if insufficient functional units

exist to perform an instruction phase (fetch,

decode, execute, write back).

Ideally, no more than one stall cycle should occur.

In theory, a superscalar processor should be able

to achieve the same effective parallelism as a

vector machine with equivalent functional units.

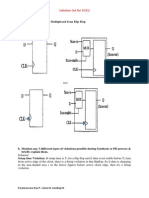

Typical Superscalar Architecture

A typical superscalar will have

multiple instruction pipelines

an instruction cache that can provide multiple

instructions per fetch

multiple buses among the function units

In theory, all functional units can be

simultaneously active.

Pipelining in Superscalar

Processors

A superscalar processor of

degree m can issue m

instructions per cycle

To fully utilize the processor, m instructions must be available for execution at every cycle.

Dependence on compilers is very high.

The figure depicts a pipeline issuing three instructions per cycle (m = 3).
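
The benefit of issuing m instructions per cycle can be sketched with an ideal cycle count. The model assumes no stalls, that m independent instructions are always available, and a four-stage pipeline; the function names are hypothetical.

```python
import math


def superscalar_cycles(num_instructions, degree, num_stages=4):
    """Cycles for an ideal degree-m superscalar with no stalls."""
    if num_instructions <= 0:
        return 0
    issue_groups = math.ceil(num_instructions / degree)
    return num_stages + issue_groups - 1


def speedup_over_scalar(num_instructions, degree, num_stages=4):
    """Ratio of base scalar cycles to superscalar cycles."""
    scalar = superscalar_cycles(num_instructions, 1, num_stages)
    return scalar / superscalar_cycles(num_instructions, degree, num_stages)
```

With 12 instructions and m = 3, the count drops from 15 cycles to 7 in this model; the speedup approaches m only for long streams that the compiler can keep full.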

Example 1

A typical superscalar

architecture

Multiple instruction pipelines

are used, instruction cache

supplies multiple instructions per

fetch

Multiple functional units are

built into integer unit and floating

point unit

Multiple data buses run through the functional units, and in theory, all such units can be run simultaneously

Example 2

A superscalar architecture by IBM

Three functional units, namely the branch processor, the fixed point processor, and the floating point processor, all of which can operate in parallel

The branch processor can facilitate execution of up to five instructions per cycle

A number of buses of varying width are provided to support high instruction and data bandwidths.

Processors

Advanced Processor Technology

Design Space of Processors

Instruction-Set Architectures

CISC Scalar Processors

RISC Scalar Processors

Superscalar and Vector Processors

Superscalar and Vector Processors

VLIW Architecture

Vector and Symbolic Processors

VLIW Architecture

VLIW = Very Long Instruction Word

Instructions usually hundreds of bits long.

Each instruction word essentially carries multiple

short instructions.

Each of the short instructions is effectively issued at the same time.

(This is related to the long words frequently used

in microcode.)

Compilers for VLIW architectures should optimally

try to predict branch outcomes to properly group

instructions.

Pipelining in VLIW Processors

Decoding of instructions is easier in VLIW than in

superscalars, because each region of an

instruction word is usually limited as to the type of

instruction it can contain.

Code density in VLIW is less than in superscalars, because if a region of a VLIW word isn't needed in a particular instruction, it must still exist (to be filled with a no-op).

Superscalars can be object-code compatible with scalar processors; this is difficult to achieve between VLIW parallel and non-parallel architectures.
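
The code-density point can be sketched by packing operations into fixed-format words and counting the no-ops. This is a toy packer under assumptions: the four-slot word layout is hypothetical, all operations are treated as independent, and a real compiler would also have to respect data dependences and branch behaviour.

```python
from collections import deque

SLOT_TYPES = ("int", "int", "fp", "mem")  # hypothetical word layout


def pack_vliw(operations, slot_types=SLOT_TYPES):
    """Greedily pack (name, type) operations into fixed-format words."""
    queues = {}
    for name, kind in operations:
        if kind not in slot_types:
            raise ValueError(f"no slot accepts operation type {kind!r}")
        queues.setdefault(kind, deque()).append(name)
    words = []
    while any(queues.values()):
        word = []
        for kind in slot_types:
            pending = queues.get(kind)
            # A slot with nothing to do must still be present in the word.
            word.append(pending.popleft() if pending else "nop")
        words.append(word)
    return words


def slot_utilization(words):
    """Fraction of slots holding a real operation."""
    slots = sum(len(w) for w in words)
    used = sum(op != "nop" for w in words for op in w)
    return used / slots if slots else 0.0


ops = [("add", "int"), ("sub", "int"), ("shl", "int"),
       ("fmul", "fp"), ("load", "mem")]
words = pack_vliw(ops)
```

Five operations occupy two four-slot words here, so three of the eight slots carry no-ops.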

Pipelining in VLIW Architecture

Each instruction in VLIW

architecture specifies multiple

instructions

Execute stage has multiple

operations

Instruction parallelism and data

movement in VLIW architecture

are specified at compile time

The CPI of a VLIW architecture can be lower than that of a superscalar processor

VLIW Opportunities

Random parallelism among scalar operations is

exploited in VLIW, instead of regular parallelism in

a vector or SIMD machine.

The efficiency of the machine is entirely dictated

by the success, or goodness, of the compiler in

planning the operations to be placed in the same

instruction words.

Different implementations of the same VLIW architecture may not be binary-compatible with each other, because their operation latencies can differ.

VLIW Summary

VLIW reduces the effort required to detect

parallelism using hardware or software techniques.

The main advantage of VLIW architecture is its

simplicity in hardware structure and instruction set.

Unfortunately, VLIW does require careful analysis

of code in order to compact the most appropriate

short instructions into a VLIW word.

Vector Processors

A vector processor is a coprocessor designed to

perform vector computations.

A vector is a one-dimensional array of data items

(each of the same data type).

Vector processors are often used in multipipelined

supercomputers.

Architectural types include:

register-to-register (with shorter instructions and

register files)

memory-to-memory (longer instructions with memory

addresses)

Vector Instructions

Register-based instructions

Memory-based instructions

$V_i$ represents a vector register of length n

$s_i$ represents a scalar register

M(1:n) represents a memory array of length n

Vector Pipelines

Scalar pipeline

Each Execute-Stage

operates upon a scalar

operand

Vector pipeline

Each Execute-Stage

operates upon a vector

operand

Characteristics

Register-to-Register Vector Instructions

Assume $V_i$ is a vector register of length n, $s_i$ is a scalar register, M(1:n) is a memory array of length n, and $\circ$ is a vector operation.

Typical instructions include the following:

$V_1 \circ V_2 \rightarrow V_3$ (element by element operation)

$s_1 \circ V_1 \rightarrow V_2$ (scaling of each element)

$V_1 \circ V_2 \rightarrow s_1$ (binary reduction, e.g. sum of products)

$M(1:n) \rightarrow V_1$ (load a vector register from memory)

$V_1 \rightarrow M(1:n)$ (store a vector register into memory)

$\circ V_1 \rightarrow V_2$ (unary vector, e.g. negation)

$\circ V_1 \rightarrow s_1$ (unary reduction, e.g. sum of vector)
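
The semantics of these instruction types can be sketched with plain Python lists standing in for vector registers and memory. This shows what each instruction computes, not how the hardware pipelines it; the function names are hypothetical and the binary reduction is fixed to a sum of products for simplicity.

```python
import operator
from functools import reduce


def binary_vector(op, v1, v2):        # V1 o V2 -> V3
    return [op(a, b) for a, b in zip(v1, v2)]


def scaling(op, s1, v1):              # s1 o V1 -> V2
    return [op(s1, a) for a in v1]


def binary_reduction(v1, v2):         # V1 o V2 -> s1 (sum of products)
    return sum(a * b for a, b in zip(v1, v2))


def vector_load(memory, start, n):    # M(1:n) -> V1
    return memory[start:start + n]


def vector_store(memory, start, v1):  # V1 -> M(1:n)
    memory[start:start + len(v1)] = v1


def unary_vector(op, v1):             # o V1 -> V2
    return [op(a) for a in v1]


def unary_reduction(op, v1):          # o V1 -> s1
    return reduce(op, v1)
```

For example, `binary_vector(operator.add, [1, 2, 3], [4, 5, 6])` adds element by element, and `unary_reduction(operator.add, [1, 2, 3])` sums a vector into a scalar.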

Memory-to-Memory Vector Instructions

Typical memory-to-memory vector instructions (using the same notation as given in the previous slide, with $\circ$ denoting a vector operation) include these:

$M_1(1:n) \circ M_2(1:n) \rightarrow M_3(1:n)$ (binary vector)

$s_1 \circ M_1(1:n) \rightarrow M_2(1:n)$ (scaling)

$\circ M_1(1:n) \rightarrow M_2(1:n)$ (unary vector)

$M_1(1:n) \circ M_2(1:n) \rightarrow M(k)$ (binary reduction)

Pipelines in Vector Processors

Vector processors can usually make effective use of large pipelines in parallel; the number of such parallel pipelines is effectively limited by the number of functional units.

As usual, the effectiveness of a pipelined system

depends on the availability and use of an effective

compiler to generate code that makes good use of

the pipeline facilities.

Symbolic Processors

Symbolic processors are somewhat unique in that

their architectures are tailored toward the

execution of programs in languages similar to

LISP, Scheme, and Prolog.

In effect, the hardware provides a facility for the

manipulation of the relevant data objects with

tailored instructions.

These processors (and programs of these types)

may invalidate assumptions made about more

traditional scientific and business computations.

Symbolic Processors

Applications in the areas of pattern recognition,

expert systems, artificial intelligence, cognitive

science, machine learning, etc.

Symbolic processors differ from numeric processors in terms of:

Data and knowledge representations

Primitive operations

Algorithmic behavior

Memory

I/O communication

Example

Symbolic Lisp Processor

Multiple processing units

are provided which can

work in parallel

Operands are fetched

from scratch pad or stack

Processor executes most

of the instructions in single

machine cycle

Hierarchical Memory Technology

Memory in a system is usually characterized as appearing at various levels (0, 1, ...) in a hierarchy, with level 0 being CPU registers and level 1 being the cache closest to the CPU.

Each level is characterized by five parameters:

access time $t_i$ (round-trip time from CPU to ith level)

memory size $s_i$ (number of bytes or words in the level)

cost per byte $c_i$

transfer bandwidth $b_i$ (rate of transfer between levels)

unit of transfer $x_i$ (grain size for transfers)

Memory Generalities

It is almost always the case that memories at lower-numbered levels, when compared to those at higher-numbered levels,

are faster to access,

are smaller in capacity,

are more expensive per byte,

have a higher bandwidth, and

have a smaller unit of transfer.

In general, then, $t_{i-1} < t_i$, $s_{i-1} < s_i$, $c_{i-1} > c_i$, $b_{i-1} > b_i$, and $x_{i-1} < x_i$.

The Inclusion Property

The inclusion property is stated as:

$M_1 \subset M_2 \subset \cdots \subset M_n$

The implication of the inclusion property is that all

items of information in the innermost memory

level (cache) also appear in the outer memory

levels.

The inverse, however, is not necessarily true. That is, the presence of a data item in level $M_{i+1}$ does not imply its presence in level $M_i$. We call a reference to a missing item a miss.

The Coherence Property

The inclusion property is, of course, never

completely true, but it does represent a desired

state. That is, as information is modified by the

processor, copies of that information should be

placed in the appropriate locations in outer

memory levels.

The requirement that copies of data items at

successive memory levels be consistent is called

the coherence property.

Coherence Strategies

Write-through

As soon as a data item in $M_i$ is modified, immediate update of the corresponding data item(s) in $M_{i+1}, M_{i+2}, \ldots, M_n$ is required. This is the most aggressive (and expensive) strategy.

Write-back

The data item in $M_{i+1}$ corresponding to a modified item in $M_i$ is not updated until it (or the block/page/etc. in $M_i$ that contains it) is replaced or removed. This is the most efficient approach, but cannot be used (without modification) when multiple processors share $M_{i+1}, \ldots, M_n$.
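
The difference between the two strategies can be sketched with a toy two-level memory that counts updates to the outer level. This is a simplified model under assumptions: a single processor, dictionaries standing in for the two levels, and a hypothetical class name.

```python
class TwoLevelMemory:
    """Toy hierarchy: `upper` plays M_i, `lower` plays M_(i+1)."""

    def __init__(self, policy):
        if policy not in ("write-through", "write-back"):
            raise ValueError(policy)
        self.policy = policy
        self.upper = {}        # address -> value
        self.lower = {}
        self.dirty = set()     # modified in upper, not yet copied to lower
        self.lower_writes = 0

    def write(self, addr, value):
        self.upper[addr] = value
        if self.policy == "write-through":
            self._update_lower(addr, value)   # propagate immediately
        else:
            self.dirty.add(addr)              # defer until eviction

    def evict(self, addr):
        if addr in self.dirty:
            self._update_lower(addr, self.upper[addr])
            self.dirty.discard(addr)
        self.upper.pop(addr, None)

    def _update_lower(self, addr, value):
        self.lower[addr] = value
        self.lower_writes += 1
```

Writing the same address three times costs three outer-level updates under write-through, but only one (at eviction) under write-back. Until that eviction the outer level holds stale data, which is why the unmodified scheme breaks down when other processors share it.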

Locality of References

In most programs, memory references are assumed to occur in patterns that are strongly related (statistically) to each of the following:

Temporal locality: if location M is referenced at time t, then it (location M) will be referenced again at some time $t + \Delta t$.

Spatial locality: if location M is referenced at time t, then another location $M \pm \Delta m$ will be referenced at time $t + \Delta t$.

Sequential locality: if location M is referenced at time t, then locations M+1, M+2, ... will be referenced at times $t + \Delta t$, $t + \Delta t'$, etc.

In each of these patterns, both $\Delta m$ and $\Delta t$ are small.

Hennessy and Patterson (H&P) suggest that 90 percent of the execution time in most programs is spent executing only 10 percent of the code.

Working Sets

The set of addresses (bytes, pages, etc.)

referenced by a program during the interval from t

to t+e, where e is called the working set

parameter, changes slowly.

This set of addresses, called the working set, should be present in the higher levels of M (those closest to the CPU) if a program is to execute efficiently (that is, without requiring numerous movements of data items from lower levels of M, those farther from the CPU). This is called the working set principle.
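
The working set over an interval can be sketched from an address trace. This is a minimal illustration: positions in the trace stand in for time, the `window` argument plays the role of the working set parameter, and the trace itself is made up.

```python
def working_set(trace, t, window):
    """Distinct addresses referenced in trace[t : t + window]."""
    return set(trace[t:t + window])


# Hypothetical address trace: a loop over pages 1-3, then a move to 7-8.
trace = [1, 2, 1, 3, 2, 1, 7, 8, 7, 8]
early = working_set(trace, 0, 4)
next_step = working_set(trace, 1, 4)
late = working_set(trace, 6, 4)
```

Sliding the interval by one reference leaves the set unchanged here, while a phase change in the program eventually replaces it.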

Hit Ratios

When a needed item (instruction or data) is found

in the level of the memory hierarchy being

examined, it is called a hit. Otherwise (when it is

not found), it is called a miss (and the item must

be obtained from a lower level in the hierarchy).

The hit ratio, $h_i$, for $M_i$ is the probability (between 0 and 1) that a needed data item is found when sought in memory level $M_i$.

The miss ratio is obviously just $1 - h_i$.

We assume $h_0 = 0$ and $h_n = 1$.

Access Frequencies

The access frequency $f_i$ to level $M_i$ is

$f_i = (1-h_1)(1-h_2) \cdots (1-h_{i-1})\, h_i$.

Note that $f_1 = h_1$, and

$\sum_{i=1}^{n} f_i = 1$.

Effective Access Times

There are different penalties associated with misses at different levels in the memory hierarchy.

A cache miss is typically 2 to 4 times as expensive as a cache hit (assuming success at the next level).

A page fault (miss) is 3 to 4 orders of magnitude more costly than a page hit.

The effective access time of a memory hierarchy can be expressed as

$$T_{eff} = \sum_{i=1}^{n} f_i t_i = h_1 t_1 + (1-h_1) h_2 t_2 + \cdots + (1-h_1)(1-h_2) \cdots (1-h_{n-1})\, h_n t_n$$

The first few terms in this expression dominate, but the

effective access time is still dependent on program behavior

and memory design choices.
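
The access frequencies and the effective access time can be computed directly from per-level hit ratios. The sketch below follows the definition of the access frequency as the product of the miss ratios of all inner levels times the hit ratio of the level itself; the three-level hit ratios and access times are hypothetical values chosen only to show the arithmetic.

```python
def access_frequencies(hit_ratios):
    """f_i = (1-h_1)(1-h_2)...(1-h_(i-1)) * h_i for levels 1..n."""
    freqs = []
    miss_so_far = 1.0
    for h in hit_ratios:
        freqs.append(miss_so_far * h)
        miss_so_far *= 1.0 - h
    return freqs


def effective_access_time(hit_ratios, access_times):
    """T_eff = sum of f_i * t_i over all levels."""
    if len(hit_ratios) != len(access_times):
        raise ValueError("need one access time per level")
    freqs = access_frequencies(hit_ratios)
    return sum(f * t for f, t in zip(freqs, access_times))


# Hypothetical three-level hierarchy; the last level always hits (h_n = 1).
h = [0.95, 0.99, 1.0]
t = [1.0, 10.0, 1000.0]   # access times in arbitrary units
freqs = access_frequencies(h)
t_eff = effective_access_time(h, t)
```

With these made-up numbers the outermost level is reached only 0.05 percent of the time, yet it contributes about a quarter of the effective access time, which shows how sensitive the result is to the hit ratios.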

Hierarchy Optimization

Given most, but not all, of the various parameters

for the levels in a memory hierarchy, and some

desired goal (cost, performance, etc.), it should be

obvious how to proceed in determining the

remaining parameters.

Example 4.7 in the text provides a particularly easy (but out of date) example which we won't bother with here.

Virtual Memory

To facilitate the use of memory hierarchies, the

memory addresses normally generated by modern

processors executing application programs are not

physical addresses, but are rather virtual addresses

of data items and instructions.

Physical addresses, of course, are used to

reference the available locations in the real

physical memory of a system.

Virtual addresses must be mapped to physical

addresses before they can be used.

Virtual to Physical Mapping

The mapping from virtual to physical addresses can be formally defined as follows:

$$f_t(v) = \begin{cases} m, & \text{if } m \in M \text{ has been allocated to store the data identified by virtual address } v \\ \emptyset, & \text{if data } v \text{ is missing in } M \end{cases}$$

The mapping returns a physical address if a

memory hit occurs. If there is a memory miss, the

referenced item has not yet been brought into

primary memory.

Mapping Efficiency

The efficiency with which the virtual to physical

mapping can be accomplished significantly affects

the performance of the system.

Efficient implementations are more difficult in

multiprocessor systems where additional problems

such as coherence, protection, and consistency

must be addressed.

Virtual Memory Models (1)

Private Virtual Memory

In this scheme, each processor has a separate virtual address

space, but all processors share the same physical address space.

Advantages:

Small processor address space

Protection on a per-page or per-process basis

Private memory maps, which require no locking

Disadvantages

The synonym problem: different virtual addresses in different/same virtual spaces point to the same physical page

The same virtual address in different virtual spaces may point to

different pages in physical memory

Virtual Memory Models (2)

Shared Virtual Memory

All processors share a single shared virtual address space, with each

processor being given a portion of it.

Some of the virtual addresses can be shared by multiple processors.

Advantages:

All addresses are unique

Synonyms are not allowed

Disadvantages

Processors must be capable of generating large virtual addresses

(usually > 32 bits)

Since the page table is shared, mutual exclusion must be used to

guarantee atomic updates

Segmentation must be used to confine each process to its own address

space

The address translation process is slower than with private (per

processor) virtual memory

Memory Allocation

Both the virtual address space and the physical

address space are divided into fixed-length pieces.

In the virtual address space these pieces are called

pages.

In the physical address space they are called page

frames.

The purpose of memory allocation is to allocate

pages of virtual memory using the page frames of

physical memory.

Address Translation Mechanisms

[Virtual to physical] address translation requires use of a

translation map.

The virtual address can be used with a hash function to locate the

translation map (which is stored in the cache, an associative

memory, or in main memory).

The translation map is comprised of a translation lookaside buffer,

or TLB (usually in associative memory) and a page table (or tables).

The virtual address is first sought in the TLB, and if that search succeeds, no further translation is necessary. Otherwise, the page table(s) must be referenced to obtain the translation result.

If the virtual address cannot be translated to a physical address

because the required page is not present in primary memory, a

page fault is reported.
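
The lookup order described above (TLB first, then page table, then page fault) can be sketched as follows. This is a simplified model under assumptions: a 4096-byte page, dictionaries standing in for the TLB and a single-level page table, no TLB replacement policy, and hypothetical names throughout.

```python
PAGE_SIZE = 4096  # assumed page size in bytes


class PageFault(Exception):
    """Raised when the required page is not in primary memory."""


def translate(vaddr, tlb, page_table, page_size=PAGE_SIZE):
    """Map a virtual address to a physical one via TLB, then page table."""
    vpn, offset = divmod(vaddr, page_size)   # virtual page number, offset
    frame = tlb.get(vpn)
    if frame is None:                        # TLB miss
        frame = page_table.get(vpn)
        if frame is None:                    # page not resident
            raise PageFault(f"virtual page {vpn} is not resident")
        tlb[vpn] = frame                     # remember the translation
    return frame * page_size + offset


page_table = {0: 5, 1: 9}   # virtual page -> page frame (made-up mapping)
tlb = {}
paddr = translate(4100, tlb, page_table)
```

The first reference to a page goes through the page table and fills the TLB; later references to the same page are resolved by the TLB alone.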

Anda mungkin juga menyukai

- Verilog HDL Samir Palnitkar Solution ManualDokumen7 halamanVerilog HDL Samir Palnitkar Solution ManualKanaga Varatharajan33% (3)

- Implementing A Source Synchronous Interface v2.0Dokumen47 halamanImplementing A Source Synchronous Interface v2.0Gautham PopuriBelum ada peringkat

- Raspberry Pi Internship PresentationDokumen2 halamanRaspberry Pi Internship PresentationJitendra SonawaneBelum ada peringkat

- Siemens Az S350 UDokumen69 halamanSiemens Az S350 UVikas Srivastav100% (12)

- P11Mca1 & P8Mca1 - Advanced Computer Architecture: Unit V Processors and Memory HierarchyDokumen45 halamanP11Mca1 & P8Mca1 - Advanced Computer Architecture: Unit V Processors and Memory HierarchyMohanty AyodhyaBelum ada peringkat

- Introduction To Embedded SystemDokumen42 halamanIntroduction To Embedded SystemMohd FahmiBelum ada peringkat

- Design Choices - EthernetDokumen29 halamanDesign Choices - EthernetJasmine MysticaBelum ada peringkat

- FPGA Design MethodologiesDokumen9 halamanFPGA Design MethodologiesSambhav VermanBelum ada peringkat

- Project FileDokumen131 halamanProject FileshaanjalalBelum ada peringkat

- 5 - Internal MemoryDokumen22 halaman5 - Internal Memoryerdvk100% (1)

- Unit 1 Computer ArchitectureDokumen36 halamanUnit 1 Computer ArchitectureAnshulBelum ada peringkat

- System On Chip SoC ReportDokumen14 halamanSystem On Chip SoC Reportshahd dawood100% (1)

- Low Power VLSI Design: J.Ramesh ECE Department PSG College of TechnologyDokumen146 halamanLow Power VLSI Design: J.Ramesh ECE Department PSG College of TechnologyVijay Kumar TBelum ada peringkat

- Single Chip Solution: Implementation of Soft Core Microcontroller Logics in FPGADokumen4 halamanSingle Chip Solution: Implementation of Soft Core Microcontroller Logics in FPGAInternational Organization of Scientific Research (IOSR)Belum ada peringkat

- Introduction To System On ChipDokumen110 halamanIntroduction To System On ChipKiệt PhạmBelum ada peringkat

- Microprocessor UNIT - IVDokumen87 halamanMicroprocessor UNIT - IVMani GandanBelum ada peringkat

- Introduction To The I/O Buses Technical and Historical Background For The I/O BusesDokumen9 halamanIntroduction To The I/O Buses Technical and Historical Background For The I/O BusesshankyguptaBelum ada peringkat

- 2.sys Bus ArchDokumen14 halaman2.sys Bus ArchChar MondeBelum ada peringkat

- Buffer InsertionDokumen47 halamanBuffer InsertionAkshay DesaiBelum ada peringkat

- Introduction To Asics: Ni Logic Pvt. LTD., PuneDokumen84 halamanIntroduction To Asics: Ni Logic Pvt. LTD., PuneankurBelum ada peringkat

- Chapter 8 - CPU PerformanceDokumen40 halamanChapter 8 - CPU PerformanceNurhidayatul FadhilahBelum ada peringkat

- Partitioning An ASICDokumen6 halamanPartitioning An ASICSwtz ZraonicsBelum ada peringkat

- SOC TestingDokumen27 halamanSOC TestingMaria AllenBelum ada peringkat

- Chapter 6 - IO (Part 2)Dokumen42 halamanChapter 6 - IO (Part 2)Nurhidayatul FadhilahBelum ada peringkat

- 03b - FIE - Parcial #3 PLD - Resumen 04c 09Dokumen36 halaman03b - FIE - Parcial #3 PLD - Resumen 04c 09amilcar93Belum ada peringkat

- Computer ArchitectureDokumen104 halamanComputer ArchitectureapuurvaBelum ada peringkat

- Arduino-Based Embedded System ModuleDokumen8 halamanArduino-Based Embedded System ModuleIJRASETPublicationsBelum ada peringkat
