IR Models

Uploaded by Vijaya Natarajan


Information Retrieval

Course Instructor : Dr S.Natarajan

Professor and Key Resource Person

Department of Information Science and Engineering

PES Institute of Technology

Bengaluru

Part II- IR

Introduction

Taxonomy of IR Models

Retrieval: Ad Hoc and Filtering

A Formal Characterization of IR Models

Classic IR

Basic Concepts

Boolean Model

Vector Model

Probabilistic Model

Alternative Set Theoretic Models

Alternative Algebraic Models


Alternative Probabilistic Models

Bayesian Networks

Inference Network Model

Belief Network Model

Comparison of Bayesian Network Models

Computational Costs of Bayesian Networks

The Impact of Bayesian Networks

Structured Text Retrieval Models

Model Based on Non-overlapping Lists

Model Based on Proximal Nodes

Models for Browsing

Flat Browsing

Structure Guided Browsing

The Hypertext Model

Trends and Research Issues

The Information Retrieval Cycle

Figure: stages Source Selection, Query Formulation, Search, Selection, Examination, and Delivery, passing along a resource, a query, a ranked list, and documents; feedback loops labelled source reselection, system discovery, vocabulary discovery, concept discovery, and document discovery.

The Central Problem in IR

Figure: the information seeker's concepts are expressed as query terms; the authors' concepts are expressed as document terms. Do these represent the same concepts?

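The IR Black Box

Figure: a representation function turns the query into a query representation; another representation function turns the documents into document representations, stored in an index; a comparison function matches the query representation against the index and returns the hits.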

Representing Text

Figure: the same black-box diagram (documents and query, representation functions, query and document representations, index, comparison function, hits), here focusing on how text is represented.


Representing Documents

Document 1: The quick brown fox jumped over the lazy dog's back.

Document 2: Now is the time for all good men to come to the aid of their party.

Stopword list: the, is, for, to, of

Term   Document 1  Document 2
aid    0           1
all    0           1
back   1           0
brown  1           0
come   0           1
dog    1           0
fox    1           0
good   0           1
jump   1           0
lazy   1           0
men    0           1
now    0           1
over   1           0
party  0           1
quick  1           0
their  0           1
time   0           1

Boolean View of a Collection

Term   Doc 1  Doc 2  Doc 3  Doc 4  Doc 5  Doc 6  Doc 7  Doc 8
aid    0      0      0      1      0      0      0      1
all    0      1      0      1      0      1      0      0
back   1      0      1      0      0      0      1      0
brown  1      0      1      0      1      0      1      0
come   0      1      0      1      0      1      0      1
dog    0      0      1      0      1      0      0      0
fox    0      0      1      0      1      0      1      0
good   0      1      0      1      0      1      0      1
jump   0      0      1      0      0      0      0      0
lazy   1      0      1      0      1      0      1      0
men    0      1      0      1      0      0      0      1
now    0      1      0      0      0      1      0      1
over   1      0      1      0      1      0      1      1
party  0      0      0      0      0      1      0      1
quick  1      0      1      0      0      0      0      0
their  1      0      0      0      1      0      1      0
time   0      1      0      1      0      1      0      0

Each column represents the view of a particular document: what terms are contained in this document?

Each row represents the view of a particular term: what documents contain this term?

To execute a query, pick out the rows corresponding to the query terms and then apply the truth table of the corresponding Boolean operator.

Sample Queries

Term          Doc 1  Doc 2  Doc 3  Doc 4  Doc 5  Doc 6  Doc 7  Doc 8
dog           0      0      1      0      1      0      0      0
fox           0      0      1      0      1      0      1      0
dog ∧ fox     0      0      1      0      1      0      0      0
dog ∨ fox     0      0      1      0      1      0      1      0
dog ∧ ¬fox    0      0      0      0      0      0      0      0
fox ∧ ¬dog    0      0      0      0      0      0      1      0

dog AND fox: Doc 3, Doc 5

dog OR fox: Doc 3, Doc 5, Doc 7

dog NOT fox: empty

fox NOT dog: Doc 7

Term          Doc 1  Doc 2  Doc 3  Doc 4  Doc 5  Doc 6  Doc 7  Doc 8
good          0      1      0      1      0      1      0      1
party         0      0      0      0      0      1      0      1
over          1      0      1      0      1      0      1      1
g ∧ p         0      0      0      0      0      1      0      1
g ∧ p ∧ ¬o    0      0      0      0      0      1      0      0

good AND party: Doc 6, Doc 8

good AND party NOT over: Doc 6
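A minimal sketch of the row-picking procedure, in Python: each row of the incidence table becomes the set of documents whose entry is 1, and AND, OR and NOT become set intersection, union and complement. Only the five rows used by the sample queries are copied; the helper names (`postings`, `AND`, `OR`, `NOT`) are illustrative, not taken from the slides.

```python
# Rows of the term-document matrix used by the sample queries:
# character i of each string is the entry for Doc i+1.
ROWS = {
    "dog":   "00101000",
    "fox":   "00101010",
    "good":  "01010101",
    "party": "00000101",
    "over":  "10101011",
}
ALL_DOCS = frozenset(range(1, 9))


def postings(term: str) -> frozenset:
    """Turn a 0/1 row into the set of document numbers containing the term."""
    return frozenset(i + 1 for i, bit in enumerate(ROWS[term]) if bit == "1")


def AND(a, b):
    return a & b          # intersection: truth table of AND


def OR(a, b):
    return a | b          # union: truth table of OR


def NOT(a):
    return ALL_DOCS - a   # complement with respect to the whole collection


dog, fox = postings("dog"), postings("fox")
good, party, over = postings("good"), postings("party"), postings("over")

queries = {
    "dog AND fox": AND(dog, fox),
    "dog OR fox": OR(dog, fox),
    "dog NOT fox": AND(dog, NOT(fox)),
    "fox NOT dog": AND(fox, NOT(dog)),
    "good AND party": AND(good, party),
    "good AND party NOT over": AND(AND(good, party), NOT(over)),
}
for name, docs in queries.items():
    print(f"{name}: {sorted(docs) or 'empty'}")
```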

The Boolean Model

Example:

Terms: K1, ..., K8.

Documents:

1. D1 = {K1, K2, K3, K4, K5}

2. D2 = {K1, K2, K3, K4}

3. D3 = {K2, K4, K6, K8}

4. D4 = {K1, K3, K5, K7}

5. D5 = {K4, K5, K6, K7, K8}

6. D6 = {K1, K2, K3, K4}

Query: K1 ∧ (K2 ∨ ¬K3)

Answer: {D1, D2, D4, D6} ∩ ({D1, D2, D3, D6} ∪ {D3, D5})

= {D1, D2, D6}

(1,0,0) means present in Ka and absent in Kb and Kc.
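The same answer can be checked mechanically. The sketch below uses plain Python sets (the function names are hypothetical) and evaluates K1 ∧ (K2 ∨ ¬K3) twice: directly, and through its disjunctive normal form, the set of (K1, K2, K3) patterns that satisfy the query, which is where a component such as (1,0,0) comes from.

```python
from itertools import product

DOCS = {
    "D1": {"K1", "K2", "K3", "K4", "K5"},
    "D2": {"K1", "K2", "K3", "K4"},
    "D3": {"K2", "K4", "K6", "K8"},
    "D4": {"K1", "K3", "K5", "K7"},
    "D5": {"K4", "K5", "K6", "K7", "K8"},
    "D6": {"K1", "K2", "K3", "K4"},
}


def query(terms):
    """q = K1 AND (K2 OR NOT K3), evaluated directly on a set of terms."""
    return "K1" in terms and ("K2" in terms or "K3" not in terms)


# Disjunctive normal form: every (K1, K2, K3) pattern that satisfies q.
DNF = {bits for bits in product((0, 1), repeat=3)
       if query({f"K{i + 1}" for i, b in enumerate(bits) if b})}


def pattern(terms):
    """Binary pattern of a document over the query terms (K1, K2, K3)."""
    return tuple(int(k in terms) for k in ("K1", "K2", "K3"))


direct = {d for d, terms in DOCS.items() if query(terms)}
via_dnf = {d for d, terms in DOCS.items() if pattern(terms) in DNF}
print(sorted(direct), sorted(DNF, reverse=True))
```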


The Vector Model

Define:

$w_{ij} > 0$ whenever $k_i \in d_j$

$w_{iq} \geq 0$ associated with the pair $(k_i, q)$

$\vec{d_j} = (w_{1j}, w_{2j}, \ldots, w_{tj})$

$\vec{q} = (w_{1q}, w_{2q}, \ldots, w_{tq})$

To each term $k_i$ is associated a unit vector $\vec{i}$

The unit vectors $\vec{i}$ and $\vec{j}$ are assumed to be orthonormal (i.e., index terms are assumed to occur independently within the documents)

The t unit vectors $\vec{i}$ form an orthonormal basis for a t-dimensional space

In this space, queries and documents are represented as weighted vectors

The Vector Model: Example I

    k1  k2  k3  q • dj
d1  1   0   1   2
d2  1   0   0   1
d3  0   1   1   2
d4  1   0   0   1
d5  1   1   1   3
d6  1   1   0   2
d7  0   1   0   1
q   1   1   1

Figure: documents d1 to d7 plotted as points in the three-dimensional space spanned by k1, k2, k3.

The Vector Model: Example II

Figure: documents d1 to d7 plotted as points in the three-dimensional space spanned by k1, k2, k3.

    k1  k2  k3  q • dj
d1  1   0   1   4
d2  1   0   0   1
d3  0   1   1   5
d4  1   0   0   1
d5  1   1   1   6
d6  1   1   0   3
d7  0   1   0   2
q   1   2   3

The Vector Model: Example III

Figure: documents d1 to d7 plotted as points in the three-dimensional space spanned by k1, k2, k3.

    k1  k2  k3  q • dj
d1  2   0   1   5
d2  1   0   0   1
d3  0   1   3   11
d4  2   0   0   2
d5  1   2   4   17
d6  1   2   0   5
d7  0   5   0   10
q   1   2   3
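The last column of each example is the inner product of the query and document weight vectors. A small sketch reproducing those columns follows; the `cosine` helper is an added assumption, showing the common length-normalized variant of the same score, and is not taken from these slides.

```python
import math

# (k1, k2, k3) weights of d1..d7 and of q, copied from Examples I-III.
DOCS_I = [(1, 0, 1), (1, 0, 0), (0, 1, 1), (1, 0, 0), (1, 1, 1), (1, 1, 0), (0, 1, 0)]
DOCS_III = [(2, 0, 1), (1, 0, 0), (0, 1, 3), (2, 0, 0), (1, 2, 4), (1, 2, 0), (0, 5, 0)]
EXAMPLES = {
    "I": (DOCS_I, (1, 1, 1)),
    "II": (DOCS_I, (1, 2, 3)),      # same documents as Example I, new query weights
    "III": (DOCS_III, (1, 2, 3)),
}


def dot(u, v):
    """Inner product q . d_j, the last column of each example table."""
    return sum(a * b for a, b in zip(u, v))


def cosine(u, v):
    """Inner product divided by both vector lengths (length-normalized score)."""
    return dot(u, v) / (math.sqrt(dot(u, u)) * math.sqrt(dot(v, v)))


for name, (docs, q) in EXAMPLES.items():
    scores = {f"d{j + 1}": dot(d, q) for j, d in enumerate(docs)}
    ranking = sorted(scores, key=scores.get, reverse=True)
    print(f"Example {name}: q . dj = {list(scores.values())}, ranking = {ranking}")
```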


Probabilistic Model

Objective: to capture the IR problem using a

probabilistic framework

Given a user query, there is an ideal answer set

Querying as specification of the properties of

this ideal answer set (clustering)

But, what are these properties?

Guess at the beginning what they could be (i.e.,

guess initial description of ideal answer set)

Improve by iteration

Probabilistic Model

An initial set of documents is retrieved somehow

User inspects these docs looking for the relevant

ones (in truth, only top 10-20 need to be inspected)

IR system uses this information to refine

description of ideal answer set

By repeating this process, it is expected that the

description of the ideal answer set will improve

Always keep in mind the need to guess, at the very

beginning, the description of the ideal answer set

Description of ideal answer set is modeled in

probabilistic terms

Conditional

Probability


Pluses and Minuses

Advantages:

Docs ranked in decreasing order of probability of

relevance

Disadvantages:

need to guess initial estimates for P(ki | R)

method does not take into account tf and idf factors
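The slides' formulas for the probabilistic model did not survive extraction. As a hedged sketch only, the code below uses the textbook binary independence ranking (the formulation in Baeza-Yates and Ribeiro-Neto's Modern Information Retrieval, which these notes appear to follow): each query term present in a document contributes log[P(ki|R) / (1 - P(ki|R))] + log[(1 - P(ki|not R)) / P(ki|not R)], starting from the initial guesses P(ki|R) = 0.5 and P(ki|not R) = ni/N and then refining from the top-ranked documents. The collection is the eight-document Boolean view with the query "dog fox"; treating the top two documents as relevant is an assumption standing in for the user's judgement.

```python
import math

# Only the query terms matter for the score, so each document keeps just the
# query terms it contains (from the Boolean-view table, N = 8 documents).
DOCS = {"D1": set(), "D2": set(), "D3": {"dog", "fox"}, "D4": set(),
        "D5": {"dog", "fox"}, "D6": set(), "D7": {"fox"}, "D8": set()}
QUERY = ["dog", "fox"]
N = len(DOCS)


def n(term):
    """n_i: number of documents containing the term."""
    return sum(term in terms for terms in DOCS.values())


def term_weight(p_rel, p_nonrel):
    """Log-odds weight from P(k_i|R) and P(k_i|not R)."""
    return math.log(p_rel / (1 - p_rel)) + math.log((1 - p_nonrel) / p_nonrel)


def rank(estimates):
    """Sum the weights of the query terms present in each document."""
    scores = {d: sum(term_weight(*estimates[t]) for t in QUERY if t in terms)
              for d, terms in DOCS.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Initial guess: P(k_i|R) = 0.5 and P(k_i|not R) = n_i / N.
first = rank({t: (0.5, n(t) / N) for t in QUERY})

# One refinement step. Assumption: the top V = 2 documents stand in for the
# ones judged relevant; 0.5 is added to avoid zero or one estimates.
V = [d for d, _ in first[:2]]


def refined(t):
    v_i = sum(t in DOCS[d] for d in V)
    return (v_i + 0.5) / (len(V) + 1), (n(t) - v_i + 0.5) / (N - len(V) + 1)


second = rank({t: refined(t) for t in QUERY})
print("initial:", first[:3])
print("refined:", second[:3])
```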

Brief Comparison of Classic Models

Boolean model does not provide for partial matches

and is considered to be the weakest classic model

Salton and Buckley did a series of experiments that

indicate that, in general, the vector model outperforms

the probabilistic model with general collections

This seems also to be the view of the research

community


Set Theoretic Models

The Boolean model imposes a binary criterion

for deciding relevance

The question of how to extend the Boolean

model to accommodate partial matching and a

ranking has attracted considerable attention in

the past

We now discuss two set theoretic models for

this:

Fuzzy Set Model

Extended Boolean Model

Figure: Boolean logic versus fuzzy logic.

Figure: fuzzy membership functions (two examples).

Membership function of fuzzy logic

Figure: degree of membership (DOM), from 0 to 1 with 0.5 marked, of the fuzzy values Young, Middle, and Old as a function of Age (25, 40, 55).

Fuzzy values have associated degrees of membership in the set.

Figure: membership (0 to 1) of the linguistic values short, medium, and tall as a function of Height (160, 170, 180 cm), and of light, medium, and heavy as a function of Weight (50, 70, 90 kg).

Fuzzy Set Model

Queries and docs represented by sets of index

terms: matching is approximate from the start

This vagueness can be modeled using a fuzzy

framework, as follows:

with each term is associated a fuzzy set

each doc has a degree of membership in this fuzzy

set

This interpretation provides the foundation for many

models for IR based on fuzzy theory

Here, we discuss the model proposed by Ogawa,

Morita, and Kobayashi (1991)

Alternative Set Theoretic Models: Fuzzy Set Model

Model

a query term: a fuzzy set

a document: a degree of membership in this set

membership function

Associate a membership function with the elements

of the class

0: no membership in the set

1: full membership

between 0 and 1: marginal elements of the set

Fuzzy Set Theory

Framework for representing classes whose

boundaries are not well defined

Key idea is to introduce the notion of a degree of

membership associated with the elements of a set

This degree of membership varies from 0 to 1 and

allows modeling the notion of marginal membership

Thus, membership is now a gradual notion, contrary

to the crisp notion enforced by classic Boolean logic

Examples

Assume $U = \{d_1, d_2, d_3, d_4, d_5, d_6\}$

Let A and B be $\{d_1, d_2, d_3\}$ and $\{d_2, d_3, d_4\}$, respectively.

Assume

$\mu_A = \{d_1{:}0.8,\ d_2{:}0.7,\ d_3{:}0.6,\ d_4{:}0,\ d_5{:}0,\ d_6{:}0\}$

and

$\mu_B = \{d_1{:}0,\ d_2{:}0.6,\ d_3{:}0.8,\ d_4{:}0.9,\ d_5{:}0,\ d_6{:}0\}$

Complement, with $\mu_{\bar{A}}(u) = 1 - \mu_A(u)$:

$\mu_{\bar{A}} = \{d_1{:}0.2,\ d_2{:}0.3,\ d_3{:}0.4,\ d_4{:}1,\ d_5{:}1,\ d_6{:}1\}$

Union, with $\mu_{A \cup B}(u) = \max(\mu_A(u), \mu_B(u))$:

$\mu_{A \cup B} = \{d_1{:}0.8,\ d_2{:}0.7,\ d_3{:}0.8,\ d_4{:}0.9,\ d_5{:}0,\ d_6{:}0\}$

Intersection, with $\mu_{A \cap B}(u) = \min(\mu_A(u), \mu_B(u))$:

$\mu_{A \cap B} = \{d_1{:}0,\ d_2{:}0.6,\ d_3{:}0.6,\ d_4{:}0,\ d_5{:}0,\ d_6{:}0\}$
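The three operations can be checked against the example with a few lines of Python; dictionaries map each document to its degree of membership (the function names are illustrative).

```python
# Membership degrees from the example (U = {d1, ..., d6}).
A = {"d1": 0.8, "d2": 0.7, "d3": 0.6, "d4": 0.0, "d5": 0.0, "d6": 0.0}
B = {"d1": 0.0, "d2": 0.6, "d3": 0.8, "d4": 0.9, "d5": 0.0, "d6": 0.0}


def complement(X):
    """mu_notA(u) = 1 - mu_A(u); rounding only hides binary floating-point noise."""
    return {u: round(1 - m, 10) for u, m in X.items()}


def union(X, Y):
    """mu_{A union B}(u) = max(mu_A(u), mu_B(u))."""
    return {u: max(X[u], Y[u]) for u in X}


def intersection(X, Y):
    """mu_{A intersect B}(u) = min(mu_A(u), mu_B(u))."""
    return {u: min(X[u], Y[u]) for u in X}


print(complement(A))
print(union(A, B))
print(intersection(A, B))
```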

Fuzzy Information Retrieval

(Continued)

physical meaning

A document $d_j$ belongs to the fuzzy set associated to the term $k_i$ if its own terms are related to $k_i$, i.e., $\mu_{i,j} = 1$.

If there is at least one index term $k_l$ of $d_j$ which is strongly related to the index $k_i$, then $\mu_{i,j} \approx 1$: $k_i$ is a good fuzzy index.

When all index terms of $d_j$ are only loosely related to $k_i$, $\mu_{i,j} \approx 0$: $k_i$ is not a good fuzzy index.

Fuzzy Information Retrieval

basic idea

Expand the set of index terms in the query

with related terms (from the thesaurus) such

that additional relevant documents can be

retrieved

A thesaurus can be constructed by defining a

term-term correlation matrix c whose rows

and columns are associated to the index terms

in the document collection

keyword connection matrix
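The slides do not spell out how the matrix is built. A hedged sketch, assuming the formulation of Ogawa, Morita, and Kobayashi as presented in Baeza-Yates and Ribeiro-Neto: the correlation between terms is c(i,l) = n(i,l) / (n(i) + n(l) - n(i,l)), where n(i) and n(l) count the documents containing each term and n(i,l) those containing both, and a document's membership in the fuzzy set of term ki is mu(i,j) = 1 - product over the terms kl of dj of (1 - c(i,l)). The collection reused here is the eight-document Boolean view from earlier.

```python
# Terms of each document in the eight-document Boolean view (stopwords removed).
TEXT = {
    "D1": "back brown lazy over quick their",
    "D2": "all come good men now time",
    "D3": "back brown dog fox jump lazy over quick",
    "D4": "aid all come good men time",
    "D5": "brown dog fox lazy over their",
    "D6": "all come good now party time",
    "D7": "back brown fox lazy over their",
    "D8": "aid come good men now over party",
}
DOCS = {d: set(text.split()) for d, text in TEXT.items()}


def n(*terms):
    """Number of documents containing all of the given terms."""
    return sum(all(t in doc for t in terms) for doc in DOCS.values())


def c(i, l):
    """Keyword connection factor c_{i,l} = n_il / (n_i + n_l - n_il)."""
    n_il = n(i, l)
    return n_il / (n(i) + n(l) - n_il)


def mu(i, d):
    """Membership of document d in the fuzzy set of term i: 1 - prod(1 - c_{i,l})."""
    product = 1.0
    for l in DOCS[d]:
        product *= 1 - c(i, l)
    return 1 - product


for d in DOCS:
    print(d, round(mu("dog", d), 4))
```

Doc 7 does not contain "dog", yet its membership in the fuzzy set of "dog" comes out at about 0.97, because its terms co-occur with "dog" elsewhere in the collection; a document such as Doc 2, whose terms never co-occur with "dog", gets 0.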

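Example

$q = k_a \wedge (k_b \vee \neg k_c)$

$= (k_a \wedge k_b \wedge k_c) \vee (k_a \wedge k_b \wedge \neg k_c) \vee (k_a \wedge \neg k_b \wedge \neg k_c)$

$= cc_1 + cc_2 + cc_3$

Figure: Venn diagram of the fuzzy sets $D_a$, $D_b$, $D_c$, with the regions of the conjunctive components $cc_1$, $cc_2$, $cc_3$ marked.

$D_a$: the fuzzy set of documents associated to the index $k_a$; $d_j \in D_a$ has a degree of membership $\mu_{a,j}$ > a predefined threshold K

$\bar{D}_a$: the fuzzy set of documents associated to the index $\neg k_a$ (the negation of index term $k_a$)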

Example

$\mu_{q,j} = \mu_{cc_1 + cc_2 + cc_3,\,j} = 1 - \prod_{i=1}^{3} (1 - \mu_{cc_i,j})$

$= 1 - (1 - \mu_{a,j}\,\mu_{b,j}\,\mu_{c,j}) \times (1 - \mu_{a,j}\,\mu_{b,j}\,(1 - \mu_{c,j})) \times (1 - \mu_{a,j}\,(1 - \mu_{b,j})\,(1 - \mu_{c,j}))$

Query $q = k_a \wedge (k_b \vee \neg k_c)$

disjunctive normal form $\vec{q}_{dnf} = (1,1,1) \vee (1,1,0) \vee (1,0,0)$

(1) the degree of membership in a disjunctive fuzzy set is computed using an algebraic sum (instead of the max function), which varies more smoothly

(2) the degree of membership in a conjunctive fuzzy set is computed using an algebraic product (instead of the min function)

Recall $\mu_{\bar{A}}(u) = 1 - \mu_A(u)$
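The membership formula for this query can be sketched in Python (`mu_query` is a hypothetical name). With memberships of exactly 0 or 1 it reproduces the Boolean answer for each conjunctive component; graded memberships give a value between 0 and 1 that can be used for ranking.

```python
from math import prod


def mu_query(mu_a, mu_b, mu_c):
    """mu_{q,j} for q = k_a AND (k_b OR NOT k_c), via its disjunctive normal form."""
    cc = (
        mu_a * mu_b * mu_c,                # cc1 = (1,1,1): algebraic product
        mu_a * mu_b * (1 - mu_c),          # cc2 = (1,1,0), NOT as 1 - mu
        mu_a * (1 - mu_b) * (1 - mu_c),    # cc3 = (1,0,0)
    )
    # Algebraic sum over the components: 1 - prod_i (1 - mu_{cc_i, j}).
    return 1 - prod(1 - x for x in cc)


# Crisp memberships reproduce the Boolean answer ...
print(mu_query(1, 1, 0), mu_query(1, 0, 1))
# ... while graded memberships give a value usable for ranking.
print(mu_query(0.5, 0.5, 0.5))
```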

Fuzzy Set Model

Q: gold silver truck

D1: Shipment of gold damaged in a fire

D2: Delivery of silver arrived in a silver truck

D3: Shipment of gold arrived in a truck

IDF (Select Keywords), using base-10 logarithms

a = in = of = log(3/3) = 0

arrived = gold = shipment = truck = log(3/2) = 0.176

damaged = delivery = fire = silver = log(3/1) = 0.477

8 Keywords (Dimensions) are selected

arrived(1), damaged(2), delivery(3), fire(4), gold(5),

silver(6), shipment(7), truck(8)
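The IDF values above (base-10 logarithm of N / ni with N = 3) can be reproduced with a short sketch; lowercasing and whitespace tokenization are assumptions, since the slides do not say how the text was tokenized. Terms with IDF 0 are dropped, leaving the eight keyword dimensions.

```python
import math

DOCS = {
    "D1": "Shipment of gold damaged in a fire",
    "D2": "Delivery of silver arrived in a silver truck",
    "D3": "Shipment of gold arrived in a truck",
}
N = len(DOCS)

# Assumed tokenization: lowercase and split on whitespace.
terms = {d: set(text.lower().split()) for d, text in DOCS.items()}
vocabulary = sorted(set().union(*terms.values()))

# idf_i = log10(N / n_i), where n_i is the number of documents containing term i.
idf = {t: math.log10(N / sum(t in ts for ts in terms.values())) for t in vocabulary}

# Terms occurring in every document get idf 0 and are not used as keywords.
keywords = [t for t in vocabulary if idf[t] > 0]
for t in keywords:
    print(f"{t:9s} {idf[t]:.3f}")
```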

Fuzzy Set Model


Sim(q,d): Alternative 1

$Sim(q,d_3) > Sim(q,d_2) > Sim(q,d_1)$

Sim(q,d): Alternative 2

$Sim(q,d_3) > Sim(q,d_2) > Sim(q,d_1)$

Fuzzy Information Retrieval

Fuzzy IR models have been discussed mainly in the

literature associated with fuzzy theory

Experiments with standard test collections are not

available

Difficult to compare at this time


Extended Boolean Model

Boolean retrieval is simple and elegant

But, no ranking is provided

How to extend the model?

interpret conjunctions and disjunctions in terms of

Euclidean distances

Extended Boolean Model

Boolean model is simple and elegant.

But, no provision for a ranking

As with the fuzzy model, a ranking can be

obtained by relaxing the condition on set

membership

Extend the Boolean model with the notions of

partial matching and term weighting

Combine characteristics of the Vector model

with properties of Boolean algebra

The Idea

The extended Boolean model (introduced by

Salton, Fox, and Wu, 1983) is based on a

critique of a basic assumption in Boolean

algebra

Let,

$q = k_x \wedge k_y$

$w_{x,j} = \dfrac{f_{x,j} \times idf_x}{\max_i idf_i}$ associated with $[k_x, d_j]$

Further, $w_{x,j} = x$ and $w_{y,j} = y$
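The Euclidean-distance interpretation is usually written, for two terms, as sim(q_or, dj) = sqrt((x^2 + y^2) / 2) and sim(q_and, dj) = 1 - sqrt(((1 - x)^2 + (1 - y)^2) / 2), following Salton, Fox, and Wu. Those formulas are not legible in these slides, so the sketch below supplies them as an assumption; the `weight` helper follows the normalization just given, with f(x,j) assumed to be already normalized to [0, 1].

```python
import math


def weight(f, idf, max_idf):
    """w_{x,j} = f_{x,j} * idf_x / max_i idf_i (f assumed already in [0, 1])."""
    return f * idf / max_idf


def sim_or(x, y):
    """q = k_x OR k_y: distance from the point (0, 0), normalized to [0, 1]."""
    return math.sqrt((x ** 2 + y ** 2) / 2)


def sim_and(x, y):
    """q = k_x AND k_y: 1 minus the normalized distance from the point (1, 1)."""
    return 1 - math.sqrt(((1 - x) ** 2 + (1 - y) ** 2) / 2)


for x, y in [(1, 1), (1, 0.5), (1, 0), (0.5, 0.5), (0, 0)]:
    print(f"x={x}, y={y}: and={sim_and(x, y):.3f}, or={sim_or(x, y):.3f}")
```

Unlike the Boolean model, a document containing only one of the two terms gets a partial score for the AND query (about 0.293 for x = 1, y = 0) instead of 0.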

Conclusions

Model is quite powerful

Properties are interesting and might be useful

Computation is somewhat complex

However, the distributive law does not hold

for the ranking computation:

$q_1 = (k_1 \vee k_2) \wedge k_3$

$q_2 = (k_1 \wedge k_3) \vee (k_2 \wedge k_3)$

$sim(q_1, d_j) \neq sim(q_2, d_j)$
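The failure of distributivity can be seen numerically. This sketch assumes the generalized p-norm form of the model for m terms, OR = ((sum of xi^p) / m)^(1/p) and AND = 1 - ((sum of (1 - xi)^p) / m)^(1/p), with p = 2; the document weights are made up for illustration.

```python
P = 2  # exponent of the p-norm; p = 2 gives the Euclidean version


def OR(*xs):
    return (sum(x ** P for x in xs) / len(xs)) ** (1 / P)


def AND(*xs):
    return 1 - (sum((1 - x) ** P for x in xs) / len(xs)) ** (1 / P)


# Hypothetical normalized weights of k1, k2, k3 in a document d_j.
w1, w2, w3 = 1.0, 0.0, 1.0

q1 = AND(OR(w1, w2), w3)              # (k1 OR k2) AND k3
q2 = OR(AND(w1, w3), AND(w2, w3))     # (k1 AND k3) OR (k2 AND k3)
print(f"sim(q1, dj) = {q1:.4f}, sim(q2, dj) = {q2:.4f}")
```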


Latent Semantic Analysis

SVD: Dimensionality Reduction

LSI, SVD, & Eigenvectors

SVD decomposes:

Term x Document matrix X as

$X = T S D^T$

where T and D are the left and right singular vector matrices, and

S is a diagonal matrix of singular values

Corresponds to eigenvector-eigenvalue

decomposition: $Y = V L V^T$

where V is orthonormal and L is diagonal

T: matrix of eigenvectors of $Y = X X^T$

D: matrix of eigenvectors of $Y = X^T X$

S: diagonal matrix whose entries are the square roots of the eigenvalues in L ($S^2 = L$)
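The correspondence can be checked numerically with NumPy (`numpy.linalg.svd`, `numpy.linalg.eigvalsh`). The 4 x 3 matrix below is a hypothetical example; the checks confirm that the columns of T and D are eigenvectors of X X^T and X^T X, with eigenvalues equal to the squared singular values.

```python
import numpy as np

# Hypothetical 4-term x 3-document matrix.
X = np.array([[1.0, 0.0, 1.0],
              [0.0, 1.0, 1.0],
              [1.0, 1.0, 0.0],
              [0.0, 0.0, 1.0]])

T, s, Dt = np.linalg.svd(X, full_matrices=False)   # X = T S D^T
D = Dt.T

# Columns of T are eigenvectors of X X^T, columns of D of X^T X, and the
# eigenvalues are the squared singular values (L = S^2).
print(np.allclose(X @ X.T @ T, T * s**2))
print(np.allclose(X.T @ X @ D, D * s**2))
print(np.allclose(np.sort(np.linalg.eigvalsh(X.T @ X)), np.sort(s**2)))
```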

Computing Similarity in LSI

SVD details

SVD Details (contd)

Example

+ Q: Light waves.

+ D1: Particle and wave models of light.

+ D2: Surfing on the waves under star lights.

+ D3: Electro-magnetic models for photons.

How LSI Works?

+ Uses a multidimensional vector space to place all documents and terms.

+ Each dimension in that space corresponds to a concept existing in the collection.

+ Thus the underlying topics of a document are encoded in a vector.

+ Related terms common to a document and a query pull the document and query vectors close to each other.

GOOGLE USES LSI

Increasing its weight in ranking pages

A ~ sign before the search term stands for semantic search

~phone

the first link appearing is the page for Nokia,

although the page does not contain the word phone

~humor

retrieved pages contain its synonyms: comedy, jokes,

funny

Google AdSense sandbox

check which advertisements Google would put on your

page

Computing an Example

Let $(M_{ij})$ be given by the matrix

Compute the matrices $(K)$, $(S)$, and $(D)^t$

    k1  k2  k3  q • dj
d1  2   0   1   5
d2  1   0   0   1
d3  0   1   3   11
d4  2   0   0   2
d5  1   2   4   17
d6  1   2   0   5
d7  0   5   0   10
q   1   2   3
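A sketch of this exercise with NumPy, under two assumptions not stated on the slide: M is taken as the term-by-document matrix (the table transposed, so K holds term vectors and D^t document vectors), and the query is folded into a k = 2 concept space as q_hat = S_k^-1 K_k^t q, a common LSI choice, before documents are ranked by cosine similarity there.

```python
import numpy as np

# The table lists documents as rows; as an assumption, M is taken as the
# term-by-document matrix (rows k1..k3, columns d1..d7).
table = np.array([[2, 0, 1], [1, 0, 0], [0, 1, 3], [2, 0, 0],
                  [1, 2, 4], [1, 2, 0], [0, 5, 0]], dtype=float)
M = table.T
q = np.array([1, 2, 3], dtype=float)

# Thin SVD: M = K diag(S) D^t.
K, S, Dt = np.linalg.svd(M, full_matrices=False)
print("S =", np.round(S, 3))

k = 2                                   # number of concepts kept (assumed)
K_k, S_k, Dt_k = K[:, :k], S[:k], Dt[:k, :]


def fold_in(v):
    """Map a term vector into the k-dimensional concept space: S_k^-1 K_k^t v."""
    return (K_k.T @ v) / S_k


q_hat = fold_in(q)
docs_hat = Dt_k.T                       # row j: document d_{j+1} in concept space
cos = docs_hat @ q_hat / (np.linalg.norm(docs_hat, axis=1) * np.linalg.norm(q_hat))
ranking = [f"d{j + 1}" for j in np.argsort(-cos)]
print("ranking:", ranking)
```

Folding a document's own term vector into the concept space returns exactly its column of D_k^t, which is why the same projection is a reasonable choice for the query.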

IR models

Singular Value Decomposition (SVD)

Least squares methods

Given $(x_1,y_1), (x_2,y_2), \ldots, (x_n,y_n)$

Compute: $f(x) = m x + b$, with

$(m, b) = \arg\min_{m,b} SS(m,b) = \arg\min_{m,b} \sum_{i=1}^{n} (y_i - f(x_i))^2$
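For a straight line the minimization has a closed-form solution, sketched below in plain Python (the function name is hypothetical). For larger least-squares problems, solvers based on the SVD are a standard, numerically stable alternative.

```python
def least_squares(points):
    """Return (m, b) minimizing SS(m, b) = sum_i (y_i - (m * x_i + b))**2."""
    n = len(points)
    sx = sum(x for x, _ in points)
    sy = sum(y for _, y in points)
    sxx = sum(x * x for x, _ in points)
    sxy = sum(x * y for x, y in points)
    # Setting both partial derivatives of SS to zero gives the normal equations.
    m = (n * sxy - sx * sy) / (n * sxx - sx * sx)
    b = (sy - m * sx) / n
    return m, b


print(least_squares([(1, 1), (2, 2), (3, 2)]))
```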

Singular Value Decomposition (SVD)

Given document-by-term matrix A

Compute the latent semantic matrix $\hat{A}$ of lower rank k that minimizes $\|A - \hat{A}\|_2$

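Singular Value Decomposition

Figure: the M x N matrix A (rows: terms $t_1 \ldots t_M$; columns: documents $d_1 \ldots d_N$) equals U (columns $u_1 \ldots u_M$) times the diagonal matrix S (zeros off the diagonal) times $V^T$ (rows $v_1^T \ldots v_N^T$).

$A = U S V^T$

$U U^T = I$; $V V^T = I$

S: singular values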

Truncated-SVD

$A_k = U_k S_k V_k^T$

best rank-k approximation of A
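"Best approximation" can be made precise: by the Eckart-Young theorem, the truncated SVD minimizes the error among all matrices of rank at most k, and its spectral-norm error equals the (k+1)-th singular value. A NumPy sketch checking this on a random, hypothetical matrix:

```python
import numpy as np

rng = np.random.default_rng(0)
A = rng.random((6, 5))                  # hypothetical term-by-document matrix

U, s, Vt = np.linalg.svd(A, full_matrices=False)


def truncate(k):
    """A_k = U_k S_k V_k^T: keep only the k largest singular values."""
    return U[:, :k] @ np.diag(s[:k]) @ Vt[:k, :]


for k in range(1, len(s)):
    err = np.linalg.norm(A - truncate(k), 2)   # spectral norm of the residual
    print(f"k={k}: ||A - A_k||_2 = {err:.4f}, sigma_(k+1) = {s[k]:.4f}")
```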


Latent semantic indexing model

Pros and Cons

A clean formal framework

Computation cost (SVD)

Setting of k critical (empirically 300-1000)

Effective on small collections (CACM,), but

variable on large collections (TREC)


08.11.05 170

The human brain

Seat of consciousness and cognition

Perhaps the most complex information processing

machine in nature

Historically, considered as a monolithic information

processing machine


Beginners Brain Map

Forebrain (Cerebral Cortex):

Language, maths, sensation,

movement, cognition, emotion

Cerebellum: Motor

Control

Midbrain: Information Routing;

involuntary controls

Hindbrain: Control of

breathing, heartbeat, blood

circulation

Spinal cord: Reflexes,

information highways between

body & brain


Brain : a computational machine?

Information processing: brains vs computers

- brains better at perception / cognition

- slower at numerical calculations

Evolutionarily, brain has developed algorithms

most suitable for survival

Algorithms unknown: the search is on

Brain astonishing in the amount of information it

processes

Typical computers: $10^9$ operations/sec

Housefly brain: $10^{11}$ operations/sec


Brain facts & figures

Basic building block of nervous system: nerve

cell (neuron)

~ $10^{12}$ neurons in brain

~ $10^{15}$ connections between them

Connections made at synapses

The speed: events on millisecond scale in neurons, nanosecond scale in silicon chips


Neuron - classical

Dendrites

Receiving stations of neurons

Don't generate action potentials

Cell body

Site at which information received is

integrated

Axon

Generate and relay action potential

Terminal

Relays information to next neuron

in the pathway

http://www.educarer.com/images/brain-nerve-axon.jpg


Alternative Probabilistic Models

Probability Theory

Semantically clear

Computationally clumsy

Why Bayesian Networks?

Clear formalism to combine evidence

Modularize the world (dependencies)

Bayesian Network Models for IR

Inference Network (Turtle & Croft, 1991)

Belief Network (Ribeiro-Neto & Muntz, 1996)

Bayesian Inference

Schools of thought in probability

Frequentist (inference): one of a number of possible techniques of formulating generally applicable schemes for making statistical inference

Epistemological: epistemology is the branch of philosophy that studies the nature of knowledge, its presuppositions and foundations, and its extent and validity.

Bayesian Inference

Basic Axioms:

$0 \leq P(A) \leq 1$;

$P(\text{sure}) = 1$;

$P(A \vee B) = P(A) + P(B)$ if A and B are mutually

exclusive

Bayesian Inference

Other formulations

$P(A) = P(A \wedge B) + P(A \wedge \neg B)$

$P(A) = \sum_i P(A \wedge B_i)$, where $\{B_i\}_i$ is a set of exhaustive and mutually exclusive events

$P(A) + P(\neg A) = 1$

$P(A|K)$: belief in A given the knowledge K

if $P(A|B) = P(A)$, we say: A and B are independent

if $P(A|B \wedge C) = P(A|C)$, we say: A and B are conditionally independent, given C

$P(A \wedge B) = P(A|B)\,P(B)$

$P(A) = \sum_i P(A|B_i)\,P(B_i)$

Bayesian Inference

Bayes Rule : the heart of Bayesian techniques

P(H|e) = P(e|H)P(H) / P(e)

Where, H : a hypothesis and e is an evidence

P(H) : prior probability

P(H|e) : posterior probability

P(e|H) : probability of e if H is true

P(e): a normalizing constant; hence we

write:

$P(H|e) \propto P(e|H)\,P(H)$
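A minimal sketch of Bayes' rule in Python, computing P(e) as the normalizing constant, the sum over H of P(e|H) P(H) for exhaustive, mutually exclusive hypotheses (the total-probability formula above). The relevance numbers are hypothetical.

```python
def posterior(prior, likelihood):
    """Bayes' rule: P(H|e) = P(e|H) P(H) / P(e) for every hypothesis H.

    prior:      {H: P(H)} over exhaustive, mutually exclusive hypotheses
    likelihood: {H: P(e|H)}
    """
    unnormalized = {h: likelihood[h] * prior[h] for h in prior}  # proportional to P(H|e)
    p_e = sum(unnormalized.values())        # P(e) = sum_H P(e|H) P(H)
    return {h: value / p_e for h, value in unnormalized.items()}


# Hypothetical numbers: H = document relevant (R) or not (N),
# e = the document contains a given query term.
prior = {"R": 0.1, "N": 0.9}
likelihood = {"R": 0.8, "N": 0.2}
post = posterior(prior, likelihood)
print({h: round(v, 3) for h, v in post.items()})
```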

Bayesian Networks

$y_i$: parent nodes (in this case, root nodes)

x: child node

$y_i$ cause x

Y: the set of parents of x

The influence of Y on x

can be quantified by any function

$F(x,Y)$ such that $\sum_x F(x,Y) = 1$ and

$0 \leq F(x,Y) \leq 1$

For example, $F(x,Y) = P(x|Y)$

Figure: root nodes $y_1, y_2, \ldots, y_t$, each with an arc to the child node x.

Bayesian Networks

In a Bayesian network each

variable x is conditionally

independent of all its

non-descendants, given its

parents.

For example:

$P(x_4, x_5 \mid x_2, x_3) = P(x_4 \mid x_2, x_3)\,P(x_5 \mid x_3)$

Figure: a Bayesian network over the nodes $x_1, x_2, x_3, x_4, x_5$.
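The network figure did not survive extraction. Assuming the structure x1 → x2, x1 → x3, (x2, x3) → x4, x3 → x5 (consistent with the example formula) and made-up conditional probabilities, the sketch below builds the joint distribution from the product of P(xi | parents) and checks the example identity by brute-force marginalization.

```python
from itertools import product

# Hypothetical CPTs: P(node = 1 | parents) for the assumed structure
# x1 -> x2, x1 -> x3, (x2, x3) -> x4, x3 -> x5.
P_X1 = 0.6
P_X2 = {0: 0.3, 1: 0.7}                                        # parent x1
P_X3 = {0: 0.2, 1: 0.5}                                        # parent x1
P_X4 = {(0, 0): 0.1, (0, 1): 0.4, (1, 0): 0.6, (1, 1): 0.9}    # parents x2, x3
P_X5 = {0: 0.25, 1: 0.8}                                       # parent x3
NAMES = ("x1", "x2", "x3", "x4", "x5")


def bern(p_one, value):
    """Probability of a binary value when P(value = 1) = p_one."""
    return p_one if value == 1 else 1 - p_one


def joint(x1, x2, x3, x4, x5):
    """Product of P(x_i | parents(x_i)) over all nodes."""
    return (bern(P_X1, x1) * bern(P_X2[x1], x2) * bern(P_X3[x1], x3)
            * bern(P_X4[(x2, x3)], x4) * bern(P_X5[x3], x5))


def prob(**fixed):
    """Marginal probability of a partial assignment, e.g. prob(x2=1, x3=0)."""
    total = 0.0
    for values in product((0, 1), repeat=5):
        assignment = dict(zip(NAMES, values))
        if all(assignment[name] == v for name, v in fixed.items()):
            total += joint(*values)
    return total


def identity_holds(x2, x3, x4, x5):
    """Check P(x4, x5 | x2, x3) == P(x4 | x2, x3) * P(x5 | x3)."""
    lhs = prob(x2=x2, x3=x3, x4=x4, x5=x5) / prob(x2=x2, x3=x3)
    rhs = (prob(x2=x2, x3=x3, x4=x4) / prob(x2=x2, x3=x3)) * (
        prob(x3=x3, x5=x5) / prob(x3=x3))
    return abs(lhs - rhs) < 1e-12


print(all(identity_holds(*v) for v in product((0, 1), repeat=4)))
```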


Bayesian Belief Networks

Bayesian belief networks (also known as Bayesian

networks, probabilistic networks): allow class

conditional independencies between subsets of variables

A (directed acyclic) graphical model of causal relationships

Represents dependency among the variables

Gives a specification of joint probability distribution

Figure: nodes X, Y, Z, P with arcs X → Z, Y → Z, and Y → P.

Nodes: random variables

Links: dependency

X and Y are the parents of Z, and Y is

the parent of P

No dependency between Z and P

Has no loops/cycles


Bayesian Belief Network: An Example

Figure: nodes Family History (FH), Smoker (S), LungCancer (LC), Emphysema, PositiveXRay, and Dyspnea; FH and S are the parents of LC.

Bayesian Belief Network

CPT: Conditional Probability Table for variable LungCancer:

       (FH, S)  (FH, ~S)  (~FH, S)  (~FH, ~S)
LC     0.8      0.5       0.7       0.1
~LC    0.2      0.5       0.3       0.9

The CPT shows the conditional probability for each possible combination of its parents.

Derivation of the probability of a particular combination of values of X, from the CPT:

$P(x_1, \ldots, x_n) = \prod_{i=1}^{n} P(x_i \mid Parents(Y_i))$
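Using the CPT for LungCancer, a joint probability follows from the product formula; the remaining nodes (Emphysema, PositiveXRay, Dyspnea) sum out of this marginal. The priors for FamilyHistory and Smoker are not given on the slide, so 0.5 is assumed for both in this sketch.

```python
from itertools import product

# CPT from the slide: P(LC = yes | FH, S).
P_LC = {(True, True): 0.8, (True, False): 0.5,
        (False, True): 0.7, (False, False): 0.1}
# Hypothetical priors for the two root nodes (not given on the slide).
P_FH = 0.5
P_S = 0.5


def p(value, p_true):
    """Probability that a binary variable takes `value` when P(True) = p_true."""
    return p_true if value else 1 - p_true


def joint(fh, s, lc):
    """P(FH, S, LC) = P(FH) * P(S) * P(LC | FH, S), the product over nodes."""
    return p(fh, P_FH) * p(s, P_S) * p(lc, P_LC[(fh, s)])


# Marginal P(LC = yes), summing the joint over both parents.
p_lc = sum(joint(fh, s, True) for fh, s in product((True, False), repeat=2))
print(f"P(FH, S, LC) = {joint(True, True, True):.3f}")
print(f"P(LC) = {p_lc:.3f}")
```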

Belief Network Model

Assumption

$P(d_j|q)$ is adopted as the rank of the document $d_j$ with respect to the query q. It reflects the degree of coverage provided to the concept $d_j$ by the concept q.

Belief Network Model

The Probability Space

Define:

$K = \{k_1, k_2, \ldots, k_t\}$: the sample space (a concept space)

$u \subset K$: a subset of K (a concept)

$k_i$: an index term (an elementary concept)

$\vec{k} = (k_1, k_2, \ldots, k_t)$: a vector associated to each u such that $g_i(\vec{k}) = 1 \iff k_i \in u$

$k_i$: a binary random variable associated with the index term $k_i$ ($k_i = 1 \iff g_i(\vec{k}) = 1 \iff k_i \in u$)

Belief Network Model

A Set-Theoretic View

Define:

a document $d_j$ and query q as concepts in K

a generic concept c in K

a probability distribution P over K, as

$P(c) = \sum_u P(c|u)\,P(u)$

$P(u) = (1/2)^t$

P(c) is the degree of coverage of the space K by c

Belief Network Model

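The rank of $d_j$

$P(d_j|q) = P(d_j \wedge q) / P(q)$

$\propto P(d_j \wedge q)$

$\propto \sum_u P(d_j \wedge q \mid u)\,P(u)$

$\propto \sum_u P(d_j \mid u)\,P(q \mid u)\,P(u)$

$\propto \sum_{\vec{k}} P(d_j \mid \vec{k})\,P(q \mid \vec{k})\,P(\vec{k})$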
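A brute-force sketch of the last line of the derivation, enumerating all 2^t states k with P(k) = (1/2)^t. The choices of P(dj|k) and P(q|k) used here (the fraction of the concept's terms that are active in state k) are hypothetical, picked only to have something concrete to sum; the model lets these be defined differently, for example so as to reproduce the vector model ranking.

```python
from itertools import product

T = 3                                    # index terms k1, k2, k3 (indices 0..2)
QUERY = {0, 1}                           # hypothetical query: k1, k2
DOCS = {"d1": {0, 1}, "d2": {2}, "d3": {0, 2}}   # hypothetical documents


def coverage(concept, k):
    """Hypothetical P(c | k): fraction of the concept's terms active in state k."""
    return sum(k[i] for i in concept) / len(concept)


def rank(doc_terms):
    """Sum over k of P(d_j|k) P(q|k) P(k), proportional to P(d_j|q)."""
    p_k = 0.5 ** T                       # P(k) = (1/2)^t, uniform over states
    return sum(coverage(doc_terms, k) * coverage(QUERY, k) * p_k
               for k in product((0, 1), repeat=T))


scores = {d: rank(terms) for d, terms in DOCS.items()}
print(sorted(scores.items(), key=lambda item: item[1], reverse=True))
```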


Bayesian Network Models

Comparison

Inference Network Model is the first and best known

Belief Network adopts a set-theoretic view

Belief Network adopts a clearly defined sample space

Belief Network provides a separation between query

and document portions

Belief Network is able to reproduce any ranking

produced by the Inference Network while the

converse is not true (for example: the ranking of the

standard vector model)


Bayesian Network Models

Impact

The major strength is the neat combination of distinct

evidential sources to support the rank of a given

document.


Models for browsing

Flat browsing: String

o Just like a list of papers

o No context cues provided

Structure guided: Tree

o Hierarchy

o Like directory tree in the computer

Hypertext (Internet!): Directed graph

o No limitations of sequential writing

o Modeled by a directed graph: links from unit A to unit B

units: docs, chapters, etc.

o A map (with traversed path) can be helpful

Flat browsing

The documents might be represented

as dots in a plane or as elements in a list.

Relevance feedback

Disadvantage : In a given page or

screen there may not be any indication

about the context where the user is.


Structure guided browsing

Organized in a directory structure. It

groups documents covering related

topics.

The same idea can be applied to a

single document.

Using history map.


Trends and Research Issues

Three types of products and systems which can benefit directly from

research in models for information retrieval

(i) Library Systems

(ii) Specialized Systems

(iii) Web

Library systems: there is interest in cognitive and behavioural issues, aimed particularly at a better understanding of which criteria users adopt to judge relevance.

From the computer scientist's point of view: how knowledge about the user affects the ranking strategies and the user interface implemented in the system.

Investigation of how the Boolean model (largely adopted by most library systems) affects the users of a library.
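To make concrete what library users face under the Boolean model, the following minimal sketch evaluates Boolean queries over a toy inverted index. It assumes whitespace tokenization and hypothetical helper names (`build_index`, `boolean_query`). The answer is an unranked set, and a slightly over-restrictive AND returns nothing at all.

```python
from collections import defaultdict


def build_index(docs: dict[str, str]) -> dict[str, set[str]]:
    """Inverted index: each lowercase, whitespace-separated term -> ids of docs containing it."""
    index: dict[str, set[str]] = defaultdict(set)
    for doc_id, text in docs.items():
        for term in text.lower().split():
            index[term].add(doc_id)
    return dict(index)


def boolean_query(index, all_ids, must=(), any_of=(), must_not=()):
    """Evaluate (t1 AND t2 ...) AND (u1 OR u2 ...) AND NOT (v1 OR v2 ...).

    Returns an unranked set: each document either satisfies the expression or not.
    """
    result = set(all_ids)
    for term in must:
        result &= index.get(term, set())
    if any_of:
        result &= set().union(*(index.get(term, set()) for term in any_of))
    for term in must_not:
        result -= index.get(term, set())
    return result


# Hypothetical toy collection, for illustration only.
docs = {
    "d1": "boolean retrieval in library catalogues",
    "d2": "ranking documents with the vector model",
    "d3": "library users judge relevance",
}
index = build_index(docs)
print(sorted(boolean_query(index, docs, must=["library"])))             # ['d1', 'd3']
print(sorted(boolean_query(index, docs, must=["library", "ranking"])))  # []
```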

Trends and Research Issues (contd)

Specialized systems, e.g., the LEXIS-NEXIS retrieval system, which provides access to a very large collection of legal and business documents.

The key problem is how to retrieve (almost) all documents that might be relevant to the user's information need without also retrieving a large number of unrelated documents.
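The trade-off in this key problem is commonly quantified with recall (the fraction of relevant documents that are retrieved) and precision (the fraction of retrieved documents that are relevant). The slide does not name these measures; the sketch below simply computes them for one query, with invented document ids.

```python
def precision_recall(retrieved: set[str], relevant: set[str]) -> tuple[float, float]:
    """Return (precision, recall) for one query.

    precision = |retrieved & relevant| / |retrieved|
    recall    = |retrieved & relevant| / |relevant|
    """
    hits = len(retrieved & relevant)
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


# Invented ids: 4 relevant documents in the collection.
relevant = {"d1", "d2", "d3", "d4"}
broad = {f"d{i}" for i in range(1, 11)}  # 10 retrieved, all 4 relevant among them
narrow = {"d1"}                          # 1 retrieved, and it is relevant
print(precision_recall(broad, relevant))   # (0.4, 1.0)
print(precision_recall(narrow, relevant))  # (1.0, 0.25)
```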

Sophisticated ranking algorithms that combine several sources of evidence are highly desirable.
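The slide does not say how the evidential sources are combined. One simple assumption is a weighted linear combination of per-source scores, sketched below. The source names (`text_match`, `citations`), weights, and scores are hypothetical and are not taken from LEXIS-NEXIS.

```python
def combine_evidence(evidence: dict[str, dict[str, float]],
                     weights: dict[str, float]) -> list[tuple[str, float]]:
    """Rank documents by a weighted sum of scores from several evidence sources.

    evidence[source][doc_id] is one source's score for a document (assumed in [0, 1]);
    a document a source says nothing about gets 0 from that source.
    """
    docs: set[str] = set()
    for scores in evidence.values():
        docs.update(scores)
    combined = {
        doc: sum(w * evidence.get(src, {}).get(doc, 0.0) for src, w in weights.items())
        for doc in docs
    }
    # Highest combined score first; ties broken by document id for a stable order.
    return sorted(combined.items(), key=lambda item: (-item[1], item[0]))


# Hypothetical evidence sources, weights and scores, for illustration only.
evidence = {
    "text_match": {"case_a": 0.9, "case_b": 0.4, "case_c": 0.7},
    "citations": {"case_b": 1.0, "case_c": 0.2},
}
weights = {"text_match": 0.7, "citations": 0.3}
for doc, score in combine_evidence(evidence, weights):
    print(doc, round(score, 2))
# case_a 0.63
# case_b 0.58
# case_c 0.55
```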

Trends and Research Issues

Web: the user often does not know exactly what he or she wants, or has great difficulty properly formulating the request.

This calls for research on advanced user interfaces.

From the point of view of the ranking engine, an interesting problem is to study how the paradigm adopted for the user interface affects the ranking.

The indexes maintained by the various Web search engines are almost disjoint (their intersection corresponds to less than 2% of the total number of pages indexed).
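The overlap figure can be computed from the sets of pages each engine indexes. This sketch assumes that "total number of pages indexed" means the union of all the indexes; the function name `overlap_fraction` and the toy page sets are hypothetical and do not reproduce the 2% figure.

```python
def overlap_fraction(*indexes: set[str]) -> float:
    """Share of all indexed pages (assumed: the union of the indexes) found in every index."""
    union = set().union(*indexes)
    if not union:
        return 0.0
    common = set.intersection(*indexes)
    return len(common) / len(union)


# Hypothetical page sets for three engines, for illustration only.
engine_a = {"p1", "p2", "p3", "p4"}
engine_b = {"p4", "p5", "p6"}
engine_c = {"p4", "p7"}
print(f"{overlap_fraction(engine_a, engine_b, engine_c):.1%}")  # 14.3%
```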

Research on meta-search engines (engines that work by fusing the rankings generated by other search engines) seems highly promising.
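One concrete way to fuse rankings, not prescribed by the slides, is reciprocal rank fusion (Cormack, Clarke and Büttcher, 2009): each document earns 1/(k + rank) from every list it appears in. The sketch assumes the commonly used constant k = 60 and hypothetical engine result lists.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse several ranked lists into one.

    Each document scores sum(1 / (k + rank)) over the lists it appears in,
    with rank starting at 1; a list that omits a document adds nothing for it.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc in enumerate(ranking, start=1):
            scores[doc] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by document id.
    return sorted(scores, key=lambda doc: (-scores[doc], doc))


# Hypothetical result lists from three engines, for illustration only.
results_a = ["u1", "u2", "u3"]
results_b = ["u2", "u4", "u1"]
results_c = ["u2", "u3"]
print(reciprocal_rank_fusion([results_a, results_b, results_c]))
# ['u2', 'u1', 'u3', 'u4']
```

A rank-based method like this avoids comparing raw scores, which different engines compute on incompatible scales.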

Anda mungkin juga menyukai

- IR - ModelsDokumen58 halamanIR - ModelsMourad100% (3)

- Completed Unit II 17.7.17Dokumen113 halamanCompleted Unit II 17.7.17Dr.A.R.KavithaBelum ada peringkat

- Pattern Recognition Lecture "Template Matching": Prof. Dr. Marcin GrzegorzekDokumen28 halamanPattern Recognition Lecture "Template Matching": Prof. Dr. Marcin GrzegorzekWîsñü ÅrîBelum ada peringkat

- Cs8080 Ir Unit2 I Modeling and Retrieval EvaluationDokumen42 halamanCs8080 Ir Unit2 I Modeling and Retrieval EvaluationGnanasekaranBelum ada peringkat

- 1.BIT 02105 DATA STRUCTURES AND ALGORITHMS Course OutlineDokumen3 halaman1.BIT 02105 DATA STRUCTURES AND ALGORITHMS Course Outlinestaticbreeze100Belum ada peringkat

- Mathematics with Applications for the Management, Life, and Social SciencesDari EverandMathematics with Applications for the Management, Life, and Social SciencesPenilaian: 5 dari 5 bintang5/5 (2)

- Introduction of IR ModelsDokumen67 halamanIntroduction of IR ModelsMagarsa BedasaBelum ada peringkat

- CSD3009 - DATA-STRUCTURES-AND-ANALYSIS-OF-ALGORITHMS - LTP - 1.0 - 29 - CSD3009-DATA-STRUCTURES-AND-ANALYSIS-OF-ALGORITHMS - LTP - 1.0 - 1 - Data Structures and Analysis of AlgorithmsDokumen3 halamanCSD3009 - DATA-STRUCTURES-AND-ANALYSIS-OF-ALGORITHMS - LTP - 1.0 - 29 - CSD3009-DATA-STRUCTURES-AND-ANALYSIS-OF-ALGORITHMS - LTP - 1.0 - 1 - Data Structures and Analysis of AlgorithmsTony StarkBelum ada peringkat

- Introduction of IR ModelsDokumen62 halamanIntroduction of IR ModelsMagarsa BedasaBelum ada peringkat

- IR FinalDokumen57 halamanIR Finalyashchheda2002Belum ada peringkat

- Exemplar-Based Knowledge Acquisition: A Unified Approach to Concept Representation, Classification, and LearningDari EverandExemplar-Based Knowledge Acquisition: A Unified Approach to Concept Representation, Classification, and LearningBelum ada peringkat

- KRR Lecture 1 2 Intro LogicDokumen86 halamanKRR Lecture 1 2 Intro LogicLiviu NitaBelum ada peringkat

- Foundations: Information Modeling and Information ArchitectureDokumen84 halamanFoundations: Information Modeling and Information ArchitectureMichaelBelum ada peringkat

- Downloaded From: B.E. Computer Science and Engineering 3 & 4 Semesters Curriculum and Syllabi Semester IiiDokumen34 halamanDownloaded From: B.E. Computer Science and Engineering 3 & 4 Semesters Curriculum and Syllabi Semester Iiiprakash_vitBelum ada peringkat

- IR Models: - Why IR Models? - Boolean IR Model - Vector Space IR Model - Probabilistic IR ModelDokumen46 halamanIR Models: - Why IR Models? - Boolean IR Model - Vector Space IR Model - Probabilistic IR Modelkerya ibrahimBelum ada peringkat

- CIS664-Knowledge Discovery and Data MiningDokumen74 halamanCIS664-Knowledge Discovery and Data MiningrbvgreBelum ada peringkat

- SyllabusDokumen3 halamanSyllabusAyush ThakurBelum ada peringkat

- IR Chap4Dokumen32 halamanIR Chap4biniam teshomeBelum ada peringkat

- IR Chap4Dokumen32 halamanIR Chap4biniam teshomeBelum ada peringkat

- Data Structure ModuleDokumen10 halamanData Structure ModuleZahra TahirBelum ada peringkat

- ISR Question For Oral ExamDokumen23 halamanISR Question For Oral Examshruti.narkhede.it.2019Belum ada peringkat

- Alogrithms and Data Structure SyllabusDokumen7 halamanAlogrithms and Data Structure SyllabusJoshua CheungBelum ada peringkat

- IRS 2nd ChapDokumen42 halamanIRS 2nd ChapggfBelum ada peringkat

- Ita3002 Data-Structures Eth 1.0 37 Ita3002Dokumen2 halamanIta3002 Data-Structures Eth 1.0 37 Ita3002tamilzhini tamilzhiniBelum ada peringkat

- IR Systems Usually Adopt Index Terms To Process Queries Index TermDokumen24 halamanIR Systems Usually Adopt Index Terms To Process Queries Index Termsmilerash658440Belum ada peringkat

- Bcse202l Data-Structures-And-Algorithms TH 1.0 70 Bcse202lDokumen2 halamanBcse202l Data-Structures-And-Algorithms TH 1.0 70 Bcse202lKarthik VBelum ada peringkat

- New Research PDFDokumen2 halamanNew Research PDFRobert DoanBelum ada peringkat

- Electronics and Computer Engineering Batch 2011 Onwards - Finalised - 04!04!13Dokumen23 halamanElectronics and Computer Engineering Batch 2011 Onwards - Finalised - 04!04!13DrVikas Singh BhadoriaBelum ada peringkat

- Cse2002 Data-structures-And-Algorithms LTP 2.0 2 Cse2002 Data-structures-And-Algorithms LTP 2.0 1 Cse2002-Data Structures and AlgorithmsDokumen3 halamanCse2002 Data-structures-And-Algorithms LTP 2.0 2 Cse2002 Data-structures-And-Algorithms LTP 2.0 1 Cse2002-Data Structures and Algorithmspankaj yadavBelum ada peringkat

- TY DSA SyallbusDokumen5 halamanTY DSA SyallbusjayBelum ada peringkat

- IT304 - Data Structures and AlgorithmsDokumen4 halamanIT304 - Data Structures and AlgorithmsOp PlayerBelum ada peringkat

- IR Unit 2Dokumen54 halamanIR Unit 2jaganbecsBelum ada peringkat

- IR Basics Lec28 Oct 3 2011Dokumen26 halamanIR Basics Lec28 Oct 3 2011Mathesh ParamasivamBelum ada peringkat

- 1152CS239-Intro. To Data Science-SyllabusDokumen6 halaman1152CS239-Intro. To Data Science-SyllabusShashitha ReddyBelum ada peringkat

- Introduction To Data Mining For Bioinformatics: Fall 2005 Peter Van Der Putten (Putten - at - Liacs - NL)Dokumen50 halamanIntroduction To Data Mining For Bioinformatics: Fall 2005 Peter Van Der Putten (Putten - at - Liacs - NL)Raghavendra Prasad ReddyBelum ada peringkat

- IRS Unit 1 and Unit 2Dokumen28 halamanIRS Unit 1 and Unit 2Mohammed DanishBelum ada peringkat

- Heq Mar18 Dip Oop ReportDokumen14 halamanHeq Mar18 Dip Oop ReportMayura DilBelum ada peringkat

- A Literature Review of Image Retrieval Based On Semantic ConceptDokumen7 halamanA Literature Review of Image Retrieval Based On Semantic Conceptc5p7mv6jBelum ada peringkat

- ALL PH.D - CourseSyllabusDokumen27 halamanALL PH.D - CourseSyllabusVIPIN KUMAR MAURYABelum ada peringkat

- Review: Information Retrieval Techniques and ApplicationsDokumen10 halamanReview: Information Retrieval Techniques and Applicationsnul loBelum ada peringkat

- R For Data Science Import Tidy Transform Visualize and Model DataDokumen4 halamanR For Data Science Import Tidy Transform Visualize and Model Dataaarzu qadriBelum ada peringkat

- Chapter 2 Modeling: Modern Information Retrieval by R. Baeza-Yates and B. RibeirDokumen47 halamanChapter 2 Modeling: Modern Information Retrieval by R. Baeza-Yates and B. RibeirIrvan MaizharBelum ada peringkat

- Datascienceusing Python TrainingDokumen11 halamanDatascienceusing Python Training015Hardik ChughBelum ada peringkat

- C++ Adv - STLDokumen5 halamanC++ Adv - STLandrewpaul20059701Belum ada peringkat

- DSA SyllabusDokumen7 halamanDSA SyllabusAdarsh RBelum ada peringkat

- Information Retrieval HandoutDokumen5 halamanInformation Retrieval HandoutsdfasdBelum ada peringkat

- Digital SyllabusDokumen2 halamanDigital SyllabusPrakruthi K PBelum ada peringkat

- Practical Python AI Projects: Mathematical Models of Optimization Problems with Google OR-ToolsDari EverandPractical Python AI Projects: Mathematical Models of Optimization Problems with Google OR-ToolsBelum ada peringkat

- Comparative Investigation of K-Means and K-Medoid Algorithm On Iris DataDokumen4 halamanComparative Investigation of K-Means and K-Medoid Algorithm On Iris DataIJERDBelum ada peringkat

- Query Languages: Chapter SevenDokumen36 halamanQuery Languages: Chapter SevenSooraaBelum ada peringkat

- Web Search Algorithms and PageRankDokumen101 halamanWeb Search Algorithms and PageRankCeornea Paula100% (1)

- Lec 1Dokumen13 halamanLec 1Pheonix OrionBelum ada peringkat

- Syllabus of VTU 2016Dokumen19 halamanSyllabus of VTU 2016Rajdeep ChatterjeeBelum ada peringkat

- Chapter 4 IR ModelsDokumen43 halamanChapter 4 IR ModelsTolosa TafeseBelum ada peringkat

- Information Retrieval 6 IR ModelsDokumen14 halamanInformation Retrieval 6 IR ModelsVaibhav KhannaBelum ada peringkat

- Syllabus - PGD - DS - Batch-7 PDFDokumen12 halamanSyllabus - PGD - DS - Batch-7 PDFVinayak RaoBelum ada peringkat

- MAT3002 - APPLIED-LINEAR-ALGEBRA - LT - 1.0 - 1 - Applied Linear AlgebraDokumen2 halamanMAT3002 - APPLIED-LINEAR-ALGEBRA - LT - 1.0 - 1 - Applied Linear Algebraleyag41538Belum ada peringkat

- Introduction Information RetrievalDokumen73 halamanIntroduction Information RetrievalVijaya NatarajanBelum ada peringkat

- Knowledge Engineering Oxford 17 02 10 NatarajanDokumen66 halamanKnowledge Engineering Oxford 17 02 10 NatarajanVijaya NatarajanBelum ada peringkat

- DM and Visualization SJBIT 19 07 10Dokumen99 halamanDM and Visualization SJBIT 19 07 10Vijaya NatarajanBelum ada peringkat

- Ai Intro PesitDokumen64 halamanAi Intro PesitVijaya NatarajanBelum ada peringkat

- Fuzzy Classification VtuDokumen119 halamanFuzzy Classification VtuVijaya NatarajanBelum ada peringkat

- Paper For Worldcomp-FinalpaperDokumen7 halamanPaper For Worldcomp-FinalpaperVijaya NatarajanBelum ada peringkat

- YS3060 Spectrophotometer Operating Manual - 20170515 PDFDokumen47 halamanYS3060 Spectrophotometer Operating Manual - 20170515 PDFNelson BarriosBelum ada peringkat

- Cloud ComputingDokumen25 halamanCloud ComputinganjanaBelum ada peringkat

- SJ-20130219085558-002-ZXSDR R8861 (HV1.0) Product Description - 587031Dokumen28 halamanSJ-20130219085558-002-ZXSDR R8861 (HV1.0) Product Description - 587031Rehan Haider JafferyBelum ada peringkat

- MX Data SheetsDokumen14 halamanMX Data Sheetssatriam riawanBelum ada peringkat

- R-5000Series 230231325A RelNotes PDFDokumen17 halamanR-5000Series 230231325A RelNotes PDFBayu Yudi PrasajaBelum ada peringkat

- Architechture of 8085: Q. Explane Micro Processor 8085 With Its Block Diagram?Dokumen13 halamanArchitechture of 8085: Q. Explane Micro Processor 8085 With Its Block Diagram?Shubham SahuBelum ada peringkat

- 1Dokumen29 halaman1Ronniel de RamosBelum ada peringkat

- SM-G970F Tshoo 7 PDFDokumen64 halamanSM-G970F Tshoo 7 PDFGadis Sisca MeilaniBelum ada peringkat

- GigE JAI Camera SetupDokumen7 halamanGigE JAI Camera SetupAdi SuryaBelum ada peringkat

- Hydraulic Fluid Contamination TestingDokumen5 halamanHydraulic Fluid Contamination TestingPrabhakar RamachandranBelum ada peringkat

- Virtual and Augmented Reality in Marketing 2018Dokumen56 halamanVirtual and Augmented Reality in Marketing 2018Ashraf KaraymehBelum ada peringkat

- 8LT Product CatalogDokumen35 halaman8LT Product CatalogmuhammetnaberBelum ada peringkat

- 121 Lec DelimitedDokumen96 halaman121 Lec DelimitedJonathanA.RamirezBelum ada peringkat

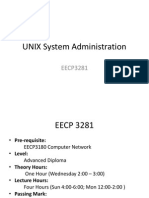

- EECP3281 Course IntroductionDokumen20 halamanEECP3281 Course IntroductionmichaeldalisayBelum ada peringkat

- Cloud StorageDokumen5 halamanCloud StoragejunigrBelum ada peringkat

- Republiqf of GaberestDokumen2 halamanRepubliqf of GaberestMadalina NituBelum ada peringkat

- Satellite l670 l675 Pro l670Dokumen255 halamanSatellite l670 l675 Pro l670sadfsdfBelum ada peringkat

- Performance Comparison of Hive, Impala and Spark SQLDokumen6 halamanPerformance Comparison of Hive, Impala and Spark SQLnailaBelum ada peringkat

- 6.3.3.8 Packet Tracer - Inter-VLAN Routing Challenge InstructionsDokumen2 halaman6.3.3.8 Packet Tracer - Inter-VLAN Routing Challenge InstructionsSesmaBelum ada peringkat

- PQ ProductcatalogusDokumen72 halamanPQ ProductcatalogusJohn FreackBelum ada peringkat

- ABAP - Cross Applications - Part 1 (2 of 2) (Emax Technologies) 131 PagesDokumen131 halamanABAP - Cross Applications - Part 1 (2 of 2) (Emax Technologies) 131 PagesRavi Jalani100% (1)

- THK Ball Screw En16Dokumen382 halamanTHK Ball Screw En16Bonell Antonio Martinez Vegas100% (1)

- Ni Pci-6024e PDFDokumen14 halamanNi Pci-6024e PDFBoureanu CodrinBelum ada peringkat

- Latitude 3190: Owner's ManualDokumen71 halamanLatitude 3190: Owner's ManualAriel AlasBelum ada peringkat

- 8150 Service Manual Rev BDokumen470 halaman8150 Service Manual Rev BfortronikBelum ada peringkat

- Descargar Gratis Libro Side by Side Third EditionDokumen21 halamanDescargar Gratis Libro Side by Side Third EditionMelissa Santos Canales17% (6)

- Bogen Technical Data Sheet IKS9 Rev 2 1Dokumen7 halamanBogen Technical Data Sheet IKS9 Rev 2 1ElectromateBelum ada peringkat

- Embedded System Project ReportDokumen55 halamanEmbedded System Project ReportManoj Saini0% (1)

- Aixcmds5 PDFDokumen838 halamanAixcmds5 PDFAvl SubbaraoBelum ada peringkat

- Digital Logic Design Memory and Programmable Logic DevicesDokumen25 halamanDigital Logic Design Memory and Programmable Logic Devicesnskprasad89Belum ada peringkat