HDX - Presentation - 12.01.2005

Uploaded by Hassam Ahmad

HDX deployment Plan

January 12th, 2005

NPE - TX

Existing Backbone Network After Resiliency Phase -I

25

18

25

28

29

24

31

9

7

31

24

29

25

23

31

23

24

29

21

31

25

5

6

7

1

12

20

2

4

10

11

17

14

6

7

6

6

9

11

6

9

not available

not available

not available

not available

not available

not available

not available

not available

not available

not available

not available

not available

not available

0 16 32 48 64

R1-1 AHDB-JIPR

R1-1 DLHI-JIPR

R1-1 BHPL-DLHI

R1-1 AHDB-BHPL

R1-1 AHDB-INDR

R1-1 BHPL-INDR

R1-2 MUMB-SURT

R1-2 AHDB-SURT

R1-2 AHDB-INDR

R1-2 BHPL-INDR

R1-2 BHPL-NGPR

R1-2 MUMB-NGPR

R1-2 KLYN-NGPR

R1-2 KLYN-MUMB

R1-3 HYDR-NGPR

R1-3 HYDR-SNGR

R1-3 PUNE-SNGR

R1-3 MUMB-PUNE

R1-3 MUMB-NGPR

R1-3 DHUL-KLYN

R1-3 KLYN-MUMB

R1-3 DHUL-PUNE

R2 BANG-HYDR

R2 BANG-KSGR

R2 KSGR-CHNN

R2 CHNN-VWDA

R2 HYDR-VWDA

L1 Used L1 Free L2 Used L2 Free

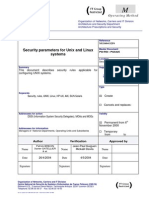

EXPRESS BANDWIDTH UTILISATION FOR RING 1-1, 1-2, 1-3, 2

[Part-1]

EXPRESS BANDWIDTH UTILISATION FOR RING 3-1, 3-2, 3-3, 4, 6, 7 & 8 [Part-2]

[Bar chart: express bandwidth utilisation per span on a 0 to 64 scale, with series L1 Used, L1 Free, L2 Used and L2 Free.]

Driving Forces for Backbone Capacity Enhancement

RDN Bandwidth requirements

Need to carry FLAG - ILD traffic between the Mumbai and Chennai cable landing stations on three diverse paths, with terrestrial availability matching that of the submarine network

ILD bandwidth dispersion across NLD network

CDMA Phase-I expansion & CDMA Phase II

Wire line & Leased Bandwidth

Need to improve network availability to ~99.99% using state-of-the-art mesh restoration functionality

Limitations of existing Nortel DX

Supports only 2F-BLSR rings

140 Gbps switch fabric: (40 + 40) Gbps per (4 X 2F) ring in aggregate & 60 Gbps on tribs

Reserves 50% of bandwidth for BLSR protection

In the current architecture, an additional lambda in any ring except Ring 4 & Ring 6 requires an additional DX on the central line (Delhi, Bhopal, Nagpur, Hyderabad, Bangalore)

An additional DX is required at all three-way locations (Mumbai, Allahabad, Ahmedabad, Pune, Vijayawada, etc.) after every second lambda

Interconnection between collocated DXs through hard patches truncates the capacity of the DX and requires additional trib cards

Features of Nortel HDX vs DX

| Features | Nortel HDX | Nortel Optera DX |
| --- | --- | --- |
| Cross-connect fabric size | 640 Gbps, scalable up to 3.84 Tbps (multiple chassis) | 140 Gbps |
| BLSR rings | About 20 X 2F BLSR | 4 X 2F BLSR |
| Network architecture / topology | Linear, ring and mesh architecture; mesh restoration is available | Linear & ring |
| Protection / restoration capabilities | Mesh restoration improves the availability across multiple routes | Does not support more than one fiber cut in a ring |
| Capacity truncation on account of hard patches | No capacity truncated | Capacity is truncated |

Benefits of HDX

One Nortel HDX is equivalent to approximately five DXs

Higher switch fabric size supporting multiple rings

Besides BLSR, supports Shared Protection and intelligent mesh restoration functionality, which makes the network more resilient

Can provide better availability than the ring architecture

Better bandwidth utilization (can be loaded up to 70% of ring capacity, as against 50% in BLSR)

Significant reduction in electronics for wavelength augmentation, and quick deployment (only 10G ports at the HDX and XR cards at the in-between REGENs need to be added)
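The capacity figures quoted on these slides can be checked with a few lines of arithmetic. The sketch below is illustrative only: the constants come from the slides (140 Gbps DX fabric, 640 Gbps single-shelf HDX fabric, 50% BLSR loading, 70% mesh loading), while the function names and the choice of a 10G wavelength as the example are assumptions made here.

```python
# Illustrative arithmetic only; the constants are the figures quoted on the slides.
DX_FABRIC_GBPS = 140    # Nortel Optera DX switch fabric
HDX_FABRIC_GBPS = 640   # single-shelf HDX switch fabric

BLSR_MAX_LOAD = 0.50    # BLSR reserves 50% of the bandwidth for protection
MESH_MAX_LOAD = 0.70    # loading quoted for the HDX mesh


def usable_gbps(line_rate_gbps: float, max_load: float) -> float:
    """Working traffic that can be loaded on one wavelength (hypothetical helper)."""
    return line_rate_gbps * max_load


def fabric_ratio() -> float:
    """How many DX fabrics fit into one single-shelf HDX fabric."""
    return HDX_FABRIC_GBPS / DX_FABRIC_GBPS


if __name__ == "__main__":
    print("10G wavelength, BLSR:", usable_gbps(10, BLSR_MAX_LOAD), "Gbps of working traffic")
    print("10G wavelength, mesh:", usable_gbps(10, MESH_MAX_LOAD), "Gbps of working traffic")
    print("HDX / DX fabric ratio:", round(fabric_ratio(), 2))
```

The fabric ratio of roughly 4.6 is consistent with the "approximately five DXs" statement.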

Backbone Network Growth Plan

Grow the HDX way instead of adding more DXs

Identified 14 strategic locations for HDX deployment

Deploy HDX

HDX to HDX mesh wavelength addition for backbone capacity augmentation

Transfer of existing BLSR rings to HDX

Redeploy 16 nos. of freed DXs at identified sites for resiliency project / other switch locations / collector splitting

Strategic Locations for HDX (having at least three diverse routes)

| S. No. | DX Locations | HDX |
| --- | --- | --- |
| 1 | Mumbai Landing Station | HDX |
| 2 | Mumbai MCN | HDX |
| 3 | Chennai Landing Station | HDX |
| 4 | Chennai MCN | HDX |
| 5 | Hyderabad | HDX |
| 6 | Bangalore | HDX |
| 7 | Delhi | HDX |
| 8 | Bhopal | HDX |
| 9 | Nagpur | HDX |
| 10 | Ahmedabad | HDX |
| 11 | Allahabad | HDX |
| 12 | Vijayawada | HDX |
| 13 | Bhuvaneshwar | HDX |
| 14 | Ranchi | HDX |
| TOTAL | | 14 |

HDX Equipment Description & Protection Schemes

January 12th, 2005

ASON: ITU-T, IETF, OIF

ITU-T: ASON requirements and architecture

IETF (Internet Engineering Task Force): GMPLS protocols

OIF (Optical Internetworking Forum): Implementation agreements

Optical Cross-Connect: HDX

Highly Modular / Scalable OXC Infrastructure

Fully Non-blocking STS-1 / VC-4 Switch Matrix

HDX: 640G-1.28T, Single Shelf

3.84Tb/s Multi-Shelf Architecture

Integrated DWDM Optics - SFP Modules

Flexible service restoration and topologies

Mesh, Ring, Linear Protection

Restoration based on service attributes

Intelligent OXC enabling Next Generation Networking

Distributed, redundant & Integrated Control Plane

ITU-T ASON (G.807 / G.8080), GMPLS (Generalized Multi-Protocol Label Switching) signaling

Network topology discovery and awareness

Multi-Services Management

STS-1/VC-4 to STS-192c/VC-4-64c

Ports at 2.5/10/40Gb/s, 155/622Mb/s

Managed by Preside Optical Manager

Dimension : L X W X H : 1500 X 600 X 2200 mm

Power Consumption : 5400 Watts (Max)

Main Circuit Packs of HDX

1. Switch circuit pack

2. Shelf Controller

3. MXT (Matrix and Timing) card

4. Traffic cards (16 traffic slots)

   1. 10G SR or DWDM cards, 4 ports per card

   2. 2.5G (STM16) card, 16 ports per card (IR, SR, LR)

   3. STM4 / STM1 card, 16 ports per card (IR, SR, LR)

5. Power Supply Module (PSM)

6. Fan Module

[Fig. A: Switch circuit pack and Matrix and Timing (MXT) circuit pack]

Protection & Restoration Schemes

The link protection mechanism offers the flexibility & simplicity to engineer availability.

Additionally, Mesh Restoration can be applied over any protection as a 2nd level.

| Scheme | Category | Backup path | Characteristics | Switch time |
| --- | --- | --- | --- | --- |
| Dedicated | Mesh Protection | Predetermined and reserved backup for fast switching (no sharing) | Revertive; fast switching; high availability; at least half of the network capacity is reserved for protection | 50 msec |
| Unprotected | No Protection | No protection at transmission layer | Lowest cost & availability | N/A |
| Shared | Mesh Protection | Predetermined & configured backup for fast switching; protection may be shared | Revertive; sharing over segments without common risks; SDH-like performance & behavior; availability is a function of network engineering | Up to 200 msec |
| Dynamic | Mesh Restoration | Restoration paths are dynamically created (not predetermined) | Revertive; high sharing; slower restoration time; restoration & availability are a function of network engineering | Seconds |
| APS | APS Protection | Standard APS (ring, linear) | Fast switching; limited / no sharing of protection capacity | 50 msec |

Call and connection concept

A call is a service between two service access points (end to end, as in DX OPC).

A connection is a collection of nodal cross-connections that allow the transport of data between two service access points (nodal, as in DX OPC). At least one connection must be associated with a call.

Call and connection concept (Continued)

Figure-A shows an example of the call and connection concept.

A call between node A and node Z needs to be established. The original connection for that call could pass through A-B-Z.

If the call needs to be restored due to a failure in the original connection, an alternative connection will be required, and it could be A-C-D-Z.

[Figure-A]
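The relationship between a call and its connections can be sketched as a small data model. This is a minimal illustration, not the HDX implementation: the class names, the `restore` method and the node lists are assumptions chosen to mirror the A-B-Z / A-C-D-Z example above.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Connection:
    """Ordered nodes whose cross-connections carry the traffic (hypothetical model)."""
    hops: List[str]


@dataclass
class Call:
    """End-to-end service between two service access points."""
    source: str
    destination: str
    connections: List[Connection] = field(default_factory=list)

    def _check(self, hops: List[str]) -> None:
        # A connection must join the two end points of the call.
        if not hops or hops[0] != self.source or hops[-1] != self.destination:
            raise ValueError("connection must join the call end points")

    def add_connection(self, hops: List[str]) -> None:
        self._check(hops)
        self.connections.append(Connection(list(hops)))

    def restore(self, new_hops: List[str]) -> None:
        """Swap the active connection for an alternative one; the call itself persists."""
        self._check(new_hops)
        if not self.connections:
            raise ValueError("a call needs at least one connection")
        self.connections[-1] = Connection(list(new_hops))


if __name__ == "__main__":
    call = Call("A", "Z")
    call.add_connection(["A", "B", "Z"])      # original connection
    call.restore(["A", "C", "D", "Z"])        # alternative connection after a failure
    print(call.connections[-1].hops)
```

The point of the split is that the call identity (A to Z) survives while the connection underneath it is replaced.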

Control Plane Call Management

Two types of call routing are supported at call creation: implicit and

explicit.

Implicit Routing:

An implicit call is a call for which only the source and destination points are specified for its connection(s), along with other service attributes. The attributes specified at the time of the connection request include:

Rate

CoS: Class of Service, used to define the call protection

Routing Metric (Cost and Metric2)

Maximum Metric Value

Restoration option (enable or disable)

Explicit Routing:

An explicit call is a call for which the route is manually specified for its connection(s).

Explicit calls are supported with three levels of granularity:

Nodes only: the control plane computes the missing information (port / timeslot) based on the given CoS and constraints.

Nodes and ports: the control plane computes the timeslot for each port.

Nodes, ports and timeslots: no calculation is required from the control plane.

Control Plane Call Management

Call Engineering rules

The following is the list of call engineering rules:

Implicit routing is supported for all CoS, except APS rings.

Explicit routing is supported for all CoS, including APS rings.

Explicit calls can be routed over failed and blocked ports.

For any given call, a maximum of 20 hops is allowed.
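The rules above lend themselves to a simple pre-check before a call is requested. The following is a sketch under stated assumptions: the CoS labels (`APS_RING`, `APS_LINEAR`, `SHARED_MESH`, `UNPROTECTED`) and the function name are invented for illustration and are not HDX identifiers; only the two rules themselves come from the slide.

```python
from typing import List

MAX_HOPS = 20  # maximum hops allowed for any call, per the rules above


def validate_call(routing: str, cos: str, hop_count: int) -> List[str]:
    """Return the list of violated engineering rules (empty list means acceptable).

    routing: "implicit" or "explicit"
    cos: hypothetical labels, e.g. "APS_RING", "APS_LINEAR", "SHARED_MESH", "UNPROTECTED"
    """
    problems = []
    if routing not in ("implicit", "explicit"):
        problems.append("unknown routing type: " + routing)
    # Implicit routing is supported for all CoS except APS rings.
    if routing == "implicit" and cos == "APS_RING":
        problems.append("implicit routing is not supported over APS rings")
    # A call may traverse at most 20 hops.
    if hop_count > MAX_HOPS:
        problems.append("route exceeds %d hops" % MAX_HOPS)
    return problems


if __name__ == "__main__":
    print(validate_call("implicit", "APS_RING", 5))
    print(validate_call("explicit", "APS_RING", 5))
    print(validate_call("implicit", "UNPROTECTED", 21))
```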

Control Plane Call Management enables Rapid Service Delivery

1. Simple point & click service activation, single step provisioning

2. Automated route selection (constraint-based routing engine)

   1. Supports implicit routing as well as explicit routing

3. Routing based on CoS / protection, diversity and various call attributes

   1. Protection: Ring, Linear, Shared Mesh, Unprotected

   2. Attributes: Mesh Restoration, Call alarms (Loss of Service, Restoration Complete)

   3. Diversity based on Nodes, Trunks and SRLG

Steps:

1) Select call attributes:

- Source / Ingress point

- Dest. / Egress point

- Bandwidth

- CoS / Protection

- Routing Metric

2) Select Activation

Result: successful creation of circuit between A & Z points

[Figure: Optical Manager (EMS) and the distributed intelligence of the network]

Call Management on HDX

Call Manager offers simple point and click operations for call creation, deletion, query, restoration, edit, and bridge and roll.

It also provides a graphical display of all connections associated with a particular call.

Call Edit Capability:

After the call has been created, the following attributes can be modified:

1. Call label

2. Automatic mesh restoration options (enable/disable)

3. Call alarms (enable/disable)

Call editing is supported for any established call in any call state (up, down, restored, etc.).

Protection and Restoration : CoS & AMR

The control plane supports the co-existence of multiple protection and/or restoration mechanisms through the use of Class of Service (CoS) and automatic mesh restoration (AMR).

CoS is a call attribute used to define the call protection behavior and to differentiate service quality levels.

Along with other routing criteria, CoS is specified at the time of call creation.

The control plane routes the calls over facilities which match the desired CoS.

Three Types of CoS:

APS - Automatic Protection Switching

APS refers to the well-known protection schemes 1+1 Linear/MSP and 2-Fiber / 4-Fiber BLSR / MS-SPRing.

The control plane helps in automatic routing of calls over APS working facilities.

The APS CoS supports all call rates from VC4 to VC4-64c.

Calls over 1+1 Linear/MSP facilities can be implicitly or explicitly routed.

Unprotected

The Unprotected CoS does not offer any protection at the transport layer, and thus provides the lowest cost service.

Calls over unprotected facilities can be implicitly or explicitly routed.

The Unprotected CoS is supported on all call rates from VC4 to VC4-64c.

SCN Communications: Control Plane I-NNI Traffic

Overview

The Control Plane requires a Signaling Communication Network (SCN) to carry routing & signaling traffic.

In-band SCN, using underlying DCC channels (MSDCC and RSDCC ports)

Characteristics

In-fiber through embedded control channels running IP over PPP (as per ITU-T G.7712)

IETF standard (RFC 2328) IP OSPF protocol

Resiliency:

Multiple DCC per link for redundancy

IP reroute around failure

Dedicated to Control Plane traffic

[Figure: comms links over optical fibre carrying I-NNI and SCN traffic; on an SCN failure, SCN / IP traffic is re-routed and there is no I-NNI failure]

Control Plane Routing & Signaling

1. GMPLS based protocols to establish and manage optical circuits

   - Distributed Routing for topology management (GMPLS OSPF-TE based, ITU-T G.7715.1)

   - Distributed Signaling for Connection Management (GMPLS CR-LDP based, ITU-T G.7713.3)

   - Supports the Call / Connection model required in G.8080 and the latest OIF IA

2. Path Computation

   1. Source based routing, least hop / shortest path Dijkstra algorithm

   2. Two user provisionable TE metrics are provided: cost, metric2

   3. Traffic differentiation & CoS support

3. Control Plane resiliency & re-synchronization

1) Each OXC/NE regularly broadcasts link state information (LSI)

2) OXC/NE uses LSIs to build/update local network topology database

3) Local topology database is used to compute optimal route based on routing criteria

4) Signaling message sent along optimal route for path establishment
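Step 3 of the sequence above, computing a route from the local topology database, can be illustrated with a textbook Dijkstra search. This is a generic sketch, not Nortel's routing engine: the topology, the per-link cost values and the function names are assumptions, and CoS filtering and the second metric (metric2) are left out.

```python
import heapq
from typing import Dict, List, Tuple

# Local topology database: node -> {neighbour: provisioned link cost} (illustrative values).
Topology = Dict[str, Dict[str, int]]


def build_topology(links: List[Tuple[str, str, int]]) -> Topology:
    """Build a bidirectional topology from (node, node, cost) link records."""
    topo: Topology = {}
    for a, b, cost in links:
        topo.setdefault(a, {})[b] = cost
        topo.setdefault(b, {})[a] = cost
    return topo


def shortest_route(topo: Topology, source: str, destination: str) -> Tuple[int, List[str]]:
    """Dijkstra's algorithm: return (total cost, node list) of the cheapest route."""
    queue = [(0, source, [source])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == destination:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbour, link_cost in topo.get(node, {}).items():
            if neighbour not in visited:
                heapq.heappush(queue, (cost + link_cost, neighbour, path + [neighbour]))
    raise ValueError("no route from %s to %s" % (source, destination))


if __name__ == "__main__":
    links = [("A", "B", 1), ("B", "Z", 1), ("A", "C", 1), ("C", "D", 1), ("D", "Z", 1)]
    print(shortest_route(build_topology(links), "A", "Z"))
    # Remove the failed B-Z link and recompute, as a restoration would.
    surviving = [link for link in links if set(link[:2]) != {"B", "Z"}]
    print(shortest_route(build_topology(surviving), "A", "Z"))
```

With all link costs equal to 1, the cheapest route is also the least-hop route, which matches the "least hop / shortest path" wording on the slide.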

[Figure: two NE controllers and the EMS/OSS (OM), with Adjacency Discovery and AutoDiscovery between them]

Control Plane Discovery and Initialization

Auto-Discovery features supported:

1. NE Self Discovery

   1. The NE learns the characteristics and status of its local facilities and updates its database with this local node information.

2. Adjacency Discovery

   1. Adjacency discovery information is automatically propagated, identifying the source network element and port information.

3. Peer Discovery

   1. Automatic initiation of an I-NNI (link management) session and an OSPF-TE session (bandwidth availability) with neighbors.

   2. Validation of link attributes and synchronization of the Topology Databases on both nodes.

4. Network Topology Discovery

   1. Each NE shares its Topology Database with all its neighbors. Each NE Topology Manager therefore learns and maintains the complete network topology through this process (via LSA, Link State Advertisement).

Cost and Metric

What is Cost?

What is Metric2 ?

Routing Criteria (Metric):

Shared Mesh Protection

Shared mesh (SM)

The shared mesh protecting route is pre-determined and configured for fast switching.

Provides deterministic and guaranteed performance (like APS) with a switch time between 50 msec and 200 msec.

The control plane is involved in the provisioning of the shared mesh tunnels.

The control plane helps in automatic routing of calls over shared mesh working routes.

Can be implicitly or explicitly routed.

The Shared Mesh CoS is supported on all call rates from VC4 to VC4-64c.

Tunnels

Tunnel:

A tunnel is a set of continuous bandwidth grouped together and reserved for shared mesh call creation.

It can be logically configured as virtual ports with a size of 2.5G or 10G.

A 10G port can contain either one 10G virtual port or up to four 2.5G virtual ports.

[Figure: Tunnel, Working Tunnel, Protection Tunnel]

Protection tunnel

Protection tunnels are reserved bandwidth that can be used upon a failure of the working tunnel.

Shared Mesh Protection Tunnel Segments (PTS) are always configured on a per-span basis for maximum sharing.

Each PTS can be shared by up to a maximum of three diversely routed working tunnels.

Shared Mesh Protection

1. Mesh Protection is defined on a Tunnel / logical facility (bundling)

2. The Protection Tunnel is pre-determined and shared (on a per-link basis)

3. A Control Channel is associated with the Protection Tunnel

4. Sharing is possible when W & P Tunnels don't have a common risk (SRLG: Shared Risk Link Group)

5. Engineering rules to provide switch times < 200 msec

   1. SDH-like behavior, predictable & deterministic

   2. Reversion, WTR

6. W & P are built by the control plane; however, a protection switch doesn't require any control plane assistance

7. Protection Switching Priorities: Lockout, Force, Automatic, Manual
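The sharing rules for protection tunnel segments can be expressed as a small admission check. The sketch below is an interpretation, not the HDX algorithm: it assumes that "diversely routed" means the working tunnels have no SRLG in common with each other, and that a working tunnel must not share an SRLG with the span carrying the protection segment. All class and field names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import FrozenSet, List

MAX_SHARERS = 3  # each PTS can be shared by at most three working tunnels


@dataclass(frozen=True)
class WorkingTunnel:
    name: str
    srlgs: FrozenSet[int]  # shared risk link groups traversed by the tunnel


@dataclass
class ProtectionTunnelSegment:
    span: str
    srlgs: FrozenSet[int]  # risks of the span that carries this PTS
    protected: List[WorkingTunnel] = field(default_factory=list)

    def can_protect(self, tunnel: WorkingTunnel) -> bool:
        if len(self.protected) >= MAX_SHARERS:
            return False  # sharing limit reached
        if tunnel.srlgs & self.srlgs:
            return False  # W and P would fail together
        # Assumption: sharers must be mutually diverse (no common SRLG).
        return all(not (tunnel.srlgs & other.srlgs) for other in self.protected)

    def attach(self, tunnel: WorkingTunnel) -> None:
        if not self.can_protect(tunnel):
            raise ValueError("%s cannot share PTS on %s" % (tunnel.name, self.span))
        self.protected.append(tunnel)


if __name__ == "__main__":
    pts = ProtectionTunnelSegment("B-C", frozenset({9}))
    pts.attach(WorkingTunnel("W1", frozenset({1})))
    pts.attach(WorkingTunnel("W2", frozenset({2})))
    print(pts.can_protect(WorkingTunnel("W3", frozenset({1, 3}))))  # shares SRLG 1 with W1
```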

Dynamic Second Level of Restoration

1st Level of Restoration: upon a single failure, ring, linear, shared mesh, or dedicated systems will rapidly restore service.

2nd Level of Restoration: Mesh Restoration used as a 2nd level of restoration is activated upon a dual link or node failure event. It leverages existing capacity to re-route services, improving availability, and initiates a 2nd level backup for each of the spare segments in use.

Mesh Restoration as a 2nd level of restoration improves the network's resiliency to multiple outages.

[Figure: MSPP traffic over a Working Path, a Primary Protect Path and a Secondary Protect Path, with Mesh Restoration enabled and disabled]

Mesh Restoration

1. Failure detection using SDH failure indicators (LOS, LOF, AIS, etc.)

2. Dynamic restoration for best survivability and efficiency

3. Revertive behavior with Wait To Restore (WTR)

4. Maintenance friendly: Force Restoration, Lockout Restoration

5. Common approach for Unprotected, APS Ring and Mesh Protection

   1. On first failure, Ring or Mesh protection attempts to protect service

   2. Mesh Restoration takes over if the 1st level can't protect (i.e. as a 2nd level) or if the facility is unprotected

6. Higher availability & robustness can be achieved by using mesh restoration, or protection & restoration, with proper spare capacity to survive multiple failures

[Figure: mesh restoration example with nodes S1, I1, I2 and D1, paths P1 and P2, and a failure notification]

Mesh Restoration

Built on the distributed routing and signaling capabilities of the control plane, using a simple and robust restoration algorithm.

The control plane, in conjunction with the SDH transport layer detection mechanism, learns the location of the failure from the signaling notification, computes the next best route based on feedback information, and reroutes each connection.

Since this mechanism relies heavily on software processing and signaling networks, the restoration time is slower than APS or shared mesh protection.

Automatic Mesh Restoration

AMR is a call attribute used to enable or disable automatic mesh restoration on a per-call basis.

Can be used with any type of CoS and can be edited after call creation.

Initiated upon failure detection when the first level of protection has failed or there is no first level of protection.

AMR uses the same SDH failure indicators for rapid failure detection (LOS, LOF, AIS, etc.)

Automatic Mesh Restoration

Mesh Restoration will recover from multiple failures as long as bandwidth is available for restoration.

If a fault occurs such that service on the working call path is affected (after all underlying protection schemes have been applied), the Control Plane will attempt to restore the call by redialing a new path through the network.

This restoration will (implicitly) make use of any available working or spare bandwidth on Unprotected ports, 1+1 Linear working ports or Shared Mesh working tunnels.

Restoration over ring working facilities is not allowed.

The control plane will make periodic attempts to restore traffic every 60 seconds until resources are available and the call is successfully restored, or until the failure affecting the call has been cleared.

HDX does not support pre-emption of existing traffic.
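The periodic retry behaviour described above can be sketched as a loop. This is a simplified model under stated assumptions: the callables `find_route`, `failure_cleared` and `wait` are hypothetical hooks supplied by the caller, and `max_attempts` exists only to keep the sketch finite (the slide describes retrying until the call is restored or the failure clears).

```python
from typing import Callable, List, Optional, Tuple

RETRY_INTERVAL_S = 60  # the control plane retries every 60 seconds


def restore_call(
    find_route: Callable[[], Optional[List[str]]],
    failure_cleared: Callable[[], bool],
    wait: Callable[[float], None],
    max_attempts: int = 10,  # assumption: bound added to keep the sketch finite
) -> Tuple[str, Optional[List[str]], int]:
    """Return (status, route, attempts made) for one failed call."""
    for attempt in range(1, max_attempts + 1):
        if failure_cleared():
            # Original path is healthy again; no restoration is needed.
            return ("cleared", None, attempt - 1)
        route = find_route()  # None means no bandwidth is available right now
        if route is not None:
            return ("restored", route, attempt)
        wait(RETRY_INTERVAL_S)  # no pre-emption: simply wait and try again
    return ("pending", None, max_attempts)


if __name__ == "__main__":
    # Simulated network: bandwidth becomes available on the third attempt.
    answers = iter([None, None, ["A", "C", "D", "Z"]])
    waits: List[float] = []
    print(restore_call(lambda: next(answers), lambda: False, waits.append))
    print("seconds waited:", sum(waits))
```

Passing `wait` in as a parameter lets the example run instantly; a real loop would use a timer.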

Automatic Mesh Restoration

User Request Based Restoration

Forced restoration:

Forced restoration is a user-initiated restoration which moves a call from its original path to the next best route based on the routing criteria, provided no higher priority request (lockout) is active.

Lockout restoration:

Lockout restoration ensures that a call is not moved from its original path by suppressing restoration, even in the presence of a failure condition or other manual operations.
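The precedence between lockout, forced and automatic restoration can be summarised in a few lines. This is a sketch of the ordering described above (lockout over forced over automatic); the function name, argument names and the returned labels are assumptions for illustration.

```python
def restoration_action(lockout: bool, forced: bool, failure: bool, amr_enabled: bool) -> str:
    """Decide whether a call stays on its original path or moves to the next best route."""
    if lockout:
        return "stay"   # lockout suppresses restoration, even during a failure
    if forced:
        return "move"   # user-initiated move, honoured when no lockout is active
    if failure and amr_enabled:
        return "move"   # automatic mesh restoration
    return "stay"


if __name__ == "__main__":
    print(restoration_action(lockout=True, forced=True, failure=True, amr_enabled=True))
    print(restoration_action(lockout=False, forced=True, failure=False, amr_enabled=False))
    print(restoration_action(lockout=False, forced=False, failure=True, amr_enabled=True))
```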

Mesh Protection / Mesh Restoration

Trade-off: Bandwidth Efficiency vs. Restoration Speed

Dedicated: simple, fast & reliable; redundant & high cost

Dynamic: increased intelligence, control & complexity; efficient bandwidth utilization & low cost

Shared Mesh Protection: a nice balance

Bandwidth efficiency, CAPEX savings: deploy working only where required; share protection capacity network-wide

Deterministic switch times: 50 - 200 ms

Simple to manage, pre-determined protection paths: SDH-like

Shared Mesh Protection combines the advantages of Mesh and SDH protection

HDX / HDXc & Optical Intelligence

Managing a Mesh Network

[Figure: Optical Manager, Optical Planner, Control Plane and Traffic Plane, linked by "Network Data, Events"]

Mesh Protection

Shared Protection built in Transport Plane

Protection Switching Priorities (Lockout, Forced, Auto, Manual)

Protection Switch alarms (active, failed, etc.)

Single & Multi-Link, Priority levels, Local, Revertive, WTR

Unavailability of protection path notifications

In-Service Roll Over (local)

Mesh Restoration & Connect Mgmt

Distributed architecture with local & end-to-end

Priority levels, Revertive, WTR

Restoration Priorities (Lockout, Forced, Auto)

Nesting of Restoration over Protection (APS & Mesh)

Call/Service failure and Restoration notifications

Optimization, Bridge & Roll (end-to-end)

Mesh & Control Plane Management

Fit into the existing management architecture

Addition to basic functions SLAT, Config, S/W download

Addition of call management functions

Management of Mesh restoration/protections (Tunnels)

Planning Tools

Capacity Planning

Mesh Protection / Restoration analysis

Traffic impact assessment under failure/maintenance scenarios

Capacity planning with what if scenarios

Traffic optimization

Bulk Export / Import

Montreal Lab Config: 2.5G APS & Unprotected CoS

[Figure: four-node lab topology (NODE1 to NODE4) with 1+1, 2FR 2.5G and Unprotected links, each end labelled with its port (for example 501-P6 to 503-P6); working paths W1 to W4 and protection paths P1 to P4 are marked]

Montreal Lab Config: 2.5G Shared Mesh

[Figure: four-node lab topology (NODE1 to NODE4) with 2.5G shared mesh links, each end labelled with its port]

Montreal Lab Config: 10G Shared Mesh/APS/Unprotected

[Figure: four-node lab topology (NODE1 to NODE4) with Unprotected, 10G 2FR, 10G 4FR and 10G 1+1 links, working path W1 and protection path P1, and a 2.5G 1+1 subtending DX network]

HDX Deployment Phase I & II & Wavelength Plan

HDX Deployment Phase I: 7 nos. of HDX ordered for Mumbai-Chennai connectivity (wavelengths Lambda 5 & 6 chosen)

Mumbai MCN & Mumbai Cable Landing Station

Nagpur, Hyderabad, Bangalore, Chennai & Vijayawada

I & C to start from the second week of January 2005.

Resiliency site: Ranchi HDX ordered

HDX Deployment Phase II: 5 nos. of HDX to be ordered for remaining sites (wavelengths Lambda 9 & 10 chosen)

Ahmedabad, Delhi, Bhopal, Bhuvaneshwar, Allahabad

HDX Deployment Phase I & II:

For all HDX to HDX 10G mesh links, the wavelength is to be taken through REGEN XR cards only.

Mesh wavelengths cannot be taken through DX 10G aggregates.

Hence, REGEN bays are being introduced at the in-between DX sites such as Surat, Pune, Belgaum, Hassan, Madurai, etc.

Additional DWDM route created between Bangalore and Chennai via Kolar by blowing G.655 cable from Kolar to Krishanagiri.

Improved Network Availability

The resiliency improvement program (providing multiple paths to switch locations and splitting DWDM rings to shorten ring circumference), together with HDX based mesh restoration capability on diverse paths, will improve overall backbone network availability and customer satisfaction.

Other SDH resiliency rings being implemented will provide multiple (diverse) routes for connectivity to non-DX switch and STP locations.

Thank You!

Anda mungkin juga menyukai

- Dell Emc UnityDokumen10 halamanDell Emc UnityHassam AhmadBelum ada peringkat

- The Geometry GuideDokumen4 halamanThe Geometry GuideAnonymous lrvT5FBelum ada peringkat

- 07505310e Power Systems Handbook 3rded PDFDokumen106 halaman07505310e Power Systems Handbook 3rded PDFHassam AhmadBelum ada peringkat

- 0350046J0C - CXC206 - Lo ResDokumen132 halaman0350046J0C - CXC206 - Lo ResroybutcherBelum ada peringkat

- Reference Manual: Command Line Interface Industrial ETHERNET Switch RSB20, OCTOPUS OS20/OS24 ManagedDokumen274 halamanReference Manual: Command Line Interface Industrial ETHERNET Switch RSB20, OCTOPUS OS20/OS24 ManagedHassam AhmadBelum ada peringkat

- 5751 Activity 9.0 SGDokumen29 halaman5751 Activity 9.0 SGHassam AhmadBelum ada peringkat

- 5751 Activity 9.0 SGDokumen254 halaman5751 Activity 9.0 SGHassam AhmadBelum ada peringkat

- 5751 Overview 9.0 Ig PDFDokumen212 halaman5751 Overview 9.0 Ig PDFHassam AhmadBelum ada peringkat

- User Manual: Basic Configuration Industrial ETHERNET (Gigabit) Switch RS20/RS30/RS40, MS20/MS30, OCTOPUSDokumen202 halamanUser Manual: Basic Configuration Industrial ETHERNET (Gigabit) Switch RS20/RS30/RS40, MS20/MS30, OCTOPUSHassam AhmadBelum ada peringkat

- Manhattan Gmat FlashCards EIV 2009Dokumen21 halamanManhattan Gmat FlashCards EIV 2009girdharilalBelum ada peringkat

- 323-1701-180.r08.0 Technical Specificaions PDFDokumen248 halaman323-1701-180.r08.0 Technical Specificaions PDFHassam AhmadBelum ada peringkat

- User Manual: Basic Configuration Industrial ETHERNET (Gigabit) Switch RS20/RS30/RS40, MS20/MS30, OCTOPUSDokumen202 halamanUser Manual: Basic Configuration Industrial ETHERNET (Gigabit) Switch RS20/RS30/RS40, MS20/MS30, OCTOPUSHassam AhmadBelum ada peringkat

- Official Ielts Practice Materials 1Dokumen86 halamanOfficial Ielts Practice Materials 1Ram95% (106)

- Umux SDH Stm-4 Unit Syn4E: Cost Effective Umux Solution For Eos (Ethernet Over SDH) and SDH Stm-4 Network ApplicationsDokumen2 halamanUmux SDH Stm-4 Unit Syn4E: Cost Effective Umux Solution For Eos (Ethernet Over SDH) and SDH Stm-4 Network ApplicationsHassam AhmadBelum ada peringkat

- IELTS Listening Practice Test 1 Answers & ExplanationsDokumen1 halamanIELTS Listening Practice Test 1 Answers & Explanationsmanpreetsodhi08Belum ada peringkat

- Uni IIoT Conference Program HMIDokumen1 halamanUni IIoT Conference Program HMIHassam AhmadBelum ada peringkat

- 06 - The Critical Reasoning Guide 4th EditionDokumen115 halaman06 - The Critical Reasoning Guide 4th EditionHassam AhmadBelum ada peringkat

- Manhattan Gmat FlashCards EIV 2009Dokumen21 halamanManhattan Gmat FlashCards EIV 2009girdharilalBelum ada peringkat

- GMAT Club Math Book v3 - Jan-2-2013Dokumen126 halamanGMAT Club Math Book v3 - Jan-2-2013prabhakar0808Belum ada peringkat

- 5751 Activity 9.0 SGDokumen254 halaman5751 Activity 9.0 SGHassam AhmadBelum ada peringkat

- 323-1853-310 (6110 R5.0 Provisioning) Issue 3 PDFDokumen276 halaman323-1853-310 (6110 R5.0 Provisioning) Issue 3 PDFHassam AhmadBelum ada peringkat

- Optera Metro 4100-4200 Lab ManualDokumen41 halamanOptera Metro 4100-4200 Lab ManualHassam AhmadBelum ada peringkat

- 5122aen Omea Rls4 SGDokumen202 halaman5122aen Omea Rls4 SGHassam AhmadBelum ada peringkat

- 450-3101-012.r11.0.i02interface User LoginDokumen72 halaman450-3101-012.r11.0.i02interface User LoginHassam AhmadBelum ada peringkat

- Free GMAT FlashcardsDokumen79 halamanFree GMAT FlashcardsAnshul SharmaBelum ada peringkat

- Quant 700 To 800 Level QuestionsDokumen87 halamanQuant 700 To 800 Level QuestionsHassam AhmadBelum ada peringkat

- CP3e 2 HW 91nxDokumen15 halamanCP3e 2 HW 91nxHassam AhmadBelum ada peringkat

- Quant Session 4 - Geometry + Co-Ordinate Geometry - SolutionsDokumen36 halamanQuant Session 4 - Geometry + Co-Ordinate Geometry - SolutionsHassam AhmadBelum ada peringkat

- Optical Metro 5200 Overview Oct 2009 Charter Visit 2Dokumen34 halamanOptical Metro 5200 Overview Oct 2009 Charter Visit 2Hassam AhmadBelum ada peringkat

- 6th Central Pay Commission Salary CalculatorDokumen15 halaman6th Central Pay Commission Salary Calculatorrakhonde100% (436)

- Shoe Dog: A Memoir by the Creator of NikeDari EverandShoe Dog: A Memoir by the Creator of NikePenilaian: 4.5 dari 5 bintang4.5/5 (537)

- Never Split the Difference: Negotiating As If Your Life Depended On ItDari EverandNever Split the Difference: Negotiating As If Your Life Depended On ItPenilaian: 4.5 dari 5 bintang4.5/5 (838)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureDari EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FuturePenilaian: 4.5 dari 5 bintang4.5/5 (474)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeDari EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifePenilaian: 4 dari 5 bintang4/5 (5782)
