19 Metaheuristic Opt - Genetic Algorithms

Metaheuristic Optimization:

Genetic Algorithms

AE 3340: Design and Systems Engineering Methods

Prof. Brian German

Daniel Guggenheim School

of Aerospace Engineering

Challenges to Classical Optimization Methods

The optimization methods that we have discussed so far are most

suited for:

Problems in which all of the design variables are continuous

Problems in which there are few local optima

Ideally just “unimodal” problems with one local optimum that is also globally optimal

If our problem has many discrete variables and/or many local

optima, we may need another approach!


Metaheuristic Optimization

A category of optimization methods that are intended for these

types of problems is called metaheuristic optimization.

A heuristic is a “rule of thumb” devised by (human) intuition, based on observations and past experience.

In the context of optimization, examples of heuristics could be:

“I should restart the algorithm at a different point in the design

space to make sure that it was not trapped in a local minimum.”

“Sometimes, I need to enable the algorithm to ‘search uphill’ to

find a better local neighborhood in the design space.”


Metaheuristic Optimization

A metaheuristic (or meta-heuristic) is:

“…a master strategy that guides and modifies other heuristics to

produce solutions beyond those that are normally generated in

a quest for local optimality. The heuristics guided by such a

meta-strategy may be high level procedures or may embody

nothing more than a description of available moves for

transforming one solution into another, together with an associated

evaluation rule.”

Glover, F.W. and Laguna, M., Tabu Search, Vol. 1, p. 17, Springer, 1998.


Metaheuristic Optimization

Metaheuristic optimization methods generally have the following

characteristics in common:

Employ algorithms that are stochastic, i.e. that involve

selection of random values of design variables. Stochasticity is

a key to enabling the methods to find a global optimum.

However, the methods have more “smarts” than purely random

search.

Generally of “discrete origin,” i.e. design variables are often

treated as discrete, even if they are truly continuous. This

requires that all variables be discretized in some way.


Metaheuristic Optimization

Zeroth-order, i.e. they do not use gradients or Hessians

Inspired by analogies with physics, biology, or other fields

Require many function calls and large computation time

Difficult to know a priori how to adjust heuristic or metaheuristic

parameters to solve a particular problem efficiently

Must be careful that our metaheuristic algorithm does not require more function calls and time than a grid search or an exhaustive random search!


Metaheuristic Optimization

A note on nomenclature:

Metaheuristic optimization algorithms almost always involve

stochastic elements. They could therefore be categorized as a

subclass of stochastic optimization algorithms.

However, stochastic optimization is a broader class that also includes problems in which the objective function and the constraints are random variables, i.e. probabilistic optimization problems.

Metaheuristic algorithms use stochasticity in the algorithms

themselves to solve deterministic optimization problems.


Metaheuristic Optimization Algorithms

There are many types of metaheuristic algorithms. (You can create your own!) Examples:

Simulated annealing

Genetic algorithms

Particle swarm algorithms

Ant colony optimization

We will talk only about genetic algorithms in this course.


Metaheuristic Optimization

Most metaheuristic optimization algorithms are naturally formulated

for side-constrained optimization problems of the form:

Minimize: 𝑓(𝒙)

Subject to: 𝒙_L ≤ 𝒙 ≤ 𝒙_U

More complicated constraints can be handled either by penalty

functions, or in some cases, by directly “filtering” designs

generated in the stochastic search.

Without penalty functions, equality constraints are challenging!
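The penalty-function idea can be sketched as follows. This is a minimal illustration, assuming a quadratic exterior penalty with a fixed weight `r`; the penalty form, the weight, the function names, and the toy problem are all assumptions made for the example and are not specified in these notes.

```python
def penalized(f, g_list=(), h_list=(), r=1000.0):
    """Build F(x) = f(x) + r * (sum of max(0, g(x))^2 + sum of h(x)^2).

    g_list holds inequality constraints written as g(x) <= 0,
    h_list holds equality constraints written as h(x) = 0.
    """
    def F(x):
        violation = sum(max(0.0, g(x)) ** 2 for g in g_list)
        violation += sum(h(x) ** 2 for h in h_list)
        return f(x) + r * violation
    return F

# Hypothetical problem: minimize x0^2 + x1^2 subject to x0 + x1 = 1.
f = lambda x: x[0] ** 2 + x[1] ** 2
h = lambda x: x[0] + x[1] - 1.0
F = penalized(f, h_list=[h], r=1000.0)

print(F((0.5, 0.5)))  # feasible point: no penalty is added
print(F((0.0, 0.0)))  # infeasible point: r * 1^2 is added to f
```

The GA then minimizes `F` subject only to the side constraints, so infeasible designs are not forbidden but are made unattractive.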


Genetic Algorithms

Genetic algorithms are inspired by an analogy with natural

selection (“survival of the fittest”) in Darwin’s theory of evolution.

Many designs are represented as individuals in a population.

Certain individuals are selected for reproduction based on their

fitness relative to other individuals in the population.

After reproduction, some of the individuals are replaced by their

offspring, and a new generation of the population is formed.

The invention of genetic algorithms is credited to John Holland.


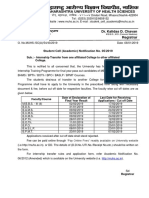

Genetic Algorithms

Reproduction

Fig. 3.1 from Dréo J., Pétrowski, A., Siarry, P., and Taillard, E., Metaheuristics

for Hard Optimization: Methods and Case Studies, Springer 2006.


Genetic Algorithms

We will examine each of the steps in turn:

Initialization

Selection

Crossover

Reproduction

Mutation

Fitness evaluation

Replacement


Initialization

To initialize the algorithm, a population of individuals must be

generated.

If the problem involves continuous variables, these should be

discretized over a desired range and to a desired resolution as binary

numbers. It is preferable to represent the binary numbers in Gray

code.

Concatenating the binary numbers for each variable forms a single

string of 𝑛 binary digits known as the chromosome for the individual.

The initial population of 𝑚 individuals is generated by randomly

sampling bits in each chromosome.

A rule of thumb is that 2𝑛 ≤ 𝑚 ≤ 4𝑛.
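The initialization step might look like the sketch below. It assumes every variable uses the same number of bits, that chromosomes are stored as Python lists of 0/1 integers, and that a decoded level is mapped linearly onto the variable's range; these choices and all function names are illustrative assumptions.

```python
import random

def gray_to_int(bits):
    """Convert Gray-code bits (most significant first) to an integer."""
    value = 0
    b = 0
    for g in bits:
        b ^= g                      # binary bit = XOR of all Gray bits so far
        value = (value << 1) | b
    return value

def decode(chromosome, bounds, bits_per_var):
    """Map a concatenated Gray-coded chromosome to real design variables."""
    x = []
    for i, (lo, hi) in enumerate(bounds):
        gene = chromosome[i * bits_per_var:(i + 1) * bits_per_var]
        level = gray_to_int(gene)   # integer in 0 .. 2^bits - 1
        x.append(lo + (hi - lo) * level / (2 ** bits_per_var - 1))
    return x

def init_population(m, n, rng=random):
    """Sample m chromosomes of n random bits each."""
    return [[rng.randint(0, 1) for _ in range(n)] for _ in range(m)]

# Two variables with 4 bits each give n = 8; m = 3n lies inside 2n <= m <= 4n.
bounds = [(0.0, 1.0), (-1.0, 1.0)]
population = init_population(m=24, n=8)
print(decode(population[0], bounds, bits_per_var=4))
```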


Selection

There are two common forms for selecting individuals for

reproduction:

Proportional selection

Tournament selection

We will look at both methods.


Selection

The general idea behind proportional selection can be stated as

follows:

The expected number of selections of an individual for

reproduction is proportional to its fitness.

Fitness is intended to be maximized, so the measure of fitness

should monotonically increase with 𝑓(𝒙) if the optimization goal is

to maximize, or monotonically increase with −𝑓(𝒙) if the goal is to

minimize.


Selection

A common form of proportional selection is called roulette wheel

selection.

In roulette selection, each candidate is given a slice of an

imaginary roulette wheel with the size of the slice proportional to its

fitness. A random number is then generated to select a parent.


Selection

Example of roulette selection:

Candidates A, B, and C have fitnesses 1, 10, and 5, respectively.

The roulette wheel would have the form A:[0,1), B:[1,11), C:[11,16).

Two random numbers between 0 and 16 are generated: 12 and 3.

Thus, the two parents are C and B.
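The roulette example can be reproduced with a short sketch. It assumes all fitness values are non-negative and treats each slice as a half-open interval, so a spin that lands exactly on a boundary goes to the next candidate; the function names are hypothetical.

```python
import bisect
import itertools
import random

def roulette_pick(fitnesses, u):
    """Return the index whose slice of the wheel contains the spin value u."""
    edges = list(itertools.accumulate(fitnesses))   # e.g. [1, 11, 16]
    # Clamp guards against u landing on the very end of the wheel.
    return min(bisect.bisect_right(edges, u), len(fitnesses) - 1)

def roulette_select(fitnesses, rng=random):
    """Spin the wheel once with a uniform random number in [0, total)."""
    return roulette_pick(fitnesses, rng.random() * sum(fitnesses))

names = ["A", "B", "C"]
fitnesses = [1, 10, 5]
parents = [names[roulette_pick(fitnesses, u)] for u in (12, 3)]
print(parents)  # ['C', 'B']
```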


Selection

Proportional selection encounters issues when there are very large or

very small differences in the fitness function between individuals.

In the former case, a candidate with fitness orders of magnitude higher

than the others would dominate the gene pool, diminishing the ability to

explore.

In the latter case, if the population evolves to all be nearly optimal, the

roulette wheel becomes nearly evenly spaced and the selection process

resembles a random draw, hindering exploitation of the optimum.

Sometimes “monotone-preserving” transformations to the objective

function are made to create a fitness function, e.g. log, exponential, or

power law transformations, to address these issues.
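To see what such transformations do to the roulette wheel, the sketch below compares selection probabilities before and after rescaling. The specific transformations (a shifted base-10 logarithm and a power of 50) and the sample fitness values are assumptions picked only to make the effect visible; the logarithm also assumes strictly positive fitness.

```python
import math

def selection_probabilities(fitnesses):
    """Roulette-wheel selection probability of each individual."""
    total = sum(fitnesses)
    return [f / total for f in fitnesses]

# One candidate dominates: with raw fitness it wins nearly every spin.
raw = [1.0, 10.0, 10000.0]
log_scaled = [1.0 + math.log10(f) for f in raw]   # monotone, compresses range
print(selection_probabilities(raw))
print(selection_probabilities(log_scaled))

# Nearly equal fitnesses: a power law stretches the small differences.
close = [1.00, 1.01, 1.02]
stretched = [f ** 50 for f in close]              # monotone for positive f
print(selection_probabilities(close))
print(selection_probabilities(stretched))
```

Both transformations preserve the ranking of the individuals; they only change how much of the wheel each one receives.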


Selection

However, in many cases, proportional selection gives each

candidate a fair chance of becoming a parent.

This is important as we wish to retain some unfit candidates to

promote exploration of the design space, yet narrow in on the best

candidates to promote exploitation.


Selection

Tournament selection overcomes some of the difficulties of

proportional selection.

As its name implies, tournament selection holds “tournaments”

between different individuals to determine which will reproduce.

There are two general types of tournament selection:

Deterministic tournament

Stochastic tournament


Selection

Deterministic tournament selection

How it works:

𝑘 individuals are chosen at random from the population; 𝑘 is the tournament size.

The 𝑘 individuals participate in a “tournament” that compares

their fitness values.

The individual with the highest fitness “wins” the tournament and

is selected for reproduction.
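The three steps above can be sketched as below. Drawing the 𝑘 contestants without replacement is an assumption (some implementations draw with replacement), as are the function and argument names.

```python
import random

def tournament_select(population, fitness, k, rng=random):
    """Pick k distinct individuals at random and return the fittest one."""
    contestants = rng.sample(range(len(population)), k)
    winner = max(contestants, key=lambda i: fitness[i])
    return population[winner]

population = ["a", "b", "c", "d"]
fitness = [3, 7, 1, 5]
print(tournament_select(population, fitness, k=2))
```

Only the ordering of the fitness values matters here, not their magnitudes, which is why this method sidesteps the scaling issues of proportional selection. A larger 𝑘 makes it harder for weak individuals to win.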


Selection

Stochastic tournament selection

How it works for a binary tournament (𝑘 = 2):

2 individuals are chosen at random from the population.

Select the most fit individual with a probability 0.5 ≤ 𝑝 ≤ 1

Choose the second most fit individual with probability (1 − 𝑝)

Can be generalized to arbitrary 𝑘 by consideration of order

statistics.
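A binary stochastic tournament can be sketched as follows. The default 𝑝 = 0.8, the tie-breaking rule, and the names are assumptions for illustration; only the 𝑘 = 2 case is covered.

```python
import random

def stochastic_binary_tournament(population, fitness, p=0.8, rng=random):
    """Draw two individuals; return the fitter with probability p, else the other."""
    i, j = rng.sample(range(len(population)), 2)
    better, worse = (i, j) if fitness[i] >= fitness[j] else (j, i)
    return population[better] if rng.random() < p else population[worse]

population = ["a", "b", "c", "d"]
fitness = [3, 7, 1, 5]
print(stochastic_binary_tournament(population, fitness, p=0.8))
```

Setting 𝑝 = 1 recovers the deterministic binary tournament, while 𝑝 = 0.5 ignores fitness entirely.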


Selection

Since both proportional and tournament selection involve

stochastic elements, there is no guarantee that the individuals in

the population with the best fitness will be selected as parents or

will persist into the next generation.

Elitism is the concept of copying the best individual(s) from one

generation to the subsequent generation.

There are several strategies for implementing elitism, including

copying the best individuals either before or after reproduction.
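One possible elitism strategy is sketched below: after reproduction, the best parents overwrite the worst children. This is only one of the several strategies mentioned, and the function name, argument layout, and default of a single elite are assumptions.

```python
def apply_elitism(parents, parent_fit, children, child_fit, n_elite=1):
    """Overwrite the n_elite worst children with the n_elite best parents."""
    elite = sorted(range(len(parents)),
                   key=lambda i: parent_fit[i], reverse=True)[:n_elite]
    worst = sorted(range(len(children)),
                   key=lambda i: child_fit[i])[:n_elite]
    new_pop, new_fit = list(children), list(child_fit)
    for e, w in zip(elite, worst):
        new_pop[w], new_fit[w] = parents[e], parent_fit[e]
    return new_pop, new_fit

next_pop, next_fit = apply_elitism(
    ["p1", "p2", "p3"], [3, 9, 1],
    ["c1", "c2", "c3"], [4, 2, 5],
)
print(next_pop, next_fit)  # ['c1', 'p2', 'c3'] [4, 9, 5]
```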


Crossover

Crossover is the genetic recombination of two parent individuals

into two children.

It is the main mechanism of global search in a GA.

After selecting two parents, a random number is generated to

determine if crossover occurs. The probability is typically high

(70% to 80%). If no crossover occurs, both parents simply

become children (for most algorithms).


Crossover

There are three common types of crossover:

One-point crossover

Two-point crossover

Uniform crossover


Crossover

One-point crossover

1. Given two parents with chromosome length 𝑙, a random

integer 𝑘 between 1 and 𝑙 − 1 is generated. The value 𝑘

indicates after which bit a “dividing line” should be drawn for

the crossover.

2. Swap the relevant bits following 𝑘, i.e. bits 𝑘 + 1 to 𝑙.


Crossover

Example of one-point crossover:

Parent A: 01110011 Parent B: 11001100 (𝑙 = 8)

Randomly generate an integer 𝑘 between 1 and 7: 4

Parent A: 0111|0011 Parent B: 1100|1100

Swap the bits following the 𝑘th bit:

Child A: 01111100 Child B: 11000011
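One-point crossover reduces to two string slices. The sketch below stores chromosomes as bit strings and adds a wrapper that applies the crossover probability; the wrapper name and its default of 0.75 (inside the 70% to 80% range quoted earlier) are assumptions.

```python
import random

def one_point_crossover(a, b, k):
    """Keep the first k bits of each parent and swap bits k+1 .. l."""
    return a[:k] + b[k:], b[:k] + a[k:]

def maybe_crossover(a, b, p_cross=0.75, rng=random):
    """Cross with probability p_cross; otherwise the parents become the children."""
    if rng.random() < p_cross:
        k = rng.randint(1, len(a) - 1)   # dividing line after bit k
        return one_point_crossover(a, b, k)
    return a, b

print(one_point_crossover("01110011", "11001100", 4))
# ('01111100', '11000011')
```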


Crossover

Two-point crossover is similar to one-point crossover except two

random numbers between 1 and 𝑙 − 1 are generated.

The first number represents the bit after which to start the swap,

and the second number represents how many bits to swap. If the

second number is large enough such that the end of the string is

reached, continue counting from the beginning of the string.

Two-point crossover is less sensitive to the ordering of individual

design variable strings in the overall chromosome.


Crossover

Example of two-point crossover:

Parent A: 00110011 Parent B: 11001100 (𝑙 = 8)

Randomly generate an integer between 1 and 7 (where to start): 7

Parent A: 0011001|1 Parent B: 1100110|0

Generate another integer between 1 and 7 (how many bits to swap): 3

Parent A: 00|11001|1 Parent B: 11|00110|0

Child A: 11110010 Child B: 00001101
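The wrap-around form of two-point crossover described here can be sketched with modular indexing. Chromosomes are stored as bit strings, and the function and argument names are assumptions.

```python
def two_point_crossover(a, b, start, count):
    """Swap `count` bits beginning just after bit `start`, wrapping past the end."""
    length = len(a)
    child_a, child_b = list(a), list(b)
    for offset in range(count):
        # 0-based index of 1-based bit (start + 1 + offset), wrapped around.
        i = (start + offset) % length
        child_a[i], child_b[i] = b[i], a[i]
    return "".join(child_a), "".join(child_b)

print(two_point_crossover("00110011", "11001100", start=7, count=3))
# ('11110010', '00001101')
```

With `start=7` and `count=3` the swapped positions are bits 8, 1, and 2, matching the example.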


Crossover

In uniform crossover, each bit is given a probability to swap. The probability is typically 𝑝 = 0.5. For each bit, we generate a sample 𝑢 from a uniform distribution over the range (0,1). If 𝑢 < 𝑝, we swap the bits; otherwise, we do not.

Example of uniform crossover:

Parent A: 00110011 Parent B: 11001100 (𝑙 = 8)

Decide if each bit will swap: [Yes, No, No, No, No, Yes, No, No]

Parent A: 00110011 Parent B: 11001100

Child A: 10110111 Child B: 01001000
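Uniform crossover can be sketched by separating the random swap decisions from the swap itself, which makes the example easy to replay with a fixed mask. The function names and the bit-string representation are assumptions.

```python
import random

def random_swap_mask(length, p=0.5, rng=random):
    """For each bit, draw u in [0, 1) and mark it for swapping when u < p."""
    return [rng.random() < p for _ in range(length)]

def uniform_crossover(a, b, swap_mask):
    """Exchange the bits of a and b wherever swap_mask is True."""
    child_a = "".join(y if s else x for x, y, s in zip(a, b, swap_mask))
    child_b = "".join(x if s else y for x, y, s in zip(a, b, swap_mask))
    return child_a, child_b

# Swap decisions [Yes, No, No, No, No, Yes, No, No]: bits 1 and 6 are exchanged.
mask = [True, False, False, False, False, True, False, False]
print(uniform_crossover("00110011", "11001100", mask))
# ('10110111', '01001000')
```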


Mutation

After successive generations of selection and crossover, it is likely that certain alleles will be eliminated from the gene pool (or may never have occurred at all).

That is, a certain value at a certain position in the binary string is no

longer in any of the candidates and can never appear again in any

future children via crossover.

To maintain genetic diversity, mutation is added in the form of randomly flipping a few of the bits in the children’s chromosomes.

It is important to implement mutation in a restrained manner or the GA

will be little more than a glorified random search.


Mutation

There are several ways to implement mutation. Here are two:

Similar to crossover, give each child a small probability to

mutate (about 10%). If a child is chosen to mutate, randomly flip

a user-defined number of bits in the child's chromosome. The

locations within the string for the bit flips are chosen randomly.

Every bit of every child's chromosome is given a small user-

defined probability to flip (typically between 1% and 10%).

There are many other possible mutation strategies!
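The two mutation schemes listed above can be sketched as below, with chromosomes stored as lists of 0/1 integers. The default probabilities and flip count are assumptions chosen from within the ranges quoted, and the function names are hypothetical.

```python
import random

def mutate_per_child(chromosome, p_child=0.1, n_flips=1, rng=random):
    """With probability p_child, flip n_flips randomly located bits."""
    child = list(chromosome)
    if rng.random() < p_child:
        for i in rng.sample(range(len(child)), n_flips):
            child[i] = 1 - child[i]
    return child

def mutate_per_bit(chromosome, p_bit=0.02, rng=random):
    """Flip each bit independently with probability p_bit."""
    return [1 - bit if rng.random() < p_bit else bit for bit in chromosome]

child = [0, 1, 1, 0, 0, 1, 0, 1]
print(mutate_per_child(child, p_child=0.1, n_flips=2))
print(mutate_per_bit(child, p_bit=0.02))
```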


Replacement

Replacement is typically accomplished in one of the following two ways:

Replace all 𝜇 parents by all 𝜆 children to form the population for the next generation (this usually implies that 𝜆 = 𝜇 children are generated to preserve the population size over generations).

Select the best 𝜇 individuals from all 𝜇 + 𝜆 parents and

offspring to form the subsequent population.

Notice that the second method incorporates elitism.
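Both replacement schemes can be sketched in a few lines. Fitness is assumed to be maximized, populations are plain lists, and the function names are hypothetical.

```python
def generational_replacement(parents, children):
    """Discard all parents; the children form the next generation."""
    return list(children)

def plus_replacement(parents, parent_fit, children, child_fit):
    """Keep the best mu individuals out of the mu parents and lambda children."""
    mu = len(parents)
    pool = list(zip(list(parents) + list(children),
                    list(parent_fit) + list(child_fit)))
    pool.sort(key=lambda pair: pair[1], reverse=True)   # fittest first
    survivors = pool[:mu]
    return [ind for ind, _ in survivors], [fit for _, fit in survivors]

print(plus_replacement(["p1", "p2"], [5, 1], ["c1", "c2"], [3, 4]))
# (['p1', 'c2'], [5, 4])
```

In the second scheme the best individual can never be lost, because it always ranks first in the combined pool.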
